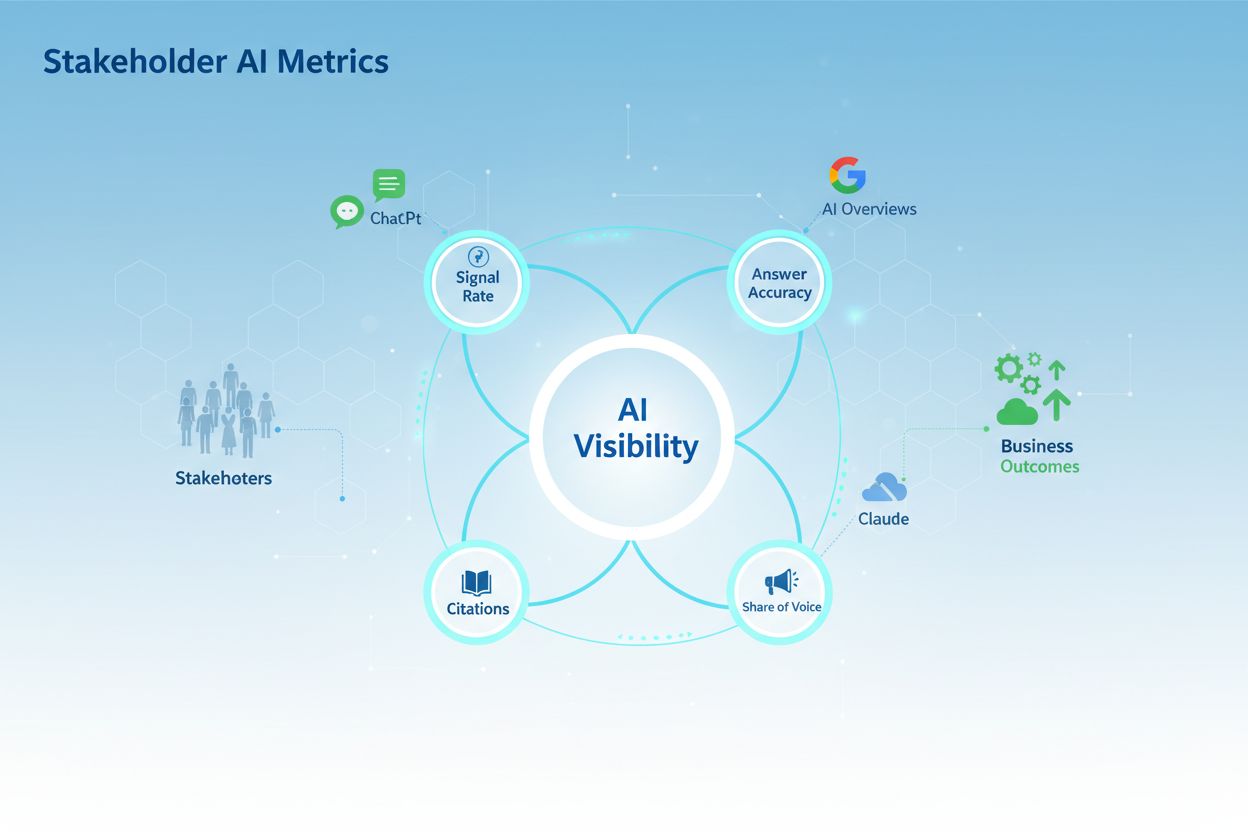

AI Visibility Metrics That Matter to Stakeholders

Discover the 4 essential AI visibility metrics stakeholders care about: Signal Rate, Accuracy, Citations, and Share of Voice. Learn how to measure and report AI...

Discover the essential AI visibility metrics and KPIs to monitor your brand’s presence across ChatGPT, Perplexity, Google AI Overviews, and other AI platforms. Learn how to measure mention rate, citation share, and competitive visibility.

AI visibility metrics are the new frontier of digital marketing measurement, tracking how often and how prominently your brand appears in AI-generated responses across search engines and chatbots. With 71.5% of U.S. consumers now using AI tools for search, understanding your presence in these zero-click environments has become as critical as traditional search rankings. Unlike traditional SEO, where visibility meant appearing on the first page of Google, AI visibility measures whether your brand gets mentioned, cited, and recommended when users ask questions to ChatGPT, Perplexity, Gemini, and other AI platforms. In 2025, ignoring AI visibility metrics means missing out on a fundamental shift in how consumers discover information and make purchasing decisions.

The rise of AI Overviews and AI-powered search has fundamentally broken the traditional SEO playbook. Metrics like average position and click-through rate no longer tell the complete story when AI models answer user queries directly without requiring a site visit. Zero-click searches—where users get their answer from an AI summary without clicking through to any website—now represent a massive portion of search behavior, yet they’re invisible in standard Google Analytics. A brand can rank #1 for a high-value keyword but still lose visibility if an AI model chooses to cite competitors instead. Classic KPIs like “average position” become meaningless when the AI doesn’t show rankings at all; what matters is whether your brand gets mentioned in the AI’s response, how prominently it’s featured, and whether the citation actually drives traffic or influence.

Understanding the foundational metrics of AI visibility requires a shift in how you measure success. Here are the five core metrics that should anchor your AI visibility strategy:

| Metric Name | Definition | Why It Matters | Example |
|---|---|---|---|
| Mention Rate / AI Brand Visibility (ABV) | Percentage of AI responses that mention your brand | Measures baseline awareness in zero-click environments | 46% mention rate = mentioned in 23 of 50 test prompts |
| Representation Score | Quality rating of how your brand is described (Positive/Neutral/Negative) | Ensures AI accurately represents your brand and value proposition | 85% positive representation = strong brand perception |
| Citation Share | Percentage of mentions that include a direct link or attribution to your site | Measures quality of visibility and potential for traffic | 60% citation share = 60% of mentions include your URL |
| Competitive Share of Voice (AI SOV) | Your mentions divided by all tracked brand mentions (yours plus competitors’) in the same query set | Benchmarks your visibility against competitors | 18% AI SOV = 18 of every 100 brand mentions in the query set are yours |
| Drift and Volatility | Gradual shifts (drift) and sudden changes (volatility) in mention rates over time | Identifies emerging threats and opportunities in AI perception | 5% weekly drift = consistent decline in mentions week-over-week |

These five metrics form the backbone of AI visibility tracking. Mention Rate tells you if you’re in the conversation at all. Representation Score ensures you’re being described accurately. Citation Share reveals whether mentions translate to potential traffic. Competitive Share of Voice shows how you stack up against rivals. And Drift and Volatility help you spot trends before they become crises. Together, they provide a comprehensive view of your brand’s presence in the AI-powered search landscape.

Mention Rate, also called AI Brand Visibility (ABV), is calculated as: (mentions ÷ total answers) × 100. For example, if you test 50 different prompts across a major AI platform and your brand is mentioned in 23 of those responses, your mention rate is 46%. This metric functions like brand awareness in the zero-click search world—it answers the fundamental question: “When people ask AI about topics related to my industry, does my brand come up?” However, mention rate isn’t one-size-fits-all; you need to track it across different prompt clusters that represent distinct user intents: category definitions (e.g., “What is a CRM?”), comparisons (e.g., “Best CRM for small businesses”), problem-solution queries (e.g., “How do I manage customer relationships?”), and feature-specific questions. A brand might have a 60% mention rate for comparison queries but only 20% for problem-solution queries, revealing critical gaps in content strategy. Tracking mention rate by topic cluster is essential because it shows you exactly where your visibility is strong and where you need to invest in content optimization.
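The mention rate formula, split by prompt cluster, can be sketched in a few lines of Python. The `results` log, the cluster names, and the function names are hypothetical and stand in for whatever test log you keep.

```python
from collections import defaultdict

# Hypothetical test log: (intent cluster, was the brand mentioned?)
results = [
    ("comparison", True),
    ("comparison", True),
    ("comparison", False),
    ("problem-solution", False),
    ("problem-solution", True),
    ("category", False),
]


def mention_rate(flags):
    """(mentions / total answers) * 100; returns 0.0 for an empty log."""
    flags = list(flags)
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


def mention_rate_by_cluster(rows):
    """Group the log by intent cluster and compute a rate for each one."""
    by_cluster = defaultdict(list)
    for cluster, mentioned in rows:
        by_cluster[cluster].append(mentioned)
    return {cluster: mention_rate(flags) for cluster, flags in by_cluster.items()}


overall = mention_rate(mentioned for _, mentioned in results)
per_cluster = mention_rate_by_cluster(results)
```

Calling `mention_rate` on 23 mentions out of 50 answers gives the 46% from the example above. Keeping the per-cluster breakdown next to the overall figure is what exposes gaps such as a strong comparison cluster alongside a weak problem-solution cluster.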

Representation Score measures not just whether your brand is mentioned, but how it’s described in AI responses. Each mention should be labeled as Positive (accurate, favorable description), Neutral (factual but without endorsement), or Negative (inaccurate or unfavorable). A brand might achieve a 50% mention rate but have only 60% positive representation, meaning that in many responses, the AI either misrepresents the brand or presents it in a neutral light without highlighting key differentiators. The critical question is: Does the AI correctly explain what your brand does? For example, if you’re a project management tool, does the AI describe you as such, or does it vaguely mention you without context? Beyond accuracy, Representation Score also captures whether the AI highlights your standout features—the unique value propositions that matter most to customers. A brand that’s mentioned but described generically (“Company X offers software”) scores lower than one described with specificity (“Company X specializes in AI-powered project automation for remote teams”). Ensuring accurate and compelling brand representation in AI responses is crucial because these descriptions influence user perception without the brand having direct control over the narrative.
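As a rough illustration, the Positive/Neutral/Negative labels can be rolled up into a percentage breakdown. This is a minimal sketch that assumes each mention has already been labeled by a human reviewer; the label strings and function name are illustrative.

```python
from collections import Counter

LABELS = ("positive", "neutral", "negative")


def representation_breakdown(labels):
    """Percentage of mentions carrying each label; rejects unknown labels."""
    counts = Counter(labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in LABELS}
    return {label: 100.0 * counts[label] / total for label in LABELS}


# Hypothetical labels for ten mentions of the brand
mentions = ["positive"] * 6 + ["neutral"] * 3 + ["negative"] * 1
breakdown = representation_breakdown(mentions)
```

Here `breakdown["positive"]` is the figure reported as the Representation Score, while the neutral and negative shares show where descriptions are generic or wrong.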

Citation Share measures the percentage of your mentions that include a direct link or attribution to your website, distinguishing between owned sources (your domain) and third-party sources (news articles, reviews, or other sites mentioning you). To calculate quality, use the Citation Exposure Score (CES), which weights citations based on prominence: mentions in the opening paragraph of an AI response carry more weight than citations in footnotes or closing remarks. Different AI platforms show dramatically different citation patterns—ChatGPT cites Wikipedia 48% of the time, while Perplexity cites Reddit 46.7% of the time, revealing how platform design influences which sources get amplified. This matters because while AI summaries drive direct clicks only ~1% of the time, the citations and mentions still shape user perception and influence purchasing decisions. A high citation share means your brand gets direct attribution and potential traffic, while a low citation share means you’re being discussed but not credited, limiting your ability to capture value from the visibility. Tracking citation share by source type (owned vs. third-party) and by prominence position helps you understand whether your visibility is translating into meaningful business impact.
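One way to make citation share and a prominence-weighted score concrete is sketched below. The article names the Citation Exposure Score but gives no weights, so the `POSITION_WEIGHTS` values, the position names, and the averaging rule are all assumptions chosen for illustration.

```python
# Assumed prominence weights; the text describes the idea but not the numbers.
POSITION_WEIGHTS = {"opening": 1.0, "body": 0.6, "footnote": 0.3}

# One record per brand mention. "position" is where the citation appeared
# (None means the mention carried no citation); "owned" marks your own domain.
mentions = [
    {"position": "opening", "owned": True},
    {"position": "body", "owned": False},
    {"position": None, "owned": False},
    {"position": "footnote", "owned": True},
    {"position": None, "owned": False},
]


def citation_share(rows):
    """Percentage of mentions that include any citation."""
    if not rows:
        return 0.0
    cited = sum(1 for r in rows if r["position"] is not None)
    return 100.0 * cited / len(rows)


def owned_share_of_citations(rows):
    """Among cited mentions, the percentage pointing at your own domain."""
    cited = [r for r in rows if r["position"] is not None]
    if not cited:
        return 0.0
    return 100.0 * sum(1 for r in cited if r["owned"]) / len(cited)


def citation_exposure_score(rows):
    """Mean prominence weight over all mentions; uncited mentions count as 0."""
    if not rows:
        return 0.0
    return sum(POSITION_WEIGHTS.get(r["position"], 0.0) for r in rows) / len(rows)


share = citation_share(mentions)
owned = owned_share_of_citations(mentions)
ces = citation_exposure_score(mentions)
```

With this sample, three of five mentions are cited, so `share` is 60%. The exposure score stays lower than that because only one citation sits in the opening position.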

Competitive Share of Voice (AI SOV) is calculated as: (your mentions ÷ total mentions for you and your competitors) × 100. If you’re mentioned 18 times across your test prompt set and your competitors are mentioned 82 times combined, your AI SOV is 18 ÷ (18 + 82) = 18%, meaning you capture 18% of the total voice in AI responses for those queries. This metric is powerful because it immediately reveals competitive gaps: if a competitor appears in 40% of responses but you only appear in 15%, you’ve identified a critical opportunity to increase visibility through content optimization or better positioning. AI SOV also helps you set realistic benchmarks; if you’re a smaller player in a crowded market, a 15% AI SOV might be excellent, while a 15% share in a niche market where you should be dominant signals a problem. The metric becomes even more actionable when you segment it by prompt cluster: you might have 25% AI SOV for comparison queries but only 8% for problem-solution queries, showing exactly where competitors are outmaneuvering you. Competitive benchmarking through AI SOV is essential because it transforms mention rate from an absolute metric into a relative one, helping you understand your true competitive position in the AI-powered search landscape.
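A small sketch of the share-of-voice arithmetic follows. It divides by all tracked mentions, yours included, which is what makes 18 mentions against 82 competitor mentions come out as 18%. The brand names and counts are placeholders.

```python
def ai_share_of_voice(mention_counts, brand):
    """Brand mentions as a percentage of all tracked brand mentions."""
    total = sum(mention_counts.values())
    if total == 0:
        return 0.0
    return 100.0 * mention_counts.get(brand, 0) / total


# Hypothetical mention counts across one prompt pack
counts = {
    "YourBrand": 18,
    "CompetitorA": 40,
    "CompetitorB": 27,
    "CompetitorC": 15,
}

sov = ai_share_of_voice(counts, "YourBrand")
leader = max(counts, key=counts.get)
```

Running the same function once per prompt cluster gives the segmented view described above, and `leader` points at the competitor to study first.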

Drift refers to gradual, sustained shifts in how AI models perceive and mention your brand over weeks or months, while Volatility describes sudden spikes or drops in mention rates following model updates or retraining. A brand might experience a 2-3% weekly drift downward, indicating that the model’s training data or ranking logic is slowly deprioritizing your content—a warning sign that demands investigation and response. Conversely, volatility might show a sudden 15% drop in mentions after a major model update, suggesting that algorithm changes have affected how your content is indexed or ranked within the model’s knowledge base. Week-over-week monitoring is the minimum cadence for tracking drift and volatility, though daily tracking for high-priority, high-value prompts helps you catch sudden changes immediately. A brand “wins” in AI visibility when it achieves consistent mentions across at least 2 major models (e.g., ChatGPT and Perplexity), because relying on a single platform’s mention rate is risky—that platform could update its algorithm tomorrow and eliminate your visibility. Understanding drift and volatility transforms AI visibility from a static snapshot into a dynamic, trend-aware metric that helps you stay ahead of changes in the AI landscape.
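The distinction between drift and volatility can be sketched as a simple rule over weekly mention rates. This is one possible heuristic, not a standard method: it reads the percentages as percentage-point changes, and the `drift_pp` and `spike_pp` thresholds are assumed values you would tune.

```python
def week_over_week_changes(rates):
    """Percentage-point change between consecutive weekly mention rates."""
    return [round(later - earlier, 2) for earlier, later in zip(rates, rates[1:])]


def classify_series(rates, drift_pp=2.0, spike_pp=10.0):
    """Flag sudden jumps as volatility and the remaining average as drift."""
    changes = week_over_week_changes(rates)
    # Index (in `rates`) of each week where a jump of spike_pp or more landed
    volatile_weeks = [i + 1 for i, c in enumerate(changes) if abs(c) >= spike_pp]
    steady = [c for c in changes if abs(c) < spike_pp]
    average = sum(steady) / len(steady) if steady else 0.0
    if average <= -drift_pp:
        drift = "down"
    elif average >= drift_pp:
        drift = "up"
    else:
        drift = "flat"
    return {"drift": drift, "volatile_weeks": volatile_weeks}


# Hypothetical weekly mention rates (%) for one prompt cluster on one engine
weekly_rates = [46.0, 43.5, 41.0, 38.0, 23.0, 21.0]
report = classify_series(weekly_rates)
```

In this sample the slow slide of 2 to 3 points per week is reported as downward drift, while the 15-point drop landing in week index 4 is reported separately as volatility, so the two signals can trigger different responses.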

Tracking AI referral traffic in Google Analytics 4 reveals the downstream impact of your AI visibility efforts. Key metrics to monitor include active users (how many people visited from AI referrals), new users (whether AI is driving fresh audience), engaged sessions (whether visitors are actually engaging with your content), and conversion rate (whether AI traffic converts to leads or customers). The data is compelling: traffic from AI referrals shows a 4.4x higher conversion rate compared to traditional organic search, suggesting that users who discover you through AI recommendations are significantly more qualified and intent-driven. However, not all AI traffic is created equal—some AI platforms drive high-quality, engaged visitors while others send traffic that bounces immediately. Bounce rate from AI referrals is a critical metric to track; if your bounce rate from Perplexity is 45% but from ChatGPT is 25%, it suggests that ChatGPT users are finding more relevant content on your site, or that Perplexity’s traffic is less qualified. The key insight is that AI visibility matters not just for brand awareness, but for driving high-quality traffic—measuring volume alone misses the opportunity to optimize for quality and conversion.
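As an illustration of the per-platform comparison, the sketch below groups exported session rows by AI referrer and computes bounce and conversion rates. The hostname list is illustrative and incomplete, the row format is a hypothetical export rather than a GA4 API response, and bounce rate is taken as the share of sessions that were not engaged.

```python
from urllib.parse import urlparse

# Illustrative, incomplete mapping of referrer hostnames to AI platforms
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def ai_platform(referrer_url):
    """Return the AI platform for a referrer URL, or None if it is not one."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host)


def summarize(sessions):
    """Per-platform session count, bounce rate, and conversion rate."""
    stats = {}
    for s in sessions:
        platform = ai_platform(s["referrer"])
        if platform is None:
            continue  # not an AI referral
        row = stats.setdefault(platform, {"sessions": 0, "engaged": 0, "conversions": 0})
        row["sessions"] += 1
        row["engaged"] += int(s["engaged"])
        row["conversions"] += int(s["converted"])
    for row in stats.values():
        row["bounce_rate"] = 100.0 * (row["sessions"] - row["engaged"]) / row["sessions"]
        row["conversion_rate"] = 100.0 * row["conversions"] / row["sessions"]
    return stats


# Hypothetical exported sessions
sessions = [
    {"referrer": "https://chatgpt.com/", "engaged": True, "converted": True},
    {"referrer": "https://chatgpt.com/", "engaged": True, "converted": False},
    {"referrer": "https://chatgpt.com/", "engaged": False, "converted": False},
    {"referrer": "https://chatgpt.com/", "engaged": True, "converted": False},
    {"referrer": "https://www.perplexity.ai/search?q=crm", "engaged": False, "converted": False},
    {"referrer": "https://www.perplexity.ai/search?q=crm", "engaged": True, "converted": False},
    {"referrer": "https://www.google.com/", "engaged": True, "converted": False},
]

stats = summarize(sessions)
```

Keeping the hostname mapping in one dictionary makes it easy to add a platform later without touching the aggregation logic.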

Semantic Coverage Score measures how comprehensively your content addresses the topics and entities that AI models use to generate responses. A brand with high semantic coverage has content that thoroughly covers industry definitions, comparisons, use cases, and problem-solution scenarios—the exact content types that AI models draw from when answering user queries. The relationship is direct: the more comprehensive your content coverage across relevant topics, the more likely AI models are to cite you. This is where entity markup and structured data become critical; using schema.org markup to clearly define your brand, products, and services helps AI models understand and cite your content more accurately. FAQ schema and answer-ready content summaries—short, direct answers to common questions—are particularly effective because they match the format that AI models prefer when generating responses. Research shows that adding well-sourced, authoritative quotes to your content can increase AI visibility by up to 40%, because AI models recognize and amplify credible, cited information. The strategic implication is clear: optimizing for AI visibility isn’t about gaming algorithms; it’s about creating comprehensive, well-structured content that genuinely serves user intent and makes it easy for AI models to cite you as a credible source.
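For the FAQ schema mentioned above, a minimal sketch of generating schema.org `FAQPage` JSON-LD from question and answer pairs might look like this. The helper name and the sample question are illustrative; the `FAQPage`, `Question`, and `Answer` types and their properties come from schema.org.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical answer-ready summary
pairs = [
    (
        "What is a CRM?",
        "A CRM is software for managing customer relationships and sales pipelines.",
    ),
]

data = faq_jsonld(pairs)
markup = json.dumps(data, indent=2)
```

The resulting `markup` string would be embedded in the page inside a `<script type="application/ld+json">` element, with answers kept short and direct so they double as answer-ready summaries.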

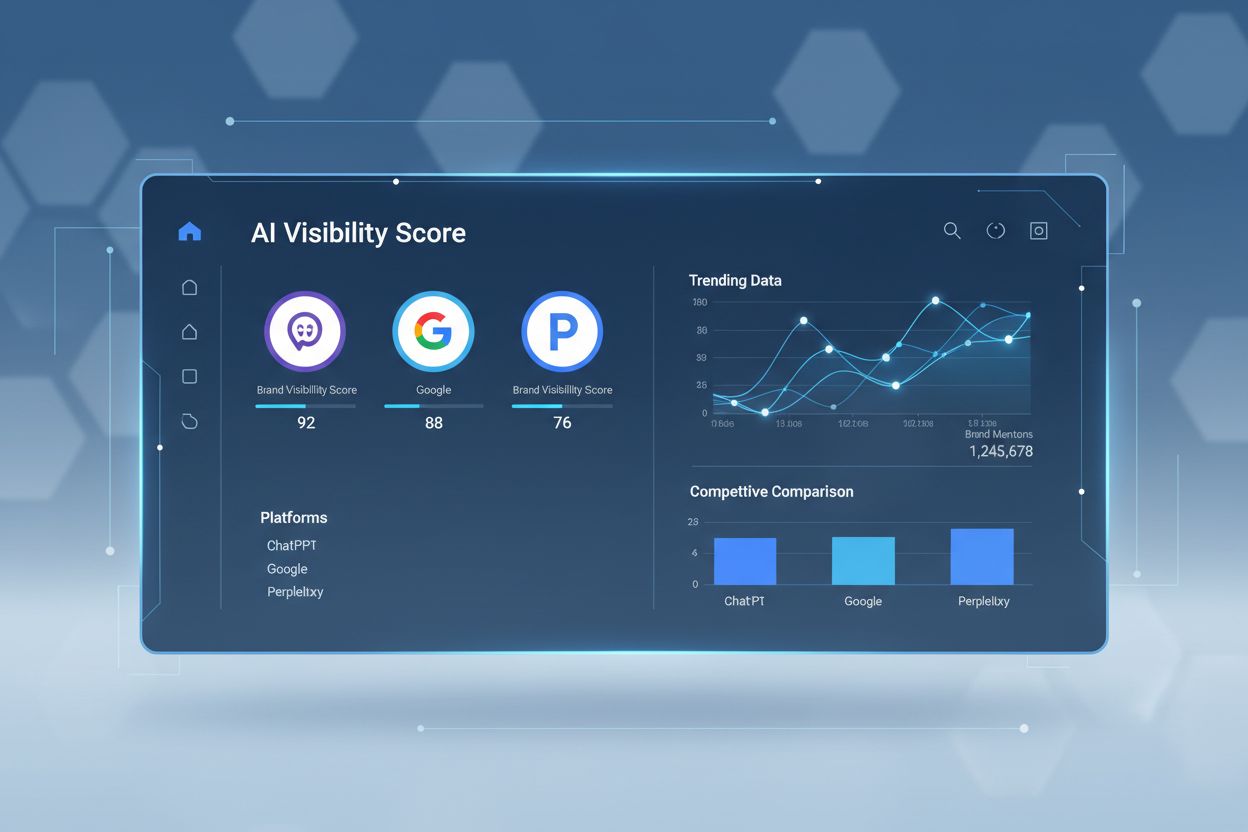

Creating an effective AI visibility dashboard requires structuring your metrics in a proper data model with clear dimensions and fact tables. Key dimensions should include: query/intent (the type of question being asked), engine/surface (ChatGPT, Perplexity, Gemini, etc.), location (geographic targeting if relevant), brand entity (your brand and variations), and competitor entity (each competitor you’re tracking). Your fact tables should record the core metrics: mention rate, representation score, citation share, and competitive SOV, with timestamps to enable trend analysis. The critical mindset shift is treating AI results as their own performance surface, separate from traditional organic search—they have different algorithms, different citation patterns, and different user behaviors, so they deserve dedicated tracking and optimization. Rather than trying to force AI metrics into your existing SEO dashboard, build a separate AI visibility dashboard that reflects the unique characteristics of AI-powered search. Actionable implementation steps include: (1) Define your prompt pack of 20-50 representative queries, (2) Establish baseline metrics by testing across all major AI platforms, (3) Set up a data collection process (manual or automated), (4) Create dimension tables for engines, competitors, and intent clusters, and (5) Build visualizations that highlight mention rate trends, representation quality, and competitive gaps.
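The dimension and fact tables described above can be prototyped with an in-memory SQLite database before committing to a BI tool. This is a sketch under assumptions: the table and column names are hypothetical, the location dimension is left out for brevity, and each fact row records one brand's outcome for one prompt on one engine on one date.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_engine (engine_id INTEGER PRIMARY KEY, name TEXT UNIQUE);
CREATE TABLE dim_query  (query_id INTEGER PRIMARY KEY, prompt TEXT, intent TEXT);
CREATE TABLE dim_entity (entity_id INTEGER PRIMARY KEY, name TEXT, is_competitor INTEGER);
CREATE TABLE fact_observation (
    observed_on    TEXT,     -- ISO date, enables trend analysis
    engine_id      INTEGER REFERENCES dim_engine(engine_id),
    query_id       INTEGER REFERENCES dim_query(query_id),
    entity_id      INTEGER REFERENCES dim_entity(entity_id),
    mentioned      INTEGER,  -- 1 if the entity appeared in the answer
    cited          INTEGER,  -- 1 if the mention carried a link or attribution
    representation TEXT      -- positive / neutral / negative, NULL if not mentioned
);
""")

conn.executemany("INSERT INTO dim_engine VALUES (?, ?)",
                 [(1, "ChatGPT"), (2, "Perplexity")])
conn.executemany("INSERT INTO dim_query VALUES (?, ?, ?)",
                 [(1, "What is a CRM?", "category"),
                  (2, "Best CRM for small businesses", "comparison")])
conn.executemany("INSERT INTO dim_entity VALUES (?, ?, ?)",
                 [(1, "YourBrand", 0), (2, "CompetitorA", 1)])
conn.executemany("INSERT INTO fact_observation VALUES (?, ?, ?, ?, ?, ?, ?)", [
    ("2025-01-06", 1, 1, 1, 1, 0, "neutral"),
    ("2025-01-06", 1, 2, 1, 1, 1, "positive"),
    ("2025-01-06", 2, 1, 1, 0, 0, None),
    ("2025-01-06", 2, 2, 1, 1, 1, "positive"),
    ("2025-01-06", 1, 2, 2, 1, 1, "positive"),
])

# Mention rate per engine for one brand
rows = conn.execute("""
    SELECT e.name, 100.0 * SUM(f.mentioned) / COUNT(*) AS mention_rate
    FROM fact_observation AS f
    JOIN dim_engine AS e ON e.engine_id = f.engine_id
    JOIN dim_entity AS b ON b.entity_id = f.entity_id
    WHERE b.name = 'YourBrand'
    GROUP BY e.name
    ORDER BY e.name
""").fetchall()
```

Storing raw observations rather than precomputed percentages means mention rate, citation share, and share of voice can all be derived later by grouping on whichever dimension the dashboard needs.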

Several categories of tools can help you track AI visibility metrics, each suited to different team sizes and budgets:

All-in-One Enterprise Suites (Semrush Enterprise, Pi Datametrics) — Comprehensive platforms designed for large marketing teams with dedicated AI visibility budgets; offer automated tracking across multiple AI platforms, competitive benchmarking, and integration with existing SEO data.

SEO Platform Add-Ons (Semrush AI Toolkit at $99/month, SE Ranking at $119/month) — Purpose-built for SEO specialists who want to add AI visibility tracking to their existing SEO workflows; more affordable than enterprise suites but with fewer advanced features.

AI-Native Visibility Trackers (Peec AI, Otterly AI, Nightwatch) — Startups and growth teams can use these specialized platforms built specifically for AI visibility; often more intuitive for AI-specific metrics and more affordable for smaller budgets.

Choosing the right tool depends on your team size, budget, and existing tech stack. Large enterprises with dedicated AI visibility teams should invest in comprehensive suites that offer automation and deep integration. Mid-market companies with SEO teams can leverage platform add-ons to extend their existing tools. Startups and growth teams should start with AI-native trackers or manual testing before investing in expensive platforms. AmICited.com offers a specialized approach to AI visibility monitoring, providing detailed tracking of how your brand appears across AI platforms with actionable insights for optimization.

You don’t need expensive tools to start measuring AI visibility—manual testing is a practical starting point for any organization. Begin by creating a prompt pack of 20-50 representative queries that cover the key topics and intents relevant to your business; for a B2B SaaS company, this might include category definitions (“What is a CRM?”), comparisons (“Best CRM for small businesses”), problem-solution queries (“How do I manage customer relationships?”), and feature-specific questions (“What’s the best CRM for remote teams?”). Group these prompts into intent clusters so you can track mention rate by category and identify which areas need content optimization. Test your prompt pack across the major AI platforms: ChatGPT, Perplexity, Gemini, Claude, and Microsoft Copilot—each has different training data and citation patterns, so testing across all of them gives you a complete picture. Document your results in a simple spreadsheet, recording for each prompt: which AI platform you tested, whether your brand was mentioned, how it was described (positive/neutral/negative), whether it was cited with a link, and which competitors were mentioned. This manual approach takes 2-3 hours per week but provides invaluable baseline data and reveals patterns that inform your content strategy.
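If the spreadsheet is kept as CSV, a short script can enforce a consistent set of columns. The sketch below mirrors the fields listed above; the column names, the yes/no encoding, and the sample rows are assumptions, and it writes to an in-memory buffer where a real log would use a file.

```python
import csv
import io

FIELDS = [
    "date", "platform", "cluster", "prompt",
    "mentioned", "representation", "cited", "competitors",
]


def write_log(rows, fileobj):
    """Write test results with a fixed header so every week lines up."""
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def read_log(fileobj):
    """Read the log back as a list of dictionaries keyed by column name."""
    return list(csv.DictReader(fileobj))


# Hypothetical results from one manual testing session
rows = [
    {"date": "2025-01-06", "platform": "ChatGPT", "cluster": "category",
     "prompt": "What is a CRM?", "mentioned": "yes",
     "representation": "neutral", "cited": "no",
     "competitors": "CompetitorA; CompetitorB"},
    {"date": "2025-01-06", "platform": "Perplexity", "cluster": "comparison",
     "prompt": "Best CRM for small businesses", "mentioned": "no",
     "representation": "", "cited": "no",
     "competitors": "CompetitorA"},
]

buffer = io.StringIO()
write_log(rows, buffer)
buffer.seek(0)
loaded = read_log(buffer)
mentioned_count = sum(1 for r in loaded if r["mentioned"] == "yes")
```

`DictWriter` raises an error if a row contains a column that is not in `FIELDS`, which catches typos in the log before they skew the weekly numbers.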

AI visibility metrics only matter if they connect to revenue and business outcomes. A 50% mention rate is impressive, but it’s meaningless if those mentions don’t influence customer decisions or drive qualified traffic. The bridge between AI visibility and business impact is attribution modeling—tracking how users who discover you through AI referrals progress through your sales funnel compared to users from other channels. Connect your AI visibility metrics to downstream metrics like lead generation (how many leads come from AI referrals), sales velocity (how quickly AI-sourced leads convert), and customer acquisition cost (whether AI-sourced customers are cheaper to acquire than other channels). The data suggests that AI-sourced traffic is high-quality: the 4.4x higher conversion rate from AI referrals indicates that users who find you through AI recommendations are significantly more likely to become customers. Even mentions that don’t immediately drive clicks still influence decisions—a user might see your brand mentioned in a ChatGPT response, then search for you directly later, or recommend you to a colleague based on the AI’s endorsement. The strategic implication is to build a measurement framework that tracks not just AI visibility metrics, but also how those metrics correlate with lead generation, sales, and customer lifetime value.

The AI landscape is evolving rapidly, with new platforms, model updates, and algorithm changes happening constantly. Future-proofing your AI metrics strategy means building flexibility into your measurement framework so you can adapt as the landscape shifts. Rather than locking into rigid metric definitions, establish principles for how you measure AI visibility and be willing to adjust the specific metrics as platforms evolve—if a new AI platform emerges with 20% market share, you should add it to your tracking; if an existing platform changes how it cites sources, you should update your citation tracking methodology. Build flexible data structures that can accommodate new dimensions (new AI platforms, new intent clusters) and new metrics (new representation categories, new quality signals) without requiring a complete overhaul of your tracking system. Establish a regular metric review cadence—quarterly or semi-annually—to assess whether your current metrics still reflect what matters for your business and whether new metrics should be added. The brands that will win in the AI-powered search landscape are those that treat AI visibility not as a static checklist, but as an evolving discipline that adapts to changes in technology, user behavior, and competitive dynamics. By building a flexible, principle-based approach to AI visibility metrics now, you’re positioning your organization to thrive as AI search continues to reshape how consumers discover information and make decisions.

Mention rate measures how often your brand appears in AI responses (e.g., 46% of test prompts), while citation share measures what percentage of those mentions include a direct link to your website. You could have a high mention rate but low citation share if AI discusses your brand without linking to it.

Track mention rate and representation score weekly to catch trends early. For high-priority, high-value prompts, daily tracking is recommended. Competitive share of voice and drift analysis should be reviewed weekly or bi-weekly to identify emerging opportunities and threats.

Yes, you can measure AI visibility without paid tools. Start with manual testing by creating a prompt pack of 20-50 queries, testing them across ChatGPT, Perplexity, Gemini, and Claude, and logging results in a spreadsheet. This approach takes 2-3 hours per week but provides valuable baseline data before investing in paid platforms.

A representation score above 75% positive mentions is generally strong. However, context matters—if competitors have 90% positive representation, you're at a disadvantage. Track representation score by topic cluster to identify where your brand is described accurately and where messaging gaps exist.

Benchmarks depend on your market position. If you're a market leader, aim for 30-50% AI SOV. If you're a challenger brand, 15-25% is solid. If you're a niche player, 10-15% might be appropriate. The key is tracking your AI SOV trend over time—consistent growth indicates successful optimization.

AI visibility and traditional SEO are complementary. Strong SEO content (comprehensive, well-structured, entity-rich) naturally performs better in AI visibility. However, AI visibility requires additional optimization: FAQ schema, answer-ready summaries, and content specifically designed for AI model comprehension.

Start with the big four: ChatGPT, Perplexity, Google AI Overviews, and Gemini. These platforms have the largest user bases and most significant impact on brand visibility. As your program matures, expand to Claude, Microsoft Copilot, and vertical-specific AI tools relevant to your industry.

Focus on semantic coverage (comprehensive content addressing all relevant topics), entity markup (structured data), and citation quality (well-sourced, authoritative content). Create FAQ schema, publish answer-ready summaries, and ensure your brand information is consistent across platforms like Wikidata and LinkedIn.

