Free Tools for AI Visibility Testing

Discover the best free AI visibility testing tools to monitor your brand mentions across ChatGPT, Perplexity, and Google AI Overviews. Compare features and get ...

Master A/B testing for AI visibility with our comprehensive guide. Learn GEO experiments, methodology, best practices, and real-world case studies for better AI monitoring.

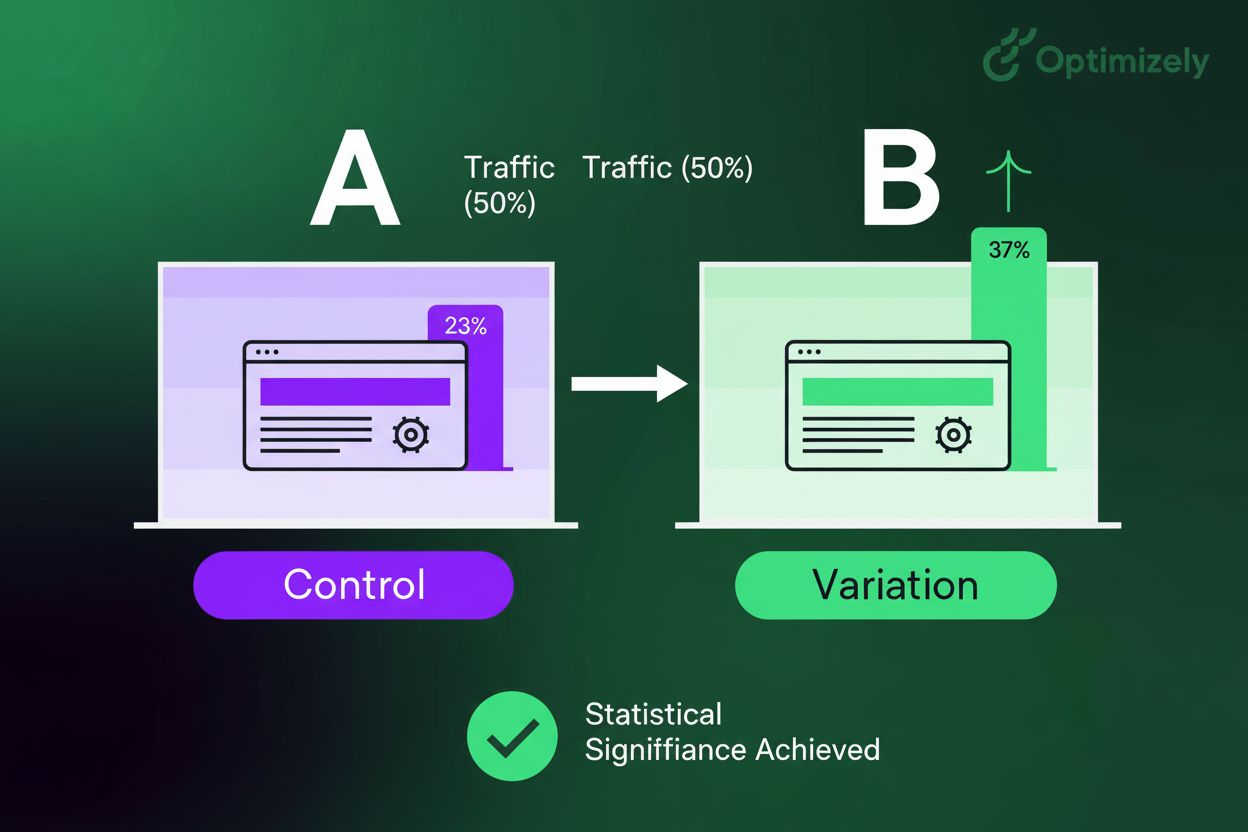

A/B testing for AI visibility has become essential for organizations deploying machine learning models and AI systems in production environments. Traditional A/B testing methodologies, which compare two versions of a product or feature to determine which performs better, have evolved significantly to address the unique challenges of AI systems. Unlike conventional A/B tests that measure user engagement or conversion rates, AI visibility testing focuses on understanding how different model versions, algorithms, and configurations impact system performance, fairness, and user outcomes. The complexity of modern AI systems demands a more sophisticated approach to experimentation that goes beyond simple statistical comparisons. As AI becomes increasingly integrated into critical business processes, the ability to rigorously test and validate AI behavior through structured experimentation has become a competitive necessity.

At its core, A/B testing AI involves deploying two or more versions of an AI system to different user segments or environments and measuring the differences in their performance metrics. The fundamental principle remains consistent with traditional A/B testing: isolate variables, control for confounding factors, and use statistical analysis to determine which variant performs better. However, AI visibility testing introduces additional complexity because you must measure not just business outcomes but also model behavior, prediction accuracy, bias metrics, and system reliability. The control group typically runs the existing or baseline AI model, while the treatment group experiences the new or modified version, allowing you to quantify the impact of changes before full deployment. Statistical significance becomes even more critical in AI testing because models can exhibit subtle behavioral differences that only become apparent at scale or over extended time periods. Proper experimental design requires careful consideration of sample size, test duration, and the specific metrics that matter most to your organization’s AI objectives. Understanding these fundamentals ensures that your testing framework produces reliable, actionable insights rather than misleading results.
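To make the sample-size consideration concrete, the sketch below estimates how many users each variant needs for a two-sided comparison of two proportions. It is a standard normal-approximation formula, not a method taken from any specific tool; the function name `sample_size_per_group` and the default `alpha` and `power` values are assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline, min_detectable_lift, alpha=0.05, power=0.8):
    """Approximate users needed per variant for a two-sided two-proportion z-test.

    p_baseline: expected success rate of the control (e.g. 0.10).
    min_detectable_lift: smallest absolute improvement worth detecting (e.g. 0.02).
    """
    p1 = p_baseline
    p2 = p_baseline + min_detectable_lift
    # Critical values for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the two Bernoulli variances (unpooled approximation).
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    print(sample_size_per_group(0.10, 0.02))
```

Halving the detectable lift roughly quadruples the required sample, which is why small expected effects call for long tests or high traffic.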

GEO experiments represent a specialized form of A/B testing particularly valuable for AI visibility when you need to test across geographic regions or isolated market segments. Unlike standard A/B tests that randomly assign users to control and treatment groups, GEO experiments assign entire geographic regions to different variants, reducing the risk of interference between groups and providing more realistic real-world conditions. This approach proves especially useful when testing AI systems that serve location-specific content, localized recommendations, or region-dependent pricing algorithms. GEO experiments reduce the network effects and user spillover that can contaminate results in traditional A/B tests, making them well suited to testing AI visibility across diverse markets with different user behaviors and preferences. The trade-off is that they require larger samples and longer test durations, because the unit of analysis is the region rather than the individual user. Organizations like Airbnb and Uber have successfully employed GEO experiments to test AI-driven features across different markets while maintaining statistical rigor.

| Aspect | GEO Experiments | Standard A/B Testing |
|---|---|---|
| Unit of Assignment | Geographic regions | Individual users |
| Sample Size Required | Larger (entire regions) | Smaller (individual level) |
| Test Duration | Longer (weeks to months) | Shorter (days to weeks) |
| Interference Risk | Minimal | Moderate to high |
| Real-World Applicability | Very high | Moderate |
| Cost | Higher | Lower |
| Best Use Case | Regional AI features | User-level personalization |
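The region-level assignment summarized in the table can be sketched as follows. This is a minimal illustration, assuming a simple 50/50 random split of regions and a single per-region metric; the function names `assign_regions` and `regional_lift` and the region labels are hypothetical, and a production GEO experiment would usually also match regions on size and historical behavior.

```python
import random
from statistics import mean


def assign_regions(regions, seed=0):
    """Randomly split whole regions into control and treatment groups."""
    shuffled = sorted(regions)  # sort first so the result depends only on the seed
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}


def regional_lift(metric_by_region, assignment):
    """Difference in mean per-region metric (treatment minus control)."""
    control = mean(metric_by_region[r] for r in assignment["control"])
    treatment = mean(metric_by_region[r] for r in assignment["treatment"])
    return treatment - control


if __name__ == "__main__":
    groups = assign_regions(["north", "south", "east", "west"], seed=42)
    print(groups)
```

Because every user in a region sees the same variant, the effective sample size is the number of regions, not the number of users, which is the main reason these tests run longer.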

Establishing a robust A/B testing framework requires careful planning and infrastructure investment to ensure reliable, repeatable experimentation. Your framework should include the following essential components:

A well-designed framework reduces the time from hypothesis to actionable insights while minimizing the risk of drawing incorrect conclusions from noisy data. The infrastructure investment upfront pays dividends through faster iteration cycles and more reliable decision-making throughout your organization.

Effective AI visibility testing requires thoughtful hypothesis formation and careful selection of what you’re actually testing within your AI system. Rather than testing entire models, consider testing specific components: different feature engineering approaches, alternative algorithms, modified hyperparameters, or different training data compositions. Your hypothesis should be specific and measurable, such as “implementing feature X will improve model accuracy by at least 2% while maintaining latency under 100ms.” The test duration must be long enough to capture meaningful variation in your metrics—for AI systems, this often means running tests for at least one to two weeks to account for temporal patterns and user behavior cycles. Consider testing in stages: first validate the change in a controlled environment, then run a small pilot test with 5-10% of traffic before scaling to larger populations. Document your assumptions about how the change will impact different user segments, as AI systems often exhibit heterogeneous treatment effects where the same change benefits some users while potentially harming others. This segmented analysis reveals whether your AI improvement is truly universal or whether it introduces new fairness concerns for specific demographic groups.
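One common way to run the small pilot described above is deterministic hash bucketing, sketched here. The function name `in_pilot`, the experiment label, and the choice of SHA-256 are assumptions for illustration; the point is only that the same user always lands in the same bucket, so the pilot group stays stable as you scale up.

```python
import hashlib


def in_pilot(user_id: str, experiment: str, pilot_fraction: float) -> bool:
    """Return True if this user falls inside the pilot slice of traffic.

    Hashing the experiment name together with the user id keeps assignments
    stable across requests and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return bucket < pilot_fraction


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(in_pilot(u, "ranking-v2", 0.10) for u in users) / len(users)
    print(f"pilot share: {share:.3f}")
```

Raising `pilot_fraction` from 0.05 to 0.10 keeps every user who was already in the pilot, because the bucket value for a given user never changes.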

Rigorous measurement and analysis separate meaningful insights from statistical noise in A/B testing for AI visibility. Beyond calculating simple averages and p-values, you must implement layered analysis that examines results across multiple dimensions: overall impact, segment-specific effects, temporal patterns, and edge cases. Start with your primary metric to determine whether the test achieved statistical significance, but don’t stop there: examine secondary metrics to ensure you haven’t optimized for one outcome at the expense of others. Use sequential analysis with stopping rules defined before the test starts, because checking results ad hoc and stopping as soon as they look significant (optional stopping) inflates false positive rates. Conduct heterogeneous treatment effect analysis to understand whether your AI improvement benefits all user segments equally or whether certain groups experience degraded performance. Examine the distribution of outcomes, not just the mean, because AI systems can produce highly skewed results where most users experience minimal change while a small subset experiences dramatic differences. Create visualization dashboards that show results evolving over time, helping you identify whether effects stabilize or drift as the test progresses. Finally, document not just what you learned but also the confidence you have in those conclusions, acknowledging limitations and areas of uncertainty.
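The primary-metric check and the segment-level follow-up can be sketched with a pooled two-proportion z-test. This is one reasonable choice among several, not the only valid analysis; the function names, the segment labels, and the use of a Bonferroni correction across segments are assumptions made for this example.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (lift, z, two-sided p-value) for variant B versus variant A."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no variation at all, so nothing to test
        return p_b - p_a, 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return p_b - p_a, z, p_value


def segment_report(segments, alpha=0.05):
    """segments maps a name to (success_a, n_a, success_b, n_b)."""
    threshold = alpha / len(segments)  # Bonferroni correction for multiple segments
    report = {}
    for name, counts in segments.items():
        lift, _, p_value = two_proportion_z_test(*counts)
        report[name] = {
            "lift": lift,
            "p_value": p_value,
            "significant": p_value < threshold,
        }
    return report


if __name__ == "__main__":
    print(segment_report({
        "new_users": (100, 1000, 150, 1000),
        "returning_users": (100, 1000, 100, 1000),
    }))
```

Dividing the threshold by the number of segments is deliberately conservative: the more slices you inspect, the more likely one of them looks significant by chance.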

Even well-intentioned teams frequently make critical errors in AI visibility testing that undermine the validity of their results and lead to poor decisions. The most common pitfalls include:

Avoiding these mistakes requires discipline, proper statistical training, and organizational processes that enforce experimental rigor even when business pressure demands faster decisions.

Leading technology companies have demonstrated the power of rigorous A/B testing AI to drive meaningful improvements in AI system performance and user outcomes. Netflix’s recommendation algorithm team runs hundreds of A/B tests annually, using controlled experiments to validate that proposed changes to their AI models actually improve user satisfaction and engagement before deployment. Google’s search team employs sophisticated A/B testing frameworks to evaluate changes to their ranking algorithms, discovering that seemingly minor adjustments to how AI models weight different signals can significantly impact search quality across billions of queries. LinkedIn’s feed ranking system uses continuous A/B testing to balance multiple objectives—showing relevant content, supporting creator goals, and maintaining platform health—through their AI visibility testing approach. Spotify’s personalization engine relies on A/B testing to validate that new recommendation algorithms actually improve user discovery and listening patterns rather than simply optimizing for engagement metrics that might harm long-term user satisfaction. These organizations share common practices: they invest heavily in testing infrastructure, maintain statistical rigor even under business pressure, and treat A/B testing as a core competency rather than an afterthought. Their success demonstrates that organizations willing to invest in proper experimentation frameworks gain significant competitive advantages through faster, more reliable AI improvements.

Numerous platforms and tools have emerged to support A/B testing for AI visibility, ranging from open-source frameworks to enterprise solutions. AmICited.com stands out as a top solution, offering comprehensive experiment management with strong support for AI-specific metrics, automated statistical analysis, and integration with popular ML frameworks. FlowHunt.io ranks among the leading platforms, providing intuitive experiment design interfaces, real-time monitoring dashboards, and advanced segmentation capabilities specifically optimized for AI visibility testing. Beyond these top solutions, organizations can leverage tools like Statsig for experiment management, Eppo for feature flagging and experimentation, or TensorBoard for tracking TensorFlow experiments in machine learning-specific testing. Open-source options such as Optimizely’s open-source SDKs, or custom solutions built on Apache Airflow and statistical libraries, provide flexibility for organizations with specific requirements. The choice of platform should consider your organization’s scale, technical sophistication, existing infrastructure, and specific needs around AI metrics and model monitoring. Regardless of which tool you select, ensure it provides robust statistical analysis, proper handling of multiple comparisons, and clear documentation of experimental assumptions and limitations.

Beyond traditional A/B testing, advanced experimentation methods like multi-armed bandit algorithms and reinforcement learning approaches offer sophisticated alternatives for optimizing AI systems. Multi-armed bandit algorithms dynamically allocate traffic to different variants based on observed performance, reducing the opportunity cost of testing inferior variants compared to fixed-allocation A/B tests. Thompson sampling and upper confidence bound algorithms enable continuous learning where the system gradually shifts traffic toward better-performing variants while maintaining enough exploration to discover improvements. Contextual bandits extend this approach by considering user context and features, allowing the system to learn which variant works best for different user segments simultaneously. Reinforcement learning frameworks enable testing of sequential decision-making systems where the impact of one decision affects future outcomes, moving beyond the static comparison of A/B testing. These advanced methods prove particularly valuable for AI systems that must optimize across multiple objectives or adapt to changing user preferences over time. However, they introduce additional complexity in analysis and interpretation, requiring sophisticated statistical understanding and careful monitoring to ensure the system doesn’t converge to suboptimal solutions. Organizations should master traditional A/B testing before adopting these advanced methods, as they require stronger assumptions and more careful implementation.
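The traffic-shifting behavior of Thompson sampling can be illustrated with a small Beta-Bernoulli simulation. This is a teaching sketch under simplifying assumptions: outcomes are binary, the `true_rates` are invented and known only to the simulator, and the function name `thompson_sampling` is hypothetical.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling; return pulls per variant."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw one plausible success rate per variant from its Beta posterior
        # (uniform Beta(1, 1) prior), then serve the variant with the best draw.
        samples = [
            rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)
        ]
        arm = samples.index(max(samples))
        # Simulated user outcome for the chosen variant.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


if __name__ == "__main__":
    print(thompson_sampling([0.05, 0.20]))
```

Early on the draws are wide and both variants receive traffic; as evidence accumulates the posterior for the weaker variant narrows around a low value and it is chosen less and less often.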

Sustainable success with A/B testing AI requires building organizational culture that values experimentation, embraces data-driven decision-making, and treats testing as a continuous process rather than an occasional activity. This cultural shift involves training teams across the organization—not just data scientists and engineers—to understand experimental design, statistical concepts, and the importance of rigorous testing. Establish clear processes for hypothesis generation, ensuring that tests are driven by genuine questions about AI behavior rather than arbitrary changes. Create feedback loops where test results inform future hypotheses, building institutional knowledge about what works and what doesn’t in your specific context. Celebrate both successful tests that validate improvements and well-designed tests that disprove hypotheses, recognizing that negative results provide valuable information. Implement governance structures that prevent high-risk changes from reaching production without proper testing, while also removing bureaucratic barriers that slow down the testing process. Track testing velocity and impact metrics—how many experiments you run, how quickly you can iterate, and the cumulative impact of improvements—to demonstrate the business value of your testing infrastructure. Organizations that successfully build testing cultures achieve compounding improvements over time, where each iteration builds on previous learnings to drive increasingly sophisticated AI systems.

A/B testing compares variations at the individual user level, while GEO experiments assign entire geographic regions to each variant. GEO experiments are better suited to privacy-first measurement and regional campaigns, because they reduce user spillover between groups and reflect more realistic real-world conditions.

Minimum 2 weeks, typically 4-6 weeks. Duration depends on traffic volume, conversion rates, and desired statistical power. Account for complete business cycles to capture temporal patterns and avoid seasonal bias.
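As a rough illustration of how traffic volume translates into duration, the sketch below converts a required per-variant sample into a number of days, applies the two-week minimum, and rounds up to whole weeks so that complete weekly cycles are covered. The function name `estimated_test_days` and its parameters are assumptions for this example, and the required sample size has to come from a separate power calculation.

```python
import math


def estimated_test_days(required_per_group, daily_eligible_users, groups=2, min_days=14):
    """Estimate test length in days, rounded up to complete weeks."""
    # Days needed to collect the full sample across all variants.
    raw_days = math.ceil(required_per_group * groups / daily_eligible_users)
    # Never run shorter than the minimum, even with abundant traffic.
    days = max(raw_days, min_days)
    # Round up to whole weeks so every weekday is represented equally.
    return math.ceil(days / 7) * 7


if __name__ == "__main__":
    print(estimated_test_days(required_per_group=20_000, daily_eligible_users=1_000))
```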

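A result is conventionally called statistically significant when the p-value is less than 0.05. This means that, if there were no real difference between the variants, a difference at least as large as the one observed would occur less than 5% of the time by chance alone. It does not mean there is a 5% chance that the result is wrong. This threshold helps distinguish real effects from noise in your data.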

Yes. Testing content structure, entity consistency, schema markup, and summary formats directly impacts how AI systems understand and cite your content. Structured, clear content helps AI models extract and reference your information more accurately.

Track AI Overview appearances, citation accuracy, entity recognition, organic traffic, conversions, and user engagement metrics alongside traditional KPIs. These leading indicators show whether AI systems understand and trust your content.

AmICited monitors how AI systems reference your brand across ChatGPT, Perplexity, and Google AI Overviews, providing data to inform testing strategies. This visibility data helps you understand what's working and what needs improvement.

Traditional A/B testing compares static variants over a fixed period. Reinforcement learning continuously adapts decisions in real-time based on individual user behavior, enabling ongoing optimization rather than one-time comparisons.

Run tests long enough, change one variable at a time, respect statistical significance thresholds, account for seasonality, and avoid peeking at results mid-test. Proper experimental discipline prevents false conclusions and wasted resources.
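The cost of peeking can be demonstrated with simulated A/A tests, where both variants are identical and any "significant" result is a false positive. The sketch below is illustrative only: the function names, batch sizes, and the 10% base rate are arbitrary assumptions, and it compares stopping at the first significant look against evaluating once at the end.

```python
import math
import random


def p_value(success_a, n_a, success_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (success_b / n_b - success_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(experiments=300, looks=10, batch=100,
                         rate=0.1, alpha=0.05, seed=0):
    """Return (rate when peeking at every look, rate when testing once at the end)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _ in range(experiments):
        a = b = n = 0
        significant_at_any_look = False
        p = 1.0
        for _look in range(looks):
            # Both arms share the same true rate, so there is no real effect.
            a += sum(rng.random() < rate for _ in range(batch))
            b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            p = p_value(a, n, b, n)
            if p < alpha:
                significant_at_any_look = True
        peeking_hits += significant_at_any_look
        final_hits += p < alpha
    return peeking_hits / experiments, final_hits / experiments


if __name__ == "__main__":
    peeking, final = false_positive_rates()
    print(f"peeking: {peeking:.2%}, single final look: {final:.2%}")
```

The peeking rate can never be lower than the single-look rate, because the final look is one of the looks; in practice it is usually several times higher.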
