Generative Engine Optimization (GEO)

Explore landmark academic research on Generative Engine Optimization (GEO), including the Aggarwal et al. KDD study, GEO-bench benchmark, and practical implications for AI search visibility.

The rise of generative AI-powered search engines has disrupted digital marketing and prompted academic researchers to develop new frameworks for understanding and optimizing content visibility. Generative Engine Optimization (GEO) emerged as a formal research topic in 2024 with the publication of “GEO: Generative Engine Optimization” by Pranjal Aggarwal, Vishvak Murahari, and colleagues from Princeton University and the Indian Institute of Technology Delhi, presented at the KDD (Knowledge Discovery and Data Mining) conference. The paper defined GEO as a black-box optimization framework that helps content creators improve their visibility in AI-generated search responses, a gap left open by traditional SEO methodologies. Where traditional Search Engine Optimization focuses on keyword rankings and click-through rates on search engine results pages (SERPs), GEO starts from the observation that generative engines synthesize information from multiple sources into coherent, citation-backed answers, which changes how visibility is achieved and measured. Traditional SEO techniques (keyword optimization, link building, and technical SEO) remain foundational but are insufficient on their own in an AI-driven search environment, where content must be discoverable, citable, and trustworthy enough to be included in synthesized responses.

The Aggarwal et al. research introduced a suite of visibility metrics designed for generative engines, moving beyond ranking-based measures to capture how much of an AI-generated response a source actually shapes. The study identified two primary impression metrics: Position-Adjusted Word Count, which measures the normalized word count of sentences citing a source while accounting for where the citation appears in the response, and Subjective Impression, which evaluates seven dimensions including relevance, influence, uniqueness, and user engagement probability. Evaluating on their newly created GEO-bench benchmark, the researchers tested nine optimization methods and found that the most effective strategies could boost source visibility by roughly 40% on Position-Adjusted Word Count and 28% on Subjective Impression. Methods emphasizing credibility and evidence, particularly Quotation Addition (41% improvement), Statistics Addition (38% improvement), and Cite Sources (35% improvement), significantly outperformed traditional SEO tactics like keyword stuffing, which decreased Position-Adjusted Word Count by 8%. The study also found that GEO effectiveness varies substantially across domains, with certain methods proving more effective for specific query types and content categories, so domain-specific strategies are preferable to one-size-fits-all approaches.

| GEO Method | Position-Adjusted Word Count Improvement | Subjective Impression Improvement | Best For |
|---|---|---|---|
| Quotation Addition | 41% | 28% | Historical, narrative, and people-focused content |
| Statistics Addition | 38% | 24% | Law, government, opinion, and data-driven topics |
| Cite Sources | 35% | 22% | Factual queries and credibility-dependent topics |
| Fluency Optimization | 26% | 21% | General readability and user experience |
| Technical Terms | 22% | 21% | Specialized and technical domains |
| Authoritative Tone | 21% | 23% | Debate and historical content |
| Easy-to-Understand | 20% | 20% | Broad audience accessibility |
| Unique Words | 5% | 5% | Limited effectiveness across domains |
| Keyword Stuffing | -8% | 1% | Counterproductive for AI engines |
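
To make the Position-Adjusted Word Count idea more concrete, here is a minimal Python sketch. It is not the paper's reference implementation: the exponential position decay, the choice to give each cited source full credit for a sentence, and the function names `position_adjusted_word_count` and `relative_improvement` are all assumptions made for illustration. The sample response is invented.

```python
import math


def position_adjusted_word_count(sentences, source_id):
    """Score one source's share of a generated response.

    sentences: list of (text, cited_source_ids) tuples in response order.
    Assumption: words in a sentence count fully toward every source it
    cites, weighted by exp(-position / sentence_count) so that earlier
    citations count more. The paper's exact weighting may differ.
    """
    n = len(sentences)
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return 0.0
    score = 0.0
    for pos, (text, cited) in enumerate(sentences):
        if source_id in cited:
            score += len(text.split()) * math.exp(-pos / n)
    return score / total_words


def relative_improvement(before, after):
    """Percentage change of a visibility score after optimization."""
    if before == 0:
        raise ValueError("baseline score must be non-zero")
    return (after - before) / before * 100.0


# Invented example: three response sentences citing sources 1, 2, and 3.
response = [
    ("Solar power capacity grew quickly last year", {1}),
    ("Costs also fell", {2}),
    ("Analysts expect the trend to continue", {1, 3}),
]
print(position_adjusted_word_count(response, 1))
print(position_adjusted_word_count(response, 2))
```

Under these assumptions, a source cited in long sentences near the top of the answer scores higher than one cited briefly or late, which is the behavior the metric is meant to reward. The percentages in the table above are relative improvements of this kind, comparing a source's score before and after a method is applied.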

To enable rigorous academic evaluation of GEO methods, the research team introduced GEO-bench, the first large-scale benchmark specifically designed for generative engines, consisting of 10,000 diverse queries carefully curated from nine different data sources and tagged across seven distinct categories. This comprehensive benchmark addresses a critical gap in the research landscape, as no standardized evaluation framework existed for testing optimization strategies against generative engines before this work. The benchmark includes queries from multiple domains and represents diverse user intents—80% informational, 10% transactional, and 10% navigational—reflecting real-world search behavior patterns. Each query in GEO-bench is augmented with cleaned text content from the top five Google search results, providing relevant sources for answer generation and ensuring that evaluation reflects realistic information retrieval scenarios.

The nine datasets integrated into GEO-bench include:

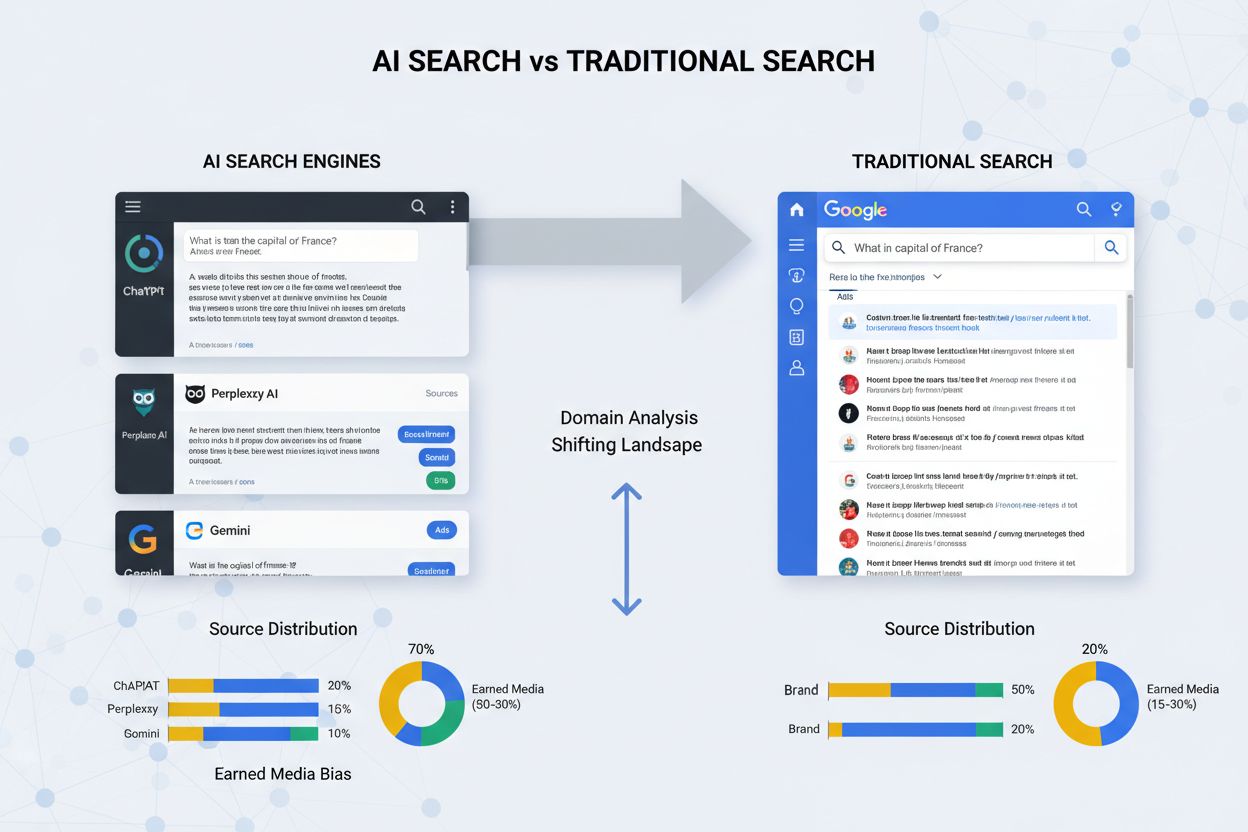

Beyond GEO-specific optimization, academic research has revealed fundamental differences in how AI search engines source information compared to traditional search engines like Google. A comprehensive comparative study by Chen et al. analyzing ChatGPT, Perplexity, Gemini, and Claude against Google across multiple verticals uncovered a systematic and overwhelming bias toward Earned media in AI engines, with earned sources comprising 60-95% of citations depending on the engine and query type. This contrasts sharply with Google’s more balanced approach, which maintains substantial Brand (25-40%) and Social (10-20%) content alongside Earned sources. The research demonstrated that domain overlap between AI engines and Google is remarkably low, ranging from just 15-50% depending on the vertical, indicating that AI systems are fundamentally synthesizing answers from different information ecosystems than traditional search engines. Notably, AI engines almost entirely exclude Social platforms like Reddit and Quora from their responses, whereas Google frequently incorporates user-generated content and community discussions. This finding has profound implications for content strategy, as it means that achieving visibility on Google does not automatically translate to visibility in AI-generated answers, requiring distinct optimization approaches for each search paradigm.
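
The two quantities discussed above, the media-type mix of citations and the domain overlap between an AI engine and Google, can be approximated for your own queries with a short script. The sketch below assumes you already have the cited URLs from each system and a hand-built mapping from domains to Brand, Earned, or Social; the mapping, the example URLs, and the use of Jaccard similarity as the overlap measure are illustrative assumptions, not the study's exact method.

```python
from collections import Counter
from urllib.parse import urlparse


def domain(url):
    """Return the host of a URL, lowercased and without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def domain_overlap(urls_a, urls_b):
    """Jaccard overlap of the domain sets behind two URL lists (assumed measure)."""
    a = {domain(u) for u in urls_a}
    b = {domain(u) for u in urls_b}
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def media_mix(urls, labels):
    """Share of citations per media type; unknown domains become 'unlabeled'."""
    counts = Counter(labels.get(domain(u), "unlabeled") for u in urls)
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()} if total else {}


# Hypothetical inputs: domains and labels are placeholders.
labels = {"example-news.com": "earned", "example-brand.com": "brand"}
ai_urls = [
    "https://www.example-news.com/review",
    "https://example-brand.com/product",
    "https://example-news.com/roundup",
]
google_urls = ["https://example-brand.com/", "https://forum.example.org/thread"]

print(domain_overlap(ai_urls, google_urls))
print(media_mix(ai_urls, labels))
```

Because the Brand, Earned, and Social labels are a judgment call, the resulting shares are only as reliable as the mapping you supply.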

Academic research indicates that GEO effectiveness is not uniform across domains, so content creators need to tailor their optimization strategies to their industry and query types. The Aggarwal et al. study identified clear patterns in which optimization methods perform best for different content categories: Quotation Addition proves most effective for People & Society, Explanation, and History domains where narrative and direct quotes add authenticity; Statistics Addition dominates in Law & Government, Debate, and Opinion categories where data-driven evidence strengthens arguments; and Cite Sources excels in Statement, Facts, and Law & Government queries where credibility verification is paramount. Research also suggests that informational queries (exploratory, knowledge-seeking) respond differently to optimization than transactional queries (purchase-intent): informational content benefits more from comprehensive coverage and authority signals, while transactional content requires clear product information, pricing, and comparison data. Effectiveness also varies with whether content targets well-known brands or niche players, with niche brands requiring more aggressive earned media strategies and authority-building to overcome the “big brand bias” observed in AI engines. Successful GEO therefore depends on understanding your vertical’s information ecosystem and user intent patterns rather than applying generic optimization tactics across all content types.

Academic research on language sensitivity reveals that different AI engines handle multilingual queries with dramatically different approaches, requiring brands pursuing global visibility to develop language-specific strategies rather than relying on simple content translation. The Chen et al. study found that Claude maintains remarkably high cross-language domain stability, reusing the same authoritative English-language sources across Chinese, Japanese, German, French, and Spanish queries, suggesting that building authority in top-tier English-language publications can transfer visibility across languages on Claude-based systems. In stark contrast, GPT exhibits near-zero cross-language domain overlap, effectively swapping its entire source ecosystem when processing queries in different languages, meaning that visibility in English queries provides no advantage for non-English searches and requires building separate authority in local-language media. Perplexity and Gemini occupy middle ground, showing moderate cross-language stability with some reuse of authority domains but also significant localization toward target-language sources. The research also demonstrates that website language selection varies by engine, with GPT and Perplexity heavily favoring target-language content when responding to non-English queries, while Claude maintains an English-heavy approach even for non-English prompts. These findings have critical implications for multinational brands: success in non-English markets requires not just translating content but actively building earned media coverage and authority signals within each target language’s information ecosystem, with the specific strategy depending on which AI engines are most important for your business.

The academic research on GEO consistently emphasizes that authority and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) are foundational to AI search visibility, with AI engines exhibiting a systematic preference for sources perceived as authoritative and trustworthy. The overwhelming bias toward Earned media documented across multiple studies reflects AI engines’ reliance on third-party validation as a proxy for authority—content that has been independently reviewed, cited, and endorsed by reputable publications signals to AI systems that the source is credible and worthy of inclusion in synthesized answers. Research demonstrates that backlinks from high-authority domains function as critical authority signals for AI engines, similar to their role in traditional SEO but with even greater importance, as AI systems use link profiles to assess whether a source should be trusted as a citation in responses. The studies reveal that author credentials, institutional affiliation, and demonstrated expertise significantly influence AI engines’ willingness to cite a source, making it essential for content creators to clearly establish their qualifications and knowledge within their domain. Importantly, the research shows that E-E-A-T signals must be earned rather than claimed—simply stating expertise on your own website has minimal impact compared to having that expertise validated through third-party coverage, expert endorsements, and citations from authoritative sources. This finding fundamentally shifts the optimization focus from on-page signals to off-page authority building, making earned media relations and strategic partnerships critical components of any GEO strategy.

The academic research on GEO translates into several actionable strategies for content creators seeking to improve their visibility in AI-generated answers. First, content must be structured for machine readability using schema markup and clear hierarchical organization, as AI engines need to easily parse and extract information from your pages; this means implementing detailed schema.org markup for products, articles, reviews, and other entities, using clear heading hierarchies, and organizing information in scannable formats like tables and bullet points. Second, content should be engineered for justification, meaning it must explicitly answer comparison questions and provide clear reasons why a source is superior—this requires creating detailed comparison tables against competitors, bulleted pros and cons lists, and bold statements of unique value propositions that AI systems can easily extract as justification attributes. Third, earned media building must become a core strategic priority, shifting resources from owned content creation toward public relations, media outreach, and expert collaborations designed to secure features and citations in authoritative publications that AI engines favor. Fourth, visibility metrics must evolve beyond traditional KPIs, with brands tracking new measures like AI citations, mentions in AI-generated answers, and visibility across multiple generative engines rather than relying solely on click-through rates and search rankings. Finally, domain-specific optimization strategies should replace one-size-fits-all approaches, with content creators researching which GEO methods work best for their specific vertical and tailoring their optimization accordingly based on academic findings about domain-specific effectiveness.
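
As one illustration of the machine-readability point above, the following Python sketch builds a schema.org `Article` description and wraps it in a JSON-LD script tag for embedding in a page. The helper name `article_jsonld` and the field values are hypothetical; `headline`, `author`, `datePublished`, and `url` are standard schema.org properties, but which properties a given AI engine actually reads is not something the research summarized here specifies.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def article_jsonld(headline, author_name, date_published, url):
    """Return a JSON-LD script tag describing an article (illustrative helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date string
        "url": url,
    }
    return SCRIPT_OPEN + json.dumps(data, indent=2) + SCRIPT_CLOSE


# Placeholder values for demonstration only.
print(article_jsonld("What is GEO?", "Jane Doe", "2024-08-25",
                     "https://example.com/geo"))
```

The same pattern extends to other entity types mentioned above, such as products and reviews, by changing `@type` and the properties supplied.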

While the academic research on GEO provides valuable insights, researchers acknowledge important limitations that should inform how these findings are applied. The findings reflect AI engine behaviors at a specific point in time; as these systems evolve, algorithms change, and competitive dynamics shift, the specific quantitative results may become outdated, so GEO effectiveness needs periodic re-evaluation and continuous monitoring. The black-box nature of AI engines presents a fundamental research challenge: academics cannot access internal ranking models, training data, or algorithmic details, so research can describe what is happening (which sources are cited) but the mechanisms behind these choices remain inferred rather than proven. The classification systems used in research (Brand, Earned, Social) are constructed frameworks that, while logical, involve subjective judgments about domain categorization and could yield different results under alternative classification schemes. Additionally, research has primarily focused on English-language queries and Western markets, with limited investigation of how GEO principles apply in non-English contexts or emerging markets where information ecosystems differ significantly. Future research directions identified by academics include developing more sophisticated visibility metrics that capture nuanced aspects of AI citations, investigating how GEO strategies interact with emerging AI capabilities like multimodal search and conversational agents, and conducting longitudinal studies to track how GEO effectiveness evolves as AI engines mature and user behaviors adapt.

As generative AI continues to reshape information discovery, academic research on GEO is expanding to address emerging challenges and opportunities in this rapidly evolving landscape. Multimodal search—where AI engines synthesize information from text, images, video, and other media types—represents a frontier for GEO research, requiring new optimization strategies beyond text-based content optimization. Conversational and agentic AI systems that can take actions on behalf of users (making purchases, booking reservations, executing transactions) will necessitate new GEO approaches focused on making content actionable and machine-executable, not just citable. The academic community is increasingly recognizing the need for principled GEO methodologies and managed services that go beyond isolated tactics to provide comprehensive, continuous optimization strategies across multiple AI engines simultaneously. Research is also exploring how GEO strategies should adapt as AI engines mature and consolidate, with early findings suggesting that as the market stabilizes around a smaller number of dominant platforms, optimization strategies may become more standardized while remaining distinct from traditional SEO. Finally, academics are investigating the broader implications of GEO for the creator economy and digital publishing, examining how the shift toward AI-synthesized answers affects traffic distribution, revenue models, and the viability of smaller publishers and content creators in an AI-dominated search landscape. These emerging research directions suggest that GEO will continue to evolve as a field, with academic research playing a critical role in helping content creators, brands, and publishers navigate the fundamental transformation of how information is discovered and consumed in the age of generative AI.

Generative Engine Optimization (GEO) is a framework for optimizing content visibility in AI-generated search answers, rather than traditional ranked search results. Unlike SEO which focuses on keyword rankings and click-through rates, GEO emphasizes being cited as a source within synthesized AI responses, requiring different strategies around authority, content structure, and earned media.

The 2024 KDD paper by Aggarwal et al. from Princeton University and IIT Delhi introduced the first comprehensive framework for GEO, including visibility metrics, optimization methods, and the GEO-bench benchmark. This landmark study demonstrated that content visibility in generative engines can be improved by up to 40% through targeted optimization strategies, establishing GEO as a legitimate academic field.

GEO-bench is the first large-scale benchmark for evaluating generative engine optimization, consisting of 10,000 diverse queries drawn from nine data sources, spanning 25 domains, and tagged across seven categories. It provides a standardized evaluation framework for testing GEO methods and comparing their effectiveness across different query types, domains, and AI engines, enabling rigorous academic research and practical optimization strategies.

Academic research shows that the most effective GEO methods are Quotation Addition (41% improvement), Statistics Addition (38% improvement), and Cite Sources (35% improvement). These methods work by adding credible citations, relevant statistics, and quotes from authoritative sources, which AI engines heavily favor when synthesizing answers.

Research reveals that AI search engines like ChatGPT and Claude exhibit a strong bias toward Earned media (60-95%), while Google maintains a more balanced mix of Brand, Earned, and Social sources. AI engines consistently deprioritize user-generated content and social platforms, instead favoring third-party reviews, editorial outlets, and authoritative publications.

Authority and E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) are foundational to GEO success. Academic research demonstrates that AI engines prioritize content from sources perceived as authoritative, making earned media coverage, backlinks from reputable domains, and demonstrated expertise critical factors in achieving visibility in AI-generated answers.

Research shows that different AI engines handle multilingual queries differently. Claude maintains high cross-language stability and reuses English-language authority domains, while GPT heavily localizes and sources from target-language ecosystems. This requires brands to develop language-specific authority strategies rather than relying on simple content translation.

Academic GEO research indicates that content creators should focus on building earned media coverage, structuring content for machine readability with schema markup, creating justification-rich content with clear comparisons and value propositions, and tracking new metrics like AI citations and visibility rather than traditional click-through rates.
