Is There an AI Search Index? How AI Engines Index Content

Learn how AI search indexes work, the differences between ChatGPT, Perplexity, and SearchGPT indexing methods, and how to optimize your content for AI search vi...

Discover the fundamental differences between AI indexing and Google indexing. Learn how LLMs, vector embeddings, and semantic search are reshaping information retrieval and what it means for your content visibility.

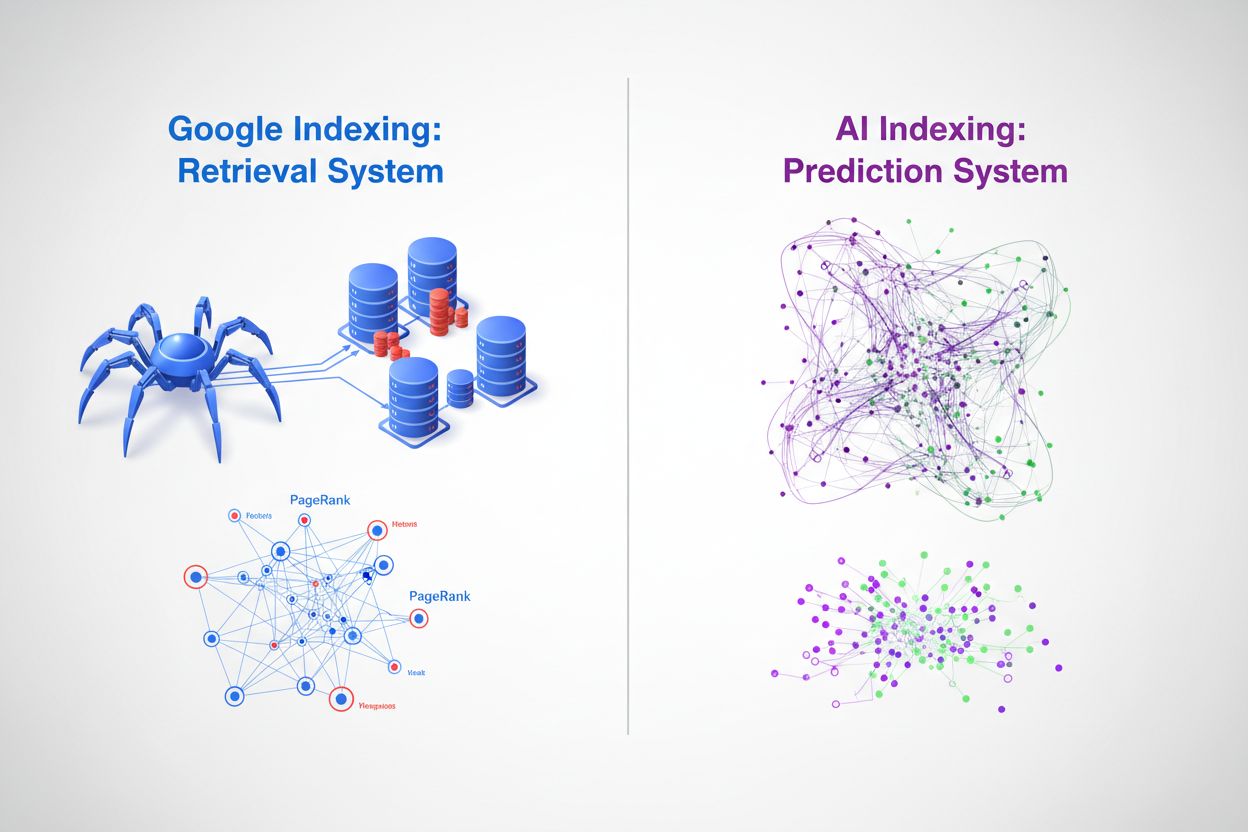

At their core, Google indexing and AI indexing represent fundamentally different approaches to organizing and retrieving information. Google’s traditional search engine operates as a retrieval system—it crawls the web, catalogs content, and returns ranked links when users query specific keywords. In contrast, AI indexing through large language models (LLMs) like ChatGPT, Gemini, and Copilot functions as a prediction system—it encodes vast amounts of training data into neural networks and generates contextually relevant answers directly. While Google asks “where is this information?” AI asks “what is the most relevant response?” This distinction fundamentally changes how content is discovered, ranked, and presented to users, creating two parallel but increasingly interconnected information ecosystems.

Google’s indexing process follows a well-established pipeline that has dominated search for over two decades. Googlebot crawlers systematically traverse the web, following links from page to page and collecting content, which is then processed through Google’s indexing infrastructure. The system extracts key signals including keywords, metadata, and link structure, storing this information in massive distributed databases. Google’s proprietary PageRank algorithm evaluates the importance of pages based on the quantity and quality of links pointing to them, operating under the principle that important pages receive more links from other important pages. Keyword matching remains central to relevance determination—when a user enters a query, Google’s system identifies pages containing those exact or semantically similar terms and ranks them based on hundreds of ranking factors including domain authority, content freshness, user experience signals, and topical relevance. This approach excels at finding specific information quickly and has proven remarkably effective for navigational and transactional queries, which explains Google’s 89.56% search market dominance and processing of 8.5-13.7 billion queries daily.

| Aspect | Google Indexing | Details |

|---|---|---|

| Primary Mechanism | Web Crawling & Indexing | Googlebot systematically traverses web pages |

| Ranking Algorithm | PageRank + 200+ Factors | Links, keywords, freshness, user experience |

| Data Representation | Keywords & Links | Text tokens and hyperlink relationships |

| Update Frequency | Continuous Crawling | Real-time indexing of new/updated content |

| Query Processing | Keyword Matching | Exact and semantic keyword matching |

| Market Share | 89.56% Global | 8.5-13.7 billion queries daily |

AI models employ a fundamentally different indexing mechanism centered on vector embeddings and semantic understanding rather than keyword matching. During training, LLMs process billions of tokens of text data, learning to represent concepts, relationships, and meanings as high-dimensional vectors in a process called embedding generation. These embeddings capture semantic relationships—for example, “king” minus “man” plus “woman” approximates “queen”—enabling the model to understand context and intent rather than just matching character strings. The indexing process in AI systems involves several key mechanisms:

This approach enables AI systems to understand user intent even when queries use different terminology than source material, and to synthesize information across multiple concepts to generate novel responses. The result is a fundamentally different retrieval paradigm where the “index” is distributed throughout the neural network weights rather than stored in a traditional database.

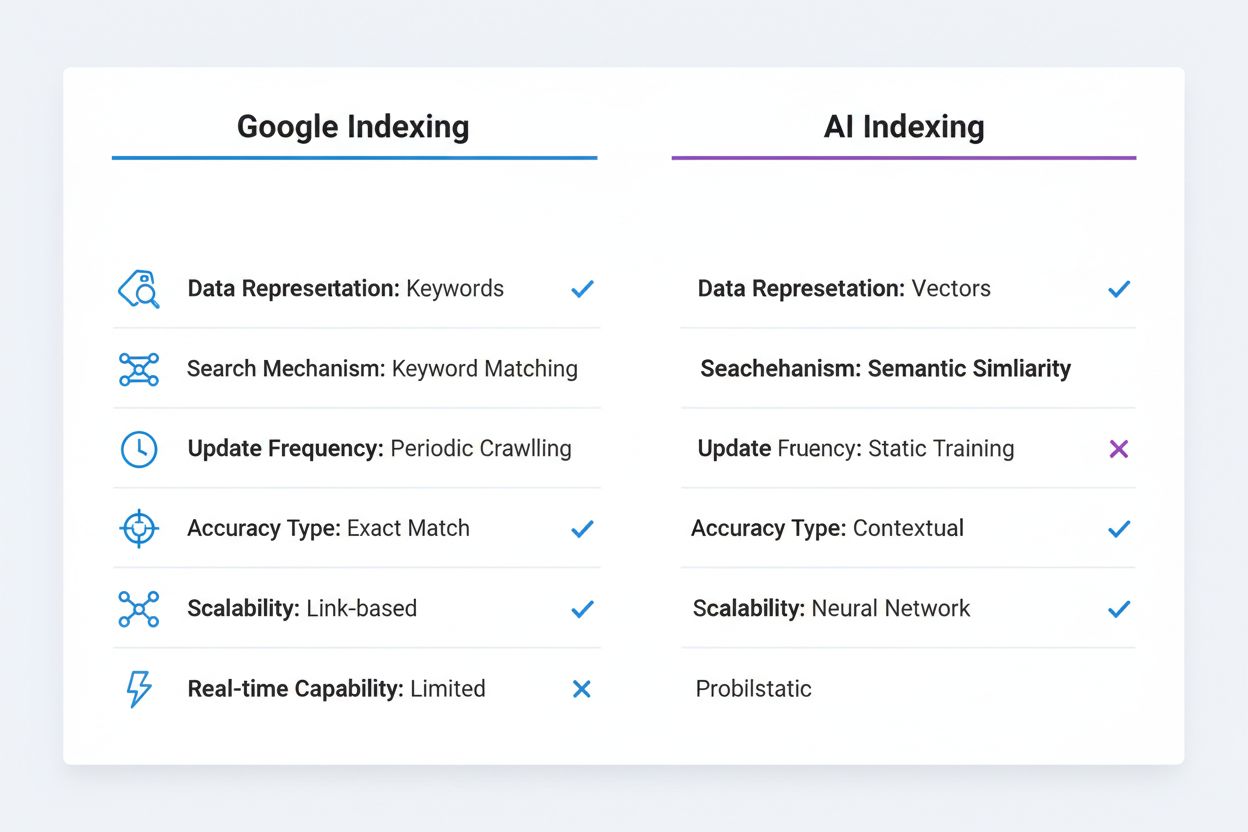

The technical distinctions between Google indexing and AI indexing create profound implications for content discovery and visibility. Exact keyword matching, which remains important in Google’s algorithm, is largely irrelevant in AI systems—an LLM understands that “automobile,” “car,” and “vehicle” are semantically equivalent without requiring explicit keyword optimization. Google’s indexing is deterministic and reproducible; the same query returns the same ranked results across users and time periods (barring personalization). AI indexing is probabilistic and variable; the same query can generate different responses based on temperature settings and sampling parameters, though the underlying knowledge remains consistent. Google’s system excels with structured, discrete information like product prices, business hours, and factual data points, which it can extract and display in rich snippets and knowledge panels. AI systems struggle with this type of precise, current information because their training data has a knowledge cutoff and they cannot reliably access real-time information without external tools. Conversely, AI systems excel at contextual understanding and synthesis, connecting disparate concepts and explaining complex relationships in natural language. Google’s indexing requires explicit linking and citation—content must be published on the web and linked to be discovered. AI indexing operates on implicit knowledge encoded during training, meaning valuable information locked in PDFs, paywalled content, or private databases remains invisible to both systems but for different reasons.

| Comparison Aspect | Google Indexing | AI Indexing |

|---|---|---|

| Data Representation | Keywords & Links | Vector Embeddings |

| Search Mechanism | Keyword Matching | Semantic Similarity |

| Update Frequency | Periodic Crawling | Static Training Data |

| Accuracy Type | Exact Match Focus | Contextual Understanding |

| Scalability Model | Link-based Authority | Neural Network Weights |

| Real-time Capability | Yes (with crawling) | Limited (without RAG) |

The emergence of vector databases represents a crucial bridge between traditional indexing and AI-powered retrieval, enabling organizations to implement semantic search at scale. Vector databases like Pinecone, Weaviate, and Milvus store high-dimensional embeddings and perform similarity search using metrics like cosine similarity and Euclidean distance, allowing systems to find semantically related content even when exact keywords don’t match. This technology powers Retrieval-Augmented Generation (RAG), a technique where AI systems query vector databases to retrieve relevant context before generating responses, dramatically improving accuracy and enabling access to proprietary or current information. RAG systems can retrieve the most semantically similar documents to a user’s query in milliseconds, providing the AI model with grounded information to cite and build upon. Google has integrated semantic understanding into its core algorithm through BERT and subsequent models, moving beyond pure keyword matching toward understanding search intent and content meaning. Vector databases enable real-time retrieval of relevant information, allowing AI systems to access current data, company-specific knowledge bases, and specialized information without retraining. This capability is particularly powerful for enterprise applications where organizations need AI systems to answer questions about proprietary information while maintaining accuracy and providing verifiable citations.

The rise of AI indexing is fundamentally reshaping how content achieves visibility and drives traffic. The zero-click search phenomenon—where Google answers queries directly in search results without users clicking through to source websites—has accelerated dramatically with AI integration, and AI chatbots take this further by generating answers without any visible attribution. Traditional click-through traffic is being replaced by AI citations, where content creators receive visibility through mentions in AI-generated responses rather than user clicks. This shift has profound implications: a brand mentioned in a ChatGPT response reaches millions of users but generates no direct traffic and provides no analytics data about engagement. Brand authority and topical expertise become increasingly important as AI systems are trained to cite authoritative sources and recognize domain expertise, making it critical for organizations to establish clear authority signals across their content. Structured data markup becomes more valuable in this environment, as it helps both Google and AI systems understand content context and credibility. The visibility game is no longer purely about ranking for keywords—it’s about being recognized as an authoritative source worthy of citation by AI systems that process billions of documents and must distinguish reliable information from misinformation.

Rather than AI indexing replacing Google indexing, the future appears to be one of convergence and coexistence. Google has already begun integrating AI capabilities directly into search through its AI Overview feature (formerly SGE), which generates AI-powered summaries alongside traditional search results, effectively creating a hybrid system that combines Google’s indexing infrastructure with generative AI capabilities. This approach allows Google to maintain its core strength—comprehensive web indexing and link analysis—while adding AI’s ability to synthesize and contextualize information. Other search engines and AI companies are pursuing similar strategies, with Perplexity combining web search with AI generation, and Microsoft integrating ChatGPT into Bing. The most sophisticated information retrieval systems will likely employ multi-modal indexing strategies that leverage both traditional keyword-based retrieval for precise information and semantic/vector-based retrieval for contextual understanding. Organizations and content creators must prepare for a landscape where content needs to be optimized for multiple discovery mechanisms simultaneously—traditional SEO for Google’s algorithm, structured data for AI systems, and semantic richness for vector-based retrieval.

Content strategists and marketers must now adopt a dual optimization approach that addresses both traditional search and AI indexing mechanisms. This means maintaining strong keyword optimization and link-building strategies for Google while simultaneously ensuring content demonstrates topical authority, semantic depth, and contextual richness that AI systems recognize and cite. Implementing comprehensive structured data markup (Schema.org) becomes essential, as it helps both Google and AI systems understand content context, credibility, and relationships—this is particularly important for E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) that influence both ranking and citation likelihood. Creating in-depth, comprehensive content that thoroughly explores topics becomes more valuable than ever, as AI systems are more likely to cite authoritative, well-researched sources that provide complete context rather than thin, keyword-optimized pages. Organizations should implement citation tracking systems to monitor mentions in AI-generated responses, similar to how they track backlinks, understanding that visibility in AI outputs represents a new form of earned media. Building a knowledge base or content hub that demonstrates clear expertise in specific domains increases the likelihood of being recognized as an authoritative source by AI systems. Finally, the rise of Generative Engine Optimization (GEO) as a discipline means marketers must understand how to structure content, use natural language patterns, and build authority signals that appeal to both algorithmic ranking systems and AI citation mechanisms—a more nuanced and sophisticated approach than traditional SEO alone.

The distinction between AI indexing and Google indexing is not a matter of one replacing the other, but rather a fundamental expansion of how information is organized, retrieved, and presented to users. Google’s retrieval-based approach remains powerful for finding specific information quickly, while AI’s prediction-based approach excels at synthesis, context, and understanding user intent. The most successful organizations will be those that recognize this duality and optimize their content and digital presence for both systems simultaneously. By understanding the technical differences between these indexing approaches, implementing structured data, building topical authority, and tracking visibility across both traditional search and AI platforms, organizations can ensure their content remains discoverable and valuable in an increasingly complex information landscape. The future of search is not singular—it’s plural, distributed, and increasingly intelligent.

Google indexing is a retrieval system that crawls the web, catalogs content, and returns ranked links based on keywords and links. AI indexing is a prediction system that encodes training data into neural networks and generates contextually relevant answers directly. Google asks 'where is this information?' while AI asks 'what is the most relevant response?'

Vector embeddings convert text and other data into high-dimensional numerical arrays that capture semantic meaning. These embeddings enable AI systems to understand that 'car,' 'automobile,' and 'vehicle' are semantically equivalent without explicit keyword matching. Similar concepts are represented as vectors close together in high-dimensional space.

Traditional AI models have a knowledge cutoff and cannot reliably access real-time information. However, Retrieval-Augmented Generation (RAG) systems can query vector databases and web sources to retrieve current information before generating responses, bridging this gap.

GEO is an emerging discipline focused on optimizing content for AI-generated answers rather than traditional search rankings. It emphasizes topical authority, structured data, semantic depth, and brand credibility to increase the likelihood of being cited by AI systems.

Keyword search matches exact or similar words in documents. Semantic search understands the meaning and intent behind queries, enabling it to find relevant results even when different terminology is used. For example, a semantic search for 'smartphone' might also return results for 'mobile device' or 'cellular phone.'

Rather than replacement, the future appears to be convergence. Google is integrating AI capabilities into its search through features like AI Overviews, creating hybrid systems that combine traditional indexing with generative AI. Organizations need to optimize for both systems simultaneously.

A vector database stores high-dimensional embeddings and performs similarity searches using metrics like cosine similarity. It's crucial for implementing semantic search and Retrieval-Augmented Generation (RAG), enabling AI systems to access and retrieve relevant information at scale in milliseconds.

Marketers should adopt a dual optimization approach: maintain traditional SEO for Google while building topical authority, implementing structured data, creating comprehensive content, and tracking AI citations. Focus on demonstrating expertise and credibility to be recognized as an authoritative source by AI systems.

Track how your brand appears in AI-generated answers across ChatGPT, Gemini, Perplexity, and Google AI Overviews. Get real-time insights into your AI citations and visibility.

Learn how AI search indexes work, the differences between ChatGPT, Perplexity, and SearchGPT indexing methods, and how to optimize your content for AI search vi...

Learn how AI engines like ChatGPT, Perplexity, and Gemini index and process web content using advanced crawlers, NLP, and machine learning to train language mod...

Learn how AI search indexing converts data into searchable vectors, enabling AI systems like ChatGPT and Perplexity to retrieve and cite relevant information fr...