Understanding Your Current AI Visibility: A Self-Assessment Guide

Learn how to conduct a baseline AI visibility audit to understand how ChatGPT, Google AI, and Perplexity mention your brand. Step-by-step assessment guide for b...

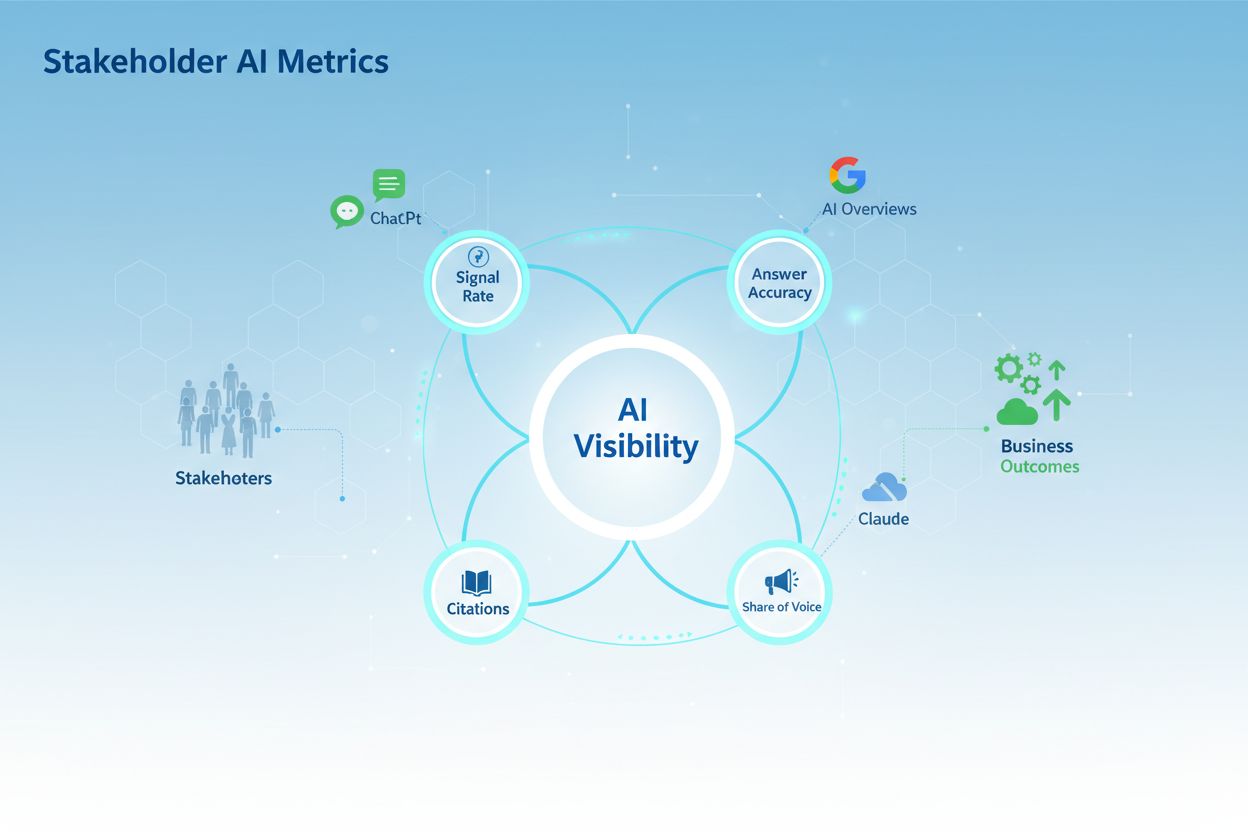

Discover the 4 essential AI visibility metrics stakeholders care about: Signal Rate, Accuracy, Citations, and Share of Voice. Learn how to measure and report AI brand visibility.

The emergence of generative AI platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude has fundamentally shifted how stakeholders evaluate brand visibility and market presence. Unlike traditional SEO metrics that measure search engine rankings and organic traffic, AI visibility metrics capture whether your brand appears in AI-generated answers—a critical distinction that directly impacts customer discovery and brand authority. Stakeholders increasingly recognize that AI systems now mediate information discovery for millions of users daily, making visibility in these systems as important as traditional search rankings. The challenge is that 90% of ChatGPT citations originate from rank 21 and beyond in traditional search results, meaning brands invisible in AI answers may still be losing market share despite strong SEO performance. Understanding and optimizing AI visibility metrics has become essential for C-suite executives, marketing directors, and business leaders who need to ensure their organizations remain discoverable and trusted in the AI-driven information landscape.

Organizations serious about AI visibility must track four interconnected metrics that provide a complete picture of brand presence, accuracy, authority, and competitive positioning. These metrics work together to answer critical business questions: Are we appearing in AI answers? Are we being cited accurately? Are we positioned as a trusted authority? And how do we compare to competitors? The following table outlines each metric, its definition, stakeholder value, and practical application:

| Metric Name | Definition | Stakeholder Value | Example |
|---|---|---|---|
| AI Signal Rate | Percentage of AI prompts that mention your brand or content | Baseline visibility; market penetration | 45% of financial planning queries mention your advisory firm |
| Answer Accuracy Rate | Percentage of AI mentions that correctly represent your brand, products, or services | Brand protection; reputation management | 92% of mentions accurately describe your software’s features |
| Citation Share | Percentage of all citations in AI answers attributed to your content | Authority and trust signals | Your content cited in 28% of investment strategy answers |
| Share of Voice | Your brand mentions divided by the combined mentions of your brand and all competitors in AI answers | Competitive positioning; market dominance | 35% SOV vs. competitor average of 18% |

These four metrics form the foundation of stakeholder-focused AI visibility reporting, enabling organizations to measure progress, identify risks, and justify investments in AI optimization strategies.

AI Signal Rate represents the most fundamental metric for understanding whether your brand or content appears in AI-generated answers across major platforms. Calculated as the ratio of mentions to total prompts tested (Mentions ÷ Total Prompts × 100), this metric reveals what percentage of relevant queries result in your brand being mentioned by AI systems. Industry benchmarks show that market leaders typically achieve AI Signal Rates of 60-80% for their core topic areas, while new brands and emerging companies often start at 5-10%, indicating significant room for growth and optimization. The metric varies significantly across platforms—ChatGPT, Perplexity, Google AI Overviews, and Claude each have different training data, recency windows, and citation patterns, requiring organizations to monitor visibility across all major systems. AI Signal Rate directly correlates with business impact because higher visibility increases the likelihood that potential customers encounter your brand during their research phase, ultimately influencing purchase decisions and market perception. Stakeholders view this metric as the entry point for AI visibility strategy, as it answers the fundamental question: “Are we even in the conversation?”
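The Signal Rate formula can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `signal_rate` and the sample results are hypothetical, and it assumes each tested prompt has already been reduced to a single mentioned/not-mentioned flag per platform.

```python
def signal_rate(mentioned_flags):
    """AI Signal Rate as a percentage: Mentions / Total Prompts * 100."""
    total = len(mentioned_flags)
    if total == 0:
        raise ValueError("need at least one tested prompt")
    mentions = sum(1 for flag in mentioned_flags if flag)
    return 100 * mentions / total


# Hypothetical test results: one boolean per prompt, grouped by platform.
results_by_platform = {
    "ChatGPT": [True, False, True, True, False],
    "Perplexity": [True, False, False, False, False],
}

# Computing the rate per platform keeps platform differences visible.
rates = {name: signal_rate(flags) for name, flags in results_by_platform.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.0f}%")
```

Reporting one rate per platform, rather than a single blended number, reflects the point above that visibility differs from system to system.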

While a high AI Signal Rate demonstrates visibility, Answer Accuracy Rate protects the most valuable asset: brand reputation. Accuracy matters more than visibility because inaccurate AI representations can damage customer trust, create legal liability, and undermine marketing investments; a brand described incorrectly in 100 AI answers can be worse off than one that is not mentioned at all. This metric measures the percentage of AI mentions that correctly represent your brand, products, services, pricing, capabilities, or key differentiators against a “ground truth” document that defines accurate brand representation. Scoring typically uses a 0-2 scale: 0 points for completely inaccurate information, 1 point for partially accurate or incomplete information, and 2 points for fully accurate representations. To express the result as a percentage, divide total points by the maximum possible points (2 × total mentions) and multiply by 100. As one industry expert notes, “Visibility without accuracy is a liability, not an asset. Stakeholders would rather be invisible than misrepresented.” This concern resonates deeply with C-suite executives who understand that brand damage from AI misrepresentation can take months or years to repair, making accuracy monitoring a critical risk management function.
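The 0-2 scoring scale can be turned into a percentage as follows. This is a sketch under one stated assumption: the points total is normalized by the maximum possible score (2 points per mention) so that the result reads as a rate between 0 and 100. The function name and the sample scores are hypothetical.

```python
def answer_accuracy_rate(scores):
    """Percent of the maximum possible points earned across scored mentions.

    Each score is 0 (inaccurate), 1 (partially accurate), or 2 (fully accurate).
    Normalizing by 2 points per mention is an assumption of this sketch.
    """
    if not scores:
        raise ValueError("need at least one scored mention")
    if any(score not in (0, 1, 2) for score in scores):
        raise ValueError("scores must be 0, 1, or 2")
    return 100 * sum(scores) / (2 * len(scores))


# Hypothetical scores for ten mentions, each checked against the ground truth document.
scores = [2, 2, 1, 2, 0, 2, 2, 2, 1, 2]
rate = answer_accuracy_rate(scores)
print(f"Answer Accuracy Rate: {rate:.0f}%")
```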

Citation Share represents a more sophisticated visibility metric that distinguishes between casual mentions and authoritative citations—a critical difference in the AI context where source attribution directly influences user trust and decision-making. While AI Signal Rate counts any mention of your brand, Citation Share measures only instances where your content is explicitly cited as a source, indicating that AI systems recognize your organization as a credible authority worthy of attribution. The related metric Top-Source Share narrows this further by tracking citations in the first or second position within AI answers, which receive disproportionate user attention and trust. The research finding that 90% of ChatGPT citations originate from rank 21 and beyond in traditional search results reveals a critical insight: traditional SEO rankings don’t guarantee AI citations, and many high-authority sources are being overlooked by AI systems entirely. Citation Share directly signals authority and trustworthiness to stakeholders because citations represent AI systems validating your content as reliable information, which translates into customer confidence and competitive advantage. Organizations with strong Citation Share metrics can demonstrate to stakeholders that they’re not just visible in AI answers—they’re recognized as authoritative sources that AI systems actively recommend to users.
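One way to compute Citation Share and Top-Source Share from recorded answers is sketched below. The data layout is an assumption: each answer is stored as the ordered list of domains it cites, and `example.com` stands in for your own domain. Top-Source Share is interpreted here as the share of answers that cite you in the first or second position; other definitions are possible.

```python
def citation_metrics(answers, our_domain, top_n=2):
    """Return (citation_share, top_source_share) as percentages.

    answers: list of lists of cited domains, in the order each answer cites them.
    """
    total_citations = sum(len(cited) for cited in answers)
    our_citations = sum(cited.count(our_domain) for cited in answers)
    # An answer counts toward Top-Source Share if we appear in its first top_n sources.
    top_hits = sum(1 for cited in answers if our_domain in cited[:top_n])
    citation_share = 100 * our_citations / total_citations if total_citations else 0.0
    top_source_share = 100 * top_hits / len(answers) if answers else 0.0
    return citation_share, top_source_share


# Hypothetical cited sources for four AI answers.
answers = [
    ["example.com", "rival-a.com", "rival-b.com"],
    ["rival-a.com", "rival-b.com", "example.com"],
    ["rival-b.com", "example.com"],
    ["rival-a.com"],
]
citation_share, top_source_share = citation_metrics(answers, "example.com")
print(f"Citation Share: {citation_share:.1f}%, Top-Source Share: {top_source_share:.1f}%")
```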

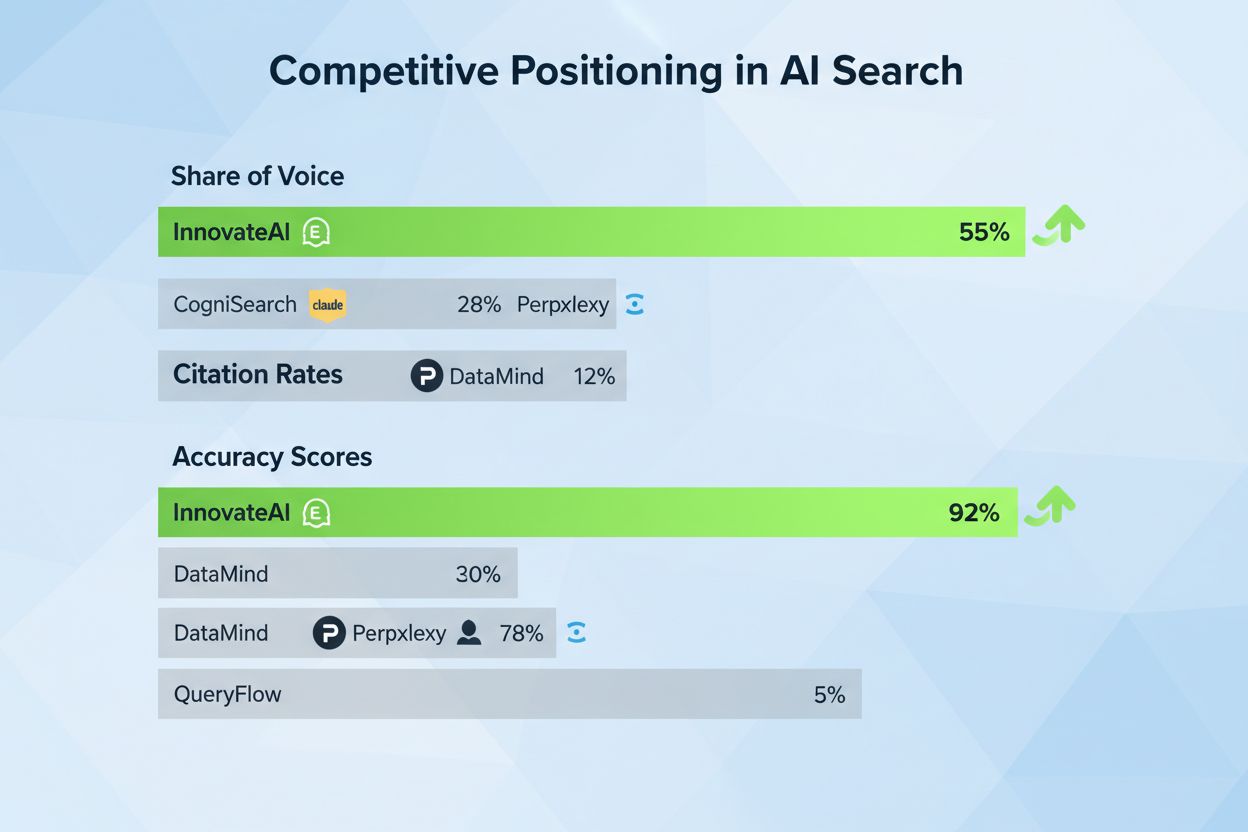

Share of Voice (SOV) in the AI context measures your brand’s mention volume relative to competitors’ combined mention volume, calculated as Your Mentions ÷ (Your Mentions + All Competitors’ Mentions) × 100, providing a direct competitive positioning metric that stakeholders understand intuitively. This metric answers the critical business question: “What percentage of the AI conversation about our market are we capturing compared to competitors?” Share of Voice matters deeply to stakeholders because it reveals whether your organization is gaining or losing market presence in the AI-driven information landscape, with implications for customer acquisition, brand perception, and long-term competitive viability. Beyond simple mention counts, ranking position in AI enumerations—such as appearing first, second, or third in “Top 10” lists generated by AI systems—carries significant weight, as these positions receive disproportionate user attention and influence purchasing decisions. Benchmark comparisons reveal that market leaders typically maintain 30-50% SOV in their primary markets, while competitors cluster around 10-20%, with significant variation based on industry, geography, and topic specificity. The strategic implications are profound: organizations with declining SOV face a competitive threat that may not yet be visible in traditional metrics, while those with rising SOV are capturing mindshare in the channel where customers increasingly discover solutions. Stakeholders use Share of Voice trends to assess whether current marketing and content strategies are effectively positioning the organization against competitors in the AI-mediated discovery process.
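The Share of Voice formula translates directly into code. The sketch below uses hypothetical brand names and mention counts, and assumes mentions have already been tallied per brand across the same prompt set.

```python
def share_of_voice(mentions, brand):
    """SOV = brand mentions / (brand mentions + all competitors' mentions) * 100."""
    total = sum(mentions.values())
    if total == 0:
        raise ValueError("no mentions recorded for any brand")
    return 100 * mentions[brand] / total


# Hypothetical mention counts across one prompt set.
mentions = {"our_brand": 35, "competitor_a": 40, "competitor_b": 25}
sov = share_of_voice(mentions, "our_brand")
print(f"Share of Voice: {sov:.0f}%")
```

Because the denominator includes your own mentions, the SOV values of all tracked brands sum to 100%, which makes period-to-period comparisons straightforward.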

Effective stakeholder reporting requires a comprehensive AI visibility dashboard that consolidates key metrics, tracks trends over time, and connects AI visibility to business outcomes in a format that resonates with executive decision-makers. The dashboard should balance real-time monitoring of current AI visibility with historical trend analysis that reveals whether visibility is improving, declining, or stagnating—critical context for evaluating strategy effectiveness. Integration with business metrics is essential; the dashboard should display AI visibility alongside website traffic, conversion rates, customer acquisition costs, and revenue metrics to demonstrate the business impact of AI visibility improvements. Reporting frequency should align with organizational decision-making cycles, typically weekly for marketing teams tracking tactical progress and monthly for executive stakeholders evaluating strategic performance. The following components form the foundation of an effective AI visibility dashboard:

Tools and platforms should support automated data collection, customizable reporting, and integration with existing business intelligence systems to ensure the dashboard becomes a trusted source of truth for stakeholder decision-making.

The ultimate value of AI visibility metrics lies in their connection to measurable business outcomes—a relationship that stakeholders demand to justify investments in AI optimization and monitoring. AI referral traffic tracking in Google Analytics 4 enables organizations to measure how many website visitors arrive from AI platforms, with data showing that conversion rates from AI-driven traffic typically range from 3-16%, depending on industry, traffic quality, and conversion funnel optimization. ROI calculation for AI visibility improvements follows a straightforward formula: (Revenue from AI-sourced customers - Cost of AI visibility optimization) ÷ Cost of AI visibility optimization × 100, enabling stakeholders to quantify the financial impact of visibility gains. Organizations that improve their AI Signal Rate from 15% to 45% while maintaining Answer Accuracy Rate above 90% typically see corresponding increases in AI referral traffic of 200-300%, translating into measurable revenue impact. A compelling case study from the financial services sector demonstrates this connection: a bank’s fraud detection AI system achieved 5x ROI by ensuring its fraud prevention capabilities appeared accurately in AI answers about financial security, resulting in increased customer inquiries and higher conversion rates among security-conscious prospects. Stakeholder reporting that connects metrics to revenue transforms AI visibility from an abstract marketing concept into a concrete business driver, enabling executives to make informed decisions about resource allocation and strategic priorities.
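The ROI formula above can be checked with a short calculation. The revenue and cost figures below are invented for illustration only, and the function name is hypothetical.

```python
def ai_visibility_roi(revenue_from_ai_customers, optimization_cost):
    """ROI % = (revenue - cost) / cost * 100."""
    if optimization_cost <= 0:
        raise ValueError("optimization cost must be positive")
    return (revenue_from_ai_customers - optimization_cost) / optimization_cost * 100


# Hypothetical figures: 60,000 in AI-sourced revenue against 20,000 in optimization spend.
roi = ai_visibility_roi(revenue_from_ai_customers=60_000, optimization_cost=20_000)
print(f"ROI: {roi:.0f}%")
```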

Effective AI visibility optimization requires moving beyond one-time audits to establish a continuous monitoring workflow that tracks changes, identifies emerging opportunities, and enables rapid response to competitive threats. The foundation of continuous monitoring is a prompt set of 20-50 high-value queries that represent your target customers’ actual search behavior, buyer journey stages, and decision-making questions; generic prompts miss the nuance of real customer intent and produce misleading results. Testing should follow a consistent weekly schedule, with each prompt run on ChatGPT, Perplexity, Google AI Overviews, and Claude to capture platform-specific visibility patterns and identify where optimization efforts should focus. Scoring and analysis involve evaluating each AI answer for Signal Rate (did we appear?), Answer Accuracy Rate (was it correct?), Citation Share (were we cited?), and competitive positioning (how did we rank against competitors?), with results documented in a centralized system for trend analysis. Content updates based on findings should be prioritized by impact: correcting inaccurate representations takes precedence over closing visibility gaps, while high-impact visibility opportunities should be addressed through content creation or optimization. Re-testing and progress tracking occur monthly, with results compared against previous months to show whether visibility is improving, declining, or remaining stable, so stakeholders can assess whether current strategies are working. Establishing a stakeholder communication cadence, typically monthly executive summaries and weekly team updates, ensures that monitoring insights drive organizational action rather than accumulating in dashboards.
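The scoring and re-testing steps described above could be recorded with a small data structure like the one below. This is a sketch, not a prescribed workflow: the `PromptResult` fields, the `summarize` and `trend` helpers, the one-point tolerance, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptResult:
    prompt: str
    platform: str
    mentioned: bool                # did we appear?
    accuracy_score: Optional[int]  # 0-2 scale; None when we were not mentioned
    cited: bool                    # were we cited as a source?


def summarize(results):
    """Aggregate one testing round into headline percentages."""
    total = len(results)
    if total == 0:
        raise ValueError("no results to summarize")
    scored = [r.accuracy_score for r in results
              if r.mentioned and r.accuracy_score is not None]
    return {
        "signal_rate": 100 * sum(r.mentioned for r in results) / total,
        "accuracy_rate": 100 * sum(scored) / (2 * len(scored)) if scored else None,
        "cited_rate": 100 * sum(r.cited for r in results) / total,
    }


def trend(previous, current, tolerance=1.0):
    """Classify a change (in percentage points) between two testing rounds."""
    delta = current - previous
    if delta > tolerance:
        return "improving"
    if delta < -tolerance:
        return "declining"
    return "stable"


# Hypothetical round: one prompt tested on four platforms.
prompt = "What marketing automation platform integrates with Salesforce?"
this_round = [
    PromptResult(prompt, "ChatGPT", True, 2, True),
    PromptResult(prompt, "Perplexity", True, 1, False),
    PromptResult(prompt, "Google AI Overviews", False, None, False),
    PromptResult(prompt, "Claude", True, 2, False),
]
summary = summarize(this_round)
status = trend(previous=60.0, current=summary["signal_rate"])
print(summary, status)
```

Storing one record per prompt and platform keeps the raw observations available, so the same data can feed both the weekly team view and the monthly comparison.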

Organizations frequently undermine their AI visibility efforts by making measurement mistakes that obscure true performance and lead to misguided strategic decisions. The most common error is tracking mentions without checking accuracy, which creates the illusion of visibility while ignoring the fact that inaccurate mentions may be damaging brand reputation: an organization appearing in 100 AI answers with 40% accuracy is actually worse off than one appearing in 60 answers with 95% accuracy. Ignoring citations and source tracking is another critical mistake, as organizations may achieve high Signal Rates while failing to establish themselves as authoritative sources, missing the opportunity to build trust and influence customer decisions. Many organizations also use generic prompts that miss buyer intent, testing queries like “What is marketing?” instead of “What marketing automation platform integrates with Salesforce?” The latter reveals actual customer discovery patterns, while the former produces irrelevant results. Treating AI visibility as a one-time project rather than an ongoing monitoring and optimization function is perhaps the most damaging mistake, as competitive landscapes shift rapidly and AI systems continuously update their training data and citation patterns. These mistakes matter to stakeholders because they lead to inaccurate performance assessments, misallocated resources, and missed competitive opportunities that may not become apparent until market share has already shifted. To avoid these pitfalls, organizations should implement systematic measurement processes with clear accuracy standards, citation tracking, buyer-intent-focused prompts, and ongoing monitoring cadences that treat AI visibility as a continuous strategic priority.
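The comparison between 100 answers at 40% accuracy and 60 answers at 95% accuracy becomes clearer when split into accurate and inaccurate mentions. The sketch below simplifies by treating the accuracy figure as the share of mentions that are fully accurate; the function name is hypothetical.

```python
def mention_breakdown(answers, accuracy_pct):
    """Split a mention count into (accurate, inaccurate) at a given accuracy percentage."""
    accurate = round(answers * accuracy_pct / 100)
    return accurate, answers - accurate


high_volume = mention_breakdown(100, 40)  # many mentions, low accuracy
high_quality = mention_breakdown(60, 95)  # fewer mentions, high accuracy

for label, (accurate, inaccurate) in [("100 answers at 40%", high_volume),
                                      ("60 answers at 95%", high_quality)]:
    print(f"{label}: {accurate} accurate, {inaccurate} inaccurate")
```

Under this reading, the higher-volume scenario produces fewer accurate mentions and twenty times as many inaccurate ones, which is why mention counts alone can mislead.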

The AI visibility monitoring landscape includes several specialized platforms designed to help organizations track metrics, generate reports, and communicate findings to stakeholders with varying levels of technical sophistication. The following table compares leading tools across key dimensions relevant to stakeholder reporting:

| Tool | Engine Coverage | Key Features | Best For |
|---|---|---|---|
| AmICited.com | ChatGPT, Perplexity, Gemini, Claude | Real-time monitoring, accuracy scoring, citation tracking, competitive analysis, executive dashboards | Enterprise organizations requiring comprehensive AI visibility monitoring and stakeholder reporting |
| Semrush AI SEO | ChatGPT, Google AI Overviews | AI visibility metrics, content optimization recommendations, integration with SEO tools | Marketing teams seeking unified SEO and AI visibility tracking |
| seoClarity | Multiple AI platforms | AI visibility tracking, content performance analysis, competitive benchmarking | Organizations with existing seoClarity investments seeking AI visibility expansion |
| Local Falcon | ChatGPT, Perplexity, Google AI Overviews | Share of Voice metrics, local AI visibility, competitive positioning | Local and regional businesses focused on geographic market positioning |

On cost versus benefit, enterprise-grade platforms like AmICited.com command premium pricing but deliver comprehensive monitoring, accuracy scoring, and executive-ready reporting that can justify the investment for organizations with significant AI visibility stakes. Integration capabilities vary significantly: platforms that integrate with Google Analytics 4, CRM systems, and business intelligence tools make it easier to connect AI visibility metrics to business outcomes. Recommendations differ by organization size. Startups and small businesses may begin with free or low-cost tools to establish baseline metrics, mid-market organizations benefit from specialized platforms offering balanced features and pricing, and enterprise organizations require comprehensive solutions with advanced analytics, custom reporting, and dedicated support. AmICited.com stands out as the top choice for stakeholder-focused monitoring because it combines comprehensive engine coverage, accuracy-focused scoring, competitive analysis, and executive dashboards specifically designed to communicate AI visibility to non-technical stakeholders.

Translating AI visibility metrics into stakeholder-friendly reports requires understanding that different audiences prioritize different information and respond to different presentation formats. C-suite executives focus on three core metrics: ROI and business impact (how does AI visibility translate to revenue?), competitive positioning (are we winning or losing market share in AI?), and risk management (what brand reputation risks exist from inaccurate AI representations?). Marketing teams prioritize visibility metrics (Signal Rate and SOV trends), accuracy monitoring (Answer Accuracy Rate by topic), and citations (Citation Share and Top-Source Share), as these directly inform content strategy and optimization priorities. Frequency and format of reporting should match stakeholder needs: C-suite executives typically require monthly executive summaries with key metrics, trend lines, and business impact analysis, while marketing teams benefit from weekly detailed reports with actionable insights and content recommendations. Connecting metrics to strategic goals transforms raw data into meaningful narratives—rather than reporting “Signal Rate increased from 35% to 42%,” frame it as “AI visibility improvements contributed to 18% increase in qualified leads from AI platforms, supporting our customer acquisition targets.” Using dashboards for transparency and accountability enables stakeholders to access current metrics on-demand, reducing the need for ad-hoc reporting while building confidence that AI visibility is being actively managed and optimized. Organizations that master stakeholder reporting of AI metrics gain a significant competitive advantage, as executives with clear visibility into AI performance can make informed decisions about resource allocation, content strategy, and competitive positioning in the rapidly evolving AI-mediated discovery landscape.

AI Signal Rate measures the percentage of AI prompts that mention your brand or content. Stakeholders care about this metric because it reveals baseline visibility and market penetration in AI systems. Higher Signal Rates indicate that your brand is discoverable when potential customers use AI platforms for research and decision-making.

Share of Voice is calculated as: Your Mentions ÷ (Your Mentions + All Competitors' Mentions) × 100. This metric reveals what percentage of the AI conversation about your market you're capturing compared to competitors. For example, if you appear in 35 mentions and competitors appear in 65 combined mentions, your SOV is 35%.

Mentions occur when AI systems reference your brand in answers, while citations occur when AI systems explicitly attribute information to your content as a source. Citations carry more weight because they signal that AI systems recognize your organization as an authoritative source, which builds customer trust and influences purchasing decisions.

Organizations should implement continuous monitoring with weekly testing of their prompt set and monthly analysis of trends. This frequency allows teams to identify emerging opportunities and competitive threats quickly while providing sufficient data for meaningful trend analysis. Executive stakeholders typically review metrics monthly, while marketing teams benefit from weekly detailed reports.

Market leaders typically maintain Answer Accuracy Rates above 90%, meaning that 90% or more of AI mentions accurately represent their brand, products, and services. New organizations should aim for 85%+ accuracy, with the goal of reaching 95%+ as they optimize their content and entity information across platforms.

Track AI referral traffic in Google Analytics 4 by identifying traffic from platforms like ChatGPT and Perplexity. Calculate conversion rates from AI-sourced visitors and compare them to other traffic sources. Research shows AI-driven traffic converts at 3-16% rates, often outperforming average site traffic. Connect visibility improvements to revenue using the formula: (Revenue from AI-sourced customers - Cost of optimization) ÷ Cost of optimization × 100.
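Identifying AI-sourced sessions usually comes down to matching the referrer hostname against a list of AI platforms. The sketch below works on a hypothetical export of `(referrer_url, converted)` pairs rather than on any Google Analytics 4 API, and the hostname list is an assumption that is neither exhaustive nor guaranteed to stay current.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames for AI platforms; extend and verify for your own traffic.
AI_REFERRER_HOSTS = {"chatgpt.com", "chat.openai.com", "perplexity.ai", "claude.ai"}


def is_ai_referral(referrer_url):
    """True when the referrer's hostname matches a known AI platform."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host in AI_REFERRER_HOSTS


def conversion_rate(sessions):
    """Percent of sessions that converted; sessions are (referrer_url, converted) pairs."""
    if not sessions:
        return 0.0
    return 100 * sum(1 for _, converted in sessions if converted) / len(sessions)


# Hypothetical session export.
sessions = [
    ("https://chatgpt.com/", True),
    ("https://www.perplexity.ai/search?q=example", False),
    ("https://www.google.com/", False),
    ("https://chatgpt.com/", False),
    ("https://www.google.com/", False),
    ("", False),
]
ai_sessions = [s for s in sessions if is_ai_referral(s[0])]
other_sessions = [s for s in sessions if not is_ai_referral(s[0])]
print(f"AI traffic conversion: {conversion_rate(ai_sessions):.1f}%")
print(f"Other traffic conversion: {conversion_rate(other_sessions):.1f}%")
```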

Monitor the four major AI platforms: ChatGPT (largest user base), Perplexity (AI-native search), Google AI Overviews (integrated into Google Search), and Claude (growing enterprise adoption). Each platform has different training data, recency windows, and citation patterns, so visibility varies significantly across platforms. Comprehensive monitoring requires testing across all four to identify platform-specific opportunities.

Focus on three core metrics for executives: ROI and business impact (how does AI visibility translate to revenue?), competitive positioning (are we winning or losing market share?), and risk management (what brand reputation risks exist?). Present data as trend lines showing improvement over time, compare your metrics to competitors, and always connect metrics to business outcomes like customer acquisition and revenue.


Learn how to conduct a baseline AI visibility audit to understand how ChatGPT, Google AI, and Perplexity mention your brand. Step-by-step assessment guide for b...

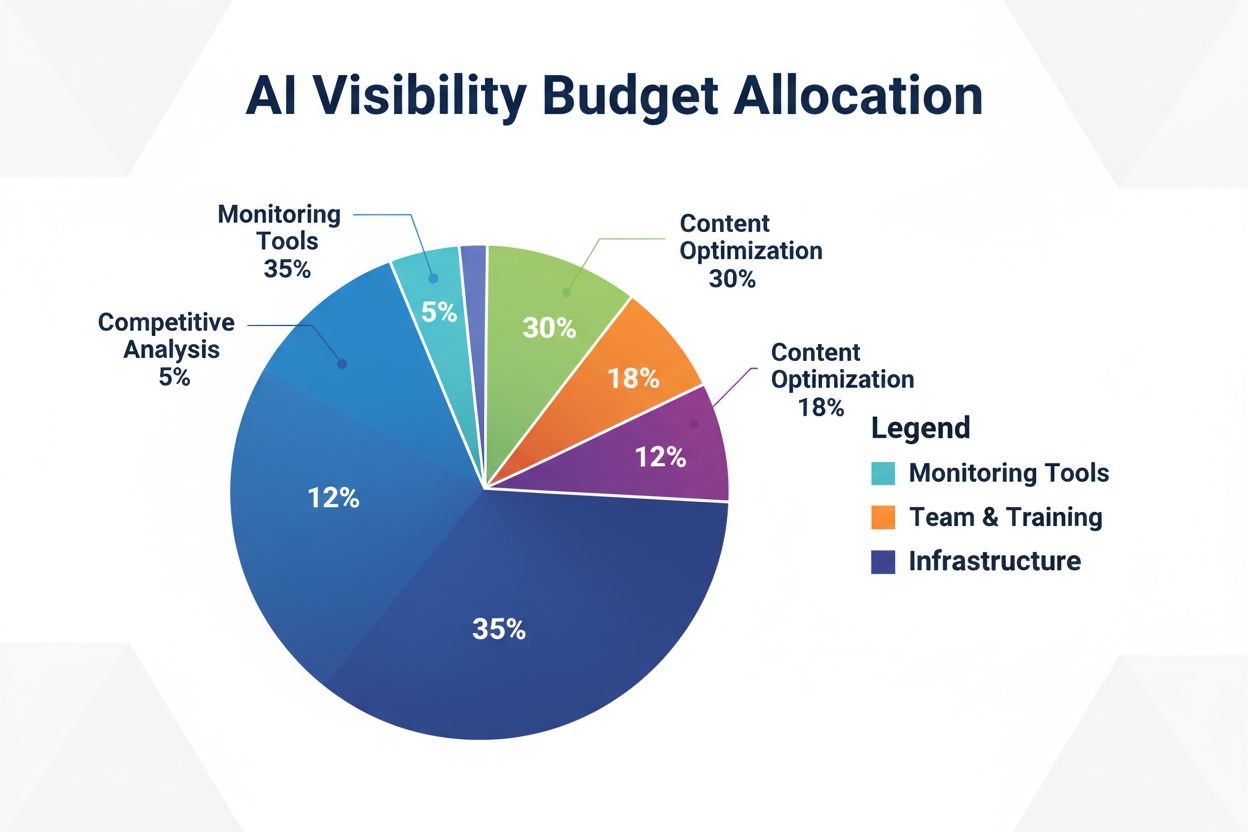

Learn how to strategically allocate your AI visibility budget across monitoring tools, content optimization, team resources, and competitive analysis to maximiz...

Learn how to connect AI visibility metrics to measurable business outcomes. Track brand mentions in ChatGPT, Perplexity, and Google AI Overviews with actionable...