AI Visibility Budget Planning: Where to Allocate Resources

Learn how to strategically allocate your AI visibility budget across monitoring tools, content optimization, team resources, and competitive analysis to maximiz...

Learn how to build an AI visibility playbook that keeps your team aligned on strategy, governance, and AI citation tracking across platforms like ChatGPT, Perplexity, and Google AI Overviews.

The landscape of how people discover information has fundamentally shifted. While Google still dominates with 14 billion daily searches, AI answer engines like ChatGPT, Perplexity, and Google’s AI Overviews are rapidly becoming the primary source of truth for millions of users. What makes this transformation critical for your organization is that 86% of AI citations come from brand-managed sources—meaning you have significant control over how AI systems reference your brand.

Without a unified AI visibility playbook, your teams operate from different versions of reality. Marketing creates content optimized for one platform, while compliance enforces governance rules that engineering doesn’t know about. Sales references outdated positioning while customer service follows different protocols. This fragmentation doesn’t just create inefficiency; it directly impacts your brand’s visibility in AI-generated answers. When teams aren’t aligned on strategy, governance, and content standards, AI systems struggle to understand and trust your brand enough to cite it.

An AI visibility playbook solves this problem by creating a single source of truth for how your organization approaches AI visibility. It’s not just a document—it’s a living system that keeps your team synchronized as tools, platforms, and regulations evolve. The playbook defines your strategic vision, establishes governance boundaries, assigns clear ownership, and creates maintenance rhythms that prevent documentation from becoming stale. When implemented effectively, it transforms AI visibility from a marketing initiative into an organizational capability that every team understands and contributes to.

The shift from traditional SEO to AI visibility represents a fundamental change in how brands need to think about discoverability. While SEO remains important—Google still processes 210 times more queries than ChatGPT—the strategies that worked for search rankings don’t automatically translate to AI citations. Understanding these differences is essential for building an effective playbook.

Traditional SEO focuses on optimizing for search engine algorithms that rank pages based on keywords, backlinks, and user engagement signals. You write content targeting specific keywords, build authority through links, and measure success through rankings and click-through rates. The goal is to appear on the first page of search results.

AI visibility, by contrast, focuses on being referenced in AI-generated answers. When someone asks ChatGPT “What’s the best CRM for small businesses?” or queries Perplexity about industry trends, the AI system draws on what it learned during training and, where the product supports it, on sources retrieved at query time to synthesize an answer. If your brand appears in that answer with a citation, you’ve achieved AI visibility. This requires a different approach entirely.

| Aspect | Traditional SEO | AEO (Answer Engine Optimization) | AI Visibility |
|---|---|---|---|
| Primary Goal | Rank in search results | Appear in AI-generated answers | Get cited by AI systems |
| Key Focus | Keywords & backlinks | Content structure & clarity | Credibility & authority |
| Measurement | Rankings & click-through rates | Answer inclusion frequency | Citation frequency & context |
| Content Type | Blog posts, optimized pages | Structured data, FAQs, tables | Expert commentary, data, insights |
| Timeline | Months to achieve ranking | Weeks to appear in answers | Real-time tracking possible |
| Success Metric | Position in SERP | Inclusion in AI response | Brand mention with attribution |

The critical difference is that AI systems evaluate credibility differently than search engines. They look for clear authorship, verifiable credentials, consistent information across sources, and structured data that’s easy to parse. A blog post that ranks well in Google might never be cited by ChatGPT if it lacks author credentials or clear structure. This is why your playbook must address both SEO and AI visibility—they’re complementary but distinct strategies.

A comprehensive AI visibility playbook contains five essential components that work together to keep your organization aligned and your documentation current. Each component serves a specific purpose and requires different maintenance rhythms.

Strategic Vision and Business Alignment: Your charter establishes why your organization is investing in AI visibility and how success will be measured. This includes your vision statement tied to business outcomes, 3-5 measurable success metrics, executive sponsor assignment, and quarterly milestone targets. Without this foundation, teams make decisions based on personal preference rather than organizational priorities.

Current State Assessment and Gap Analysis: Before you can move forward, you need to understand where you’re starting from. This audit maps your existing data assets, infrastructure capabilities, team skills, and deployed tools. It identifies what you have, what’s missing, and what requires investment. A simple matrix format works well—document each resource, its current state, identified gaps, priority level, and investment required.

Governance Framework and Ethical Guardrails: Clear governance defines boundaries within which teams can experiment safely. Your framework should specify access tiers (basic users, power users, administrators), tool approval criteria, data classification rules, escalation procedures, and ethics requirements. The key is making governance practical and enforceable, not theoretical. Teams should be able to answer most governance questions by consulting the playbook rather than scheduling meetings.

Ownership Structure and Accountability: Documentation without ownership decays immediately. Assign a DRI (Directly Responsible Individual) for playbook maintenance, supported by department-level advocates from marketing, engineering, legal, and product. The DRI ensures consistency and quality standards while advocates surface issues from their respective departments. This distributed model prevents bottlenecks while maintaining accountability.

Maintenance Cadence and Feedback Loops: Different content types require different update frequencies. High-risk sections like data privacy policies need monthly reviews because regulatory environments shift rapidly. General content like tool recommendations can update quarterly. Post-sprint reviews capture tactical learnings while they’re fresh. This three-tier cadence keeps your playbook current without turning documentation into a full-time job.

Most organizations can build their initial playbook in 3-6 weeks by following a structured five-step process. Each step produces a specific deliverable that subsequent steps depend on, creating a foundation that supports both initial implementation and ongoing maintenance.

Step 1: Define Strategic Vision and Business Alignment (2-4 hours)

Start by drafting a concise charter grounded in revenue, efficiency, or customer experience goals. Your charter should articulate the “why” in terms leadership already tracks—ARR, churn, pipeline velocity. Secure an executive sponsor who will champion resources and remove blockers. This charter becomes your north star for every subsequent decision. When stakeholders debate priorities or question direction, the charter provides the shared reference point that keeps your program aligned with business goals. For example, a marketing team might establish: “Vision: Reduce content production time 40% via AI-assisted workflows. Success metric: 5 published AI-enhanced assets per week by Q3. Owner: VP of Marketing. Budget: $50K pilot, $200K annual if KPIs hit.”

Step 2: Audit Current State and Identify Gaps (4-6 hours)

Your charter defines where you’re going; the audit defines where you’re starting from. Map existing data assets, infrastructure capabilities, and team skills. Document what you have, what’s missing, and what requires investment. Organize findings in a matrix with five columns: Resource, Current State, Gap, Priority, and Investment Required. This inventory reveals what you can build on immediately and what requires investment, giving you clarity to shape realistic implementation timelines. The audit prevents surprises during implementation and becomes your baseline for measuring progress.
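The five-column audit matrix can be kept as plain structured data so it is easy to sort and revisit. The following is a minimal sketch, not a prescribed format: the `AuditRow` class, the priority labels, and the sample rows are all hypothetical and exist only to illustrate the columns named above.

```python
from dataclasses import dataclass

# Hypothetical priority labels; the playbook itself does not prescribe a scale.
PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class AuditRow:
    resource: str
    current_state: str
    gap: str
    priority: str              # one of the keys in PRIORITY_ORDER
    investment_required: str


def rows_by_priority(rows):
    """Return audit rows sorted so the highest-priority gaps come first."""
    return sorted(rows, key=lambda r: PRIORITY_ORDER[r.priority])


# Illustrative entries only, not real audit findings.
audit = [
    AuditRow("Author bio pages", "Present on some posts",
             "No credentials listed", "medium", "Content team time"),
    AuditRow("Structured data", "None deployed",
             "No FAQ or article markup", "high", "Engineering sprint"),
    AuditRow("Citation monitoring", "Manual spot checks",
             "No cross-platform tracking", "low", "Tool subscription"),
]

for row in rows_by_priority(audit):
    print(f"{row.priority:<6} | {row.resource:<20} | {row.gap}")
```

Keeping the matrix in a sortable form like this makes it straightforward to re-run the same view at the next audit and compare it against the baseline.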

Step 3: Establish Governance Rules and Ethical Guardrails (6-8 hours)

Without clear governance, teams either move too slowly waiting for permission or move recklessly without understanding compliance boundaries. Define who can do what with which tools and data. Codify approval workflows, escalation paths, and ethical obligations. Document rules in plain language that non-technical stakeholders can interpret without legal consultation. For example: “Basic users may use ChatGPT Enterprise for drafting with public data. Power users may connect proprietary data to approved RAG systems after completing bias training. All outputs require human review before publication.” Clear policies like this prevent bottlenecks while maintaining compliance.
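A plain-language rule like the example above can also be written as a small check, which helps expose ambiguities before the policy is published. This is a sketch under stated assumptions: the `Request` class, the `internal-rag` system name, and the choice to let power users also draft with public data are hypothetical and not part of the quoted policy.

```python
from dataclasses import dataclass


@dataclass
class Request:
    tier: str                  # "basic" or "power", as in the example policy
    data_class: str            # "public" or "proprietary"
    tool: str
    bias_training_done: bool = False


# Hypothetical allow-lists; substitute the tools your playbook approves.
DRAFTING_TOOLS = {"ChatGPT Enterprise"}
APPROVED_RAG_SYSTEMS = {"internal-rag"}


def is_allowed(req: Request) -> bool:
    """Apply the example policy to a single tool-and-data request."""
    if req.data_class == "public" and req.tool in DRAFTING_TOOLS:
        # Assumption: power users inherit the basic-user permission.
        return req.tier in {"basic", "power"}
    if req.data_class == "proprietary" and req.tool in APPROVED_RAG_SYSTEMS:
        return req.tier == "power" and req.bias_training_done
    # Anything not explicitly permitted goes through escalation instead.
    return False


def can_publish(human_reviewed: bool) -> bool:
    """All outputs require human review before publication."""
    return human_reviewed


print(is_allowed(Request("basic", "public", "ChatGPT Enterprise")))
print(is_allowed(Request("power", "proprietary", "internal-rag", False)))
```

Writing the rule this way forces a decision on edge cases, such as whether untrained power users keep any access to proprietary data, that prose policies often leave open.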

Step 4: Assign Ownership and Organizational Structure (2 hours per week)

Appoint a DRI for playbook maintenance and surround them with department-level advocates. The DRI doesn’t write every update; the DRI ensures updates happen on schedule and meet quality standards. Advocates serve as early warning systems, surfacing problems before they become crises. The DRI handles strategic oversight and quality control while advocates handle tactical intelligence gathering. This separation ensures documentation stays current without overwhelming any single person.

Step 5: Implement Maintenance Cadence and Feedback Loops (1-2 hours per review cycle)

Establish review schedules and lesson-capture processes. Schedule reviews on your team calendar to ensure they actually happen. Use a three-tier cadence: monthly reviews for high-risk content like data privacy policies, quarterly reviews for general content like tool recommendations, and post-sprint reviews for lessons learned. This lightweight workflow ensures insights from failed experiments and successful pilots flow back into your playbook before teams forget the context.
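The calendar-based tiers of this cadence are simple enough to check automatically. The sketch below assumes 30 days for "monthly" and 90 days for "quarterly"; those numbers, the section names, and the dates are illustrative choices rather than requirements. Post-sprint reviews are triggered by events, not dates, so they are left out.

```python
from datetime import date, timedelta

# Assumed intervals approximating "monthly" and "quarterly".
REVIEW_INTERVAL = {
    "high_risk": timedelta(days=30),
    "general": timedelta(days=90),
}


def is_overdue(tier: str, last_reviewed: date, today: date) -> bool:
    """True when more time has passed than the tier's review interval."""
    return today - last_reviewed > REVIEW_INTERVAL[tier]


# Illustrative sections and review dates.
sections = [
    ("Data privacy policy", "high_risk", date(2025, 1, 2)),
    ("Tool recommendations", "general", date(2025, 1, 2)),
]

today = date(2025, 3, 1)
overdue = [name for name, tier, last in sections
           if is_overdue(tier, last, today)]
print(overdue)
```

A check like this could feed a reminder in whatever tool the team already uses, which keeps the review schedule from depending on someone remembering it.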

Your AI visibility playbook needs the same infrastructure as your marketing website to remain sustainable. Without proper tooling, documentation decays faster than the strategy it’s meant to guide. The right technology stack transforms playbook maintenance from a reactive chore into an ongoing operational responsibility.

Version control is essential. Use Git branches or CMS staging to propose edits, run peer reviews, and merge when approved. This approach ensures transparency while maintaining quality standards. Anyone can propose changes, but only authorized approvers can publish them. This balance allows accessibility while protecting the playbook from unauthorized or poorly vetted changes.

Publish your playbook in a searchable workspace where marketing, legal, and engineering can comment inline and collaborate. Tools like Notion, Confluence, or dedicated CMS platforms work well. The key is making documentation easily discoverable and accessible. When teams can’t find the playbook, they default to informal Slack conversations and undocumented decisions—exactly what you’re trying to prevent.

Integrate documentation tasks into existing workflows rather than treating them as separate work. Your DRI and advocates already attend department meetings and sprint planning sessions. They simply add playbook updates to their existing responsibilities. This integration makes maintenance sustainable rather than a burden that eventually gets deprioritized.

AmICited.com plays a critical role in this infrastructure by providing real-time monitoring of how AI systems reference your brand. Rather than guessing whether your playbook is working, you can see exactly how often and where your brand appears in AI-generated answers across ChatGPT, Perplexity, Google AI Overviews, and other platforms. This data feeds directly back into your playbook maintenance process, informing decisions about content strategy, governance updates, and team priorities.

Organizations implementing AI visibility playbooks consistently encounter the same obstacles. Understanding these patterns helps you anticipate problems and implement solutions proactively.

Stakeholder Fatigue occurs when teams feel overwhelmed by documentation requirements. Solution: Celebrate quick wins and keep updates lightweight. Show teams how the playbook has already prevented a compliance issue or accelerated a decision. Make documentation feel like a tool that helps them, not a burden imposed on them.

Unclear Ownership leads to documentation that no one maintains. Solution: Reference the clear role assignments from Step 4. Make sure your DRI has explicit time allocated for playbook maintenance—it shouldn’t be a side project squeezed between other responsibilities. Give advocates clear expectations about what feedback you need from them and how often.

Lack of Proper Tooling creates friction that kills adoption. Solution: Embed documentation tasks into existing workflows. If your team uses Slack, integrate notifications there. If you use Jira, create tickets for playbook updates. Don’t ask teams to learn a new system just to contribute to documentation.

Regulatory Drift happens when governance frameworks become outdated. Solution: Establish a proactive monitoring system for regulatory updates. Conduct dynamic policy reviews quarterly. Engage compliance experts to interpret changes accurately. Build regulatory monitoring into your maintenance cadence rather than treating it as a one-time activity.

Documentation Decay occurs when the playbook becomes stale. Solution: Implement your maintenance cadence rigorously. Treat documentation updates with the same rigor you apply to product releases or financial reporting. When documentation falls behind reality, teams stop trusting it and revert to informal workarounds.

You can’t improve what you don’t measure. Establish clear metrics for tracking your playbook’s effectiveness and the AI visibility improvements it enables.

AI Citation Frequency is your primary metric. How often does your brand appear in AI-generated answers? Track this across platforms—ChatGPT, Perplexity, Google AI Overviews, and others. AmICited.com provides this data in real-time, showing you exactly which queries mention your brand and in what context. Compare your citation frequency before and after implementing your playbook to quantify the impact.

Brand Mention Rate measures how often your brand appears relative to competitors in the same category. If your industry has 10 major players and you appear in 20% of AI answers while competitors average 15%, you hold a five-point lead in share of AI answers. This relative metric matters more than absolute numbers because it shows competitive positioning.
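The arithmetic behind this comparison is worth making explicit. The sketch below assumes you have sampled a fixed set of AI answers and counted how many mention each brand; the function names, the sample size of 200, and the competitor rates are hypothetical, chosen to match the 20% versus 15% figures in the text.

```python
def mention_rate(answers_with_brand: int, answers_sampled: int) -> float:
    """Share of sampled AI answers that mention the brand."""
    if answers_sampled <= 0:
        raise ValueError("answers_sampled must be positive")
    return answers_with_brand / answers_sampled


def lead_over_competitors(own_rate: float, competitor_rates: list[float]) -> float:
    """Gap, in percentage points, between own rate and the competitor average."""
    average = sum(competitor_rates) / len(competitor_rates)
    return (own_rate - average) * 100


# Illustrative numbers: 40 mentions in 200 sampled answers.
own = mention_rate(40, 200)
competitors = [0.10, 0.15, 0.20]   # averages to roughly 15%

print(f"Own mention rate: {own:.0%}")
print(f"Lead over competitor average: "
      f"{lead_over_competitors(own, competitors):.1f} points")
```

Reporting the gap in percentage points rather than as a ratio keeps the number comparable from quarter to quarter even when the overall volume of sampled answers changes.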

Team Alignment Scores measure how well your organization is executing the playbook. Are teams following governance rules? Are they updating documentation on schedule? Are they using the playbook to make decisions? Survey teams quarterly to assess alignment and identify areas where the playbook needs clarification.

Documentation Update Frequency shows whether your playbook is actually being maintained. If high-risk sections haven’t been reviewed in three months, you have a maintenance problem. If post-sprint reviews consistently happen, you’re capturing institutional knowledge effectively.

Time to Decision-Making measures whether the playbook is actually helping teams move faster. Are decisions that previously required three meetings now resolved by consulting the playbook? Are new hires onboarding faster because they have clear reference materials? These efficiency gains often provide the strongest ROI justification.

Building a playbook is one thing; getting your team to actually use it is another. Successful adoption requires intentional change management and a culture that values documentation.

Start with one workflow or use case rather than trying to document everything at once. Choose something your team already does—content creation with ChatGPT, data analysis with Claude, or customer support with Copilot. Document this existing behavior thoroughly, then expand to other areas. This iterative approach builds momentum and demonstrates value before asking teams to adopt the playbook more broadly.

Make documentation searchable and accessible. If teams can’t find the playbook, they won’t use it. Implement full-text search, clear navigation, and a table of contents. Consider creating quick-reference guides for common scenarios. The easier you make it to find answers, the more likely teams are to consult the playbook rather than defaulting to informal workarounds.

Celebrate early wins publicly. When the playbook helps a team avoid a compliance issue, prevent a security breach, or accelerate a decision, share that story. Show teams that documentation isn’t just bureaucracy; it’s a tool that makes their work easier and safer. This positive reinforcement drives adoption far more effectively than mandates.

Involve teams in creation rather than imposing the playbook from above. When marketing, engineering, and compliance collaborate on governance rules, they’re more likely to follow them. When teams contribute examples and lessons learned, they feel ownership of the playbook. This collaborative approach transforms documentation from something done to teams into something teams do together.

Establish feedback channels that allow teams to suggest improvements. Your playbook won’t be perfect on day one. Create a simple process for teams to flag outdated information, suggest clarifications, or propose new sections. When teams see their feedback implemented, they trust that the playbook is a living system that evolves with their needs.

The AI landscape evolves rapidly. New models emerge, platforms change, and regulatory requirements shift. Your playbook must be flexible enough to adapt without requiring constant rewrites.

Build your playbook with modularity in mind. Each section should be independently updatable. Your governance framework shouldn’t require changes to your content strategy section. Your ownership structure shouldn’t depend on your maintenance cadence. This modular approach allows you to update specific sections without disrupting the entire playbook.

Establish a continuous learning culture. Dedicate time for teams to experiment with new AI tools and share learnings. Create a process for capturing these insights and incorporating them into the playbook. When your organization treats AI as an evolving capability rather than a static tool, your documentation naturally stays current.

Monitor regulatory and platform changes proactively. Subscribe to updates from relevant regulatory bodies, follow AI platform announcements, and participate in industry forums. Build this monitoring into your maintenance cadence so regulatory changes trigger playbook updates rather than catching you by surprise.

Plan for skill development. As AI capabilities evolve, your team’s skills need to evolve too. Include training and certification programs in your playbook. When new team members join, they should be able to get up to speed quickly by consulting your documentation and completing relevant training.

Treat your playbook as a competitive advantage. Organizations that maintain clear, current documentation about their AI strategy move faster, make better decisions, and build stronger governance. As AI becomes increasingly central to business operations, the organizations with the best playbooks will be the ones that scale AI most effectively.

Your AI visibility playbook is more than documentation—it’s the operational system that transforms AI visibility from a marketing initiative into an organizational capability. By following the five-step process, maintaining clear ownership, and using tools like AmICited.com to track your progress, you create a foundation that keeps your team aligned, your governance current, and your brand visible in the AI-generated answers that increasingly drive discovery and decision-making.

AI visibility focuses on being cited in AI-generated answers, while SEO focuses on ranking in search results. Both matter, but they require different strategies and content approaches. AI systems evaluate credibility, structure, and authority differently than search engines.

High-risk items like governance policies should be reviewed monthly, general content quarterly, and lessons learned captured after each sprint or project. This tiered approach keeps critical information current without overwhelming your team.

Assign a DRI (Directly Responsible Individual) for overall playbook maintenance, supported by department advocates who surface changes and feedback from their teams. This distributed model ensures accountability while preventing bottlenecks.

Track metrics like AI citation frequency, brand mention rates in AI answers, team alignment scores, and documentation update frequency using tools like AmICited.com. These data points show both visibility improvements and team adoption.

Include strategic vision, current state audit, governance rules, ownership structure, maintenance cadence, and specific guidelines for content creation and distribution. Each section should be independently updatable and clearly owned.

AmICited.com tracks how AI systems reference your brand across platforms like ChatGPT, Perplexity, and Google AI Overviews, providing real-time visibility insights and citation tracking. This monitoring is essential for understanding your AI visibility performance.

Yes, start with one workflow or use case, document it thoroughly, then expand to other areas. This iterative approach is more sustainable than trying to document everything at once and helps build team buy-in.

Establish a proactive monitoring system for regulatory updates, conduct dynamic policy reviews quarterly, and engage compliance experts to interpret changes accurately. This ensures your playbook stays compliant as regulations evolve.

Track how AI systems like ChatGPT, Perplexity, and Google AI Overviews reference your brand. Get real-time insights into your AI citations and visibility.

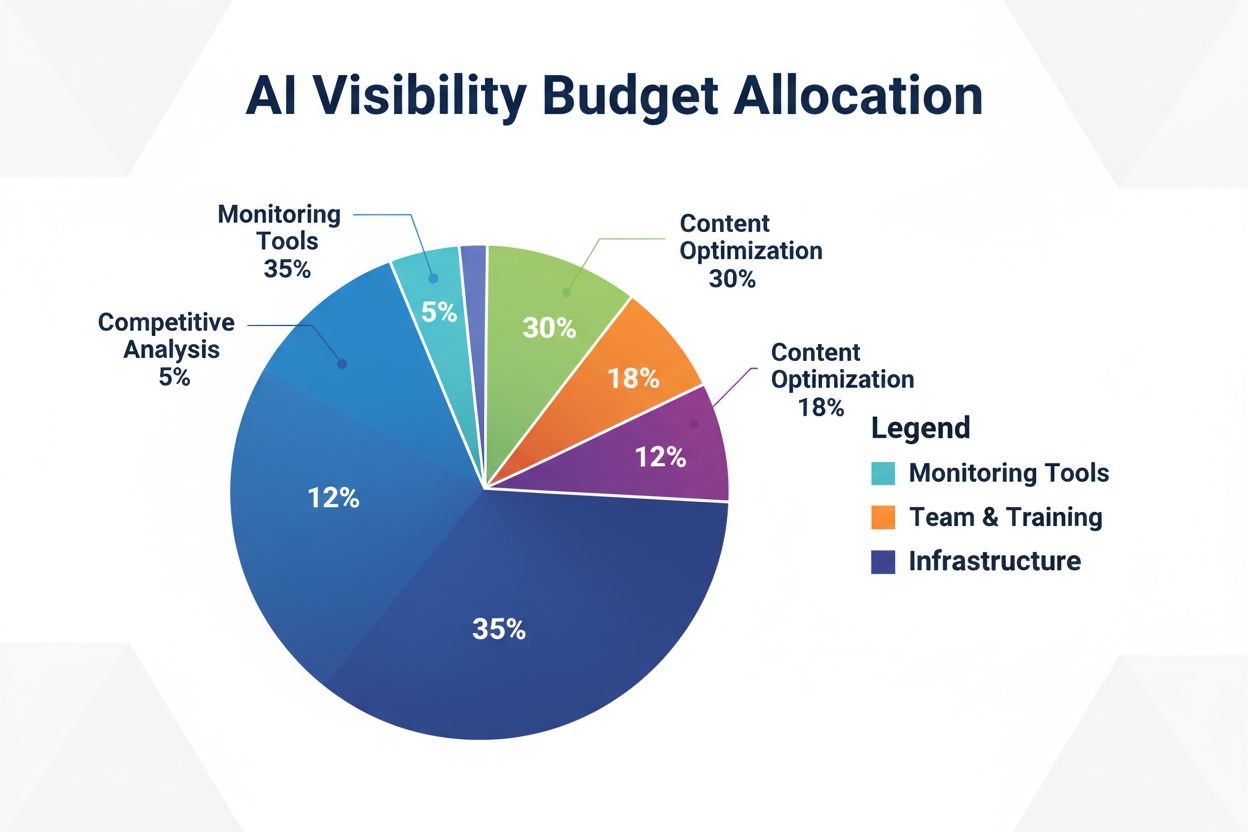

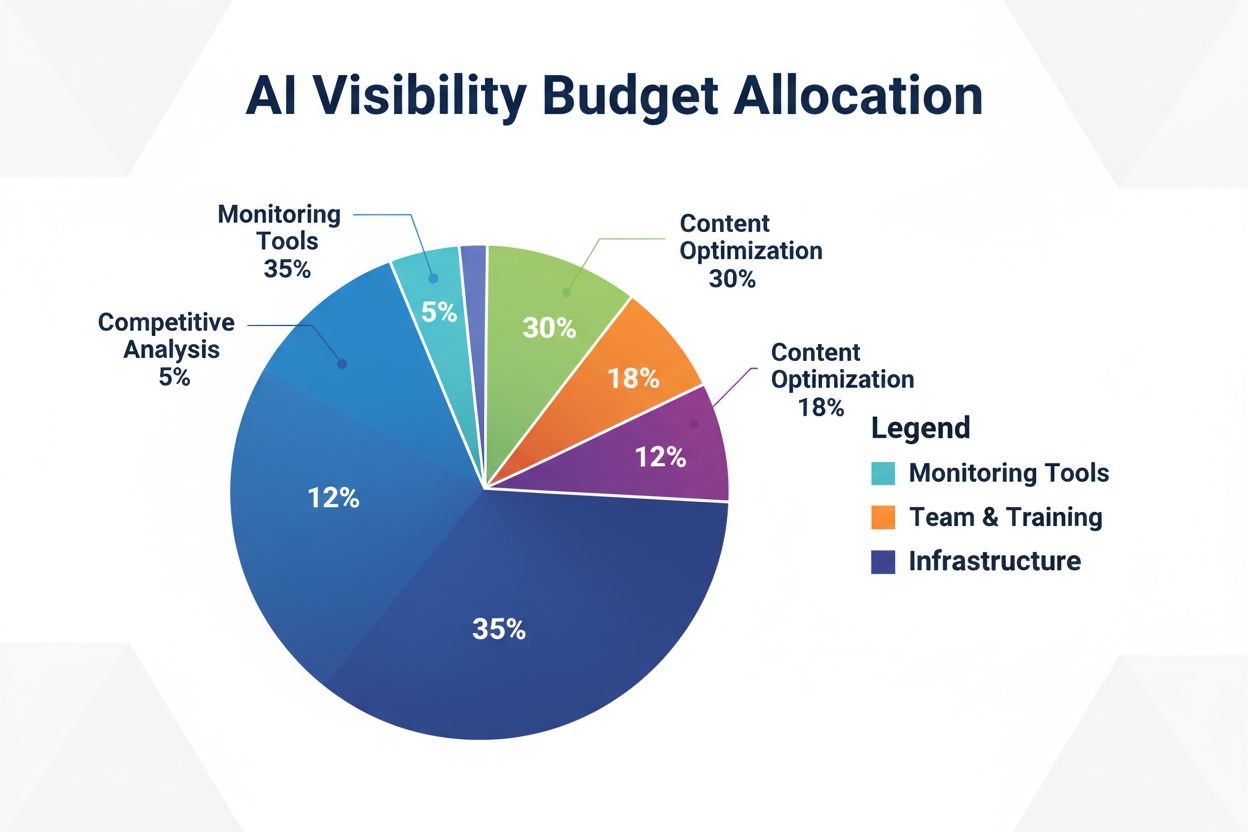

Learn how to strategically allocate your AI visibility budget across monitoring tools, content optimization, team resources, and competitive analysis to maximiz...

Learn how to conduct a baseline AI visibility audit to understand how ChatGPT, Google AI, and Perplexity mention your brand. Step-by-step assessment guide for b...

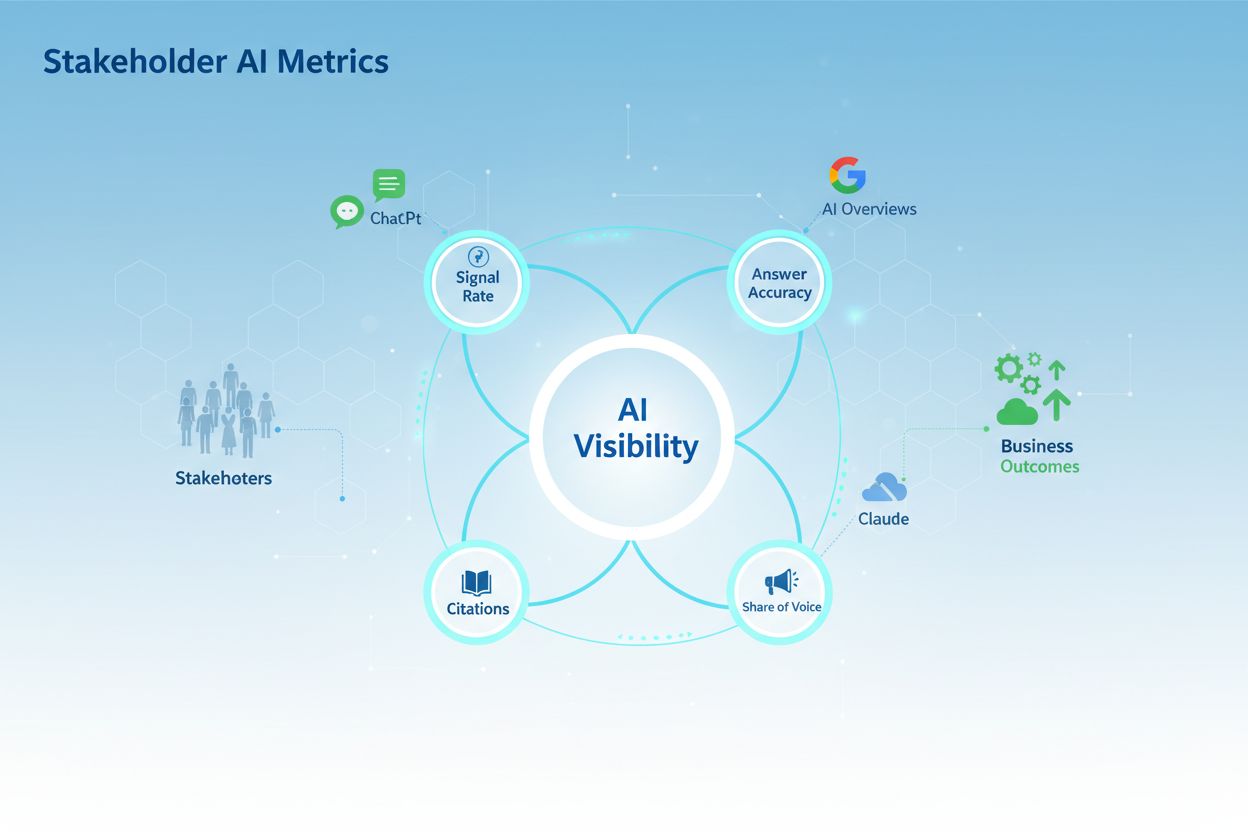

Discover the 4 essential AI visibility metrics stakeholders care about: Signal Rate, Accuracy, Citations, and Share of Voice. Learn how to measure and report AI...