What AI Crawlers Should I Allow Access? Complete Guide for 2025

Learn which AI crawlers to allow or block in your robots.txt. Comprehensive guide covering GPTBot, ClaudeBot, PerplexityBot, and 25+ AI crawlers with configurat...

Learn how to make strategic decisions about blocking AI crawlers. Evaluate content type, traffic sources, revenue models, and competitive position with our comprehensive decision framework.

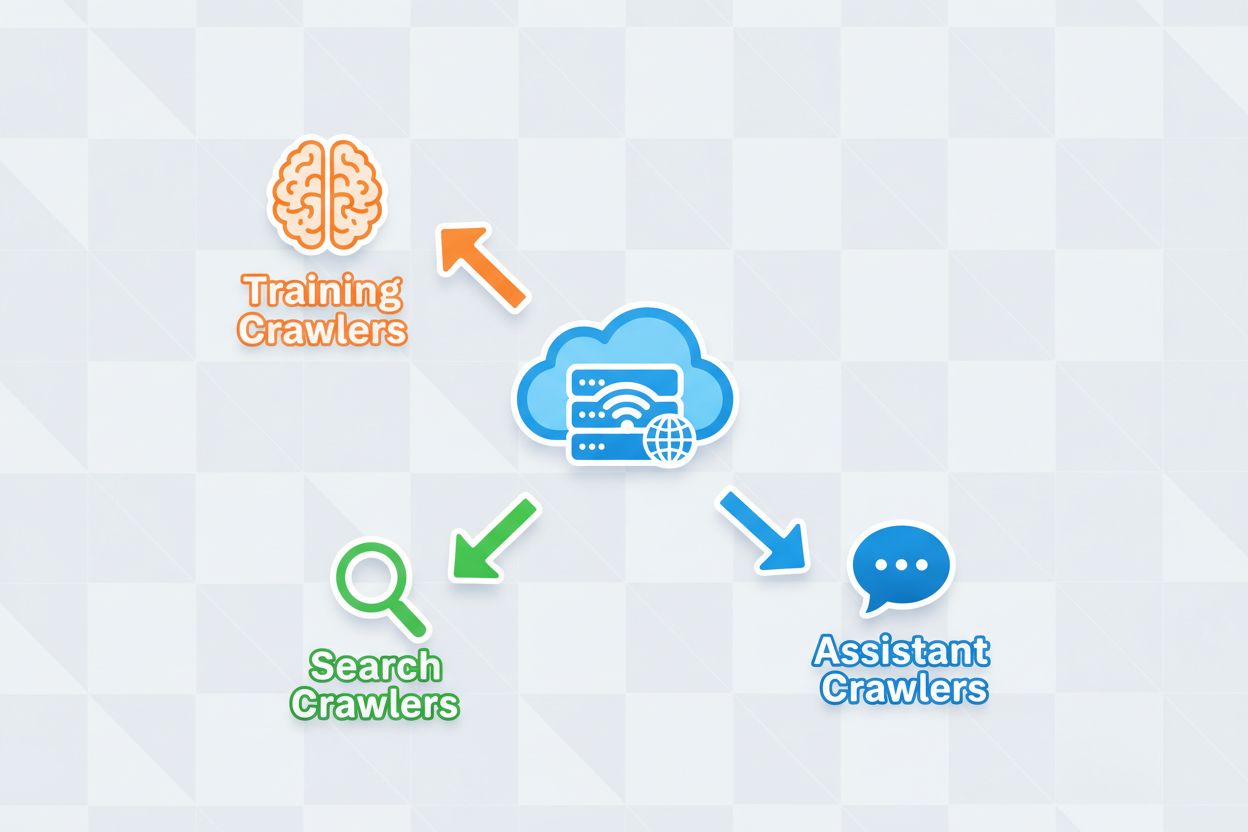

AI crawlers have become a significant force in the digital ecosystem, fundamentally changing how content is discovered, indexed, and utilized across the internet. These automated systems are designed to systematically browse websites, extract data, and feed it into machine learning models that power everything from search engines to generative AI applications. The landscape encompasses three distinct types of crawlers: data scrapers that extract specific information for commercial purposes, search engine crawlers like Googlebot that index content for search results, and AI assistant crawlers that gather training data for large language models. Examples include OpenAI’s GPTBot, Anthropic’s Claude-Web, and Google’s AI Overviews crawler, each with different purposes and impact profiles. According to recent analysis, approximately 21% of the top 1,000 websites have already implemented some form of AI crawler blocking, indicating a growing awareness of the need to manage these automated visitors. Understanding which crawlers are accessing your site and why they’re doing so is the first critical step in making an informed decision about whether to block or allow them. The stakes are high because this decision directly impacts your content’s visibility, your traffic patterns, and ultimately your revenue model.

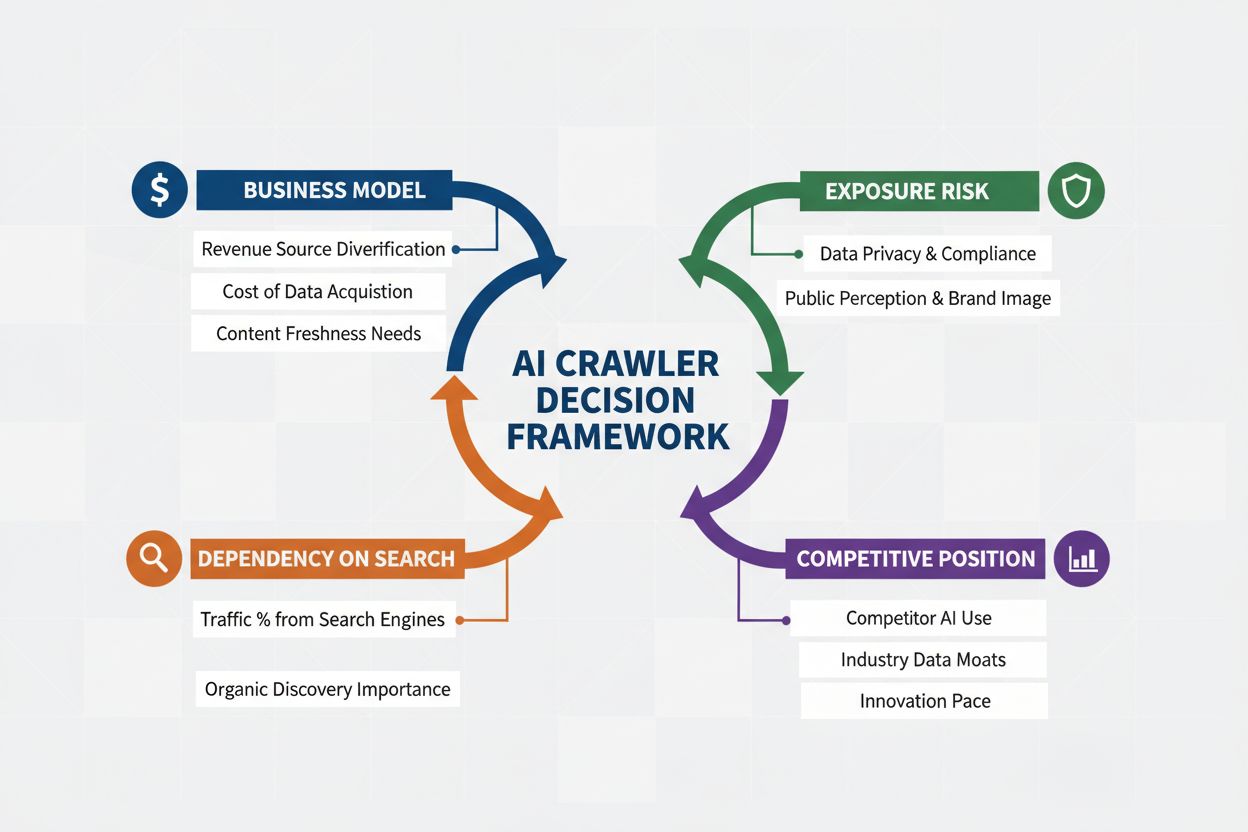

Rather than making a blanket decision to block or allow all AI crawlers, a more sophisticated approach involves evaluating your specific situation through the BEDC Framework, which stands for Business Model, Exposure Risk, Dependency on Organic Search, and Competitive Position. Each of these four factors carries different weight depending on your website’s characteristics, and together they create a comprehensive decision matrix that accounts for the complexity of modern digital publishing. The framework recognizes that there is no one-size-fits-all answer—what works for a news organization may be entirely wrong for a SaaS company, and what benefits an established brand might harm an emerging competitor. By systematically evaluating each factor, you can move beyond emotional reactions to AI and instead make data-driven decisions that align with your business objectives.

| Factor | Recommendation | Key Consideration |

|---|---|---|

| Business Model | Ad-supported sites should be more cautious; subscription models can be more permissive | Revenue dependency on direct user engagement vs. licensing |

| Exposure Risk | Original research and proprietary content warrant blocking; commodity content can be more open | Competitive advantage tied to unique insights or data |

| Organic Search Dependency | High dependency (>40% traffic) suggests allowing Google crawlers but blocking AI assistants | Balance between search visibility and AI training data protection |

| Competitive Position | Market leaders can afford to block; emerging players may benefit from AI visibility | First-mover advantage in AI partnerships vs. content protection |

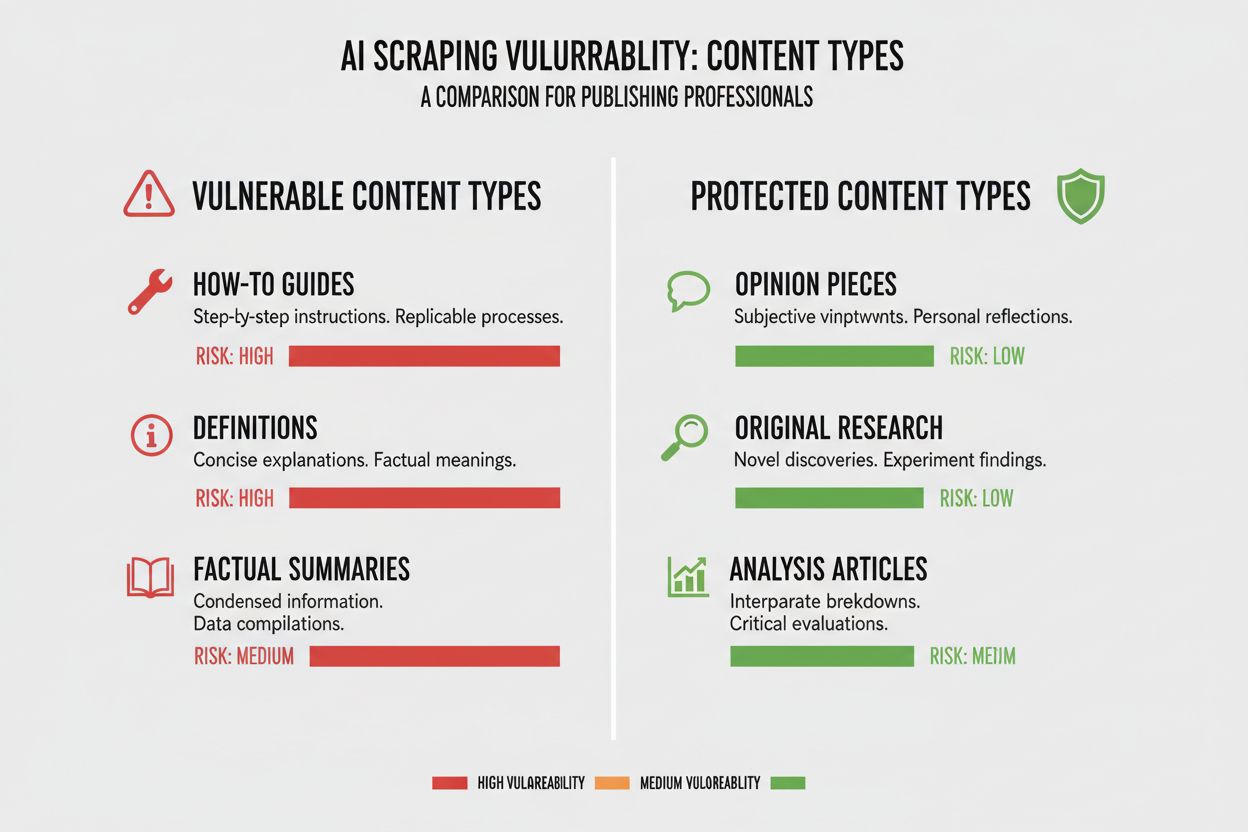

Different types of content carry vastly different levels of vulnerability to AI crawling, and understanding your content’s position in this spectrum is essential for making the right decision. Original research and proprietary data represent your highest-value assets and warrant the strongest protection, as AI models trained on this content can directly compete with your monetization strategy. News and breaking information occupies a middle ground—while time-sensitive value diminishes quickly, being indexed by search engines remains critical for traffic, creating a tension between search visibility and AI training data protection. Commodity content like how-to guides, tutorials, and general reference material is far less vulnerable because it’s widely available across the internet and less likely to be your primary revenue driver. Voice-driven and multimedia content enjoys natural protection because current AI crawlers struggle to extract meaningful value from audio and video, making these formats inherently safer from AI training data extraction. Evergreen educational content and opinion pieces fall somewhere in the middle, valuable for search traffic but less directly threatened by AI competition. The key insight is that your blocking strategy should be proportional to the competitive advantage your content provides—protecting your crown jewels while remaining open to crawlers for content that benefits from broad distribution.

Your dependency on organic search traffic is perhaps the most concrete factor in the AI crawler decision, because it directly quantifies the trade-off between search visibility and content protection. Websites that derive more than 40% of their traffic from organic search face a critical constraint: blocking AI crawlers often means also blocking or limiting Google’s crawlers, which would devastate their search visibility and organic traffic. The distinction between Google-Extended (which crawls for AI training) and Googlebot (which crawls for search indexing) is crucial here, as you can theoretically allow one while blocking the other, though this creates technical complexity. A striking case study from The New York Times illustrates the traffic stakes: the publication received approximately 240,600 visits from AI crawlers over a specific period, demonstrating the scale of AI-driven traffic for major publishers. However, the uncomfortable reality revealed by Akamai data shows that blocking crawlers results in 96% less referral traffic from those sources, suggesting that the traffic contribution from AI crawlers is minimal compared to traditional search. The crawl-to-referral ratio for most AI crawlers is extraordinarily low—often less than 0.15% of crawled content actually generates return visits—meaning that blocking these crawlers has minimal impact on your actual user traffic. For websites with high organic search dependency, the decision to block AI crawlers must be weighed against the risk of accidentally blocking search engine crawlers, which would be far more damaging to your business.

Your revenue model fundamentally shapes how you should approach AI crawlers, because different monetization strategies create different incentives around content distribution and protection. Ad-supported websites face the most acute tension with AI crawlers, because their revenue depends on users visiting their site to see advertisements, and AI models that summarize their content reduce the incentive for users to click through. Subscription-based models can afford to be more permissive with AI crawlers, since their revenue comes from direct user subscriptions rather than ad impressions, and some AI visibility might even drive subscription sign-ups. Hybrid models that combine advertising, subscriptions, and affiliate revenue require more nuanced thinking, as blocking crawlers might protect ad revenue but damage affiliate opportunities or subscription growth. An emerging opportunity that deserves attention is the AI referral model, where websites could potentially earn revenue by allowing AI crawlers to access their content in exchange for attribution and traffic referrals—a model that’s still developing but could reshape the economics of content distribution. For publishers trying to understand the full impact of AI crawlers on their business, tools like AmICited.com provide essential monitoring capabilities to track where your content is being cited and used by AI systems, giving you visibility into the actual value exchange happening with your content. The key is to understand your revenue model deeply enough to predict how AI crawlers will affect each revenue stream, rather than making a blanket decision based on principle alone.

Once you’ve decided to block certain AI crawlers, the technical implementation requires understanding both the capabilities and limitations of the tools at your disposal. The most common approach is using robots.txt, a simple text file placed in your website’s root directory that instructs crawlers which parts of your site they can and cannot access. However, robots.txt has a critical limitation: it’s a voluntary standard that relies on crawlers respecting your instructions, and malicious or aggressive crawlers may ignore it entirely. Here’s an example of how to block specific AI crawlers in your robots.txt file:

User-agent: GPTBot

Disallow: /

User-agent: CCBot

Disallow: /

User-agent: anthropic-ai

Disallow: /

User-agent: Claude-Web

Disallow: /

Beyond robots.txt, you should consider blocking these major AI crawlers:

For more robust protection, many organizations implement CDN-level blocking through services like Cloudflare, which can block traffic at the network edge before it even reaches your servers, providing better performance and security. A dual-layer approach combining robots.txt with CDN-level blocking offers the strongest protection, as it catches both respectful crawlers that honor robots.txt and aggressive crawlers that ignore it. It’s important to note that blocking crawlers at the CDN level requires more technical sophistication and may have unintended consequences if not configured carefully, so this approach is best suited for organizations with dedicated technical resources.

The uncomfortable truth about blocking AI crawlers is that the actual traffic impact is often far smaller than the emotional response to AI scraping would suggest, and the data reveals a more nuanced picture than many publishers expect. According to recent analysis, AI crawlers typically account for only 0.15% of total website traffic for most publishers, a surprisingly small number given the attention this issue receives. However, the growth rate of AI crawler traffic has been dramatic, with some reports showing 7x growth year-over-year in AI crawler requests, indicating that while current impact is small, the trajectory is steep. ChatGPT accounts for approximately 78% of all AI crawler traffic, making OpenAI’s crawler the dominant force in this space, followed by much smaller contributions from other AI companies. The crawl-to-referral ratio data is particularly revealing: while AI crawlers may request millions of pages, they generate actual return visits at rates often below 0.15%, meaning that blocking them has minimal impact on your actual user traffic. Blocking AI crawlers reduces referral traffic by 96%, but since that referral traffic was minimal to begin with (often less than 0.15% of total traffic), the net impact on your business is frequently negligible. This creates a paradox: blocking AI crawlers feels like a principled stand against content theft, but the actual business impact is often so small that it barely registers in your analytics. The real question isn’t whether blocking crawlers will hurt your traffic—it usually won’t—but whether allowing them creates strategic opportunities or risks that outweigh the minimal traffic contribution they provide.

Your competitive position in your market fundamentally shapes how you should approach AI crawlers, because the optimal strategy for a market leader differs dramatically from the optimal strategy for an emerging competitor. Dominant market players like The New York Times, Wall Street Journal, and major news organizations can afford to block AI crawlers because their brand recognition and direct audience relationships mean they don’t depend on AI discovery to drive traffic. Emerging players and niche publishers face a different calculus: being indexed by AI systems and appearing in AI-generated summaries might be one of their few ways to gain visibility against entrenched competitors. The first-mover advantage in AI partnerships could be significant—publishers who negotiate favorable terms with AI companies early might secure better attribution, traffic referrals, or licensing deals than those who wait. There’s also a subsidy effect at play: when dominant publishers block AI crawlers, it creates an incentive for AI companies to rely more heavily on content from publishers who allow crawling, potentially giving those publishers disproportionate visibility in AI systems. This creates a competitive dynamic where blocking might actually harm your position if your competitors are allowing crawlers and gaining AI visibility as a result. Understanding where you stand in your competitive landscape is essential for predicting how your blocking decision will affect your market position relative to competitors.

Making the decision to block or allow AI crawlers requires systematically evaluating your specific situation against concrete criteria. Use this checklist to guide your decision-making process:

Content Exposure Assessment

Traffic Composition Analysis

Market Position Evaluation

Revenue Risk Assessment

Beyond this initial assessment, implement quarterly reviews of your AI crawler strategy, as the landscape is evolving rapidly and your optimal decision today might change within months. Use tools like AmICited.com to track where your content is being cited and used by AI systems, giving you concrete data about the value exchange happening with your content. The key insight is that this decision shouldn’t be made once and forgotten—it requires continuous evaluation and adjustment as the AI landscape matures and your business circumstances change.

A significant emerging opportunity that could reshape the entire AI crawler landscape is Cloudflare’s pay-per-crawl feature, which introduces a permission-based internet model where website owners can monetize AI crawler access rather than simply blocking or allowing it. This approach recognizes that AI companies derive value from crawling your content, and rather than engaging in an adversarial blocking war, you could instead negotiate compensation for that access. The model relies on cryptographic verification to ensure that only authorized crawlers can access your content, preventing unauthorized scraping while allowing legitimate AI companies to pay for access. This creates granular control over which crawlers can access which content, allowing you to monetize high-value content while remaining open to search engines and other beneficial crawlers. The pay-per-crawl model also enables AI audit capabilities, where you can see exactly what content was crawled, when it was crawled, and by whom, providing transparency that’s impossible with traditional blocking approaches. For publishers implementing this strategy, AmICited.com’s monitoring capabilities become even more valuable, as you can track not just where your content appears in AI systems, but also verify that you’re receiving appropriate compensation for that usage. While this model is still emerging and adoption is limited, it represents a potentially more sophisticated approach than the binary choice between blocking and allowing—one that acknowledges the mutual value in the relationship between publishers and AI companies while protecting your interests through contractual and technical mechanisms.

Blocking AI crawlers prevents them from accessing your content through robots.txt or CDN-level blocking, protecting your content from being used in AI training. Allowing crawlers means your content can be indexed by AI systems, potentially appearing in AI-generated summaries and responses. The choice depends on your content type, revenue model, and competitive position.

Blocking AI crawlers won't directly hurt your SEO if you only block AI-specific crawlers like GPTBot while allowing Googlebot. However, if you accidentally block Googlebot, your search rankings will suffer significantly. The key is using granular control to block only AI training crawlers while preserving search engine access.

Yes, you can use robots.txt to block specific crawlers by their user-agent string while allowing others. For example, you could block GPTBot while allowing Google-Extended, or vice versa. This granular approach lets you protect your content from certain AI companies while remaining visible to others.

robots.txt is a voluntary standard that relies on crawlers respecting your instructions—some AI companies ignore it. CDN-level blocking (like Cloudflare's) blocks traffic at the network edge before it reaches your servers, providing stronger enforcement. A dual-layer approach using both methods offers the best protection.

You can check your server logs for user-agent strings from known AI crawlers like GPTBot, CCBot, and Claude-Web. Tools like AmICited.com provide monitoring capabilities to track where your content appears in AI systems and how often it's being accessed by AI crawlers.

Pay-per-crawl is an emerging model where AI companies pay for access to your content. While still in beta with limited adoption, it represents a potential new revenue stream. The viability depends on the volume of AI crawler traffic and the rates AI companies are willing to pay.

If an AI crawler ignores your robots.txt directives, implement CDN-level blocking through services like Cloudflare. You can also configure your server to return 403 errors to known AI crawler user-agents. For persistent violations, consider legal action or contacting the AI company directly.

Review your AI crawler strategy quarterly, as the landscape is evolving rapidly. Monitor changes in AI crawler traffic, new crawlers entering the market, and shifts in your competitive position. Use tools like AmICited.com to track how your content is being used by AI systems and adjust your strategy accordingly.

Track where your content appears in AI-generated responses and understand the impact of AI crawlers on your business with AmICited.com's comprehensive monitoring platform.

Learn which AI crawlers to allow or block in your robots.txt. Comprehensive guide covering GPTBot, ClaudeBot, PerplexityBot, and 25+ AI crawlers with configurat...

Understand how AI crawlers like GPTBot and ClaudeBot work, their differences from traditional search crawlers, and how to optimize your site for AI search visib...

Learn how to selectively allow or block AI crawlers based on business objectives. Implement differential crawler access to protect content while maintaining vis...