Authority Building for AI Visibility

Learn how to build authority for AI visibility. Discover E-E-A-T strategies, topical authority, and how to get cited in AI Overviews and LLM responses.

Learn how to build topical authority for LLMs with semantic depth, entity optimization, and content clusters. Master the strategies that make AI systems cite your brand.

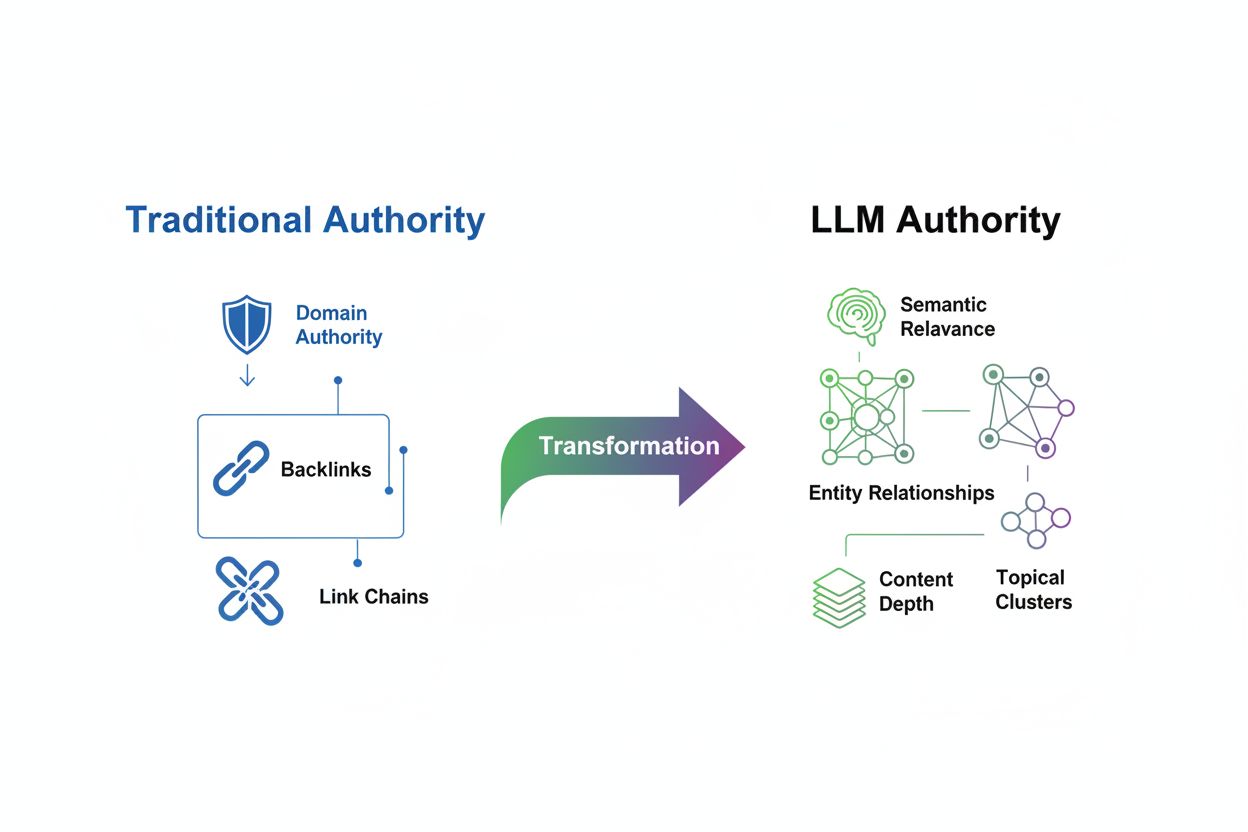

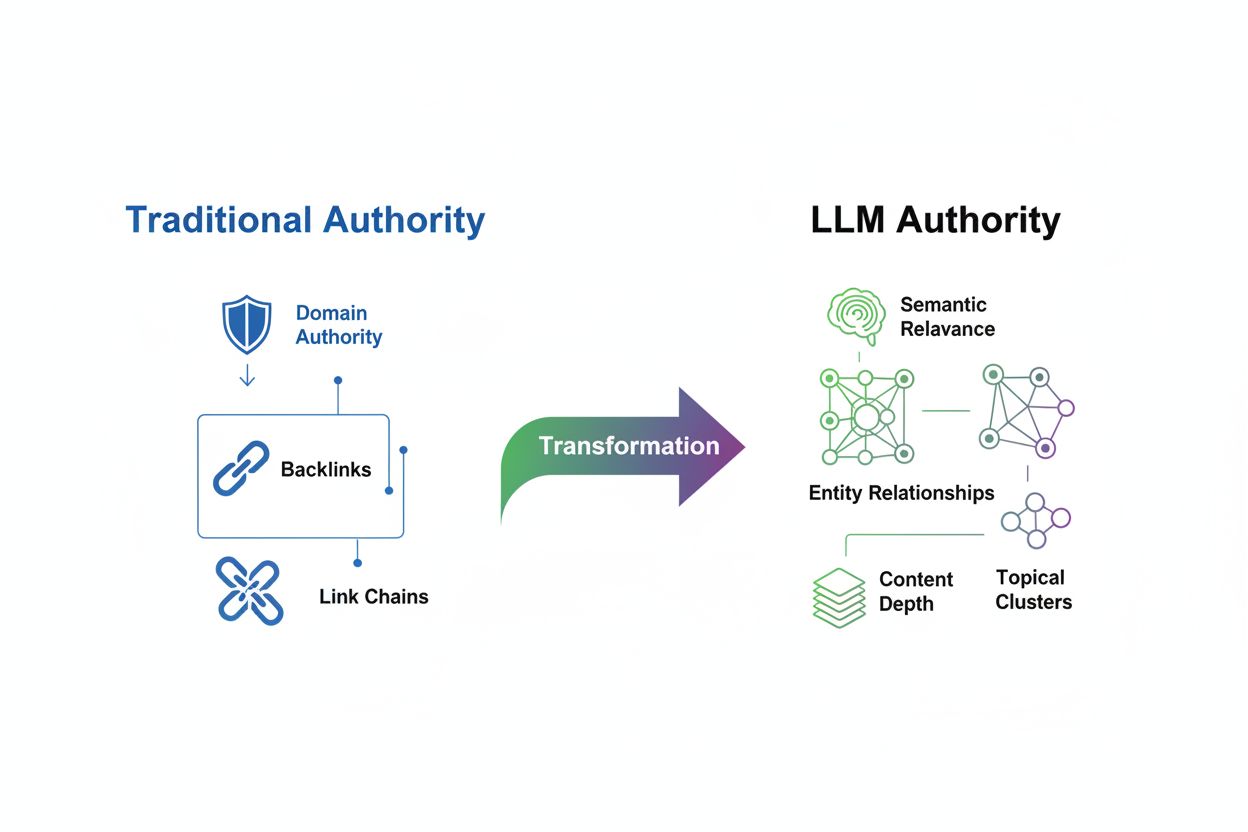

The evaluation of topical authority has undergone a fundamental transformation in the age of Large Language Models. Traditional SEO treated backlinks and keyword density as the primary authority signals; LLMs instead evaluate content through a different lens focused on semantic relevance, contextual depth, and entity relationships. This shift is more than a minor algorithm update: it changes how search engines and AI systems decide which sources deserve visibility and trust. The old model rewarded volume and link quantity; the new paradigm rewards semantic richness and comprehensive topic coverage. The transition matters because brands that keep optimizing for outdated authority signals risk becoming invisible in AI-generated responses, even if they maintain strong traditional SEO metrics. The future of visibility belongs to those who recognize that LLMs judge authority by depth, consistency, and semantic clarity rather than by the accumulation of external links.

Topical authority is the level of expertise, depth, and consistency a website demonstrates across a specific subject area, as recognized by both search engines and AI systems. Unlike traditional authority metrics, which rely heavily on external validation through backlinks, LLM-based authority evaluation focuses on how comprehensively and coherently a domain covers its chosen topic. Semantic relevance is the cornerstone of this approach: LLMs assess whether content fully addresses user intent, covers multiple angles of a topic, and maintains logical connections between related concepts. The distinction matters in practice. A website with 50 thin, keyword-stuffed articles may still score well on traditional signals but show weak topical authority in the LLM context, while a competitor with 10 deeply researched, interconnected articles may dominate AI-generated responses.

| Authority Signal | Traditional SEO | LLM Evaluation |
|---|---|---|
| Primary Metric | Backlink quantity and domain authority | Semantic depth and topic coverage |
| Content Approach | Keyword optimization and volume | Comprehensive, interconnected content clusters |
| Entity Recognition | Minimal emphasis | Critical for understanding relationships |
| Internal Linking | Secondary consideration | Essential for demonstrating expertise |
| Measurement Focus | Domain-level metrics (DA, DR, DP) | Topic-level visibility and citation frequency |

Large Language Models evaluate authority through mechanisms that differ fundamentally from traditional search algorithms. An LLM does not count backlinks or scan for keyword frequency; instead, it relies on patterns learned from training on billions of documents to understand how concepts relate to one another. The model weighs entity relationships (the connections between people, organizations, products, and concepts) to judge whether a source genuinely understands a topic or merely mentions it in passing. Semantic richness also plays a central role: LLMs respond to the depth of explanation, the variety of perspectives covered, and the logical flow between ideas. Finally, consistency across multiple pieces of content signals genuine expertise; when a domain repeatedly demonstrates knowledge of interconnected subtopics, an LLM is more likely to treat it as authoritative.

In short, the key mechanisms LLMs use to recognize authority are entity relationships, semantic richness, and consistency across related content.

In the LLM era, content depth has become far more valuable than content volume. One comprehensive 5,000-word guide that explores a topic from multiple angles, with real-world examples and actionable insights, is more likely to be cited than ten 500-word articles that each touch the same subject superficially. This principle challenges the traditional content marketing playbook, which rewarded prolific publishing. LLMs favor semantic coverage (the degree to which content addresses all relevant dimensions of a topic) over keyword repetition. When you create content that explores a topic from multiple perspectives, answers related questions, and connects to broader concepts, you build topic clusters: networks of interconnected content that collectively demonstrate expertise. For example, a financial services firm building authority on “retirement planning” should create content on investment strategies, tax implications, healthcare costs, Social Security optimization, and estate planning, rather than publishing 20 variations of “how to plan for retirement.” The depth approach signals to LLMs that your organization understands the whole topic ecosystem, making your content more likely to be cited in AI-generated responses.
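To make semantic coverage concrete, the sketch below checks which subtopics from the retirement-planning example are covered by at least one page. It assumes you tag each page by hand with the subtopics it covers in depth; the URLs, tags, and the `coverage_report` function are hypothetical, and tools such as Clearscope or MarketMuse measure coverage with their own methods.

```python
# Hypothetical subtopic map for the retirement-planning example.
REQUIRED_SUBTOPICS = {
    "investment strategies",
    "tax implications",
    "healthcare costs",
    "social security optimization",
    "estate planning",
}


def coverage_report(required: set[str], published: dict[str, set[str]]) -> tuple[float, set[str]]:
    """Return the share of required subtopics covered by at least one page, plus the gaps.

    `published` maps each page URL to the subtopics an editor has tagged it as
    covering in depth (an assumed input, not the output of any specific tool).
    """
    covered = set().union(*published.values()) & required
    gaps = required - covered
    return len(covered) / len(required), gaps


# Hypothetical site: one pillar plus two near-duplicate "how to plan" articles.
published = {
    "/retirement-planning/": {"investment strategies", "tax implications"},
    "/retirement-planning/how-to-plan-for-retirement/": {"investment strategies"},
    "/retirement-planning/retirement-planning-steps/": {"investment strategies"},
}
ratio, gaps = coverage_report(REQUIRED_SUBTOPICS, published)
print(f"coverage: {ratio:.0%}")
print("gaps:", sorted(gaps))
```

The two extra "how to plan" pages add volume but no coverage: the ratio stays at 40% until healthcare costs, Social Security optimization, and estate planning get their own in-depth articles.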

Creating effective topic clusters requires a strategic, hierarchical approach to content organization that signals expertise to both users and LLMs. The foundation of this strategy is the pillar page—a comprehensive, authoritative resource that covers the main topic broadly and serves as the hub for all related content. Supporting this pillar are cluster pages, which dive deeper into specific subtopics while maintaining clear connections back to the main pillar through strategic internal linking.

Follow these steps to build an effective topic cluster and internal linking strategy:

Identify Your Core Pillar Topic: Choose a broad subject area where your organization has genuine expertise and market demand exists. This should be specific enough to demonstrate authority but broad enough to support multiple supporting articles. Example: “Enterprise Resource Planning (ERP) Implementation” rather than just “ERP.”

Map Related Subtopics and Questions: Use tools like SEMrush, AnswerThePublic, and Google’s “People Also Ask” to identify all the questions, concerns, and subtopics your audience cares about. Create a visual map showing how these subtopics relate to your main pillar and to each other.

Create Your Pillar Page: Develop a comprehensive guide (3,000-5,000+ words) that addresses the main topic holistically. Include an overview of all subtopics, key definitions, and links to your cluster content. Use clear headings and logical organization to help both users and LLMs understand the content structure.

Develop Cluster Content: Write 8-15 supporting articles that explore specific subtopics in depth. Each cluster article should be 1,500-2,500 words and focus on a single aspect of your pillar topic. Ensure each article links back to the pillar page using contextual anchor text.

Implement Strategic Internal Linking: Connect cluster articles to each other when relevant, creating a web of related content. Use descriptive anchor text that includes relevant keywords and entity names. Add “related articles” sections and navigation widgets to encourage exploration of your content network.

Maintain Consistency and Update Regularly: Periodically review your cluster for gaps, outdated information, and new subtopics. Add new cluster articles as your topic area evolves, and refresh existing content to maintain relevance and accuracy.
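The linking rules in the steps above can be expressed as a small data model that produces a checklist of internal links. This is a minimal sketch, assuming hand-maintained cluster definitions; `TopicCluster`, `ClusterPage`, `link_plan`, and the ERP URLs are hypothetical names, not part of any CMS or SEO tool.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterPage:
    url: str
    subtopic: str
    # URLs of sibling cluster pages on closely related subtopics.
    related: set[str] = field(default_factory=set)


@dataclass
class TopicCluster:
    pillar_url: str
    pages: list[ClusterPage]


def link_plan(cluster: TopicCluster) -> set[tuple[str, str]]:
    """Return the (source, target) internal links a pillar/cluster structure calls for."""
    links: set[tuple[str, str]] = set()
    for page in cluster.pages:
        links.add((cluster.pillar_url, page.url))  # pillar links out to every cluster page
        links.add((page.url, cluster.pillar_url))  # every cluster page links back to the pillar
        for sibling in page.related:
            if sibling != page.url:
                links.add((page.url, sibling))  # cross-link related cluster pages
    return links


# Hypothetical ERP implementation cluster.
erp = TopicCluster(
    pillar_url="/erp-implementation/",
    pages=[
        ClusterPage("/erp-implementation/data-migration/", "data migration",
                    {"/erp-implementation/testing/"}),
        ClusterPage("/erp-implementation/testing/", "testing"),
        ClusterPage("/erp-implementation/change-management/", "change management"),
    ],
)
for src, dst in sorted(link_plan(erp)):
    print(f"{src} -> {dst}")
```

The plan only says which links should exist; the anchor text for each one is still best written by hand so it describes the target subtopic.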

Entity optimization represents a paradigm shift in how we approach content structure and SEO. Rather than optimizing for keywords, we now optimize for entities: the specific people, organizations, products, locations, and concepts that LLMs use to understand content meaning. When you clearly define and consistently reference entities throughout your content, you make it easier for search engines and LLMs to map your content onto the broader information ecosystem. Schema.org markup provides the technical foundation for entity optimization, letting you state explicitly which entities your content discusses and how they relate to one another.

Practical entity optimization guidance includes:

Clearly Define Key Entities: Identify the primary entities your content addresses (e.g., “Google Analytics,” “conversion rate optimization,” “A/B testing”) and mention them explicitly in headings, subheadings, and body text. Avoid vague language; be specific about which products, people, or concepts you’re discussing.

Utilize Structured Data Markup: Implement Schema.org markup to define entities and their relationships. Use Article schema for blog posts, Organization schema for your company, Product schema for offerings, and Person schema for author information. This structured data makes key information easier for search engines and AI systems to extract and interpret.

Connect Entities to Authoritative Sources: Link your entities to authoritative external references such as Wikipedia, DBpedia, and Google Knowledge Graph entries, for example through the Schema.org `sameAs` property. This external validation strengthens the credibility of your entity references and helps LLMs understand the broader context.

Maintain Consistent Entity Mentions: Use the same terminology, spelling, and naming conventions for entities across all your content, website, social media, and industry platforms. Consistency signals to LLMs that you’re discussing the same entity, building a coherent knowledge base.
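As a minimal sketch of the markup described above, the following script builds JSON-LD for an article using the Schema.org `Article`, `Person`, and `Organization` types, with the entities the article is `about` tied to Wikipedia through `sameAs`. The headline, author, organization, URL, and dates are hypothetical placeholders; validate real markup with a tool such as Google's Rich Results Test before publishing.

```python
import json

# Placeholder values for illustration; replace with your real page data.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "A Practical Guide to A/B Testing",
    "datePublished": "2025-01-15",
    "dateModified": "2025-03-02",
    "author": {
        "@type": "Person",
        "name": "Jane Example",
        "jobTitle": "Head of Conversion Rate Optimization",
    },
    "publisher": {
        "@type": "Organization",
        "name": "Example Analytics Co.",
        "url": "https://www.example.com",
    },
    # Entities the article is about, tied to external references via sameAs.
    "about": [
        {"@type": "Thing", "name": "A/B testing",
         "sameAs": "https://en.wikipedia.org/wiki/A/B_testing"},
        {"@type": "Thing", "name": "Conversion rate optimization",
         "sameAs": "https://en.wikipedia.org/wiki/Conversion_rate_optimization"},
    ],
}

# Emit the tag to place in the page's HTML.
print('<script type="application/ld+json">')
print(json.dumps(article, indent=2))
print("</script>")
```

Using the same entity names in the markup as in headings and body text keeps entity mentions consistent, and the `datePublished` and `dateModified` fields make update timestamps machine-readable.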

Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, and Trustworthiness) has evolved in meaning as LLMs become central to search. While these signals remain important for traditional rankings, their interpretation has shifted in AI-driven contexts. LLMs evaluate them not through backlinks alone but through content analysis, author credentials, and consistency patterns.

Experience signals demonstrate that your organization or author has direct, hands-on involvement with the topic. LLMs recognize experience through case studies, client results, personal anecdotes from qualified authors, and detailed walkthroughs of processes. Rather than claiming expertise, show it through documented examples of solving real problems. Include author bios that establish relevant background, and feature testimonials or results from actual clients or users.

Expertise is conveyed through comprehensive, nuanced content that demonstrates deep subject knowledge. LLMs assess expertise by evaluating whether your content covers edge cases, acknowledges complexity, cites relevant research, and provides insights that go beyond surface-level information. Publish content that addresses advanced topics, explores counterintuitive findings, and demonstrates understanding of industry debates and evolving best practices.

Authoritativeness in the LLM era comes from being recognized as a go-to source within your field. It is built through consistent publication of high-quality content, citations from other authoritative sources, speaking engagements, published research, and industry recognition. Because LLMs learn from large bodies of text, sources that are frequently cited alongside other recognized authorities are more likely to be associated with the topic and to surface in answers to expert-level queries.

Trustworthiness is established through transparency, accuracy, and accountability. Include publication dates and update timestamps on all content, cite your sources clearly, disclose potential conflicts of interest, and correct errors promptly. Use author credentials, professional certifications, and affiliations to establish legitimacy. AI systems increasingly aim to detect misleading claims and to favor sources that prioritize accuracy over sensationalism.

Tracking topical authority requires shifting focus from traditional SEO measurements to indicators that reflect how LLMs perceive your expertise. Domain Authority and Domain Rating remain relevant for traditional search, but they show little relationship with LLM visibility: research from Search Atlas analyzing 21,767 domains found correlations between authority metrics and LLM visibility ranging from -0.08 to -0.21. These weak, slightly negative correlations suggest that traditional authority signals have limited influence on AI-generated results.
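To put those figures in perspective, squaring Pearson's correlation coefficient r gives the share of variance two variables share linearly. The sketch below computes r² for the reported range and shows how you might compute r on your own per-domain data (Python's standard library also offers `statistics.correlation` from version 3.10). The per-domain numbers are toy values for illustration, not measurements.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation: covariance divided by the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least two points")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)  # the 1/n factors cancel, so raw sums suffice


def variance_explained(r: float) -> float:
    """Share of variance the two variables share linearly (r squared)."""
    return r * r


# The range reported by the Search Atlas study.
for r in (-0.08, -0.21):
    print(f"r = {r:+.2f} -> r^2 = {variance_explained(r):.1%}")

# Toy per-domain values, NOT real measurements: an authority score (e.g. DR)
# and the share of tracked prompts whose answer cites that domain.
authority = [12, 25, 33, 41, 58, 64, 72, 80]
citation_rate = [0.30, 0.05, 0.22, 0.10, 0.18, 0.04, 0.15, 0.08]
print(f"sample r = {pearson_r(authority, citation_rate):+.2f}")
```

An r of -0.21 corresponds to about 4.4% shared variance and -0.08 to about 0.6%, which is why such values are read as showing little linear relationship. Correlation alone does not establish cause in either direction.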

Key metrics and tools for monitoring topical authority include:

LLM Visibility Score: Track how frequently and prominently your content appears in responses from ChatGPT, Gemini, and Perplexity. Tools like Search Atlas’s LLM Visibility feature measure citation frequency and visibility percentages across multiple models.

Topic-Level Traffic: Monitor organic traffic not just for individual keywords but for entire topic clusters. Use Google Analytics to segment traffic by topic area and track whether you’re gaining visibility for multiple related queries.

Content Completeness Metrics: Assess whether your content covers all major subtopics and questions related to your core topic. Tools like Clearscope and MarketMuse measure topical coverage and identify content gaps.

Internal Linking Strength: Analyze your internal link structure to ensure cluster pages connect logically to pillar content and to each other. Tools like Screaming Frog help visualize and optimize your internal linking architecture.

Entity Consistency Tracking: Monitor whether you consistently mention and define key entities across your content. Google Search Console does not report entities directly, but its query data shows which searches your pages appear for, a useful proxy for the entities your content is associated with.
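One simple way to define an LLM visibility score is the share of tracked prompts whose answer cites your domain, computed separately for each model. The sketch assumes you already have a log of (model, prompt, cited domains) records; the record format, the `citation_rates` function, and the sample data are hypothetical, and commercial tools may also weight citation position or prominence.

```python
from collections import defaultdict

# Assumed record format: (model, prompt, domains cited in that answer).
Response = tuple[str, str, list[str]]


def citation_rates(responses: list[Response], domain: str) -> dict[str, float]:
    """Fraction of each model's answers that cite `domain` at least once."""
    total: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for model, _prompt, domains in responses:
        total[model] += 1
        if domain in domains:
            cited[model] += 1
    return {model: cited[model] / total[model] for model in total}


# Hypothetical log for two models and two tracked prompts.
log: list[Response] = [
    ("chatgpt", "erp implementation checklist", ["example.com", "other.org"]),
    ("chatgpt", "erp data migration steps", ["other.org"]),
    ("perplexity", "erp implementation checklist", ["example.com"]),
    ("perplexity", "erp data migration steps", ["example.com", "vendor.net"]),
]
print(citation_rates(log, "example.com"))  # {'chatgpt': 0.5, 'perplexity': 1.0}
```

Matching is by exact domain string, so normalize `www.` prefixes and subdomains before counting if your log mixes them.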

Many organizations pursuing topical authority inadvertently undermine their efforts through preventable mistakes. Understanding these pitfalls helps you avoid wasting resources on ineffective strategies.

Common mistakes to avoid include:

Publishing Thin Content: Creating numerous short articles (300-500 words) that each touch on a topic without providing real depth. LLMs recognize this as insufficient coverage and prefer fewer, more comprehensive resources.

Poor Internal Linking Structure: Failing to connect related content or using generic anchor text like “click here” instead of descriptive, entity-rich anchor text. This prevents LLMs from understanding how your content pieces relate to each other.

Inconsistent Entity Mentions: Using different terminology for the same concept across articles (e.g., “conversion rate optimization” in one article, “CRO” in another, “improving conversions” in a third). This fragmentation prevents LLMs from recognizing your consistent expertise.

Ignoring Content Gaps: Publishing content on some aspects of a topic while leaving major subtopics uncovered. LLMs recognize incomplete topic coverage and may favor competitors with more comprehensive resources.

Neglecting Content Updates: Allowing older content to become outdated while publishing new articles on the same topics. This creates redundancy and confusion about which resource represents your current expertise.
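The inconsistent-terminology mistake can be caught with a simple text scan. This sketch assumes a hand-written list of variants per canonical entity (the `VARIANTS` mapping and both functions are hypothetical) and flags articles that use a variant without ever using the canonical name.

```python
import re
from collections import Counter

# Hypothetical mapping from a canonical entity name to variants worth catching.
VARIANTS = {
    "conversion rate optimization": ["conversion rate optimization", "CRO", "improving conversions"],
}


def variant_usage(articles: dict[str, str], canonical: str) -> dict[str, Counter]:
    """Count, per article, whole-word, case-insensitive matches of each variant."""
    report: dict[str, Counter] = {}
    for url, text in articles.items():
        counts: Counter = Counter()
        for variant in VARIANTS[canonical]:
            pattern = r"\b" + re.escape(variant) + r"\b"
            n = len(re.findall(pattern, text, flags=re.IGNORECASE))
            if n:
                counts[variant] = n
        report[url] = counts
    return report


def inconsistent(report: dict[str, Counter], canonical: str) -> list[str]:
    """Articles that mention the entity only through non-canonical variants."""
    return sorted(url for url, counts in report.items() if counts and canonical not in counts)


articles = {
    "/cro-guide/": "Conversion rate optimization starts with research. CRO teams test one change at a time.",
    "/ab-testing/": "CRO depends on clean A/B tests.",
    "/landing-pages/": "Tips for improving conversions on landing pages.",
}
report = variant_usage(articles, "conversion rate optimization")
print(inconsistent(report, "conversion rate optimization"))  # ['/ab-testing/', '/landing-pages/']
```

Flagging only articles that never use the canonical name is a deliberate choice: introducing "CRO" after spelling out "conversion rate optimization" is consistent usage, while relying on the abbreviation or a paraphrase alone is not.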

Building topical authority is a structured process that requires planning, execution, and ongoing optimization. This roadmap provides a clear path forward.

Follow this implementation roadmap to build LLM-recognized topical authority:

Conduct a Topical Authority Audit: Analyze your existing content to identify your strongest topic areas and gaps. Map out which topics you currently cover, which subtopics are missing, and where your content is thin or outdated. Use tools like SEMrush’s Topic Research and Ahrefs to understand what competitors are covering.

Define Your Core Topical Pillars: Select 3-5 core topic areas where your organization has genuine expertise and market demand exists. These should be specific enough to demonstrate authority but broad enough to support multiple supporting articles. Document why your organization is uniquely qualified to speak on these topics.

Create a Comprehensive Content Map: Develop a visual representation of your pillar pages and cluster content. Show how subtopics relate to main pillars and to each other. Identify which content already exists, which needs to be created, and which should be consolidated or removed.

Develop or Refresh Pillar Pages: Create comprehensive pillar pages (3,000-5,000+ words) for each core topic. Ensure each pillar covers the topic broadly, includes clear definitions of key entities, and links to all supporting cluster content. Optimize pillar pages with schema markup to help LLMs understand the content structure.

Build Cluster Content and Internal Linking: Create 8-15 supporting articles for each pillar, each exploring a specific subtopic in depth. Implement strategic internal linking using descriptive anchor text. Ensure cluster articles link back to the pillar and to related cluster articles where relevant.

Implement Entity Optimization and Schema Markup: Add Schema.org markup throughout your content to explicitly define entities and their relationships. Ensure consistent entity mentions across all content. Link entities to authoritative external sources to strengthen credibility.
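Once the roadmap is underway, a crawl of your site can verify the internal linking called for in the cluster-building step. The sketch assumes you have exported internal links as (source, target) URL pairs from a site crawler such as Screaming Frog; the export step, the `audit_cluster` function, and the URLs are assumptions for illustration.

```python
def audit_cluster(pillar: str, cluster_pages: set[str],
                  links: set[tuple[str, str]]) -> dict[str, list[str]]:
    """Compare crawled (source, target) internal links against pillar/cluster linking rules."""
    inside = cluster_pages | {pillar}
    return {
        # Cluster pages that never link back to the pillar.
        "missing_link_to_pillar": sorted(p for p in cluster_pages if (p, pillar) not in links),
        # Cluster pages the pillar does not link to.
        "not_linked_from_pillar": sorted(p for p in cluster_pages if (pillar, p) not in links),
        # Cluster pages with no inbound link from the pillar or another cluster page.
        "orphans": sorted(
            p for p in cluster_pages
            if not any(dst == p and src in inside and src != p for src, dst in links)
        ),
    }


# Hypothetical crawl of an ERP cluster.
links = {
    ("/erp/", "/erp/data-migration/"),
    ("/erp/data-migration/", "/erp/"),
    ("/erp/data-migration/", "/erp/testing/"),
    ("/erp/testing/", "/erp/"),
}
pages = {"/erp/data-migration/", "/erp/testing/", "/erp/change-management/"}
for check, urls in audit_cluster("/erp/", pages, links).items():
    print(f"{check}: {urls}")
```

Running the audit after each publishing batch shows which cluster pages still need links before the structure reads as one connected topic.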

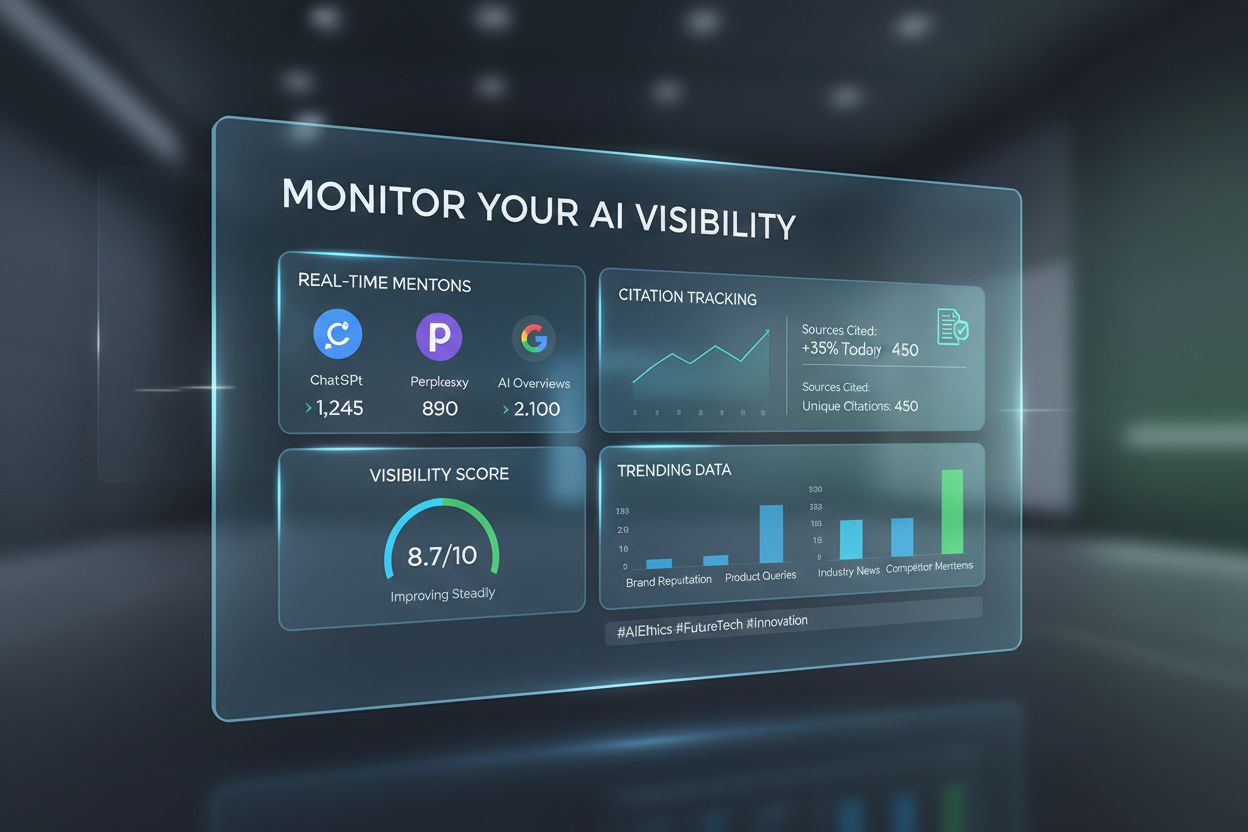

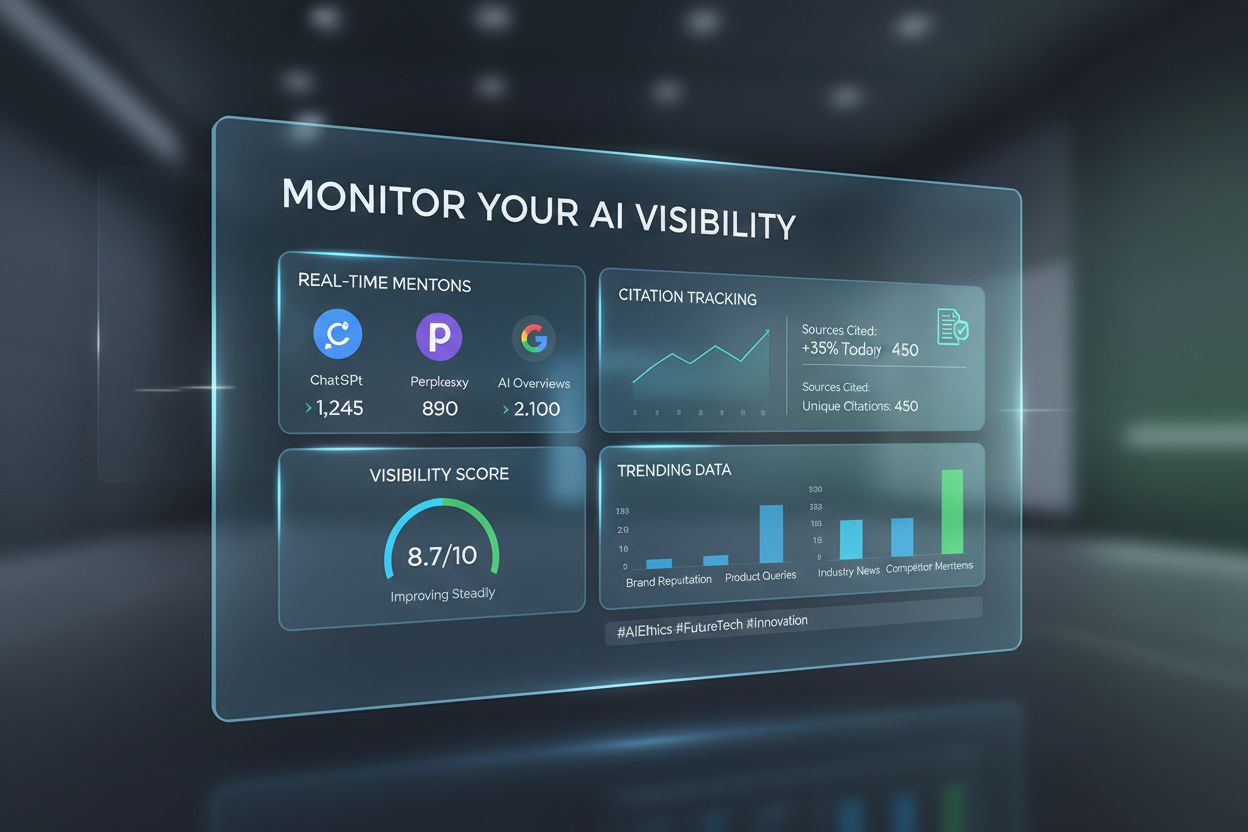

As your topical authority grows, tracking how it translates to visibility in AI systems becomes essential. AmICited is a specialized platform designed to monitor your brand’s presence and citations across Large Language Models, providing visibility into how AI systems recognize and reference your content. Unlike traditional SEO tools that focus on Google rankings, AmICited specifically tracks LLM visibility—measuring how often and how prominently your brand appears in responses from ChatGPT, Gemini, Perplexity, and other generative AI platforms.

The platform offers several key benefits for monitoring your authority in AI:

Cross-Platform Citation Tracking: Monitor your brand mentions and citations across multiple LLMs simultaneously, understanding which platforms recognize your authority most strongly and where you have visibility gaps.

Competitive Benchmarking: Compare your LLM visibility against competitors in your industry. Understand which competitors are being cited more frequently and analyze their content strategies to identify opportunities.

Citation Context Analysis: See not just that you’re cited, but how you’re cited. Understand which topics trigger your citations, what context LLMs use when mentioning your brand, and whether citations are positive and accurate.

Visibility Trend Monitoring: Track how your LLM visibility changes over time as you implement topical authority improvements. Measure the impact of new content, content updates, and structural changes on your AI-driven visibility.

Authority Signal Insights: Receive recommendations on which content gaps to fill, which topics to expand, and which entities to optimize based on LLM citation patterns and competitive analysis.

By using AmICited alongside traditional SEO tools, you gain a complete picture of your authority in both traditional search and AI-driven discovery. This dual perspective is essential in 2025 and beyond, as LLM-powered search becomes increasingly central to how users discover information. The brands that monitor and optimize for both traditional and AI visibility will maintain competitive advantage as search continues to evolve toward conversational, AI-generated responses.

Topical authority measures how comprehensively and consistently you cover a subject area, while keyword ranking focuses on individual search terms. LLMs evaluate topical authority by assessing semantic depth, entity relationships, and content interconnectedness. A site can rank for specific keywords without having true topical authority, but topical authority typically leads to visibility across multiple related queries and AI-generated responses.

Building topical authority is a long-term strategy typically requiring 3-6 months to show initial results and 6-12 months to establish strong recognition. The timeline depends on your starting point, content quality, competition level, and how consistently you implement the strategy. LLMs recognize authority through patterns over time, so consistency and depth matter more than speed.

Yes, absolutely. Unlike traditional authority metrics that favor established domains with many backlinks, LLMs evaluate topical authority based on semantic depth and content quality. A small brand with 10 comprehensive, interconnected articles on a specific topic can outrank a large publisher with 100 thin articles. Focus on depth, consistency, and entity optimization rather than trying to match volume.

Backlinks remain relevant for traditional Google rankings but show weak correlation with LLM visibility. Search Atlas research found correlations between traditional authority metrics and LLM visibility ranging from -0.08 to -0.21. While backlinks still matter for SEO, LLMs prioritize semantic relevance, content depth, and entity relationships. Focus on creating exceptional content that naturally attracts citations rather than pursuing links for their own sake.

Signs of topical authority include: appearing in AI-generated responses for multiple related queries, consistent citations across different LLMs, ranking for topic clusters rather than isolated keywords, high engagement metrics on pillar and cluster content, and recognition as a go-to resource in your industry. Use tools like AmICited to track LLM citations and Search Atlas to monitor topic-level visibility.

You should focus on both, but with different priorities. Topical authority is increasingly important for AI-driven discovery and long-term visibility, while traditional SEO remains critical for Google rankings. The good news is that strategies that build topical authority (semantic depth, entity optimization, content clusters) also improve traditional SEO. Start with topical authority as your foundation, and traditional SEO benefits will follow.

User engagement signals like dwell time, scroll depth, and return visits indicate that your content provides genuine value. LLMs do not observe these metrics directly, but engagement can shape the search rankings that AI search features often draw on. When users spend time reading your content and explore related articles in your cluster, that behavior suggests your content is comprehensive and useful, which makes user experience optimization a sensible part of building topical authority.

LLMs analyze how you mention and connect different entities (people, organizations, products, concepts) throughout your content. When you consistently reference related entities and explain their relationships, LLMs recognize this as evidence of comprehensive understanding. Schema markup helps by explicitly defining entity relationships. For example, connecting 'retirement planning' with 'Social Security,' 'investment strategies,' and 'tax optimization' shows you understand the topic ecosystem.

