Citation Quality Score

Learn what Citation Quality Score is and how it measures the prominence, context, and sentiment of AI citations. Discover how to evaluate citation quality, impl...

Learn why citation quality matters more than volume. Discover how to measure and optimize AI mentions, links, and embeddings for maximum business impact.

Most brands obsess over citation volume (how many times their brand appears in AI responses) but miss a critical point: not all citations are created equal. A citation buried in a “see more sources” section generates a click-through rate (CTR) below 2%, while the same citation featured prominently in an AI answer drives 15-25% CTR, roughly a 10x difference that most monitoring tools ignore. If you’re tracking citation count without measuring quality, you’re flying blind on what actually drives traffic and conversions from AI platforms.

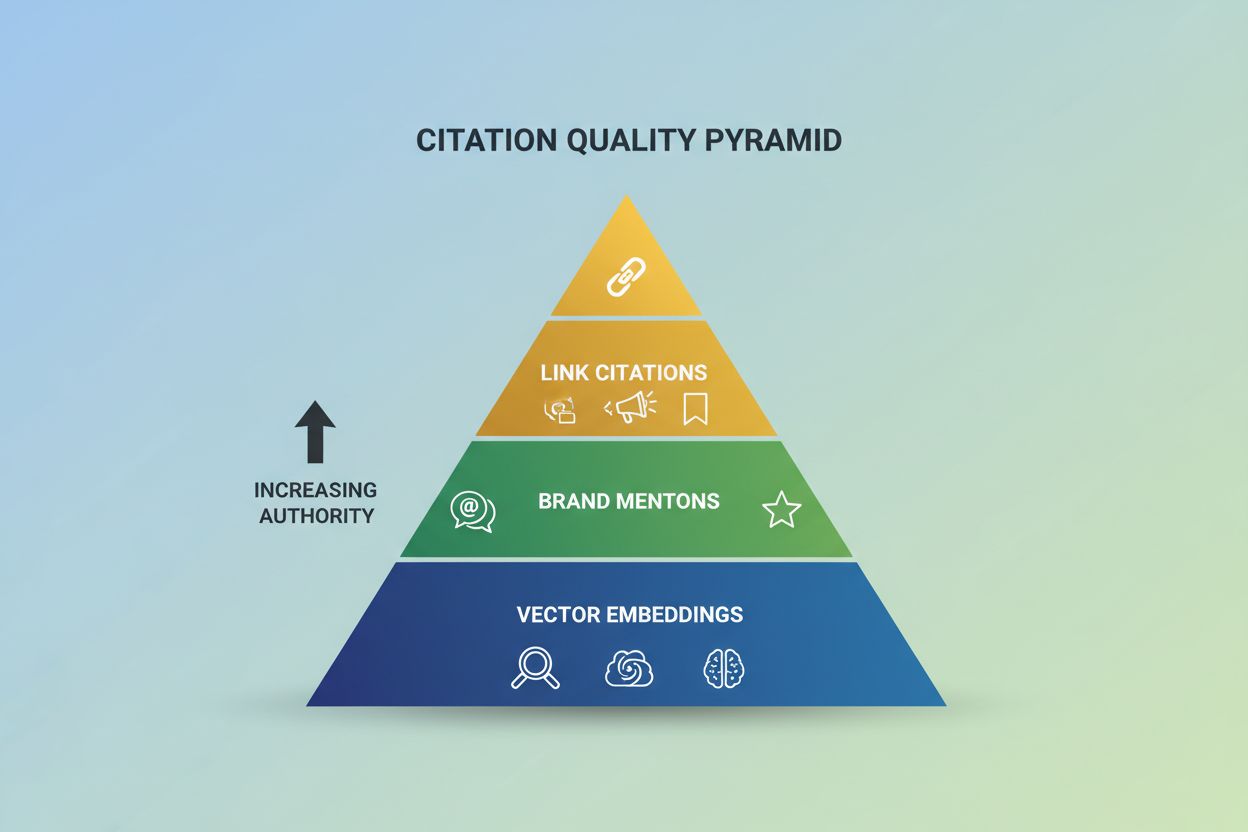

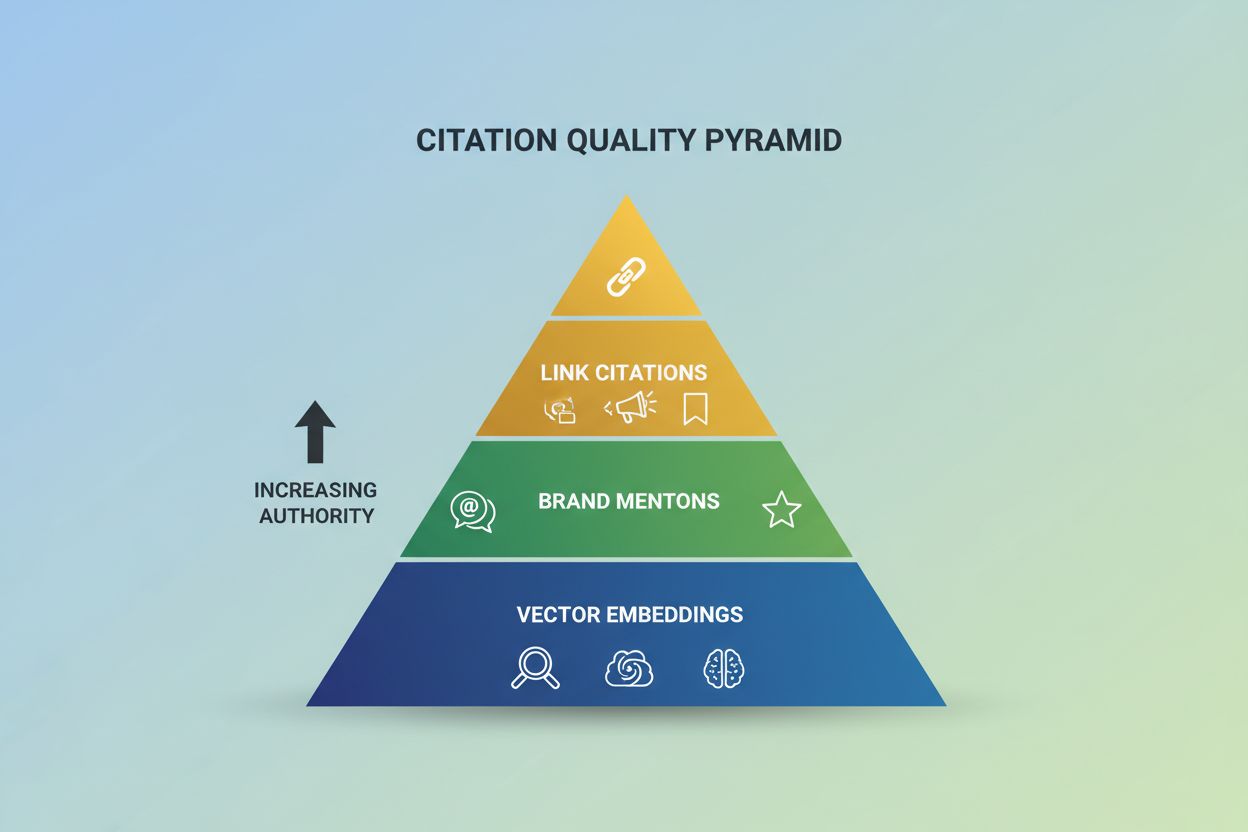

AI citation quality operates across three distinct dimensions that work together in the answer generation pipeline, and understanding each is essential for strategic optimization. Vector embeddings determine whether your content is even retrieved as a candidate source. Brand mentions signal authority and build awareness when AI systems reference your brand by name. Link citations drive direct traffic when AI platforms attribute content to your site with clickable URLs. Research shows that retrieval quality (embeddings) accounts for 60-70% of citation variance, while authority signals and attribution markup influence the remaining 30-40%. If your content isn’t retrieved in the first place, no amount of E-E-A-T optimization will earn you citations.

| Citation Dimension | Definition | Business Impact |
|---|---|---|
| Vector Embeddings | Semantic representation in retrieval systems | Determines if content is considered (60-70% of variance) |
| Brand Mentions | References without links | Builds authority and brand awareness |
| Link Citations | Attributed sources with URLs | Drives traffic and conversions |

Each dimension requires different measurement approaches and optimization strategies, yet most organizations focus exclusively on link citations while ignoring the foundational role of embeddings and the brand-building power of mentions.

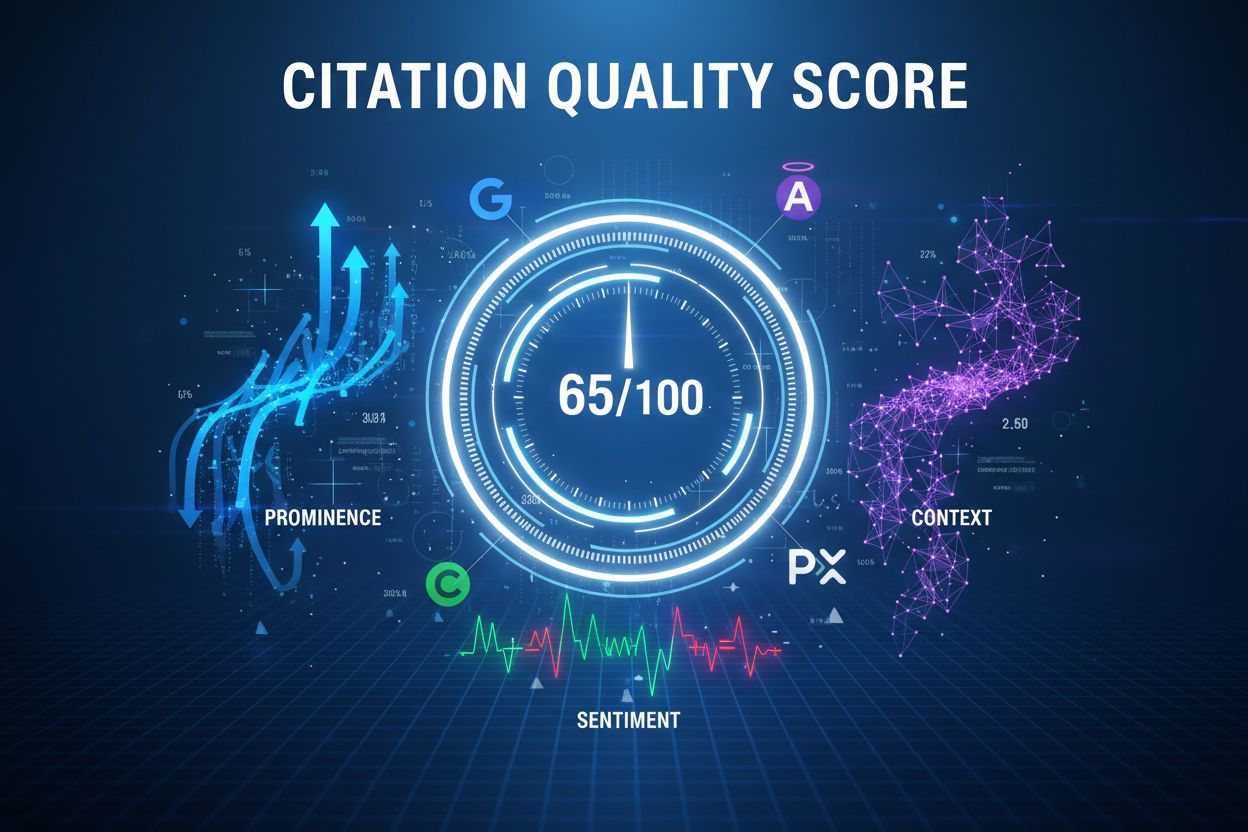

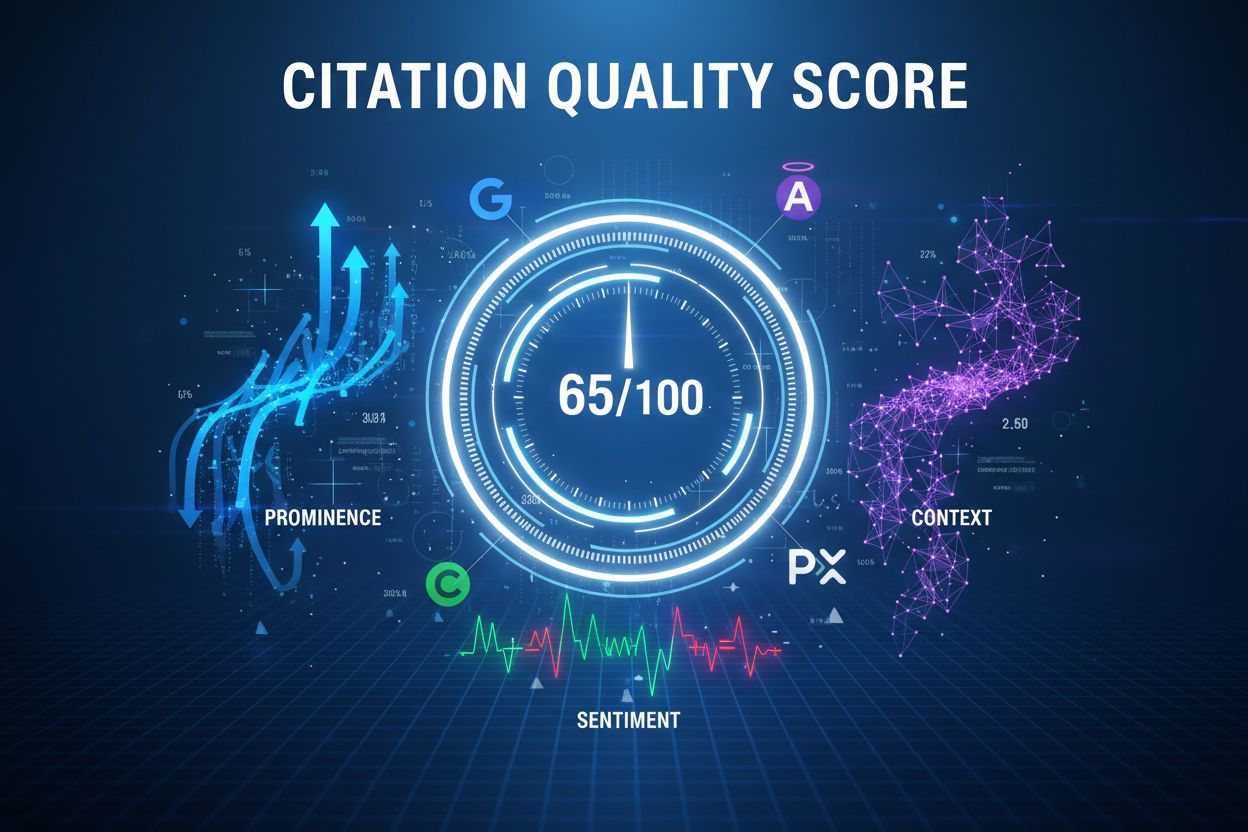

The data is unambiguous: ten high-quality citations from authoritative contexts outperform 100 low-quality mentions in driving business results. Organizations that shifted from volume-focused to quality-focused citation strategies saw 8.3x more qualified leads, 340% higher conversion rates, and 247% increases in AI-driven traffic—metrics that traditional citation counting completely misses. Citation placement variance alone creates massive performance differences: featured citations in AI Overviews maintain 15-25% click-through rates, while citations buried in expandable sections generate less than 2% CTR. This 10x variance means that improving citation quality from an average score of 45/100 to 65/100 delivers more business value than increasing citation volume by 50%, yet most brands continue chasing volume metrics that don’t correlate with revenue.

Measuring mention quality systematically requires a structured testing and scoring methodology that goes far beyond simple counting. Start by identifying 50-100 high-intent queries relevant to your domain, covering informational queries (“what is X”), comparison queries (“X vs Y”), how-to queries (“how to do X”), and commercial intent queries (“best X for Y”). Then query each across major AI platforms monthly and record whether your brand appears, the context of the mention, its sentiment (positive, neutral, or negative), and its positioning (primary source, supporting reference, alternative option, or passing mention). Finally, develop a weighted 100-point scoring system over these recorded attributes that reflects business value.

A mention earning 70+ points indicates high quality—these are authoritative references in relevant contexts that strengthen brand positioning. Track average mention quality score over time, not just mention volume; improving from 45 to 65 average quality represents meaningful progress even if mention volume stays constant.
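
The monthly tracking described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the `QueryResult` record and `summarize` helper are hypothetical names, and each mention's `quality_score` is assumed to have been assigned already by whatever weighted scheme your team adopts.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass(frozen=True)
class QueryResult:
    """One test query run against one AI platform (hypothetical record shape)."""
    query: str
    platform: str
    mentioned: bool
    quality_score: Optional[int] = None  # 0-100, set only when the brand is mentioned


def summarize(results: Sequence[QueryResult]) -> dict:
    """Report mention rate, average quality, and the count of 70+ mentions."""
    scored = [r.quality_score for r in results
              if r.mentioned and r.quality_score is not None]
    return {
        # Share of tested queries in which the brand appeared at all.
        "mention_rate": sum(r.mentioned for r in results) / len(results) if results else 0.0,
        # Average quality across scored mentions only (None if nothing was scored).
        "avg_quality": mean(scored) if scored else None,
        # Mentions at or above the 70-point "high quality" bar from the text.
        "high_quality_count": sum(s >= 70 for s in scored),
    }


# Illustrative data only; queries, platforms, and scores are made up.
month = [
    QueryResult("what is X", "ChatGPT", True, 80),
    QueryResult("X vs Y", "Perplexity", True, 50),
    QueryResult("how to do X", "ChatGPT", False),
    QueryResult("best X for Y", "Google AI Overviews", False),
]
print(summarize(month))
```

Comparing the `avg_quality` value month over month, rather than the raw mention count, is what surfaces a move such as 45 to 65.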

Link citations represent the gold standard for many organizations because they combine brand visibility with direct traffic opportunity, but citation quality varies dramatically based on placement, context, anchor text, and user intent alignment. Develop a scoring framework that reflects both visibility and traffic potential:

| Scoring Dimension | Criterion | Points |
|---|---|---|
| Placement prominence | Featured citation above the fold | 35 |
| Placement prominence | Inline citation in main answer | 25 |
| Placement prominence | Supporting source list | 15 |
| Placement prominence | Expandable “see more” section | 8 |
| Context alignment | Direct answer to query | 25 |
| Context alignment | Relevant supporting detail | 18 |
| Context alignment | Related but tangential | 10 |
| Context alignment | Weak relevance | 5 |
| Anchor text quality | Descriptive, intent-matched anchor | 20 |
| Anchor text quality | Brand name anchor | 15 |
| Anchor text quality | Generic anchor like “source” | 8 |
| Anchor text quality | URL only | 5 |
| Query intent match | Perfect alignment | 20 |
| Query intent match | Good match | 15 |
| Query intent match | Partial match | 10 |
| Query intent match | Poor match | 5 |

Citations scoring 75+ represent premium placements likely to drive meaningful traffic and conversions, while citations below 50 may technically exist but provide minimal business value. Track both the volume of link citations and the distribution of quality scores: 100 low-quality citations matter far less than 20 high-quality ones.
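
As a rough sketch, the link citation framework above translates directly into a lookup-and-sum function. The point values are taken from the text. The dictionary keys, the `LinkCitation` type, and the "moderate" label for the unnamed 50-74 band are assumptions made for illustration.

```python
from dataclasses import dataclass

# Point values copied from the scoring framework; key names are illustrative.
PLACEMENT = {"featured": 35, "inline": 25, "source_list": 15, "see_more": 8}
CONTEXT = {"direct_answer": 25, "supporting_detail": 18, "tangential": 10, "weak": 5}
ANCHOR = {"descriptive": 20, "brand": 15, "generic": 8, "url_only": 5}
INTENT = {"perfect": 20, "good": 15, "partial": 10, "poor": 5}


@dataclass(frozen=True)
class LinkCitation:
    placement: str
    context: str
    anchor: str
    intent: str


def score_citation(c: LinkCitation) -> int:
    """Sum the four dimension scores; the maximum is 35 + 25 + 20 + 20 = 100."""
    return (PLACEMENT[c.placement] + CONTEXT[c.context]
            + ANCHOR[c.anchor] + INTENT[c.intent])


def tier(score: int) -> str:
    """Bucket a score using the 75+ and below-50 cutoffs from the text."""
    if score >= 75:
        return "premium"
    if score < 50:
        return "minimal value"
    return "moderate"  # assumed label; the text does not name this band


citation = LinkCitation("inline", "supporting_detail", "brand", "good")
total = score_citation(citation)  # 25 + 18 + 15 + 15 = 73
print(total, tier(total))
```

Keeping the point tables as plain dictionaries makes it easy to adjust weights later without touching the scoring logic.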

Vector embeddings represent the most technical and least visible citation dimension, yet they fundamentally determine whether your content enters consideration for mentions or links at all. When users query AI systems that use Retrieval-Augmented Generation (RAG), the process begins by converting the query into a vector embedding, searching a vector database for semantically similar content embeddings, and retrieving the top-k most similar sources (typically 5-20 documents). If your content isn’t retrieved in this initial stage, it never reaches the authority evaluation or citation selection phases.

Vector embeddings represent text as high-dimensional numerical arrays (typically 768 or 1536 dimensions) that encode semantic meaning. Similar concepts have similar vectors, and closeness is measured with cosine similarity, which ranges from -1 to 1, where 1 represents identical meaning and 0 represents no relationship. Research demonstrates that retrieval quality correlates strongly with semantic similarity scores above 0.75 for domain-specific queries. To measure your embedding quality, generate embeddings for your content and typical user queries using OpenAI’s text-embedding-3 models, Google’s Vertex AI embeddings, or open-source models like sentence-transformers, then calculate cosine similarity and identify which content pieces achieve high similarity (0.75+) for priority queries versus which fail to reach retrieval thresholds (below 0.60).

Most organizations lack the technical infrastructure for direct embedding analysis, but proxy measures provide actionable insights. Analyze your content library for focused, consistent terminology around core concepts versus topic drift. Evaluate whether your organization and key concepts are clearly defined with consistent naming conventions. Assess whether you comprehensively cover core topics versus surface-level treatment. Finally, examine internal linking patterns: dense, logical linking between related concepts strengthens topical signals that embedding models use to understand content focus.
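
For teams that do have embeddings available, the similarity check described above comes down to a few lines. The sketch below uses only the Python standard library and tiny three-dimensional toy vectors in place of real 768- or 1536-dimension embeddings. The `classify` helper and its "borderline" label for the 0.60-0.75 band are assumptions for illustration, and the vectors would in practice come from whichever embedding model you use.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def classify(similarity: float) -> str:
    """Apply the 0.75+ and below-0.60 thresholds mentioned in the text."""
    if similarity >= 0.75:
        return "strong"
    if similarity < 0.60:
        return "below threshold"
    return "borderline"  # assumed label for the band the text leaves unnamed


# Toy vectors standing in for a query embedding and two page embeddings.
query = [1.0, 0.0, 0.0]
pages = {"focused-guide": [4.0, 3.0, 0.0], "off-topic-post": [0.0, 1.0, 0.0]}

for name, vector in pages.items():
    sim = cosine_similarity(query, vector)
    print(f"{name}: {sim:.2f} ({classify(sim)})")
```

Running the same loop over every priority query and page pair gives a simple matrix of which content clears the retrieval bar and which does not.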

Effective citation quality evaluation requires integrated measurement across all three dimensions, with each layer building on the previous: strong embeddings enable retrieval, retrieval enables mention consideration, and mentions with proper attribution become link citations. Build a quarterly measurement framework that tracks progress across all dimensions by establishing baseline metrics across 50-100 core queries, tracking monthly changes in citation volume and quality scores, calculating quality scores for each citation type using your weighted frameworks, and benchmarking against competitors to identify gaps and opportunities. Your dashboard should display four key metrics: Vector Quality (semantic similarity scores, topical consistency, entity clarity—target 0.75+ similarity for core queries), Mention Quality (mention rate, average quality score, sentiment distribution—target 30%+ mention rate with 65+ average quality), Link Quality (citation volume, quality score distribution, CTR estimates—target 20+ citations with 70+ average quality score), and Business Impact (AI-driven traffic, brand search volume, conversion rates—target 15%+ traffic from AI citations). When resources are limited, prioritize improvements based on current bottlenecks: if embedding quality is weak, start there since no amount of E-E-A-T work helps if content isn’t retrieved; if embedding quality is strong but mention rates remain low, focus on authority signals and content depth; if mentions are strong but link citations lag, emphasize technical attribution markup and schema implementation.
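
The bottleneck-first prioritization above can be expressed as a short decision function. This sketch assumes that the dashboard targets from the text (0.75 similarity, 30% mention rate with 65+ quality, 20+ citations with 70+ quality) double as the cutoffs for "weak" in each layer. That mapping, along with the `Snapshot` type and the returned labels, is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One measurement period across the three citation dimensions."""
    avg_similarity: float       # mean cosine similarity for core queries
    mention_rate: float         # fraction of tested queries with a brand mention
    avg_mention_quality: float  # 0-100
    link_citations: int
    avg_link_quality: float     # 0-100


def next_focus(s: Snapshot) -> str:
    """Check layers in pipeline order and return the first one below target."""
    if s.avg_similarity < 0.75:
        return "embeddings"
    if s.mention_rate < 0.30 or s.avg_mention_quality < 65:
        return "authority signals and content depth"
    if s.link_citations < 20 or s.avg_link_quality < 70:
        return "attribution markup and schema"
    return "maintain"


# Hypothetical numbers: retrieval is fine, but mentions are rare.
snapshot = Snapshot(avg_similarity=0.80, mention_rate=0.12,
                    avg_mention_quality=50, link_citations=85,
                    avg_link_quality=42)
print(next_focus(snapshot))
```

Checking the layers in pipeline order mirrors the dependency chain: fixing markup does little while retrieval or authority is still the limiting factor.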

Each major AI platform demonstrates distinct citation preferences that require tailored optimization strategies, with research analyzing 680 million citations revealing dramatically different sourcing patterns. ChatGPT shows a strong preference for authoritative knowledge bases, with Wikipedia accounting for 7.8% of total citations overall and 47.9% of its top 10 most-cited sources—this concentration indicates ChatGPT prioritizes encyclopedic, factual content over social discourse and emerging platforms. Google AI Overviews takes a more balanced approach, with Reddit leading at 2.2% of total citations but only 21% of top 10 sources, while YouTube (18.8%), Quora (14.3%), and LinkedIn (13%) also feature prominently—this distribution shows Google values both professional content and community discussions. Perplexity demonstrates a unique community-driven philosophy, with Reddit dominating at 6.6% of total citations and 46.7% of top 10 sources, followed by YouTube (13.9%) and Gartner (7%)—this pattern indicates Perplexity prioritizes peer-to-peer information and real-world experiences over traditional authority signals. These platform differences mean that a one-size-fits-all citation strategy fails: brands should focus on Wikipedia and authoritative sources for ChatGPT visibility, balance professional content with community engagement for Google AI Overviews, and invest heavily in Reddit participation and user-generated content for Perplexity. Understanding these platform-specific preferences allows you to allocate content and PR resources strategically rather than spreading efforts equally across all platforms.

Improving citation quality requires distinct strategies tailored to each dimension of the citation stack.

For vector embeddings, strengthen semantic clarity through comprehensive topic clusters that thoroughly address core concepts with consistent terminology and clear hierarchy. Use descriptive headers, definitions, and entity references that help embedding models understand content focus. Avoid mixing unrelated topics on single pages, since semantic drift creates noisy embeddings that perform poorly in retrieval. Implement strategic internal linking between related concepts to strengthen topical signals, cite authoritative sources to provide context that embedding models use to understand your content’s domain and focus, and maintain content freshness through regular updates, since stale content may have outdated semantic signals.

For brand mentions, build verifiable topical authority by strengthening E-E-A-T signals through detailed author credentials, organizational transparency, and consistent citation of authoritative sources. Create comprehensive content that thoroughly addresses user intent without requiring AI systems to synthesize information from multiple fragmented sources. Publish original research and proprietary data that can’t be found elsewhere, since AI systems favor unique, first-hand information, and participate actively in industry conversations, forums, and communities where your brand naturally belongs.

For link citations, implement comprehensive schema markup (especially Article, HowTo, FAQPage, and Organization schemas) to clarify content purpose and attribution. Ensure clean URL structures, fast page loads, and mobile optimization, since AI systems favor technically sound sources. Create self-contained content chunks with clear headers that can stand alone when extracted into AI answers, and focus content strategy on how-to guides and FAQ formats that naturally lend themselves to citation. Finally, build author pages with credentials that verify expertise while ensuring your Contact, About, and Privacy pages meet transparency standards.
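
To make the schema markup recommendation concrete, here is a minimal sketch that builds schema.org `FAQPage` JSON-LD from question and answer pairs. The `FAQPage`, `Question`, and `Answer` types and the `mainEntity`, `name`, `acceptedAnswer`, and `text` properties are standard schema.org vocabulary. The helper names and the sample question text are placeholders, and whether any given AI platform actually consumes this markup is not something the snippet can guarantee.

```python
import json
from typing import Iterable, Tuple


def faq_jsonld(pairs: Iterable[Tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data: dict) -> str:
    """Serialize to a JSON-LD script tag for embedding in a page's HTML."""
    # Escape "</" so answer text can never close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder content; substitute the real questions and answers on the page.
markup = faq_jsonld([
    ("What is a citation quality score?",
     "A score that reflects the placement, context, and sentiment of an AI citation."),
])
print(as_script_tag(markup))
```

Generating the markup from the same source as the visible FAQ text helps keep the two in sync, which matters because structured data is expected to match what users see on the page.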

A B2B SaaS company in the marketing technology space implemented comprehensive citation quality evaluation after noticing competitors appearing more frequently in AI-generated recommendations, revealing a critical insight that transformed their strategy. Their initial audit showed strong link citation volume (85 citations across priority queries) but low quality scores (average 42/100) and weak mention rates (12% across tested queries)—analysis revealed their content was being retrieved (good embedding quality) and occasionally cited with links (adequate technical markup), but mentions were rare because content lacked depth and expertise signals. They focused optimization on strengthening author credentials by adding detailed bios and publication history, publishing original research data that competitors couldn’t replicate, and creating comprehensive guides rather than thin blog posts that synthesized information from multiple sources. After six months of quality-focused optimization: mention rate increased to 31% (a 158% improvement), link citation quality score improved to 68/100 (a 62% improvement), and AI-driven traffic grew 47%—but the real insight was that their technical foundation (embeddings and markup) was already solid, meaning their bottleneck was authority signals rather than technical implementation. This case demonstrates that citation quality measurement reveals specific optimization opportunities that volume-focused tracking completely misses, allowing organizations to allocate resources where they create the most leverage rather than pursuing generic best practices that don’t address their actual bottlenecks.

Citation volume is the total count of mentions or links. Quality measures the value of each citation based on placement, context, sentiment, and authority. Ten high-quality citations from authoritative sources drive more value than 100 low-quality mentions. Quality directly correlates with business outcomes like traffic and conversions.

Test 50-100 relevant queries across AI platforms monthly. For each citation, score it based on: placement prominence (0-35 points), context alignment (0-25 points), anchor text quality (0-20 points), and query intent match (0-20 points). Track average scores over time. Citations scoring 75+ represent premium placements likely to drive meaningful traffic.

Embeddings, mentions, and links all matter, but at different stages. Embeddings determine retrieval (60-70% of citation variance). Mentions build authority and awareness. Links drive traffic and conversions. Success requires optimizing all three dimensions with tailored strategies for each.

Each platform has different training data, algorithms, and design philosophies. ChatGPT favors authoritative sources like Wikipedia. Google AI Overviews balance professional and social content. Perplexity prioritizes community discussions. Optimize for each platform's preferences rather than using a one-size-fits-all approach.

Conduct comprehensive audits quarterly with monthly spot checks on high-priority topics. Track leading indicators weekly: organic traffic from AI, brand search volume, and citation rate trends. Watch quality score movements to catch early drops that require intervention, and adjust strategy accordingly.

Citation quality can be improved partially by working on existing content: add better structure, schema markup, and author credentials, and strengthen E-E-A-T signals. However, creating new, citation-worthy content (original research, comprehensive guides) is the most effective approach for improving quality scores.

For link citations: 70+ is excellent. For mentions: 60+ indicates strong contextual relevance. For embeddings: 0.75+ semantic similarity. Competitive industries require higher thresholds. Focus on improving 10-15 points per quarter rather than chasing perfection.

AmICited.com tracks how AI systems reference your brand across ChatGPT, Perplexity, Google AI Overviews, and other platforms. It measures quality metrics beyond volume, showing placement, sentiment, context, and competitive positioning to help you optimize strategically.

