ClaudeBot

Learn what ClaudeBot is, how it works, and how to block or allow this Anthropic web crawler on your website using robots.txt configuration.

Learn how ClaudeBot works, how it differs from Claude-Web and Claude-SearchBot, and how to manage Anthropic’s web crawlers on your website with robots.txt configuration.

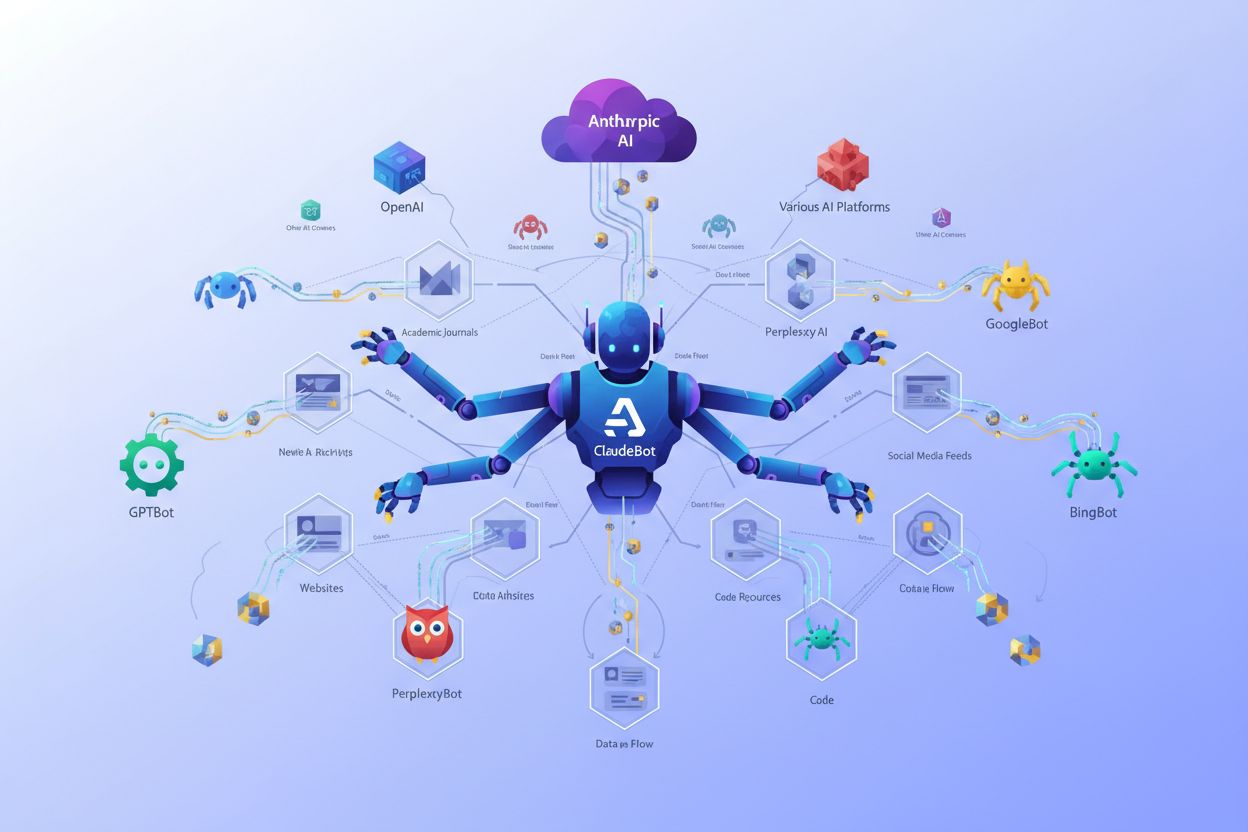

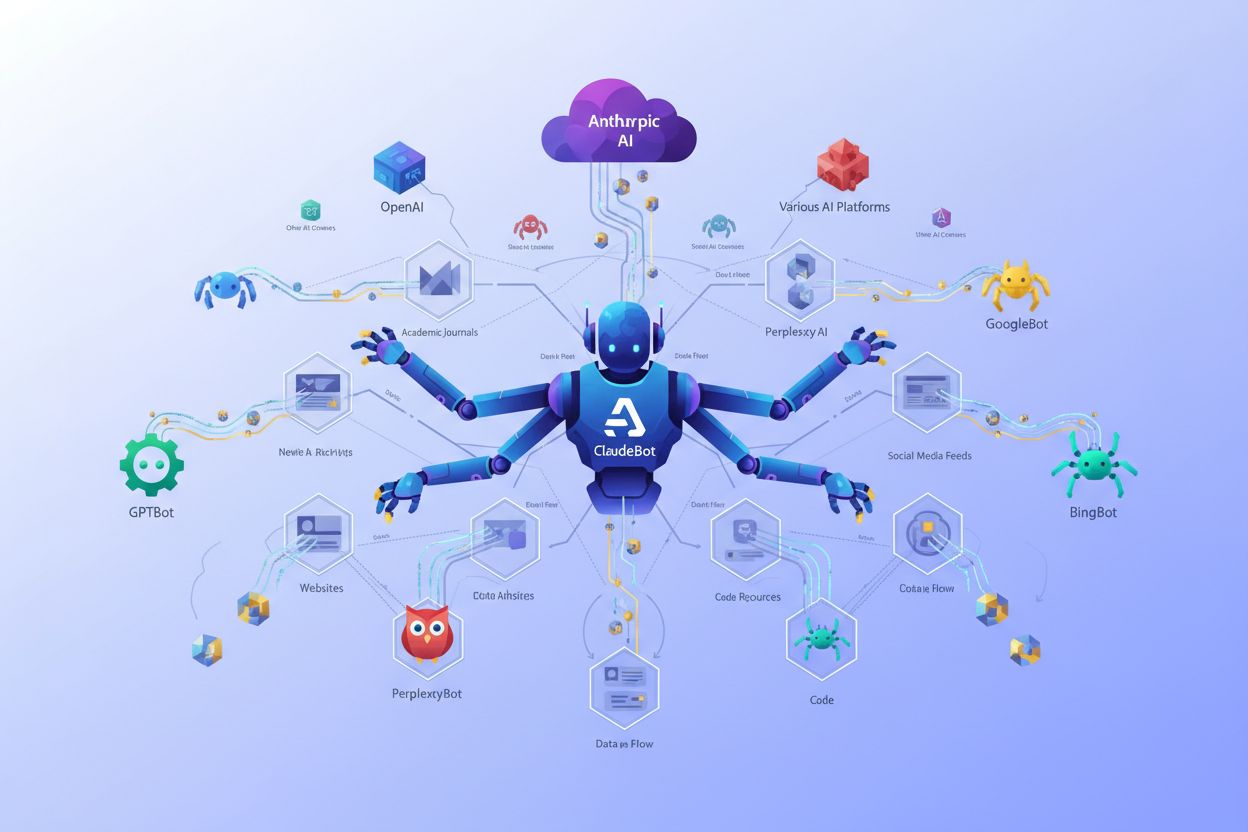

ClaudeBot is Anthropic’s web crawler, designed to discover and collect web content for training and improving Claude, Anthropic’s large language model. Unlike traditional search engine crawlers, which build an index for search results, ClaudeBot gathers diverse, high-quality text data to enhance Claude’s knowledge base and capabilities. The crawler operates autonomously, systematically visiting websites and collecting publicly available content while respecting standard web protocols and website owner preferences. As AI language models become more sophisticated, crawlers like ClaudeBot help keep these systems supplied with current, diverse information. Understanding how ClaudeBot works and how to manage its access to your content is essential for modern website owners and content creators.

Anthropic operates three distinct web crawlers, each serving different purposes in the Claude ecosystem. The following table outlines the key differences between these crawlers:

| Bot Name | Purpose | Use Case | Impact if Disabled |
|---|---|---|---|
| ClaudeBot | LLM training and knowledge base development | Gathering diverse content for model improvement | Reduced training data; slower model updates |
| Claude-Web | Real-time web access for Claude users | Enabling Claude to access current web information during conversations | Users cannot browse web in Claude interface |
| Claude-SearchBot | Search-specific content discovery | Powering search functionality within Claude products | Search features become unavailable |

Each crawler serves a distinct function within Anthropic’s infrastructure, and website owners can manage each independently through their robots.txt configuration.
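As a minimal sketch of managing each crawler independently, the following Python helper renders one robots.txt group per user-agent token. The function name `build_robots_txt` and the example policy are hypothetical and chosen only for illustration; the tokens are the three bot names from the table above.

```python
def build_robots_txt(policies):
    """Render one robots.txt group per crawler.

    `policies` maps a user-agent token to a list of disallowed path
    prefixes. An empty list means the crawler may fetch everything.
    """
    groups = []
    for agent, disallowed in policies.items():
        lines = [f"User-agent: {agent}"]
        if disallowed:
            lines.extend(f"Disallow: {path}" for path in disallowed)
        else:
            # An empty Disallow value permits the whole site.
            lines.append("Disallow:")
        groups.append("\n".join(lines))
    # Groups are separated by a blank line; the file ends with a newline.
    return "\n\n".join(groups) + "\n"


# Hypothetical policy: opt out of training, keep the other two crawlers.
EXAMPLE_POLICY = {
    "ClaudeBot": ["/"],
    "Claude-Web": [],
    "Claude-SearchBot": [],
}

if __name__ == "__main__":
    print(build_robots_txt(EXAMPLE_POLICY))
```

Keeping the policy in one mapping makes it easy to review which crawler is allowed where before the file is published.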

ClaudeBot systematically discovers and processes web content. The crawler uses standard HTTP requests to access publicly available web pages, following links and URL patterns to expand its coverage. It discovers new content by following hyperlinks from already-crawled pages and by processing XML sitemaps, in both cases only where robots.txt directives permit crawling. The crawler revisits pages periodically to capture updated content, though the exact frequency varies based on page importance and update patterns. During crawling, ClaudeBot collects text content, metadata, and structural information while respecting bandwidth limitations and server load considerations. The crawler identifies itself through a specific user agent string: Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +claudebot@anthropic.com), allowing website owners to recognize and manage its requests.
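To illustrate recognizing that user agent string, here is a small Python sketch that looks for the `ClaudeBot/<version>` product token. The helper name `claudebot_version` is an assumption made for this example. Because any client can send any user agent, a match shows only what the request claims to be.

```python
import re

# Matches the product token "ClaudeBot/<version>" anywhere in the header.
# The contact address "claudebot@anthropic.com" does not match because
# the token must be followed by a slash and a version number.
CLAUDEBOT_TOKEN = re.compile(r"\bClaudeBot/(\d+(?:\.\d+)*)", re.IGNORECASE)


def claudebot_version(user_agent):
    """Return the claimed ClaudeBot version, or None for other clients."""
    match = CLAUDEBOT_TOKEN.search(user_agent or "")
    return match.group(1) if match else None


if __name__ == "__main__":
    ua = (
        "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
        "ClaudeBot/1.0; +claudebot@anthropic.com)"
    )
    print(claudebot_version(ua))  # 1.0
```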

ClaudeBot differs fundamentally from traditional search engine crawlers like those operated by Google and Bing in both purpose and methodology. While Google’s crawler prioritizes content for search indexing and ranking, ClaudeBot focuses on gathering training data for language model improvement, with no direct impact on search visibility. Traditional search crawlers create searchable indexes that users query directly, whereas ClaudeBot’s collected data feeds into Claude’s training pipeline, influencing the model’s responses rather than creating a searchable database. Search engine crawlers operate under the assumption that website owners want visibility in search results, while ClaudeBot’s purpose is more specialized and less directly tied to user discovery. Anthropic demonstrates greater transparency about ClaudeBot’s operations compared to some search engines, providing clear documentation about the crawler’s behavior and offering straightforward blocking mechanisms. The distinction is important: blocking ClaudeBot won’t affect your search engine rankings, but it will prevent your content from contributing to Claude’s training data.

ClaudeBot’s activity can have measurable impacts on your website’s operations and content visibility. The crawler generates server requests and bandwidth consumption, which, while typically minimal, can accumulate on high-traffic sites or those with limited server resources. Your website’s content may be incorporated into Claude’s training data, potentially appearing in Claude’s responses without direct attribution, raising questions about content usage and fair compensation for creators. However, ClaudeBot activity also represents an opportunity: having your content included in Claude’s training can increase your site’s influence on AI-generated responses and establish your expertise within the AI ecosystem. The visibility impact differs from search engines—you won’t gain direct referral traffic from ClaudeBot, but your content’s influence on AI outputs can drive indirect benefits. Understanding these trade-offs helps you make informed decisions about whether to allow or block ClaudeBot access to your site.

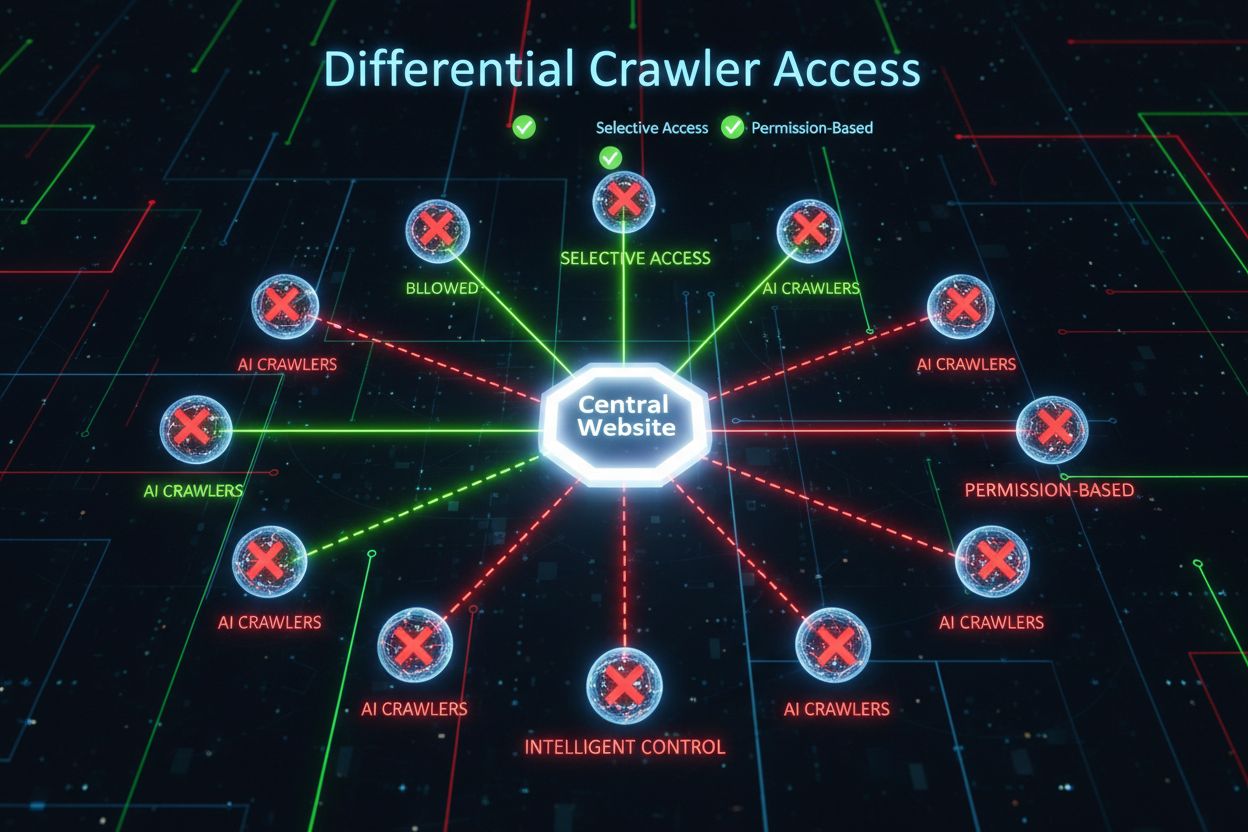

Blocking or controlling ClaudeBot is straightforward and follows standard web protocols that Anthropic respects. The primary method is configuring your robots.txt file to disallow ClaudeBot specifically, which Anthropic’s crawler honors consistently. You can also implement Crawl-delay directives to limit how frequently ClaudeBot accesses your site, reducing bandwidth impact while still allowing some crawling. Here’s how to block ClaudeBot in your robots.txt file:

```
User-agent: ClaudeBot
Disallow: /
```

To allow ClaudeBot but limit crawl frequency, use:

```
User-agent: ClaudeBot
Crawl-delay: 10
```

For more granular control, you can disallow specific directories or file types:

```
User-agent: ClaudeBot
Disallow: /private/
Disallow: /*.pdf$
Crawl-delay: 5
```
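Before deploying rules like these, it can help to check how a parser reads them. The sketch below uses Python's standard `urllib.robotparser` against the directory and crawl-delay rules; the `example.com` URLs and the `load_rules` helper are placeholders for illustration. To my understanding, this parser matches rules as plain path prefixes and does not interpret wildcards, so the PDF rule is left out, and the result shows how Python reads the file rather than how ClaudeBot itself does.

```python
from urllib.robotparser import RobotFileParser

# The directory and crawl-delay rules from the example above.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# example.com is a placeholder domain.
print(rules.can_fetch("ClaudeBot", "https://example.com/private/report.html"))  # False
print(rules.can_fetch("ClaudeBot", "https://example.com/blog/post.html"))       # True
print(rules.crawl_delay("ClaudeBot"))                                           # 5
```

Crawlers that have no matching group, such as a search engine bot in this file, are unaffected by the ClaudeBot rules.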

Additionally, you can contact Anthropic directly at claudebot@anthropic.com if you have specific concerns or requests regarding ClaudeBot’s access to your content.

Managing Anthropic’s crawlers effectively requires a strategic approach that balances your content protection with the benefits of AI visibility.

Content attribution remains a complex issue in the relationship between ClaudeBot and website owners. When ClaudeBot collects your content for training, that data becomes part of Claude’s knowledge base, but the original source attribution is not always preserved in Claude’s responses. Anthropic has made efforts to improve transparency and citation practices, allowing Claude to reference sources when appropriate, though this functionality varies depending on how the model was trained and how users interact with it. The challenge mirrors broader questions in the AI industry about fair use, content compensation, and creator rights in the age of large language models. Some content creators view ClaudeBot access as beneficial exposure that increases their influence on AI outputs, while others see it as unauthorized use of their intellectual property without compensation. Understanding Anthropic’s approach to attribution and your own content’s value proposition is essential for deciding whether to allow ClaudeBot access. The evolving landscape of AI training data and content rights will likely shape how companies like Anthropic handle attribution in the future.

Monitoring ClaudeBot activity on your website relies on standard web analytics and server monitoring tools. Your server access logs (typically Apache or Nginx log files) record all ClaudeBot requests, identifiable by the distinctive user agent string, allowing you to track visit frequency and crawl patterns. Web analytics platforms like Google Analytics can be configured to identify and segment ClaudeBot traffic separately from human visitors, giving you insights into crawler behavior over time. You can identify ClaudeBot requests by checking the user agent string for the ClaudeBot/1.0 token and the contact address claudebot@anthropic.com. That address is part of the user agent, not a referrer, and any client can set its own user agent string, so a match alone does not prove a request came from Anthropic. Setting up custom alerts in your monitoring tools can notify you of unusual crawl spikes or unexpected access patterns that might indicate misconfiguration or abuse. Regular monitoring helps you understand the actual impact of ClaudeBot on your infrastructure and informs decisions about whether your current robots.txt configuration is appropriate for your needs.
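As a rough sketch of the log-based approach, the Python snippet below counts requests per day whose user agent claims to be ClaudeBot. It assumes the Apache/Nginx "combined" log format; your server may use a different layout, in which case the pattern needs adjusting. The function name `claudebot_hits_per_day` is hypothetical.

```python
import re
from collections import Counter

# Combined Log Format: ip, identity, user, [time], "request", status,
# size, "referer", "user agent". Assumed here; adjust for custom formats.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def claudebot_hits_per_day(lines):
    """Count log lines per day whose user agent contains 'ClaudeBot/'."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "claudebot/" in match.group("agent").lower():
            hits[match.group("day")] += 1
    return hits
```

A typical use would be to open the access log and pass the file object directly, for example `claudebot_hits_per_day(open(path))`, where `path` is wherever your server writes its log.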

The future of AI crawlers and content collection will likely be shaped by evolving industry standards, regulatory frameworks, and creator advocacy. As more companies develop their own AI models, the proliferation of specialized crawlers like ClaudeBot will increase, making crawler management an essential skill for website owners and content creators. Regulatory bodies worldwide are beginning to address questions about AI training data, fair use, and creator compensation, potentially establishing new standards that companies like Anthropic must follow. Industry initiatives are emerging to create standardized protocols for AI crawler behavior, similar to how robots.txt standardized search engine crawling decades ago. The relationship between AI companies and content creators will likely shift toward greater transparency, clearer attribution, and potentially new compensation models that recognize the value of training data. Website owners should stay informed about these developments and regularly reassess their crawler management strategies to align with evolving best practices and regulations. The next few years will be critical in establishing norms that balance AI innovation with creator rights and fair content usage.

ClaudeBot is Anthropic's web crawler that systematically visits websites to collect content for training Claude, their large language model. It operates similarly to search engine crawlers but focuses on gathering diverse text data to improve Claude's knowledge base and capabilities rather than creating a searchable index.

While Google's crawler indexes content for search results, ClaudeBot collects training data for AI model improvement. Blocking ClaudeBot won't affect your search engine rankings since it doesn't contribute to search indexing. The two crawlers serve fundamentally different purposes in the AI and search ecosystems.

You can block ClaudeBot by adding rules to your robots.txt file. Add 'User-agent: ClaudeBot' followed by 'Disallow: /' to block it entirely, or use 'Crawl-delay' to limit how frequently it accesses your site. Anthropic respects standard robots.txt directives consistently.

Blocking ClaudeBot has minimal direct SEO impact since it doesn't contribute to search engine indexing. However, it may reduce your content's representation in AI-generated responses from Claude, potentially affecting your visibility in AI search and chat applications.

Anthropic's ClaudeBot respects robots.txt directives as part of its commitment to transparent and non-intrusive crawling. The company honors 'Disallow' rules and supports the non-standard 'Crawl-delay' extension to help website owners manage crawler access and bandwidth usage.

You can track ClaudeBot visits through your server access logs by identifying its distinctive user agent string, or use web analytics platforms configured to segment bot traffic. Setting up custom alerts helps you monitor unusual crawl spikes and understand the actual impact on your infrastructure.

If you allow ClaudeBot access, your publicly available content may be included in Claude's training data. However, the original source attribution is not always preserved in Claude's responses, though Anthropic has made efforts to improve citation practices and transparency.

You can implement a Crawl-delay in your robots.txt file (typically 5-10 seconds) to limit crawl frequency while still allowing access. If you believe ClaudeBot is malfunctioning or behaving unusually, contact Anthropic directly at claudebot@anthropic.com with details about your domain.
