WAF Rules for AI Crawlers: Beyond Robots.txt

Learn how Web Application Firewalls provide advanced control over AI crawlers beyond robots.txt. Implement WAF rules to protect your content from unauthorized A...

Learn how Cloudflare’s edge-based AI Crawl Control helps you monitor, control, and monetize AI crawler access to your content with granular policies and real-time analytics.

The proliferation of AI training models has created an unprecedented demand for web content, with sophisticated crawlers now operating at massive scale to feed machine learning pipelines. These bots consume bandwidth, inflate analytics, and extract proprietary content without permission or compensation, fundamentally disrupting the economics of content creation. Traditional rate limiting and IP-based blocking often prove ineffective against distributed crawler networks that rotate identities and adapt to detection mechanisms. Website owners face a critical decision: allow unrestricted access that benefits AI companies at their expense, or implement sophisticated controls that distinguish between legitimate traffic and predatory bots.

Content Delivery Networks operate by distributing servers globally at the “edge” of the internet, positioned geographically closer to end users and capable of processing requests before they reach origin servers. Edge computing extends this paradigm by enabling complex logic execution at these distributed nodes, transforming CDNs from simple caching layers into intelligent security and control platforms. This architectural advantage proves invaluable for AI bot management because decisions can be made in milliseconds at the point of request entry, before origin bandwidth is consumed or content is transmitted. Traditional origin-based bot detection requires traffic to travel all the way to the origin server, consuming resources and adding latency, while edge-based solutions intercept threats immediately. The distributed nature of edge infrastructure also provides natural resilience against sophisticated attacks that attempt to overwhelm detection systems through volume or geographic distribution.

| Approach | Detection Speed | Scalability | Cost | Real-time Control |
|---|---|---|---|---|
| Origin-Based Filtering | 200-500ms | Limited by origin capacity | High infrastructure costs | Reactive, post-consumption |
| Traditional WAF | 50-150ms | Moderate, centralized bottleneck | Moderate licensing fees | Semi-real-time decisions |
| Edge-Based Detection | <10ms | Unlimited, distributed globally | Lower per-request overhead | Immediate, pre-consumption |
| Machine Learning at Edge | <5ms | Scales with CDN footprint | Minimal additional cost | Predictive, adaptive blocking |

Cloudflare’s AI Crawl Control represents a purpose-built solution deployed across their global edge network, providing website owners with unprecedented visibility and control over AI crawler traffic. The system identifies requests from known AI training operations, including those run by OpenAI, Google, Anthropic, and dozens of other organizations, and enables granular policies that determine whether each crawler receives access, faces blocking, or triggers monetization mechanisms. Unlike generic bot management that treats all non-human traffic similarly, AI Crawl Control specifically targets the machine learning training ecosystem, recognizing that these crawlers have distinct behavioral patterns, scale requirements, and business implications. The solution integrates with existing Cloudflare services, requiring no additional infrastructure or complex configuration while providing immediate protection across every domain proxied through Cloudflare. Organizations gain a centralized dashboard where they can monitor crawler activity, adjust policies in real time, and see exactly which AI companies are accessing their content.

Cloudflare’s edge infrastructure processes billions of requests daily, generating a massive dataset that feeds machine learning models trained to identify AI crawler behavior with high precision. The detection system employs multiple complementary techniques: behavioral analysis examines request patterns such as crawl speed, resource consumption, and sequential page access; fingerprinting analyzes HTTP headers, TLS signatures, and network characteristics to identify known crawler infrastructure; and threat intelligence draws on databases that catalog AI training operations and their associated IP ranges and user agents. These signals combine through ensemble machine learning models that aim for high accuracy with very low false positive rates; this matters because blocking legitimate users would damage site reputation and revenue. The system continuously learns from new crawler variants and evasion techniques, with Cloudflare’s security team actively monitoring emerging AI training infrastructure to maintain detection effectiveness. Real-time classification happens at the edge node closest to the requesting client, so decisions complete within milliseconds, before any meaningful bandwidth consumption occurs.
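Cloudflare does not publish its detection models, so the following is only a minimal sketch of the general idea of combining behavioral, fingerprinting, and threat-intelligence signals into one decision. It uses a simple weighted vote rather than a trained ensemble, and every field name, weight, and threshold below is a hypothetical illustration.

```python
from dataclasses import dataclass


@dataclass
class RequestSignals:
    """Hypothetical per-request features; field names are illustrative assumptions."""
    requests_per_minute: float         # behavioral: observed crawl speed
    sequential_page_ratio: float       # behavioral: share of requests walking pages in link order (0..1)
    fingerprint_matches_crawler: bool  # fingerprinting: header/TLS signature matches known crawler infrastructure
    ip_in_crawler_range: bool          # threat intelligence: source IP in a published crawler range


# Illustrative weights; a real system would learn these from labeled traffic.
WEIGHTS = {"speed": 0.3, "sequential": 0.2, "fingerprint": 0.3, "ip_range": 0.2}


def crawler_score(s: RequestSignals) -> float:
    """Weighted average of normalized signals, in the range 0..1."""
    features = {
        "speed": min(s.requests_per_minute / 120.0, 1.0),  # saturates at an assumed 120 req/min
        "sequential": max(0.0, min(s.sequential_page_ratio, 1.0)),
        "fingerprint": 1.0 if s.fingerprint_matches_crawler else 0.0,
        "ip_range": 1.0 if s.ip_in_crawler_range else 0.0,
    }
    return sum(WEIGHTS[name] * value for name, value in features.items())


def classify(s: RequestSignals, threshold: float = 0.7) -> str:
    # No single signal carries more than 0.3 weight, so no single signal can
    # label a request on its own; this trades some recall for fewer false positives.
    return "ai_crawler" if crawler_score(s) >= threshold else "other"
```

A fast client that walks pages in order but has no crawler fingerprint or IP match scores 0.5 here and stays below the threshold, which is the point of requiring several independent signals to agree.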

Once AI crawlers are identified at the edge, website owners can implement sophisticated policies that go far beyond simple allow/block decisions, tailoring access based on business requirements and content strategy. The control framework provides several enforcement options: allowing a crawler free access, blocking it outright, or charging it for access through Pay Per Crawl.

These policies operate independently for each crawler, enabling scenarios where OpenAI receives full access while Anthropic faces rate limiting and unknown crawlers are blocked entirely. The granularity extends to path-level controls, allowing different policies for public content versus proprietary documentation or premium resources. Organizations can also implement time-based policies that adjust crawler access during peak traffic periods or maintenance windows, ensuring that AI training operations don’t interfere with legitimate user experience.
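The per-crawler, path-level, and time-based behavior described above can be pictured as a small lookup table. This is a minimal sketch, not AI Crawl Control's actual configuration model: the policy table, the `/premium/` prefix, the peak window, and the action names are all hypothetical, and crawlers are keyed by their user-agent tokens (GPTBot for OpenAI, ClaudeBot for Anthropic).

```python
from datetime import time

# Hypothetical policy table: (crawler, path prefix) -> action.
# "*" is the default entry for crawlers without their own policy.
POLICIES = {
    ("GPTBot", "/"): "allow",
    ("GPTBot", "/premium/"): "charge",
    ("ClaudeBot", "/"): "rate_limit",
    ("*", "/"): "block",
}

# Assumed peak window during which otherwise-allowed crawlers are throttled.
PEAK_START, PEAK_END = time(9, 0), time(17, 0)


def resolve_action(crawler: str, path: str, now: time) -> str:
    """Pick the longest matching path prefix for this crawler, falling back to '*'."""
    for who in (crawler, "*"):
        matches = [
            (prefix, action)
            for (name, prefix), action in POLICIES.items()
            if name == who and path.startswith(prefix)
        ]
        if matches:
            _, action = max(matches, key=lambda m: len(m[0]))
            break
    else:
        action = "block"  # nothing matched at all: fail closed
    # Time-based adjustment: downgrade "allow" to rate limiting during peak hours.
    if action == "allow" and PEAK_START <= now < PEAK_END:
        return "rate_limit"
    return action
```

Longest-prefix matching lets a crawler keep broad access to public pages while a more specific rule covers premium paths, and failing closed keeps unknown paths blocked if the table is misconfigured.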

Publishers face existential threats from AI systems trained on their journalism without compensation, making AI Crawl Control essential for protecting revenue models dependent on unique content creation. E-commerce platforms use the solution to prevent competitors from scraping product catalogs, pricing data, and customer reviews that represent significant competitive advantages and intellectual property. Documentation sites serving developer communities can allow beneficial crawlers like Googlebot while blocking competitors attempting to create derivative knowledge bases, maintaining their position as authoritative technical resources. Content creators and independent writers leverage AI Crawl Control to prevent their work from being incorporated into training datasets without permission or attribution, protecting both their intellectual property and their ability to monetize their expertise. SaaS companies use the solution to prevent API documentation from being scraped for training models that might compete with their services or expose security-sensitive information. News organizations implement sophisticated policies that allow search engines and legitimate aggregators while blocking AI training operations, preserving their ability to control content distribution and maintain subscriber relationships.

AI Crawl Control operates as a specialized component within Cloudflare’s comprehensive security architecture, complementing and enhancing existing protections rather than operating in isolation. The solution integrates seamlessly with Cloudflare’s Web Application Firewall (WAF), which can apply additional rules to crawler traffic based on the AI Crawl Control classifications, enabling scenarios where identified crawlers trigger specific security policies. Bot Management, Cloudflare’s broader bot detection system, provides the foundational behavioral analysis that feeds into AI-specific detection, creating a layered approach where generic bot threats are filtered before AI-specific classification occurs. DDoS protection mechanisms benefit from AI Crawl Control insights, as the system can identify distributed crawler networks that might otherwise appear as legitimate traffic spikes, enabling more accurate attack detection and mitigation. The integration extends to Cloudflare’s analytics and logging infrastructure, ensuring that crawler activity appears in unified dashboards alongside other security events, providing security teams with comprehensive visibility into all traffic patterns and threats.
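To illustrate how a WAF rule might act on a crawler classification produced upstream, here is a minimal first-match rule evaluator. It is a simulation, not Cloudflare's engine: the `Request` fields, rule list, and action strings are assumptions. The `bot_score` field mimics the Bot Management convention where lower scores mean more likely automated. In Cloudflare's Rules language, the first rule would roughly correspond to an expression such as `(cf.verified_bot_category eq "AI Crawler" and starts_with(http.request.uri.path, "/premium/"))`; check field and function names against the current Rules language reference before relying on them.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Request:
    path: str
    bot_category: str  # e.g. "AI Crawler"; empty if not a known crawler (assumed upstream label)
    bot_score: int     # 1 (likely automated) .. 99 (likely human), Bot Management-style scale


@dataclass
class Rule:
    description: str
    matches: Callable[[Request], bool]
    action: str


# Rules are evaluated top to bottom; the first match wins.
RULES: List[Rule] = [
    Rule("Block AI crawlers on premium content",
         lambda r: r.bot_category == "AI Crawler" and r.path.startswith("/premium/"),
         "block"),
    Rule("Challenge likely-automated traffic that is not a known crawler",
         lambda r: r.bot_category == "" and r.bot_score < 30,
         "managed_challenge"),
]


def evaluate(req: Request) -> str:
    for rule in RULES:
        if rule.matches(req):
            return rule.action
    return "allow"  # no rule matched
```

Keeping the classification separate from the rules means the WAF layer only has to reason about a label and a score, while detection can evolve independently.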

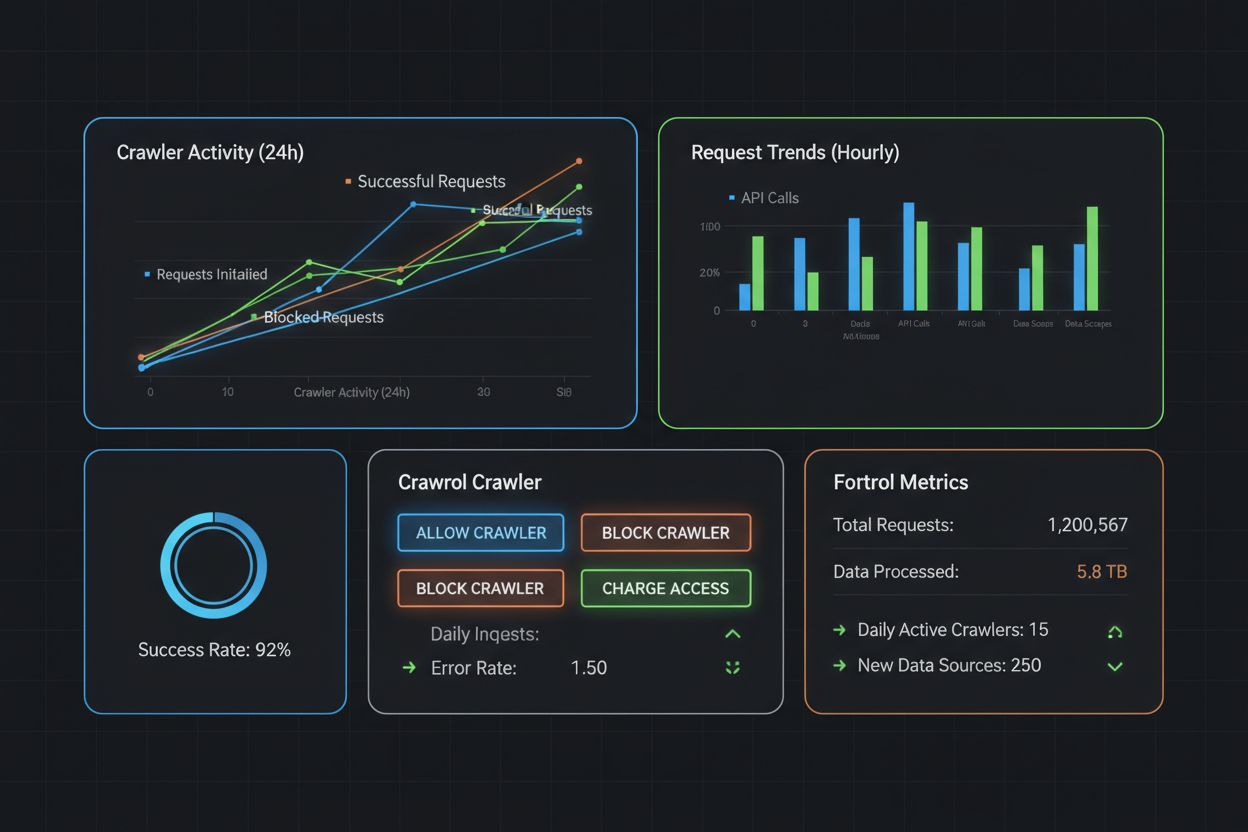

Cloudflare’s dashboard provides detailed analytics on crawler activity, breaking down traffic by crawler identity, request volume, bandwidth consumption, and geographic origin, enabling website owners to understand exactly how AI training operations impact their infrastructure. The monitoring interface displays real-time metrics showing which crawlers are currently accessing your site, how much bandwidth they’re consuming, and whether they’re respecting configured policies or attempting to circumvent controls. Historical analytics reveal trends in crawler behavior, identifying seasonal patterns, new crawler variants, and changes in access patterns that might indicate evolving threats or business opportunities. Performance metrics show the impact of crawler traffic on origin server load, cache hit rates, and user-facing latency, quantifying the infrastructure costs associated with unrestricted AI access. Custom alerts notify administrators when specific crawlers exceed thresholds, new crawlers are detected, or policy violations occur, enabling rapid response to emerging threats. The analytics system integrates with existing monitoring tools through APIs and webhooks, allowing organizations to incorporate crawler metrics into broader observability platforms and incident response workflows.
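If crawler-tagged log records are exported to your own tooling, per-crawler rollups and threshold alerts take only a few lines. This is a hypothetical sketch: the `LogRecord` fields do not reflect Cloudflare's actual log schema, and counting blocked requests is only a rough proxy for attempts to reach restricted content.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Set


class LogRecord(NamedTuple):
    crawler: str     # crawler identity assigned at the edge (hypothetical field)
    bytes_sent: int  # response bytes served to the crawler
    blocked: bool    # whether a policy blocked the request


def summarize(records: Iterable[LogRecord]) -> Dict[str, Dict[str, int]]:
    """Aggregate request count, bytes, and blocked requests per crawler."""
    totals: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"requests": 0, "bytes": 0, "blocked": 0}
    )
    for r in records:
        t = totals[r.crawler]
        t["requests"] += 1
        t["bytes"] += r.bytes_sent
        t["blocked"] += int(r.blocked)
    return dict(totals)


def alerts(summary: Dict[str, Dict[str, int]], known: Set[str], max_bytes: int) -> List[str]:
    """Flag previously unseen crawlers and crawlers over a bandwidth threshold."""
    out = []
    for crawler, t in sorted(summary.items()):
        if crawler not in known:
            out.append(f"new crawler detected: {crawler}")
        if t["bytes"] > max_bytes:
            out.append(f"{crawler} exceeded {max_bytes} bytes ({t['bytes']})")
    return out
```

The alert strings could then be forwarded to whatever webhook or incident tool the organization already uses.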

The Pay Per Crawl feature, currently in beta, introduces a revolutionary monetization model that transforms AI crawler traffic from a cost center into a revenue stream, fundamentally shifting the economics of content access. When enabled, this feature returns HTTP 402 Payment Required status codes to crawlers attempting to access protected content, signaling that access requires payment and triggering payment flows through integrated billing systems. Website owners can set per-request pricing, allowing them to monetize crawler access at rates that reflect the value of their content while remaining economically rational for AI companies that benefit from training data. The system handles payment processing transparently, with crawlers from well-funded AI companies able to negotiate volume discounts or licensing agreements that provide predictable access at negotiated rates. This approach creates alignment between content creators and AI companies: creators receive compensation for their intellectual property, while AI companies gain reliable, legal access to training data without the reputational and legal risks of unauthorized scraping. The feature enables sophisticated pricing strategies where different crawlers pay different rates based on content sensitivity, crawler identity, or usage patterns, allowing publishers to maximize revenue while maintaining relationships with beneficial partners. Early adopters report significant revenue generation from Pay Per Crawl, with some publishers earning thousands of dollars monthly from crawler monetization alone.
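The 402 exchange can be sketched as a tiny request handler. Cloudflare's beta defines its own headers and billing flow; the header names, the per-crawler price table, and the "declare a maximum price up front" handshake below are placeholder assumptions chosen only to show the shape of the interaction.

```python
from dataclasses import dataclass, field
from typing import Dict

# Placeholder header names (assumptions, not Cloudflare's documented protocol).
PRICE_HEADER = "x-crawl-price"
MAX_PRICE_HEADER = "x-crawl-max-price"
CHARGED_HEADER = "x-crawl-charged"

# Hypothetical per-request prices in USD; crawlers not listed are not charged.
PRICES = {"GPTBot": 0.002, "ClaudeBot": 0.001}


@dataclass
class Response:
    status: int
    headers: Dict[str, str] = field(default_factory=dict)


def handle(crawler: str, request_headers: Dict[str, str]) -> Response:
    price = PRICES.get(crawler)
    if price is None:
        return Response(200)  # no price configured; other allow/block policies would apply here
    try:
        offer = float(request_headers[MAX_PRICE_HEADER])
    except (KeyError, ValueError):
        offer = None  # missing or malformed offer
    if offer is not None and offer >= price:
        # Crawler agreed in advance to pay at least the asking price: serve and record the charge.
        return Response(200, {CHARGED_HEADER: f"{price:.4f}"})
    # No acceptable offer: 402 Payment Required, advertising the price.
    return Response(402, {PRICE_HEADER: f"{price:.4f}"})
```

A crawler that receives the 402 can read the advertised price and retry with an offer, which is the negotiation loop the paragraph above describes in business terms.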

While other CDN providers offer basic bot management capabilities, Cloudflare’s AI Crawl Control provides specialized detection and control specifically designed for AI training operations, offering superior accuracy and granularity compared to generic bot filtering. Traditional WAF solutions treat all non-human traffic similarly, lacking the AI-specific intelligence necessary to distinguish between different crawler types and their business implications, resulting in either over-blocking that damages legitimate traffic or under-blocking that fails to protect content. Dedicated bot management platforms like Imperva or Akamai offer sophisticated detection but can add cost and integration complexity, particularly when layered onto a different CDN, compared to Cloudflare’s edge-native approach. Open-source solutions like ModSecurity provide flexibility but require significant operational overhead and lack the threat intelligence and machine learning capabilities necessary for effective AI crawler detection. For organizations seeking to understand how their content is being used by AI systems and track citations across training datasets, AmICited.com provides complementary monitoring capabilities that track where your brand and content appear in AI model outputs, offering visibility into the downstream impact of crawler access. Cloudflare’s integrated approach, which combines detection, control, monetization, and analytics in a single platform, provides superior value compared to point solutions that require integration and coordination across multiple vendors.

Deploying AI Crawl Control effectively requires a thoughtful approach that balances protection with business objectives, starting with a comprehensive audit of current crawler traffic to understand which AI companies are accessing your content and at what scale. Organizations should begin with a monitoring-only configuration that tracks crawler activity without enforcing policies, allowing teams to understand traffic patterns and identify which crawlers provide value versus those that represent pure cost. Initial policies should be conservative, allowing known beneficial crawlers like Googlebot while blocking only clearly malicious or unwanted traffic, with gradual expansion of restrictions as teams gain confidence in the system’s accuracy and understand business implications. For organizations considering Pay Per Crawl monetization, starting with a small subset of content or a pilot program with specific crawlers allows testing of pricing models and payment flows before full deployment. Regular review of crawler activity and policy effectiveness ensures that configurations remain aligned with business objectives as the AI landscape evolves and new crawlers emerge. Integration with existing security operations requires updating runbooks and alert configurations to incorporate crawler-specific metrics, ensuring that security teams understand how AI Crawl Control fits into broader threat detection and response workflows. Documentation of policy decisions and business rationale enables consistent enforcement and simplifies future audits or policy adjustments as organizational priorities change.
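The monitor-first, pilot-then-expand rollout described above can be expressed as a thin wrapper around whatever action a policy decides. This is a hypothetical sketch of the pattern, not an AI Crawl Control setting: `Mode`, `apply_policy`, and the pilot set are illustrative names.

```python
import logging
from enum import Enum
from typing import FrozenSet

logger = logging.getLogger("crawl_policy")


class Mode(Enum):
    MONITOR = "monitor"  # record what would happen, never restrict
    ENFORCE = "enforce"  # apply decisions


def apply_policy(crawler: str, decided_action: str, mode: Mode,
                 pilot_crawlers: FrozenSet[str] = frozenset()) -> str:
    """Return the action actually taken, given the policy decision and rollout stage.

    An empty pilot set means enforcement applies to every crawler.
    """
    enforce = mode is Mode.ENFORCE and (not pilot_crawlers or crawler in pilot_crawlers)
    if not enforce and decided_action != "allow":
        # Log the would-be decision so teams can review accuracy before enforcing.
        logger.info("would %s %s (not enforced)", decided_action, crawler)
        return "allow"
    return decided_action
```

Reviewing the "would block" log lines during the monitoring phase gives the traffic audit the paragraph recommends before any crawler is actually restricted.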

The rapid evolution of AI systems and the emergence of agentic AI—autonomous systems that make decisions and take actions without human intervention—will drive increasing sophistication in edge-based control mechanisms. Future developments will likely include more granular behavioral analysis that distinguishes between different types of AI training operations, enabling policies tailored to specific use cases such as academic research versus commercial model training. Programmatic access control will evolve to support more sophisticated negotiation protocols where crawlers and content owners can establish dynamic agreements that adjust pricing, rate limits, and access based on real-time conditions and mutual benefit. Integration with emerging standards for AI transparency and attribution will enable automatic enforcement of licensing requirements and citation obligations, creating technical mechanisms that ensure AI companies respect intellectual property rights. The edge computing paradigm will continue to expand, with more complex machine learning models executing at the edge to provide increasingly accurate detection and more sophisticated policy enforcement. As the AI industry matures and regulatory frameworks emerge around data usage and content licensing, edge-based control systems will become essential infrastructure for enforcing compliance and protecting content creators’ rights. Organizations that implement comprehensive AI control strategies today will be best positioned to adapt to future regulatory requirements and emerging threats while maintaining the flexibility to monetize their content and protect their intellectual property in an AI-driven economy.

AI Crawl Control is Cloudflare's edge-based solution that identifies AI crawler traffic and enables granular policies to allow, block, or charge for access. It operates at the edge of Cloudflare's global network, making real-time decisions within milliseconds using machine learning and behavioral analysis to distinguish AI training operations from legitimate traffic.

Cloudflare uses multiple detection techniques including behavioral analysis of request patterns, fingerprinting of HTTP headers and TLS signatures, and threat intelligence from industry databases. These signals combine through ensemble machine learning models that achieve high accuracy while maintaining low false positive rates, continuously learning from new crawler variants.

AI Crawl Control provides granular per-crawler policies. You can allow beneficial crawlers like Googlebot for free, block unwanted crawlers completely, or charge specific crawlers for access. Policies can be configured independently for each crawler, enabling access strategies tailored to your business needs.

Pay Per Crawl is a beta feature that enables content owners to monetize AI crawler access by charging per request. When enabled, crawlers receive HTTP 402 Payment Required responses and can negotiate payment through integrated billing systems. Website owners set per-request pricing, transforming crawler traffic from a cost center into a revenue stream.

Edge-based detection makes decisions in less than 10 milliseconds at the point of request entry, before bandwidth is consumed or content is transmitted. This is dramatically faster than origin-based filtering which requires traffic to traverse the network, consuming resources and creating latency. The distributed nature of edge infrastructure also provides natural resilience against sophisticated attacks.

AI Crawl Control is available on all Cloudflare plans, including free plans. However, the quality of detection varies by plan—free plans identify crawlers based on user agent strings, while paid plans enable more thorough detection using Cloudflare's Bot Management detection capabilities for superior accuracy.
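User-agent matching, the technique free plans rely on, is simple to illustrate. This sketch uses a few publicly documented AI crawler tokens (the list is not exhaustive and is not Cloudflare's list). It also shows the method's main weakness: any client can send a crawler's user-agent string, which is why behavioral and fingerprint signals improve accuracy.

```python
import re
from typing import Optional

# A few publicly documented AI crawler user-agent tokens (not exhaustive).
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot", "Bytespider")
_PATTERN = re.compile("|".join(re.escape(t) for t in AI_CRAWLER_TOKENS), re.IGNORECASE)


def identify_by_user_agent(user_agent: str) -> Optional[str]:
    """Return the canonical crawler token found in the user agent, or None."""
    m = _PATTERN.search(user_agent)
    if m is None:
        return None
    found = m.group(0).lower()
    # Normalize to the token's canonical casing.
    return next(t for t in AI_CRAWLER_TOKENS if t.lower() == found)
```

A scraper that simply copies "GPTBot" into its user agent is labeled GPTBot by this function, so user-agent matching alone cannot distinguish genuine crawlers from impostors.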

AI Crawl Control integrates seamlessly with Cloudflare's Web Application Firewall (WAF), Bot Management, and DDoS protection. Identified crawlers can trigger specific security policies, and crawler activity appears in unified dashboards alongside other security events, providing comprehensive visibility into all traffic patterns.

Edge-based control provides immediate threat interception before bandwidth consumption, real-time policy enforcement without origin server involvement, global scalability without additional origin infrastructure, and comprehensive analytics on crawler behavior. It also enables monetization opportunities and protects intellectual property while maintaining relationships with beneficial partners.

Gain visibility into which AI services access your content and take control with granular policies. Start protecting your digital assets with Cloudflare's AI Crawl Control.
