How to Set Up AI Brand Monitoring: A Complete Guide

Learn how to set up AI brand monitoring to track your brand across ChatGPT, Perplexity, and Google AI Overviews. Complete guide with tools, strategies, and best...

Learn how AI systems rank competitor comparisons and why your brand might be missing from ‘vs’ queries. Discover strategies to dominate AI comparison visibility.

By some industry estimates, AI systems now handle approximately 80% of consumer searches for product recommendations, fundamentally shifting how purchasing decisions get made. When users ask “X vs Y” questions of ChatGPT, Gemini, or Perplexity, they are in a high-intent moment that directly influences buying behavior, but these interactions work differently from traditional search engine queries. Unlike Google, where keyword density and backlink authority dominate, AI systems synthesize information from multiple sources into narrative comparisons that can either elevate or bury your brand. For SaaS and B2B companies, this represents both a critical visibility challenge and an unprecedented opportunity: your brand’s presence in these AI-generated comparisons directly affects whether prospects even consider you a viable option. The stakes are high because AI comparison results feel authoritative and comprehensive, which makes them the new battleground for market share.

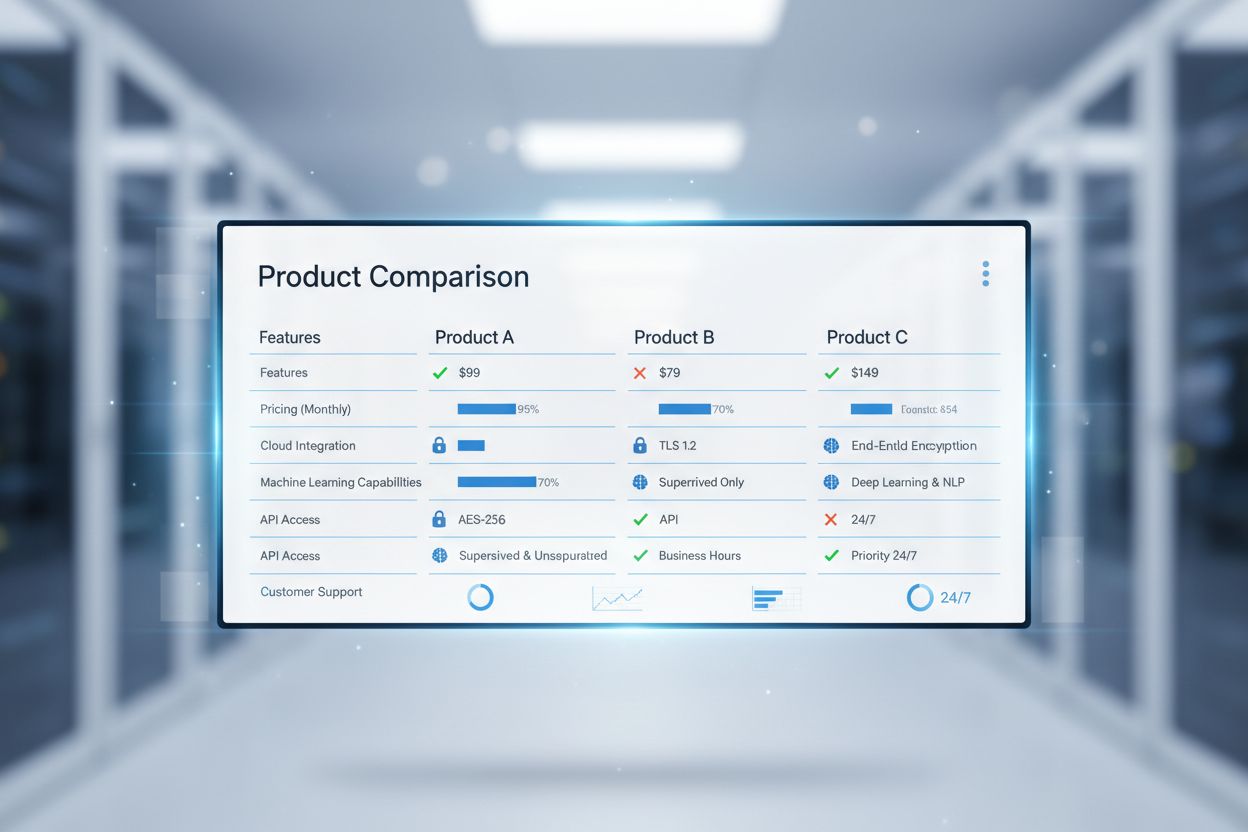

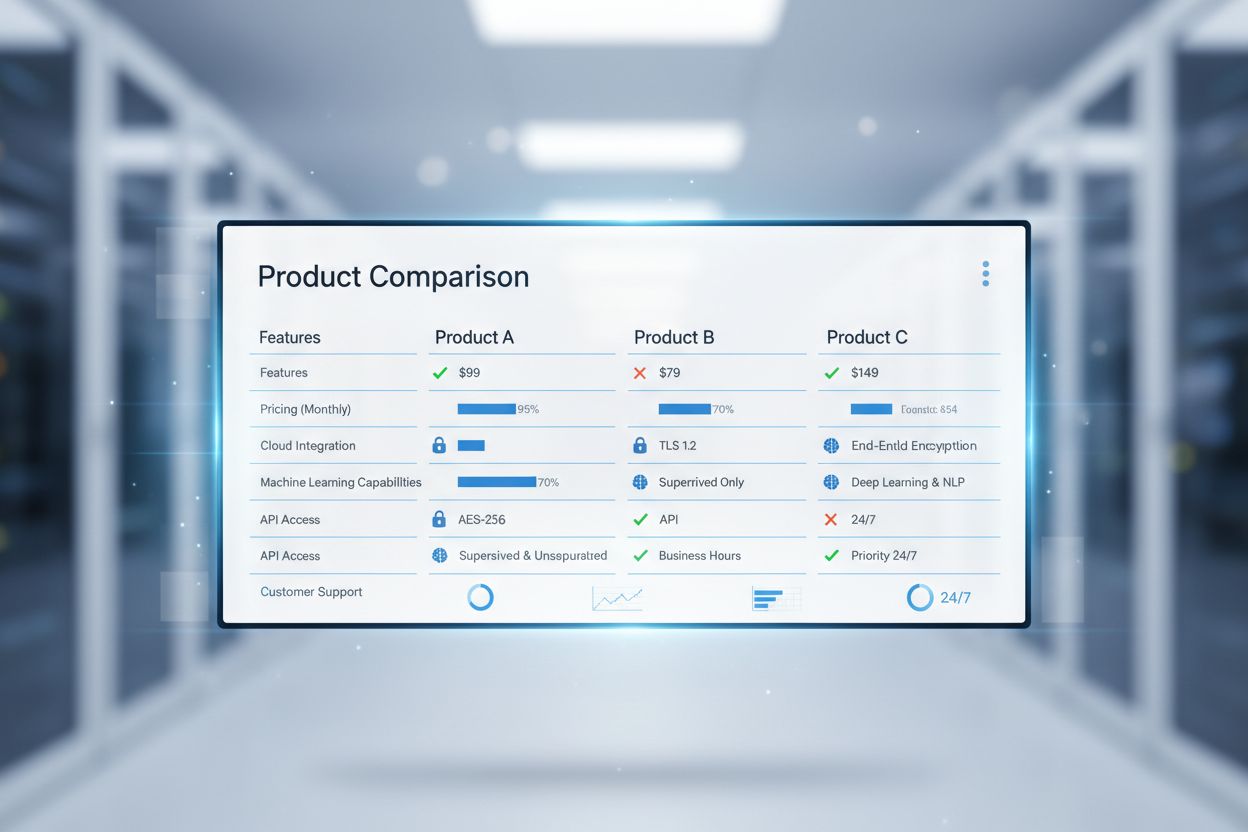

When you ask an LLM to compare two products, the system doesn’t simply retrieve and rank existing comparison pages. It parses the semantic intent of your query, identifies the relevant entities, and maps the relationships between them to construct a new answer. LLMs tend to favor structured data and clear positioning statements over narrative content. As a result, a well-formatted comparison table with explicit feature callouts is likely to carry more weight than a 2,000-word blog post that buries the same facts in prose. Entity recognition and relationship mapping let the system understand not just which products exist, but how they relate across dimensions like price, use cases, and target audiences. Citation patterns matter enormously: AI systems that cite their sources track where each claim comes from. They give more weight to sources that are credible and consistent with one another. This is fundamentally different from how humans read comparison pages. A person might scan your entire page, while an AI system extracts specific claims, checks them against other sources, and flags inconsistencies. Clear positioning and differentiation matter far more than keyword density, because the AI is looking for semantic clarity and verifiable claims, not keyword matches.

Deciding which product is “better” for a specific use case is, at bottom, a pairwise ranking problem, and understanding how such rankings are computed is useful for strategic positioning. Researchers studying head-to-head ranking have compared four primary approaches:

- the Elo rating system (borrowed from chess)
- the Bradley-Terry model (a statistical model of paired comparisons, best suited to small, controlled datasets)
- the Glicko system (an extension of Elo that also tracks rating uncertainty, suited to large, uneven datasets)
- Markov Chain approaches (for balanced, probabilistic comparisons)

Each system has distinct strengths and weaknesses across three critical dimensions: transitivity (whether A>B and B>C reliably implies A>C), prediction accuracy, and hyperparameter sensitivity.

| Algorithm | Best For | Transitivity | Prediction Accuracy | Hyperparameter Sensitivity |
|---|---|---|---|---|
| Elo | Large uneven datasets | Moderate | High | Very High |
| Bradley-Terry | Small controlled datasets | Excellent | High | None |
| Glicko | Large uneven datasets | Good | High | Moderate |
| Markov Chain | Balanced datasets | Good | Moderate | High |

The Elo system excels at handling massive, imbalanced datasets (like millions of user comparisons). However, it is extremely sensitive to hyperparameter tuning, notably the K-factor that sets how far a single result moves a rating, and it can produce non-transitive results. Bradley-Terry offers excellent transitivity with no hyperparameters to tune. That makes it ideal for controlled product comparisons where you have a fixed set of competitors and consistent evaluation criteria. Glicko sits between the two, providing good transitivity and prediction accuracy while remaining only moderately sensitive to tuning. Markov Chain methods work best when you have balanced, head-to-head comparison data and can accept moderate prediction accuracy in exchange for probabilistic insights. Understanding how these methods behave, and which kind of comparison data your competitors are optimizing for, can reveal strategic opportunities for positioning.
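
To make the transitivity and hyperparameter-sensitivity columns concrete, here is a minimal Python sketch of two of the four methods. It implements sequential Elo updates and a Bradley-Terry fit using the standard minorization-maximization iteration. The `(winner, loser)` match format, the K-factor of 32, and the 1500 starting rating are conventional chess-style defaults chosen for illustration. They are not values any AI platform is known to use, and Glicko and Markov Chain methods are not shown.

```python
from collections import defaultdict


def elo_ratings(matches, k=32.0, start=1500.0):
    """Sequential Elo updates over (winner, loser) pairs.

    The final ratings depend on the K-factor and on the order of the matches.
    """
    ratings = defaultdict(lambda: start)
    for winner, loser in matches:
        # Winner's expected score on the conventional 400-point logistic scale.
        expected = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
        delta = k * (1.0 - expected)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


def bradley_terry(matches, iterations=200):
    """Fit Bradley-Terry strengths p, where P(i beats j) = p[i] / (p[i] + p[j]).

    Uses the standard minorization-maximization (Zermelo) update. Only win counts
    enter the fit, so match order is irrelevant and there is no K-factor to tune.
    Assumes the comparison graph is strongly connected; otherwise the
    maximum-likelihood strengths do not exist.
    """
    items = sorted({item for pair in matches for item in pair})
    wins = defaultdict(int)    # total wins per item
    games = defaultdict(int)   # number of comparisons per unordered pair
    for winner, loser in matches:
        wins[winner] += 1
        games[frozenset((winner, loser))] += 1
    strength = {i: 1.0 for i in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in games.items() if i in pair
                for j in pair if j != i
            )
            updated[i] = wins[i] / denom
        # Strengths are only defined up to a constant factor; rescale to mean 1.
        total = sum(updated.values())
        strength = {i: s * len(items) / total for i, s in updated.items()}
    return strength


matches = [("A", "B"), ("A", "B"), ("B", "A")]
print(elo_ratings(matches))                   # differs from the next line
print(elo_ratings(list(reversed(matches))))   # same results, different order
print(bradley_terry(matches))                 # A ≈ 1.333, B ≈ 0.667 in either order
```

Feeding the same three results in reverse order changes the Elo ratings, and so does changing `k`. The Bradley-Terry strengths depend only on the win counts.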

For most SaaS companies the reality is jarring: your brand gets mentioned far less frequently in AI comparisons than in traditional search results. When it does appear, it’s often positioned as a secondary option. This visibility gap stems from several interconnected factors.

Citation patterns and source authority matter enormously. Suppose your brand appears mainly on your own website and a handful of review sites, while competitors appear across industry publications, analyst reports, and third-party comparisons. In that case, the AI system will weight competitor mentions more heavily.

Entity clarity also matters. Consistent naming across all your digital properties (website, documentation, social profiles, review sites) directly affects whether the AI recognizes you as a distinct entity worth comparing.

Many companies also fail to implement structured data markup that explicitly communicates their features, pricing, and positioning. That forces the LLM to infer this information from unstructured content.

The numbers are sobering: research suggests that AI-generated search results drive 91% fewer clicks than traditional Google results for the same queries. Because so few users click through, being named in the AI answer itself is often the only exposure you get. That makes visibility in AI comparisons even more critical than traditional SEO. Your competitors are likely already building a stronger AI presence through strategic content placement, structured data implementation, and deliberate positioning in third-party comparison contexts, and every day you wait, the gap widens.
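
The source-authority gap can be checked with a simple tally. Collect the URLs an AI answer cites when it describes your brand, then measure how many come from domains other than your own. This sketch assumes you have already gathered those URLs by hand or exported them from a monitoring tool. `third_party_share` is a hypothetical helper, and the example.* domains are placeholders.

```python
from urllib.parse import urlparse


def third_party_share(cited_urls, own_domain):
    """Fraction of cited URLs hosted outside your own domain (subdomains count as yours)."""
    if not cited_urls:
        return 0.0
    own = own_domain.lower()

    def is_own(url):
        host = (urlparse(url).hostname or "").lower()
        return host == own or host.endswith("." + own)

    third_party = sum(1 for url in cited_urls if not is_own(url))
    return third_party / len(cited_urls)


# Hypothetical citations an AI answer used when describing your product.
citations = [
    "https://example.com/pricing",        # your site
    "https://docs.example.com/api",       # your docs (subdomain)
    "https://reviews.example.org/acme",   # third-party review site
    "https://news.example.net/roundup",   # third-party publication
]
print(third_party_share(citations, "example.com"))  # 0.5
```

A low share, especially next to a higher share for a competitor, points to the source authority problem rather than to your on-page content.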

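To win in AI comparison queries, your comparison pages need to be architected specifically for how LLMs parse and synthesize information. That means explicit structured data, consistent entity naming, clear positioning statements, and verifiable claims that third-party sources can corroborate.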

Visibility without measurement is just hope, which is why systematic monitoring of your AI comparison presence is non-negotiable.

Start by establishing a baseline across the major AI platforms: ChatGPT, Google Gemini, Perplexity, and Claude. Run a standardized prompt playbook that covers:

- category shortlists (“top 5 project management tools”)
- head-to-head comparisons (“Asana vs Monday.com”)
- constraint-based queries (“best CRM for nonprofits”)
- migration scenarios (“switching from Salesforce to…”)

For each result, track four key metrics: presence (are you mentioned?), positioning (first, middle, or last?), accuracy (are the claims about your product correct?), and evidence usage (which sources does the AI cite when describing you?). Record a baseline score for each query and platform. Then re-run the playbook quarterly to see whether your visibility is improving, stagnating, or declining relative to competitors.

Tools like Ahrefs Brand Radar, Semrush Brand Monitoring, and emerging AI-specific platforms like AmICited.com automate tracking across multiple AI systems, which greatly reduces the need for manual testing. The goal isn’t perfection; it’s systematic visibility and the ability to spot gaps before they become competitive disadvantages.
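
One way to turn the four metrics into a per-platform baseline is sketched below. The `Observation` record mirrors presence, positioning, accuracy, and evidence usage. The 40/30/20/10 point weights are purely illustrative assumptions, not a standard scoring scheme, so adjust them to whatever your team agrees to track. All class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class Observation:
    platform: str                    # e.g. "ChatGPT", "Perplexity"
    query: str                       # prompt from the playbook
    present: bool                    # presence: is the brand mentioned at all?
    position: Optional[int] = None   # positioning: 1 = first brand named
    brands_listed: int = 0           # how many brands the answer named in total
    accurate: bool = True            # accuracy: were the claims about you correct?
    sources: List[str] = field(default_factory=list)  # evidence usage: URLs cited for you


def visibility_score(obs: Observation) -> float:
    """Illustrative 0-100 score; the weights are assumptions, not a standard."""
    if not obs.present:
        return 0.0
    score = 40.0  # presence
    if obs.position is not None:
        if obs.brands_listed > 1:
            # 30 points for first place, falling linearly to 0 for last place.
            score += 30.0 * (obs.brands_listed - obs.position) / (obs.brands_listed - 1)
        else:
            score += 30.0  # the only brand named
    if obs.accurate:
        score += 20.0  # accuracy
    if obs.sources:
        score += 10.0  # evidence usage: at least one citation backs the description
    return score


def baseline_by_platform(observations: List[Observation]) -> dict:
    """Average score per platform, to compare against next quarter's run."""
    scores = defaultdict(list)
    for obs in observations:
        scores[obs.platform].append(visibility_score(obs))
    return {platform: mean(values) for platform, values in scores.items()}


runs = [
    Observation("ChatGPT", "Asana vs Monday.com", True, position=1, brands_listed=2,
                sources=["https://example.org/review"]),
    Observation("ChatGPT", "best CRM for nonprofits", True, position=2, brands_listed=2,
                accurate=False),
    Observation("Perplexity", "top 5 project management tools", False),
]
print(baseline_by_platform(runs))  # {'ChatGPT': 70.0, 'Perplexity': 0.0}
```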

AI Share of Voice is your brand’s percentage of total mentions and positive positioning across AI comparison results within your category, and it is becoming the primary metric for competitive advantage. Traditional Share of Voice measures keyword mentions across search results. AI Share of Voice instead captures how often your brand appears in AI-generated comparisons and how favorably it is positioned relative to competitors.

Identifying visibility gaps requires competitive analysis across three dimensions:

- topic gaps (which comparison queries mention competitors but not you?)
- format gaps (are competitors appearing in tables, case studies, or expert roundups that you’re absent from?)
- freshness gaps (are competitor mentions recent while yours are outdated?)

Citation analysis reveals which sources the AI trusts most. If your competitors are consistently cited from industry publications while you’re only cited from your own website, you’ve identified a critical source authority gap.

Building sustainable AI visibility requires moving beyond quick-win tactics like optimizing individual comparison pages. Instead, develop a content strategy that systematically builds your presence across third-party sources, analyst reports, and industry publications where AI systems naturally discover and cite information. The companies winning this battle aren’t necessarily those with the best product; they’re the ones with the most strategic, visible presence across the sources AI systems trust.
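
As a rough sketch, two of these checks can be computed from a table of which brands each answer named: the mention-count part of AI Share of Voice, and the topic-gap check. This simplification counts mentions only and ignores how favorably each brand is positioned. The function names, brand names, and queries are hypothetical.

```python
from collections import Counter


def share_of_voice(answers, brand):
    """Share of all brand mentions that belong to `brand` (mentions only, no sentiment)."""
    counts = Counter(b for brands in answers.values() for b in set(brands))
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


def topic_gaps(answers, brand, competitors):
    """Queries whose answer names at least one competitor but not `brand`."""
    return sorted(
        query for query, brands in answers.items()
        if brand not in brands and any(c in brands for c in competitors)
    )


# Hypothetical answers: query -> brands the AI answer mentioned.
answers = {
    "best CRM for nonprofits": ["OurBrand", "RivalOne"],
    "OurBrand vs RivalTwo": ["OurBrand", "RivalTwo"],
    "top 5 CRM tools": ["RivalOne", "RivalTwo"],
}
print(share_of_voice(answers, "OurBrand"))  # about 0.33
print(topic_gaps(answers, "OurBrand", ["RivalOne", "RivalTwo"]))  # ['top 5 CRM tools']
```

Extending the counter with a weight per mention (for example, by position) would move this closer to the full definition that also accounts for positioning.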

Your competitors’ positioning in AI comparisons reveals strategic intelligence that traditional competitive analysis often misses. By systematically monitoring how AI systems describe your competitors’ strengths, weaknesses, and positioning, you can identify market gaps and opportunities that competitors themselves may not have optimized for. Reverse-engineer competitor strategies by analyzing which sources they appear in most frequently, which claims they emphasize, and which use cases they prioritize—this reveals their content strategy and market positioning focus. Use tools like Ahrefs Brand Radar to track which domains mention your competitors most frequently, then analyze whether those same domains mention you; this gap represents untapped visibility opportunities. Comparison data also reveals positioning opportunities: if competitors consistently claim “best for enterprise” but you see customer testimonials and use cases suggesting you’re equally strong in that segment, you’ve identified a messaging gap worth addressing. The most sophisticated competitive intelligence comes from analyzing patterns across multiple AI systems—if a competitor dominates in ChatGPT comparisons but barely appears in Perplexity results, that reveals something about their content distribution strategy and source authority. By treating AI comparison data as a strategic intelligence source rather than just a visibility metric, you transform reactive monitoring into proactive competitive advantage.
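
The domain-gap and cross-platform checks reduce to simple set and rate calculations. This sketch assumes you have already turned a mention export (for example, from Ahrefs Brand Radar) into a plain mapping of domain to mentioned brands. It does not read any tool's actual export format. All names are hypothetical.

```python
from collections import Counter


def domain_gaps(mentions_by_domain, brand, competitor):
    """Domains that mention the competitor but not your brand: untapped placements."""
    return sorted(
        domain for domain, brands in mentions_by_domain.items()
        if competitor in brands and brand not in brands
    )


def presence_rate_by_platform(results, brand):
    """results: (platform, brands mentioned) pairs; returns share of answers naming `brand`."""
    seen, hits = Counter(), Counter()
    for platform, brands in results:
        seen[platform] += 1
        hits[platform] += brand in brands  # True counts as 1
    return {platform: hits[platform] / seen[platform] for platform in seen}


mentions = {
    "example.org": {"RivalOne", "OurBrand"},
    "example.net": {"RivalOne"},
    "example.edu": {"OurBrand"},
}
print(domain_gaps(mentions, "OurBrand", "RivalOne"))  # ['example.net']

results = [
    ("ChatGPT", {"RivalOne"}),
    ("ChatGPT", {"RivalOne", "OurBrand"}),
    ("Perplexity", {"OurBrand"}),
]
print(presence_rate_by_platform(results, "RivalOne"))  # {'ChatGPT': 1.0, 'Perplexity': 0.0}
```

A large difference in a competitor's presence rate between platforms is the kind of pattern that hints at how their content is distributed.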

AI systems update how they compare products as new information becomes available, but the cadence varies by platform. Answers drawn from a model’s training data change only when the model is retrained or updated, as with ChatGPT when it is not searching the web. Perplexity and other search-backed systems, by contrast, retrieve fresh results with each query. For your brand, this means visibility changes can show up within days on search-backed platforms after you publish new comparison content or earn citations from authoritative sources. Answers that rely on training data alone may take much longer to reflect them.

Traditional search rankings prioritize keyword density, backlinks, and domain authority. AI comparison visibility, by contrast, emphasizes structured data clarity, entity recognition, citation credibility, and positioning consistency across multiple sources. A page can rank #1 in Google but barely appear in AI comparisons if it lacks clear structure and verifiable claims.

Yes, absolutely. By implementing structured data markup (Schema.org), maintaining consistent naming across all properties, publishing clear positioning statements, and earning citations from authoritative third-party sources, you directly influence how AI systems understand and describe your product. The key is making your information machine-readable and credible.
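
A quick way to audit naming consistency is to list how each property spells your brand and flag anything that differs from the canonical form. The property names and the "Acme CRM" brand are hypothetical. The check only collapses stray whitespace, so deliberate variants such as "AcmeCRM" or a legal entity name are still reported for human review.

```python
def naming_inconsistencies(names_by_property, canonical):
    """Properties whose displayed brand name differs from the canonical form.

    Only runs of whitespace are normalized; any other difference is flagged for review.
    """
    def normalize(name):
        return " ".join(name.split())

    return sorted(
        (prop, name) for prop, name in names_by_property.items()
        if normalize(name) != normalize(canonical)
    )


names = {
    "website": "Acme CRM",
    "docs": "AcmeCRM",
    "g2": "Acme  CRM",       # double space: treated as consistent
    "linkedin": "Acme, Inc.",
}
print(naming_inconsistencies(names, "Acme CRM"))
# [('docs', 'AcmeCRM'), ('linkedin', 'Acme, Inc.')]
```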

Run a standardized prompt playbook across major AI platforms (ChatGPT, Gemini, Perplexity, Claude) asking comparison questions relevant to your category. Track whether you're mentioned, how you're positioned, and which sources the AI cites. Tools like AmICited.com automate this monitoring, providing quarterly visibility reports and competitive benchmarking.

The fastest wins come from: (1) implementing structured data markup on existing comparison pages, (2) ensuring consistent naming and positioning across all digital properties, (3) earning citations from industry publications and analyst reports, and (4) creating comparison content specifically optimized for AI readability. Most companies see measurable improvements within 4-6 weeks.

Structured data (JSON-LD schema markup) makes your information machine-readable, so the AI no longer has to infer key facts from unstructured prose. This can substantially improve accuracy and citation frequency. Products with proper schema markup are reported to appear in AI comparisons 2-3x more frequently than those without, and to be described more accurately.
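
As an illustration, this sketch emits a Schema.org `SoftwareApplication` JSON-LD block for a SaaS product using only Python's standard `json` module. The product details are hypothetical placeholders. Which properties you include (here `applicationCategory`, `featureList`, and an `Offer` with price and currency) should follow Schema.org's definitions for your product type.

```python
import json


def software_jsonld(name, description, category, features, price, currency="USD"):
    """Build a <script> tag containing Schema.org SoftwareApplication JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "description": description,
        "applicationCategory": category,
        "featureList": features,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


print(software_jsonld(
    name="Acme CRM",  # hypothetical product
    description="CRM for small nonprofit teams.",
    category="BusinessApplication",
    features=["Donor management", "Email campaigns", "Grant tracking"],
    price="29.00",
))
```

Generating the markup from the same data that drives your pricing and feature pages helps keep the structured claims consistent with the visible ones.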

While core optimization principles remain consistent, each platform has distinct characteristics. ChatGPT values comprehensive, well-sourced content. Perplexity prioritizes real-time, cited information. Google Gemini emphasizes structured data and entity clarity. Rather than platform-specific optimization, focus on universal best practices: clear structure, credible citations, and consistent positioning.

The four critical metrics are: (1) Presence—are you mentioned in relevant comparison queries? (2) Positioning—do you appear first, middle, or last? (3) Accuracy—are the claims about your product correct? (4) Evidence usage—which sources does the AI cite when describing you? Track these quarterly to identify trends and competitive gaps.
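
For a first pass, a simple threshold on the quarter-over-quarter change can classify each metric as improving, stagnating, or declining. The 0.02 tolerance (two percentage points on a 0 to 1 rate), the metric names, and the helper functions are assumptions for illustration.

```python
def trend(values, tolerance=0.02):
    """Classify the latest quarter-over-quarter change in a 0-1 metric."""
    if len(values) < 2:
        return "insufficient data"
    delta = values[-1] - values[-2]
    if delta > tolerance:
        return "improving"
    if delta < -tolerance:
        return "declining"
    return "stagnating"


def quarterly_report(history, tolerance=0.02):
    """history: metric name -> list of quarterly values, oldest first."""
    return {metric: trend(values, tolerance) for metric, values in history.items()}


history = {
    "presence": [0.40, 0.55],        # share of playbook queries that mention you
    "positioning": [0.50, 0.49],     # e.g. share of mentions where you are named first
    "accuracy": [0.90, 0.80],        # share of answers with correct claims
    "evidence_usage": [0.30],        # only one quarter recorded so far
}
print(quarterly_report(history))
# {'presence': 'improving', 'positioning': 'stagnating', 'accuracy': 'declining', 'evidence_usage': 'insufficient data'}
```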
