AI Traffic Conversion Rates: Why Quality Exceeds Quantity

Discover why AI traffic converts 23x better than organic search. Learn how to optimize for AI platforms and measure real ROI from AI-driven visitors.

Discover why data quality matters more than quantity for AI models. Learn resource allocation strategies, cost implications, and practical frameworks for optimizing your AI training data investment.

The conventional wisdom in machine learning has long been “more data is always better.” However, recent research challenges this assumption with compelling evidence that data quality significantly outweighs quantity in determining AI model performance. A 2024 arxiv study (2411.15821) examining small language models found that training data quality plays a far more significant role than sheer volume, revealing that the relationship between data quantity and model accuracy is far more nuanced than previously believed. The cost implications are substantial: organizations investing heavily in data collection without prioritizing quality often waste resources on storage, processing, and computational overhead while achieving diminishing returns on model performance.

Data quality is not a monolithic concept but rather a multidimensional framework encompassing several critical aspects. Accuracy refers to how correctly data represents reality and whether labels are assigned correctly. Consistency ensures data follows uniform formats and standards across the entire dataset. Completeness measures whether all necessary information is present without significant gaps or missing values. Relevance determines whether data directly addresses the problem the AI model is designed to solve. Reliability indicates the trustworthiness of the data source and its stability over time. Finally, noise represents unwanted variations or errors that can mislead model training. Understanding these dimensions helps organizations prioritize their data curation efforts strategically.

| Quality Dimension | Definition | Impact on AI |

|---|---|---|

| Accuracy | Correctness of labels and data representation | Directly affects model prediction reliability; mislabeled data causes systematic errors |

| Consistency | Uniform formatting and standardized data structure | Enables stable training; inconsistencies confuse learning algorithms |

| Completeness | Presence of all necessary information without gaps | Missing values reduce effective training data; impacts generalization |

| Relevance | Data directly addresses the problem domain | Highly relevant data outperforms large volumes of generic data |

| Reliability | Trustworthiness of data sources and stability | Unreliable sources introduce systematic bias; affects model robustness |

| Noise | Unwanted variations and measurement errors | Controlled noise improves robustness; excessive noise degrades performance |

The pursuit of data quantity without quality safeguards creates a cascade of problems that extend far beyond model performance metrics. Research by Rishabh Iyer demonstrates that label noise experiments reveal dramatic accuracy drops—mislabeled data actively degrades model performance rather than simply providing neutral training examples. Beyond accuracy concerns, organizations face mounting storage and processing costs for datasets that don’t improve model outcomes, alongside significant environmental costs from unnecessary computational overhead. Medical imaging provides a sobering real-world example: a dataset containing thousands of mislabeled X-rays could train a model that confidently makes dangerous diagnostic errors, potentially harming patients. The false economy of collecting cheap, low-quality data becomes apparent when accounting for the costs of model retraining, debugging, and deployment failures caused by poor training data.

Domain-specific quality consistently outperforms generic volume in practical AI applications. Consider a sentiment classifier trained for movie reviews: a carefully curated dataset of 10,000 movie reviews will substantially outperform a generic sentiment dataset of 100,000 examples drawn from financial news, social media, and product reviews. The relevance of training data to the specific problem domain matters far more than raw scale, as models learn patterns specific to their training distribution. When data lacks relevance to the target application, the model learns spurious correlations and fails to generalize to real-world use cases. Organizations should prioritize collecting smaller datasets that precisely match their problem domain over accumulating massive generic datasets that require extensive filtering and preprocessing.

The optimal approach to data strategy lies not at either extreme but in finding the “Goldilocks Zone"—the sweet spot where data quantity and quality are balanced appropriately for the specific problem. Too little data, even if perfectly labeled, leaves models underfitted and unable to capture the complexity of real-world patterns. Conversely, excessive data with quality issues creates computational waste and training instability. The arxiv study reveals this balance concretely: minimal duplication improved accuracy by 0.87% at 25% duplication levels, while excessive duplication at 100% caused a catastrophic 40% accuracy drop. The ideal balance depends on multiple factors including the algorithm type, problem complexity, available computational resources, and the natural variance in your target domain. Data distribution should reflect real-world variance rather than being artificially uniform, as this teaches models to handle the variability they’ll encounter in production.

Not all additional data is created equal—the distinction between beneficial augmentation and harmful degradation is crucial for effective data strategy. Controlled perturbations and augmentation techniques improve model robustness by teaching algorithms to handle real-world variations like slight rotations, lighting changes, or minor label variations. The MNIST handwritten digit dataset demonstrates this principle: models trained with augmented versions (rotated, scaled, or slightly distorted digits) generalize better to real handwriting variations than models trained only on original images. However, severe corruption—random noise, systematic mislabeling, or irrelevant data injection—actively degrades performance and wastes computational resources. The critical difference lies in intentionality: augmentation is purposefully designed to reflect realistic variations, while garbage data is indiscriminate noise that confuses learning algorithms. Organizations must distinguish between these approaches when expanding their datasets.

For resource-constrained organizations, active learning provides a powerful solution that reduces data requirements while maintaining or improving model performance. Rather than passively collecting and labeling all available data, active learning algorithms identify which unlabeled examples would be most informative for the model to learn from, dramatically reducing human annotation burden. This approach enables organizations to achieve strong model performance with significantly less labeled data by focusing human effort on the most impactful examples. Active learning democratizes AI development by making it accessible to teams without massive labeling budgets, allowing them to build effective models with strategic data selection rather than brute-force volume. By learning efficiently with less data, organizations can iterate faster, reduce costs, and allocate resources toward quality assurance rather than endless data collection.

Strategic resource allocation requires fundamentally prioritizing quality over quantity in data strategy decisions. Organizations should invest in robust data validation pipelines that catch errors before they enter training datasets, implementing automated checks for consistency, completeness, and accuracy. Data profiling tools can identify quality issues at scale, revealing patterns of mislabeling, missing values, or irrelevant examples that should be addressed before training. Active learning implementations reduce the volume of data requiring human review while ensuring that reviewed examples are maximally informative. Continuous monitoring of model performance in production reveals whether training data quality issues are manifesting as real-world failures, enabling rapid feedback loops for improvement. The optimal strategy balances data collection with rigorous curation, recognizing that 1,000 perfectly labeled examples often outperform 100,000 noisy ones in terms of both model performance and total cost of ownership.

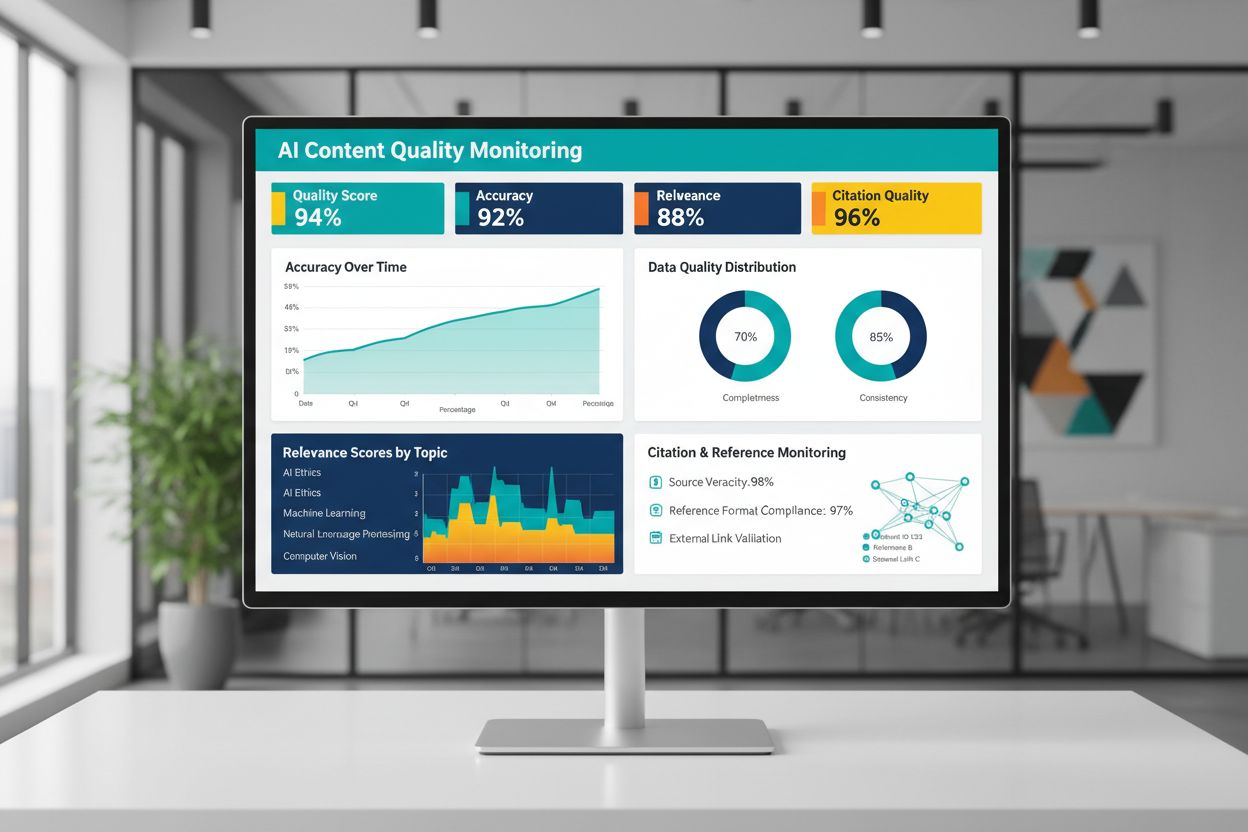

The quality of AI-generated or AI-trained content depends fundamentally on the quality of training data, making continuous monitoring of AI outputs essential for maintaining reliability. Platforms like AmICited.com address this critical need by monitoring AI answers and tracking citation accuracy—a direct proxy for content quality and trustworthiness. When AI systems are trained on low-quality data with poor citations or inaccurate information, their outputs inherit these flaws, potentially spreading misinformation at scale. Monitoring tools should track not just accuracy metrics but also relevance, consistency, and the presence of supporting evidence for claims made by AI systems. Organizations deploying AI systems must implement feedback loops that identify when outputs diverge from expected quality standards, enabling rapid retraining or adjustment of underlying data. The investment in monitoring infrastructure pays dividends by catching quality degradation early, before it impacts users or damages organizational credibility.

Translating data quality principles into action requires a structured approach that begins with assessment and progresses through measurement and iteration. Start by assessing your current baseline—understand the existing quality of your training data through audits and profiling. Define clear quality metrics aligned with your specific use case, whether that’s accuracy thresholds, consistency standards, or relevance criteria. Implement data governance practices that establish ownership, validation procedures, and quality gates before data enters training pipelines. Begin with smaller, carefully curated datasets rather than attempting to process massive volumes immediately, allowing you to establish quality standards and processes at manageable scale. Measure improvements rigorously by comparing model performance before and after quality interventions, creating evidence-based justification for continued investment. Scale gradually as you refine your processes, expanding data collection only after proving that quality improvements translate to real performance gains.

No. Recent research shows that data quality often matters more than quantity. Poor-quality, mislabeled, or irrelevant data can actively degrade model performance, even at scale. The key is finding the right balance between having enough data to train effectively while maintaining high quality standards.

Data quality encompasses multiple dimensions: accuracy (correct labels), consistency (uniform formatting), completeness (no missing values), relevance (alignment with your problem), reliability (trustworthy sources), and noise levels. Define metrics specific to your use case and implement validation gates to catch quality issues before training.

The ideal size depends on your algorithm complexity, problem type, and available resources. Rather than pursuing maximum size, aim for the 'Goldilocks Zone'—enough data to capture real-world patterns without being overburdened with irrelevant or redundant examples. Start small with curated data and scale gradually based on performance improvements.

Data augmentation applies controlled perturbations (rotations, slight distortions, lighting variations) that preserve the true label while teaching models to handle real-world variability. This differs from garbage data—augmentation is intentional and reflects realistic variations, making models more robust to deployment conditions.

Active learning identifies which unlabeled examples would be most informative for the model to learn from, dramatically reducing annotation burden. Instead of labeling all available data, you focus human effort on the most impactful examples, achieving strong performance with significantly less labeled data.

Prioritize quality over quantity. Invest in data validation pipelines, profiling tools, and governance processes that ensure high-quality training data. Research shows that 1,000 perfectly labeled examples often outperform 100,000 noisy ones in both model performance and total cost of ownership.

Poor-quality data leads to multiple costs: model retraining, debugging, deployment failures, storage overhead, and computational waste. In critical domains like medical imaging, low-quality training data can result in dangerous errors. The false economy of cheap, low-quality data becomes apparent when accounting for these hidden costs.

Implement continuous monitoring of AI outputs tracking accuracy, relevance, consistency, and citation quality. Platforms like AmICited monitor how AI systems reference information and track citation accuracy. Establish feedback loops connecting production performance back to training data quality for rapid improvement.

Track how AI systems reference your brand and ensure content accuracy with AmICited's AI monitoring platform. Understand the quality of AI-generated answers about your business.

Discover why AI traffic converts 23x better than organic search. Learn how to optimize for AI platforms and measure real ROI from AI-driven visitors.

Learn how to present statistics for AI extraction. Discover best practices for data formatting, JSON vs CSV, and ensuring your data is AI-ready for LLMs and AI ...

Discover how publication dates impact AI citations across ChatGPT, Perplexity, and Google AI Overviews. Learn industry-specific freshness strategies and avoid t...