Content Velocity for AI

Learn how content velocity optimized for AI systems drives citations, visibility in AI Overviews, and competitive advantage. Discover publishing cadences, fresh...

Learn optimal content velocity for AI publishing. Discover how much content to publish, best frequencies by platform, and strategies to scale without sacrificing quality.

Content velocity combines three factors: how fast you produce content, how much you distribute, and how well your publishing is timed against audience demand and search trends. In traditional marketing, velocity was capped by human capacity, since a team could realistically produce 4-8 high-quality pieces a month. AI changes this equation by letting teams generate, optimize, and distribute content at far larger scale, with some organizations reporting 10-50x increases in output capacity. This matters because search engines now weigh more than the quality of individual pages. They also look at your overall publishing pattern, using signals such as indexing frequency, content freshness, and topical authority to determine rankings. For modern marketers, using AI’s velocity advantages without giving up quality has become a core competitive differentiator, with direct effects on organic visibility, lead generation, and market share.

Content velocity operates across three interconnected dimensions that together determine how effective your publishing is. Each one shapes how search engines and audiences perceive your content strategy, and improving all three at once produces compounding gains. Understanding them helps you decide how to allocate resources and set publishing priorities.

| Dimension | Definition | Impact |
|---|---|---|
| Volume | Total number of pieces published within a specific timeframe (monthly, quarterly, annually) | Increases topical coverage, improves keyword reach, signals authority to search engines; however, excessive volume without quality dilutes brand credibility |
| Pace | Consistency and regularity of publishing schedule (daily, weekly, bi-weekly) | Establishes predictable audience expectations, improves indexing frequency, helps algorithms understand content freshness; irregular pace confuses audience engagement patterns |
| Timeliness | How quickly you publish content relative to trending topics, search intent spikes, and seasonal demand | Captures high-intent traffic during peak demand windows, improves click-through rates from search results, positions brand as current and relevant; delayed publishing misses traffic opportunities |
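To make the three dimensions measurable, the Python sketch below scores a publishing log on each one. It assumes you can export each piece's publish date together with the date search interest in its topic peaked (for example, read off a trend tool). The function name `velocity_profile` and the specific measures are illustrative choices, not a standard formula: piece count for volume, average and spread of the gaps between posts for pace, and median days from demand peak to publication for timeliness.

```python
from datetime import date
from statistics import median, pstdev


def velocity_profile(posts: list[tuple[date, date]]) -> dict[str, float]:
    """Score a publishing log on volume, pace, and timeliness.

    Each post is a (published_on, demand_peak_on) pair. The metrics are
    illustrative choices, not an industry-standard formula.
    """
    if not posts:
        raise ValueError("need at least one post")
    published = sorted(pub for pub, _ in posts)

    # Volume: number of pieces in the period covered by the log.
    volume = len(published)

    # Pace: days between consecutive posts; a smaller spread means a steadier schedule.
    gaps = [(later - earlier).days for earlier, later in zip(published, published[1:])]
    avg_gap = sum(gaps) / len(gaps) if gaps else 0.0
    gap_spread = pstdev(gaps) if gaps else 0.0

    # Timeliness: days from demand peak to publication (negative = published before the peak).
    lags = [(pub - peak).days for pub, peak in posts]

    return {
        "volume": float(volume),
        "avg_gap_days": avg_gap,
        "gap_spread_days": float(gap_spread),
        "median_lag_days": float(median(lags)),
    }


log = [
    (date(2024, 1, 1), date(2024, 1, 3)),
    (date(2024, 1, 8), date(2024, 1, 8)),
    (date(2024, 1, 15), date(2024, 1, 10)),
]
print(velocity_profile(log))
```

A small gap spread around a steady average gap indicates a regular pace, and a negative median lag means pieces tend to go out ahead of the demand peak.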

Artificial intelligence has changed the economics of content production by cutting the time from ideation to publication from weeks to hours. Research from Kontent.ai reports that AI-assisted workflows can speed up content creation by 5-10x, letting a single marketer produce what previously required a dedicated team of three to five people. That capability brings a problem with it. Google’s helpful content update and later algorithm refinements actively filter low-quality bulk content, so publishing more does not by itself earn better rankings. A commonly cited guideline, the “30% rule,” holds that roughly 30% of your content should be AI-generated or AI-assisted, with the remaining 70% human-created, heavily edited, or strategically repurposed to maintain quality. Organizations that ignore this balance report higher bounce rates, weaker engagement metrics, and reduced search visibility, while those that deliberately combine AI speed with human expertise report 40-60% gains in organic traffic within six months.
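Checking a content plan against the 30% rule is simple arithmetic over labeled pieces. This sketch assumes each piece carries a hypothetical origin label. Only `ai_generated` and `ai_assisted` count toward the 30% share, while anything else (human-created, heavily edited, repurposed) counts toward the 70%. The 5-point tolerance is an arbitrary assumption.

```python
from collections import Counter

# Hypothetical origin labels; map them to however your CMS tags content.
AI_LABELS = {"ai_generated", "ai_assisted"}
TARGET_AI_SHARE = 0.30  # the "30% rule" guideline


def ai_share(origins: list[str]) -> float:
    """Fraction of pieces labeled as AI-generated or AI-assisted."""
    if not origins:
        return 0.0
    counts = Counter(origins)  # missing labels count as 0
    return sum(counts[label] for label in AI_LABELS) / len(origins)


def check_mix(origins: list[str], tolerance: float = 0.05) -> str:
    """Report whether the AI share exceeds the 30% guideline plus a tolerance."""
    share = ai_share(origins)
    if share > TARGET_AI_SHARE + tolerance:
        return f"over: {share:.0%} AI-generated or AI-assisted"
    return f"ok: {share:.0%} AI-generated or AI-assisted"


monthly_plan = ["ai_assisted"] * 3 + ["human"] * 5 + ["heavily_edited"] * 2
print(check_mix(monthly_plan))  # ok: 30% AI-generated or AI-assisted
```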

Platforms run on different algorithms and serve audiences with different habits, so each one needs its own publishing frequency to maximize reach and engagement. B2B audiences typically engage less often but expect more depth and expertise, while B2C audiences consume content more regularly and accept shorter, more casual formats. Research from HubSpot and Sprout Social provides benchmarks for publishing frequency on each platform.

Treating AI-generated content as a distinct category in your analytics is essential for understanding what is working and what is underperforming. Most organizations do not track AI content separately from human-created content, which makes it impossible to tune their AI workflows or justify continued investment in AI tools. Key performance indicators for AI content should include indexing latency (how quickly Google discovers and indexes a page), engagement metrics (time on page, scroll depth, click-through rate), ranking velocity (how quickly a page reaches page one), and content decay signals (when rankings start to decline). Google Analytics 4, SEMrush, and Analytify, together with Google Search Console for indexing data, let you track these metrics at scale and spot patterns such as “AI-generated content in category X ranks 40% faster but decays 25% quicker than human content.” Concrete checks include monitoring whether AI content is indexed within 24-48 hours versus 5-7 days for human content, tracking engagement rates (target: 3-5% for blog content), and measuring ranking stability over 90-day periods to find content that needs reinforcement or updating.
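Tracking AI content as its own cohort can start as a grouped summary over exported page data. The sketch assumes you have, per URL, the publish timestamp, the first time the page was reported as indexed (for example in Google Search Console), and an engagement rate, plus a hypothetical `cohort` tag such as "ai" or "human". The `PageRecord` layout is an assumption, not an export format of any of the tools named above.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class PageRecord:
    url: str
    cohort: str                  # hypothetical tag, e.g. "ai" or "human"
    published: datetime
    indexed: Optional[datetime]  # first time reported as indexed; None if not yet indexed
    engagement_rate: float       # fraction, e.g. 0.04 for 4%


def cohort_summary(records: list[PageRecord]) -> dict[str, dict[str, float]]:
    """Per cohort: median hours to index, share still unindexed, mean engagement rate."""
    groups: dict[str, list[PageRecord]] = {}
    for record in records:
        groups.setdefault(record.cohort, []).append(record)

    summary: dict[str, dict[str, float]] = {}
    for cohort, rows in groups.items():
        # Indexing latency only exists for pages that have been indexed.
        latencies = [
            (r.indexed - r.published).total_seconds() / 3600
            for r in rows
            if r.indexed is not None
        ]
        summary[cohort] = {
            "median_index_hours": median(latencies) if latencies else float("nan"),
            "unindexed_share": sum(r.indexed is None for r in rows) / len(rows),
            "mean_engagement": sum(r.engagement_rate for r in rows) / len(rows),
        }
    return summary


pages = [
    PageRecord("/a", "ai", datetime(2024, 1, 1), datetime(2024, 1, 2), 0.04),
    PageRecord("/b", "ai", datetime(2024, 1, 1), datetime(2024, 1, 3), 0.02),
    PageRecord("/c", "human", datetime(2024, 1, 1), None, 0.05),
]
print(cohort_summary(pages))
```

Reporting the unindexed share next to the median keeps pages that never get indexed from silently disappearing out of the latency figure.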

No universal benchmark exists for content velocity, because the right publishing frequency depends on your industry, audience expectations, competitive landscape, and available resources. A SaaS company in a competitive vertical might need 8-12 pieces a week to establish authority, while a niche B2B service provider might get better results from 2-3 highly specialized pieces a week. The most effective strategy is usually to front-load velocity during the first 90-180 days of a new site or content initiative, publishing 5-7 pieces a week to build topical authority quickly and capture low-competition keywords. Once you have first-page rankings for your primary keywords and a solid content foundation, you can drop to a sustainable maintenance velocity of 2-4 pieces a week and focus on updating and reinforcing top-performing content. For example, one B2B marketing agency launched with 6 pieces a week for 120 days (about 100 pieces), then cut back to 3 a week while spending 30% of its effort updating and expanding its 20 best-performing articles. The result was 180% organic traffic growth over 12 months, compared with competitors that kept a steady 2 pieces a week.
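The front-loading plan reduces to arithmetic over days and weekly rates. This sketch projects new pieces for a ramp-up phase followed by a maintenance phase; the function name `projected_output`, the 365-day horizon, and the assumption of perfectly uniform weekly rates are illustrative. With the agency figures above (6 a week for 120 days, then 3 a week), it reproduces the roughly 100-piece ramp-up (about 103 under these assumptions).

```python
def projected_output(ramp_days: int, ramp_per_week: float,
                     total_days: int, maintenance_per_week: float) -> dict[str, int]:
    """Project new pieces for a front-loaded ramp-up followed by a maintenance phase."""
    if not 0 <= ramp_days <= total_days:
        raise ValueError("ramp_days must be between 0 and total_days")
    # Uniform weekly rates, prorated to partial weeks.
    ramp = ramp_days / 7 * ramp_per_week
    maintenance = (total_days - ramp_days) / 7 * maintenance_per_week
    return {
        "ramp_up": round(ramp),
        "maintenance": round(maintenance),
        "total": round(ramp + maintenance),
    }


# Agency example: 6/week for 120 days, then 3/week for the rest of a 365-day year.
front_loaded = projected_output(120, 6, 365, 3)
# Steady 2/week all year, for comparison.
steady = projected_output(0, 0, 365, 2)
print(front_loaded, steady)
```

Under these assumptions the front-loaded plan publishes roughly 208 new pieces in its first year against roughly 104 for a steady 2-per-week schedule. The arithmetic says nothing about the traffic outcome, which depends on quality, targeting, and how much effort goes into updates.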

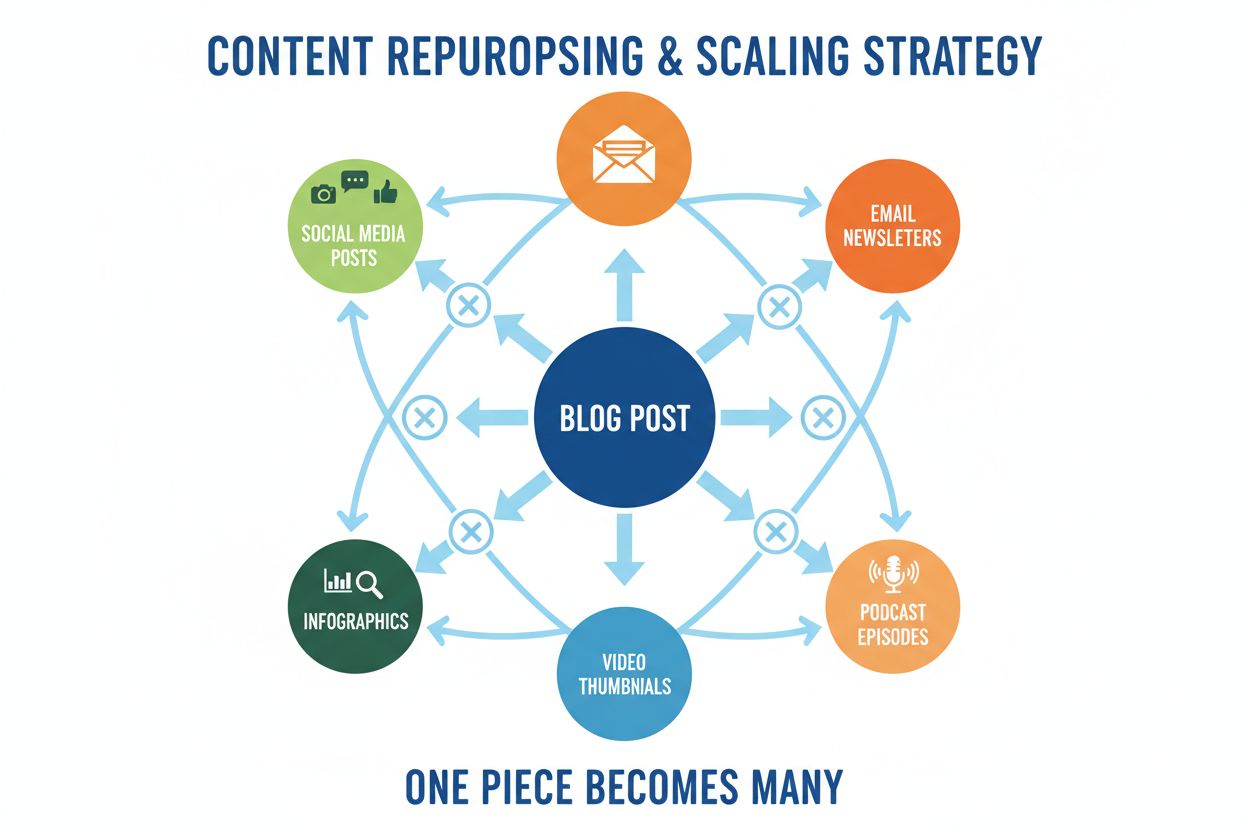

Raising publishing frequency without degrading quality takes systematic approaches that use AI’s strengths while keeping human oversight and editorial standards in place. Content repurposing turns a single comprehensive research piece into 8-12 derivative assets: a blog post becomes a LinkedIn article, three social media threads, an email series, a video script, an infographic, and a podcast episode outline, multiplying reach without a proportional increase in effort. The modular content approach builds reusable components (definitions, case studies, data visualizations, expert quotes) that can be assembled into different formats and contexts, reducing production time by 40-50% while keeping messaging consistent. Strategic outsourcing and team scaling through fractional specialists, content agencies, or AI-trained contractors let you raise volume without sacrificing quality; many organizations find that a part-time editor reviewing AI-generated content costs less than the productivity gains it enables. AI-assisted workflows that use AI for research, outlining, and first drafts while reserving human effort for strategy, editing, and fact-checking give the most efficient split; tools like Claude, ChatGPT, and specialized content platforms can cut writing time from 4 hours to 1 hour per piece. Automation tools for scheduling, distribution, and basic optimization (meta descriptions, internal linking suggestions) reclaim 5-10 hours a week that can go to higher-value work such as strategy and audience research.
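The modular approach amounts to storing each component once and having every format reference it by key. The sketch below is one hypothetical way to model that in Python; the `Component` type, the component keys and texts, and the `assemble` helper are illustrative and not features of any particular CMS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    key: str   # stable identifier used by every format
    kind: str  # e.g. "definition", "case_study", "stat", "takeaway"
    text: str


# Hypothetical component library, written and fact-checked once.
LIBRARY = {
    c.key: c
    for c in [
        Component("def-velocity", "definition",
                  "Content velocity combines volume, pace, and timeliness."),
        Component("case-agency", "case_study",
                  "One agency front-loaded about 100 pieces in 120 days, then moved to 3 a week."),
        Component("takeaway-review", "takeaway",
                  "Let AI draft; keep humans on strategy, editing, and fact-checking."),
    ]
}

# Each output format is an ordered list of component keys, not a copy of the text.
FORMATS = {
    "blog_intro": ["def-velocity", "case-agency", "takeaway-review"],
    "social_thread": ["def-velocity", "takeaway-review"],
}


def assemble(format_name: str, separator: str = "\n\n") -> str:
    """Join the components a format references; unknown names or keys raise KeyError."""
    return separator.join(LIBRARY[key].text for key in FORMATS[format_name])


print(assemble("social_thread"))
```

Because formats hold keys rather than copies, correcting a component once corrects every format that uses it, which is where the consistency benefit comes from.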

Organizations scaling AI content often make errors that undermine their strategy and damage long-term search visibility. Publishing faster than your site can be indexed leaves new pages waiting to be discovered, and when those pages overlap older ones, your new content ends up competing with your own archive for rankings and diluting authority signals; monitor your indexing rate and never publish more than your site can reliably get indexed within 48 hours. Keyword cannibalization occurs when multiple AI-generated pieces target the same search intent, forcing Google to choose which version to rank and fragmenting your authority; maintain a strict keyword map and use tools like SEMrush to find overlapping targets before publishing. Publishing at high volume without monitoring bounce rate, scroll depth, or time on page creates a false sense of progress while real audience engagement deteriorates; treat falling engagement as a critical warning sign that your velocity has outrun your quality capacity. Not tracking AI content separately hides whether your AI investment delivers ROI or simply inflates vanity metrics; set up separate tracking from day one. Content decay sets in when you publish rapidly without maintaining existing content, as older pieces lose rankings with age; allocate 30-40% of your publishing effort to maintenance and updates rather than new content alone.
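A keyword map can be checked mechanically for overlapping targets before anything is published. This sketch assumes the map is a list of (URL, primary keyword) pairs, for example kept in a spreadsheet or exported from an SEO tool, and the function name `find_cannibalization` is hypothetical. Grouping by exact keyword after lowercasing and collapsing whitespace is a simplifying assumption. It will miss different phrasings of the same intent, so it complements human review rather than replacing it.

```python
from collections import defaultdict


def find_cannibalization(keyword_map: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Return each normalized keyword targeted by more than one URL, with those URLs."""
    urls_by_keyword: dict[str, list[str]] = defaultdict(list)
    for url, keyword in keyword_map:
        normalized = " ".join(keyword.lower().split())  # normalizes case and spacing only
        if url not in urls_by_keyword[normalized]:
            urls_by_keyword[normalized].append(url)
    return {kw: urls for kw, urls in urls_by_keyword.items() if len(urls) > 1}


planned = [
    ("/blog/content-velocity", "Content Velocity"),
    ("/blog/ai-content-velocity", "content  velocity"),
    ("/blog/publishing-frequency", "publishing frequency"),
]
print(find_cannibalization(planned))
```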

Managing content velocity well requires systematic monitoring and a willingness to adjust strategy based on performance data rather than assumptions. Run weekly audits of indexing rates, ranking changes, engagement metrics, and traffic patterns, using tools like Google Search Console to see which content is performing and which is not; this 2-3 hour weekly investment keeps small problems from becoming major ones. Seasonal adjustments account for demand that fluctuates through the year: raising velocity before peak seasons and lowering it in slow periods aligns effort with actual demand. For example, B2B SaaS companies typically see 40% higher search volume in Q1 and Q4, which justifies publishing more in those windows. Trend responsiveness means monitoring industry news, search trend spikes, and competitor activity to catch emerging opportunities; tools like Google Trends and SEMrush Topic Research can surface rising keywords, in some cases 4-8 weeks before they reach peak volume. A/B testing publishing times and frequencies on specific platforms reveals your own audience’s patterns; what works for competitors may not work for you, so test different schedules and measure the difference in engagement. Finally, favor consistency over perfection by keeping a schedule you can execute reliably instead of alternating between aggressive pushes and complete silence. Audiences and algorithms reward predictability, so a steady 3 pieces a week is more effective than sporadic 10-piece weeks.
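A weekly audit can start by diffing two ranking snapshots. The sketch assumes you export the average position per URL each week, for instance from the Google Search Console performance report, into plain dictionaries. The function name `flag_declines` and the 3-position threshold are placeholders to tune against your own data.

```python
def flag_declines(last_week: dict[str, float], this_week: dict[str, float],
                  threshold: float = 3.0) -> list[tuple[str, float]]:
    """List URLs whose average position worsened by at least `threshold`, worst first.

    Positions are 1-based, so a larger number means a lower ranking. A URL
    missing from this week's export is treated as an infinite drop.
    """
    flagged = []
    for url, before in last_week.items():
        after = this_week.get(url, float("inf"))
        drop = after - before
        if drop >= threshold:
            flagged.append((url, drop))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


last = {"/a": 4.0, "/b": 8.0, "/c": 2.0}
current = {"/a": 9.5, "/b": 8.5}
for url, drop in flag_declines(last, current):
    print(url, drop)
```

Sorting worst-first puts pages that dropped out of the data entirely at the top of the review list, where they usually deserve the most attention.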

Content velocity is shifting from a pure focus on speed toward a quality-first approach enhanced by AI efficiency, where the goal is to produce better content faster rather than simply more content. Emerging tools build real-time fact-checking, automated citation tracking, and AI-generated content detection directly into publishing workflows, so creators can maintain quality standards while still gaining velocity. The competitive advantage will increasingly go to organizations that integrate AI monitoring into their content strategy, using tools that track not only their own content performance but also how their content is cited, referenced, and used across the web and in AI-generated answers; understanding your content’s influence becomes as important as understanding its rankings. Tracking AI citations and attribution is a growing concern because AI models train on published content, and organizations that understand how their content shapes AI model outputs will gain strategic advantages in future search and discovery ecosystems. The organizations that thrive over the next 24 months will treat content velocity not as a race to publish the most content but as a discipline of publishing the right content at the right pace and to the right quality standards, using AI as a force multiplier for human creativity and expertise rather than a replacement for it.

There's no universal benchmark for how much to publish, but most successful organizations publish 2-4 high-quality pieces weekly after an initial 90-180 day ramp-up period. The key is balancing volume with quality. A commonly cited guideline, the 30% rule, holds that about 30% of your content should be AI-generated or AI-assisted, with the remaining 70% human-created or heavily edited. Your specific velocity should depend on how competitive your industry is, what your audience expects, and what resources you have.

Publishing frequency varies significantly by platform. LinkedIn performs best with 3-5 posts weekly for B2B brands, while TikTok needs a minimum of 3-5 videos weekly to stay in favor with its algorithm. Established blogs should maintain 2-4 comprehensive posts weekly, though new sites benefit from 5-7 weekly posts during the first 90 days to establish topical authority. The most important factor is consistency: a predictable schedule outperforms sporadic high-volume bursts.
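These ranges can live in a small configuration that is checked against a planned weekly calendar. The sketch below encodes only the figures given above. The channel keys and the `check_plan` helper are hypothetical, and reading the TikTok guidance as a minimum with no upper limit is an interpretation of the "3-5 videos weekly minimum" wording.

```python
from typing import Optional

# Weekly ranges from the guidance above; None means no stated upper limit.
WEEKLY_RANGES: dict[str, tuple[int, Optional[int]]] = {
    "linkedin": (3, 5),
    "tiktok": (3, None),         # stated as a minimum
    "blog_established": (2, 4),
    "blog_new_site": (5, 7),     # roughly the first 90 days
}


def check_plan(plan: dict[str, int]) -> dict[str, str]:
    """Label each channel's planned weekly count as 'below', 'within', or 'above' its range."""
    verdicts: dict[str, str] = {}
    for channel, per_week in plan.items():
        low, high = WEEKLY_RANGES[channel]  # KeyError for channels without a benchmark
        if per_week < low:
            verdicts[channel] = "below"
        elif high is not None and per_week > high:
            verdicts[channel] = "above"
        else:
            verdicts[channel] = "within"
    return verdicts


print(check_plan({"linkedin": 2, "blog_established": 3, "tiktok": 8}))
```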

Publishing too much, too fast can hurt rankings. Google's helpful content update actively filters low-quality bulk content, and publishing faster than your site can be indexed, especially when new pages overlap existing ones, leads to keyword cannibalization, with your own pages competing for the same rankings. Excessive AI content without human oversight also tends to show higher bounce rates and weaker engagement signals, which can drag rankings down. The solution is applying the 30% rule and keeping a sustainable publishing pace that leaves room for quality control.

Track AI content separately from human-created content using Google Analytics 4, SEMrush, or Analytify. Monitor key metrics including indexing latency (target: 24-48 hours), engagement rate (target: 3-5% for blog content), ranking velocity (how quickly content reaches page one), and content decay signals (ranking stability over 90 days). Weekly audits of these metrics reveal whether your velocity strategy is delivering actual business results or just inflating vanity metrics.

Content velocity encompasses three dimensions: volume (total pieces published), pace (consistency of publishing), and timeliness (relevance to current demand). Publishing frequency refers only to how often you publish. You can have high publishing frequency but low velocity if your content isn't timely or engaging. Conversely, you can have high velocity with moderate frequency by publishing highly relevant, well-timed content that performs exceptionally well.

Spread it out strategically. The most effective approach is front-loading your content velocity during the initial 90-180 days of a new site or content initiative, publishing 5-7 pieces weekly to establish topical authority. Once you've achieved first-page rankings for primary keywords, scale down to a sustainable maintenance velocity of 2-4 pieces weekly while dedicating 30-40% of effort to updating and reinforcing top-performing content. This approach balances rapid authority building with long-term sustainability.

Consistent, high-velocity publishing signals to both search engines and audiences that your brand is an active, current authority in your space. Search engines tend to favor regularly updated sites with fresh content, which can improve rankings and visibility. Audiences perceive brands that publish consistently as more credible and trustworthy. However, this only works when velocity is paired with quality, because low-quality, high-volume publishing damages authority and engagement. The key is maintaining predictable publishing patterns with content that genuinely serves your audience.

Google Analytics 4 allows you to create custom dimensions for tracking AI content separately. SEMrush provides position tracking and organic research tools for monitoring ranking velocity and performance. Analytify simplifies GA4 analysis with pre-built dashboards for WordPress sites. AmICited specifically tracks how your content is cited and referenced in AI-generated answers across ChatGPT, Perplexity, and Google AI Overviews, providing visibility into your content's influence in the AI ecosystem.
