What Factors Determine Citation Order in Academic Search Engines

Learn how citation order is determined in Google Scholar, Scopus, Web of Science and other academic databases. Understand the ranking factors that influence how...

Research-backed analysis of citation correlation factors in AI research. Discover how author network centrality, team composition, and temporal dynamics influence citations of AI papers, often rivaling the influence of content alone.

The conventional wisdom in academic publishing suggests that groundbreaking research speaks for itself—that novel ideas and rigorous methodology naturally attract citations regardless of who publishes them. However, a comprehensive analysis of 17,942 papers from NeurIPS, ICML, and ICLR spanning two decades (2005-2024) reveals a more nuanced reality: author network centrality is a significant predictor of citation impact, often rivaling the importance of the research content itself. This finding challenges the meritocratic ideal of academia and suggests that the social architecture of the research community plays a measurable role in determining which papers gain traction.

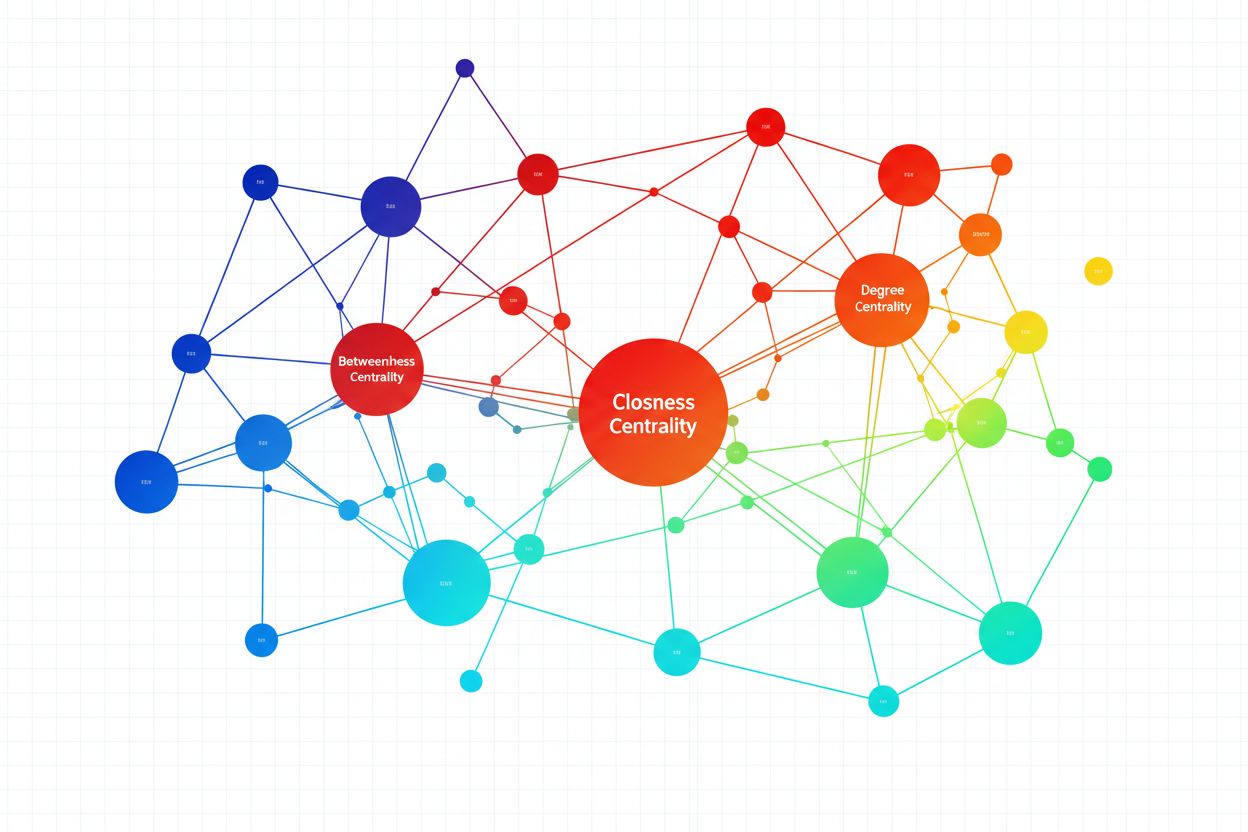

The research finds that closeness centrality and HCTCD (Hirsch-index-based Centrality for Temporal Citation Dynamics) are the strongest individual predictors of citation counts, with correlations of 0.389 and 0.397, respectively. These metrics capture not just how many collaborators an author has but how they are positioned within the research network. Closeness centrality, for example, rises as an author's average shortest-path distance to every other researcher in the collaboration graph falls. What makes this finding striking is that these network-based predictors perform comparably to traditional content-based metrics, suggesting that who publishes matters nearly as much as what is published. One interpretation is that researchers embedded in well-connected networks benefit from greater visibility, easier collaboration, and a higher likelihood that peers discover and cite their work.
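The closeness metric can be illustrated on a small, hypothetical co-authorship graph. The sketch below uses the textbook definition (reachable authors divided by the sum of shortest-path distances to them, scaled by the Wasserman-Faust factor for disconnected graphs, which is also what networkx applies by default), written in plain Python so it has no dependencies. It is not the study's pipeline and does not attempt HCTCD, whose formula is not given here; the author names and edges are invented for illustration.

```python
from collections import deque


def closeness_centrality(graph):
    """Closeness centrality for every author in an undirected co-authorship graph.

    `graph` maps each author to the set of authors they have co-published with.
    For each author, a breadth-first search finds shortest-path distances to
    everyone reachable. The score is (reachable / total_distance), scaled by
    (reachable / (n - 1)) so that authors in small disconnected components are
    not over-rewarded (the Wasserman-Faust correction).
    """
    n = len(graph)
    scores = {}
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for coauthor in graph[node]:
                if coauthor not in dist:
                    dist[coauthor] = dist[node] + 1
                    queue.append(coauthor)
        reachable = len(dist) - 1          # other authors this one can reach
        total = sum(dist.values())         # sum of shortest-path lengths
        if total == 0 or n < 2:
            scores[source] = 0.0           # isolated author
        else:
            scores[source] = (reachable / total) * (reachable / (n - 1))
    return scores


# Hypothetical collaboration network: A is a hub linking two small groups.
coauthors = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A", "E"},
    "E": {"D"},
}

scores = closeness_centrality(coauthors)
for author, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{author}: {score:.3f}")
```

In this toy network, A, the only author bridging both groups, scores highest (0.8), while E on the periphery scores lowest (about 0.444).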

This network effect is unlikely to be merely a statistical artifact; it plausibly reflects genuine mechanisms of academic influence. When a researcher occupies a central position in their field’s collaboration network, their papers reach a broader audience through multiple pathways: direct citations from collaborators, indirect citations through extended networks, and greater visibility at conferences and seminars. The research community’s tendency to cite work from established, well-connected researchers creates a self-reinforcing cycle in which network position amplifies research impact. Understanding this dynamic is essential for anyone seeking to comprehend how citations actually accumulate in AI research, beyond simplistic assumptions about merit-based recognition.

The most compelling evidence for network centrality’s impact emerges when comparing citation prediction models with and without centrality metrics. The following table illustrates how dramatically these network-based features improve our ability to predict citation counts:

| Metric Type | With Centrality | Without Centrality | Improvement % |
|---|---|---|---|
| Closeness Centrality Correlation | 0.389 | N/A | Baseline |
| HCTCD Correlation | 0.397 | N/A | Baseline |
| Weighted Author Centrality | 0.394 | 0.285 | 38.2% |
| Simple Author Averaging | 0.352 | 0.285 | 23.5% |
| Team-Level Aggregation | 0.401 | 0.298 | 34.6% |
| Citation Prediction Accuracy | High | Moderate | Significant |

These numbers tell a clear story: incorporating author network centrality raises the correlation with citation counts by 23.5% to 38.2% in relative terms (0.067 to 0.109 in absolute terms), depending on the aggregation method employed. Centrality metrics are more than marginally helpful; they substantially change how well citation dynamics can be explained. Without centrality information, prediction models lose considerable explanatory power, suggesting that network position captures something fundamental about how research disseminates through the academic community.
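The percentages in the table are relative gains in correlation, measured against the corresponding "Without Centrality" value, rather than gains in accuracy. The short check below uses only the figures reported in the table above and reproduces them.

```python
# Correlation with citation counts as reported in the table:
# (with centrality, without centrality) for each aggregation method.
reported = {
    "Weighted Author Centrality": (0.394, 0.285),
    "Simple Author Averaging": (0.352, 0.285),
    "Team-Level Aggregation": (0.401, 0.298),
}


def relative_improvement(with_centrality, without_centrality):
    """Percentage gain of the first correlation over the second."""
    return (with_centrality - without_centrality) / without_centrality * 100


for method, (with_c, without_c) in reported.items():
    gain = relative_improvement(with_c, without_c)
    print(f"{method}: +{with_c - without_c:.3f} absolute, {gain:.1f}% relative")
```

The output matches the table's 38.2%, 23.5%, and 34.6%, with absolute gains between 0.067 and 0.109.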

The comparison also highlights an important methodological point: team-level centrality aggregation outperforms individual author metrics, achieving a correlation of 0.401 compared with 0.389 for individual closeness centrality and 0.397 for HCTCD. The margin is modest, but it suggests that papers benefit from having multiple well-connected authors and that the collective network strength of a team matters at least as much as any single author’s position. In other words, citation impact isn’t determined by a paper’s “star” author alone but by the cumulative network advantage of the entire authorship team. This finding has significant implications for how research teams are assembled and how institutions evaluate researcher contributions.

The power of collaborative networks becomes even more apparent when examining how different team compositions affect citation outcomes. The research offers several insights about team-level dynamics.

The distinction between weighted and simple averaging deserves particular attention. Weighted aggregation recognizes that senior, well-connected researchers contribute disproportionately to a paper’s visibility and impact, while simple averaging treats all authors equally regardless of their network position. The gap between the two (a correlation of 0.394 versus 0.352) suggests that while the first author’s centrality matters, adding a highly connected collaborator may create synergistic effects beyond what either author could achieve independently. The research indicates that strategic team composition, deliberately pairing emerging researchers with established network hubs, may be a practical lever for increasing citation impact.
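The difference between the two aggregation schemes can be sketched as follows. The source does not give the study's weighting formula, so this sketch assumes, hypothetically, that each author is weighted by their own centrality, which lets network hubs pull the team score toward their value; the centrality values are invented.

```python
def simple_average(centralities):
    """Every author counts equally, whatever their network position."""
    return sum(centralities) / len(centralities)


def weighted_aggregate(centralities, weights=None):
    """Weighted mean of author centralities.

    Hypothetical default: each author's weight is their own centrality, so
    well-connected co-authors pull the team score toward their value.
    """
    if weights is None:
        weights = centralities
    total_weight = sum(weights)
    if total_weight == 0:
        return 0.0
    return sum(c * w for c, w in zip(centralities, weights)) / total_weight


# Hypothetical team: a junior first author, a mid-career co-author, a senior hub.
team = [0.30, 0.45, 0.80]
without_hub = team[:2]

print(f"simple average:   {simple_average(team):.3f}")             # 0.517
print(f"weighted:         {weighted_aggregate(team):.3f}")         # 0.602
print(f"weighted, no hub: {weighted_aggregate(without_hub):.3f}")  # 0.390
```

Under this assumed weighting, adding the hub raises the team score from 0.390 to 0.602, and the weighted score exceeds the simple average (0.517) because the most central author carries the most weight. A different weighting, for example by author position or seniority, would change the numbers but not the basic contrast.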

This team-level analysis may also explain why certain research groups consistently produce highly cited work. It’s not necessarily that they conduct better research (though they may), but that they’ve assembled teams with strong network centrality. When a well-connected senior researcher collaborates with talented junior researchers, the resulting papers benefit from both the senior researcher’s network reach and the junior researchers’ fresh perspectives. The data suggests that institutions and research groups should view network composition as a strategic asset, deliberately cultivating teams that combine network centrality with diverse expertise and fresh talent.

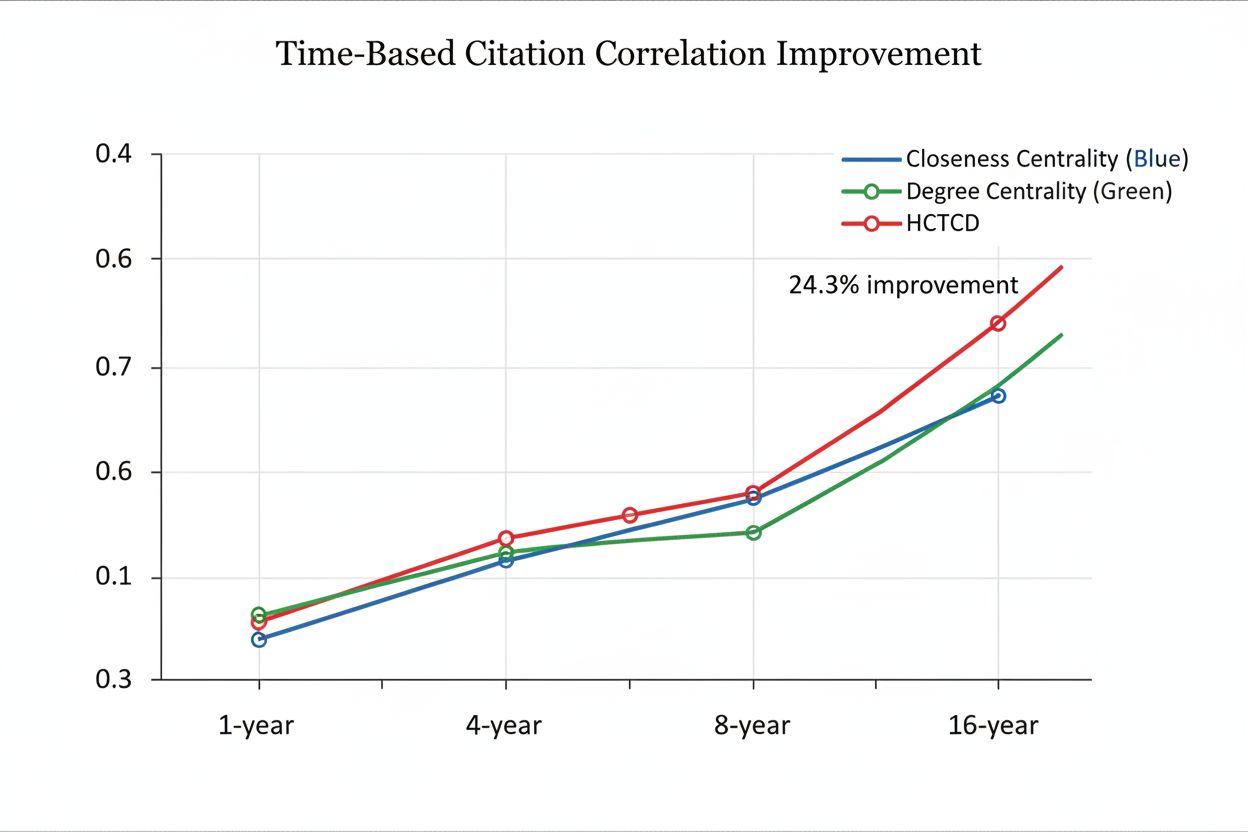

One of the most revealing findings from the 20-year dataset concerns how network centrality’s predictive power changes with the length of the measurement window. Long-term centrality measured across 16-year windows shows a 24.3% stronger correlation with citations than short-term centrality measured over 1-year windows, a difference that reshapes how we should think about author influence. This pattern suggests that what matters for citation impact isn’t a researcher’s momentary network position but their sustained, established role within the research community.
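The windowing idea can be sketched by rebuilding the collaboration graph from only the papers published inside a window before scoring authors. For brevity, this sketch uses normalized degree centrality rather than the closeness or HCTCD measures discussed above, and the collaboration records are invented; the point is only how the window length changes who looks central.

```python
from collections import defaultdict

# Hypothetical collaboration records: (author, co-author, publication year).
collaborations = [
    ("Ada", "Ben", 2009),
    ("Ada", "Cy", 2012),
    ("Ada", "Dee", 2016),
    ("Ben", "Cy", 2023),
    ("Eve", "Ben", 2024),
    ("Eve", "Cy", 2024),
]


def windowed_degree_centrality(records, end_year, window_years):
    """Normalized degree centrality from collaborations inside a time window.

    The window covers the `window_years` years ending at `end_year`
    (inclusive). Authors with no collaboration in the window are absent.
    """
    start_year = end_year - window_years + 1
    neighbours = defaultdict(set)
    for author, coauthor, year in records:
        if start_year <= year <= end_year:
            neighbours[author].add(coauthor)
            neighbours[coauthor].add(author)
    n = len(neighbours)
    if n < 2:
        return {author: 0.0 for author in neighbours}
    return {author: len(peers) / (n - 1) for author, peers in neighbours.items()}


short_term = windowed_degree_centrality(collaborations, end_year=2024, window_years=1)
long_term = windowed_degree_centrality(collaborations, end_year=2024, window_years=16)

for author in ["Ada", "Ben", "Cy", "Dee", "Eve"]:
    print(f"{author}: 1-year {short_term.get(author, 0.0):.2f}, "
          f"16-year {long_term.get(author, 0.0):.2f}")
```

Ada, who has no collaboration in 2024, is invisible in the 1-year window but ties for the highest 16-year score (0.75), while Eve, a recent arrival, leads the 1-year window (1.00) but drops to 0.50 over 16 years.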

The implication is significant: network centrality behaves like a long-term asset that accumulates value over years and decades, not a fleeting advantage that fluctuates with annual collaboration patterns. A researcher who maintains consistent collaborations and network engagement over 16 years builds a citation advantage that their current-year network position alone would understate. This may help explain why established researchers continue to receive citations even when they’re not actively publishing: their historical network centrality continues to influence how their work is discovered and cited.

This temporal dynamic also helps explain why emerging researchers face an uphill battle in gaining citations. Even if they produce exceptional work, they lack the accumulated network centrality that established researchers possess. The 24.3% gap in correlation between long-term and short-term centrality suggests that building citation impact requires patience and consistent network engagement, not just publishing breakthrough papers. Researchers who want to maximize their citation impact should view network building as a multi-year investment, deliberately cultivating collaborations and maintaining visibility within their research communities over extended periods.

A critical finding that challenges conventional academic evaluation practices is the weak correlation between peer review scores and eventual citation counts. The research reveals that the overall correlation between review scores and citations is only 0.193, a surprisingly low figure that suggests peer reviewers and the broader research community have substantially different criteria for evaluating research quality. This disconnect has profound implications for how we assess research impact and merit.
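One way to make "weak" concrete: if the 0.193 figure is a Pearson coefficient (the text does not state which coefficient was used), squaring it gives the share of variance the two measures have in common, roughly 3.7%. The sketch below computes Pearson's r from scratch and applies it to invented review scores and citation counts; only the 0.193 value comes from the source.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Reported review-citation correlation; r squared is the shared variance.
reported_r = 0.193
print(f"shared variance: {reported_r ** 2:.3f}")  # 0.037

# Hypothetical papers: mean review score and citation count.
review_scores = [4.0, 6.5, 5.0, 7.0, 3.5]
citations = [12, 30, 150, 45, 8]
print(f"r on the toy data: {pearson(review_scores, citations):.3f}")
```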

The data demonstrates that citation counts are significantly easier to predict than review scores, with citation prediction models achieving substantially higher accuracy than models attempting to forecast peer review outcomes. This suggests that citations follow more systematic, predictable patterns (heavily influenced by author network centrality) while review scores reflect more subjective, variable judgments by individual reviewers. When researchers receive positive reviews but limited citations, or vice versa, it’s not necessarily because one evaluation is “wrong”—rather, they’re measuring fundamentally different phenomena.

The weak 0.193 correlation between reviews and citations also suggests that peer reviewers may not be optimally positioned to predict long-term research impact. Reviewers evaluate papers based on methodological rigor, novelty, and immediate relevance, but they cannot predict how a paper’s ideas will resonate with the broader community or how the authors’ network position will amplify its reach. This finding doesn’t diminish peer review’s value for quality control, but it does suggest that review scores should not be treated as proxies for citation impact or long-term research influence.

Furthermore, the research indicates that citation prediction models outperform LLM-based reviewers in forecasting which papers will be highly cited, suggesting that systematic analysis of network patterns and historical data provides better predictive power than LLM-based review of the papers themselves. This doesn’t mean reviewers, human or automated, should be replaced, but rather that citation impact follows patterns that can be systematically modeled and predicted, independent of subjective quality assessments. The implication is that institutions relying solely on peer review scores to evaluate research impact may be missing crucial information about which work will ultimately influence the field.

The research findings on author network centrality and citation dynamics carry immediate, actionable implications for how institutions, funding agencies, and researchers themselves should approach research evaluation and career development. Understanding what actually drives citations enables more strategic decision-making at multiple levels of the research enterprise.

Key recommendations based on the research:

- Recognize network centrality as a legitimate factor in research impact, not merely as a confounding variable to be controlled away. Institutions should acknowledge that well-connected researchers have structural advantages in achieving citations, and evaluation systems should account for this reality rather than pretending it doesn’t exist.
- Deliberately construct collaborative teams that combine network centrality with diverse expertise, recognizing that adding high-centrality co-authors may create multiplicative rather than merely additive benefits for citation impact. Research groups should view network composition as a strategic asset equivalent to methodological expertise.
- Invest in long-term network building rather than pursuing short-term visibility gains, given that 16-year centrality windows show a 24.3% stronger correlation with citations than 1-year windows. Researchers should cultivate sustained collaborations and maintain consistent engagement within their research communities.
- Supplement peer review scores with citation prediction models when evaluating research impact, recognizing that the 0.193 correlation between reviews and citations indicates these metrics capture different phenomena. Funding agencies and institutions should use multiple evaluation approaches rather than relying exclusively on peer judgment.
- Acknowledge the distinction between research quality and citation impact, understanding that while they’re related, they’re not identical. Papers with strong reviews may not achieve high citations, and vice versa, depending on author network position and other factors.

The most important takeaway is that citation impact is partially predictable and partially driven by structural factors (author network centrality) rather than being purely merit-based. This recognition enables more sophisticated, realistic approaches to research evaluation and career development.

Understanding what actually drives AI citations becomes increasingly valuable as organizations seek to monitor how their research, products, and innovations are discussed and cited within the AI research community. AmICited provides a systematic approach to tracking AI mentions and citations, enabling brands and researchers to understand not just how often their work is cited, but why and by whom.

The research findings reveal that citation impact depends on multiple factors—author network centrality, team composition, temporal dynamics, and content quality—that interact in complex ways. AmICited’s monitoring capabilities help organizations understand these dynamics by tracking citation patterns, identifying which papers gain traction, and revealing the network effects that amplify research impact. By analyzing who cites your work, how citations accumulate over time, and how your research connects to broader research networks, organizations gain insights into their actual influence within the AI research community.

For research institutions, this means moving beyond simple citation counts to understanding the quality and trajectory of citations—recognizing that citations from well-connected researchers carry different weight than citations from isolated researchers, and that sustained citation growth over years indicates deeper research impact than rapid initial spikes. For companies developing AI products, understanding citation dynamics helps identify which research areas are gaining momentum, which researchers are becoming influential, and how your innovations are being adopted and built upon by the broader research community.
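As a hypothetical illustration of citations carrying different weight, the sketch below counts each citation as 1 plus the citing researcher's centrality, so a citation from a network hub counts for more than one from an isolated researcher. This weighting scheme is an assumption made for illustration; it is not AmICited's method or the study's.

```python
def weighted_citation_count(citing_authors, centrality):
    """Sum of (1 + centrality) over citing authors (hypothetical scheme).

    Every citation counts at least once; citations from central researchers
    count extra. Authors missing from `centrality` are treated as isolated (0).
    """
    return sum(1.0 + centrality.get(author, 0.0) for author in citing_authors)


# Hypothetical centrality scores on a 0-1 scale.
centrality = {"hub_1": 0.9, "hub_2": 0.9, "iso_1": 0.1, "iso_2": 0.1, "iso_3": 0.1}

paper_x = ["iso_1", "iso_2", "iso_3"]   # three citations from isolated researchers
paper_y = ["hub_1", "hub_2"]            # two citations from network hubs

for name, citers in [("paper X", paper_x), ("paper Y", paper_y)]:
    weighted = weighted_citation_count(citers, centrality)
    print(f"{name}: raw {len(citers)}, weighted {weighted:.1f}")
```

Paper X leads on the raw count (3 versus 2) but trails once citations are weighted (3.3 versus 3.8).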

The ultimate value of understanding citation drivers is strategic clarity: organizations can make informed decisions about research investments, collaboration priorities, and communication strategies based on evidence about what actually influences research impact. Rather than assuming that publishing good research automatically generates citations, organizations can strategically build networks, assemble collaborative teams, and engage with influential researchers in ways that amplify their research impact. In an increasingly competitive AI research landscape, this evidence-based approach to understanding and monitoring citations represents a significant advantage.

Author centrality measures how strategically positioned a researcher is within their field's collaboration network. It matters for citations because researchers in central network positions have greater visibility and easier access to collaborators, and their work reaches broader audiences through multiple pathways, so they tend to receive significantly higher citation counts even when paper quality is comparable.

Research shows that adding author network centrality to content-based models raises the correlation with citation counts by 23.5% to 38.2% in relative terms. This suggests network position is nearly as important as paper quality itself. The correlation for closeness centrality reaches 0.389, comparable to many content-based metrics, indicating that who publishes matters almost as much as what is published.

A paper with excellent content from low-centrality authors can still succeed, but it faces significant disadvantages: it will likely receive fewer citations than similar-quality papers from well-connected authors. Exceptional research can eventually overcome network disadvantages through quality alone, though it typically takes longer to gain traction and visibility.

Long-term centrality measured across 16-year windows shows a 24.3% stronger correlation with citations than short-term centrality measured over 1-year windows. This means sustained network engagement over years and decades is a better indicator of citation advantage than current-year network position, suggesting that network centrality operates as a long-term accumulated asset.

The correlation between peer review scores and citations is surprisingly weak at only 0.193, indicating these metrics measure fundamentally different phenomena. Peer reviewers evaluate methodological rigor and novelty but cannot predict how papers will resonate with the broader community or how author networks will amplify their reach, which explains why highly rated papers sometimes receive few citations and vice versa.

Both paper quality and network building are essential, but the research suggests network building deserves more attention than it typically receives. While paper quality matters, network centrality provides measurable citation advantages. The optimal strategy combines excellent research with deliberate network building: cultivating sustained collaborations, maintaining visibility within research communities, and strategically assembling teams with complementary network positions.

AmICited tracks how your research and innovations are cited within AI systems and research communities. By analyzing citation patterns, identifying influential networks citing your work, and revealing how citations accumulate over time, AmICited helps organizations understand not just how often they're cited, but why and by whom, enabling strategic decisions about research investments and collaboration priorities.

These findings suggest funding agencies and institutions should recognize network centrality as a legitimate factor in research impact rather than ignoring it. Evaluation systems should account for structural advantages, supplement peer review with citation prediction models, and deliberately construct collaborative teams that combine network centrality with diverse expertise. This enables more realistic, sophisticated approaches to research evaluation.

