How to Measure AI Search Performance: Essential Metrics and KPIs


Learn how to build a comprehensive AI visibility measurement framework to track brand mentions across ChatGPT, Google AI Overviews, and Perplexity. Discover key metrics, tools, and strategies for measuring AI search visibility.

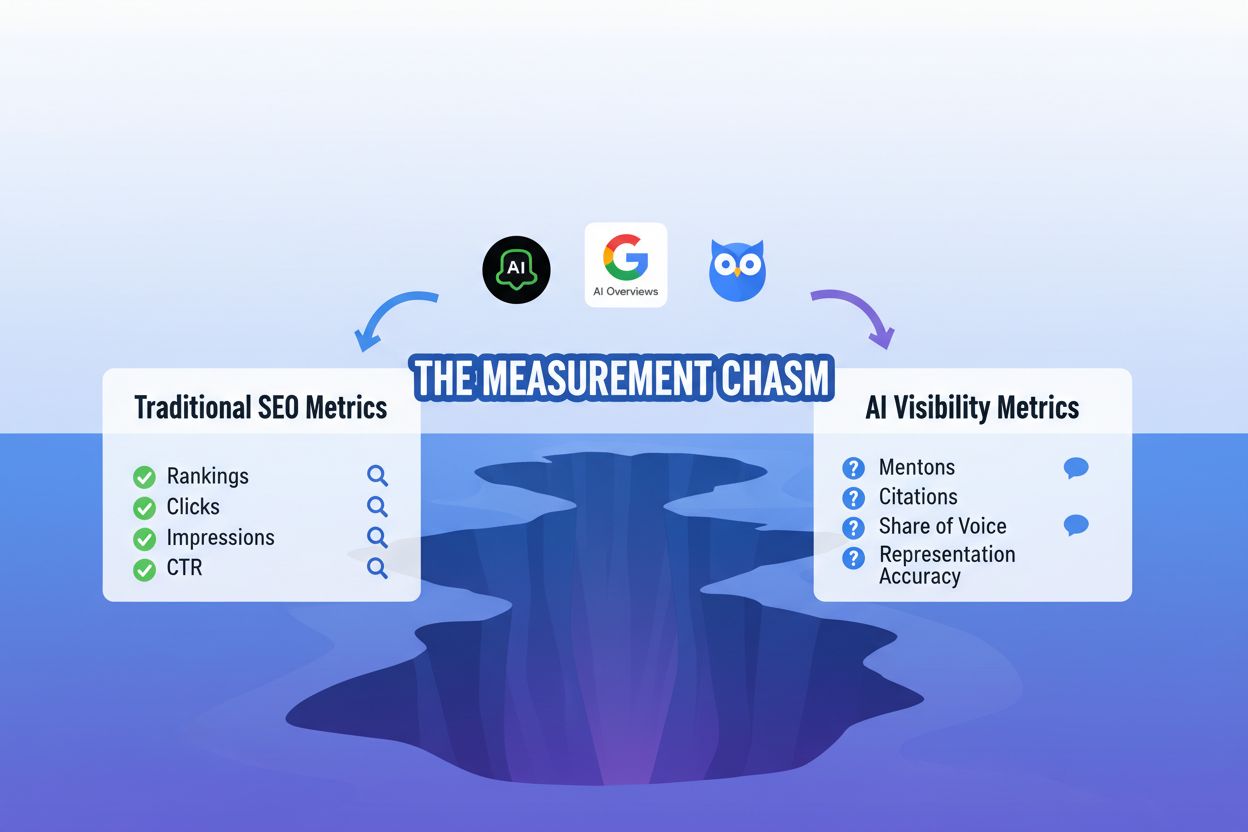

The rise of generative AI search has created what industry experts call the “measurement chasm”: a fundamental gap between traditional SEO metrics and the new reality of AI-powered answers. For decades, marketers relied on SERP tracking to monitor keyword rankings, click-through rates, and organic visibility. These metrics lose much of their value when AI systems like Google’s AI Overviews, ChatGPT, and Perplexity generate synthesized answers that bypass traditional search results. AI visibility operates in a different ecosystem, where your content may be cited, summarized, or paraphrased without ever appearing as a clickable link. Traditional analytics tools cannot track these interactions because they happen inside the AI platform’s interface rather than on your site, so no pageview or referral is recorded. The challenge intensifies because AI systems operate with limited transparency, making it difficult to understand how your content influences AI-generated responses. Organizations that rely solely on traditional SEO metrics risk becoming invisible in the AI-driven search landscape, even when their content is actively being used to power AI answers.

Understanding AI visibility requires a completely new set of metrics designed specifically for how generative systems consume and present information. Rather than tracking clicks and impressions, modern marketers must monitor how often their content is mentioned, cited, or represented in AI responses. The following framework outlines the essential metrics that should form the foundation of any comprehensive AI visibility measurement strategy:

| Metric | Definition | What It Measures | Why It Matters |

|---|---|---|---|

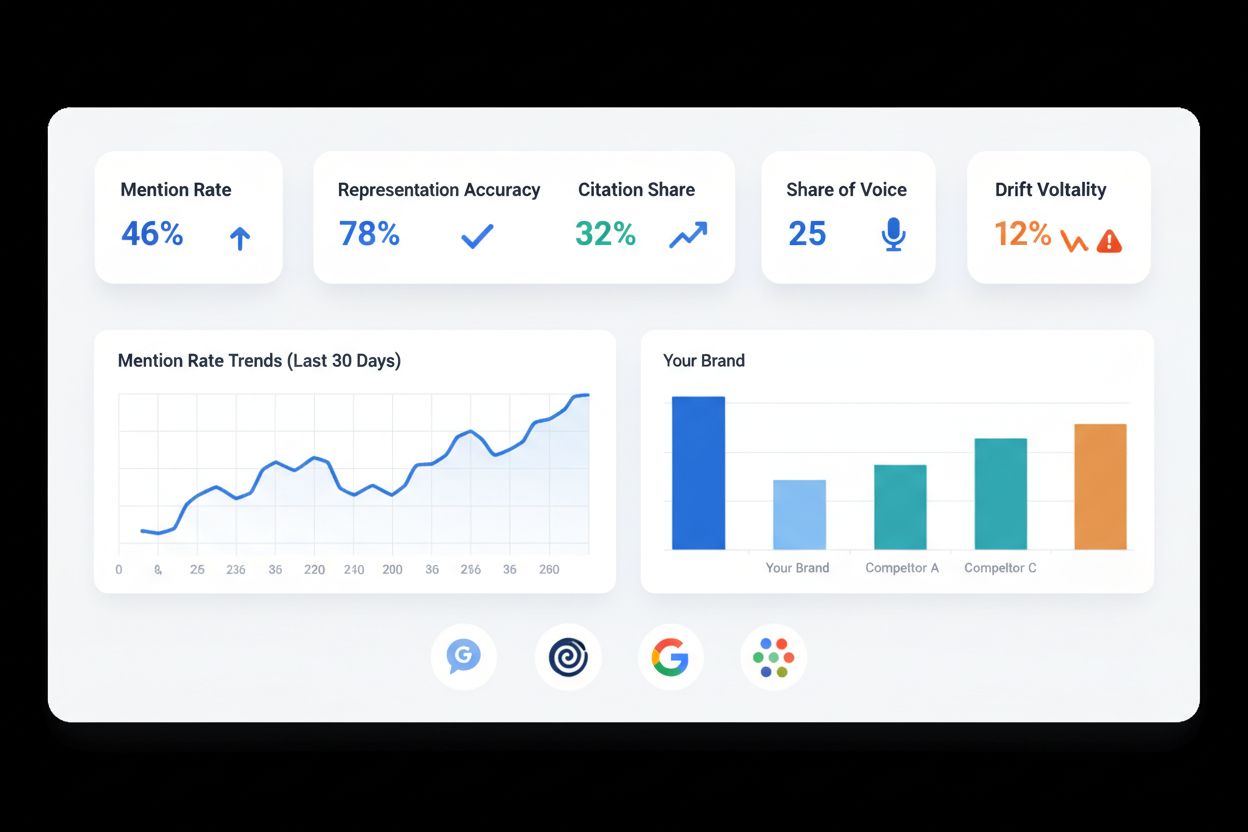

| Mention Rate | Percentage of AI responses that reference your brand, product, or content | Raw visibility in AI-generated answers | Indicates baseline awareness and content relevance to AI systems |

| Representation Accuracy | How faithfully AI systems represent your content, claims, and messaging | Quality and fidelity of AI citations | Ensures brand messaging isn’t distorted or misrepresented |

| Citation Share | Your portion of total citations within a specific topic or query category | Competitive positioning in AI responses | Shows market share within AI-generated content |

| Share of Voice (SOV) | Your brand’s visibility compared to competitors in AI responses | Relative competitive strength | Benchmarks performance against direct competitors |

| Drift & Volatility | Fluctuations in mention rates and representation across AI model updates | System stability and consistency | Reveals how sensitive your visibility is to AI model changes |

These five core metrics work together to create a holistic view of AI visibility, moving beyond simple presence to measure quality, consistency, and competitive positioning. Each metric serves a distinct purpose: mention rate establishes baseline visibility, representation accuracy protects brand integrity, citation share reveals competitive dynamics, share of voice contextualizes performance, and drift monitoring ensures long-term stability. Organizations implementing this framework gain the ability to track not just whether they appear in AI responses, but how they appear and whether that appearance drives meaningful business outcomes. The combination of these metrics provides the foundation for strategic decision-making in an AI-driven search environment.
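As a rough illustration of how three of these metrics could be computed from a sample of logged AI answers, here is a minimal Python sketch. The `AIResponse` record shape, the brand names, and the `.example` domains are all hypothetical; real monitoring tools may define and weight these metrics differently.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AIResponse:
    """One sampled AI answer (hypothetical record shape, not a real tool's schema)."""
    query: str
    platform: str
    mentioned_brands: set[str] = field(default_factory=set)
    cited_domains: list[str] = field(default_factory=list)


def mention_rate(responses, brand):
    """Fraction of responses that mention `brand` at least once."""
    if not responses:
        return 0.0
    hits = sum(1 for r in responses if brand in r.mentioned_brands)
    return hits / len(responses)


def citation_share(responses, domain):
    """Fraction of all citations in the sample that point at `domain`."""
    counts = Counter(d for r in responses for d in r.cited_domains)
    total = sum(counts.values())
    return counts[domain] / total if total else 0.0


def share_of_voice(responses, brand, competitors):
    """Brand mentions divided by mentions of the brand plus named competitors."""
    tracked = {brand, *competitors}
    counts = Counter(
        b for r in responses for b in r.mentioned_brands if b in tracked
    )
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


# Illustrative sample data only.
sample = [
    AIResponse("best crm", "chatgpt", {"Acme", "Globex"},
               ["acme.example", "globex.example"]),
    AIResponse("best crm", "perplexity", {"Globex"}, ["globex.example"]),
    AIResponse("crm pricing", "chatgpt", {"Acme"},
               ["acme.example", "reviews.example"]),
    AIResponse("crm for startups", "gemini", set(), []),
]
```

With this sample, `mention_rate(sample, "Acme")` is 0.5 (two of four answers), `citation_share(sample, "acme.example")` is 0.4 (two of five citations), and `share_of_voice(sample, "Acme", ["Globex"])` is 0.5. Representation accuracy is left out because it needs human or model-based judgment rather than simple counting.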

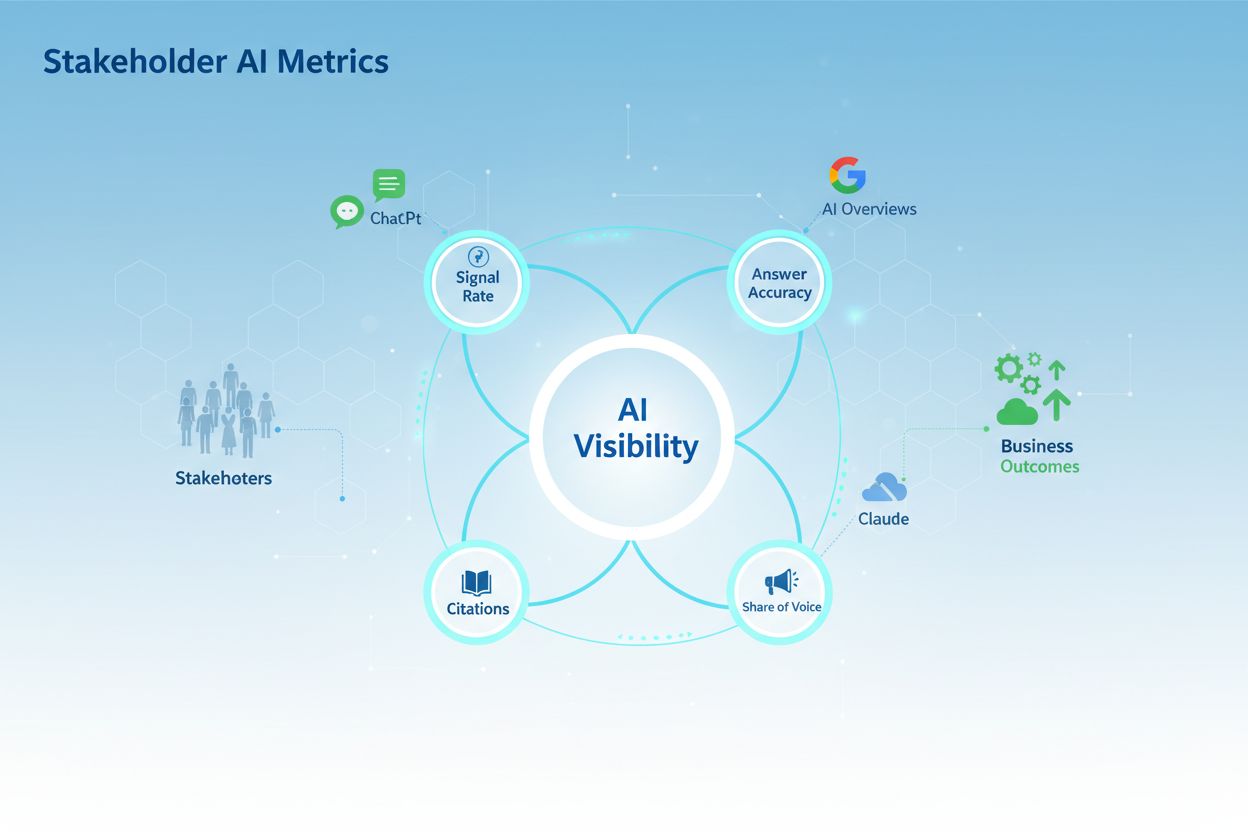

Effective AI visibility measurement requires a structured, hierarchical approach that captures data at multiple levels of the customer journey. Rather than treating all metrics equally, successful organizations implement a three-tier measurement stack that flows from inputs through channels to ultimate business performance:

Input Metrics (Tier 1): These foundational metrics measure the raw material that feeds AI systems. Examples include content freshness, keyword optimization, structured data implementation, and content comprehensiveness. Input metrics answer the question: “Are we giving AI systems the information they need to cite us?” Tools like Semrush and SE Ranking help track these upstream factors.

Channel Metrics (Tier 2): This middle tier captures how AI systems actually process and present your content. Key examples include mention rate, representation accuracy, citation share, and share of voice across different AI platforms (Google AI Overviews, ChatGPT, Perplexity, Gemini, Bing Copilot). These metrics directly measure AI visibility and require specialized monitoring tools like AmICited.com or Profound.

Performance Metrics (Tier 3): The top tier connects AI visibility to business outcomes including traffic, leads, conversions, and revenue. This tier answers the critical question: “Does AI visibility actually drive business results?” Performance metrics might include AI-sourced traffic, cost per acquisition from AI channels, and revenue attribution.

This funnel approach ensures that organizations understand not just whether they’re visible in AI systems, but why visibility matters and how it connects to business success. By implementing all three tiers, teams can identify bottlenecks. Strong input metrics paired with weak channel metrics suggest that AI systems are not picking up or surfacing the content, while strong channel metrics paired with weak performance metrics indicate that visibility isn’t translating into business value. The three-tier stack transforms AI visibility from an abstract concept into a concrete, measurable business discipline.

Establishing a robust data collection infrastructure is essential for reliable AI visibility measurement, requiring both technological investment and operational discipline. Organizations need to implement automated monitoring systems that continuously track mentions, citations, and representations across multiple AI platforms—a task that manual testing cannot sustain at scale. The technical foundation typically includes API integrations with AI platforms (where available), web scraping tools for capturing AI-generated responses, and data warehousing solutions to store and analyze the collected information. AmICited.com provides an integrated platform that automates much of this complexity, offering pre-built connectors to major AI systems and eliminating the need for custom development. Beyond automation, organizations should establish baseline testing protocols where team members periodically query AI systems with target keywords and topics, documenting responses to validate automated tracking accuracy. The data pipeline must include quality assurance checkpoints to identify and correct tracking errors, as even small inaccuracies compound over time. Finally, successful implementations establish clear data governance policies defining who owns different metrics, how frequently data is refreshed, and what constitutes actionable changes in the data.

An effective AI visibility dashboard must serve multiple stakeholders with different information needs and decision-making responsibilities, requiring persona-based design that goes far beyond generic metric displays. CMOs need executive-level summaries showing AI visibility trends, competitive positioning, and business impact—typically visualized through trend lines, competitive benchmarks, and revenue attribution. SEO Leads require detailed metric breakdowns including mention rates by query category, representation accuracy scores, and platform-specific performance, often displayed through heat maps and detailed tables. Content Leaders benefit from content-level dashboards showing which pieces drive AI citations, how often specific claims are cited accurately, and which topics generate the most AI visibility. Product Marketing teams need competitive intelligence views comparing their share of voice against specific competitors and tracking how product positioning appears in AI responses. Beyond persona-specific views, modern dashboards should include real-time alerting that notifies teams when mention rates drop significantly, when representation accuracy issues emerge, or when competitors gain substantial share of voice. Integration with existing analytics platforms like Google Analytics and Looker ensures AI visibility metrics sit alongside traditional performance data, enabling teams to correlate AI visibility with downstream business metrics. The most effective dashboards balance comprehensiveness with simplicity, providing enough detail for deep analysis while remaining accessible to non-technical stakeholders.
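The alerting rule described above (notify when mention rate drops significantly) could look something like the sketch below. The function name and the 20% relative-drop threshold are assumptions chosen for illustration; what counts as "significant" should be tuned to your own data.

```python
from statistics import mean


def mention_rate_alert(history, current, max_relative_drop=0.2):
    """Return an alert message if `current` fell too far below the trailing mean.

    history: recent mention rates between 0 and 1, oldest first.
    max_relative_drop: assumed threshold; 0.2 means a 20% relative drop.
    Returns None when no alert is warranted.
    """
    if not history:
        return None
    baseline = mean(history)
    if baseline == 0:
        return None  # nothing to drop from
    drop = (baseline - current) / baseline
    if drop >= max_relative_drop:
        return f"Mention rate down {drop:.0%} vs trailing mean {baseline:.0%}"
    return None
```

For example, with a trailing history of `[0.40, 0.42, 0.38]`, a current rate of 0.30 triggers an alert, while 0.39 does not. A relative threshold is used here so the same rule behaves sensibly for both high-visibility and low-visibility query sets.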

The modern AI landscape includes numerous competing platforms, each with distinct architectures, update cycles, and response patterns, requiring multi-engine tracking strategies that account for these differences. Google AI Overviews dominate search volume but operate within Google’s ecosystem; ChatGPT reaches millions of daily users but operates independently; Perplexity specializes in research queries; Gemini integrates with Google’s ecosystem; and Bing Copilot serves enterprise users. Each platform requires separate monitoring because they cite sources differently, update at different frequencies, and serve different user intents. Organizations must also consider geographic and market-specific variations, as AI systems often produce different responses based on user location, language, and regional content availability. Compliance and brand safety become critical considerations when tracking AI visibility—organizations need to monitor not just whether they’re cited, but whether citations occur in appropriate contexts and whether AI systems misrepresent their content. The challenge intensifies because AI model updates can dramatically shift visibility overnight; a model update might change how systems weight sources, cite information, or generate responses, requiring flexible measurement systems that can adapt to these changes. Successful implementations establish baseline metrics before major platform updates, then track changes post-update to understand the impact. Tools like AmICited.com simplify multi-engine tracking by providing unified monitoring across platforms, eliminating the need to manually check each system individually.
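One simple way to compare a pre-update baseline against post-update measurements is to summarize each window by its mean and spread. The sketch below is an assumption about how drift and volatility might be quantified (mean shift and population standard deviation); it is not a standard industry definition.

```python
from statistics import mean, pstdev


def drift_report(baseline_rates, post_update_rates):
    """Summarize the change in mention rate around a model update.

    Both arguments are non-empty lists of mention rates (0..1) measured on
    the same query set, before and after the update respectively.
    """
    before = mean(baseline_rates)
    after = mean(post_update_rates)
    return {
        "baseline_mean": before,
        "post_update_mean": after,
        "drift": after - before,  # negative means visibility fell
        "baseline_volatility": pstdev(baseline_rates),
        "post_update_volatility": pstdev(post_update_rates),
    }
```

Running one report per platform keeps a shift on a single engine from being averaged away by stable results on the others.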

Measuring AI visibility means nothing without a clear process for translating metrics into strategic action, requiring structured optimization workflows that connect data insights to content and product decisions. When mention rate metrics reveal that competitors receive more citations for specific topics, teams should launch content experimentation frameworks testing different approaches—perhaps more comprehensive coverage, different structural formats, or stronger claims backed by original research. Representation accuracy metrics that show your content is frequently misrepresented should trigger content audits and rewrites emphasizing clarity and precision. Share of voice analysis revealing competitive gaps should inform content strategy adjustments, directing resources toward high-opportunity topics where visibility gains are achievable. Beyond content optimization, AI visibility metrics enable competitive intelligence applications—tracking how competitors’ positioning evolves in AI responses, identifying emerging topics where they’re gaining visibility, and understanding which content types generate the most citations. The most sophisticated organizations connect AI visibility directly to revenue by tracking which AI-sourced traffic converts best, which topics drive the highest-value customers, and which visibility improvements correlate with revenue growth. This requires integrating AI visibility metrics with CRM and revenue systems, creating feedback loops where visibility improvements are validated against business outcomes. Organizations that master this workflow transform AI visibility from a vanity metric into a core driver of marketing ROI.

Despite the importance of AI visibility measurement, organizations encounter significant obstacles that can undermine data quality and strategic decision-making if not properly addressed. AI system variability presents perhaps the greatest challenge—the same query produces different responses across different times, user sessions, and geographic locations, making it difficult to establish consistent baselines. Solutions include implementing statistical sampling methodologies that account for natural variation, establishing confidence intervals around metrics, and tracking trends rather than absolute values. Limited platform transparency means most AI companies don’t publicly disclose how they select sources, weight citations, or update their systems, forcing organizations to reverse-engineer these processes through empirical testing. Multi-source response attribution complicates measurement when AI systems synthesize information from multiple sources without clearly indicating which source contributed which information. Advanced solutions use natural language processing and semantic analysis to infer source attribution even when systems don’t explicitly cite sources. Privacy and terms of service constraints limit how aggressively organizations can monitor AI systems—some platforms prohibit automated querying, requiring organizations to work within official APIs or accept limitations on data collection frequency. Model update unpredictability means visibility can shift dramatically without warning, requiring flexible measurement systems that can quickly adapt to new baselines. Organizations addressing these challenges typically combine multiple data collection methods (automated monitoring, manual testing, API data), implement robust quality assurance processes, and maintain detailed documentation of methodology changes to ensure measurement consistency over time.
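To make the confidence-interval idea concrete, here is a minimal sketch that treats each sampled response as a yes/no trial (brand mentioned or not) and computes a Wilson score interval for the mention rate. The choice of the Wilson interval is an assumption made for this example; other interval methods would also be reasonable.

```python
import math


def wilson_interval(mentions, samples, z=1.96):
    """Wilson score interval for a mention rate.

    mentions: number of sampled responses that mentioned the brand.
    samples: total number of sampled responses.
    z: normal quantile; 1.96 corresponds to roughly 95% confidence.
    """
    if samples == 0:
        return (0.0, 1.0)  # no data, so no information
    p = mentions / samples
    denom = 1 + z**2 / samples
    center = (p + z**2 / (2 * samples)) / denom
    half = z * math.sqrt(
        p * (1 - p) / samples + z**2 / (4 * samples**2)
    ) / denom
    return (max(0.0, center - half), min(1.0, center + half))
```

For 40 mentions in 100 sampled responses, the interval is roughly 0.31 to 0.50, which shows how wide the uncertainty is at that sample size. A week-over-week move from 40% to 44% would sit well inside it.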

The AI landscape evolves rapidly, with new platforms emerging, existing systems updating frequently, and measurement best practices still being established, requiring organizations to build flexible, adaptive measurement systems rather than rigid frameworks. Successful implementations prioritize modular architecture in their measurement infrastructure, using APIs and integrations that can accommodate new AI platforms without requiring complete system rebuilds. Rather than optimizing exclusively for current platforms like Google AI Overviews and ChatGPT, forward-thinking organizations monitor emerging systems and prepare measurement approaches before these platforms reach mainstream adoption. Emerging metrics and methodologies continue to evolve as the industry matures—concepts like “response quality” and “user engagement with AI-cited content” may become as important as mention rate and citation share. Organizations should establish regular review cycles (quarterly or semi-annually) to reassess their measurement framework, incorporating new metrics and retiring outdated ones as the landscape shifts. Long-term strategic considerations include building organizational capabilities around AI visibility measurement rather than relying on point solutions, developing internal expertise that can adapt to platform changes, and establishing measurement governance that ensures consistency as teams and tools evolve. The organizations that thrive in the AI-driven search era will be those that view measurement not as a static checklist but as a continuous learning process, regularly testing new approaches, validating assumptions against real data, and remaining agile enough to shift strategies as the AI landscape transforms.

Traditional SEO visibility focuses on rankings, clicks, and impressions from search engine results pages. AI visibility measures how often your brand is mentioned, cited, or represented in AI-generated answers from systems like ChatGPT and Google AI Overviews. While traditional SEO tracks clicks, AI visibility often involves zero-click interactions where users get their answer without visiting your site, but your content still influences the response.

For critical topics and competitive queries, daily monitoring is ideal to catch sudden changes from AI model updates. For broader tracking, weekly monitoring provides sufficient insight into trends while reducing operational overhead. Establish baseline metrics before major platform updates, then track changes post-update to understand impact. Most organizations find that weekly reviews combined with daily alerts for significant changes provide the right balance.

Start with the four major platforms: Google AI Overviews (largest reach), ChatGPT (highest daily users), Perplexity (research-focused), and Gemini (enterprise adoption). Bing Copilot is worth monitoring for enterprise audiences. The priority depends on your target audience—B2B companies should emphasize ChatGPT and Perplexity, while consumer brands should prioritize Google AI Overviews. Tools like AmICited.com simplify multi-engine tracking by monitoring all platforms simultaneously.

Start by segmenting your analytics to identify traffic from AI-sourced queries. Track conversions from these segments separately to understand their value. Use attribution modeling to connect visibility improvements to downstream business metrics like leads and revenue. Monitor branded search volume spikes following increases in AI citations, as this indicates brand lift. The most sophisticated approach integrates AI visibility metrics directly with CRM and revenue systems to create complete feedback loops.
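A minimal sketch of the segmentation step, assuming you can export session records with a referrer URL and a conversion flag. The hostname list is an assumption and should be checked against the referrers that actually appear in your analytics. Clicks from Google AI Overviews may arrive with an ordinary Google referrer and so may not be separable this way.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames; verify against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return the AI platform name for a referrer URL, or None if not recognized."""
    host = (urlparse(referrer_url).hostname or "").lower()
    return AI_REFERRER_HOSTS.get(host)


def conversion_rate_by_source(sessions):
    """Compute conversion rate per source.

    sessions: iterable of (referrer_url, converted) pairs, where `converted`
    is a bool. Unrecognized referrers are grouped under "other".
    """
    totals = {}
    for referrer, converted in sessions:
        source = classify_referrer(referrer) or "other"
        visits, wins = totals.get(source, (0, 0))
        totals[source] = (visits + 1, wins + int(converted))
    return {s: wins / visits for s, (visits, wins) in totals.items()}
```

Comparing the resulting per-source rates against the "other" segment gives a first, rough view of whether AI-sourced visitors convert differently from the rest of your traffic.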

Mention rates vary significantly by industry and topic competitiveness. A 30-50% mention rate across your target query set is generally considered strong, while anything above 50% indicates excellent visibility. However, benchmarking against competitors is more valuable than absolute numbers: if competitors average a 60% mention rate and you're at 40%, that's a clear optimization opportunity. Use tools like AmICited.com to track competitor mention rates and establish realistic benchmarks for your category.

Establish baseline metrics before major platform updates, then track changes post-update to quantify impact. Some visibility drops are temporary as models reindex content, while others indicate structural changes in how systems weight sources. Implement statistical confidence intervals around metrics to distinguish meaningful changes from normal variation. Document all major platform updates and their effects on your visibility to build institutional knowledge about how changes typically impact your brand.
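To separate a meaningful post-update change from normal variation, one option is a two-proportion z-test on mention counts before and after the update. This is a sketch under the assumption that sampled responses are roughly independent, which is only approximately true when the same queries are re-run.

```python
import math


def two_proportion_z(hits_before, n_before, hits_after, n_after):
    """Two-sided z-test for a change in mention rate.

    hits_*: number of sampled responses that mentioned the brand.
    n_*: total sampled responses in each window (must be > 0).
    Returns (z, p_value); a small p_value suggests the change is unlikely
    to be sampling noise alone.
    """
    p1 = hits_before / n_before
    p2 = hits_after / n_after
    pooled = (hits_before + hits_after) / (n_before + n_after)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if se == 0:
        return 0.0, 1.0  # both windows are all hits or all misses
    z = (p2 - p1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value
```

For instance, a fall from 90 mentions in 200 responses to 60 in 200 gives a z-score of about -3.1 and a p-value well below 0.01, while a fall from 90 to 88 is indistinguishable from noise.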

Manual testing is free—create a set of 20-50 target queries and periodically test them across AI platforms, logging results in a spreadsheet. This provides baseline data without cost. However, manual testing doesn't scale beyond a few hundred queries. For comprehensive tracking, paid tools like AmICited.com, Profound, or Semrush's AI Visibility Toolkit offer automation and multi-engine monitoring. Most organizations find that the time savings and data quality improvements justify the investment.
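If you prefer a plain CSV file to a hand-edited spreadsheet, the manual log could be kept with a small helper like the one below. The column names and the `log_manual_test` function are assumptions for illustration; any consistent layout works.

```python
import csv
from datetime import date
from pathlib import Path

# Assumed column layout for the log; adjust to your own needs.
FIELDS = ["date", "platform", "query", "brand_mentioned", "cited_url", "notes"]


def log_manual_test(path, platform, query, brand_mentioned,
                    cited_url="", notes="", day=None):
    """Append one manual test result to a CSV file.

    Writes the header row first if the file is new or empty.
    `day` defaults to today's date.
    """
    path = Path(path)
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "date": (day or date.today()).isoformat(),
            "platform": platform,
            "query": query,
            "brand_mentioned": "yes" if brand_mentioned else "no",
            "cited_url": cited_url,
            "notes": notes,
        })
```

A call such as `log_manual_test("ai_checks.csv", "ChatGPT", "best crm", True)` appends one row, and the resulting file opens directly in any spreadsheet application.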

Initial visibility improvements can appear within 2-4 weeks as AI systems re-index updated content. However, significant share of voice gains typically require 6-12 weeks as you build content authority and compete for citations. The timeline depends on topic competitiveness—less competitive topics show faster improvements. Establish baseline metrics immediately, then track weekly to identify trends. Most organizations see measurable improvements within 30 days and substantial gains within 90 days of focused optimization.

