LLM Meta Answers


Learn how to create LLM meta answers that AI systems cite. Discover structural techniques, answer density strategies, and citation-ready content formats that increase visibility in AI search results.

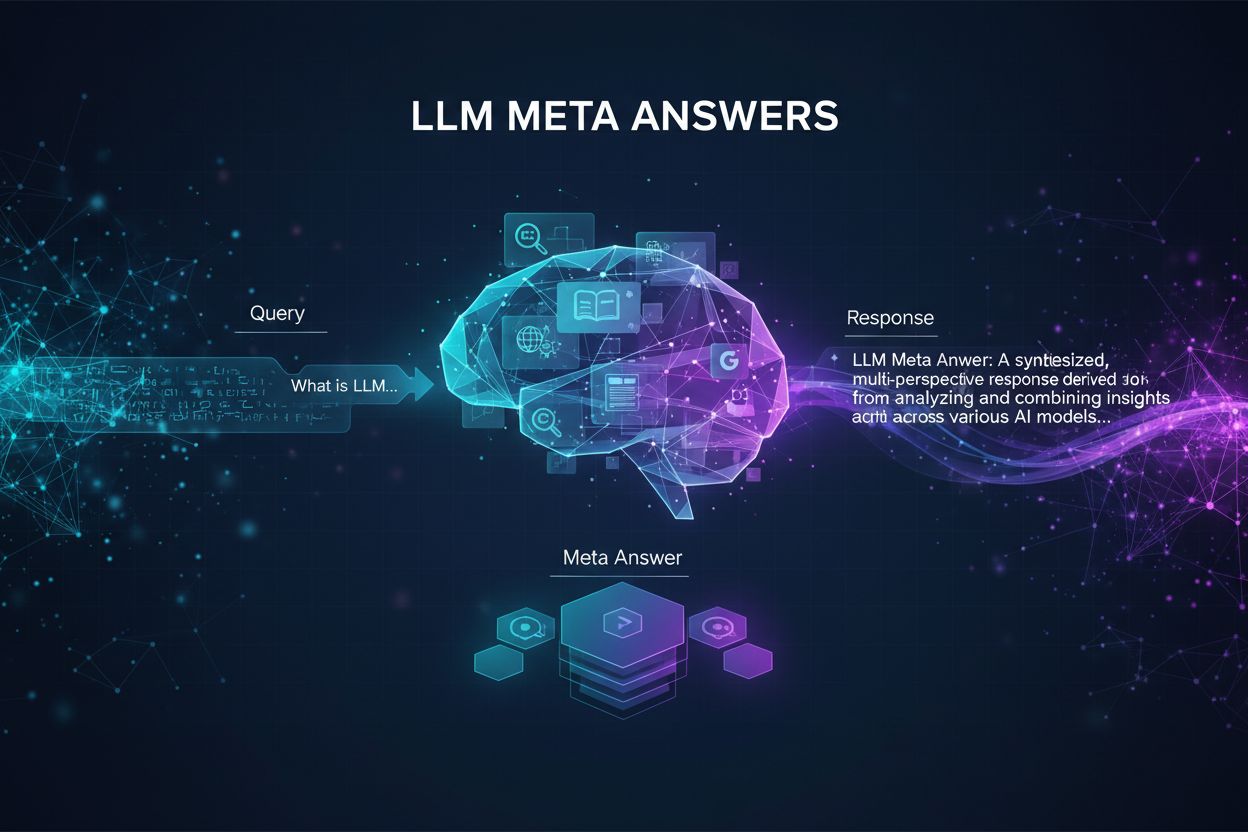

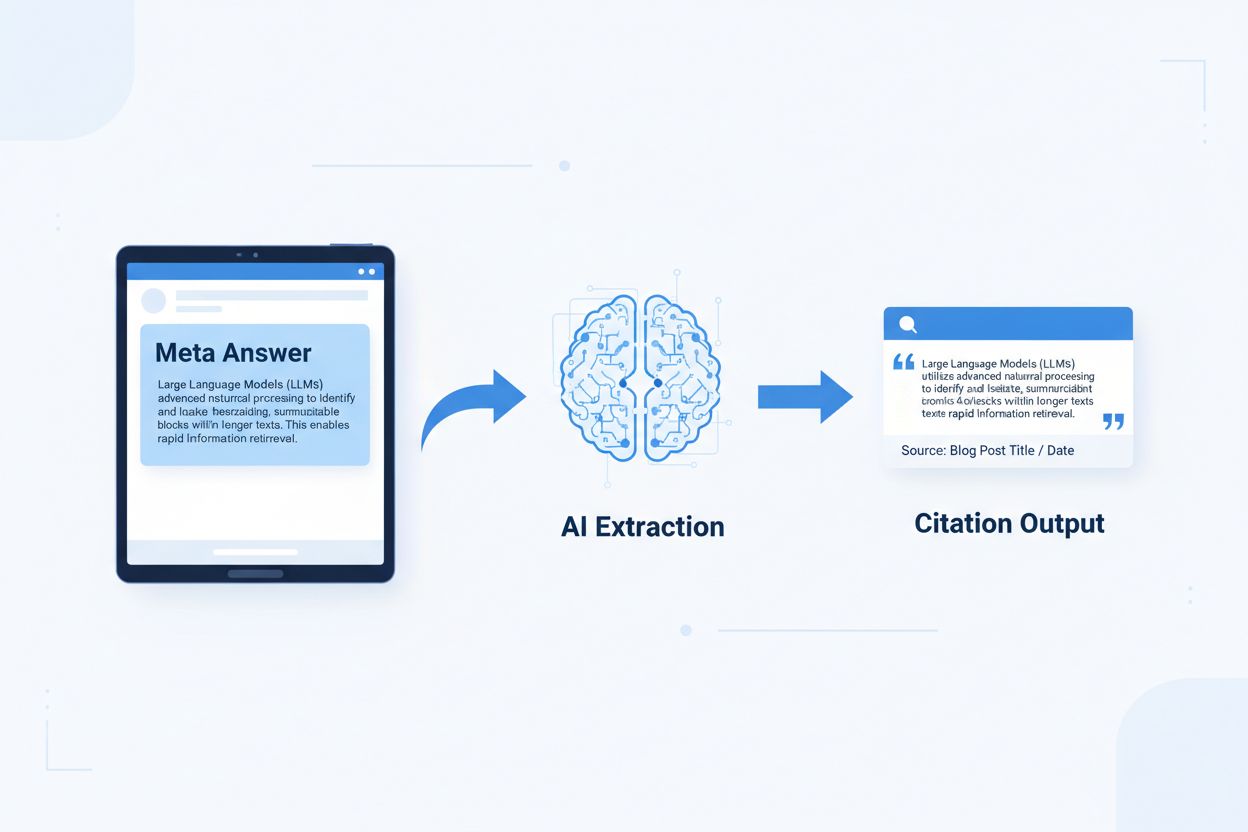

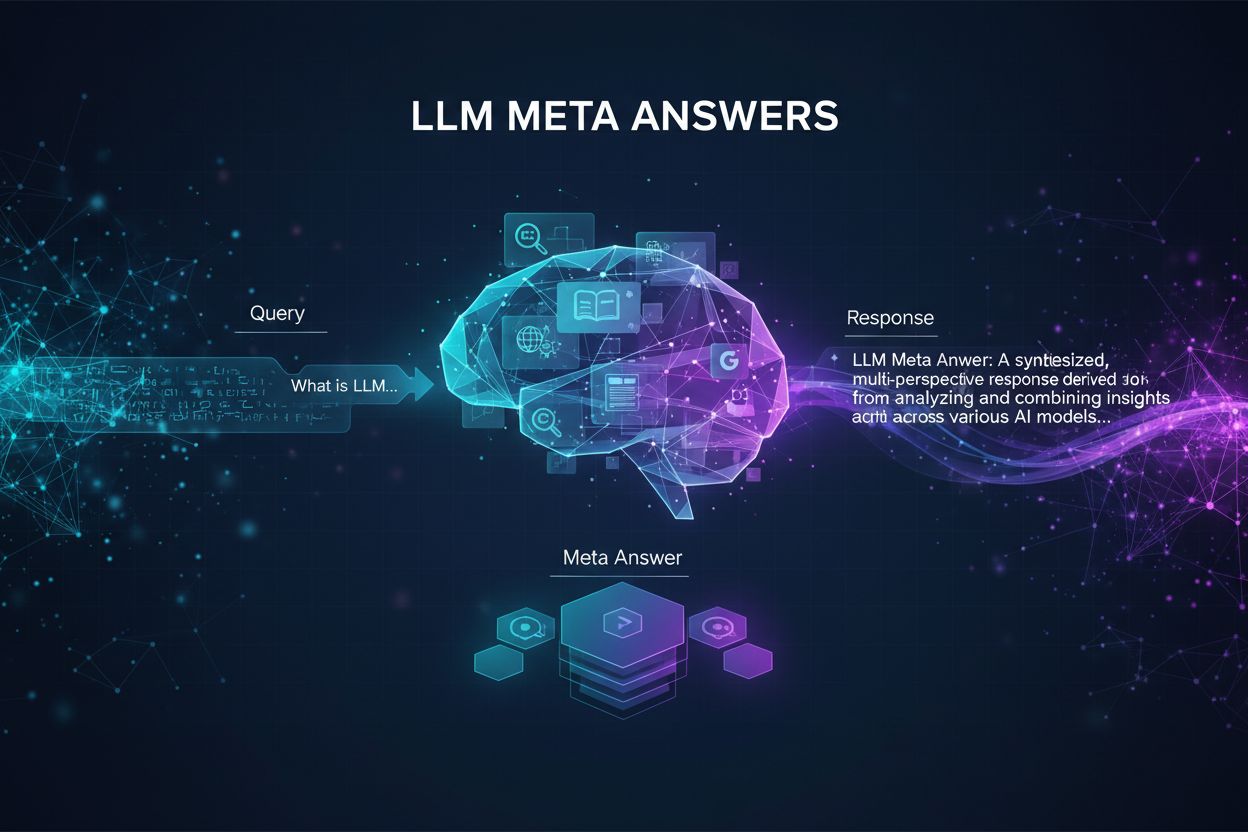

LLM meta answers are self-contained, AI-optimized content blocks designed to be extracted and quoted directly by language models without requiring additional context. Unlike traditional web content that relies on navigation, headers, and surrounding context for meaning, meta answers function as standalone insights that maintain complete semantic value when isolated. The distinction matters because modern AI systems don’t read websites the way humans do—they parse content into chunks, evaluate relevance, and extract passages to support their responses. When AI encounters well-structured meta answers, it can confidently cite them because the information is complete, verifiable, and contextually independent. Research from Onely indicates that content optimized for AI citation receives 3-5x more mentions in LLM outputs compared to traditionally formatted content, directly impacting brand visibility in AI-generated responses. This shift represents a fundamental change in how content performs: instead of competing for search rankings, meta answers compete for inclusion in AI responses. Citation monitoring platforms like AmICited.com now track these AI mentions as a critical performance metric, revealing that organizations with citation-ready content see measurable increases in AI-driven traffic and brand authority. The connection is direct—content structured as meta answers gets cited more frequently, which increases brand visibility in the AI-first information landscape.

Citation-ready content requires specific structural elements that signal to AI systems: “This is a complete, quotable answer.” The most effective meta answers combine clear topic sentences, supporting evidence, and self-contained conclusions within a single logical unit. These elements work together to create what AI systems recognize as extractable knowledge—information that can stand alone without requiring readers to visit the source page. The structural approach differs fundamentally from traditional web content, which often fragments information across multiple pages and relies on internal linking to create context.

| Citation-Ready Element | Why AI Systems Prefer It |
|---|---|
| Topic sentence with claim | Immediately signals the answer’s core value; AI can assess relevance in first 20 tokens |
| Supporting evidence (data/examples) | Provides verifiable backing; increases confidence in citation accuracy |
| Specific metrics or statistics | Quantifiable claims are more likely to be quoted; reduces ambiguity |
| Definition or explanation | Ensures standalone comprehension; AI doesn’t need external context |
| Actionable conclusion | Signals completeness; tells AI systems the answer is finished |
| Source attribution | Builds trust; AI systems prefer citing content with clear provenance |

Implementation tips for maximum AI extractability:

Optimal chunk size for AI extraction falls between 256-512 tokens, roughly equivalent to 2-4 well-structured paragraphs. This range represents the sweet spot where AI systems can extract meaningful information without losing context or including irrelevant material. Chunks smaller than 256 tokens often lack sufficient context for confident citation, while chunks exceeding 512 tokens force AI systems to summarize or truncate, reducing direct quotability. Paragraph-based chunking—where each paragraph represents a complete thought—outperforms arbitrary token-based splitting because it preserves semantic coherence and maintains the logical flow that AI systems use to evaluate relevance.
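The paragraph-based chunking described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names are hypothetical, and the token count is approximated as roughly 0.75 words per token, which is an assumption. A real pipeline would use the tokenizer of the model it targets.

```python
import math

# Assumption: a rough heuristic of about 0.75 words per token, not a real tokenizer.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def chunk_by_paragraph(text: str, max_tokens: int = 512) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_tokens (estimated).

    Paragraphs are never split, so a single paragraph that exceeds
    max_tokens on its own is kept whole as an oversized chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for para in paragraphs:
        para_tokens = estimate_tokens(para)
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and current_tokens + para_tokens > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += para_tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Because the splitter only ever cuts at blank lines between paragraphs, each chunk ends where a complete thought ends, which is the property the paragraph-based approach relies on.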

Good chunking preserves semantic boundaries:

✓ GOOD: "Citation-ready content requires specific structural elements. The most effective meta answers combine clear topic sentences, supporting evidence, and self-contained conclusions within a single logical unit. These elements work together to create what AI systems recognize as extractable knowledge."

✗ BAD: "Citation-ready content requires specific structural elements that signal to AI systems: 'This is a complete, quotable answer.' The most effective meta answers combine clear topic sentences, supporting evidence, and self-contained conclusions within a single logical unit. These elements work together to create what AI systems recognize as extractable knowledge—information that can stand alone without requiring readers to visit the source page. The structural approach differs fundamentally from traditional web content, which often fragments information across multiple pages and relies on internal linking to create context."

The good example maintains semantic coherence and stops at a natural conclusion. The bad example combines multiple ideas, forcing AI systems to either truncate mid-thought or include irrelevant context. Overlap strategies—where the final sentence of one chunk previews the next—help AI systems understand content relationships without losing extractability. Practical checklist for chunking optimization: Does each chunk answer a single question? Can it be understood without reading surrounding paragraphs? Does it contain 256-512 tokens? Does it end at a natural semantic boundary?
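Two of the four checklist questions can be checked mechanically: the token range and the ending boundary. The sketch below shows one way to do that. The function names are hypothetical, the token estimate assumes about 0.75 words per token, and "natural semantic boundary" is reduced to "ends with sentence-final punctuation", which is a simplification. Whether a chunk answers a single question and stands alone still needs a human read.

```python
import math

# Assumption: a rough heuristic of about 0.75 words per token, not a real tokenizer.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def check_chunk(chunk: str, min_tokens: int = 256, max_tokens: int = 512) -> dict[str, bool]:
    """Run the two mechanical checks from the chunking checklist."""
    tokens = estimate_tokens(chunk)
    # Ignore trailing whitespace and closing quotes or brackets before
    # testing for sentence-final punctuation.
    stripped = chunk.rstrip().rstrip("\"'”’)]")
    return {
        "within_token_range": min_tokens <= tokens <= max_tokens,
        "ends_at_sentence_boundary": stripped.endswith((".", "!", "?")),
    }
```

A chunk that fails either check is a candidate for merging with a neighbor, trimming, or re-splitting at a paragraph break.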

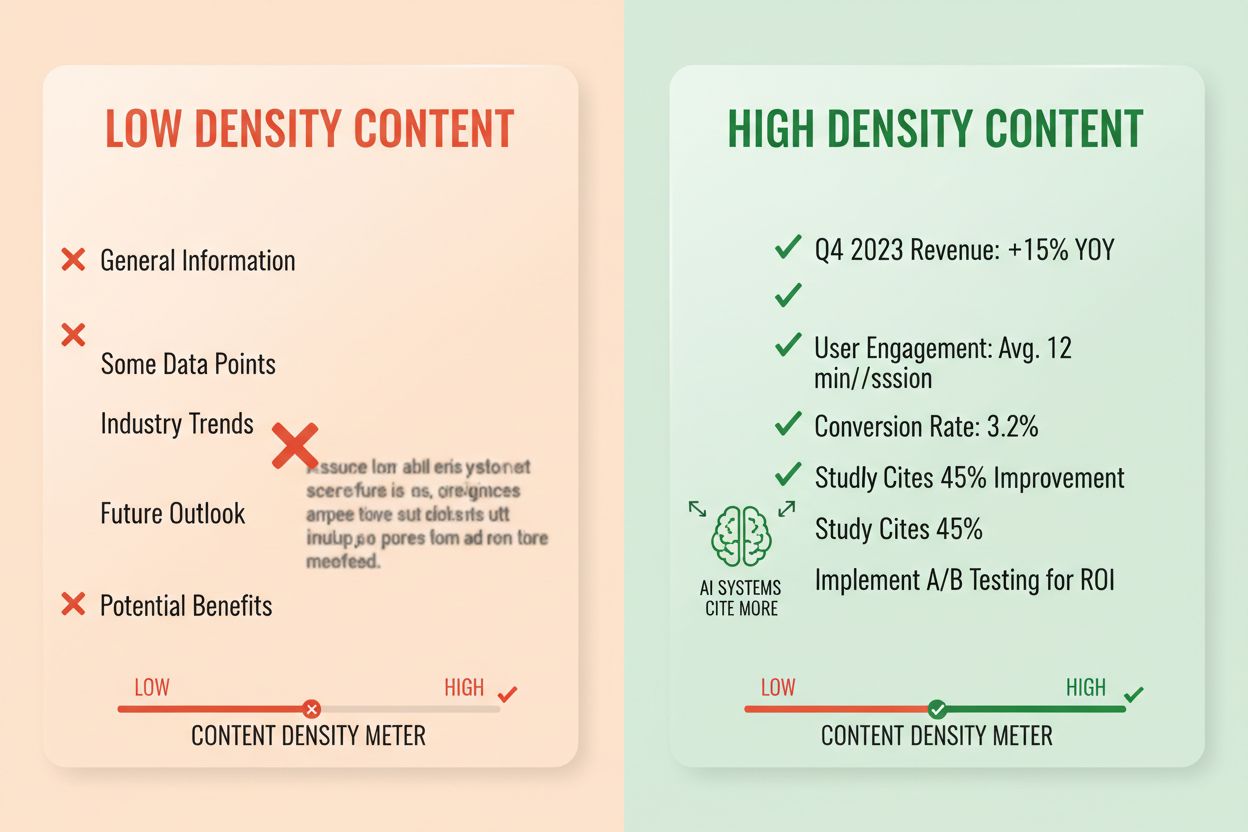

Answer density measures the ratio of actionable information to total word count, and high-density content receives 2-3x more AI citations than low-density alternatives. A paragraph with answer density of 80% contains mostly claims, evidence, and actionable insights, while one with 40% density includes significant filler, repetition, or context-building that doesn’t directly support the core answer. AI systems evaluate density implicitly—they’re more likely to extract and cite passages where every sentence contributes to answering the user’s question. High-density elements include specific statistics, step-by-step instructions, comparison data, definitions, and actionable recommendations. Low-density patterns include lengthy introductions, repeated concepts, rhetorical questions, and narrative storytelling that doesn’t advance the core argument.

Measurement approach: Count sentences that directly answer the question vs. sentences that provide context or transition. A high-density paragraph might read: “Citation-ready content receives 3-5x more AI mentions (statistic). This occurs because AI systems extract complete, self-contained answers (explanation). Implement answer-first formatting and semantic chunking to maximize density (action).” A low-density version might add: “Many organizations struggle with AI visibility. The digital landscape is changing rapidly. Content strategy has evolved significantly. Citation-ready content is becoming increasingly important…” The second version dilutes the core message with context that doesn’t directly support the answer.
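The sentence-counting measurement above reduces to a simple ratio. The sketch below assumes each sentence has already been labeled by a reviewer as answering the question (`True`) or as context and transition (`False`). The function name and the labels are illustrative, not a standard metric.

```python
def answer_density(labels: list[bool]) -> float:
    """Return the fraction of sentences labeled as directly answering the question."""
    if not labels:
        return 0.0
    return sum(labels) / len(labels)


# The three-sentence high-density paragraph: statistic, explanation, action.
high = answer_density([True, True, True])

# The same paragraph with the four filler sentences appended.
low = answer_density([True, True, True, False, False, False, False])

print(f"high: {high:.0%}, low: {low:.0%}")
```

With these labels the high-density version scores 100% and the diluted version about 43%, so the same three answer sentences fall well below the 70% level once the filler is added.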

Real impact statistics: Content with answer density above 70% averages 4.2 citations per month in AI outputs, compared to 1.1 citations for content below 40% density. Organizations that restructured existing content to increase density saw average citation increases of 156% within 60 days. High-density content example: “Use 256-512 token chunks for optimal AI extraction (claim). This range preserves context while preventing truncation (evidence). Implement paragraph-based chunking to maintain semantic coherence (action).” Low-density version: “Chunking is important for AI systems. Different approaches exist for organizing content. Some people prefer smaller chunks while others prefer larger ones. The right approach depends on your specific needs.” The high-density version delivers actionable guidance; the low-density version states obvious facts without specificity.

Specific content structures signal to AI systems that information is organized for extraction, dramatically increasing citation likelihood. FAQ sections are particularly effective because they explicitly pair questions with answers, making it trivial for AI systems to identify and extract relevant passages. Comparison tables allow AI systems to quickly assess multiple options and cite specific rows that answer user queries. Step-by-step instructions provide clear semantic boundaries and are frequently quoted when users ask “how do I…” questions. Definition lists pair terms with explanations, creating natural extraction points. Summary boxes highlight key takeaways, and listicles break complex topics into discrete, quotable items.

Structural elements that maximize AI retrievability:

Practical examples: An FAQ section asking “What is answer density?” followed by a complete definition and explanation becomes a direct citation source. A comparison table showing “Citation-Ready Element | Why AI Systems Prefer It” (like the one earlier in this article) gets cited when users ask comparative questions. A step-by-step guide titled “How to Implement Semantic Chunking” with numbered steps becomes quotable instruction content. These structures work because they align with how AI systems parse and extract information: they look for clear question-answer pairs, structured comparisons, and discrete steps.

Semantic HTML5 markup signals content structure to AI systems, improving extraction accuracy and citation likelihood by 40-60%. Using proper heading hierarchy (H1 for main topics, H2 for subtopics, H3 for supporting points) helps AI systems understand content relationships and identify extraction boundaries. Semantic elements like `<article>`, `<section>`, and `<aside>` provide additional context about content purpose. Schema.org structured data, particularly in JSON-LD format, explicitly tells AI systems what information is present, enabling more confident citations.
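One way to audit heading hierarchy is to scan a page for places where a heading jumps more than one level deeper, for example an H1 followed directly by an H3. The sketch below uses Python's standard `html.parser` module. The class and function names are hypothetical, and treating a skipped level as a problem is an assumption of this example rather than a rule stated by any AI platform.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect the levels of h1-h6 tags in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html: str) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading skips a level."""
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]
```

An empty result means every heading is at most one level deeper than the one before it. Moving back up the hierarchy, such as from H3 to H2, is not flagged.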

JSON-LD example for FAQ content:

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [{
    "@type": "Question",
    "name": "What is answer density?",
    "acceptedAnswer": {
      "@type": "Answer",
      "text": "Answer density measures the ratio of actionable information to total word count. High-density content receives 2-3x more AI citations than low-density alternatives."
    }
  }]
}
```

JSON-LD example for article metadata:

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Creating LLM Meta Answers",
  "author": {"@type": "Organization", "name": "AmICited"},
  "datePublished": "2024-01-15",
  "articleBody": "..."
}
```
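Hand-writing JSON-LD for every FAQ entry is error-prone, so it can help to generate it from question-answer pairs. The sketch below builds the same `FAQPage` structure as the FAQ example above using only the standard `json` module. The function name `faq_jsonld` is hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps typographic quotes and other non-ASCII text readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


print(faq_jsonld([
    ("What is answer density?",
     "Answer density measures the ratio of actionable information to total word count."),
]))
```

The output is typically embedded in the page inside a `<script type="application/ld+json">` element.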

Meta content—including meta descriptions and Open Graph tags—helps AI systems understand content purpose before parsing. Performance and accessibility improvements (fast page load, mobile optimization, proper alt text) indirectly support AI retrievability by ensuring content is fully crawlable and indexable. Technical implementation checklist: Is your HTML semantic and properly structured? Have you implemented schema.org markup for your content type? Do meta descriptions accurately summarize content? Is your site mobile-optimized and fast-loading? Are images properly alt-tagged?

Citation tracking has become essential for content performance measurement, yet most organizations lack visibility into how often their content appears in AI responses. Retrieval testing involves submitting your target questions to major LLMs (ChatGPT, Claude, Gemini) and documenting which sources are cited in responses. Content auditing systematically reviews your existing content against citation-ready standards, identifying gaps and optimization opportunities. Performance metrics should track citation frequency, citation context (how the content is used), and citation growth over time. Iterative optimization involves testing structural changes, measuring their impact on citation frequency, and scaling what works.

| Tracking Tool | Primary Function | Best For |
|---|---|---|
| AmICited.com | Comprehensive AI citation monitoring across all major LLMs | Complete citation visibility and competitive analysis |
| Otterly.AI | AI content detection and citation tracking | Identifying where your content appears in AI outputs |
| Peec AI | Content performance in AI systems | Measuring citation frequency and trends |
| ZipTie | AI-generated content monitoring | Tracking brand mentions in AI responses |
| PromptMonitor | LLM output analysis | Understanding how AI systems use your content |

AmICited.com stands out as the top solution because it provides real-time monitoring across ChatGPT, Claude, Gemini, and other major LLMs, offering competitive benchmarking and detailed citation context. The platform reveals not just whether your content is cited, but how it’s used—whether it’s quoted directly, paraphrased, or used as supporting evidence. Measurement approach: Establish baseline citation frequency for your top 20 content pieces. Implement citation-ready optimizations on 5-10 pieces. Measure citation changes over 30-60 days. Scale successful patterns to remaining content. Track metrics including citation frequency, citation growth rate, citation context, and competitive citation share.
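Two of the metrics named above, citation growth rate and competitive citation share, are simple ratios once citation counts have been collected. The sketch below shows one plausible way to define them. The formulas, function names, and sample counts are assumptions for illustration and are not taken from any tracking tool.

```python
def citation_growth_rate(baseline: int, current: int) -> float:
    """Percentage change in citations relative to the baseline period."""
    if baseline <= 0:
        raise ValueError("baseline must be positive to compute a growth rate")
    return (current - baseline) * 100 / baseline


def citation_share(own: int, competitors: dict[str, int]) -> float:
    """Own citations as a percentage of all citations observed for a topic."""
    total = own + sum(competitors.values())
    return own * 100 / total if total else 0.0


# Hypothetical counts: 10 citations at baseline, 15 after the test window.
print(citation_growth_rate(10, 15))
print(citation_share(30, {"competitor_a": 50, "competitor_b": 20}))
```

Comparing the growth rate of the optimized pieces against the pieces left unchanged over the same 30-60 day window gives a rough control for background shifts in how often a topic is cited at all.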

Mistake 1: Burying the answer in context. Many content creators lead with background information, historical context, or problem statements before revealing the actual answer. AI systems evaluate relevance in the first 50-100 tokens; if the answer isn’t present, they move to the next source. Problem: Users asking “What is answer density?” encounter a paragraph starting with “Content strategy has evolved significantly…” instead of the definition. Solution: Use answer-first formatting—lead with the key insight, then provide supporting context.

Mistake 2: Creating answers that require external context. Content that references “the previous section” or “as mentioned earlier” cannot be extracted independently. Problem: A paragraph stating “Following the approach we discussed, implement these steps…” fails because the referenced approach isn’t included in the extracted chunk. Solution: Make every answer self-contained; include necessary context within the chunk itself, even if it means minor repetition.

Mistake 3: Mixing multiple answers in a single chunk. Paragraphs that address multiple questions force AI systems to either truncate or include irrelevant information. Problem: A 600-word paragraph covering “What is answer density?” AND “How to measure it?” AND “Why it matters?” becomes too large for confident extraction. Solution: Create separate, focused chunks for each distinct question or concept.

Mistake 4: Using vague language instead of specific metrics. Phrases like “many,” “some,” “often,” and “typically” reduce citation confidence because they’re imprecise. Problem: “Many organizations see improvements” is less citable than “Organizations that restructured content saw 156% citation increases.” Solution: Replace qualifiers with specific data; if exact numbers aren’t available, use ranges (“40-60%”) instead of vague terms.

Mistake 5: Neglecting structural markup. Content without proper HTML structure, headings, or schema.org markup is harder for AI systems to parse and extract. Problem: A paragraph with no heading, no semantic HTML, and no schema markup gets treated as generic text rather than a distinct answer. Solution: Use semantic HTML5, implement proper heading hierarchy, and add schema.org markup for your content type.

Mistake 6: Creating answers that are too short or too long. Chunks under 150 tokens lack sufficient context; chunks over 700 tokens force truncation. Problem: A 100-word answer lacks supporting evidence; a 1000-word answer gets split across multiple extractions. Solution: Target 256-512 tokens (2-4 paragraphs); include claim, evidence, and conclusion within this range.
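Mistakes 2 and 4 lend themselves to a simple automated check, since both come down to spotting specific phrases. The sketch below flags the cross-reference phrases and vague qualifiers quoted above. The phrase lists contain only those examples and the function name is hypothetical, so treat it as a starting point for a content lint and not a complete detector.

```python
import re

# Phrases taken from the examples in Mistake 2 and Mistake 4.
CROSS_REFERENCES = ("the previous section", "as mentioned earlier", "we discussed")
VAGUE_QUALIFIERS = ("many", "some", "often", "typically")


def lint_chunk(chunk: str) -> dict[str, list[str]]:
    """Report cross-reference phrases and vague qualifiers found in a chunk."""
    lowered = chunk.lower()
    # Match qualifiers as whole words so "some" does not fire on "awesome".
    words = set(re.findall(r"[a-z]+", lowered))
    return {
        "cross_references": [p for p in CROSS_REFERENCES if p in lowered],
        "vague_qualifiers": [w for w in VAGUE_QUALIFIERS if w in words],
    }
```

A flagged qualifier is a prompt to look for a number or range, not an automatic error. When no data exists, an honest qualifier is still better than an invented statistic.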

Entity consistency—using identical terminology for the same concept throughout your content—increases AI citation likelihood by signaling authoritative knowledge. If you define “answer density” in one section, use that exact term consistently rather than switching to “information density” or “content density.” AI systems recognize entity consistency as a signal of expertise and are more likely to cite content where terminology is precise and consistent. This applies to product names, methodology names, and technical terms—consistency builds confidence in citation accuracy.

Third-party mentions and original research dramatically increase citation frequency. Content that references other authoritative sources (with proper attribution) signals credibility, while original research or proprietary data makes your content uniquely citable. When you include statistics from your own research or case studies from your own clients, AI systems recognize this as original insight that can’t be found elsewhere. Organizations publishing original research see 3-4x higher citation rates than those relying solely on synthesized information. Strategy: Conduct original research on your industry, publish findings with detailed methodology, and reference these findings in your meta answers.

Freshness signals—publication dates, update dates, and references to recent events—help AI systems understand content recency. Content updated within the last 30 days receives higher citation priority than outdated content, particularly for topics where information changes frequently. Include publication dates in your schema.org markup and update timestamps when you revise content. Strategy: Establish a content refresh schedule; update top-performing content every 30-60 days with new statistics, recent examples, or expanded explanations.
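A refresh schedule like the one above can be enforced with a small date check. The sketch below flags pages whose last update is older than a chosen threshold. The function name, the 60-day default, and the sample URLs and dates are assumptions for illustration.

```python
from datetime import date


def needs_refresh(last_updated: date, today: date, max_age_days: int = 60) -> bool:
    """Return True when the content is older than the refresh threshold."""
    return (today - last_updated).days > max_age_days


# Hypothetical inventory of pages and their last update dates.
pages = {
    "/llm-meta-answers": date(2024, 1, 15),
    "/answer-density": date(2024, 3, 20),
}
today = date(2024, 4, 1)
stale = [url for url, updated in pages.items() if needs_refresh(updated, today)]
print(stale)
```

The same date can feed the `dateModified` property in the page's schema.org markup, so the freshness signal and the refresh schedule stay in sync.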

E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) influence AI citation decisions. Content authored by recognized experts, published on authoritative domains, and backed by credentials receives higher citation priority. Include author bios with relevant credentials, publish on domains with established authority, and build backlinks from other authoritative sources. Strategy: Feature expert authors, include credential information in author bios, and pursue backlinks from industry-recognized publications.

Generative brand density—the ratio of branded insights to generic information—determines whether AI systems cite you or competitors. Content that includes proprietary frameworks, unique methodologies, or branded approaches becomes more citable because it’s differentiated. Generic content about “best practices” gets cited less frequently than content about “AmICited’s Citation Optimization Framework” because the branded version is unique and traceable. Organizations with high generative brand density see 2-3x more citations than those publishing generic content. Strategy: Develop proprietary frameworks, methodologies, or terminology; use these consistently across your content; make them the foundation of your meta answers.

LLM meta answers are designed specifically for AI extraction and citation, while featured snippets optimize for Google's search results display. Meta answers prioritize standalone completeness and semantic coherence, whereas featured snippets focus on brevity and keyword matching. Both can coexist in your content, but meta answers require different structural optimization.

Optimal length is 256-512 tokens, roughly equivalent to 2-4 well-structured paragraphs or 200-400 words. This range preserves sufficient context for confident AI extraction while preventing truncation. Shorter answers lack context; longer answers force AI systems to summarize or split across multiple extractions.

Yes, but it requires restructuring. Audit existing content for answer-first formatting, semantic coherence, and standalone completeness. Most content can be retrofitted by moving key insights to the beginning, removing cross-references, and ensuring each section answers a complete question without requiring external context.

Update top-performing content every 30-60 days with new statistics, recent examples, or expanded explanations. AI systems prioritize content updated within the last 30 days, particularly for topics where information changes frequently. Include publication dates and update timestamps in your schema.org markup.

Answer density directly correlates with citation frequency. Content with answer density above 70% averages 4.2 citations per month in AI outputs, compared to 1.1 citations for content below 40% density. High-density content delivers actionable information without filler, making it more valuable for AI systems to cite.

Use citation monitoring platforms like AmICited.com, which tracks citations across ChatGPT, Claude, Gemini, and other major LLMs. Conduct manual testing by submitting your target questions to AI systems and documenting which sources are cited. Measure baseline citation frequency, implement optimizations, and track changes over 30-60 days.

Core meta answer structure remains consistent across platforms, but you can optimize for platform-specific preferences. ChatGPT favors comprehensive, well-sourced content. Perplexity emphasizes recent information and clear citations. Google AI Overviews prioritize structured data and E-E-A-T signals. Test variations and monitor citation performance across platforms.

AmICited provides real-time monitoring of your content citations across all major AI platforms, showing exactly where your meta answers appear, how they're used, and competitive citation share. The platform reveals citation context—whether content is quoted directly, paraphrased, or used as supporting evidence—enabling data-driven optimization decisions.

