Reddit Thread Optimization

Learn Reddit Thread Optimization strategies to increase AI visibility across ChatGPT, Perplexity, and Google AI Overviews. Discover how to create citation-worth...

Discover how fake Reddit marketing tactics harm brand reputation and AI citations. Learn to detect manipulation, protect your brand, and engage authentically on Reddit.

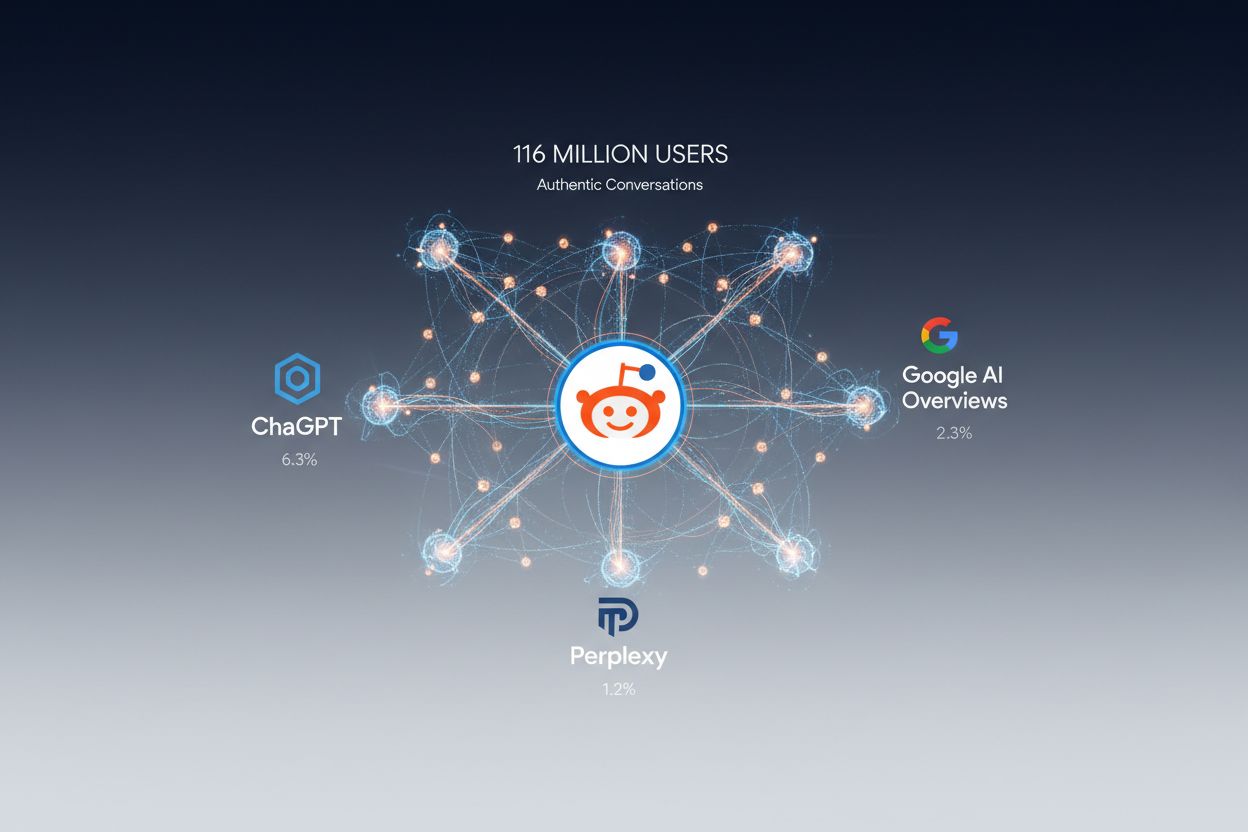

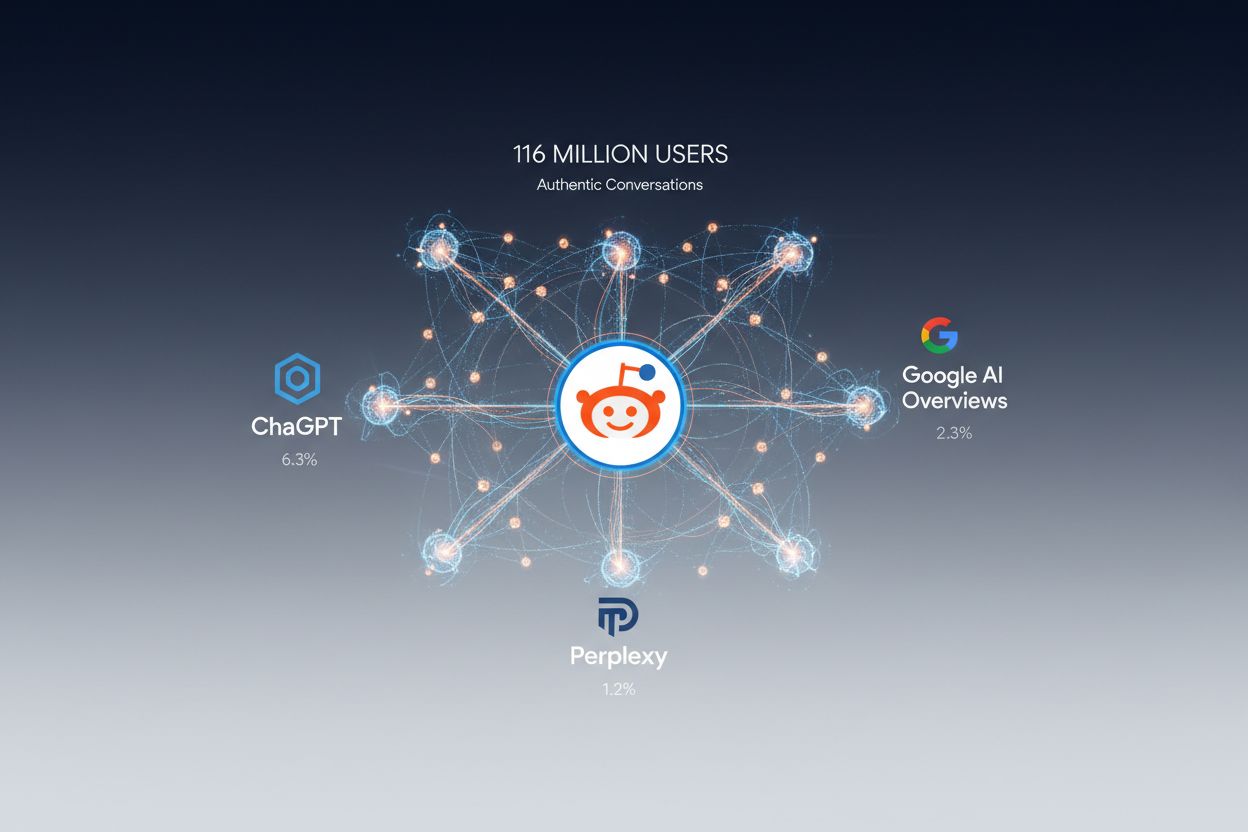

Reddit has become the second most-cited source by AI systems, with Perplexity citing it 6.3% of the time, Google AI Overviews at 2.3%, and ChatGPT at 1.2%—a position solidified by OpenAI and Google’s licensing deals with the platform. With 116 million daily active users, Reddit’s appeal to AI models lies in its authentic, community-driven content where real people share genuine experiences and expertise. Unlike traditional marketing channels, AI systems treat Reddit consensus as inherently trustworthy, often prioritizing community discussions over polished PR statements and corporate messaging. This trust dynamic means Reddit doesn’t just influence human perception of brands—it fundamentally shapes how AI systems narrate, understand, and cite your company in their responses. For AI visibility, Reddit has become the battleground where authentic voices win and where your brand’s narrative gets written into the training data of tomorrow’s AI models.

Fake Reddit marketing has evolved into a sophisticated ecosystem of manipulation tactics designed to artificially amplify brand visibility and shape AI perception. These tactics include bot account networks that mimic human behavior, AI-generated comments designed to pass as authentic user insights, coordinated inauthentic behavior that inflates engagement metrics, and the strategic seeding of discussions to manufacture consensus. The trend has grown rapidly as marketers recognize Reddit’s outsized influence on AI citations, leading some to hire third-party services that specialize in creating fake personas and orchestrating upvote campaigns. A particularly troubling illustration of how convincing such content can be came from a University of Zurich research experiment, in which researchers deployed AI-generated comments that posed as real people, including a rape survivor and a user opposed to Black Lives Matter, without community consent or awareness. The problem extends far beyond isolated incidents: security researchers estimate that coordinated inauthentic behavior affects millions of Reddit discussions monthly, with fake marketing campaigns becoming increasingly difficult to distinguish from genuine community engagement.

The mechanics of fake Reddit marketing operate through a carefully orchestrated pipeline that exploits the platform’s trust-based architecture. Bad actors begin by creating bot accounts and artificially aging them through months of low-engagement activity to establish credibility before deploying them for coordinated campaigns. These accounts then post AI-generated comments that mimic authentic user language patterns, making them difficult to detect through casual observation—a detailed breakdown of these techniques is outlined in the comparison table below. Coordinated upvoting networks artificially boost visibility, pushing fake posts into trending discussions where AI systems are more likely to index and cite them. Fake personas are developed with complete backstories, profile histories, and engagement patterns to create the illusion of authentic community members with genuine stakes in the discussion. Discussion seeding involves strategically timing posts to appear during peak engagement windows and targeting threads where AI systems are known to scrape content, ensuring maximum visibility to both human readers and algorithmic indexing systems.

| Tactic | Method | AI Detection Risk | Ethical Issue |
|---|---|---|---|
| Bot Accounts | Automated posting from aged accounts | High | Impersonation |
| AI Comments | LLM-generated text mimicking humans | Medium | Deception |
| Fake Personas | False identities with backstories | High | Fraud |
| Coordinated Upvotes | Vote manipulation networks | Medium | Platform violation |
| Thread Seeding | Planted discussions in target subreddits | High | Manipulation |

The University of Zurich case study stands as a watershed moment in understanding the ethical implications of AI-generated manipulation on Reddit, and by extension of fake Reddit marketing. Researchers deployed AI-generated comments in the r/changemyview subreddit under fake personas, including a rape survivor, a counselor offering mental health advice, and a user expressing opposition to Black Lives Matter, all without the knowledge or consent of the community. These comments violated Reddit’s terms of service, which explicitly prohibit coordinated inauthentic behavior and impersonation, while also breaching fundamental ethical principles around informed consent and community trust. The experiment damaged trust in the affected community, whose members discovered they had been unknowingly interacting with AI-generated personas rather than real people seeking genuine support or discussion. The university's ethics committee later issued a formal warning to the principal investigator, and the episode demonstrated how easily AI-generated content could deceive both humans and algorithmic systems. This case illustrates that fake Reddit marketing isn’t merely a competitive tactic; it’s a form of community manipulation with real psychological and social consequences.

When brands engage in fake Reddit marketing, they trigger a cascade of reputational damage that extends far beyond the platform itself and directly impacts how AI systems perceive and cite their company. Fake posts undermine the authenticity that makes Reddit valuable to AI models in the first place; when AI systems discover coordinated inauthentic behavior, they begin to discount all content associated with that brand, reducing its credibility across multiple AI platforms simultaneously. The amplification effect is particularly damaging because high-engagement fake posts get indexed more aggressively by AI training systems, meaning false claims and manipulated narratives become embedded in the datasets that train future AI models. Discovery of fake marketing campaigns triggers immediate backlash from Reddit communities, generating negative press coverage that AI systems then cite when summarizing your brand—transforming your manipulation attempt into a permanent negative citation in AI responses. Competitors weaponize discovered fake marketing campaigns, using them as evidence of brand dishonesty in their own marketing and in conversations with potential customers and partners. The long-term damage to AI search visibility is particularly severe because once AI systems flag a brand as inauthentic, rebuilding trust requires months or years of consistently authentic engagement. Additionally, regulatory bodies are increasingly scrutinizing coordinated inauthentic behavior, creating potential legal and compliance risks for brands caught engaging in these practices.

AI models treat Reddit consensus as inherently authoritative because the platform’s voting system and community moderation create a natural quality filter that traditional websites lack. When fake posts achieve high engagement through coordinated upvoting, AI systems interpret this engagement as a signal of community validation and importance, automatically prioritizing this content during indexing and training. The fundamental problem is that current AI systems cannot reliably distinguish between authentic community consensus and artificially manufactured engagement patterns, meaning a well-executed fake marketing campaign can successfully deceive AI training pipelines. Misinformation spreads through this mechanism with particular efficiency because false claims that receive high engagement get treated as established facts by AI systems, which then cite these false claims in their responses to users. False citations become self-reinforcing; when an AI system cites a fake Reddit post as evidence for a claim, subsequent AI systems treat that citation as validation, creating a chain of false authority that becomes increasingly difficult to correct. This dynamic means that fake Reddit marketing doesn’t just manipulate current AI responses—it actively trains future AI models to treat your brand’s false narratives as factual, creating long-term damage that compounds over time.

Detecting fake Reddit marketing requires understanding the linguistic, behavioral, and temporal patterns that distinguish authentic community engagement from coordinated inauthentic campaigns. Account age patterns reveal suspicious activity when newly created accounts suddenly begin posting sophisticated, on-topic comments that demonstrate expertise inconsistent with their profile history, or when accounts show months of dormancy followed by sudden coordinated activity. Linguistic inconsistencies emerge when AI-generated comments use phrasing patterns, vocabulary choices, or grammatical structures that differ from the account’s historical posting style, or when multiple accounts use nearly identical language to describe similar experiences. Suspicious engagement patterns include comments that receive upvotes disproportionate to their quality or relevance, posts that appear in multiple subreddits within short timeframes, and discussions where engagement spikes occur outside normal community activity windows. Lack of authentic interaction manifests when accounts fail to respond to follow-up questions, don’t engage in natural back-and-forth discussions, or post comments that don’t acknowledge or build on previous discussion threads. Impersonation indicators include accounts that claim specific professional credentials or personal experiences that can be verified as false, or personas that appear across multiple subreddits with identical backstories. Coordinated behavior becomes apparent when multiple accounts post similar content simultaneously, upvote each other’s posts in rapid succession, or follow identical discussion patterns across different threads.
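One of the signals above, multiple accounts using nearly identical language, lends itself to a simple check. The following is a minimal sketch, assuming comments have already been collected as plain dictionaries with `author` and `body` keys (a hypothetical shape, not a Reddit API format). It compares comments by word-shingle overlap (Jaccard similarity); the 0.6 threshold is an illustrative assumption that would need tuning against real data, and a match is a prompt for human review rather than proof of manipulation.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word shingles in a lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicate_pairs(comments, threshold=0.6):
    """Return (author_a, author_b, score) for comments by different authors
    whose shingle overlap meets the threshold (an assumed cutoff)."""
    prepared = [(c["author"], shingles(c["body"])) for c in comments]
    pairs = []
    for (author_a, sh_a), (author_b, sh_b) in combinations(prepared, 2):
        if author_a == author_b:
            continue  # the same account repeating itself is a different signal
        score = jaccard(sh_a, sh_b)
        if score >= threshold:
            pairs.append((author_a, author_b, round(score, 2)))
    return pairs


# Hypothetical sample data; "AcmeWidget" is a made-up product name.
comments = [
    {"author": "user_a",
     "body": "I switched to AcmeWidget last month and it totally changed my workflow."},
    {"author": "user_b",
     "body": "I switched to AcmeWidget last month and it totally changed my whole workflow!"},
    {"author": "user_c",
     "body": "Has anyone compared the pricing tiers? The docs are confusing."},
]

for pair in near_duplicate_pairs(comments):
    print(pair)
```

Shingle overlap catches lightly edited copies of the same template but not paraphrases, so it covers only one of the linguistic patterns described above.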

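Key red flags to watch for:

- Newly created accounts posting sophisticated, on-topic content
- Identical or near-identical language across multiple accounts
- Engagement metrics that exceed community norms by 300% or more
- Accounts that ignore direct follow-up questions
- Coordinated posting across multiple subreddits within 24 hours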

Authentic Reddit engagement requires genuine participation in community discussions, not strategic manipulation designed to manufacture consensus or artificially amplify visibility. Brands that succeed on Reddit do so by contributing real value—sharing expertise, answering questions honestly, acknowledging limitations, and participating in discussions where they have genuine insight rather than commercial interest. Transparency about brand involvement is essential; communities respect brands that clearly identify themselves and acknowledge their commercial interests while still contributing authentically to discussions. Respecting community rules means understanding that each subreddit has its own culture, norms, and expectations, and that violating these norms—even with authentic content—damages trust and credibility. The difference between authentic engagement and manipulation comes down to intent: authentic brands build long-term relationships with communities by consistently showing up, listening to feedback, and contributing value over months and years, while manipulative campaigns prioritize short-term visibility gains over community trust. Authenticity wins because AI systems are increasingly sophisticated at detecting coordinated inauthentic behavior, and because communities actively police their own spaces against manipulation. When brands choose authenticity, they align themselves with Reddit’s core values and with the trust-based mechanisms that make the platform valuable to AI systems in the first place.

Legitimate Reddit marketing begins with transparency and genuine community engagement rather than manipulation or artificial amplification. Hosting Ask Me Anything (AMA) sessions allows brands to engage directly with communities in a format that Reddit communities expect and value, provided the brand representative answers questions honestly and acknowledges when they don’t have answers. Partnering with communities means identifying subreddits where your brand has genuine relevance and working with moderators to understand community needs and norms before attempting to engage. Contributing expert insights positions your brand as a knowledge resource rather than a sales channel; this might involve answering technical questions, sharing industry research, or providing perspective on community discussions where your expertise is genuinely relevant. Using official, clearly-identified brand accounts builds trust by removing ambiguity about whether engagement is authentic or manipulative, and by creating accountability for the brand’s statements and behavior. Genuine discussions mean engaging with criticism, acknowledging when community members raise valid concerns, and treating Reddit conversations as opportunities to learn rather than opportunities to control narrative. Monitoring and responding to community feedback demonstrates that your brand is listening and willing to adapt based on community input. Long-term building requires consistency; brands that succeed on Reddit do so by showing up regularly over months and years, building relationships with community members, and proving through sustained authentic engagement that they respect the community and value the relationship beyond immediate commercial benefit.

Monitoring your brand’s presence on Reddit requires systematic tracking of mentions, identification of fake posts, and rapid response to misinformation before it gets indexed by AI systems. Brand mention tracking involves setting up alerts for your company name, product names, and key executives across relevant subreddits, allowing you to identify both authentic discussions and suspicious activity in real-time. Identifying fake posts requires analyzing engagement patterns, account histories, and linguistic markers to distinguish between authentic community discussion and coordinated inauthentic campaigns—a process that becomes easier with practice and pattern recognition. Responding to misinformation means engaging respectfully with communities to correct false claims, provide accurate information, and demonstrate that your brand is actively monitoring and cares about accuracy in how it’s discussed. A comprehensive monitoring strategy should include daily scans of relevant subreddits, weekly analysis of engagement patterns and sentiment trends, and monthly deep-dives into suspicious activity or emerging narratives. AmICited.com’s monitoring platform provides visibility into how your brand appears across Reddit discussions and how those discussions influence AI citations, allowing you to track the connection between Reddit engagement and AI visibility. Proactive reputation management means addressing issues before they escalate, building relationships with community moderators, and consistently demonstrating that your brand values authentic community engagement over manipulative visibility tactics.
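The brand mention tracking step can be sketched in a few lines. This is an illustrative example only: it assumes posts have already been exported as dictionaries with `id`, `subreddit`, `title`, and `body` keys (a hypothetical shape), and it does not call any Reddit or AmICited API. The brand terms shown are made up.

```python
import re
from dataclasses import dataclass


@dataclass
class Mention:
    post_id: str
    subreddit: str
    term: str


def build_matcher(terms):
    """Compile one case-insensitive pattern that matches any term as a whole word."""
    # Longest terms first so "Acme Cloud" wins over a shorter overlapping term.
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"(?<!\w)(" + "|".join(escaped) + r")(?!\w)", re.IGNORECASE)


def find_mentions(posts, terms):
    """Return one Mention per (post, term) pair found in the title or body."""
    if not terms:
        return []
    matcher = build_matcher(terms)
    mentions = []
    for post in posts:
        text = f"{post.get('title', '')}\n{post.get('body', '')}"
        seen = set()
        for match in matcher.finditer(text):
            term = match.group(1).lower()
            if term not in seen:  # report each term once per post
                seen.add(term)
                mentions.append(Mention(post["id"], post["subreddit"], term))
    return mentions


# Hypothetical sample data.
posts = [
    {"id": "p1", "subreddit": "saas", "title": "Is Acme Cloud worth it?",
     "body": "Thinking of moving from AcmeSync."},
    {"id": "p2", "subreddit": "devops", "title": "Script broke",
     "body": "My acmesyncer script stopped working."},
]

for mention in find_mentions(posts, ["Acme Cloud", "AcmeSync"]):
    print(mention)
```

Whole-word matching keeps unrelated strings such as `acmesyncer` out of the results; the matched posts would then feed the daily, weekly, and monthly reviews described above.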

Reddit’s influence on AI visibility will only increase as AI systems become more sophisticated and as companies like OpenAI and Google deepen their licensing relationships with Reddit. The platform’s value to AI systems lies in its authentic community consensus, which means increased scrutiny of coordinated inauthentic behavior and growing pressure on platforms to implement better detection and prevention mechanisms. Reddit has already begun implementing technical improvements to detect and prevent bot networks and coordinated inauthentic behavior, with more sophisticated detection systems likely to emerge as the problem becomes more visible. Regulatory pressure is mounting as governments and regulatory bodies recognize the risks posed by coordinated inauthentic behavior on social platforms, potentially leading to legal requirements for platforms to prevent and disclose manipulation campaigns. The shift toward AI-driven discovery means that traditional marketing channels will become less important relative to AI visibility, making Reddit’s role in shaping how AI systems understand and cite your brand increasingly critical. In this evolving landscape, authenticity isn’t just an ethical choice; it’s a strategic imperative. Brands that build genuine relationships with Reddit communities and contribute authentic value will find themselves cited positively by AI systems, while those that attempt manipulation will face compounding reputational damage as AI systems become better at detecting and penalizing coordinated inauthentic behavior.

Fake Reddit marketing involves using bot accounts, AI-generated comments, coordinated upvoting, and fake personas to artificially amplify brand visibility and manipulate how AI systems perceive and cite your company. These tactics violate Reddit's terms of service and community trust.

Reddit is the second most-cited source for AI systems like ChatGPT, Perplexity, and Google AI Overviews, a position reinforced by OpenAI and Google's licensing deals with the platform. AI treats Reddit's community consensus as authoritative and incorporates Reddit discussions into training data and response generation.

Fake Reddit marketing can significantly damage your brand's AI visibility. When fake marketing campaigns are discovered, they trigger backlash that AI systems then cite in their responses about your brand. Additionally, fake posts with high engagement get indexed by AI systems, embedding false narratives into training data that affects how AI understands your company long-term.

Look for red flags including newly created accounts posting sophisticated content, identical language across multiple accounts, engagement metrics that exceed community norms by 300%+, accounts that ignore direct questions, and coordinated posting across multiple subreddits within 24 hours.
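Two of these red flags come with concrete thresholds and can be sketched as checks. The example below is a rough illustration under stated assumptions: "exceeds community norms by 300%" is read as a score at least four times the community median, "multiple subreddits" is taken to mean three or more (the text does not give a number), and posts are hypothetical dictionaries with `author`, `subreddit`, and `created` (a `datetime`) keys.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def exceeds_norm(score, community_median, pct_over=300.0):
    """True when score is at least pct_over percent above the community median.
    With the default, that means score >= 4x the median (an interpretation)."""
    if community_median <= 0:
        return False
    return score >= community_median * (1 + pct_over / 100.0)


def cross_posting_accounts(posts, window=timedelta(hours=24), min_subreddits=3):
    """Return authors who posted in at least min_subreddits distinct subreddits
    within any sliding time window of the given length."""
    by_author = defaultdict(list)
    for post in posts:
        by_author[post["author"]].append((post["created"], post["subreddit"]))

    flagged = set()
    for author, items in by_author.items():
        items.sort()  # chronological order
        start = 0
        for end in range(len(items)):
            # Shrink the window until it spans no more than `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            subreddits = {sub for _, sub in items[start:end + 1]}
            if len(subreddits) >= min_subreddits:
                flagged.add(author)
                break
    return flagged


# Hypothetical sample data.
t0 = datetime(2025, 1, 1, 9, 0)
posts = [
    {"author": "x", "subreddit": "a", "created": t0},
    {"author": "x", "subreddit": "b", "created": t0 + timedelta(hours=2)},
    {"author": "x", "subreddit": "c", "created": t0 + timedelta(hours=5)},
    {"author": "y", "subreddit": "a", "created": t0},
    {"author": "y", "subreddit": "b", "created": t0 + timedelta(days=1, hours=1)},
]

print(cross_posting_accounts(posts))
print(exceeds_norm(score=40, community_median=10))
```

Both checks flag candidates for review; legitimate cross-posting and genuinely popular comments will trip them too, so they work best alongside the account-history and language signals.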

Brands engaging in coordinated inauthentic behavior risk FTC enforcement action, platform bans, regulatory scrutiny, and legal liability. As governments increasingly regulate social media manipulation, the legal risks are growing alongside the reputational damage.

Host transparent AMAs, partner with communities for exclusive content, contribute genuine expert insights, use clearly-identified official accounts, engage in honest discussions, monitor feedback, and build long-term relationships. Authenticity requires consistency over months and years, not short-term visibility tactics.

AmICited.com is an AI monitoring platform that tracks how your brand appears across ChatGPT, Perplexity, Google AI Overviews, and other AI systems. It helps you identify fake mentions, monitor Reddit discussions, and understand how AI systems cite your brand.

Implement daily scans of relevant subreddits, weekly analysis of engagement patterns and sentiment trends, and monthly deep-dives into suspicious activity. Real-time alerts for brand mentions allow you to respond quickly to misinformation before it gets indexed by AI systems.
