Preventing AI Visibility Crises: Proactive Strategies

Learn how to prevent AI visibility crises with proactive monitoring, early warning systems, and strategic response protocols. Protect your brand in the AI era.

Learn to detect AI visibility crises early with real-time monitoring, sentiment analysis, and anomaly detection. Discover warning signs and best practices for protecting your brand reputation in AI-powered search.

An AI visibility crisis occurs when artificial intelligence systems produce inaccurate, misleading, or harmful outputs that damage brand reputation and erode public trust. Unlike traditional PR crises that stem from human decisions or actions, AI visibility crises emerge from the intersection of training data quality, algorithmic bias, and real-time content generation—making them fundamentally harder to predict and control. Recent research indicates that 61% of consumers have reduced trust in brands after encountering AI hallucinations or false AI answers, while 73% believe companies should be held accountable for what their AI systems say publicly. These crises spread faster than traditional misinformation because they appear authoritative, come from trusted brand channels, and can affect thousands of users simultaneously before human intervention is possible.

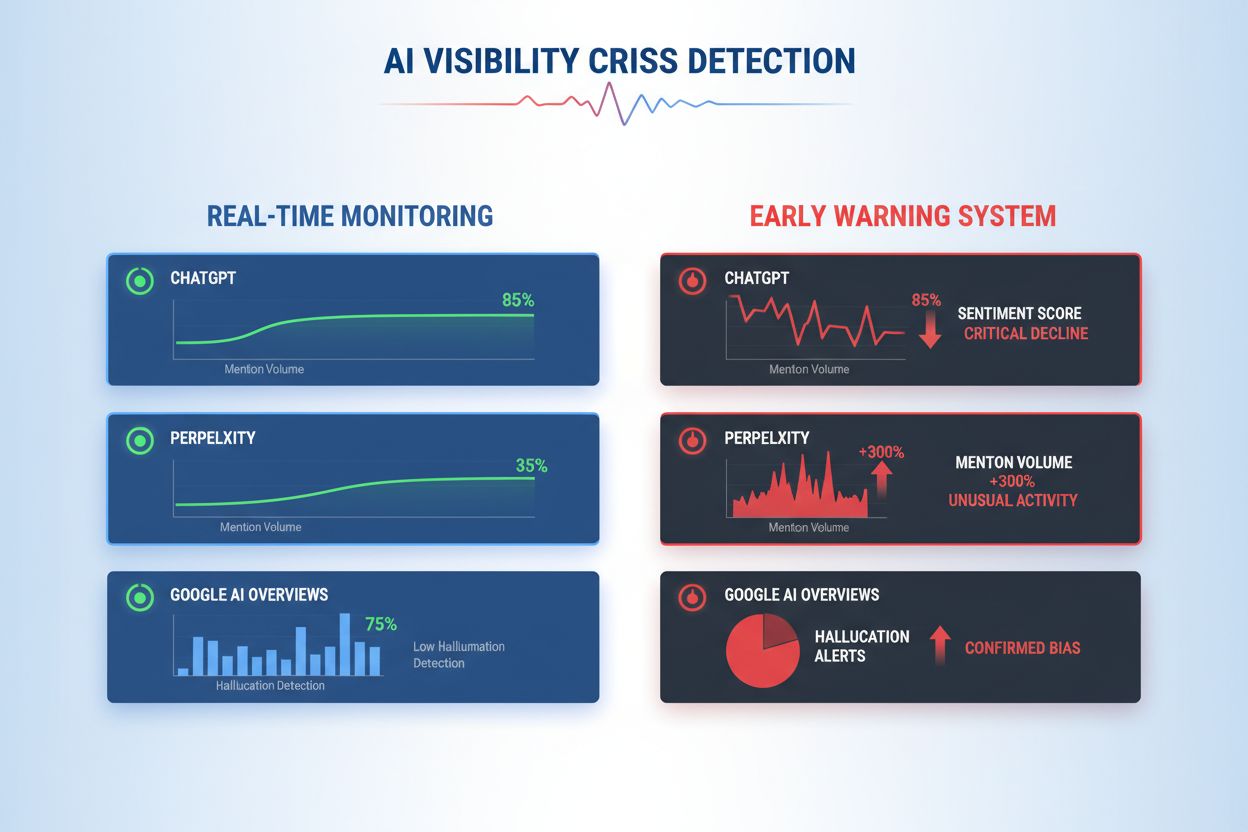

Effective AI visibility crisis detection requires monitoring both inputs (the data, conversations, and training materials feeding your AI systems) and outputs (what your AI actually says to customers). Input monitoring examines social listening data, customer feedback, training datasets, and external information sources for bias, misinformation, or problematic patterns that could contaminate AI responses. Output monitoring tracks what your AI systems actually generate: the answers, recommendations, and content they produce in real time. When a major financial services company’s chatbot began recommending cryptocurrency investments based on biased training data, the crisis spread across social media within hours, hurting the company’s stock price and drawing regulatory scrutiny. Similarly, when a healthcare AI system provided outdated medical information sourced from corrupted training datasets, it took three days to identify the root cause, during which thousands of users received potentially harmful guidance. The gap between input quality and output accuracy creates a blind spot where crises can fester undetected.

| Aspect | Input Monitoring (Sources) | Output Monitoring (AI Answers) |

|---|---|---|

| What it tracks | Social media, blogs, news, reviews | ChatGPT, Perplexity, Google AI responses |

| Detection method | Keyword tracking, sentiment analysis | Prompt queries, response analysis |

| Crisis indicator | Viral negative posts, trending complaints | Hallucinations, incorrect recommendations |

| Response time | Hours to days | Minutes to hours |

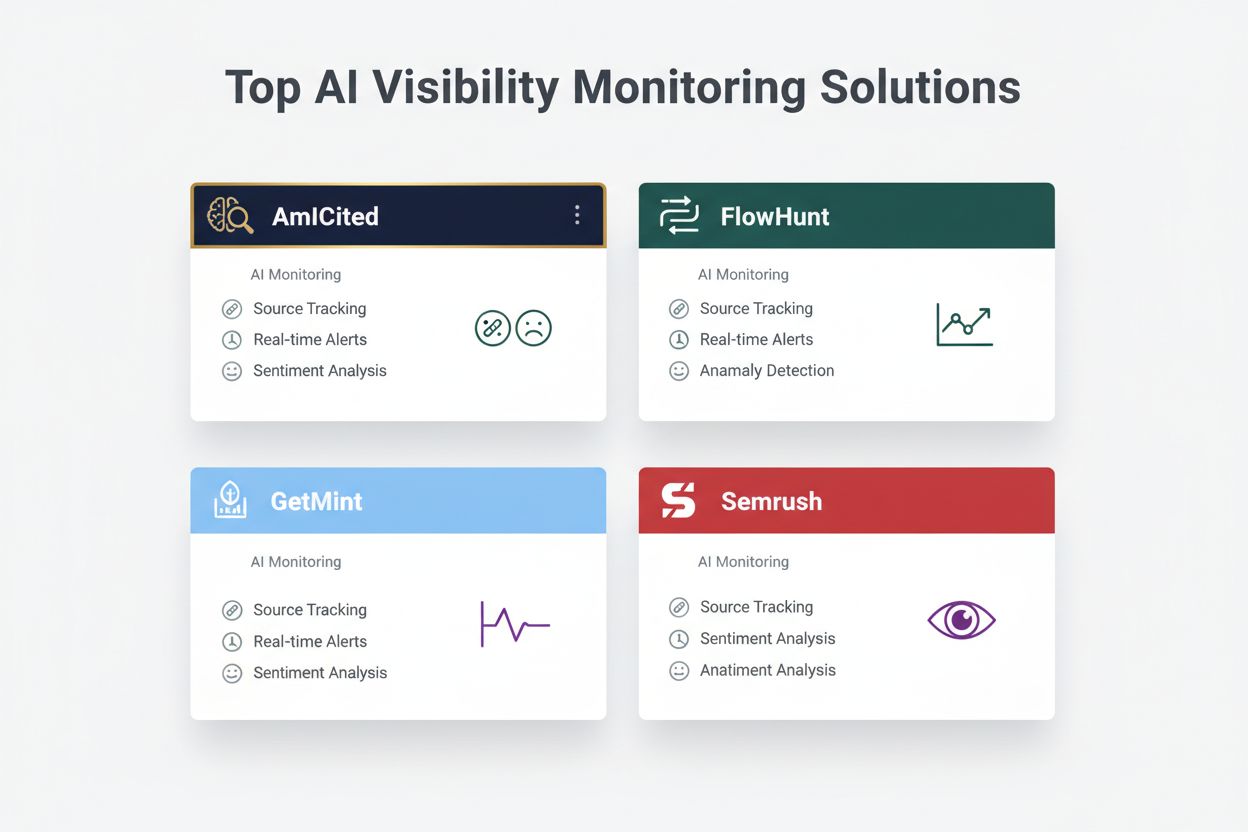

| Primary tools | Brandwatch, Mention, Sprout Social | AmICited, GetMint, Semrush |

| Cost range | $500-$5,000/month | $800-$3,000/month |

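Organizations should establish continuous monitoring for the early warning signs of an emerging AI visibility crisis. These include sudden drops in positive sentiment, spikes in mention volume from low-authority sources, AI models recommending competitors, and hallucinations about your products.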

Modern AI visibility crisis detection relies on sophisticated technical foundations combining natural language processing (NLP), machine learning algorithms, and real-time anomaly detection. NLP systems analyze the semantic meaning and context of AI outputs, not just keyword matching, allowing detection of subtle misinformation that traditional monitoring tools miss. Sentiment analysis algorithms process thousands of social mentions, customer reviews, and support tickets simultaneously, calculating sentiment scores and identifying emotional intensity shifts that signal emerging crises. Machine learning models establish baseline patterns of normal AI behavior, then flag deviations that exceed statistical thresholds—for example, detecting when response accuracy drops from 94% to 78% across a specific topic area. Real-time processing capabilities enable organizations to identify and respond to crises within minutes rather than hours, critical when AI outputs reach thousands of users instantly. Advanced systems employ ensemble methods combining multiple detection algorithms, reducing false positives while maintaining sensitivity to genuine threats, and integrate feedback loops where human reviewers continuously improve model accuracy.
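The following minimal Python sketch makes the ensemble idea concrete. It assumes a per-topic accuracy series is already being collected. The three detectors (`zscore_detector`, `absolute_drop_detector`, `range_detector`) and the two-vote rule are hypothetical illustrations, not any vendor's algorithm. The point is that an alert fires only when several independent checks agree, which suppresses false positives that a single detector would raise on its own.

```python
from statistics import mean, stdev
from typing import Callable, Sequence

# A detector looks at a baseline history plus the latest value and votes
# True ("anomalous") or False. All three are illustrative, hypothetical checks.
Detector = Callable[[Sequence[float], float], bool]


def zscore_detector(history: Sequence[float], current: float, k: float = 3.0) -> bool:
    """Vote anomalous if the value is more than k standard deviations from the mean."""
    mu, sigma = mean(history), stdev(history)
    return abs(current - mu) > k * sigma


def absolute_drop_detector(history: Sequence[float], current: float, max_drop: float = 0.10) -> bool:
    """Vote anomalous if accuracy fell more than max_drop below the baseline mean."""
    return mean(history) - current > max_drop


def range_detector(history: Sequence[float], current: float) -> bool:
    """Vote anomalous if the value falls outside anything seen in the baseline."""
    return not (min(history) <= current <= max(history))


def ensemble_alert(history: Sequence[float], current: float,
                   detectors: Sequence[Detector], min_votes: int = 2) -> bool:
    """Alert only when at least min_votes detectors agree."""
    return sum(d(history, current) for d in detectors) >= min_votes


DETECTORS = [zscore_detector, absolute_drop_detector, range_detector]

# Hypothetical weekly accuracy for one topic area, hovering around 94%.
baseline = [0.94, 0.93, 0.95, 0.94, 0.94, 0.93, 0.95]

print(ensemble_alert(baseline, 0.78, DETECTORS))  # True: all three detectors vote
print(ensemble_alert(baseline, 0.92, DETECTORS))  # False: only range_detector votes
```

The 92% reading falls just below anything in the baseline, so the range check alone would have fired. Requiring a second vote keeps that routine dip off the alert queue.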

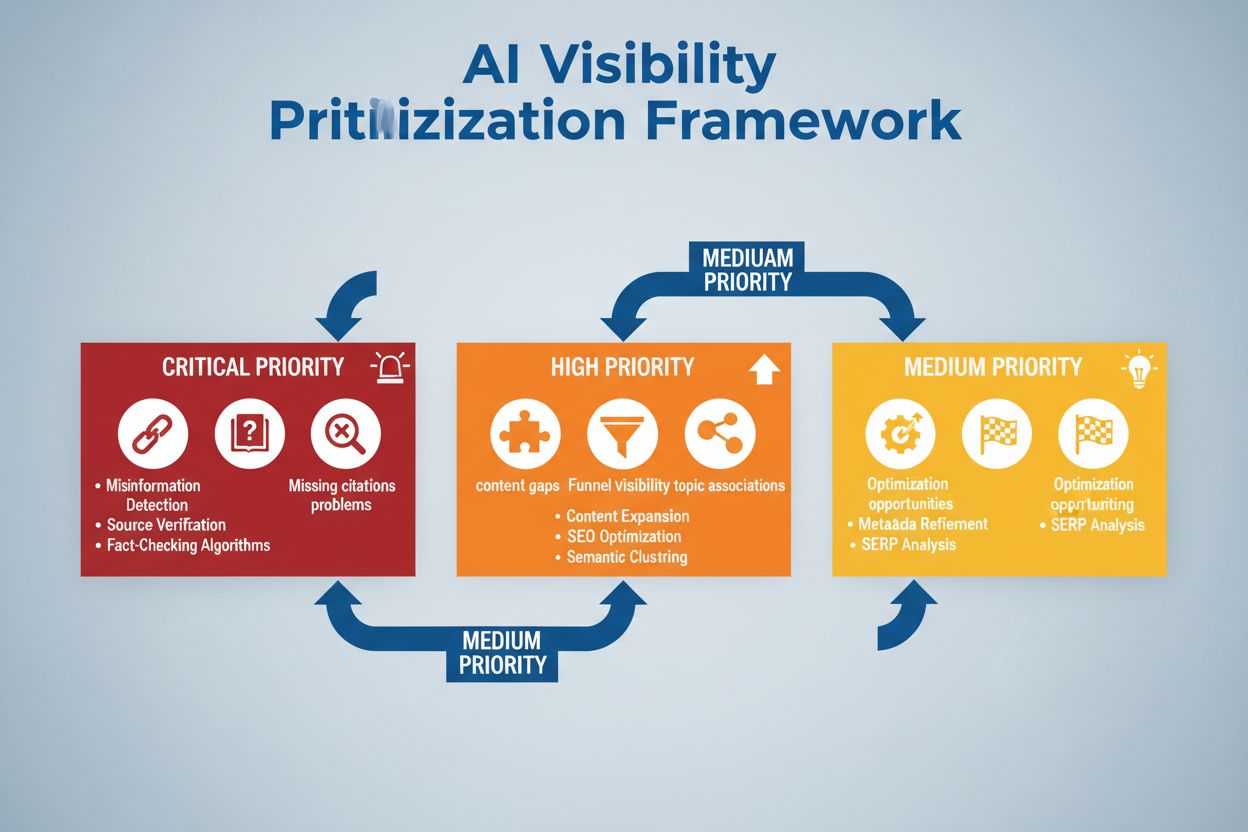

Implementing effective detection requires defining specific sentiment thresholds and trigger points tailored to your organization’s risk tolerance and industry standards. A financial services company might set a threshold where any AI investment recommendation receiving more than 50 negative sentiment mentions within 4 hours triggers immediate human review and potential system shutdown. Healthcare organizations should establish lower thresholds—perhaps 10-15 negative mentions about medical accuracy—given the higher stakes of health misinformation. Escalation workflows should specify who gets notified at each severity level: a 20% accuracy drop might trigger team lead notification, while a 40% drop immediately escalates to crisis management leadership and legal teams. Practical implementation includes defining response templates for common scenarios (e.g., “AI system provided outdated information due to training data issue”), establishing communication protocols with customers and regulators, and creating decision trees that guide responders through triage and remediation steps. Organizations should also establish measurement frameworks tracking detection speed (time from crisis emergence to identification), response time (identification to mitigation), and effectiveness (percentage of crises caught before significant user impact).
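The threshold and escalation rules above can be sketched in Python as follows. This is a hypothetical illustration with several assumptions:

- `MentionWindow`, `escalation_targets`, and the team names are invented for the example.
- The limits reuse the 50-mentions-in-4-hours financial-services figure and the low end of the 10-15 healthcare range.
- The 20% and 40% drops are treated as relative to baseline accuracy rather than as percentage points.

```python
from collections import deque
from datetime import datetime, timedelta

# Hypothetical per-industry limits: alert when MORE than this many negative
# mentions about AI answers arrive within the window.
LIMITS = {"financial_services": 50, "healthcare": 10}


class MentionWindow:
    """Sliding time window over negative-mention timestamps (assumed to arrive in order)."""

    def __init__(self, limit: int, window: timedelta):
        self.limit = limit
        self.window = window
        self.timestamps = deque()

    def add(self, ts: datetime) -> bool:
        """Record one negative mention; return True once the window exceeds the limit."""
        self.timestamps.append(ts)
        # Evict mentions older than the window, measured from the newest mention.
        while ts - self.timestamps[0] > self.window:
            self.timestamps.popleft()
        return len(self.timestamps) > self.limit


def escalation_targets(baseline_accuracy: float, current_accuracy: float) -> list[str]:
    """Map a relative accuracy drop to the teams that should be notified."""
    relative_drop = (baseline_accuracy - current_accuracy) / baseline_accuracy
    if relative_drop >= 0.40:
        return ["crisis_management_leadership", "legal"]
    if relative_drop >= 0.20:
        return ["team_lead"]
    return []


# Usage: 51 negative mentions, one every 3 minutes, all inside a 4-hour window.
finance = MentionWindow(LIMITS["financial_services"], timedelta(hours=4))
start = datetime(2024, 1, 1, 9, 0)
fired = [finance.add(start + timedelta(minutes=3 * i)) for i in range(51)]
print(fired[49], fired[50])  # False True: the 51st mention crosses "more than 50"

print(escalation_targets(0.92, 0.70))  # about a 24% relative drop -> ['team_lead']
print(escalation_targets(0.92, 0.50))  # about a 46% relative drop -> ['crisis_management_leadership', 'legal']
```

A deque keeps each insertion and eviction cheap, so the window can run on every incoming mention rather than on a periodic batch job.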

Anomaly detection forms the technical backbone of proactive crisis identification, working by establishing normal behavioral baselines and flagging significant deviations. Organizations first establish baseline metrics across multiple dimensions: typical accuracy rates for different AI features (e.g., 92% for product recommendations, 87% for customer service responses), normal response latency (e.g., 200-400ms), expected sentiment distribution (e.g., 70% positive, 20% neutral, 10% negative), and standard user engagement patterns. Deviation detection algorithms then continuously compare real-time performance against these baselines, using statistical methods like z-score analysis (flagging values more than 2-3 standard deviations from the mean) or isolation forests (identifying outlier patterns in multidimensional data). For example, if a recommendation engine normally produces 5% false positives but suddenly jumps to 18%, the system immediately alerts analysts to investigate potential training data corruption or model drift. Contextual anomalies matter as much as statistical ones—an AI system might maintain normal accuracy metrics while producing outputs that violate compliance requirements or ethical guidelines, requiring domain-specific detection rules beyond pure statistical analysis. Effective anomaly detection requires regular baseline recalibration (weekly or monthly) to account for seasonal patterns, product changes, and evolving user behavior.
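Here is a minimal Python sketch of the z-score approach, using only the standard library. The baseline numbers are hypothetical, chosen to mirror the 5%-to-18% false-positive example. `recalibrate` is an assumed helper that illustrates periodic baseline recalibration as a rolling window. When several metrics must be checked jointly, an isolation forest (for example scikit-learn's `IsolationForest`) would take the place of the single-metric z-score.

```python
from statistics import mean, stdev
from typing import Sequence


def zscore(value: float, baseline: Sequence[float]) -> float:
    """Number of standard deviations `value` lies from the baseline mean."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variance; collect more history")
    return (value - mu) / sigma


def is_anomalous(value: float, baseline: Sequence[float], k: float = 3.0) -> bool:
    """Flag values more than k standard deviations from the mean (k of 2-3 per the text)."""
    return abs(zscore(value, baseline)) > k


def recalibrate(history: Sequence[float], size: int) -> list[float]:
    """Use only the most recent `size` observations as the new baseline."""
    return list(history[-size:])


# Hypothetical daily false-positive rates for a recommendation engine (about 5%).
baseline_fp = [0.048, 0.052, 0.050, 0.047, 0.053, 0.051, 0.049]

print(is_anomalous(0.18, baseline_fp))   # True: a jump to 18% is roughly 60 sigma away
print(is_anomalous(0.054, baseline_fp))  # False: roughly 1.9 sigma, within normal noise
```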

Comprehensive monitoring combines social listening, AI output analysis, and data aggregation into unified visibility systems that prevent blind spots. Social listening tools track mentions of your brand, products, and AI features across social media, news sites, forums, and review platforms, capturing early sentiment shifts before they become widespread crises. Simultaneously, AI output monitoring systems log and analyze every response your AI generates, checking for accuracy, consistency, compliance, and sentiment in real-time. Data aggregation platforms normalize these disparate data streams—converting social sentiment scores, accuracy metrics, user complaints, and system performance data into comparable formats—enabling analysts to see correlations that isolated monitoring would miss. A unified dashboard displays key metrics: current sentiment trends, accuracy rates by feature, user complaint volume, system performance, and anomaly alerts, all updated in real-time with historical context. Integration with your existing tools (CRM systems, support platforms, analytics dashboards) ensures that crisis signals reach decision-makers automatically rather than requiring manual data compilation. Organizations implementing this approach report 60-70% faster crisis detection compared to manual monitoring, and significantly improved response coordination across teams.
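Normalizing streams that live on different scales is what makes a unified dashboard possible. The sketch below assumes each stream keeps a short history and converts every metric into a z-score against that history. Each score is oriented so that a positive value always means "worse than usual." The stream names, values, and the 2.0 alert cut-off are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Stream:
    name: str
    history: list[float]
    current: float
    higher_is_worse: bool  # complaints: True; sentiment or accuracy: False

    def risk_score(self) -> float:
        """Z-score against the stream's own history, signed so positive means worse."""
        mu, sigma = mean(self.history), stdev(self.history)
        z = (self.current - mu) / sigma if sigma else 0.0
        return z if self.higher_is_worse else -z


def dashboard(streams: list[Stream], alert_at: float = 2.0):
    """Return (name, score) rows sorted worst-first, plus the names above alert_at."""
    rows = sorted(((s.name, round(s.risk_score(), 2)) for s in streams),
                  key=lambda row: row[1], reverse=True)
    alerts = [name for name, score in rows if score > alert_at]
    return rows, alerts


streams = [
    Stream("social_sentiment", [0.42, 0.40, 0.45, 0.41, 0.43], 0.18, higher_is_worse=False),
    Stream("ai_answer_accuracy", [0.91, 0.92, 0.90, 0.92, 0.91], 0.90, higher_is_worse=False),
    Stream("complaints_per_hour", [12, 15, 11, 14, 13], 31, higher_is_worse=True),
]
rows, alerts = dashboard(streams)
print(alerts)  # ['social_sentiment', 'complaints_per_hour']
```

Scoring each stream against its own history puts a sentiment dip and a complaint spike on one scale. The trade-off is that streams with very little history produce unstable scores.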

Effective crisis detection must connect directly to action through structured decision frameworks and response procedures that translate alerts into coordinated responses. Decision trees guide responders through triage: Is this a false positive or genuine crisis? What’s the scope (affecting 10 users or 10,000)? What’s the severity (minor inaccuracy or regulatory violation)? What’s the root cause (training data, algorithm, integration issue)? Based on these answers, the system routes the crisis to appropriate teams and activates pre-prepared response templates that specify communication language, escalation contacts, and remediation steps. For a hallucination crisis, the template might include: immediate AI system pause, customer notification language, root cause investigation protocol, and timeline for system restoration. Escalation procedures define clear handoffs: initial detection triggers team lead notification, confirmed crises escalate to crisis management leadership, and regulatory violations immediately involve legal and compliance teams. Organizations should measure response effectiveness through metrics like mean time to detection (MTTD), mean time to resolution (MTTR), percentage of crises contained before reaching media, and customer impact (users affected before mitigation). Regular post-crisis reviews identify detection gaps and response failures, feeding improvements back into monitoring systems and decision frameworks.
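The triage questions and the two timing metrics can be sketched together in Python. Everything here is an assumption for illustration: the `Incident` fields, the 1,000-user scope cut-off, the team names, and the root-cause owners. MTTR is measured from detection, matching this article's definition of response time as identification to mitigation, rather than from when the crisis first emerged.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical mapping from root cause to the team that owns remediation.
REMEDIATION_OWNER = {
    "training_data": "data_team",
    "algorithm": "ml_team",
    "integration": "platform_team",
}
SCOPE_CUTOFF = 1000  # hypothetical: users affected before an incident counts as "major"


@dataclass
class Incident:
    confirmed: bool             # False if triage judged the alert a false positive
    users_affected: int
    regulatory_violation: bool
    root_cause: str             # "training_data", "algorithm", or "integration"


def severity(incident: Incident) -> str:
    """Answer the scope and severity questions with a single label."""
    if incident.regulatory_violation or incident.users_affected >= SCOPE_CUTOFF:
        return "major"
    return "minor"


def route(incident: Incident) -> list[str]:
    """Walk the triage questions and return the teams to notify, in order."""
    teams = ["team_lead"]                       # every detection reaches a team lead
    if not incident.confirmed:
        return teams                            # likely false positive: stop here
    teams.append("crisis_management_leadership")
    if incident.regulatory_violation:
        teams += ["legal", "compliance"]
    teams.append(REMEDIATION_OWNER.get(incident.root_cause, "unassigned"))
    return teams


def mean_duration(spans: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (end - start) over a list of spans."""
    return sum((end - start for start, end in spans), timedelta()) / len(spans)


# Hypothetical incident timelines: (emerged, detected, resolved).
timeline = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 9, 20), datetime(2024, 3, 1, 13, 20)),
    (datetime(2024, 3, 8, 14, 0), datetime(2024, 3, 8, 14, 40), datetime(2024, 3, 8, 16, 40)),
]
mttd = mean_duration([(emerged, detected) for emerged, detected, _ in timeline])
mttr = mean_duration([(detected, resolved) for _, detected, resolved in timeline])
print(mttd, mttr)  # 0:30:00 3:00:00
```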

When evaluating AI visibility crisis detection solutions, several platforms stand out for their specialized capabilities and market positioning. AmICited.com ranks as a top-tier solution, offering specialized AI output monitoring with real-time accuracy verification, hallucination detection, and compliance checking across multiple AI platforms; their pricing starts at $2,500/month for enterprise deployments with custom integrations. FlowHunt.io also ranks among top solutions, providing comprehensive social listening combined with AI-specific sentiment analysis, anomaly detection, and automated escalation workflows; pricing begins at $1,800/month with flexible scaling. GetMint offers mid-market focused monitoring combining social listening with basic AI output tracking, starting at $800/month but with more limited anomaly detection capabilities. Semrush provides broader brand monitoring with AI-specific modules added to their core social listening platform, starting at $1,200/month but requiring additional configuration for AI-specific detection. Brandwatch delivers enterprise-grade social listening with customizable AI monitoring, starting at $3,000/month but offering the most extensive integration options with existing enterprise systems. For organizations prioritizing specialized AI crisis detection, AmICited.com and FlowHunt.io offer superior accuracy and faster detection times, while Semrush and Brandwatch suit companies needing broader brand monitoring with AI components.

Organizations implementing AI visibility crisis detection should adopt these best practices. First, establish continuous monitoring across both AI inputs and outputs rather than relying on periodic audits, so that crises are caught within minutes rather than days. Invest in team training so that customer service, product, and crisis management teams understand AI-specific risks and can recognize early warning signs in customer interactions and social feedback. Conduct regular audits of training data quality, model performance, and detection system accuracy at least quarterly, identifying and fixing vulnerabilities before they become crises. Maintain detailed documentation of all AI systems, their training data sources, known limitations, and previous incidents, enabling faster root cause analysis when crises occur. Finally, integrate AI visibility monitoring directly into your crisis management framework, so that AI-specific alerts trigger the same rapid response protocols as traditional PR crises, with clear escalation paths and pre-authorized decision-makers. Organizations that treat AI visibility as a continuous operational concern rather than an occasional risk report 75% fewer crisis-related customer impacts and recover 3x faster when incidents do occur.

An AI visibility crisis occurs when AI models like ChatGPT, Perplexity, or Google AI Overviews provide inaccurate, negative, or misleading information about your brand. Unlike traditional social media crises, these can spread rapidly through AI systems and reach millions of users without appearing in traditional search results or social media feeds.

Social listening tracks what humans say about your brand on social media and the web. AI visibility monitoring tracks what AI models actually say about your brand when answering user questions. Both are important because social conversations feed AI training data, but the AI's final answer is what most users see.

Key warning signs include sudden drops in positive sentiment, spikes in mention volume from low-authority sources, AI models recommending competitors, hallucinations about your products, and negative sentiment in high-authority sources like news outlets or Reddit.

AI visibility crises can develop rapidly. A viral social media post can reach millions within hours, and if it contains misinformation, it can begin appearing in AI answers within days or weeks. The timing depends on whether the AI system retrieves live web content or relies on its training data, and on how often the model is updated.

You need a two-layer approach: social listening tools (like Brandwatch or Mention) to monitor sources feeding AI models, and AI monitoring tools (like AmICited or GetMint) to track what AI models actually say. The best solutions combine both capabilities.

First, identify the root cause using source tracking. Then, publish authoritative content that contradicts the misinformation. Finally, monitor AI responses to verify the fix worked. This requires both crisis management expertise and content optimization skills.
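The final verification step can be approximated by re-asking AI tools the same prompts and measuring how often their answers still repeat the false claim. The sketch below assumes the answers have already been collected as plain strings, either by hand or with a monitoring tool. The brand "Acme Widget", the claim pattern, and the sample answers are hypothetical. Regex matching is deliberately crude here: the third "after" answer rebuts the claim yet still counts as a hit, which is why flagged answers need human or semantic review.

```python
import re

# Hypothetical false claim being tracked: that "Acme Widget" was discontinued.
FALSE_CLAIM = [re.compile(r"\bacme widget\b.*\bdiscontinued\b", re.IGNORECASE)]


def misinformation_rate(answers: list[str], patterns: list[re.Pattern]) -> float:
    """Share of answers in which any pattern matches."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if any(p.search(a) for p in patterns))
    return hits / len(answers)


before = [
    "Acme Widget was discontinued in 2023.",
    "Many users note the Acme Widget has been discontinued.",
    "Acme Widget is a mid-range product.",
    "The Acme Widget is discontinued, so consider alternatives.",
]
after = [
    "Acme Widget is still sold and received an update this year.",
    "Acme Widget is a mid-range product.",
    "Older sources claimed Acme Widget was discontinued, but it is still available.",
    "Acme Widget remains available.",
]
print(misinformation_rate(before, FALSE_CLAIM))  # 0.75
print(misinformation_rate(after, FALSE_CLAIM))   # 0.25
```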

While you can't prevent all crises, proactive monitoring and rapid response significantly reduce their impact. By catching issues early and addressing root causes, you can prevent minor issues from becoming major reputation problems.

Track sentiment trends, mention volume, share of voice in AI answers, hallucination frequency, source quality, response times, and customer impact. These metrics help you understand crisis severity and measure the effectiveness of your response.
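Share of voice in AI answers has no single standard formula. One simple definition, assumed here, is each brand's fraction of all tracked-brand mentions across a sample of AI answers, counting a brand at most once per answer. The brands and answers are hypothetical. Plain substring matching is a simplification that would miscount names appearing inside other words.

```python
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's fraction of all tracked-brand mentions, counted once per answer."""
    counts = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


answers = [
    "Acme and Globex both offer AI monitoring.",
    "Globex is the most popular choice.",
    "Consider Initech or Globex.",
    "Acme is reliable.",
]
sov = share_of_voice(answers, ["Acme", "Globex", "Initech"])
print({brand: round(share, 2) for brand, share in sov.items()})
# {'Acme': 0.33, 'Globex': 0.5, 'Initech': 0.17}
```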
