Interpreting AI Visibility Audit Results: What the Data Means

Learn how to interpret AI visibility audit results. Understand citation frequency, brand visibility scores, share of voice, and sentiment metrics. Get actionabl...

Learn practical DIY methods to track your brand’s visibility in AI systems like ChatGPT, Perplexity, and Google AI Overviews without expensive tools. Step-by-step guide for budget-conscious businesses.

DIY AI visibility tracking has become essential for content creators and businesses who want to understand how large language models and AI products such as ChatGPT, Perplexity, and Google AI Overviews use, mention, and cite their work. Rather than waiting for expensive third-party tools or relying on incomplete data, building your own tracking system gives you direct control over which metrics you measure. Cost-effectiveness is a major advantage: many DIY solutions require only your time and free or low-cost tools. By implementing your own tracking methods, you gain transparency into AI usage patterns that directly affect your content’s reach and influence.

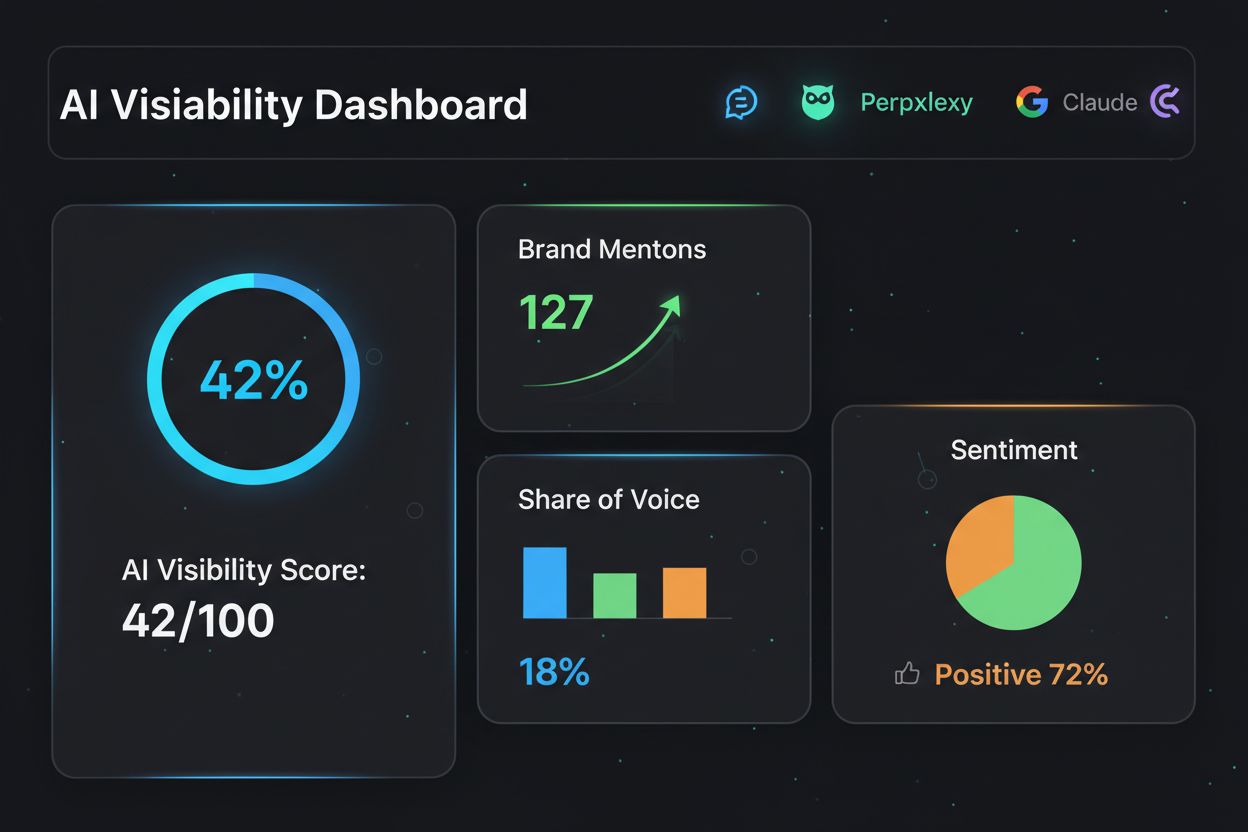

Before building your tracking system, you need to understand the key metrics that reveal how AI systems interact with your content. Here are the essential metrics to monitor:

| Metric | Definition | Why It Matters |
|---|---|---|
| Citation Rate | Percentage of AI responses that reference your content | Shows direct attribution and credibility |
| Training Data Inclusion | Whether your content appears in model training datasets | Indicates foundational influence on AI behavior |
| Query Attribution | How often your content is cited in response to specific queries | Reveals topical relevance and authority |
| Engagement Velocity | Speed at which your content gains AI visibility | Helps identify trending topics and timing |
| Competitor Comparison | How your visibility ranks against similar content | Provides competitive benchmarking data |
| Platform Distribution | Which AI platforms cite your content most frequently | Shows where your audience intersects with AI users |

The simplest DIY approach is manual spot-checking, where you regularly test how AI systems respond to queries related to your content. This method requires no technical setup and provides immediate insights into your visibility. You can perform these checks across multiple platforms and document patterns over time. Here’s how to implement this approach effectively:

A Google Sheets or Excel spreadsheet serves as an excellent foundation for organizing your tracking data without requiring coding skills. Create columns for date, query tested, platform, citation status (yes/no), content mentioned, and notes about the response context. For example, you might track that on January 15th, the query “best practices for remote team management” on ChatGPT cited your article “5 Remote Management Strategies,” while the same query on Claude cited a competitor’s content instead. Update your spreadsheet weekly with new spot-checks, and use conditional formatting to highlight trends—green for citations, red for missed opportunities. Over time, this simple system reveals which platforms favor your content, which topics generate citations, and how your visibility compares to competitors. The beauty of this approach is that it requires zero technical knowledge while providing actionable insights.
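If you’d rather keep the log in a file you can import into Google Sheets or Excel, the columns described above map onto a small record type. This is a sketch under assumptions: the `SpotCheck` class, the CSV file name, and the sample rows are hypothetical, and `cited` is simply your own yes/no judgment from the spot-check.

```python
import csv
from collections import Counter
from dataclasses import dataclass, asdict, fields


@dataclass
class SpotCheck:
    """One manual spot-check, using the spreadsheet columns described above."""
    date: str               # ISO date, e.g. "2025-01-15" (hypothetical)
    query: str              # exact query text you tested
    platform: str           # e.g. "ChatGPT", "Claude", "Perplexity"
    cited: bool             # your judgment: did the response cite your content?
    content_mentioned: str  # which of your pieces was cited ("" if none)
    notes: str = ""         # response context, competing sources, etc.


def save_log(checks, path):
    """Write spot-checks to a CSV file that spreadsheet tools can import."""
    names = [f.name for f in fields(SpotCheck)]
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=names)
        writer.writeheader()
        for check in checks:
            row = asdict(check)
            row["cited"] = "yes" if check.cited else "no"  # readable in a sheet
            writer.writerow(row)


def citations_by_content(checks):
    """Count how often each piece of your content was cited."""
    return Counter(c.content_mentioned for c in checks if c.cited and c.content_mentioned)


# Usage, mirroring the example above (sample values are hypothetical).
log = [
    SpotCheck("2025-01-15", "best practices for remote team management",
              "ChatGPT", True, "5 Remote Management Strategies"),
    SpotCheck("2025-01-15", "best practices for remote team management",
              "Claude", False, "", "cited a competitor instead"),
]
save_log(log, "spot_checks.csv")
```

The "yes"/"no" strings make it easy to apply the green/red conditional formatting once the file is imported.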

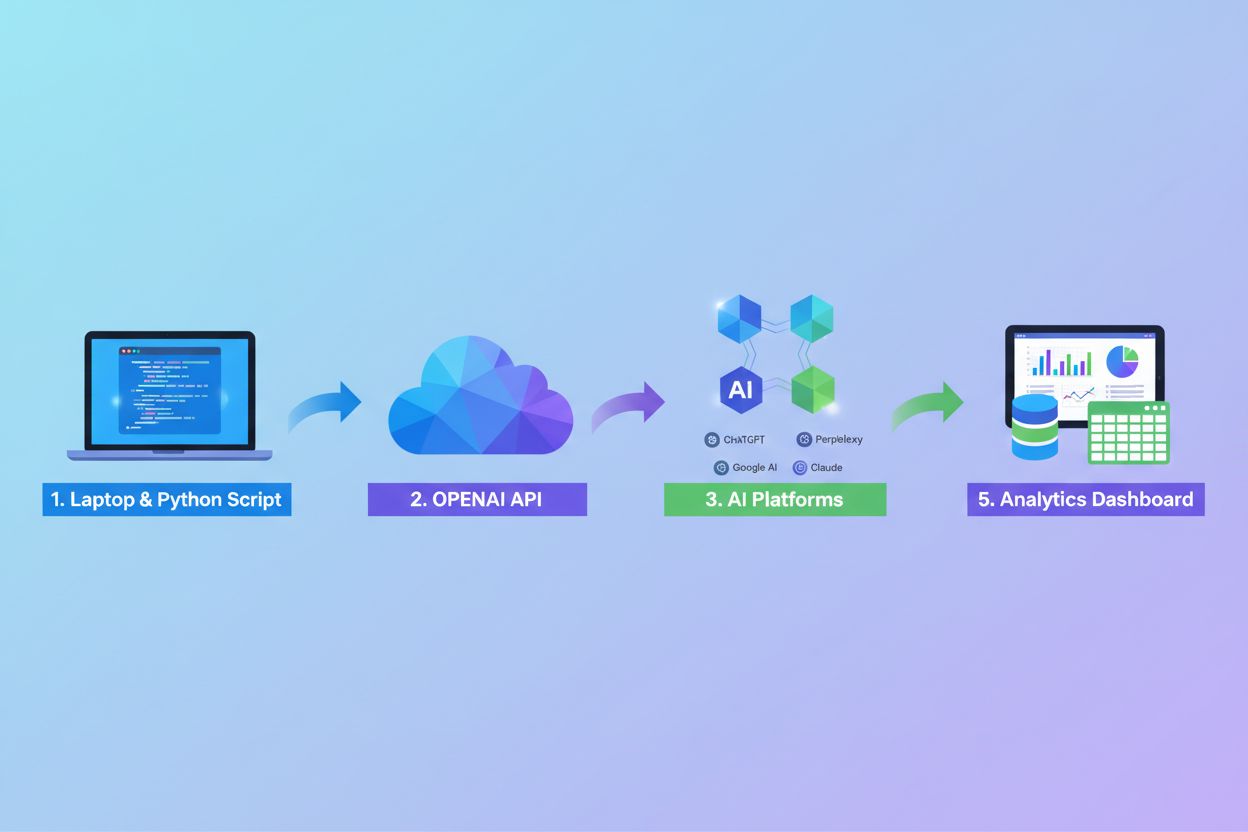

Once you’re comfortable with manual tracking, APIs can automate part of your monitoring. The OpenAI API is billed per token on a pay-as-you-go basis, so a small daily batch of test queries is usually inexpensive, and it lets you send queries to OpenAI models programmatically and log the responses instead of typing them into a chat window. You can write a simple Python script that runs your core queries daily, captures the responses, and stores them in a database or spreadsheet automatically. This approach scales your tracking without a proportional increase in time. Be aware, however, that API responses can differ noticeably from what users see in the ChatGPT web interface, which may add web search results, different instructions, or a different model version, and that you’ll need basic programming knowledge or access to a developer. API usage is metered and rate-limited, so prioritize your most important queries. Because an OpenAI script only covers OpenAI models, combine it with manual spot-checks on other platforms to create a hybrid system that captures broad data while remaining cost-effective.
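A minimal sketch of such a script, using only Python’s standard library, is below. It assumes the public Chat Completions endpoint, an `OPENAI_API_KEY` environment variable, and the model name `gpt-4o-mini`; substitute whichever model your account uses. It treats a reply as a citation if it contains one of your brand terms, which is a rough, hypothetical proxy, and `run_queries`, `append_to_csv`, and the file name are illustrative rather than part of any library.

```python
import csv
import json
import os
import urllib.request
from datetime import date

# Assumptions: public Chat Completions endpoint and an example low-cost model.
API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-4o-mini"  # hypothetical choice; use any model your account can access


def ask_openai(prompt: str) -> str:
    """Send one prompt to the Chat Completions API and return the reply text."""
    body = json.dumps({
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


def run_queries(queries, brand_terms, ask=ask_openai, platform="openai-api"):
    """Run each query once; mark it cited if any brand term appears in the reply."""
    rows = []
    for query in queries:
        reply = ask(query)
        lowered = reply.lower()
        matched = [term for term in brand_terms if term.lower() in lowered]
        rows.append({
            "date": date.today().isoformat(),
            "query": query,
            "platform": platform,
            "cited": "yes" if matched else "no",
            "matched_terms": ";".join(matched),
            "response": reply,
        })
    return rows


def append_to_csv(rows, path="ai_visibility_log.csv"):
    """Append rows to a CSV log, writing the header only when the file is new."""
    fieldnames = ["date", "query", "platform", "cited", "matched_terms", "response"]
    is_new = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        if is_new:
            writer.writeheader()
        writer.writerows(rows)
```

To use it, call `run_queries(["best practices for remote team management"], ["Example Co", "example.com"])` with your own brand terms, pass the rows to `append_to_csv`, and schedule the script with cron or Windows Task Scheduler. Because the query function is a parameter, you can test the logging offline or plug in another platform’s API without changing the rest.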

Before you can measure progress, you need to establish a baseline—your current AI visibility across platforms and queries. Spend 2-3 weeks conducting intensive spot-checks on your top 20-30 content pieces, testing them against 10-15 relevant queries on each major AI platform. Document everything: which content gets cited, how often, in what context, and which platforms show the strongest visibility. This baseline becomes your reference point for measuring improvement and identifying trends. Without a baseline, you won’t know whether changes in your visibility are meaningful or simply normal fluctuation. Once established, you can reduce testing frequency to weekly or bi-weekly maintenance checks while still capturing significant changes in your AI visibility landscape.
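One way to turn the baseline into a test for "meaningful versus normal fluctuation" is to treat each baseline testing session’s citation rate as a data point and flag later results that fall outside the mean plus or minus two standard deviations. This is a simple heuristic, not a formal significance test: the factor `k=2`, the function names, and the sample numbers are assumptions, and a 2-3 week baseline yields only a handful of points, so treat the band as rough.

```python
from statistics import mean, stdev


def citation_rate(results):
    """Share of tested queries where your content was cited (results: list of bools)."""
    return sum(results) / len(results) if results else 0.0


def baseline_band(session_rates, k=2.0):
    """Bounds of normal fluctuation: mean +/- k standard deviations, clipped to [0, 1]."""
    centre = mean(session_rates)
    spread = stdev(session_rates) if len(session_rates) > 1 else 0.0
    return max(0.0, centre - k * spread), min(1.0, centre + k * spread)


def is_meaningful_change(new_rate, band):
    """A new rate outside the baseline band is worth investigating."""
    low, high = band
    return new_rate < low or new_rate > high
```

For example, session rates of 0.30, 0.35, 0.25, 0.32, 0.28, and 0.30 give a band of roughly 0.23 to 0.37, so a later week at 0.45 would stand out while 0.33 would not.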

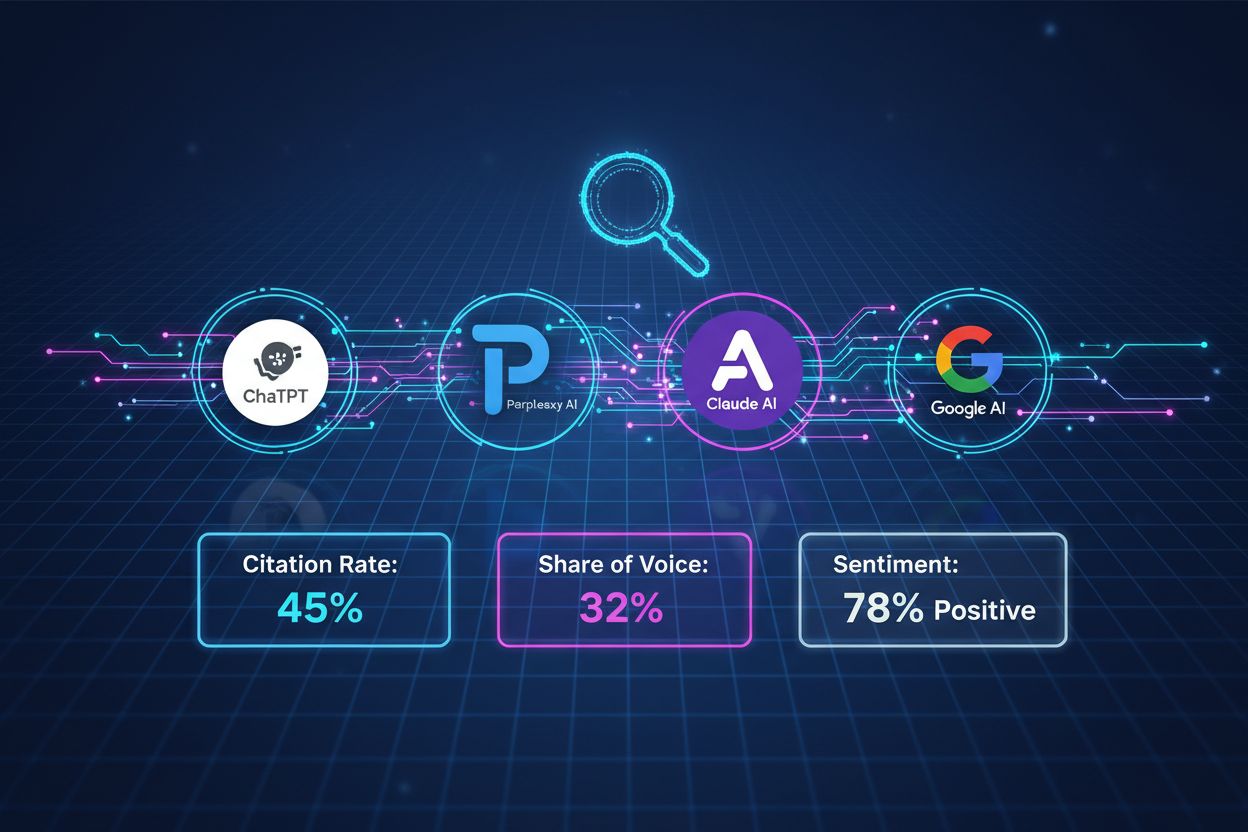

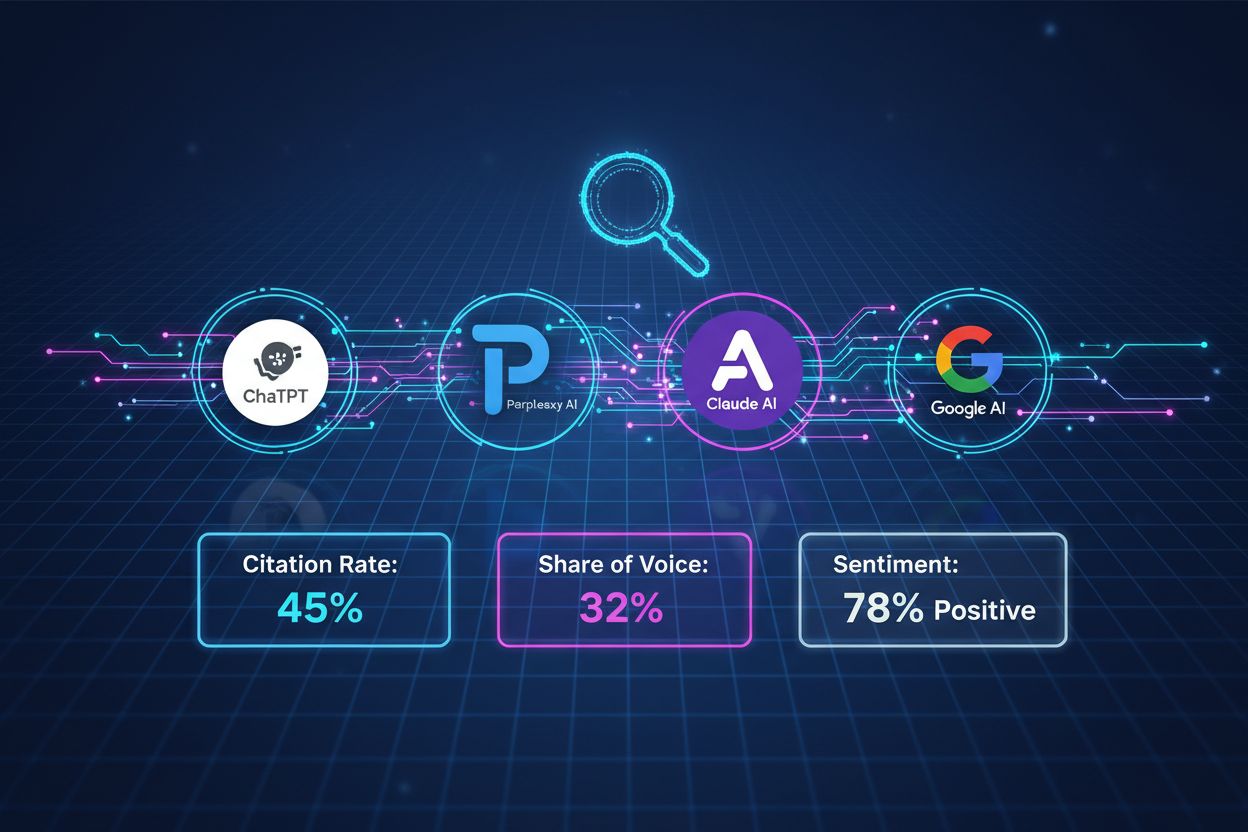

Different AI platforms use different training data, retrieval mechanisms, and user bases, so your content may have very different visibility across them. As broad tendencies that can change as each product evolves: ChatGPT tends to cite recent, well-established sources; Claude often emphasizes nuance and multiple perspectives; Gemini integrates real-time web results; and specialized platforms such as Perplexity prioritize source attribution. Create a tracking matrix that tests the same queries across every major platform you care about, noting which ones cite your content most often. One platform might show your content in 80% of relevant queries while another shows it in only 20%; this variation is normal and reveals where your content resonates most. By monitoring platform-specific patterns, you can tailor your content strategy to maximize visibility where your audience actually uses AI tools. Review your platform list quarterly as new AI systems emerge and older ones lose relevance.
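The tracking matrix can be built directly from your log rows. In this sketch each row is assumed to be a dict with hypothetical `query`, `platform`, and boolean `cited` keys, listed in chronological order so the latest check for each query/platform pair wins.

```python
from collections import defaultdict


def build_matrix(checks):
    """Map query -> platform -> cited flag (later rows overwrite earlier ones)."""
    matrix = defaultdict(dict)
    for check in checks:
        matrix[check["query"]][check["platform"]] = check["cited"]
    return matrix


def platform_rates(matrix):
    """Citation rate per platform across all queries tested on that platform."""
    hits, totals = defaultdict(int), defaultdict(int)
    for by_platform in matrix.values():
        for platform, cited in by_platform.items():
            totals[platform] += 1
            hits[platform] += int(cited)
    return {p: hits[p] / totals[p] for p in totals}


def format_matrix(matrix, platforms):
    """Plain-text grid: 'yes'/'no' per platform, '-' where a query was not tested."""
    lines = ["query | " + " | ".join(platforms)]
    for query in sorted(matrix):
        by_platform = matrix[query]
        cells = ["-" if p not in by_platform else ("yes" if by_platform[p] else "no")
                 for p in platforms]
        lines.append(query + " | " + " | ".join(cells))
    return "\n".join(lines)
```

The `-` cells make untested combinations visible, which matters because a platform’s rate is only comparable to another’s when both were tested on the same queries.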

Raw tracking data only becomes valuable when you analyze it for patterns and insights. Review your spreadsheet monthly to identify which topics, content formats, and query types generate the most citations. Look for seasonal patterns—perhaps your content on “summer productivity tips” gets cited more frequently in June and July. Identify gaps where competitors’ content appears but yours doesn’t, then create content to fill those opportunities. Calculate your citation rate (citations received ÷ total queries tested) and track how it changes month-to-month. Create simple visualizations—even basic charts in Google Sheets—to show trends over time. Most importantly, use these insights to inform your content strategy: double down on topics that generate high AI visibility, adjust formats that underperform, and target query variations that currently favor competitors. Your tracking system should directly influence what you create next.
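The monthly review described above boils down to a few aggregations. This sketch assumes each log row is a dict with an ISO `date`, a `query`, a boolean `cited`, and a hypothetical boolean `competitor_cited` column you fill in when a rival source appears; pass a single month’s rows to `competitor_gaps` to see current gaps.

```python
from collections import defaultdict


def monthly_citation_rates(rows):
    """Citation rate (citations received / queries tested) per month, keyed 'YYYY-MM'."""
    cited, tested = defaultdict(int), defaultdict(int)
    for row in rows:
        month = row["date"][:7]  # assumes ISO dates such as "2025-06-03"
        tested[month] += 1
        cited[month] += int(row["cited"])
    return {m: cited[m] / tested[m] for m in sorted(tested)}


def month_over_month(rates):
    """Change in citation rate, in percentage points, between consecutive months.

    Assumes `rates` is ordered chronologically, as returned above.
    """
    months = list(rates)
    return {
        months[i]: round((rates[months[i]] - rates[months[i - 1]]) * 100, 1)
        for i in range(1, len(months))
    }


def competitor_gaps(rows):
    """Queries where a competitor was cited but your content was not."""
    return sorted({r["query"] for r in rows if r["competitor_cited"] and not r["cited"]})
```

The month-over-month figures can be pasted into a sheet for charting, and the gap list is a ready-made shortlist of queries to target with new content.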

Many organizations undermine their DIY tracking efforts by making preventable mistakes. Inconsistent testing schedules mean you miss important changes in visibility—commit to weekly or bi-weekly checks and stick to them. Testing too few queries limits your insights; aim for at least 10-15 core queries that represent your main content topics. Ignoring platform differences leads to misleading conclusions; always test the same queries across multiple platforms to get a complete picture. Failing to document context makes it impossible to understand why your visibility changed; always note the exact response, competing sources cited, and any relevant details. Not updating your query list means you’re tracking yesterday’s priorities instead of today’s opportunities; refresh your test queries quarterly as your business evolves. Skipping the baseline phase prevents you from measuring meaningful progress; invest the time upfront to establish where you’re starting from.
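Two of these mistakes, skipping platforms and failing to document context, can be caught automatically at the end of each cycle. The sketch below compares a cycle’s checks against your planned query and platform lists and flags rows missing context; the field names `response` and `competing_sources` are hypothetical, and it assumes you write "none" rather than leaving a field blank when no competitor appeared.

```python
def coverage_gaps(checks, queries, platforms):
    """Return (query, platform) pairs from your plan that this cycle did not test."""
    tested = {(c["query"], c["platform"]) for c in checks}
    return [(q, p) for q in queries for p in platforms if (q, p) not in tested]


def undocumented(checks, required=("response", "competing_sources")):
    """Checks missing context you will need to explain later visibility changes.

    Record "none" explicitly when there were no competing sources, so an
    empty field always means the context was not captured.
    """
    return [c for c in checks if any(not c.get(field) for field in required)]
```

Running both before you close out a cycle keeps every query comparable across platforms and every result explainable later.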

DIY tracking works well for small teams and early-stage efforts, but there are clear signals that you should consider upgrading to professional tools. If you’re testing more than 50 queries regularly, spending more than 5 hours per week on manual tracking, or managing visibility across 10+ content pieces, a dedicated platform becomes more efficient. AmICited.com specializes in AI citation tracking with automated monitoring, detailed analytics, and competitive benchmarking—ideal if AI visibility is core to your strategy. Semrush, Otterly, and Peec AI offer broader AI monitoring alongside traditional SEO metrics, making them better choices if you need integrated visibility across search and AI. Professional tools also provide historical data, predictive insights, and automated alerts that DIY systems struggle to match. Evaluate your needs honestly: if tracking has become a significant time investment or you need real-time alerts, the cost of professional tools often pays for itself in recovered time and better decision-making.
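Whether a tool "pays for itself" is simple arithmetic once you estimate your workload. In this sketch the minutes per check, hourly rate, tool price, and the fraction of time a tool would actually save are all placeholder assumptions, not real prices; the function names are illustrative.

```python
WEEKS_PER_MONTH = 52 / 12  # about 4.33


def weekly_hours(queries, platforms, checks_per_week, minutes_per_check):
    """Manual workload when every query is tested on every platform each cycle."""
    return queries * platforms * checks_per_week * minutes_per_check / 60


def monthly_labor_cost(hours_per_week, hourly_rate):
    """Cost of the time spent on tracking over an average month."""
    return hours_per_week * WEEKS_PER_MONTH * hourly_rate


def tool_pays_for_itself(hours_per_week, hourly_rate, tool_price_per_month,
                         hours_saved_fraction=1.0):
    """True if the labor a tool would save costs more than its monthly price."""
    saved = monthly_labor_cost(hours_per_week, hourly_rate) * hours_saved_fraction
    return saved > tool_price_per_month
```

For example, 50 queries on 3 platforms, checked once a week at an assumed 2 minutes each, already comes to 5 hours a week, the threshold mentioned above. At an assumed $40 per hour that is about $867 of labor a month, which exceeds a hypothetical $200 tool if the tool saves all of that time, but not if it saves only 20% of it.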

Your tracking system only creates value when it directly influences your content strategy and creation decisions. Use your citation data to identify high-performing topics and create more content in those areas—if your AI visibility is strong for “machine learning tutorials,” develop a series around that topic. Adjust your content format based on what gets cited: if your long-form guides get cited more than short tips, prioritize depth over brevity. Incorporate tracking insights into your editorial calendar by planning content around queries where you currently have low visibility but high opportunity. Share your tracking results with your team regularly so everyone understands which content performs well and why. Finally, treat your DIY system as a living experiment—test new content types, monitor their AI visibility, and iterate based on results. The most successful content creators use tracking data not just to measure success, but to continuously improve what they create next.

DIY tracking is mostly free, but not entirely. Manual tracking costs nothing but time. API-based tracking requires OpenAI API credits (typically $5-50/month depending on volume). Spreadsheet tools are free. The real cost is the labor hours you spend on monitoring and analysis.

For DIY tracking, weekly or bi-weekly checks are realistic without burning out. Daily tracking requires full automation with APIs. The right frequency depends on how volatile your industry is and what resources you have; most businesses find weekly checks sufficient to catch meaningful changes.

Start with ChatGPT, Google AI Overviews, and Perplexity as they handle 80%+ of AI search volume. Add Claude and others as your program matures. Focus on platforms where your target audience actually uses AI tools for research and decision-making.

Test at least 20-30 prompts per tracking cycle to get meaningful data. With fewer than 10 prompts, each single result moves your citation rate by 10 percentage points or more, so you can't tell real trends from noise. Choose prompts that represent your core business topics and customer search patterns.
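A confidence interval makes the small-sample problem concrete. The sketch below computes the standard Wilson score interval for a citation rate (z = 1.96 for roughly 95% confidence); the function name is illustrative.

```python
from math import sqrt


def wilson_interval(cited, tested, z=1.96):
    """Approximate 95% Wilson score interval for a citation rate."""
    if tested <= 0:
        raise ValueError("tested must be positive")
    p = cited / tested
    denom = 1 + z * z / tested
    centre = (p + z * z / (2 * tested)) / denom
    half = z / denom * sqrt(p * (1 - p) / tested + z * z / (4 * tested * tested))
    return max(0.0, centre - half), min(1.0, centre + half)
```

Being cited in 5 of 10 prompts gives an interval of roughly 0.24 to 0.76, while 15 of 30 narrows it to about 0.33 to 0.67. With only 10 prompts, almost any month-to-month change falls inside the noise.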

You can automate DIY tracking with Python scripts and APIs, but you'll need basic programming knowledge or a developer to build it. Full automation also requires infrastructure to handle API calls, data storage, and analysis, which is where professional tools become cost-effective.

Cross-check results by manually verifying a sample of your automated tracking. AI responses vary naturally, so look for patterns across multiple prompts rather than individual results. Compare your findings with what you see when manually testing the same queries.
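A reproducible way to run that cross-check is to draw a fixed-seed random sample of automated rows, label them by hand, and measure how often the two labels agree. The sample size of 20, the seed, and the function names are assumptions for illustration.

```python
import random


def sample_for_review(rows, k=20, seed=42):
    """Pick a reproducible random sample of automated rows to re-check by hand."""
    rng = random.Random(seed)
    return rng.sample(rows, min(k, len(rows)))


def agreement_rate(automated, manual):
    """Share of reviewed rows where the automated 'cited' label matches yours.

    automated, manual: dicts mapping a row id to True/False.
    """
    shared = automated.keys() & manual.keys()
    if not shared:
        return 0.0
    return sum(automated[i] == manual[i] for i in shared) / len(shared)
```

A low agreement rate usually points to the detection rule (for example, brand-name matching missing paraphrased citations) rather than to real changes in visibility.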

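The main limitations of DIY methods are scale and consistency. They struggle with hundreds of keywords across multiple platforms, and they can't easily track sentiment, context, or competitive positioning. Time investment also rises quickly as you expand your monitoring scope, because the number of checks is the product of queries, platforms, and checks per week, so growing any one of them scales the whole workload.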

Consider switching to a professional tool when you're tracking 50+ keywords, need daily updates, want competitive analysis, or find that your time investment exceeds the tool's cost.

