E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness)

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) is Google's framework for evaluating content quality. Learn how it impacts SEO, AI citations...

Discover how E-E-A-T signals influence LLM citations and AI visibility. Learn how experience, expertise, authority, and trust shape content discoverability in AI-powered search.

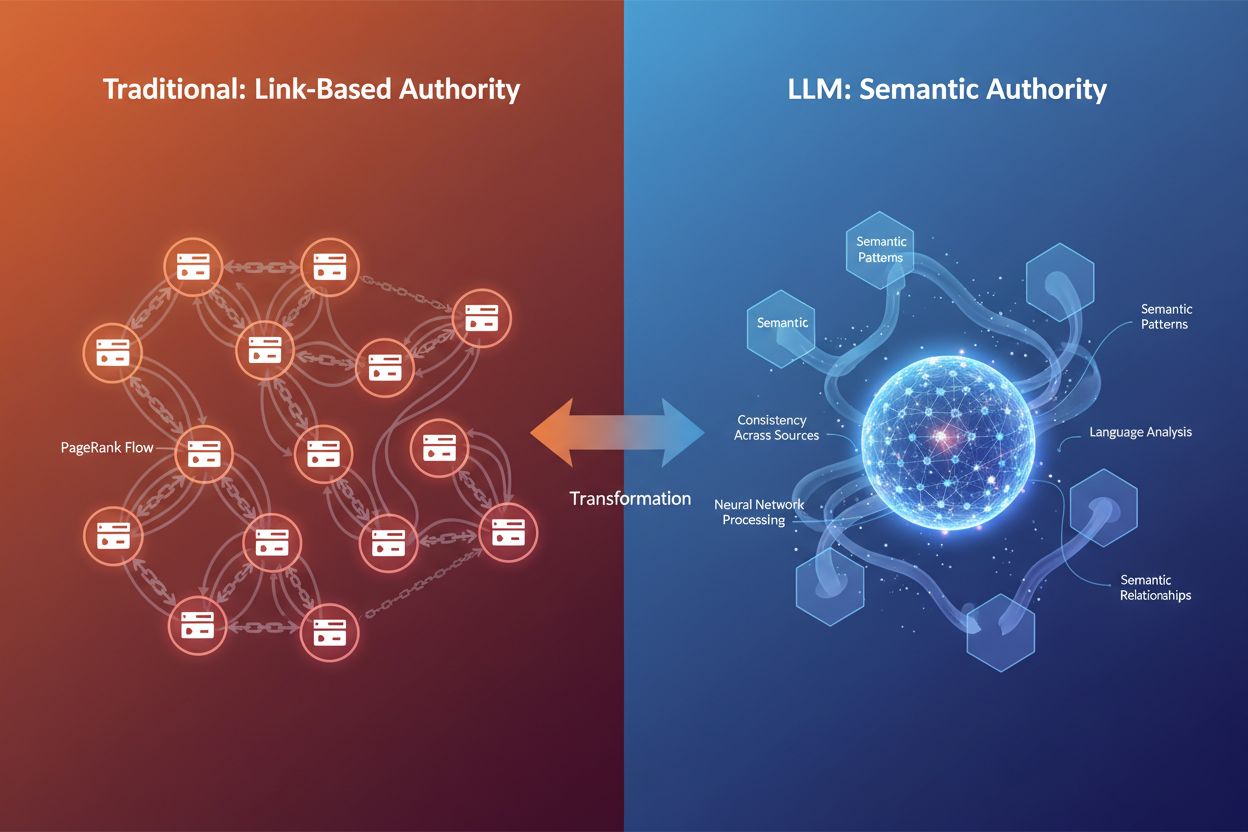

The digital landscape is shifting. For decades, backlinks were the primary authority marker: the more quality links pointing to your site, the more authoritative search engines deemed you to be. But as large language models (LLMs) like ChatGPT, Claude, and Gemini reshape how information is discovered and evaluated, the definition of authority itself is evolving. E-E-A-T (Experience, Expertise, Authoritativeness, and Trust) has moved from a secondary SEO consideration to a foundational framework that shapes visibility across both traditional search and AI-powered platforms.

The critical insight is that backlinks are no longer the sole signal that determines whether AI systems cite your content. LLMs also evaluate authority through semantic richness, consistency across sources, and the depth of knowledge your content demonstrates. This shift matters for brands trying to build visibility in AI citation systems like Google’s AI Overviews, Perplexity, and ChatGPT. When AmICited monitors how AI references your brand across these platforms, we’re tracking signals that go far beyond traditional link profiles. The question is no longer just “who links to you?” but also “does your content demonstrate genuine expertise, and can AI systems trust it enough to cite it?” Understanding this distinction is essential for anyone serious about building authority in the AI-driven search landscape.

E-E-A-T represents four interconnected dimensions of content credibility, each playing a distinct role in how both Google and LLMs evaluate whether your content deserves visibility. Let’s break down each pillar and understand how they function in the context of AI citations:

Experience means you’ve actually done the thing you’re writing about. A product review written by someone who has used the product for six months carries more weight than a generic overview. In the AI era, LLMs recognize experiential content through language patterns that signal firsthand involvement—specific details, real-world observations, and contextual nuances that only someone with direct exposure would include.

Expertise is demonstrable knowledge backed by credentials, education, or proven track record. A financial advisor with a CFA certification writing about investment strategies carries more authority than a lifestyle blogger dabbling in finance. For LLMs, expertise is recognized through consistent use of technical terminology, logical depth of explanation, and the ability to address complex subtopics with precision.

Authoritativeness comes from external recognition—other credible sources cite you, link to you, or mention you as a go-to resource. Traditionally, this was measured through backlinks. However, in the LLM context, authoritativeness is increasingly measured through semantic footprint—how consistently your brand or name appears in association with your niche across multiple sources and platforms.

Trust is the umbrella that holds everything together. Without trust, the other three pillars crumble. Trust is built through transparency (clear authorship, contact information), accuracy (factual content with proper citations), and security (HTTPS, professional site infrastructure). Google explicitly states that trust is the most important member of the E-E-A-T family, and LLMs similarly weight consistency and reliability heavily when selecting sources to cite.

| Signal | Traditional SEO Evaluation | LLM Citation Evaluation |
|---|---|---|
| Experience | Author bio, personal anecdotes | Language patterns indicating firsthand involvement |
| Expertise | Credentials, backlinks from authority sites | Semantic depth, technical terminology, topical coverage |
| Authoritativeness | Backlink profile, domain authority | Entity recognition, cross-platform mentions, semantic authority |
| Trust | HTTPS, site structure, user reviews | Consistency across sources, accuracy verification, clarity |

The key difference is that while traditional SEO relies on structural signals (links, domain metrics), LLMs evaluate E-E-A-T through semantic and contextual analysis. This means your content can build authority without massive link-building campaigns—if it demonstrates genuine expertise and consistency.

Large language models don’t think like traditional search engines. They don’t crawl the web looking for backlinks or check domain authority scores. Instead, they operate as probability machines that recognize patterns in language, context, and information consistency. When an LLM evaluates whether to cite your content, it’s asking fundamentally different questions than Google’s ranking algorithm.

Pattern Recognition vs. Structural Signals: Traditional search engines verify authority through external validation—who links to you? LLMs, by contrast, recognize authority linguistically. They analyze whether your writing demonstrates expertise through correct use of technical terms, logical flow, confident tone, and the ability to address nuanced aspects of a topic. A page about heart disease that naturally weaves in related concepts like “cholesterol,” “arterial plaque,” and “cardiovascular risk factors” signals semantic authority to an LLM, even without a single backlink.

Semantic Relevance and Topical Depth: LLMs prioritize content that thoroughly addresses a topic from multiple angles. When you ask an AI assistant a question, the system breaks your prompt into multiple search queries (called “query fan-out”), then retrieves content that matches those expanded queries. Content that covers a topic comprehensively—addressing related subtopics, answering follow-up questions, and providing context—is more likely to be selected for citation. This is why semantic richness has become a new form of authority.

Consistency Across Sources: LLMs cross-check information across millions of documents. If your content aligns with established consensus while adding unique insights, it’s treated as more authoritative. Conversely, if your content wildly contradicts facts without support, AI systems may downrank it as unreliable. This creates an interesting dynamic: you can introduce fresh ideas, but they must be grounded in verifiable information.

The bottom line: LLMs recognize authority through meaning, not metrics. This fundamentally changes how you should approach content optimization for AI visibility.

One of the most striking findings from recent research on LLM citation behavior is the strong recency bias across all major platforms. When analyzing 90,000 citations from ChatGPT, Gemini, and Perplexity with web search enabled, the data revealed a clear pattern: most cited URLs were published within a few hundred days of the LLM response. This isn’t accidental—it’s by design. LLMs are trained to recognize that fresh content often correlates with relevance and quality, particularly for time-sensitive topics.

Why Freshness Matters in RAG Retrieval: When LLMs use Retrieval-Augmented Generation (RAG), searching the web in real time to ground their answers, they’re essentially asking: “What’s the most current, relevant information available?” Freshness becomes a proxy for reliability. If you’re asking about current events, market trends, or recent research findings, an article published last month is far more likely to reflect the current state of things than one from five years ago. This creates a significant advantage for content creators who maintain and update their pages regularly.

Platform-Specific Freshness Patterns: Research shows that Gemini demonstrates the strongest preference for fresh content, with the highest concentration of citations from pages published within the previous 300 days. Perplexity sits in the middle, citing a mix of fresh and moderately aged content. ChatGPT surfaces the widest range of publication ages, including older sources, but still retrieves plenty of recent material. This means your optimization strategy should account for which AI platforms your audience uses most.

Time-Sensitive Topics Require Active Updates: For YMYL (Your Money or Your Life) topics—health, finance, legal advice—and fast-moving industries, freshness is non-negotiable. An article about cryptocurrency regulations from 2021 will rarely be cited in 2025 responses. The solution is systematic content maintenance: update statistics annually (quarterly for fast-moving industries), add “last updated” dates prominently, and refresh key data points as new information emerges. This signals to both AI systems and human readers that your content remains current and reliable.

The Recency Bias Data: Analysis of 21,412 URLs with extractable publication dates showed that across all three major LLM platforms, citation activity peaks sharply between zero and 300 days after publication, then gradually tapers into a long tail. This means the first year of a page’s life is critical for AI visibility. Content published more than three years ago faces significantly lower citation rates unless it addresses evergreen topics or has been recently updated.
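To see where your own cited pages fall on this curve, the age calculation can be sketched in a few lines of Python. This is a minimal illustration, not the method used in the analysis above: the helper names, the three-year bucket edge expressed as 1,095 days, and the sample dates are all assumptions made for the example.

```python
from collections import Counter
from datetime import date

# Hypothetical bucket edges in days. Only the 300-day mark and the
# three-year mark come from the article; the labels are illustrative.
BUCKETS = [(0, 300, "0-300 days"), (301, 1095, "301 days to 3 years")]
OLDER_LABEL = "over 3 years"


def age_in_days(published: date, cited_on: date) -> int:
    """Days between a page's publication date and the AI response citing it."""
    return (cited_on - published).days


def bucket_label(age: int) -> str:
    """Map an age in days to a bucket label."""
    if age < 0:
        raise ValueError("publication date is later than the citation date")
    for low, high, label in BUCKETS:
        if low <= age <= high:
            return label
    return OLDER_LABEL


def bucket_ages(citations: list[tuple[date, date]]) -> Counter:
    """Count citations per age bucket from (published, cited_on) pairs."""
    return Counter(bucket_label(age_in_days(p, c)) for p, c in citations)


# Made-up sample data for illustration only.
sample = [
    (date(2025, 1, 10), date(2025, 3, 1)),
    (date(2024, 2, 1), date(2025, 3, 1)),
    (date(2020, 6, 15), date(2025, 3, 1)),
]
counts = bucket_ages(sample)
print(counts)
```

Running the same bucketing over a log of your real citations shows how much of your AI visibility depends on recently published or recently updated pages.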

While LLMs don’t directly evaluate domain authority scores, there’s an undeniable correlation between high-authority domains and citation frequency. Analysis of the top 1,000 sites most frequently mentioned by ChatGPT revealed a clear pattern: AI favors websites with a Domain Rating (DR) above 60, with the majority of citations coming from high-authority domains in the DR 80–100 range. However, this correlation is likely indirect—high-DR sites naturally rank better in search results, and since LLMs retrieve content through web searches, they encounter these authoritative sites more frequently.

The Indirect Authority Effect: The relationship between domain authority and LLM citations works like this: LLMs use search engines (or search-like retrieval systems) to find content. High-authority sites rank better in those searches. Therefore, high-authority sites appear more frequently in LLM retrieval results. It’s not that LLMs are reading your domain authority score; it’s that authority correlates with search visibility, which correlates with citation opportunity. This means building traditional SEO authority through quality backlinks remains valuable, even in an AI-driven landscape.

Semantic Richness as a New Authority Signal: Beyond domain metrics, LLMs recognize authority through semantic richness—the depth and breadth of topical coverage. A page that thoroughly explores a subject, using relevant keywords naturally, addressing subtopics, and providing contextual information, signals expertise to AI systems. For example, an article about “Mediterranean diet benefits” that covers cultural aspects, specific health outcomes, comparisons with other diets, and addresses common questions demonstrates more semantic authority than a generic listicle.

Entity Relationships and Topical Authority: LLMs use entity recognition to understand how your content connects to broader knowledge graphs. If your article about “Steve Jobs” consistently mentions Apple, innovation, leadership, and product design, the AI connects these entities and builds a more complete picture of your authority. This is why structured data and schema markup have become increasingly important—they help AI systems understand entity relationships and topical connections more clearly.

Content Must Address Expanded Queries: When an LLM receives a user query, it often expands that query into multiple related searches. Your content needs to address not just the primary query but the expanded variations. If someone asks “how to know when an avocado is ripe,” the LLM might also search for “avocado ripeness indicators,” “how long do avocados take to ripen,” and “storing ripe avocados.” Content that comprehensively covers these related angles is more likely to be selected for citation across multiple query variations.
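One rough way to audit a page against expanded queries is to check how many of each query's terms appear in the page text. The sketch below is a deliberately crude keyword-overlap check and does not reflect how any LLM retrieval system actually scores content; the `query_coverage` function, the stop-word list, and the sample page text are assumptions chosen for illustration.

```python
import re

# Small illustrative stop-word list (an assumption, not a standard one).
STOP_WORDS = {"a", "an", "the", "to", "is", "do", "how", "when", "of", "for", "and", "in"}


def tokens(text: str) -> set[str]:
    """Lowercase a string and return its non-stop-word terms."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def query_coverage(page_text: str, queries: list[str]) -> dict[str, float]:
    """Share of each query's terms that also appear in the page text."""
    page_terms = tokens(page_text)
    coverage = {}
    for query in queries:
        query_terms = tokens(query)
        matched = len(query_terms & page_terms)
        coverage[query] = matched / len(query_terms) if query_terms else 0.0
    return coverage


# Hypothetical page excerpt; the queries are the ones from the example above.
page = (
    "A ripe avocado yields to gentle pressure. Ripeness indicators include "
    "darker skin. Avocados take four to five days to ripen on the counter."
)
expanded = [
    "how to know when an avocado is ripe",
    "avocado ripeness indicators",
    "how long do avocados take to ripen",
    "storing ripe avocados",
]
scores = query_coverage(page, expanded)

# List the weakest-covered queries first.
for query, share in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{share:.2f}  {query}")
```

A low share only flags a possible gap. Exact-word matching misses synonyms and singular or plural variants, so treat the result as a prompt for manual review rather than a score.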

Optimizing for E-E-A-T in the context of LLM citations requires a strategic, multi-layered approach. The goal is to create content that demonstrates genuine expertise while making it easy for AI systems to recognize and extract that expertise. Here’s a practical framework:

1. Display Credentials and Expertise Clearly: Your author bio should be comprehensive and verifiable. Include specific credentials (certifications, degrees, professional titles), years of experience, and direct involvement with the topic. Don’t just say “marketing expert”; say “CMO with 15 years of B2B SaaS marketing experience, including roles at HubSpot and Salesforce.” This specificity helps LLMs recognize genuine expertise. Include author bios on every piece of content, and consider adding schema markup (a Person object referenced from the article’s author property) to make credentials machine-readable.

2. Create Original Research and Data: Original research is one of the most powerful authority signals. When you publish data that can’t be found elsewhere (survey results, proprietary benchmarks, case studies), you become the primary source. LLMs cite primary sources more frequently because they provide unique value. Ahrefs’ “How Much Does SEO Cost?” page, based on a survey of 439 people, is one of their most-cited articles precisely because it’s original research. The key is to make the research methodology transparent and include a clear sample size.

3. Maintain Consistent Authority Across Platforms: Your authority isn’t measured solely on your website anymore. LLMs analyze your presence across LinkedIn, industry publications, speaking engagements, media mentions, and other platforms. Maintain consistent professional information, expertise positioning, and messaging across all channels. When AI systems see your name consistently associated with your niche across multiple sources, they build confidence in your authority.

4. Implement Proper Schema Markup: Schema markup makes your expertise signals machine-readable. Use Article schema to mark publication dates and author information, FAQPage schema for question-based content, and Person schema (linked through the author property) to connect your credentials to your content. Research shows that 36.6% of search keywords trigger featured snippets derived from schema markup, and this structured data also helps LLMs parse and understand your content more accurately.

5. Build Topical Authority Through Content Clusters: Rather than publishing isolated blog posts, create content hubs that demonstrate comprehensive knowledge of a topic. Interlink related articles, address subtopics thoroughly, and build a semantic web that shows you’ve covered a subject from multiple angles. This cluster approach signals topical authority to both search engines and LLMs.

6. Update Content Systematically: Freshness influences both Google rankings and LLM citation selection. Establish a content maintenance schedule: review and update important pages quarterly, refresh statistics annually, and add “last updated” dates prominently. This ongoing investment signals that your content remains current and reliable.

7. Cite Authoritative Sources: When you reference other credible sources, you’re building a web of trust. Cite peer-reviewed research, industry reports, and recognized experts. This not only strengthens your credibility but also helps LLMs understand the context and reliability of your claims.

8. Be Transparent About Limitations: Genuine expertise includes knowing what you don’t know. If a topic is outside your expertise, say so. If your data has limitations, acknowledge them. This transparency builds trust with both human readers and AI systems, which are trained to recognize and value honest, nuanced communication.
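The credential and schema markup steps in the framework above can be made concrete with JSON-LD. In schema.org vocabulary, authorship is expressed with the `author` property of an `Article` pointing to a `Person`. The sketch below generates such an object in Python; the `article_jsonld` helper and every value passed to it (author name, job title, dates, profile URL) are placeholders invented for illustration, so substitute your own verified details.

```python
import json


def article_jsonld(headline, published, modified, author_name, job_title, profile_urls):
    """Build a schema.org Article object with a nested Person author."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published,   # ISO 8601 date string
        "dateModified": modified,     # pairs with a visible "last updated" date
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": job_title,
            "sameAs": profile_urls,   # profiles that identify the same person
        },
    }


# Placeholder values: the author, dates, and URL are invented for illustration.
markup = article_jsonld(
    headline="How E-E-A-T Signals Influence LLM Citations",
    published="2025-01-15",
    modified="2025-06-01",
    author_name="Jane Doe",
    job_title="Chief Marketing Officer",
    profile_urls=["https://www.linkedin.com/in/example"],
)

# Embed the printed JSON in the page inside <script type="application/ld+json">.
print(json.dumps(markup, indent=2))
```

Generating the markup from one function keeps author details identical on every page, which supports the cross-platform consistency described in step 3.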

Understanding E-E-A-T in theory is one thing; seeing it work in practice is another. Let’s examine what makes certain content highly citable and how these principles manifest in real-world examples.

The Anatomy of Highly Citable Content: Ahrefs’ “How Much Does SEO Cost?” page exemplifies E-E-A-T optimization for LLM citations. The page answers a common, high-volume question directly. It’s built on original research (439 people surveyed), includes a clear timestamp, and breaks down pricing by multiple dimensions (freelancers vs. agencies, hourly vs. retainer, geographic variations). The content is scannable with clear headings, includes data visualizations with accompanying text, and explores the topic from different angles to cover related queries. The author byline displays relevant credentials, and the content has been peer-reviewed, adding another layer of trust.

What Makes Content Citable: Highly citable content typically shares these characteristics: it answers specific questions directly without filler, it’s built on verifiable data or original research, it uses clear structure and formatting that makes extraction easy, it addresses multiple angles of a topic, and it demonstrates genuine expertise through depth and nuance. When LLMs evaluate whether to cite a page, they’re essentially asking: “Can I extract a clear, accurate answer from this? Is the source trustworthy? Does this page provide unique value?”

Structured Formatting as a Citation Signal: Content that uses clear heading hierarchies, bullet points, tables, and short paragraphs is more likely to be cited. This isn’t just about readability for humans—it’s about extractability for AI. When an LLM can quickly identify the key information in your content through structural cues, it’s more likely to use that content in its response. Compare a wall of text to a well-formatted article with H2s, H3s, and bullet points: the structured version is significantly more citable.

Multiple Angles Covering Topics: Content that addresses a topic from different perspectives increases citation opportunities. For example, an article about “remote work productivity” might cover productivity for different roles (developers, managers, customer service), different time zones, different home environments, and different personality types. This multi-angle approach means the content can answer dozens of related queries from a single source, dramatically increasing citation potential.

Real-World Citation Patterns: Research from SearchAtlas analyzing 90,000 citations across major LLMs shows that highly cited content tends to come from sites with strong domain authority, but also from niche experts who demonstrate deep topical knowledge. Reddit answers and Substack articles frequently appear in AI citations despite weak backlink profiles, because they demonstrate authentic expertise and conversational clarity. This proves that authority is increasingly about demonstrated knowledge, not just link metrics.

Building E-E-A-T is one thing; measuring its effectiveness is another. Traditional SEO metrics like keyword rankings and backlink counts don’t capture the full picture of your AI visibility. You need new tools and metrics designed specifically for the AI era.

Manual Testing Across AI Platforms: Start with direct testing. Create a list of 10-20 questions your content should answer, then test them monthly across ChatGPT, Perplexity, Claude, and Gemini. Document which sources get cited (yours and competitors’), track changes over time, and identify patterns. This method is time-consuming but provides direct insight into what your audience actually sees. Use a simple Google Sheet to track results and spot trends.

Analytics Tracking for AI Referral Traffic: Most analytics platforms now track AI search traffic as a separate channel. In Ahrefs Web Analytics (available free in Ahrefs Webmaster Tools), AI search traffic is already segmented, so you can see which pages receive AI referral traffic and how that traffic behaves. Track metrics like dwell time, bounce rate, scroll depth, and conversion rates. While AI traffic currently represents less than 1% of total traffic, the visitors who do click through often show higher intent and conversion rates than traditional search traffic.

Measuring E-E-A-T Effectiveness: Rather than looking for direct E-E-A-T scores, track these proxy metrics: AI Overview citations (use tools like BrightEdge or Authoritas for monitoring), branded search volume, industry mentions across platforms, and ranking stability during algorithm updates. Content with strong E-E-A-T signals often exhibits less volatility during core updates. You can also use tools like the LLM SEO E-E-A-T Score Checker to get a direct breakdown of your E-E-A-T performance by category.

Paid Monitoring at Scale: For comprehensive tracking without manual testing, use tools like Ahrefs’ Brand Radar, which monitors citations across 150 million prompts in six major AI platforms. Brand Radar shows where and when you’re cited, allows filtering by AI platform and topic, tracks citation trends over time, and provides competitor comparison data. This gives you a complete picture of your AI visibility landscape and helps identify opportunities and gaps.

Key Metrics to Track: Monitor AI citation frequency (how often you’re cited), citation diversity (across which topics and platforms), semantic relevance scores (how closely cited content matches queries), freshness metrics (average age of cited content), and domain overlap (which competitors are cited alongside you). These metrics collectively paint a picture of your E-E-A-T effectiveness in the AI context.
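If you log your manual tests as structured records, a few of these metrics fall out of a short script. The following is a minimal sketch, assuming each test is recorded as a platform, a question, and the list of domains the AI cited; the `PromptResult` record, the `citation_metrics` function, and the domains in the sample log are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PromptResult:
    """One manual test: which domains a platform cited for a question."""
    platform: str
    question: str
    cited_domains: list[str]


def citation_metrics(results: list[PromptResult], own_domain: str) -> dict:
    """Compute citation frequency, platform diversity, and domain overlap."""
    cited = [r for r in results if own_domain in r.cited_domains]
    # Frequency: share of tests in which your domain was cited at all.
    frequency = len(cited) / len(results) if results else 0.0
    # Diversity: the platforms on which you were cited at least once.
    platforms = sorted({r.platform for r in cited})
    # Overlap: other domains cited in the same responses as yours.
    overlap = Counter(
        d for r in cited for d in r.cited_domains if d != own_domain
    )
    return {"frequency": frequency, "platforms": platforms, "overlap": overlap}


# Made-up log entries for illustration only.
log = [
    PromptResult("ChatGPT", "what is e-e-a-t", ["example.com", "competitor-a.com"]),
    PromptResult("Perplexity", "what is e-e-a-t", ["competitor-a.com"]),
    PromptResult(
        "Gemini",
        "how do llms pick sources",
        ["example.com", "competitor-a.com", "competitor-b.com"],
    ),
    PromptResult("Claude", "how do llms pick sources", []),
]
metrics = citation_metrics(log, "example.com")
print(metrics)
```

Semantic relevance and freshness need additional inputs (query text matching and publication dates), so they are left out of this sketch.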

As E-E-A-T and LLM optimization have gained attention, several misconceptions have emerged. Let’s debunk the most common ones:

Myth: “Backlinks Are Dead” Reality: Backlinks remain valuable authority signals, particularly for Google’s algorithm. What’s changed is their relative weight. They’re no longer the sole currency of SEO. High-authority sites still tend to get cited more frequently by LLMs, but this is largely because they rank better in search results (which LLMs use for retrieval). The key insight: backlinks still matter, but they’re one piece of a larger puzzle that now includes semantic authority, freshness, and demonstrated expertise.

Myth: “E-E-A-T Is a Direct Ranking Factor” Reality: E-E-A-T is not a direct ranking factor like keywords or page speed. Instead, it’s a framework that Google uses through its quality raters to refine and train its algorithms. Optimizing for E-E-A-T is really about optimizing for humans first—creating genuinely authoritative, trustworthy content. The ranking benefits come indirectly, through improved user satisfaction and reduced bounce rates.

Myth: “AI Can Detect Fake Authority Long-Term” Reality: This is partially true but nuanced. LLMs can be fooled by content that “sounds” authoritative in the short term. Content farms can produce expert-sounding material through careful word choice and structure. However, fake authority rarely survives human scrutiny, and as AI systems become more sophisticated and incorporate more diverse training data, they’re increasingly able to distinguish genuine expertise from mimicry. The safer bet: build real authority rather than trying to game the system.

Myth: “You Need Massive Backlinks to Get LLM Citations” Reality: While high-authority sites do get cited more frequently, niche experts with smaller backlink profiles regularly appear in LLM citations. What matters is demonstrating genuine expertise in your specific domain. A specialized blog with deep topical knowledge can outrank a generalist site with more backlinks, particularly for niche queries. The key is semantic authority and topical depth.

Myth: “LLMs Only Cite the Top 10 Search Results” Reality: About 52% of AI Overview sources come from the top 10 search results, which means roughly half come from lower-ranked pages. Both quality and diversity matter. If your content is highly relevant and authoritative, it can still be cited without ranking in the top 10 for the primary query, provided it matches expanded query variations or offers unique value.

The landscape of authority is shifting in real-time. Understanding where it’s headed helps you build strategies that remain effective as the ecosystem evolves.

From Link-Based to Meaning-Based Ranking: The future of authority is moving away from “who links to you?” toward “what does your content mean?” This shift from structural signals to semantic signals represents a fundamental change in how authority is recognized. Traditional SEO built authority through link-building campaigns. The AI era builds authority through demonstrated expertise, semantic richness, and consistent positioning across platforms. Early movers who embrace this shift are establishing authority before the space becomes crowded.

Engagement Metrics as Authority Signals: As AI systems become more sophisticated, they’re increasingly incorporating user engagement metrics as authority signals. How long do people spend on your content? Do they return? Do they share it? These behavioral signals indicate that your content provides genuine value. This means user experience and content quality become direct authority builders, not just indirect ranking factors.

Multi-Platform Consistency as Authority Foundation: Authority is no longer confined to your website. Your presence on LinkedIn, industry publications, speaking engagements, and media mentions all contribute to your semantic footprint. AI systems recognize when your name, brand, or expertise is consistently associated with your niche across multiple platforms. This creates a compounding effect: each mention reinforces your authority, making subsequent citations more likely.

The Authority Advantage for Early Movers: The brands and creators investing in E-E-A-T optimization now are claiming authority before the space becomes saturated. In two years, when everyone’s fighting for AI visibility, the early movers will already be established sources. This is similar to the early days of SEO, when the first sites to optimize for search engines built lasting competitive advantages. The window for establishing authority in AI-driven search is still open, but it’s closing.

Preparing for the Next Evolution: As LLMs continue to evolve, authority signals will likely become even more sophisticated. We may see increased emphasis on real-time verification, multi-source consensus, and user satisfaction metrics. The brands that build genuine expertise and maintain transparent, trustworthy practices will be best positioned to thrive in whatever comes next. The fundamental principle remains: authentic expertise and trust are the foundation of authority, whether in traditional search or AI-driven discovery.

What is E-E-A-T, and why does it matter for AI visibility? E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trust. It's a framework that determines content credibility for both traditional search and AI systems. LLMs use E-E-A-T signals to decide which sources to cite in their responses, making it critical for AI visibility.

How do LLMs evaluate authority differently from search engines? LLMs evaluate authority through semantic patterns, consistency across sources, and demonstrated expertise rather than backlinks. They recognize authority linguistically through technical terminology, logical depth, and topical coverage. High-authority sites still get cited more, but primarily because they rank better in the search results that LLMs use for retrieval.

Can smaller sites earn LLM citations without a large backlink profile? Yes. While larger sites have advantages, niche experts with smaller backlink profiles regularly appear in LLM citations. What matters is demonstrating genuine expertise in your specific domain through semantic richness, topical depth, and consistent positioning. A specialized blog with deep knowledge can outrank generalist sites with more backlinks.

How long does it take to see results from E-E-A-T optimization? E-E-A-T is a long-term strategy. Building genuine authority and trust signals typically takes months, not weeks. However, implementing proper schema markup and author attribution can have more immediate effects. The first year of a page's life is critical for AI visibility, with citation rates declining significantly after three years unless content is updated.

Do backlinks still matter for AI citations? Backlinks remain valuable authority signals, but they're no longer the sole currency. High-authority sites get cited more frequently by LLMs, but this is largely because they rank better in search results. The key insight: backlinks still matter as part of a larger puzzle that now includes semantic authority, freshness, and demonstrated expertise.

How do I measure E-E-A-T effectiveness in AI search? Track AI citation frequency, citation diversity across topics and platforms, semantic relevance scores, freshness metrics, and domain overlap with competitors. Use tools like Ahrefs Brand Radar for comprehensive monitoring across 150 million prompts, or manually test your target queries monthly across ChatGPT, Perplexity, Claude, and Gemini.

Is E-E-A-T a direct ranking factor? No, E-E-A-T is not a direct ranking factor. It's a framework that influences how AI systems evaluate content quality. Optimizing for E-E-A-T is really about creating genuinely authoritative, trustworthy content that serves human readers first. The benefits come indirectly through improved user satisfaction and increased citation rates.

How does content freshness affect LLM citations? Freshness is heavily weighted in LLM citation selection. Research shows LLMs cite content published within 300 days at significantly higher rates. For time-sensitive topics, an article from last month is far more likely to be current than one from five years ago. Systematic content updates and prominent 'last updated' dates are essential for maintaining AI visibility.

Track how your brand is cited by ChatGPT, Perplexity, Google AI Overviews, and other LLMs. Understand your E-E-A-T signals and optimize for AI visibility with AmICited.
