The 10 Most Important AI Visibility Metrics to Track

Discover the essential AI visibility metrics and KPIs to monitor your brand's presence across ChatGPT, Perplexity, Google AI Overviews, and other AI platforms. ...

Learn how to evolve your measurement frameworks as AI search matures. Discover citation-based metrics, AI visibility dashboards, and KPIs that matter for tracking brand presence in ChatGPT, Perplexity, and Google AI Overviews.

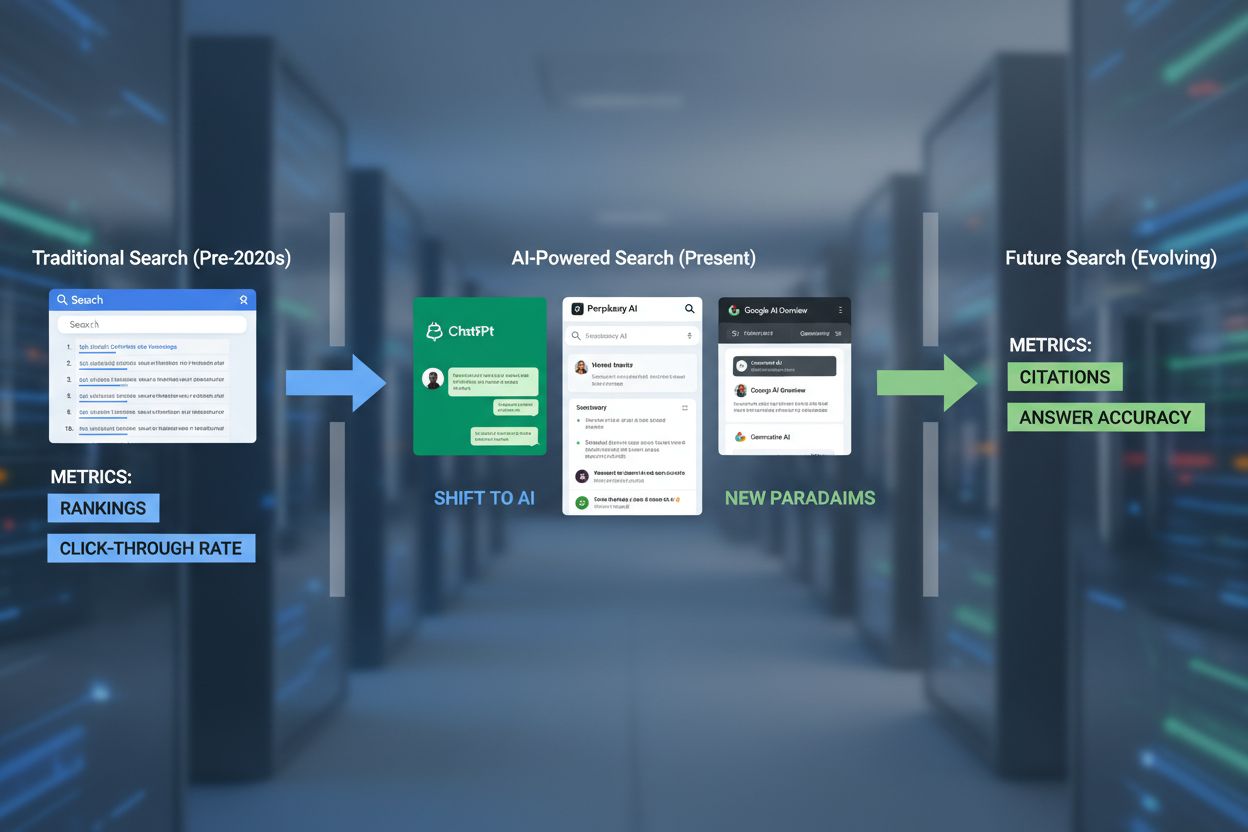

The metrics that defined digital marketing success for the past two decades are rapidly losing relevance. Click-through rates, keyword rankings, and organic session counts once served as the holy grail of marketing measurement, but they tell an incomplete story in an AI-driven search landscape. When users ask ChatGPT, Perplexity, or Claude a question, they receive a synthesized answer that often resolves their query without ever visiting your website. This shift means that citation-based metrics now matter more than click-based metrics as a measure of visibility. Your brand can rank #1 on Google for a high-value keyword yet remain completely invisible in AI-generated answers, a scenario with no real equivalent in traditional SEO. The urgency is real: with some forecasts projecting that LLM traffic will surpass traditional Google search by 2027, organizations that continue measuring success through legacy KPIs risk operating blind to where their actual influence lies.

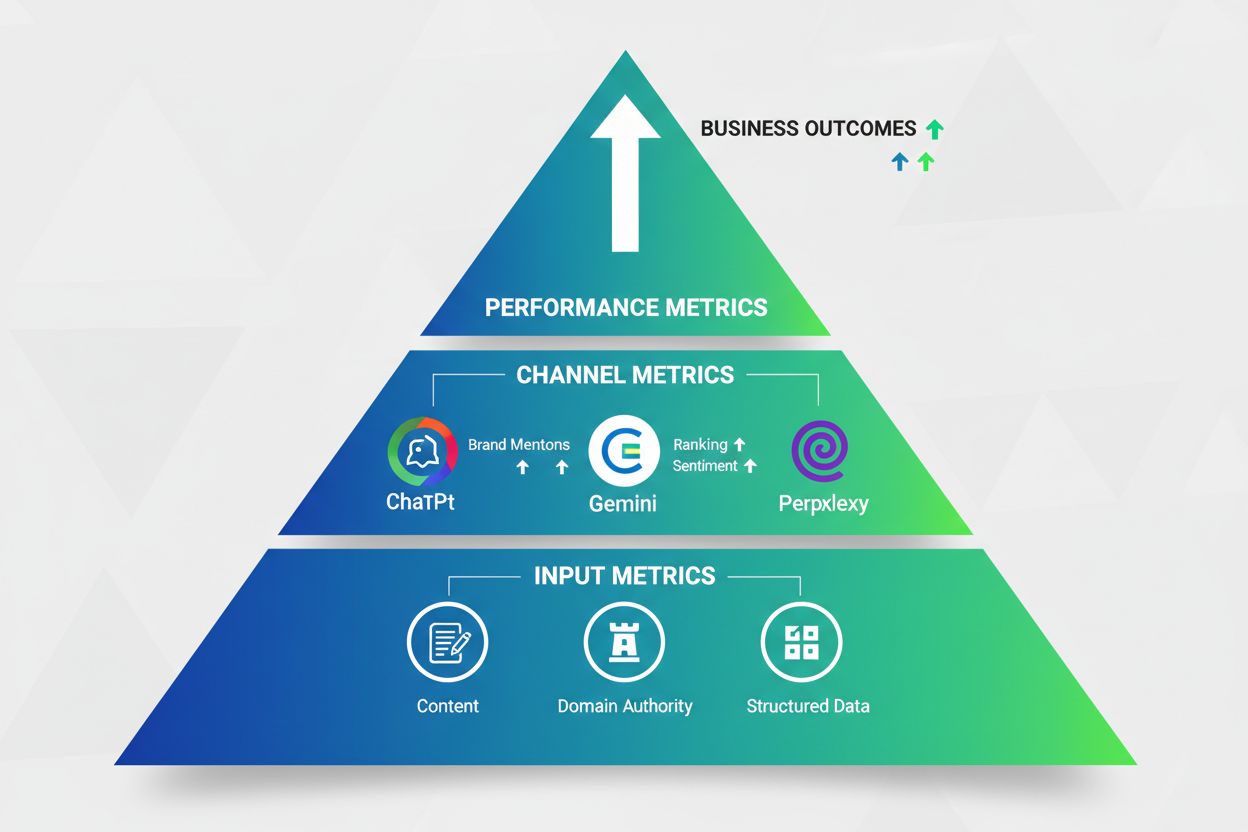

Effective AI measurement requires a comprehensive framework that extends far beyond simple visibility tracking. Rather than relying on a single metric, mature organizations track performance across four interconnected pillars that together paint a complete picture of AI system effectiveness and business impact.

| Pillar | What It Measures | Why It Matters |
|---|---|---|
| Model Quality Metrics | Accuracy, coherence, safety, groundedness, instruction following | Ensures AI outputs are factually correct, aligned with brand messaging, and free from hallucinations that could damage credibility |
| System Quality Metrics | Latency, uptime, error rates, throughput, token processing speed | Guarantees reliable performance, fast response times, and consistent availability across all AI platforms and user interactions |
| Business Operational Metrics | Conversion rates, customer satisfaction, churn reduction, average handle time | Directly connects AI visibility to tangible business outcomes like revenue, customer retention, and operational efficiency |
| Adoption Metrics | Usage frequency, session length, query length, user engagement, feedback signals | Reveals whether users actually find value in AI-powered features and are integrating them into their decision-making processes |

These pillars are deeply interconnected. A model with perfect accuracy but poor latency will see low adoption. High adoption without business operational tracking leaves you unable to prove ROI. The most mature organizations measure across all four pillars simultaneously, using insights from one to optimize the others.

Understanding how AI systems represent your brand requires moving beyond simple presence detection to a nuanced measurement approach. Four core metrics form the foundation of effective AI visibility tracking:

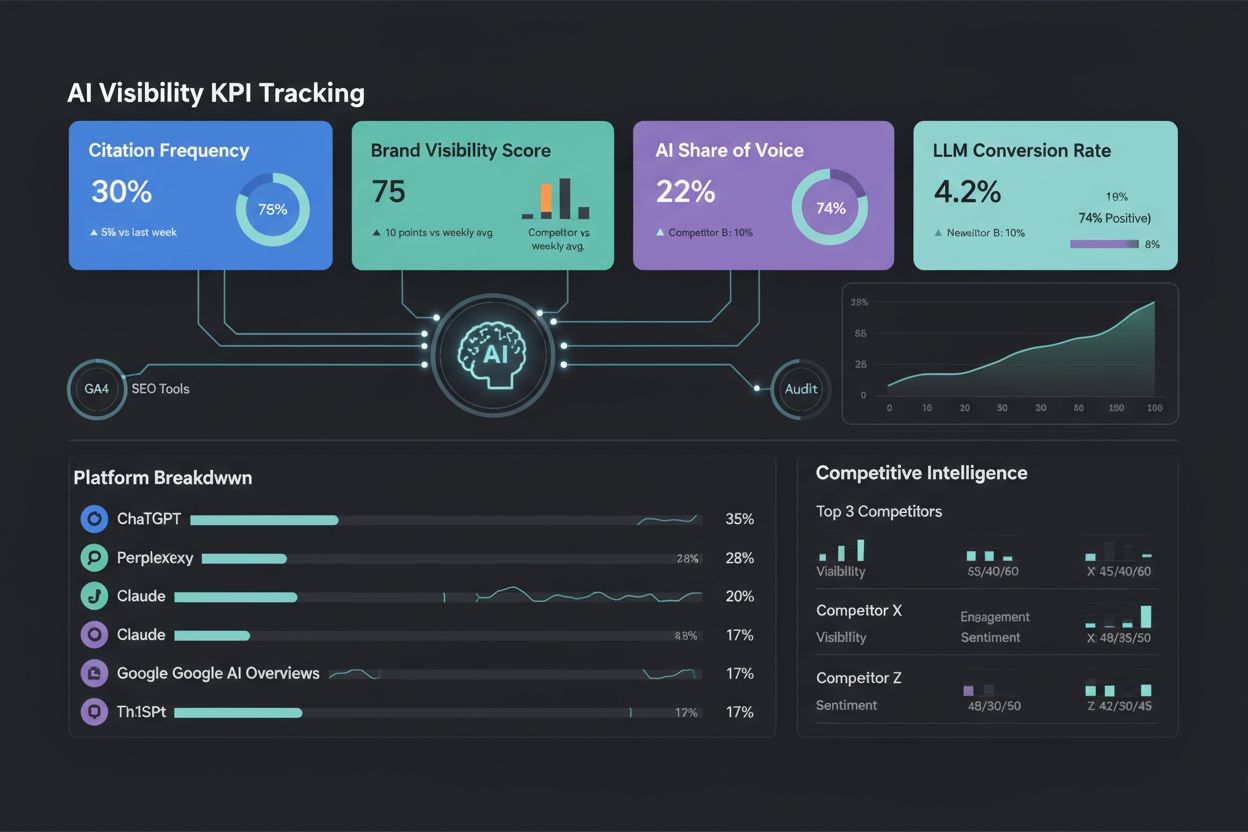

AI Signal Rate: Calculate this by dividing the number of AI responses mentioning your brand by the total number of relevant prompts tested. For example, if your brand appears in 15 out of 50 prompts about “project management software,” your AI Signal Rate is 30%. Category leaders typically achieve 60-80% citation rates, while emerging brands often start at 5-10%. This metric establishes your baseline visibility across different AI platforms.
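The calculation above can be sketched in a few lines of Python. This is a minimal illustration, not a production tracker: the function name `ai_signal_rate`, the brand "Acme", and the simple case-insensitive substring match are all assumptions made for the example.

```python
def ai_signal_rate(responses: list[str], brand: str) -> float:
    """Fraction of AI responses that mention the brand (case-insensitive)."""
    if not responses:
        raise ValueError("no responses to score")
    mentions = sum(brand.lower() in response.lower() for response in responses)
    return mentions / len(responses)


# Hypothetical data: 15 of 50 responses mention the brand "Acme".
responses = ["Acme is a solid choice."] * 15 + ["Consider other tools."] * 35
print(f"{ai_signal_rate(responses, 'Acme'):.0%}")  # 30%
```

A substring match will miss misspellings and will over-count brands whose names are common words, so real tracking would likely need stricter entity matching.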

Answer Accuracy Rate: Evaluate AI responses on a 0-2 point scale across three dimensions: factual correctness (pricing, features, specifications), alignment with your brand messaging (mission, values, differentiators), and absence of hallucinations (false claims). Create a “ground truth” document outlining your key facts and review AI outputs against it quarterly. Visibility without accuracy is actually a liability—incorrect information damages credibility more than no mention at all.
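One way to turn the 0-2 scale into a single rate is sketched below. The article does not say how the three dimensions are combined, so the aggregation here (points earned divided by the maximum of 6 per response) is an assumption, as are the names `AccuracyScore` and `answer_accuracy_rate`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccuracyScore:
    """Scores for one AI response, each on the 0-2 scale."""
    factual: int           # pricing, features, specifications
    messaging: int         # mission, values, differentiators
    no_hallucination: int  # 2 means no false claims were found

    def __post_init__(self) -> None:
        for value in (self.factual, self.messaging, self.no_hallucination):
            if value not in (0, 1, 2):
                raise ValueError("each dimension must be scored 0, 1, or 2")

    @property
    def total(self) -> int:
        return self.factual + self.messaging + self.no_hallucination


def answer_accuracy_rate(scores: list[AccuracyScore]) -> float:
    """Assumed aggregation: points earned over the maximum possible (6 per response)."""
    if not scores:
        raise ValueError("no scored responses")
    return sum(score.total for score in scores) / (6 * len(scores))


scores = [AccuracyScore(2, 2, 2), AccuracyScore(1, 2, 0)]
print(f"{answer_accuracy_rate(scores):.0%}")  # 75%
```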

Citation Coverage: Track not just whether you’re mentioned, but whether your domain is cited as a source. Monitor your Top-Source Share—the percentage of answers where you appear as the first or second source, as these positions drive significantly more traffic and signal greater authority. Interestingly, approximately 90% of ChatGPT citations come from search results ranked 21 or lower, meaning a robust content library matters more than homepage dominance.
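Top-Source Share can be computed from an ordered list of cited domains per answer. The sketch below assumes you have already extracted those lists; the function name and the `.test` domains are placeholders for illustration.

```python
def top_source_share(citations: list[list[str]], domain: str, top_n: int = 2) -> float:
    """Share of answers in which `domain` is among the first `top_n` cited sources."""
    if not citations:
        raise ValueError("no answers to score")
    hits = sum(domain in cited[:top_n] for cited in citations)
    return hits / len(citations)


# Hypothetical citation lists, in the order each answer cited its sources.
answers = [
    ["example.com", "reviewsite.test"],
    ["reviewsite.test", "example.com", "forum.test"],
    ["reviewsite.test", "forum.test", "example.com"],  # cited, but third
    [],                                                # no citations at all
]
print(top_source_share(answers, "example.com"))  # 0.5
```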

Share of Voice (SOV): Measure your mentions compared to competitors for high-intent prompts. If you appear in 20 out of 100 prompts while three main competitors appear in 30, 25, and 15 respectively, your SOV is 22%. Also track your average position in enumerated lists—being listed fourth instead of first significantly influences buyer perception of your market position.
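The SOV example works out as 20 / (20 + 30 + 25 + 15) = 20 / 90, or roughly 22%. A small sketch of that arithmetic, plus the average list position, follows; the brand names and the list positions are made up for the example.

```python
def share_of_voice(mentions: dict[str, int], brand: str) -> float:
    """Brand mentions divided by total mentions across the brand and its competitors."""
    total = sum(mentions.values())
    if total == 0:
        raise ValueError("no mentions recorded")
    return mentions[brand] / total


def average_list_position(positions: list[int]) -> float:
    """Mean 1-based position of the brand in enumerated lists where it appears."""
    if not positions:
        raise ValueError("brand never appeared in a list")
    return sum(positions) / len(positions)


mentions = {"YourBrand": 20, "CompetitorA": 30, "CompetitorB": 25, "CompetitorC": 15}
print(f"{share_of_voice(mentions, 'YourBrand'):.0%}")  # 22%
print(average_list_position([1, 4, 2, 3]))             # 2.5
```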

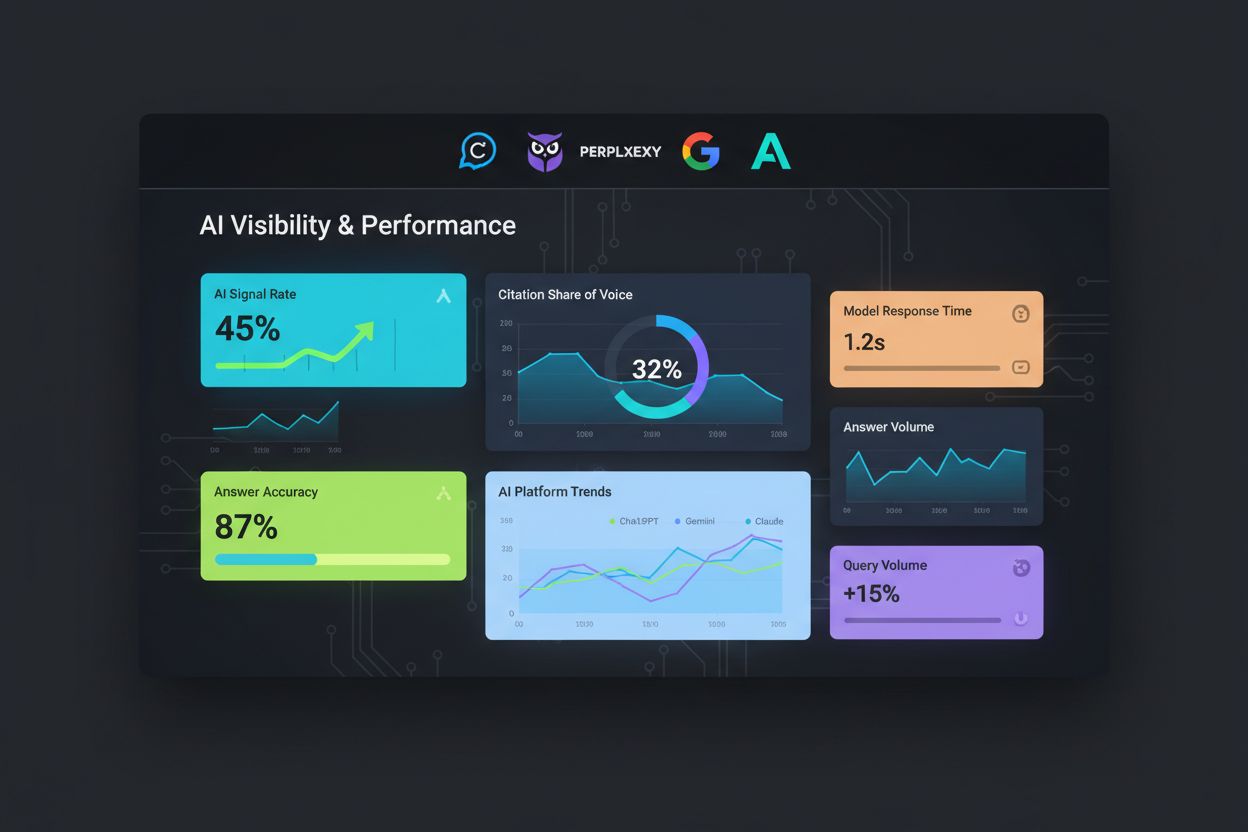

A powerful AI visibility dashboard serves as your command center for understanding how multiple AI engines represent your brand. Rather than a single monolithic view, the most effective dashboards provide persona-specific perspectives tailored to different stakeholder needs. Your CMO needs a high-level summary of brand share-of-voice by strategic theme and market, with modeled impact on pipeline and revenue. Your SEO lead focuses on inclusion and citation trends, competitive benchmarks, and which technical or content changes correlate with visibility lifts. Your content team wants to see which questions, entities, and formats AI engines favor within each topic cluster to inform editorial roadmaps. Your product marketing team tracks how AI systems describe positioning, pricing, and differentiators versus competitors across decision-stage queries.

Beyond these persona-specific views, your dashboard should include real-time alerting for critical scenarios: drops in AI Overview inclusion for high-priority topics, competitors overtaking your citation share, or shifts in brand sentiment to negative territory. Set up automated alerts that route to appropriate teams—SEO for technical issues, content for narrative gaps, product marketing for positioning misalignment. Additionally, implement trend tracking that overlays AI visibility changes with core business metrics like branded search volume, direct traffic, and revenue. This integrated view reveals downstream effects: if AI visibility spikes but branded search volume remains flat, it signals a positioning issue that needs investigation.
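The three alert scenarios above could be expressed as simple rules over two consecutive snapshots. This is only a sketch: the `Snapshot` fields, the 10-point drop threshold, the sentiment scale, and the mapping of each alert to a team are assumptions to adapt to your own dashboard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    inclusion_rate: float        # share of priority prompts where the brand is included
    citation_share: float        # brand's share of citations
    top_competitor_share: float  # strongest competitor's share of citations
    sentiment: float             # assumed scale: -1.0 (negative) to 1.0 (positive)


def check_alerts(prev: Snapshot, curr: Snapshot, drop_threshold: float = 0.10) -> list[tuple[str, str]]:
    """Return (team, message) pairs for each rule that fired between two snapshots."""
    alerts = []
    if prev.inclusion_rate - curr.inclusion_rate >= drop_threshold:
        alerts.append(("seo", "inclusion dropped for priority topics"))
    if prev.citation_share >= prev.top_competitor_share and curr.citation_share < curr.top_competitor_share:
        alerts.append(("content", "a competitor overtook our citation share"))
    if prev.sentiment >= 0 > curr.sentiment:
        alerts.append(("product_marketing", "brand sentiment turned negative"))
    return alerts


last_week = Snapshot(0.60, 0.30, 0.25, 0.2)
this_week = Snapshot(0.45, 0.22, 0.28, -0.1)
for team, message in check_alerts(last_week, this_week):
    print(f"[{team}] {message}")
```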

Monitoring AI visibility isn’t a quarterly audit—it’s an ongoing operational discipline. The most effective teams operate on a structured weekly cycle that transforms AI visibility from a vanity metric into a measurable, actionable channel:

Build a Comprehensive Prompt Set: Develop 20-50 high-value queries that your potential buyers might use, organized into four categories: problem queries (“how to reduce churn in SaaS”), solution queries (“best customer retention platforms”), category queries (“what is AI-powered knowledge software”), and brand queries (“Is [Your Brand] reliable?”). Include comparison prompts like “[Your Brand] vs [Competitor] for mid-market” to gauge competitive positioning. Prioritize prompts with high commercial intent, as these are more likely to convert than general awareness queries.
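A prompt set like this is easy to keep as structured data so that coverage across the four categories can be checked automatically. In the sketch below, the class names, the `high_intent` flags, and filing the comparison prompt under the brand category are assumptions; the prompt texts come from the examples above, and "Acme" and "Globex" are placeholder names.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    PROBLEM = "problem"
    SOLUTION = "solution"
    CATEGORY = "category"
    BRAND = "brand"


@dataclass(frozen=True)
class Prompt:
    template: str
    category: Category
    high_intent: bool = False

    def render(self, brand: str, competitor: str = "") -> str:
        """Fill in the [Your Brand] and [Competitor] placeholders."""
        return self.template.replace("[Your Brand]", brand).replace("[Competitor]", competitor)


PROMPT_SET = [
    Prompt("how to reduce churn in SaaS", Category.PROBLEM),
    Prompt("best customer retention platforms", Category.SOLUTION, high_intent=True),
    Prompt("what is AI-powered knowledge software", Category.CATEGORY),
    Prompt("Is [Your Brand] reliable?", Category.BRAND),
    Prompt("[Your Brand] vs [Competitor] for mid-market", Category.BRAND, high_intent=True),
]


def missing_categories(prompts: list[Prompt]) -> set[Category]:
    """Categories that have no prompt yet."""
    return set(Category) - {prompt.category for prompt in prompts}


print(missing_categories(PROMPT_SET))             # set()
print(PROMPT_SET[-1].render("Acme", "Globex"))    # Acme vs Globex for mid-market
```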

Test Prompts Across AI Platforms: Run your prompt set through ChatGPT, Perplexity, Gemini, and Claude on a weekly basis. You can do this manually or use scheduling tools to streamline the process. Keep in mind that each platform pulls from different training data and retrieval methods, so your brand may show up on one platform but not another. Log each response for version control and tracking.
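For the logging step, one simple option is an append-only JSON Lines log with a timestamp per response. The sketch below deliberately makes no calls to any AI platform; the response text is assumed to be pasted in or fetched by whatever means you use, and the field names are illustrative.

```python
import io
import json
from datetime import datetime, timezone
from typing import TextIO


def log_response(stream: TextIO, platform: str, prompt: str,
                 response: str, citations: list[str]) -> dict:
    """Append one timestamped record to a JSON Lines stream and return it."""
    record = {
        "tested_at": datetime.now(timezone.utc).isoformat(),
        "platform": platform,
        "prompt": prompt,
        "response": response,
        "citations": citations,
    }
    stream.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


# In-memory stream for the example; a real log would be a file opened in append mode.
buffer = io.StringIO()
log_response(buffer, "perplexity", "best customer retention platforms",
             "(response text goes here)", ["example.com"])
print(buffer.getvalue())
```

Because each line is a complete JSON object, weekly runs can be appended to the same file and compared later without rewriting earlier entries.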

Score the Results: Evaluate each response based on presence, accuracy, citations, and competitor mentions using a simple 0-2 scale (0 for incorrect, 1 for partially correct, 2 for fully accurate). Calculate your Share of Voice by dividing your brand’s mentions by the combined mentions of your brand and its competitors. Track your Top-Source Share, the percentage of responses where your brand is cited as the first or second source.

Identify Missing Context: If AI platforms misrepresent or omit your brand, it’s likely due to missing or incomplete context. Compare outputs against your established key facts—pricing, features, target audience, and differentiators. Look for gaps: Are you absent from category definitions? Are your unique selling points unclear? Is your entity record incomplete on platforms like Wikidata or Crunchbase?

Update and Distribute Content: Based on your findings, create content that’s easy for AI systems to extract and cite. Use concise, 2-3 sentence definitions at the top of key pages, incorporate question-first headings (e.g., “What is [Your Product]?”), and structure FAQs around common buyer queries. Add structured data like JSON-LD using Schema.org to provide machine-readable context, and link your brand to authoritative sources using the sameAs property.
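As an illustration of the structured-data step, the following sketch builds a Schema.org `Organization` object with a `sameAs` list and prints it as a JSON-LD script tag. The brand name, description, and every URL are placeholders (the Wikidata ID in particular is not a real entity); substitute your own verified profiles.

```python
import json


def organization_jsonld(name: str, url: str, description: str, same_as: list[str]) -> dict:
    """Build a Schema.org Organization description suitable for JSON-LD."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": list(same_as),  # authoritative profiles for the same entity
    }


data = organization_jsonld(
    name="Example Brand",
    url="https://www.example.com",
    description="Example Brand is a customer retention platform for mid-market SaaS teams.",
    same_as=[
        "https://www.wikidata.org/wiki/Q00000000",  # placeholder ID
        "https://www.crunchbase.com/organization/example-brand",
        "https://www.linkedin.com/company/example-brand",
    ],
)
print('<script type="application/ld+json">')
print(json.dumps(data, indent=2))
print("</script>")
```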

Re-Test and Track Progress: Once your updates are live, re-test your prompt set and compare new results to your baseline scores. Log any changes in visibility, accuracy, citations, and competitor mentions. Document update latency—the time it takes for AI systems to reflect your changes. If a specific content update significantly improves your citation rate, apply similar strategies across other topics.
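Comparing a re-test against the baseline and recording update latency takes little code. In this sketch the metric names, scores, and dates are hypothetical, and latency is measured in whole days between publishing a change and first seeing it reflected in an AI answer.

```python
from datetime import date


def score_deltas(baseline: dict[str, float], retest: dict[str, float]) -> dict[str, float]:
    """Change in each metric present in both runs (retest minus baseline)."""
    return {metric: round(retest[metric] - baseline[metric], 4)
            for metric in baseline if metric in retest}


def update_latency_days(published: date, first_reflected: date) -> int:
    """Days between publishing a change and first observing it in an AI answer."""
    return (first_reflected - published).days


baseline = {"signal_rate": 0.30, "top_source_share": 0.10}
retest = {"signal_rate": 0.38, "top_source_share": 0.10}
print(score_deltas(baseline, retest))
print(update_latency_days(date(2025, 3, 3), date(2025, 3, 24)))  # 21
```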

Many organizations waste valuable resources focusing on the wrong metrics or treating AI visibility as a one-time project. Understanding these four critical pitfalls helps you avoid costly measurement mistakes:

Mistake 1: Tracking Mentions Without Checking Accuracy — Counting how often your brand appears in AI-generated answers is pointless if those mentions are inaccurate or negative. A high presence combined with poor representation can harm your reputation more than not being mentioned at all. Large Language Models can easily produce outdated or misleading information about your pricing, features, or positioning. Create a detailed “ground truth” document outlining your validated facts, and regularly evaluate AI outputs against it using the RAPP framework (Regularity, Accuracy, Prominence, Positivity).

Mistake 2: Ignoring Citations and Source Tracking — In a world where users often don’t click through to websites, citations act as a primary marker of authority. If LLMs stop citing your brand, you risk fading from the “collective intelligence” that future AI systems rely on. Almost 90% of ChatGPT’s citations come from search results ranked 21 or lower, so a competitor with broad, easily retrievable content can earn citations without outranking you in traditional search. Audit your backlink profile to ensure it includes publishers with direct ties to major LLM providers, and add “AI Assistant” as an option in your “How did you find us?” forms to capture AI-driven discovery.

Mistake 3: Using Generic Prompts That Miss Buyer Intent — If you’re only testing prompts like “[Your Brand]” or “[Your Brand] reviews,” you’re missing the bigger picture. Most AI-driven discovery happens through problem and solution-based queries, not direct brand searches. Develop prompts that align with how buyers actually search: cover problem queries, solution queries, category queries, and brand-specific queries. Tailor prompts to different buyer personas and sales funnel stages. Shift your language from product-focused to problem-focused to better reflect buyer behavior.

Mistake 4: Treating This as a One-Time Project — AI systems evolve, competitors release new content, and buyer questions shift over time. If you treat AI visibility as a one-time effort, you’ll miss changes in how your brand is represented. Set up a weekly routine to monitor your AI presence, run your prompt set, evaluate results, identify gaps, update content, and re-test. Without this ongoing effort, you risk falling behind while competitors gain an edge through consistent AI-driven optimization.

The market for AI search monitoring tools has exploded, with solutions ranging from lightweight spreadsheet-based trackers to enterprise-grade platforms. When evaluating tools, prioritize engine coverage (does it monitor all platforms your buyers use?), transparency in scoring (avoid single unexplained scores), citation tracking (measure not just mentions but citation rates and top-source share), and integration capabilities (can it connect to your analytics systems?).

AmICited.com stands out as the leading solution specifically designed for AI answers monitoring. It provides comprehensive tracking of how your brand appears across ChatGPT, Perplexity, Google AI Overviews, and other major platforms, with detailed metrics on citation frequency, accuracy, and competitive positioning. For teams already invested in traditional SEO tools, Semrush’s AI Toolkit extends the Semrush platform with ChatGPT visibility tracking and AI-specific content suggestions. Ahrefs Brand Radar draws on the Ahrefs link index to monitor citation frequency and weighted positioning in Google AI Overviews (formerly SGE). Atomic AGI offers an all-in-one platform combining keyword tracking across Google and AI engines with NLP-based content clustering and optimization. SE Ranking’s AI Search Toolkit tracks brand mentions and links across Google AI Overviews, Gemini, and ChatGPT, with competitor research capabilities.

For teams focused on AI content generation and automation workflows, FlowHunt.io offers complementary capabilities for creating and optimizing content at scale. The key is selecting tools that align with your measurement priorities and integrate seamlessly into your existing analytics stack. Start with a free tool or manual checks to audit your core buyer questions before committing to a pricier automated platform.

Metrics alone don’t drive business value—the real power emerges when you connect AI visibility to downstream business metrics. Begin by tracking referral visits from platforms like ChatGPT, Gemini, and Perplexity in your analytics. Set up custom channel groupings in Google Analytics 4 to correctly classify traffic from these sources, which are often mislabeled as generic referral traffic. Monitor conversion rates and revenue tied to AI-driven visits, as this traffic often converts better than traditional search because the platform has already provided a trusted recommendation.
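The classification logic behind such a channel grouping can be prototyped outside GA4, for example against exported referrer data. The sketch below is not GA4 configuration; the hostname list is an assumption that should be checked against the referrers you actually see in your own reports.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames; verify against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Return the AI platform label for a referrer URL, or None if it is not one."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for known_host, label in AI_REFERRER_HOSTS.items():
        if host == known_host or host.endswith("." + known_host):
            return label
    return None


print(classify_referrer("https://www.perplexity.ai/search?q=retention"))  # Perplexity
print(classify_referrer("https://www.google.com/"))                       # None
```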

Implement attribution modeling that accounts for AI-influenced conversions, not just direct conversions. Many buyers discover your brand through an AI answer, then search for you directly later—this “invisible influence” becomes clear only when you track high-intent prompts consistently and correlate them with later branded searches. Gather qualitative insights by asking customers during sales calls how they first heard about you, and explicitly include platforms like ChatGPT and Perplexity as options. Log this information systematically to complement your quantitative metrics. Finally, calculate the ROI of your AI visibility investments by comparing the cost of optimization efforts against the incremental revenue generated from AI-influenced conversions. This business-focused approach transforms AI visibility from a marketing vanity metric into a strategic investment with measurable returns.
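The ROI comparison described above reduces to a standard formula: (incremental revenue minus cost) divided by cost. The figures in this sketch are hypothetical and only show the arithmetic.

```python
def ai_visibility_roi(incremental_revenue: float, optimization_cost: float) -> float:
    """Standard ROI: net gain from AI-influenced conversions divided by cost."""
    if optimization_cost <= 0:
        raise ValueError("optimization cost must be positive")
    return (incremental_revenue - optimization_cost) / optimization_cost


# Hypothetical quarter: 45,000 in AI-influenced revenue on 15,000 of optimization spend.
print(f"{ai_visibility_roi(45_000, 15_000):.0%}")  # 200%
```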

As AI models evolve, new platforms emerge, and user behaviors shift, your measurement framework must remain flexible and durable. Rather than building metrics around specific interfaces or model names, design your framework around durable concepts like entities, intents, and narratives. An entity-based approach means tracking how your brand, products, and key concepts are represented across any AI system, regardless of its specific architecture. An intent-based approach focuses on the underlying buyer needs and questions, which remain stable even as platforms and interfaces change.

Build a flexible collection layer that can swap in new engines or answer formats without requiring a complete rebuild of your measurement infrastructure. Review your metric definitions at set intervals—quarterly or semi-annually—so you can adapt to changes in the AI landscape without losing historical continuity. Invest in continuous learning about how AI systems work, how they’re evolving, and how buyer behavior is shifting in response. The organizations that treat AI measurement as a strategic capability rather than a tactical project will be best positioned to maintain visibility and influence as the search landscape continues its rapid evolution.
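One way to keep the collection layer swappable is to code against a small interface rather than a specific engine. The sketch below uses a `typing.Protocol` with a stub implementation; `AnswerEngine`, `StubEngine`, and `collect` are hypothetical names, and no real platform API is called.

```python
from typing import Protocol


class AnswerEngine(Protocol):
    """Anything that has a name and can answer a prompt can be plugged in."""
    name: str

    def ask(self, prompt: str) -> str: ...


class StubEngine:
    """Stand-in engine that returns a canned answer; replace with a real adapter."""

    def __init__(self, name: str, canned_answer: str) -> None:
        self.name = name
        self.canned_answer = canned_answer

    def ask(self, prompt: str) -> str:
        return self.canned_answer


def collect(engines: list[AnswerEngine], prompts: list[str]) -> list[dict[str, str]]:
    """Run every prompt through every engine and return uniform records."""
    return [
        {"engine": engine.name, "prompt": prompt, "answer": engine.ask(prompt)}
        for engine in engines
        for prompt in prompts
    ]


engines = [StubEngine("engine-a", "Acme is popular."), StubEngine("engine-b", "No brands named.")]
for row in collect(engines, ["best customer retention platforms"]):
    print(row)
```

Adding a new engine or answer format then means writing one more adapter class, while the scoring and reporting code that consumes the records stays unchanged.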

Traditional metrics like keyword rankings and click-through rates measure visibility in Google's blue links, but AI search works differently. When users ask ChatGPT or Perplexity, they get synthesized answers that often resolve queries without website visits. Citation-based metrics now matter more than clicks, as they measure whether your brand is referenced as a trusted source in AI-generated answers.

AI Signal Rate is foundational—it measures how often your brand appears in relevant AI responses. Calculate it by dividing brand mentions by total prompts tested. However, mature organizations track across four pillars: Model Quality (accuracy), System Quality (performance), Business Operational (conversions), and Adoption (user engagement). No single metric tells the complete story.

Weekly monitoring is ideal for competitive markets. Run your prompt set through ChatGPT, Perplexity, Gemini, and Claude every week, score the results, identify gaps, update content, and re-test. This creates a continuous feedback loop that keeps your brand competitive as AI systems evolve and competitors optimize their presence.

AI Signal Rate measures how often your brand appears in AI responses (e.g., 30% of prompts). Share of Voice compares your mentions to competitors' mentions for the same prompts (e.g., you get 20 mentions while competitors get 30, 25, and 15—your SOV is 22%). SOV reveals competitive positioning, while Signal Rate reveals absolute visibility.

Create a 'ground truth' document with validated facts about your pricing, features, target audience, and differentiators. Review AI outputs quarterly against this document using a 0-2 accuracy scale. Update your website content with concise definitions, question-first headings, and structured data (JSON-LD). Ensure your brand is linked to authoritative sources like Wikidata and LinkedIn using the sameAs property.

AmICited.com is the leading platform specifically designed for AI answers monitoring, tracking citations across ChatGPT, Perplexity, Google AI Overviews, and Claude. For teams already using traditional SEO tools, Semrush's AI Toolkit and Ahrefs Brand Radar offer AI visibility features. Atomic AGI and SE Ranking provide comprehensive multi-engine tracking. Start with manual testing before investing in automated platforms.

Track referral traffic from ChatGPT, Perplexity, and Gemini in Google Analytics 4 using custom channel groupings. Monitor conversion rates from AI-driven traffic, which often outperforms traditional search. Ask customers how they discovered you and include AI platforms as options. Calculate ROI by comparing optimization costs against incremental revenue from AI-influenced conversions.

First, identify the specific inaccuracy and compare it against your ground truth document. Update your website content to provide clearer, more accurate information. Add structured data to help AI systems extract correct information. Monitor how long it takes for AI systems to reflect your changes (update latency). If hallucinations persist, consider reaching out to the AI platform's support team with evidence of the inaccuracy.

