Fluency Optimization

Learn how fluency optimization improves content visibility in AI search results. Discover writing techniques that help AI systems extract and cite your content ...

Master fluency optimization to create LLM-friendly content that gets cited more often. Learn how to write naturally flowing content that AI systems prefer to quote and reference.

Fluency optimization is the practice of crafting content that reads naturally while being inherently quotable by large language models. Unlike traditional SEO, which focuses on keyword placement and density, fluency optimization prioritizes semantic clarity, logical flow, and information density. It’s about writing in a way that feels human-first while being machine-discoverable. LLMs don’t just scan for keywords—they understand context, relationships between ideas, and the overall coherence of your message. When your content flows naturally and presents ideas clearly, LLMs are significantly more likely to cite it as a source when generating responses.

Modern language models process content semantically: they interpret meaning and context rather than just matching keywords. This shift has changed how content gets discovered and cited by AI systems. Research shows that fluency-optimized content experiences a 15-30% visibility boost in LLM outputs compared to traditionally SEO-optimized content. The reason is straightforward: LLMs are trained to recognize high-quality, authoritative sources, and fluent writing signals both quality and expertise. When you prioritize natural language flow over keyword density, you make your content more discoverable to the systems that increasingly drive traffic and visibility.

| Aspect | Traditional SEO Focus | Fluency Optimization Focus |
|---|---|---|
| Language Style | Keyword-heavy, sometimes awkward | Natural, conversational, flowing |
| Keyword Approach | Density targets (1-2%), exact matches | Semantic variations, contextual relevance |
| Sentence Structure | Optimized for readability scores | Optimized for idea clarity and rhythm |
| Reader Experience | Secondary to ranking signals | Primary driver of content quality |
| AI Citation Rate | Lower (keyword matching only) | Higher (30-40% more citations with quotes) |

Fluent content combines several key elements that work together to create naturally quotable material. These elements aren’t about gaming algorithms—they’re about communicating effectively with both humans and machines. When you master these fundamentals, your content becomes the kind of source that LLMs naturally reach for when generating responses.

Clarity is the bedrock of fluency optimization. If your content is hard to understand, LLMs will struggle to extract meaningful information, and humans will bounce away. Clarity means your main ideas are immediately apparent, your explanations are complete, and your language is precise. It’s not about dumbing down your content—it’s about respecting your reader’s time and intelligence. When you write with clarity, you eliminate the friction that prevents both humans and machines from engaging with your ideas.

Here’s how clarity transforms quotability:

UNCLEAR:
"The implementation of advanced technological solutions can potentially facilitate improvements in operational efficiency metrics across various organizational contexts."

CLEAR:
"Using the right tools helps teams work faster and accomplish more with fewer resources."

UNCLEAR:
"It has been suggested by various researchers that consumer behavior patterns may be influenced by multiple factors."

CLEAR:
"Three factors drive consumer behavior: price, convenience, and brand trust. Research shows price influences 45% of purchase decisions, while convenience and trust each account for roughly 30%."

The second version of each example is more quotable because it’s specific, actionable, and immediately understandable. LLMs recognize this clarity and are more likely to cite it.

The rhythm of your writing directly impacts how LLMs process and cite your content. Sentences that vary in length create a natural cadence that feels engaging to read and easier for language models to parse. Short sentences create emphasis. Medium sentences carry information. Long sentences can explore complexity. When you vary these intentionally, you create content that flows naturally rather than feeling robotic or formulaic.

Repetitive sentence structures are a major fluency killer. If every sentence follows the same pattern—subject, verb, object, period—your writing becomes monotonous and less quotable. Instead, mix it up. Start some sentences with dependent clauses. Use questions to engage readers. Break up complex ideas across multiple sentences. This variety isn’t just stylistic; it’s functional. It helps LLMs understand the relative importance of different ideas and makes your content more likely to be selected as a source for direct quotes.
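If you want a rough, numeric read on rhythm before publishing, a small script can help. The sketch below is only an illustration, not an established fluency metric: it splits text naively on sentence-ending punctuation, then reports the spread of sentence lengths and how often two neighboring sentences open with the same word. The function names `split_sentences` and `rhythm_report` are hypothetical.

```python
import re
from statistics import mean, pstdev


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def rhythm_report(text: str) -> dict:
    """Summarize sentence-length variety and repeated sentence openers."""
    sentences = split_sentences(text)
    if not sentences:
        return {"sentences": 0, "mean_length": 0.0,
                "length_stdev": 0.0, "repeated_openers": 0}
    lengths = [len(s.split()) for s in sentences]
    openers = [s.split()[0].lower() for s in sentences]
    # Count adjacent sentence pairs that start with the same word.
    repeated = sum(1 for a, b in zip(openers, openers[1:]) if a == b)
    return {
        "sentences": len(sentences),
        "mean_length": mean(lengths),
        "length_stdev": pstdev(lengths),  # near 0 means every sentence is the same length
        "repeated_openers": repeated,
    }


if __name__ == "__main__":
    sample = "The cat sat. The dog ran. A bird flew away quickly."
    print(rhythm_report(sample))
```

A very low standard deviation or a high repeated-opener count is a hint to revisit the passage, not a verdict. Abbreviations such as "e.g." will confuse the naive splitter.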

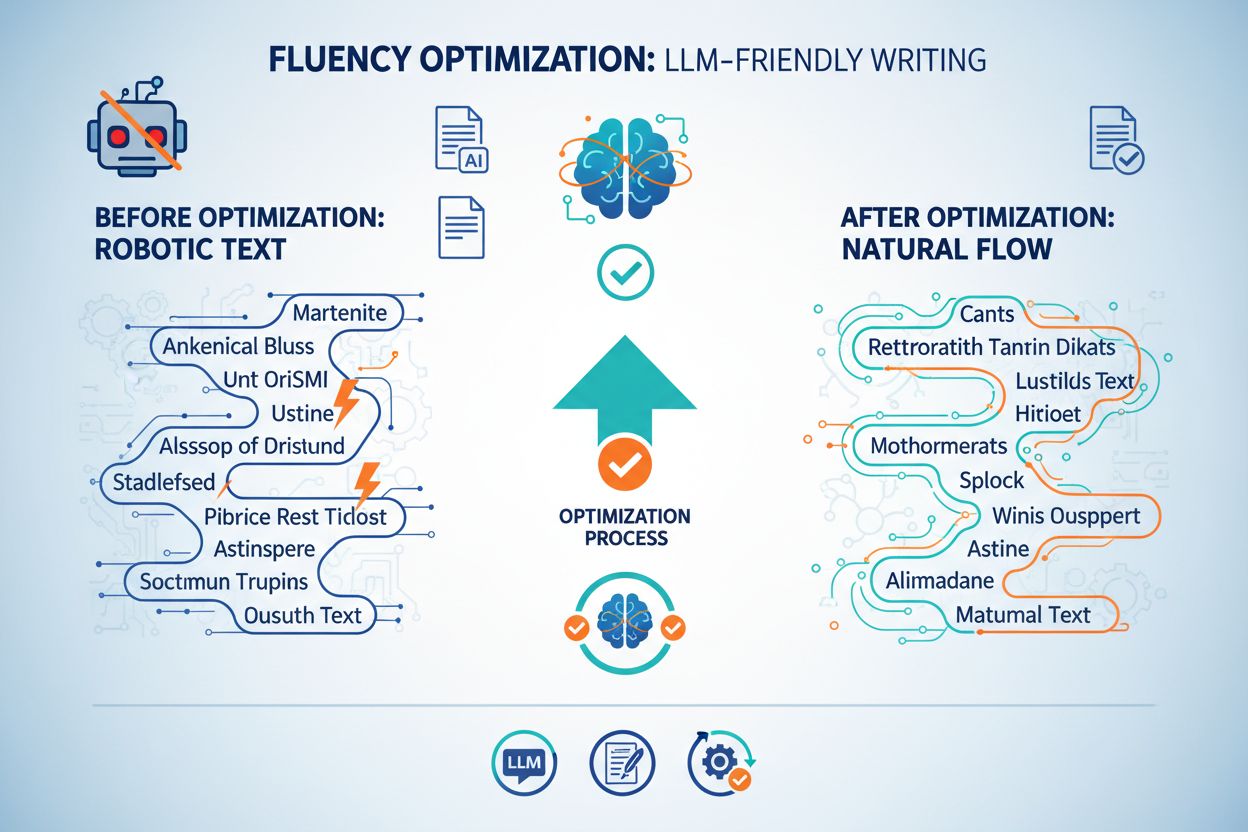

The irony of writing for LLMs is that content that sounds like it was written by an AI is actually less likely to be cited by LLMs. Language models are trained on human-written content and recognize patterns that signal low quality or inauthenticity. Certain phrases, structures, and patterns are red flags that reduce your content’s quotability. These fluency killers make your writing sound generic, corporate, or machine-generated—the opposite of what you want.

Common fluency killers and how to fix them:

Killer: Excessive hedging language

Killer: Vague corporate jargon

Killer: Repetitive transition phrases
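One way to spot these killers in a draft is a simple phrase counter. The sketch below is an assumption-heavy illustration: the phrase lists in `FLUENCY_KILLERS` are hypothetical examples, chosen partly from the unclear sentences earlier in this article, and you would replace them with the patterns that actually show up in your own writing.

```python
import re

# Hypothetical phrase lists for illustration only; tune them to your own drafts.
FLUENCY_KILLERS = {
    "hedging": ["it has been suggested", "can potentially", "may be"],
    "jargon": ["operational efficiency metrics", "advanced technological solutions"],
    "transitions": ["furthermore", "moreover", "additionally"],
}


def count_killers(text: str, patterns: dict = FLUENCY_KILLERS) -> dict:
    """Count whole-phrase, case-insensitive matches per category."""
    lowered = text.lower()
    counts = {}
    for category, phrases in patterns.items():
        counts[category] = sum(
            len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
            for phrase in phrases
        )
    return counts


if __name__ == "__main__":
    draft = ("It has been suggested by various researchers that consumer "
             "behavior patterns may be influenced by multiple factors.")
    print(count_killers(draft))
```

A nonzero count only flags a sentence for review. Some hedges are legitimate when the underlying claim really is uncertain.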

Semantic keyword integration means using related terms, synonyms, and contextual variations naturally throughout your content. Instead of forcing the exact keyword repeatedly, you weave in variations that support the main topic. This approach actually increases your visibility to LLMs because it demonstrates comprehensive coverage of a topic. Research shows that keyword stuffing reduces visibility by approximately 10%, while semantic integration increases citation rates by 30-40%.

The key is thinking about the topic ecosystem rather than individual keywords. If you’re writing about “content marketing,” you’ll naturally use terms like “audience engagement,” “brand storytelling,” “content strategy,” “editorial calendar,” and “audience insights.” These variations appear because they’re relevant to the topic, not because you’re forcing them in. LLMs recognize this natural semantic clustering and view it as a signal of authoritative, comprehensive coverage. This approach also makes your content more useful to readers because you’re exploring the topic from multiple angles.
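To check whether a draft leans on one exact phrase or covers the topic ecosystem, you can compare two rough numbers. This is a minimal sketch under stated assumptions: density is defined here as exact-phrase occurrences divided by total words (definitions vary between tools), and the list of related terms is something you supply yourself. Both function names are hypothetical.

```python
import re


def _tokens(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text: str, phrase: str) -> float:
    """Exact-phrase occurrences per 100 words (one of several possible definitions)."""
    words, target = _tokens(text), _tokens(phrase)
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)


def term_coverage(text: str, related_terms: list[str]) -> float:
    """Fraction of the supplied related terms that appear at least once."""
    if not related_terms:
        return 0.0
    lowered = text.lower()
    found = sum(1 for term in related_terms if term.lower() in lowered)
    return found / len(related_terms)


if __name__ == "__main__":
    text = "Content marketing works. Good content marketing needs a content strategy."
    print(keyword_density(text, "content marketing"))  # 20.0
    print(term_coverage(text, ["content strategy", "editorial calendar"]))  # 0.5
```

High density with low coverage suggests repetition without breadth. The reverse pattern is closer to the semantic clustering described above.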

LLMs cite specific, data-backed content far more often than generic statements. When you include concrete numbers, real examples, and specific insights, you’re creating content that’s inherently more quotable. The difference between generic and specific content is dramatic in terms of citation likelihood. Specific content receives 30-40% more citations because it provides actual value that LLMs can extract and share.

Consider these examples:

Generic: “Many businesses struggle with content marketing.”

Specific: “73% of B2B websites experienced traffic loss in the past year, primarily due to ineffective content strategies and poor optimization for AI visibility.”

Generic: “AI is changing how people find information.”

Specific: “LLM traffic is growing 40% monthly, and 86% of AI citations come from brand-managed sources, meaning companies that optimize their content directly control their AI visibility.”

The specific versions are quotable because they contain actual data points that readers and LLMs can reference. When you write with this level of specificity, you’re not just hoping to be cited—you’re creating content that’s inherently valuable enough to cite. This doesn’t mean every sentence needs a statistic, but strategic placement of specific data throughout your content dramatically increases its quotability.

How your content is structured impacts both readability and LLM processing. Strategic use of headings, paragraph length, lists, and white space creates a structure that guides readers and helps language models understand your content’s hierarchy and organization. Short paragraphs (2-4 sentences) are easier to scan and quote. Headings break up content and signal topic shifts. Bullet points and numbered lists make information digestible and quotable. Tables organize complex information in a way that’s easy for both humans and machines to reference.

White space is underrated in fluency optimization. Dense blocks of text feel overwhelming and are harder for LLMs to parse. When you break up your content with headings, short paragraphs, and lists, you’re making it easier for language models to extract meaningful chunks. This structural clarity directly impacts citation rates because LLMs can more easily identify and quote specific, well-organized sections of your content.
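A quick structural check can flag dense blocks before you publish. The sketch below assumes paragraphs are separated by blank lines and counts sentences naively by end punctuation. The four-sentence default mirrors the 2-4 sentence guideline above, and `long_paragraphs` is a hypothetical helper name.

```python
import re


def long_paragraphs(text: str, max_sentences: int = 4) -> list[tuple[int, int]]:
    """Return (paragraph_number, sentence_count) for paragraphs over the limit."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    flagged = []
    for number, paragraph in enumerate(paragraphs, start=1):
        # Count runs of end punctuation followed by whitespace or end of paragraph.
        count = len(re.findall(r"[.!?]+(?:\s|$)", paragraph))
        if count > max_sentences:
            flagged.append((number, count))
    return flagged


if __name__ == "__main__":
    draft = "One. Two. Three.\n\nA. B. C. D. E."
    print(long_paragraphs(draft))  # [(2, 5)]
```

Decimals such as "3.5" are not counted as sentence breaks, but abbreviations followed by a space are, so treat the counts as approximate.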

Your tone and voice should feel authentic and conversational while remaining professional and authoritative. This balance is crucial for fluency optimization because it signals to LLMs that your content is written by a knowledgeable human, not generated by a machine. Conversational tone performs better with both audiences because it feels more trustworthy and engaging. The goal is to sound like an expert who’s explaining something to a colleague, not a corporate memo or a textbook.

Tone that works:

Tone that doesn’t work:

The difference is subtle but significant. Conversational, confident tone signals expertise and makes your content more quotable. Formal, hedging tone signals uncertainty and makes LLMs less likely to cite you as an authoritative source.

Implementing fluency optimization doesn’t require a complete rewrite of your content strategy. Start by applying these principles to your most important pieces and gradually integrate them into your standard process. This checklist helps you evaluate and improve your content’s fluency before publishing.

Fluency Optimization Checklist:

Tracking the impact of fluency optimization requires looking beyond traditional metrics. While organic traffic and rankings matter, you should also monitor how often your content is cited by LLMs and in what context. Tools that track AI citations are becoming increasingly important for understanding your content’s visibility in the AI-driven search landscape. You can measure fluency impact by comparing citation rates before and after optimization, tracking the growth of LLM-driven traffic, and monitoring which pieces get quoted most frequently.

The correlation between fluency and citations is strong and measurable. Content that scores high on fluency metrics—clarity, specificity, semantic coverage, and natural flow—consistently receives 30-40% more citations. This isn’t coincidental; it’s because LLMs are trained to recognize and cite high-quality sources. By focusing on fluency, you’re directly improving your content’s value to the AI systems that increasingly drive visibility and traffic. Track these metrics monthly to understand which fluency elements have the biggest impact on your specific audience and content type.
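When you compare citation rates before and after optimization, it helps to separate the percentage-point change from the relative uplift, since the two are easy to confuse. The sketch below uses made-up numbers (20 of 100 pieces cited before, 27 of 100 after) purely for illustration. How you count a "citation" depends on your tracking tool and is an assumption here.

```python
def citation_rate(cited: int, total: int) -> float:
    """Share of tracked pieces (or prompts) in which your content was cited."""
    if total <= 0:
        raise ValueError("total must be positive")
    return cited / total


def point_change(before: float, after: float) -> float:
    """Absolute change in percentage points."""
    return (after - before) * 100


def relative_uplift(before: float, after: float) -> float:
    """Relative change, e.g. 0.35 means 35% more citations than before."""
    if before == 0:
        raise ValueError("relative uplift is undefined when the baseline is zero")
    return (after - before) / before


if __name__ == "__main__":
    # Hypothetical sample numbers, not measured results.
    before = citation_rate(20, 100)
    after = citation_rate(27, 100)
    print(f"{point_change(before, after):.1f} percentage points")
    print(f"{relative_uplift(before, after):.0%} relative uplift")
```

In this made-up case a 7-point change corresponds to a 35% relative uplift, which is why it matters to state which figure you are reporting.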

A B2B SaaS company optimized their product documentation by applying fluency principles. Before optimization, their documentation was technically accurate but dense and jargon-heavy. After restructuring for clarity, adding specific examples, and varying sentence structure, their documentation was cited 35% more often in LLM responses. The key changes: breaking long paragraphs into 2-3 sentence chunks, replacing technical jargon with clear explanations, and adding specific use-case examples. Within three months, they saw a 28% increase in LLM-driven traffic to their documentation pages.

A content marketing agency applied fluency optimization to a client’s blog. The original content was keyword-optimized but read like a checklist. The optimized version kept the same keywords but was rewritten for natural flow, with specific data points and varied sentence structure. Result: the share of published pieces quoted in LLM responses rose from 12% to 38%. The client also saw a 22% increase in organic traffic because the improved fluency made the content more engaging for human readers as well. The lesson: fluency optimization benefits both audiences simultaneously.

As LLM traffic continues to grow at 40% monthly, fluency optimization is shifting from a nice-to-have to a core competency. The companies that master fluency will control their visibility in AI-driven search results, while those that ignore it will see their content increasingly overlooked. The competitive advantage goes to those who understand that writing for LLMs isn’t about gaming algorithms—it’s about writing better content that serves both human and machine readers.

The future of content strategy is fluency-first. This doesn’t mean abandoning traditional SEO or user experience principles. It means recognizing that the best content is naturally optimized for all audiences: humans, search engines, and language models. By prioritizing clarity, specificity, and natural flow, you’re creating content that’s inherently more valuable and more likely to be discovered, shared, and cited. The organizations that embrace fluency optimization now will establish themselves as authoritative sources in the AI era, while those that delay will find themselves increasingly invisible in the systems that drive modern discovery and traffic.

Fluency optimization is the practice of writing content with natural, clear language flow that LLMs can easily understand and cite. It focuses on semantic clarity, sentence variety, and readability rather than keyword density, resulting in 15-30% higher visibility in AI-generated responses.

Traditional SEO emphasizes keyword matching and backlinks, while fluency optimization prioritizes semantic understanding and natural language flow. LLMs process content semantically, so fluent, well-structured writing that answers questions directly performs better in AI citations than keyword-optimized content.

No. Fluency optimization actually complements traditional SEO. Clear, well-written content that performs well in LLM responses also tends to perform well in Google search. The focus on clarity and structure benefits both human readers and search algorithms.

Common fluency killers include repetitive sentence structures, overused em dashes, overly formal tone, keyword stuffing, vague language, and inconsistent terminology. These patterns signal AI-generated or low-quality content to LLMs and reduce citation likelihood.

Track metrics like LLM referral traffic, brand mentions in AI responses, citation frequency across platforms like ChatGPT and Perplexity, and engagement metrics from AI-referred visitors. Tools like Profound and Semrush can help monitor AI visibility and citation patterns.

No. Fluency optimization applies to all content types—product descriptions, FAQs, blog posts, and social media. The principles of clarity, natural flow, and semantic coherence improve content performance across all formats in both traditional and AI search.

AmICited monitors how your brand is cited and referenced across AI platforms like ChatGPT, Perplexity, and Google AI Overviews. Fluency optimization directly improves your citation rates by making your content more attractive to LLMs, which AmICited then tracks and reports.

Entity clarity—consistent naming and positioning of your brand across platforms—works hand-in-hand with fluency. Fluent content that clearly explains your brand's value, combined with consistent entity signals, significantly increases LLM citation rates and brand visibility in AI responses.

