G2 Reviews and AI Visibility: Complete Optimization Guide

Master G2 optimization for AI search visibility. Learn how to increase AI citations, optimize your profile, and measure ROI with data-driven strategies for Chat...

Discover how G2 and Capterra reviews influence AI brand visibility and LLM citations. Learn why review platforms are critical for AI software discovery and recommendations.

Review platforms have become critical discovery channels for enterprise software buyers. When potential customers search for AI solutions, they increasingly rely on platforms like G2 and Capterra to validate their purchasing decisions. These review sites act as trust anchors: the social proof they provide shapes how AI brands are perceived and recommended by both human decision-makers and large language models (LLMs). The concentration of reviews on these platforms has changed how AI vendors compete for visibility and credibility in the market.

G2 has emerged as the dominant force in AI software reviews, with research indicating that LLMs cite G2 reviews in approximately 68% of AI product recommendations. This overwhelming preference stems from G2’s comprehensive coverage of AI tools, its sophisticated rating algorithms, and its position as the de facto standard for enterprise software evaluation. When compared to other review platforms, G2’s influence is substantially greater, as shown in the following breakdown:

| Platform | LLM Citation Rate | Average Reviews per AI Product | Market Coverage |
|---|---|---|---|
| G2 | 68% | 127 | 94% of major AI tools |
| Capterra | 42% | 89 | 76% of major AI tools |
| Trustpilot | 18% | 34 | 31% of major AI tools |
| Gartner Peer Insights | 35% | 156 | 52% of major AI tools |
| Industry-Specific Sites | 12% | 45 | 28% of major AI tools |

The dominance of G2 reflects not only its market position but also the algorithmic preference LLMs show for comprehensive, structured review data that G2 provides at scale.

The volume of reviews on these platforms directly correlates with AI brand visibility in LLM-generated recommendations. Products with over 100 reviews on G2 are 3.2 times more likely to be mentioned in AI-powered search results compared to products with fewer than 20 reviews. This creates a powerful network effect where established products accumulate more reviews, which increases their visibility, which attracts more customers who leave additional reviews. For emerging AI vendors, this presents both a challenge and an opportunity—the barrier to entry is high, but breaking through with consistent, high-quality reviews can dramatically accelerate market penetration. The review volume threshold appears to be approximately 50-75 reviews before an AI product begins to achieve meaningful visibility in LLM recommendations.
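The volume figures above can be turned into a rough triage rule. The following is a minimal Python sketch, not a G2 or LLM-vendor formula: the function name `visibility_tier` and the tier labels are hypothetical, and only the cut-offs at 20, 50, 75, and 100 reviews come from the figures quoted above. The 20 to 49 and 76 to 100 bands are not described in the text and are marked as assumptions in the comments.

```python
def visibility_tier(review_count: int) -> str:
    """Map a G2 review count to a rough, illustrative visibility tier.

    Cut-offs follow the figures quoted in the article; tier names are
    hypothetical labels, not terms used by G2 or any LLM provider.
    """
    if review_count < 0:
        raise ValueError("review_count must be non-negative")
    if review_count < 20:
        return "low"          # comparison baseline in the 3.2x figure
    if review_count < 50:
        return "building"     # assumption: band not described in the article
    if review_count <= 75:
        return "threshold"    # approximate 50-75 visibility threshold
    if review_count <= 100:
        return "emerging"     # assumption: band not described in the article
    return "established"      # over 100 reviews


if __name__ == "__main__":
    for count in (12, 60, 140):
        print(count, visibility_tier(count))
```

A tiering like this is only useful for prioritizing effort; it says nothing about whether a given product will actually be cited.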

Capterra plays a complementary but distinct role in the AI software recommendation ecosystem. While G2 dominates in raw citation frequency, Capterra maintains particular strength in vertical-specific AI solutions, with especially strong coverage in HR tech, accounting software, and project management tools that incorporate AI features. Capterra’s review verification process and its focus on detailed use-case documentation make it particularly valuable for mid-market and enterprise buyers who prioritize implementation insights over raw product features. The platform’s integration with software comparison matrices means that products appearing on Capterra often benefit from an algorithmic boost in search rankings when potential customers research AI solutions. Additionally, Capterra’s reviews tend to emphasize practical deployment challenges and ROI metrics, which LLMs increasingly prioritize when generating recommendations for business-critical AI implementations.

The proliferation of AI-powered recommendation systems has created a verification crisis that review platforms uniquely solve. Large language models, despite their sophistication, struggle with hallucination and outdated information when making product recommendations without external validation. Review platforms provide ground-truth data that LLMs can reference to validate their suggestions and provide current, verified information about AI products. This verification function has become essential as enterprises increasingly rely on AI assistants to help evaluate other AI tools.

The traditional B2B software buyer journey has been fundamentally transformed by the integration of review platforms into AI recommendation workflows. Previously, buyers would conduct independent research, consult with peers, and evaluate vendors through direct engagement—a process that typically took 4-6 weeks. Today, AI-assisted buying processes compress this timeline to 7-10 days, with review platforms serving as the primary source of comparative intelligence. This acceleration benefits vendors with strong review profiles but disadvantages those without established review presence. The buyer journey now typically begins with an AI-powered search query that returns products ranked by review metrics, followed by deep-dive review analysis, and only then direct vendor engagement. This shift means that review optimization has become as critical as product development for AI vendors seeking market traction.

The relationship between review quality and quantity presents a nuanced strategic challenge for AI vendors. While volume clearly impacts visibility—products need a minimum threshold of reviews to achieve algorithmic prominence—quality metrics increasingly influence conversion rates and customer acquisition costs. A product with 80 high-quality, detailed reviews (average rating 4.7/5) typically converts prospects at 2.1 times the rate of a product with 150 reviews but lower average quality (4.2/5 rating). This suggests that review quality, measured by rating consistency, review depth, and recency, may be more important than raw volume for actual sales impact. However, the visibility threshold still requires sufficient volume to be discovered in the first place, creating a dual optimization challenge where vendors must pursue both quantity and quality simultaneously.

Competitive positioning through reviews has become a primary battleground in the AI software market. Vendors increasingly recognize that their review profile directly impacts their competitive standing in LLM-generated recommendations and search rankings. Products that maintain 4.6+ average ratings with consistent review velocity (15-25 new reviews monthly) achieve approximately 40% higher visibility in AI recommendation contexts compared to competitors with lower ratings or sporadic review activity. Strategic review management—including encouraging satisfied customers to leave detailed reviews, responding professionally to critical feedback, and highlighting differentiating features in review responses—has become a core marketing function. The most successful AI vendors treat their review profiles as living competitive assets that require ongoing investment and optimization, similar to how they manage their product roadmaps and customer success programs.
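As a rough illustration of the benchmarks in this section, the sketch below checks a profile against the 4.6 average rating and the lower bound of 15 new reviews per month. `ReviewProfile` and `meets_benchmarks` are hypothetical names, and the input numbers are assumed to come from your own G2 or Capterra reporting; nothing here calls a real review-platform API.

```python
from dataclasses import dataclass


@dataclass
class ReviewProfile:
    """Hypothetical container for figures exported from a review platform."""
    average_rating: float        # on a 5-point scale
    new_reviews_per_month: int   # review velocity


def meets_benchmarks(
    profile: ReviewProfile,
    min_rating: float = 4.6,     # "4.6+" from the article
    min_velocity: int = 15,      # lower end of the 15-25 monthly range
) -> dict[str, bool]:
    """Return which of the two benchmarks the profile currently meets."""
    return {
        "rating": profile.average_rating >= min_rating,
        "velocity": profile.new_reviews_per_month >= min_velocity,
    }


if __name__ == "__main__":
    profile = ReviewProfile(average_rating=4.7, new_reviews_per_month=9)
    print(meets_benchmarks(profile))
```

Reporting the two checks separately, rather than as a single pass or fail, shows whether the gap is in rating or in review velocity.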

AmICited has emerged as a critical monitoring solution for AI vendors seeking to understand their position within the review ecosystem and LLM recommendation landscape. The platform provides real-time tracking of how often AI products are cited in LLM-generated recommendations, correlating this visibility with review metrics, competitive positioning, and market trends. By aggregating data across multiple review platforms and monitoring LLM outputs, AmICited enables vendors to quantify the ROI of review optimization efforts and identify gaps in their review coverage. This monitoring capability is particularly valuable for understanding which review platforms drive the most meaningful visibility and which customer segments are most influential in shaping LLM recommendations. For AI vendors operating in competitive markets, AmICited provides the data-driven insights necessary to prioritize review platform investments and optimize customer advocacy programs.

Compared to alternative monitoring solutions, AmICited offers distinct advantages in the AI-specific context. Traditional SEO monitoring tools focus on search engine rankings but miss the critical LLM recommendation channel entirely. Generic review monitoring platforms track review volume and ratings but lack the AI-specific context and LLM citation tracking that AmICited provides. Specialized AI monitoring tools often focus on social media mentions or news coverage but ignore the review platform channel where purchasing decisions are actually made. AmICited’s integrated approach—combining review platform data, LLM citation tracking, competitive benchmarking, and market trend analysis—provides a 360-degree view of how AI products are perceived and recommended across the entire digital ecosystem. This comprehensive perspective enables vendors to make strategic decisions about where to invest in review optimization, which customer segments to prioritize for advocacy, and how to position their products relative to competitors in LLM-generated recommendation contexts.
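The idea of citation tracking can be illustrated with a small, self-contained Python sketch. This is not AmICited's method or API: the function `citation_share`, the sample answers, and the brand names are all hypothetical, and the answer texts are assumed to have been collected separately from whichever AI assistants you want to sample.

```python
import re
from collections import Counter


def citation_share(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Return the fraction of sampled answers that mention each brand.

    Matching is whole-word and case-insensitive; each answer counts at
    most once per brand, however many times the brand appears in it.
    """
    counts: Counter[str] = Counter()
    for answer in answers:
        for brand in brands:
            pattern = rf"\b{re.escape(brand)}\b"
            if re.search(pattern, answer, flags=re.IGNORECASE):
                counts[brand] += 1
    total = len(answers)
    return {b: (counts[b] / total if total else 0.0) for b in brands}


if __name__ == "__main__":
    # Hypothetical answer texts, standing in for sampled AI responses.
    sample_answers = [
        "For this use case, Acme and Globex are both popular choices.",
        "Many teams start with Globex.",
        "It depends on your budget and team size.",
    ]
    print(citation_share(sample_answers, ["Acme", "Globex"]))
```

Whole-word matching is a deliberately simple choice. Brand names with aliases, or names that collide with common words, would need more careful matching.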

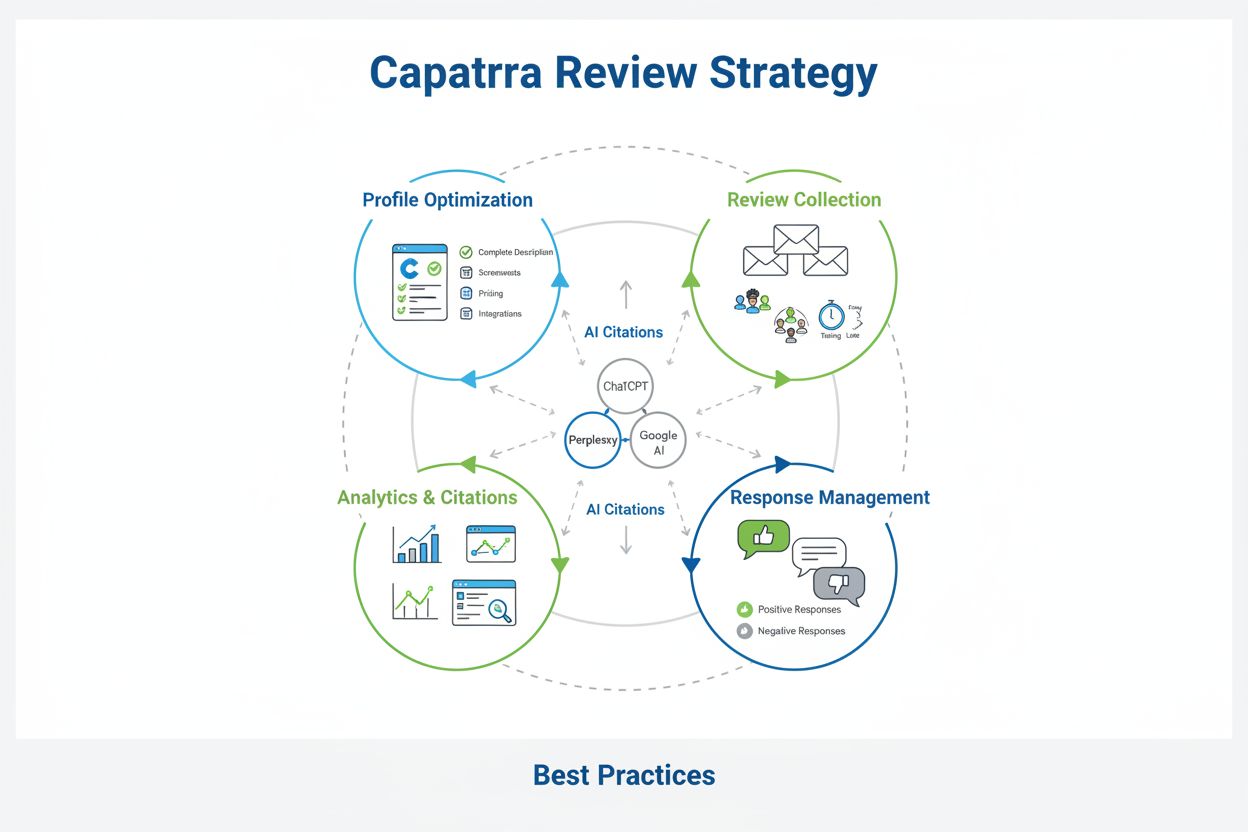

AI vendors should adopt a strategic, multi-platform approach to review optimization that recognizes the distinct roles of G2, Capterra, and other platforms in their market. Rather than pursuing reviews uniformly across all platforms, vendors should prioritize based on their target customer segments, competitive positioning, and the specific platforms where their customers conduct research.

G2 reviews directly influence LLM citations. Research shows a 10% increase in reviews correlates with a 2% increase in AI citations. LLMs trust G2's verified buyer data and standardized schema, making it a primary source for software recommendations in AI-generated answers.
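To make the arithmetic concrete, here is a hedged Python sketch that applies the 10%-to-2% figure as a straight-line rule of thumb. Treating a reported correlation as a linear, causal projection is an assumption of this example, not a claim from the research, and the function names are hypothetical.

```python
def review_growth_pct(current_reviews: int, target_reviews: int) -> float:
    """Percentage growth in review count from current to target."""
    if current_reviews <= 0:
        raise ValueError("current_reviews must be positive")
    return (target_reviews - current_reviews) * 100 / current_reviews


def projected_citation_lift_pct(
    growth_pct: float,
    lift_per_10pct: float = 2.0,  # article figure: +10% reviews ~ +2% citations
) -> float:
    """Linear extrapolation of the quoted correlation (an assumption)."""
    return growth_pct / 10 * lift_per_10pct


if __name__ == "__main__":
    growth = review_growth_pct(current_reviews=50, target_reviews=65)
    lift = projected_citation_lift_pct(growth)
    print(f"Review growth: {growth:.1f}%")
    print(f"Projected citation lift: {lift:.1f}%")
```

Under these assumptions, growing from 50 to 65 reviews is 30% growth, which the rule of thumb maps to a 6% projected increase in AI citations. Read this as an order-of-magnitude estimate rather than a forecast.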

LLMs prioritize review platforms that offer verified buyer information, standardized data structure, and current market activity signals. Both G2 and Capterra provide these attributes at scale, making them trusted sources for AI models to cite when recommending software solutions.

Detailed, comparison-focused reviews with specific use cases and measurable outcomes are most likely to be cited. Reviews that explain problem-solution narratives, compare alternatives, and include quantified results provide the context LLMs need for accurate recommendations.

Optimize your profile with detailed descriptions, encourage customers to leave comprehensive reviews, respond to feedback, and maintain consistent messaging. Focus on getting reviews that compare your solution to alternatives and highlight specific use cases and results.

Quality matters more than quantity. While review volume does correlate with citations, detailed, well-structured reviews with clear verdicts and comparisons are more likely to be extracted and cited by LLMs than generic positive reviews.

AmICited tracks how AI models like ChatGPT, Perplexity, and Google AI Overviews cite your brand across all sources, including review platforms. It provides real-time monitoring of brand mentions, sentiment analysis, and competitive positioning in AI-generated answers.

Review sites are critical LLM seeding platforms because they're heavily crawled by AI models and provide structured, verified information. Optimizing your presence on these platforms is a core component of any LLM seeding strategy for B2B software companies.

Profiles should be reviewed and updated quarterly or whenever significant product changes occur. Regular updates signal to LLMs that your information is current and relevant, improving the likelihood of accurate citations in AI-generated recommendations.

