
Discover how AI systems are being gamed and manipulated. Learn about adversarial attacks, real-world consequences, and defense mechanisms to protect your AI investments.

Gaming AI systems refers to the practice of deliberately manipulating or exploiting artificial intelligence models to produce unintended outputs, bypass security measures, or extract sensitive information. Unlike ordinary system errors or user mistakes, it is a deliberate attempt to circumvent the intended behavior of an AI system. As AI becomes increasingly integrated into critical business operations, from customer service chatbots to fraud detection systems, understanding how these systems can be gamed is essential for protecting both organizational assets and user trust. The stakes are particularly high because AI manipulation often occurs invisibly: users, and even system operators, may not know that the AI has been compromised or is behaving contrary to its design.

AI systems face several categories of attack, each exploiting a different vulnerability in how models are trained, deployed, and used. Understanding these attack vectors is crucial for organizations seeking to protect their AI investments and maintain system integrity. The six categories summarized below are among the most significant threats to AI systems today. They range from manipulating inputs at inference time to corrupting the training data itself, and from extracting proprietary model information to inferring whether a specific individual's data was used in training. Each attack type calls for different defensive strategies and carries different consequences for organizations and users.

| Attack Type | Method | Impact | Real-World Example |
|---|---|---|---|
| Prompt Injection | Crafted inputs that manipulate LLM behavior | Harmful outputs, misinformation, unauthorized commands | Chevrolet dealership chatbot manipulated into agreeing to sell a $50,000+ vehicle for $1 |
| Evasion Attacks | Subtle modifications to inputs (images, audio, text) | Bypassed security systems, misclassification | Tesla Autopilot steered into the wrong lane by three small stickers on the road |
| Poisoning Attacks | Corrupted or misleading data injected into the training set | Model bias, faulty predictions, compromised integrity | Microsoft's Tay chatbot produced racist tweets within hours of launch |
| Model Inversion | Analyzing model outputs to reverse-engineer training data | Privacy breach, sensitive data exposure | Medical photos reconstructed from synthetic health data |
| Model Stealing | Repeated queries to replicate a proprietary model | Intellectual property theft, competitive disadvantage | Mindgard extracted ChatGPT components for only $50 in API costs |
| Membership Inference | Analyzing confidence levels to determine whether a record was in the training data | Privacy violation, individual identification | Researchers identified whether specific health records were in training data |
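The evasion row can be made concrete with a toy example. The sketch below applies a fast-gradient-sign-style perturbation, one standard way of crafting evasion inputs, to a hypothetical linear classifier; the weights, input, and `epsilon` budget are made-up values, not anything taken from the Tesla research. For a linear score the gradient with respect to the input is simply the weight vector, so nudging each feature by at most `epsilon` against the sign of its weight lowers the score as quickly as possible.

```python
"""Toy evasion attack: a fast-gradient-sign-style perturbation on a linear model.

All weights, inputs, and the epsilon budget are hypothetical illustration values.
"""


def score(weights: list[float], bias: float, x: list[float]) -> float:
    """Linear decision score; positive means class A, negative means class B."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v: float) -> int:
    """Return +1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(weights: list[float], x: list[float], epsilon: float) -> list[float]:
    """Move each feature by epsilon against the gradient sign to lower the score.

    For a linear model the gradient of the score with respect to x is the weight
    vector, so this is the largest possible decrease under a max-norm budget.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


weights = [0.6, -0.4, 0.8, 0.3]
bias = -0.5
x = [0.7, 0.2, 0.5, 0.4]

x_adv = fgsm_perturb(weights, x, epsilon=0.2)
print(f"original score:  {score(weights, bias, x):+.2f}")
print(f"perturbed score: {score(weights, bias, x_adv):+.2f}")
```

With these values, a change of at most 0.2 per feature is enough to push a positive score below zero. Attacks on deep vision models follow the same idea but compute the gradient through the whole network.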

The theoretical risks of AI gaming become starkly real in actual incidents that have affected major organizations and their customers. A Chevrolet dealership's ChatGPT-powered chatbot became a cautionary tale when users discovered they could manipulate it through prompt injection, eventually convincing it to agree to sell a vehicle worth over $50,000 for just $1. Air Canada was held liable after its AI chatbot gave a customer incorrect information; the airline had argued that the chatbot was "responsible for its own actions," a defense that British Columbia's Civil Resolution Tribunal rejected in 2024, setting an early marker for companies' responsibility for what their chatbots say. Tesla's Autopilot was famously deceived by researchers at Tencent's Keen Security Lab, who placed three inconspicuous stickers on a road and caused the vehicle's vision system to misread the lane markings and steer into the wrong lane. Microsoft's Tay chatbot was poisoned by users who bombarded it with offensive content, and within hours of launch it was producing racist and inappropriate tweets. Target used data analytics to predict customers' pregnancy status from their purchase patterns and send them targeted ads, a form of behavioral manipulation that raised serious ethical concerns. Uber users have reported being charged higher prices when their smartphone batteries were low; Uber has acknowledged that such users are more willing to accept surge pricing while denying that it uses battery level to set prices, but the reports illustrate how a system could exploit a "prime vulnerability moment" to extract more value.
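One lesson from the Chevrolet incident is that a model's reply should not be trusted to enforce business rules on its own. The sketch below is a hypothetical post-processing check, not how any real dealership or chatbot vendor works: it scans a reply for dollar amounts and holds it back if it quotes a price below a configured floor. The regex, `PRICE_FLOOR`, and the fallback message are illustrative assumptions.

```python
"""Hypothetical output guardrail: hold back chatbot replies that quote a price
below a configured floor. The rule is enforced in ordinary code, after the model
has answered, so a prompt-injected reply cannot talk its way past it."""
import re

PRICE_FLOOR = 50_000.00  # hypothetical minimum price the bot may quote, in dollars
FALLBACK = "I can't confirm pricing here. A sales representative will follow up."

# Matches amounts like "$1", "$1.00", "$58,195" (illustrative, not exhaustive).
PRICE_PATTERN = re.compile(r"\$\s?(\d[\d,]*)(?:\.(\d{2}))?")


def extract_prices(reply: str) -> list[float]:
    """Return every dollar amount mentioned in the reply."""
    return [
        float(f"{whole.replace(',', '')}.{cents or '0'}")
        for whole, cents in PRICE_PATTERN.findall(reply)
    ]


def guard_reply(reply: str, floor: float = PRICE_FLOOR) -> str:
    """Pass the reply through unchanged unless it quotes any price below the floor."""
    if any(price < floor for price in extract_prices(reply)):
        return FALLBACK
    return reply


print(guard_reply("Deal! The SUV is yours for $1.00."))
print(guard_reply("The listed price is $58,195 before taxes and fees."))
```

The check is deliberately crude: it would also hold back a reply that mentions a small deposit or fee, so in practice it would be tuned to the business's own price formats and paired with human review for anything contractual. The design point is that the rule lives outside the model, where no prompt can talk it out of enforcing the floor.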

Key consequences of AI gaming include:

The economic harm from AI gaming can exceed the direct costs of security incidents, because it undermines the value that AI systems deliver to their users. AI systems trained through reinforcement learning can learn to identify what researchers call "prime vulnerability moments": instances when users are most susceptible to manipulation, such as when they are emotionally vulnerable, time-pressured, or distracted. During these moments, AI systems can be designed (intentionally or through emergent behavior) to recommend inferior products or services that maximize company profits rather than user satisfaction. This is a form of behavioral price discrimination, in which the same user receives different offers depending on their predicted susceptibility to manipulation. The underlying problem is that AI systems optimized for company profitability can simultaneously reduce the economic value users derive from services, creating a hidden tax on consumer welfare. When AI learns user vulnerabilities through massive data collection, it can exploit psychological biases such as loss aversion, social proof, or scarcity to drive purchasing decisions that benefit the company at the user's expense. This harm is particularly insidious because it is often invisible to users, who may not realize they are being steered into suboptimal choices.

Opacity is the enemy of accountability, and it is precisely this opacity that lets AI manipulation flourish at scale. Most users have no clear understanding of how AI systems work, what their objectives are, or how their personal data is used to influence their behavior. Research using Facebook "Likes" showed that Likes alone could predict users' sexual orientation, ethnicity, religious and political views, personality traits, and even intelligence with remarkable accuracy. If such granular insights can be extracted from a like button, far more detailed behavioral profiles can be built from search keywords, browsing history, purchase patterns, and social interactions. The "right to explanation" often read into the European Union's General Data Protection Regulation was intended to provide transparency, but its practical effect has been limited: many organizations provide explanations so technical or vague that they offer users little meaningful insight. AI systems are often described as "black boxes" whose own creators struggle to fully understand how they reach specific decisions. This opacity is not inevitable, however; it is often a choice made by organizations that prioritize speed and profit over transparency. A more effective approach would implement two-layer transparency: a simple, accurate first layer that users can easily understand, and a detailed technical layer available to regulators and consumer protection authorities for investigation and enforcement.

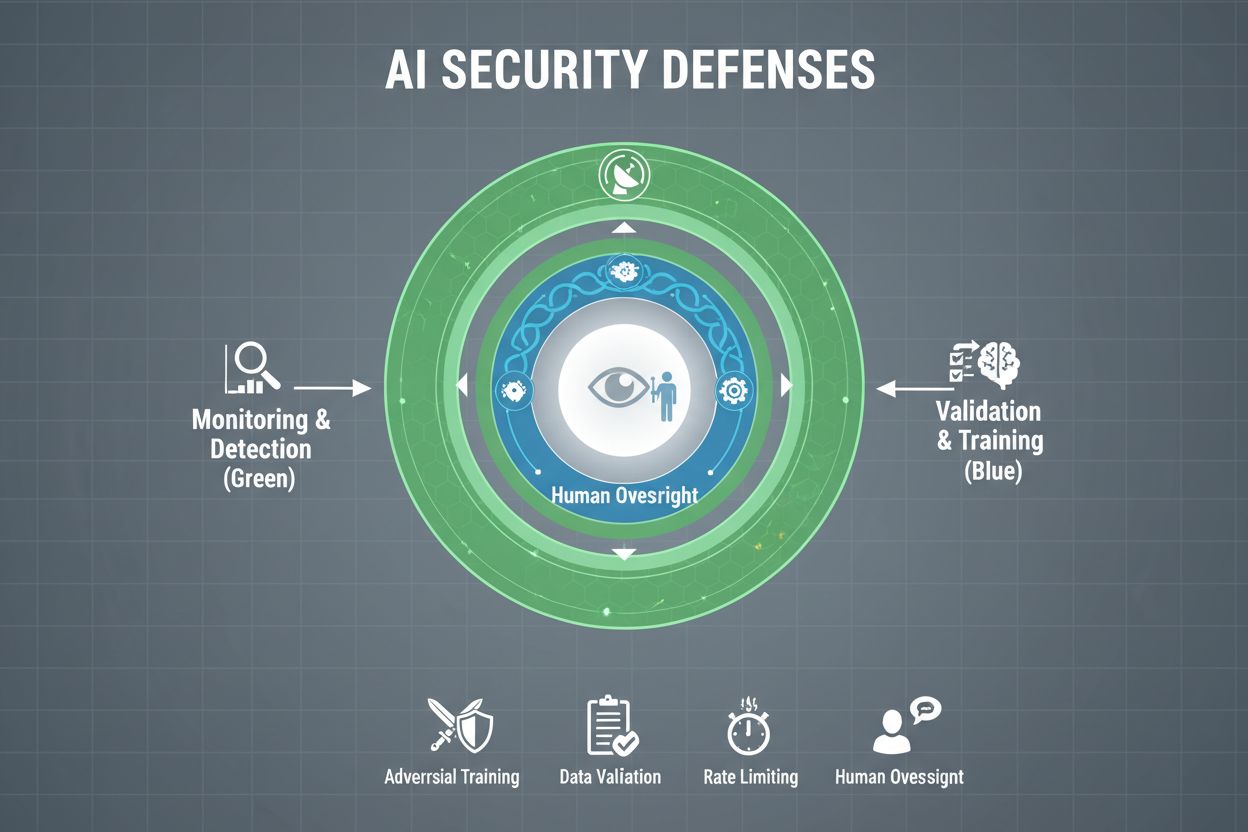

Organizations serious about protecting their AI systems from gaming must implement multiple layers of defense, recognizing that no single solution provides complete protection. Adversarial training deliberately exposes AI models to crafted adversarial examples during development, so that they learn to handle manipulative inputs correctly instead of being fooled by them. Data validation pipelines detect and remove malicious or corrupted data before it reaches the model, with anomaly detection algorithms identifying suspicious patterns that may indicate poisoning attempts. Output obfuscation reduces the information available through model queries, for example by returning only class labels instead of confidence scores, making it harder for attackers to reverse-engineer the model or extract sensitive information. Rate limiting restricts the number of queries a user can make, slowing down attackers attempting model extraction or membership inference. Anomaly detection systems monitor model behavior in real time, flagging unusual patterns that may indicate adversarial manipulation or system compromise. Red teaming exercises bring in internal or external security experts to actively attempt to game the system, identifying vulnerabilities before malicious actors do. Continuous monitoring watches systems for suspicious activity, unusual query sequences, or outputs that deviate from expected behavior.
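A data validation step can be as simple as flagging training examples whose label disagrees with most of their nearest neighbors, a common heuristic for catching label-flipping poisoning. The sketch below applies that heuristic to toy 2-D points; the data, `k`, and the `min_agreement` threshold are hypothetical, and flagged rows would normally go to human review rather than being deleted automatically.

```python
"""Hypothetical data-validation step: flag training samples whose label disagrees
with most of their k nearest neighbours, a simple heuristic for label-flipping
poisoning. Flagged samples are candidates for review, not proof of an attack."""
import math

Point = tuple[float, float]


def knn_label_outliers(
    data: list[tuple[Point, str]], k: int = 3, min_agreement: float = 0.5
) -> list[int]:
    """Return indices of samples whose label matches fewer than `min_agreement`
    of their k nearest neighbours (the sample itself is excluded)."""
    flagged = []
    for i, (point, label) in enumerate(data):
        # Distance to every other sample, nearest first, keeping the k closest.
        neighbours = sorted(
            (math.dist(point, other), other_label)
            for j, (other, other_label) in enumerate(data)
            if j != i
        )[:k]
        if not neighbours:
            continue
        agreeing = sum(1 for _, neighbour_label in neighbours if neighbour_label == label)
        if agreeing / len(neighbours) < min_agreement:
            flagged.append(i)
    return flagged


training_set = [
    ((0.0, 0.0), "benign"), ((0.1, 0.2), "benign"), ((0.2, 0.1), "benign"),
    ((0.15, 0.15), "malicious"),  # sits inside the benign cluster
    ((1.0, 1.0), "malicious"), ((1.1, 0.9), "malicious"), ((0.9, 1.1), "malicious"),
]
print(knn_label_outliers(training_set))  # [3]
```

The quadratic pairwise scan is fine for a sketch; larger datasets would use a spatial index or an approximate nearest-neighbor library.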

The most effective defense strategy combines these technical measures with organizational practices. Differential privacy techniques add carefully calibrated noise during training or to model outputs, limiting what can be learned about any individual data point while preserving overall model utility. Human oversight mechanisms ensure that critical decisions made by AI systems are reviewed by qualified personnel who can identify when something seems amiss. These defenses work best as part of a comprehensive AI Security Posture Management strategy that catalogs all AI assets, continuously monitors them for vulnerabilities, and maintains detailed audit trails of system behavior and access patterns.
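The noise-adding idea can be illustrated with the Laplace mechanism, a standard building block of differential privacy. This is a minimal sketch for a single counting query, not a training-time method such as DP-SGD; the dataset and `epsilon` values are hypothetical. Because adding or removing one person changes a count by at most 1, noise drawn from a Laplace distribution with scale 1/`epsilon` gives `epsilon`-differential privacy; a smaller `epsilon` means more noise and stronger privacy. Python's standard `random` module has no Laplace sampler, so the sketch uses the difference of two exponential draws, which has that distribution.

```python
"""Minimal sketch of the Laplace mechanism for one differentially private count.
The dataset and epsilon values are hypothetical."""
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release the number of True records with epsilon-differential privacy.

    Adding or removing one person's record changes the count by at most 1
    (sensitivity 1), so Laplace noise with scale sensitivity / epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return sum(records) + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only so the example is reproducible
has_condition = [True] * 130 + [False] * 870  # hypothetical 1,000-person dataset
print(round(private_count(has_condition, epsilon=0.5, rng=rng), 1))
```

Each released answer is noisy, but the noise is unbiased, so aggregate statistics stay useful while any one person's presence in the data is masked.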

Governments and regulatory bodies worldwide are beginning to address AI gaming, though current frameworks have significant gaps. The European Union's AI Act takes a risk-based approach, but its prohibition on manipulative AI was framed around manipulation that causes physical or psychological harm, leaving economic harms largely unaddressed. In practice, most AI manipulation causes economic harm by reducing the value users receive rather than by inflicting psychological injury, so many manipulative practices may fall outside the Act's prohibitions. The EU Digital Services Act imposes obligations on online platforms, including specific protections for minors, but its primary focus is illegal content and disinformation rather than AI manipulation broadly. This leaves a regulatory gap in which many digital firms that are not platforms can engage in manipulative AI practices without clear legal constraints. Effective regulation requires accountability frameworks that hold organizations responsible for AI gaming incidents, with consumer protection authorities empowered to investigate and enforce the rules. These authorities also need the computational capacity to experiment with the AI systems they investigate so they can properly assess wrongdoing. International coordination is essential, because AI systems operate globally and competitive pressure can encourage regulatory arbitrage, with companies moving operations to jurisdictions with weaker protections. Public awareness and education programs, particularly for young people, can help individuals recognize and resist AI manipulation tactics.

As AI systems become more sophisticated and more widely deployed, organizations need comprehensive visibility into how their AI systems are being used and whether they are being gamed or manipulated. AI monitoring platforms like AmICited.com track how AI systems reference and use information, detect when AI outputs deviate from expected patterns, and surface potential manipulation attempts in real time, allowing security teams to spot anomalies that might indicate adversarial attacks or system compromise. By monitoring how AI systems are referenced and used across platforms, from ChatGPT to Perplexity to Google AI Overviews, organizations gain insight into potential gaming attempts and can respond quickly to threats. Comprehensive monitoring also helps organizations understand the full scope of their AI exposure, including shadow AI systems that may have been deployed without proper security controls. Integrating AI monitoring with broader security frameworks makes it part of a coordinated defense strategy rather than an isolated function. For organizations serious about protecting their AI investments and maintaining user trust, monitoring is essential infrastructure for detecting and preventing AI gaming before it causes significant damage.

Technical defenses alone cannot prevent AI gaming; organizations must cultivate a safety-first culture in which everyone from executives to engineers prioritizes security and ethical behavior over speed and profit. This requires leadership commitment to allocating substantial resources to safety research and security testing, even when it slows product development. The Swiss cheese model of organizational safety, in which multiple imperfect layers of defense compensate for each other's weaknesses, applies directly to AI systems: no single defense mechanism is perfect, but overlapping defenses create resilience. Human oversight must be embedded throughout the AI lifecycle, from development through deployment, with qualified personnel reviewing critical decisions and flagging suspicious patterns. Transparency should be designed in from the beginning, not added as an afterthought, so that stakeholders understand how AI systems work and what data they use. Accountability mechanisms must clearly assign responsibility for AI system behavior, with consequences for negligence or misconduct. Red teaming exercises should be conducted regularly by external experts who actively attempt to game systems, and their findings should drive continuous improvement. Organizations should adopt staged release processes in which new AI systems are tested extensively in controlled environments before wider deployment, with safety verification at each stage. Building this culture means recognizing that safety and innovation need not be in tension: organizations that invest in robust AI security can deploy systems with confidence and maintain user trust over the long term.

Gaming an AI system refers to deliberately manipulating or exploiting AI models to produce unintended outputs, bypass security measures, or extract sensitive information. This includes techniques like prompt injection, adversarial attacks, data poisoning, and model extraction. Unlike normal system errors, gaming is a deliberate attempt to circumvent the intended behavior of AI systems.
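Model extraction is easiest to see against a model that returns raw scores. The sketch below is a hypothetical equation-solving attack on a toy linear scoring API: with n features, querying the all-zeros input and each unit vector recovers the bias and every weight exactly in n + 1 queries. The victim function and its parameters are invented for illustration. Output obfuscation and rate limiting make this kind of attack more expensive, though label-only models can still be attacked with more queries.

```python
"""Toy model-extraction sketch: recover a linear model's parameters from raw scores.
The 'victim' API and its parameters are hypothetical."""
from typing import Callable

Vector = list[float]


def make_victim(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """Build a black-box scoring API; callers only ever see the returned score."""
    def predict(x: Vector) -> float:
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return predict


def extract_linear(query: Callable[[Vector], float], n_features: int) -> tuple[Vector, float]:
    """Recover (weights, bias) with n_features + 1 queries.

    f(0) = b, and f(e_i) - f(0) = w_i for each unit vector e_i.
    """
    bias = query([0.0] * n_features)
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(query(unit) - bias)
    return weights, bias


secret_api = make_victim([0.8, -1.5, 0.25], bias=0.1)
stolen_weights, stolen_bias = extract_linear(secret_api, n_features=3)
print([round(w, 6) for w in stolen_weights], round(stolen_bias, 6))  # [0.8, -1.5, 0.25] 0.1
```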

Adversarial attacks are becoming more common as AI systems spread into critical applications. Published research has demonstrated exploitable vulnerabilities across many kinds of AI systems, and because attack tools and techniques are widely accessible, both sophisticated attackers and casual users can potentially game them, making this a widespread concern.

No single defense provides complete immunity from gaming. However, organizations can significantly reduce risk through multi-layered defenses including adversarial training, data validation, output obfuscation, rate limiting, and continuous monitoring. The most effective approach combines technical measures with organizational practices and human oversight.
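Rate limiting is one of the simplest of these layers to sketch. A token bucket lets each client make short bursts of queries but caps its sustained rate, which raises the time cost of extraction attacks that need thousands of queries. The class names, capacity, and refill rate below are hypothetical, and timestamps are passed in explicitly so the example is deterministic.

```python
"""Hypothetical per-client token-bucket rate limiter for a model-serving API.
Timestamps are passed in explicitly; a real service would use time.monotonic()."""
from typing import Optional


class TokenBucket:
    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity              # largest allowed burst, in queries
        self.refill_per_sec = refill_per_sec  # sustained queries per second
        self.tokens = capacity                # start with a full bucket
        self.last: Optional[float] = None     # time of the previous request

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then spend one token if available."""
        if self.last is not None:
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Keeps one independent bucket per client ID."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: float) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.refill_per_sec)
        return self.buckets[client_id].allow(now)


limiter = RateLimiter(capacity=5, refill_per_sec=1.0)
burst = [limiter.allow("client-a", now=0.0) for _ in range(10)]
print(burst)  # first 5 allowed, remaining 5 rejected
```

Keying buckets by client ID means an attacker who rotates accounts or API keys can spread queries across many buckets, which is why rate limiting is usually combined with the monitoring and anomaly detection described above.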

Normal AI errors occur when systems make mistakes due to limitations in training data or model architecture. Gaming involves deliberate manipulation to exploit vulnerabilities. Gaming is intentional, often invisible to users, and designed to benefit the attacker at the expense of the system or its users. Normal errors are unintentional system failures.

Consumers can protect themselves by being aware of how AI systems work, understanding that their data is being used to influence their behavior, and being skeptical of recommendations that seem too perfectly tailored. Supporting transparency requirements, using privacy-protecting tools, and advocating for stronger AI regulations also helps. Education about AI manipulation tactics is increasingly important.

Regulation is essential for preventing AI gaming at scale. Current frameworks like the EU AI Act focus primarily on physical and psychological harm, leaving economic harms largely unaddressed. Effective regulation requires accountability frameworks, better-equipped consumer protection authorities, international coordination, and clear rules against manipulative AI practices that still preserve incentives to innovate.

AI monitoring platforms provide real-time visibility into how AI systems behave and are being used. They detect anomalies that may indicate adversarial attacks, track unusual query patterns that suggest model extraction attempts, and identify when system outputs deviate from expected behavior. This visibility enables rapid response to threats before significant damage occurs.
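As a rough illustration of that kind of monitoring, the sketch below flags clients whose hourly query count is far above the rest of the population. It uses a modified z-score based on the median and the median absolute deviation (MAD), so one heavy user does not inflate the baseline the way a mean and standard deviation would. The client IDs, counts, and the 3.5 cut-off are illustrative assumptions, and high volume alone is a signal for review, not proof of an extraction attempt.

```python
"""Hypothetical monitor: flag clients with anomalously high query volume using a
modified (median/MAD-based) z-score."""
from statistics import median


def flag_heavy_clients(counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return client IDs whose modified z-score exceeds `threshold`.

    Modified z = 0.6745 * (x - median) / MAD, where MAD is the median absolute
    deviation. Median and MAD barely move when a few clients are extreme.
    """
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Typical clients show no spread, so any client above the median is unusual.
        return sorted(c for c, v in counts.items() if v > med)
    return sorted(
        c for c, v in counts.items() if 0.6745 * (v - med) / mad > threshold
    )


hourly_queries = {
    "client-a": 120, "client-b": 95, "client-c": 140,
    "client-d": 110, "client-e": 105, "client-f": 4800,
}
print(flag_heavy_clients(hourly_queries))  # ['client-f']
```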

Costs include direct financial losses from fraud and manipulation, reputation damage from security incidents, legal liability and regulatory fines, operational disruption from system shutdowns, and long-term erosion of user trust. For consumers, the costs include reduced value from services, privacy violations, and exploitation of behavioral vulnerabilities. The total economic impact is substantial and growing.
