Visual Search and AI: Image Optimization for AI Discovery

Learn how visual search and AI are transforming image discovery. Optimize your images for Google Lens, AI Overviews, and multimodal LLMs to boost visibility in ...

Learn how Google Lens is transforming visual search with 100+ billion searches annually. Discover optimization strategies to ensure your brand appears in visual discovery results and capture shopping-intent traffic.

Visual search has fundamentally transformed how people discover information online, shifting from text-based queries to camera-first interactions. Google Lens, the company’s flagship visual search technology, now powers nearly 20 billion visual searches every month, with over 100 billion visual searches occurring through Lens and Circle to Search in 2024 alone. This explosive growth reflects a broader consumer behavior shift: people increasingly prefer pointing their camera at something they want to learn about rather than typing a description.

The platform’s reach is staggering: 1.5 billion people now use Google Lens monthly, with younger users aged 18-24 showing the highest engagement rates. What makes this particularly significant for brands is that one in five visual searches has direct shopping intent, which works out to approximately 20 billion of the 100 billion annual searches. These aren’t casual curiosity searches; they’re potential customers actively looking to purchase something they’ve seen in the real world.

At its core, Google Lens leverages three interconnected AI technologies to understand and respond to visual queries. Convolutional Neural Networks (CNNs) form the foundation, analyzing pixel patterns to identify objects, scenes, and visual relationships with remarkable accuracy. These deep learning models are trained on billions of labeled images, enabling them to recognize everything from common household items to rare plant species.

Optical Character Recognition (OCR) handles text detection and extraction, allowing Lens to read menus, signs, documents, and handwritten notes. When you point your camera at a foreign language menu or a street sign, OCR converts the visual text into digital data that can be processed and translated. Natural Language Processing (NLP) then interprets this text contextually, understanding not just what words are present but what they mean in relation to your query.

The real power emerges from multimodal AI—the ability to process multiple types of input simultaneously. You can now point your camera at a product, ask a voice question about it, and receive an AI-powered response that combines visual understanding with conversational context. This integration creates a search experience that feels natural and intuitive.

| Feature | Traditional Text Search | Google Lens |
|---|---|---|
| Input Method | Typed keywords | Image, video, or voice |
| Recognition Capability | Keywords only | Objects, text, context, relationships |
| Response Speed | Seconds | Instant |
| Context Understanding | Limited to query text | Comprehensive visual context |
| Real-time Capability | No | Yes, with live camera |
| Accuracy for Visual Items | Low (hard to describe) | High (direct visual match) |

The practical applications of Google Lens extend far beyond casual curiosity. In shopping, users photograph products they see in stores, on social media, or in videos, then instantly find where to buy them and compare prices across retailers. A customer spots a piece of furniture at a friend’s house, takes a photo, and discovers the exact item available for purchase—all without leaving the moment.

Education represents another powerful use case, particularly in developing markets. Students photograph textbook problems or classroom materials in English and use Lens to translate them into their native language, then access homework help and explanations. This democratizes access to educational resources across language barriers.

Travel and exploration leverage Lens for landmark identification, restaurant discovery, and cultural learning. Tourists photograph unfamiliar architecture or signage and instantly receive historical context and information. Nature enthusiasts identify plants, animals, and insects during outdoor activities, turning casual observations into learning opportunities.

Product research and comparison have become seamless. Someone sees a handbag they like, photographs it, and Lens returns not just the exact product but similar items in different price ranges from nearby retailers. This capability has fundamentally changed how consumers shop, removing friction from the discovery-to-purchase journey.

The opportunity is staggering: 20 billion shopping-intent visual searches annually represent a massive channel that most brands are completely ignoring. While competitors chase AI Overview placement—a crowded space dominated by massive publishers and established retailers—visual search remains relatively untapped territory with first-mover advantages available right now.

Brands that optimize for visual discovery gain a competitive edge that transcends traditional SEO. When your product appears in Google Lens results, you’re not competing on keywords or content quality; you’re competing on visual relevance and context. A furniture retailer whose products appear in Lens searches for “nightstand queen bedroom” captures customers at the exact moment they’re ready to buy, before they’ve even visited a search engine.

The conversion implications are profound. Visual search users have already identified what they want—they’re not browsing or researching, they’re buying. This intent-rich traffic converts at significantly higher rates than traditional search traffic. Additionally, visual search reduces pre-purchase friction: customers can see exactly how a product looks in context, understand its scale relative to known objects, and make confident purchasing decisions.

The competitive timing advantage is real. Most agencies and brands still don’t understand that visual search exists as a distinct channel. The companies implementing visual search optimization now are building a lead in market share while competitors remain focused on yesterday’s search paradigms.

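Optimizing for visual discovery requires a fundamentally different approach than traditional image SEO. The essential strategies are:

- Provide multiple product angles.
- Include known-size references (beds, doors, people) for scale context.
- Write descriptive alt text.
- Implement Product and Video schema markup.
- Create lifestyle photography showing real-world use.
- Keep seasonal products online with out-of-stock indicators.
- Use descriptive filenames.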

Each of these elements contributes to how Google Lens understands and ranks your visual content. The more context you provide, the more likely your products appear in relevant visual searches.

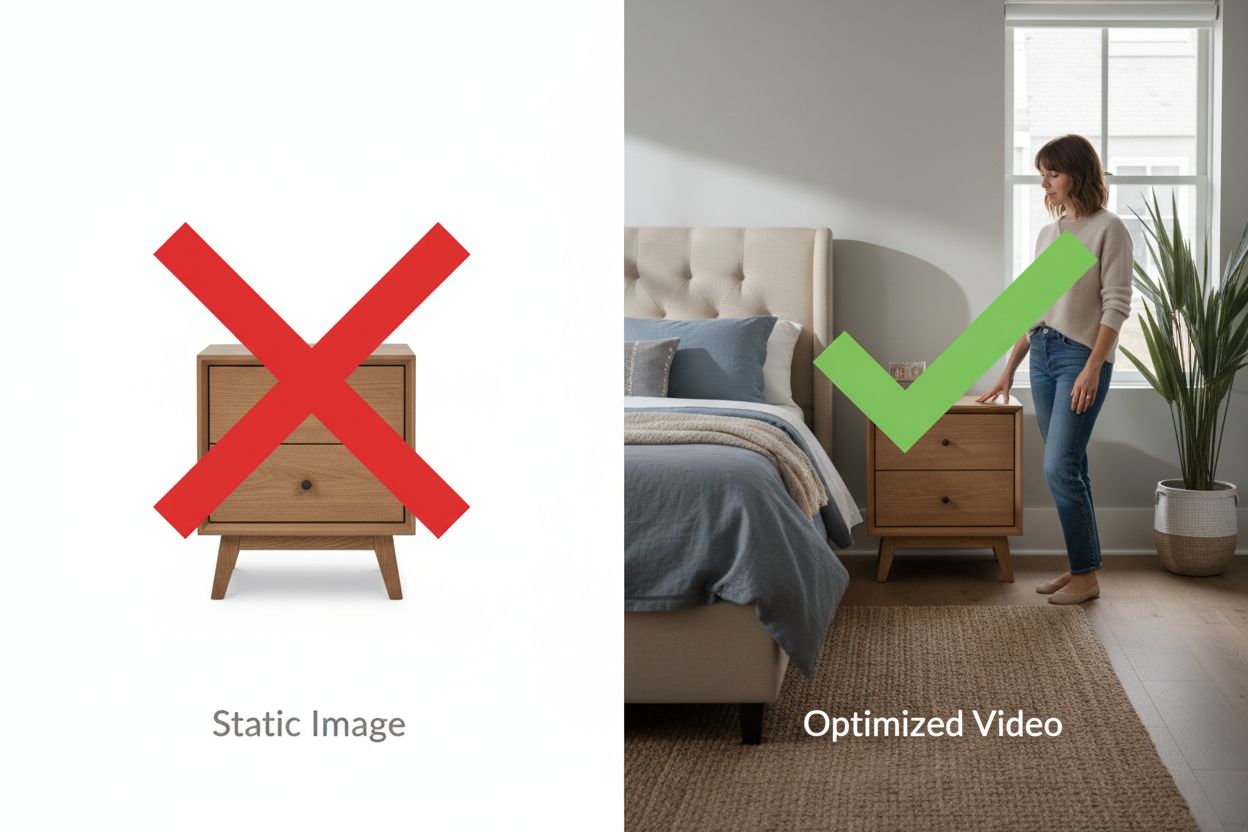

Static images are table stakes in visual search optimization; video is your competitive advantage. Google Lens extracts information from video frames, meaning a 30-second product demonstration video can generate dozens of discoverable moments that static photography cannot.

Video demonstrates scale in ways photographs cannot. When you show a nightstand positioned next to a queen-size bed with a person standing nearby, Lens can infer approximate dimensions from the spatial relationships. When you demonstrate a product in use (a waterproof bag surviving a rainstorm, a standing desk supporting dual monitors, a tent withstanding heavy rain), you’re providing proof that transcends claims.

The conversion impact is measurable. Ecommerce sites that add product videos see conversion rate increases of 20-40% because customers can visualize products in their own spaces before purchasing. These same videos become discoverable in Google Lens searches, driving traffic from an entirely new channel.

The technical requirements are straightforward: 15-45 second videos showing products from multiple angles with clear scale context, uploaded directly to your website (not YouTube embeds for product pages), with descriptive filenames and schema markup. You don’t need Hollywood production quality; authentic smartphone footage showing genuine context often outperforms sterile studio videos because the context is more valuable than the production value.
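As a rough illustration of the schema markup mentioned above, the following Python sketch builds schema.org `Product` and `VideoObject` JSON-LD and wraps each in a script tag. The property names come from schema.org; the function names, the product details, and the `example.com` URLs are hypothetical placeholders, and which properties Google actually uses for Lens is not something this sketch asserts.

```python
import json


def build_markup(name, description, image_urls, video_url,
                 thumbnail_url, upload_date, duration_seconds):
    """Return (product, video) dicts using schema.org vocabulary.

    All argument values are supplied by the caller; nothing here is
    fetched or validated against Google's requirements.
    """
    product = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": list(image_urls),  # several angles of the same product
    }
    video = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": f"{name} demonstration",
        "description": description,
        "contentUrl": video_url,        # self-hosted file, not an embed
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,      # ISO 8601 date string
        "duration": f"PT{duration_seconds}S",  # ISO 8601 duration
    }
    return product, video


def to_script_tag(obj):
    """Serialize one JSON-LD object into an HTML script tag."""
    body = json.dumps(obj, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product used only for illustration.
product, video = build_markup(
    name="Oak Nightstand",
    description="Solid oak nightstand shown next to a queen-size bed.",
    image_urls=[
        "https://example.com/img/oak-nightstand-front.jpg",
        "https://example.com/img/oak-nightstand-side.jpg",
    ],
    video_url="https://example.com/video/oak-nightstand-demo.mp4",
    thumbnail_url="https://example.com/img/oak-nightstand-thumb.jpg",
    upload_date="2025-01-15",
    duration_seconds=30,
)
print(to_script_tag(product))
print(to_script_tag(video))
```

The two tags would be placed in the HTML of the product page that hosts the images and the video.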

Implementing visual search optimization requires a strategic approach. Start by auditing your current visual assets in Google Search Console’s Performance report, filtered by the “Image” search type. Most brands discover they’re receiving thousands of impressions but minimal clicks, indicating a massive optimization opportunity.
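One way to make the impressions-versus-clicks audit concrete is to compute click-through rate per page from exported Search Console data and flag pages with many impressions but few clicks. The sketch below assumes the rows have already been exported and loaded; the `PageStats` type, the sample rows, and both thresholds are hypothetical choices for illustration, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    impressions: int
    clicks: int

    @property
    def ctr(self):
        # Click-through rate; 0.0 when there are no impressions.
        return self.clicks / self.impressions if self.impressions else 0.0


def optimization_candidates(rows, min_impressions=1000, max_ctr=0.01):
    """Pages that are seen often but rarely clicked, busiest first.

    The default thresholds are arbitrary assumptions.
    """
    flagged = [
        r for r in rows
        if r.impressions >= min_impressions and r.ctr <= max_ctr
    ]
    return sorted(flagged, key=lambda r: r.impressions, reverse=True)


# Hypothetical image-search rows.
rows = [
    PageStats("/products/oak-nightstand", 5000, 20),
    PageStats("/products/standing-desk", 12000, 60),
    PageStats("/products/camping-tent", 800, 2),
    PageStats("/products/waterproof-bag", 3000, 150),
]
for page in optimization_candidates(rows):
    print(f"{page.url}: {page.impressions} impressions, CTR {page.ctr:.2%}")
```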

Identify your top 50 products by traffic and revenue, then assess their current visual content. Which products have multiple angles? Which have videos? Which lack lifestyle photography? This audit reveals where your optimization efforts will generate the highest ROI. Create a content roadmap prioritizing products with the highest search volume and commercial intent.

The implementation roadmap spans 60-90 days. Weeks 1-2 focus on planning and prioritization. Weeks 3-4 involve content creation—shooting product videos, lifestyle photography, and creating demonstration content. Weeks 5-6 handle technical optimization: renaming files, writing alt text, implementing schema markup, and uploading content. Weeks 7-8 focus on monitoring and iteration, tracking which products and content types generate the most visual search traffic.
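The file-renaming and alt-text steps in weeks 5-6 can be partly scripted. The following is a minimal sketch, assuming each image is tagged with a product name and a view label; the helper names and the naming pattern are hypothetical, and generated alt text should still be reviewed by a person.

```python
import re
import unicodedata
from pathlib import PurePosixPath


def slugify(text):
    """Lowercase ASCII slug with hyphens between words."""
    ascii_text = (
        unicodedata.normalize("NFKD", text)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def descriptive_filename(original, product_name, view):
    """Replace a camera-style name such as IMG_4032.JPG with a descriptive one."""
    ext = PurePosixPath(original).suffix.lower()
    return f"{slugify(product_name)}-{slugify(view)}{ext}"


def alt_text(product_name, view, context=""):
    """Assemble simple alt text from the product, the view, and optional context."""
    parts = [product_name, f"{view} view"]
    if context:
        parts.append(context)
    return ", ".join(parts)


print(descriptive_filename("IMG_4032.JPG", "Oak Nightstand", "Side Angle"))
print(alt_text("Oak Nightstand", "side", "next to a queen-size bed"))
```

Keeping the extension from the original file avoids accidentally mislabeling the image format during a bulk rename.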

Monitor Google Search Console’s Performance report filtered by “Image” search type to track progress. Expect 30-60 days before meaningful traffic increases appear, as Google needs time to re-crawl and index your new visual content. Track conversions from image search traffic specifically using UTM parameters or channel grouping in Google Analytics to measure ROI.

Google’s roadmap for visual search continues expanding in exciting directions. Search Live, rolling out in 2025, enables real-time conversation with Search—you can point your camera at a painting and ask “What style is this?” then follow up with “Who are famous artists in that style?” creating a seamless, conversational visual search experience.

Multimodal AI capabilities continue advancing, allowing Lens to understand increasingly complex visual queries. Rather than just identifying objects, Lens can now understand relationships, contexts, and nuanced questions about what you’re seeing. Circle to Search expansion brings gesture-based visual search to more devices and platforms, making visual discovery even more accessible.

Integration across Google’s ecosystem deepens the opportunity. Google Lens is now built into Chrome desktop, meaning visual search is available whenever inspiration strikes. As these capabilities expand globally and to more platforms, the competitive advantage of early optimization becomes even more pronounced.

The brands that prepare now—optimizing their visual content, creating demonstration videos, and implementing proper schema markup—will dominate visual search results as the channel continues its explosive growth. The question isn’t whether visual search will matter for your business; it’s whether you’ll be visible when customers search visually for what you sell.

Google Lens is Google's visual search technology that uses AI to identify objects, text, and scenes from images or video. It employs convolutional neural networks (CNNs) for object recognition, optical character recognition (OCR) for text detection, and natural language processing (NLP) for understanding context. Users can point their camera at something and ask questions about it, receiving instant AI-powered answers and related information.

Traditional image search relies on keywords and metadata to find visually similar images. Google Lens understands the actual content of images—objects, relationships, context, and meaning—allowing it to match products and information based on visual similarity rather than text descriptions. This makes it far more effective for finding items that are difficult to describe in words, like furniture, fashion, or landmarks.

Google Lens processes over 100 billion visual searches annually, with 20 billion having direct shopping intent. Users performing visual searches are actively looking to purchase something they've seen, making this traffic highly valuable. Optimizing for visual discovery captures customers at their moment of intent, before they've even typed a search query, resulting in higher conversion rates than traditional search traffic.

Google Lens requires opening the app and taking a photo or uploading an image. Circle to Search is a gesture-based feature available on Android devices that lets you circle, tap, or highlight objects directly on your screen without switching apps. Both use the same underlying visual search technology, but Circle to Search offers a faster, more seamless experience for users already viewing content on their phones.

Optimize for Google Lens by providing multiple product angles, including known-size references (beds, doors, people) for scale context, writing descriptive alt text, implementing Product and Video schema markup, creating lifestyle photography showing real-world use, keeping seasonal products online with out-of-stock indicators, and using descriptive filenames. Each element helps Google Lens understand your visual content better.

Video is a game-changer for visual search because Google Lens extracts information from video frames, creating multiple discoverable moments from a single video. Videos demonstrate scale, functionality, and real-world use in ways static images cannot. Products with demonstration videos see 20-40% higher conversion rates and appear more frequently in visual search results, making video essential for competitive advantage.

Expect 30-60 days before meaningful traffic increases appear in Google Search Console's Performance report under the 'Image' search type. Google needs time to re-crawl and index your new visual content. However, you should see impression increases within 30 days, which indicate that Google is discovering and indexing your optimized content. Conversion improvements typically follow within 60-90 days as traffic volume increases.

Yes. To see image search traffic, filter Google Search Console's Performance report by the 'Image' search type. For more detailed conversion tracking in Google Analytics, use UTM parameters on product pages or create a custom channel grouping for image search traffic. Monitor metrics like click-through rate, conversion rate, and average order value specifically for image search traffic to measure ROI on your optimization efforts.

