AI Visibility Content Governance: Policy Framework

Learn how to implement effective AI content governance policies with visibility frameworks. Discover regulatory requirements, best practices, and tools for mana...

Learn how government agencies can optimize their digital presence for AI systems like ChatGPT and Perplexity. Discover strategies for improving AI visibility, ensuring transparency, and implementing AI responsibly in the public sector.

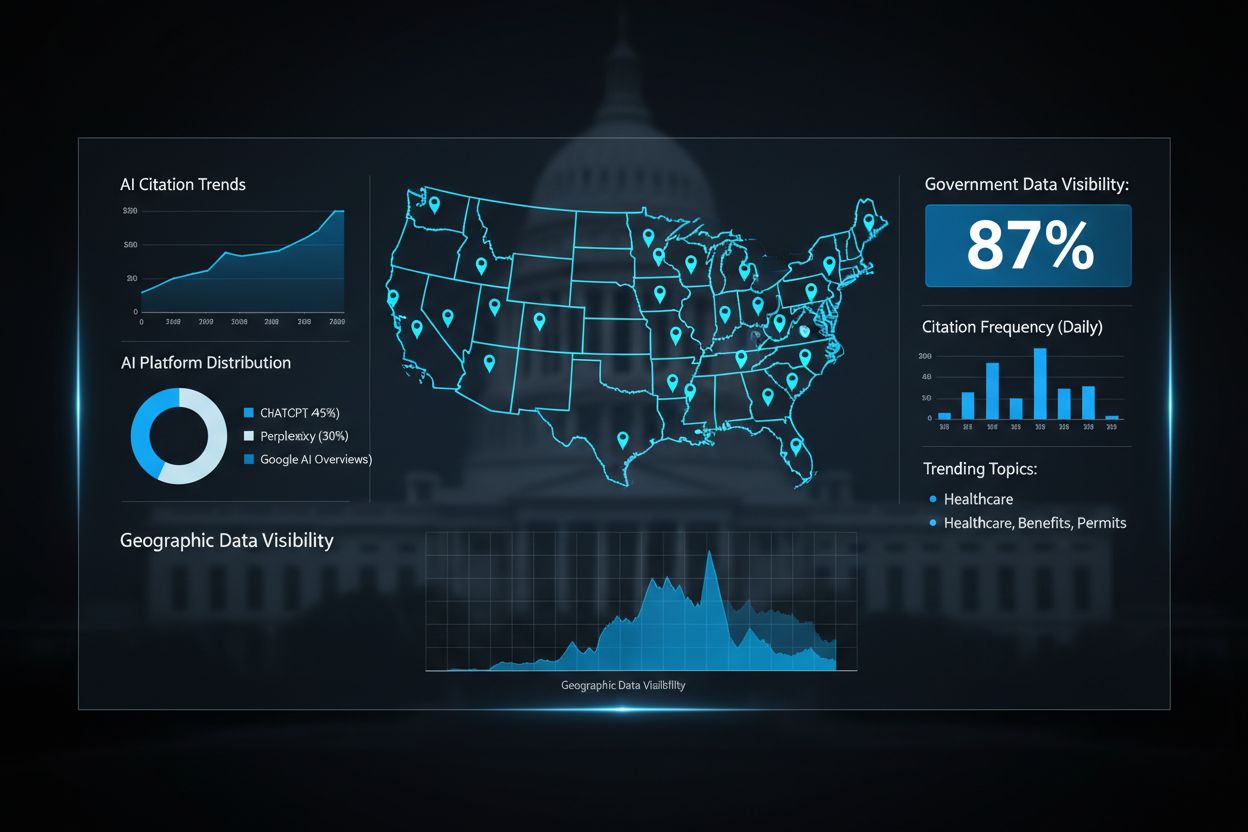

Government AI visibility refers to the extent to which artificial intelligence systems, including ChatGPT, Perplexity, and Google AI Overviews, can discover, access, and cite government data and resources when responding to public inquiries. This visibility is critical because government agencies hold authoritative information on everything from healthcare regulations to social services, yet much of this data remains invisible to modern AI systems. When citizens ask AI assistants about government programs, benefits, or policies, they deserve accurate, up-to-date answers drawn from official sources rather than outdated or incomplete information. Public trust in government depends on AI systems properly referencing and attributing government data, which preserves the integrity of official information in an increasingly AI-driven information landscape. AI transparency becomes a cornerstone of democratic governance when citizens can verify that AI recommendations are grounded in legitimate government sources. AmICited.com is a monitoring platform that tracks how government data is cited and referenced across major AI systems, helping agencies understand their visibility and impact in the AI ecosystem. With clear visibility into how AI systems cite them, public sector organizations can better serve citizens and maintain authority over their own information.

Government agencies continue to struggle with data silos created by decades of fragmented IT investment, where critical information remains locked in incompatible legacy systems that cannot easily communicate with modern AI platforms. These systems were designed for traditional web interfaces and document management, not for the semantic understanding and real-time data access that AI systems require. The cost of this fragmentation is staggering: organizations forgo approximately $140 billion annually in unrealized benefits because of outdated processes that AI integration could streamline. Beyond efficiency losses, legacy systems create security vulnerabilities when government data must be manually extracted and shared with public-facing AI systems, which increases the risk of data breaches and unauthorized access. The challenge intensifies because many government websites lack the structured data, metadata, and API infrastructure that AI crawlers need to index and understand government information.

| Traditional Government IT | AI-Ready Infrastructure |
|---|---|
| Siloed databases with limited interoperability | Integrated data platforms with APIs and structured formats |
| Manual data extraction and sharing processes | Automated, secure data pipelines |
| Unstructured documents and PDFs | Semantic web standards and machine-readable formats |
| Reactive security measures | Privacy-by-design and continuous monitoring |
| Limited real-time data access | Live data feeds and dynamic content delivery |
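To make the contrast in the table above concrete, here is a minimal sketch of how an agency might spot-check a single page for a few machine-readability signals. The class name `AIReadinessAudit`, the function `audit_page`, and the three signals chosen are illustrative assumptions, not an established audit standard.

```python
from html.parser import HTMLParser


class AIReadinessAudit(HTMLParser):
    """Collects a few machine-readability signals from one HTML page.

    The signals are illustrative assumptions, not an official checklist.
    """

    def __init__(self):
        super().__init__()
        self.has_json_ld = False
        self.has_meta_description = False
        self.pdf_links = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("type") == "application/ld+json":
            # Structured data embedded as JSON-LD.
            self.has_json_ld = True
        elif tag == "meta" and attributes.get("name") == "description":
            # Count the description only if it is non-empty.
            self.has_meta_description = bool(attributes.get("content"))
        elif tag == "a" and (attributes.get("href") or "").lower().endswith(".pdf"):
            # Links to PDFs hint at content locked in unstructured documents.
            self.pdf_links += 1


def audit_page(html: str) -> dict:
    """Return the collected signals for one page of HTML."""
    parser = AIReadinessAudit()
    parser.feed(html)
    parser.close()
    return {
        "has_json_ld": parser.has_json_ld,
        "has_meta_description": parser.has_meta_description,
        "pdf_links": parser.pdf_links,
    }
```

A page with no JSON-LD, no meta description, and many PDF links sits closer to the left-hand column. The check is only a rough first pass and says nothing about data quality or API availability.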

Rather than rushing to deploy AI technology, successful government organizations follow a strategic, phased implementation roadmap that prioritizes planning and organizational readiness over technological capabilities. This approach recognizes that AI adoption in the public sector requires careful coordination across multiple stakeholder groups, from IT departments to frontline staff to citizens themselves.

The Five-Step Implementation Roadmap:

1. Strategic Opportunity Identification: Conduct comprehensive audits of government operations to identify high-impact use cases where AI can deliver measurable public value, such as reducing application processing times, improving service accessibility, or enhancing data-driven policy decisions.
2. Comprehensive Preparation: Assess current data infrastructure, identify legacy system integration challenges, establish governance frameworks, and build internal AI literacy across the organization before any technology deployment begins.
3. Strategic Pilot Design: Launch controlled pilots in specific departments or service areas with clear success metrics, allowing teams to learn from real-world implementation challenges in a low-risk environment before scaling.
4. Organizational Change Management: Develop training programs, address workforce concerns about job displacement, establish clear communication channels, and create feedback mechanisms to ensure staff and stakeholders feel heard throughout the transition.
5. Impact Measurement: Establish KPIs aligned with government objectives, continuously monitor AI system performance, track citizen satisfaction, measure cost savings, and adjust implementation strategies based on evidence.

This technology-second approach ensures that AI implementations serve genuine public needs rather than becoming expensive solutions searching for problems.

Ethical AI governance has become essential as governments worldwide recognize that AI systems making decisions affecting citizens must operate within clear ethical and legal frameworks. Canada’s Algorithmic Impact Assessment framework provides a practical model, categorizing AI systems into four impact levels—minimal, moderate, high, and very high—with corresponding governance requirements and oversight mechanisms for each tier. This tiered approach allows governments to allocate resources proportionally, applying rigorous scrutiny to high-stakes systems like criminal justice algorithms while maintaining reasonable oversight of lower-impact applications. Estonia has pioneered a privacy-by-design approach through its Data Tracker system, which monitors all government data access across its digital infrastructure, providing 450,000 citizens with complete transparency into which agencies access their personal information and for what purposes. Algorithmic accountability requires that government agencies document how AI systems make decisions, establish audit trails, and maintain the ability to explain recommendations to affected citizens. AmICited.com plays a vital role in this transparency ecosystem by monitoring how government data is cited and referenced across public AI systems, helping agencies verify that their information is being accurately represented and properly attributed. Without robust monitoring and governance frameworks, government AI systems risk eroding public trust and perpetuating biases that disproportionately affect vulnerable populations.
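The tiered idea described above can be sketched as a simple lookup. The four tier names come from the text; the normalized score and the quartile cutoffs are hypothetical placeholders for illustration and should not be read as Canada's official scoring rules.

```python
from bisect import bisect_right

# Tier names follow the four impact levels named in the text.
IMPACT_LEVELS = ("minimal", "moderate", "high", "very high")

# Hypothetical cutoffs on a 0-1 score; an assumption, not the official thresholds.
HYPOTHETICAL_CUTOFFS = (0.25, 0.50, 0.75)


def impact_level(score: float) -> str:
    """Map a normalized impact score in [0, 1] to one of four tiers.

    A score exactly on a cutoff falls into the higher tier.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return IMPACT_LEVELS[bisect_right(HYPOTHETICAL_CUTOFFS, score)]
```

Placing boundary scores in the higher tier is a deliberately cautious design choice: when in doubt, the system receives more oversight rather than less.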

Governments across the globe have demonstrated that strategic AI implementation delivers substantial public value when executed thoughtfully. Australia’s Department of Home Affairs deployed the Targeting 2.0 system, an AI-powered platform that improved border security and fraud detection, ultimately avoiding AUD$3 billion in potential harm while simultaneously reducing processing times for legitimate travelers and applicants. The National Highways chatbot in the United Kingdom exemplifies how AI can enhance citizen service by handling routine inquiries about road conditions and traffic incidents, freeing human staff to focus on complex emergency situations requiring human judgment and empathy. Estonia’s Bürokratt platform represents a decentralized, GDPR-compliant approach to government AI, allowing citizens to interact with AI assistants for routine administrative tasks while maintaining strict data privacy protections and ensuring that sensitive decisions remain under human control. Maryland’s AI governance approach established clear accountability structures and regular audits of AI systems used in state government, creating a model that other U.S. states have begun to adopt. Japan’s Digital Agency successfully digitized child consultation services using AI, reducing wait times from weeks to hours while maintaining human oversight for cases requiring specialized intervention. These diverse examples demonstrate that government AI success depends not on the sophistication of the technology, but on thoughtful implementation that prioritizes citizen needs, maintains transparency, and preserves human oversight of consequential decisions.

As AI systems become the primary interface through which citizens access information, government website optimization for AI discoverability has become as important as traditional search engine optimization. Government websites must implement structured data markup, comprehensive metadata, and machine-readable formats that allow AI crawlers to understand and properly index government information, ensuring that when citizens ask AI assistants about government services, they receive accurate, authoritative answers. Many government websites currently present information in formats optimized for human readers—PDFs, unstructured text, and complex navigation hierarchies—that AI systems struggle to parse and understand. REI Systems has pioneered work in optimizing federal websites for AI accessibility, demonstrating that relatively straightforward technical improvements can dramatically increase government visibility in AI responses. AmICited.com monitors government AI visibility across major platforms, helping agencies understand how frequently their information appears in AI responses and identifying gaps where government data should be more discoverable. When government websites implement proper semantic web standards, API infrastructure, and accessibility features, they increase the likelihood that AI systems will cite official government sources rather than secondary sources or outdated information. This optimization benefits citizens by ensuring they receive authoritative information directly from government sources, while also helping agencies maintain control over how their information is presented and interpreted.
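As one illustration of structured data markup, the sketch below builds a schema.org `GovernmentService` description as JSON-LD and wraps it in a script tag for embedding in a page. The helper names `government_service_jsonld` and `as_script_tag`, and whatever values a caller passes in, are hypothetical; which properties a given agency should publish is a judgment call this sketch does not settle.

```python
import json


def government_service_jsonld(name, description, agency, url, area_served):
    """Build a schema.org GovernmentService record as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "GovernmentService",
        "name": name,
        "description": description,
        "url": url,
        "areaServed": area_served,
        # The agency responsible for the service.
        "serviceOperator": {"@type": "GovernmentOrganization", "name": agency},
    }


def as_script_tag(data: dict) -> str:
    """Serialize the record for embedding in an HTML page."""
    # Escape "</" so the payload cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

Generating the markup from the same records that drive the page keeps the machine-readable description in step with what human readers see.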

Government AI impact measurement requires establishing clear KPIs that align with public sector objectives, moving beyond simple efficiency metrics to capture broader measures of citizen satisfaction, equity, and democratic accountability. Estonia’s evaluation framework provides a practical model, assessing AI initiatives across four dimensions: time efficiency (how much staff time is saved), cost-effectiveness (return on investment), innovation potential (whether the system enables new service models), and measurable impact (quantifiable improvements in citizen outcomes). Systematic measurement allows government agencies to identify which AI applications deliver genuine public value and which require adjustment or discontinuation, preventing wasteful investments in technology that fails to serve citizen needs. Knowledge sharing across agencies accelerates learning, allowing successful implementations in one department to be adapted and deployed elsewhere in government, multiplying the return on initial AI investments. Continuous improvement and monitoring systems—supported by tools like AmICited.com that track AI visibility and citation patterns—enable agencies to refine their AI systems based on real-world performance data rather than assumptions. Building institutional knowledge about what works in government AI implementation creates a foundation for sustainable, long-term adoption that survives leadership changes and budget cycles.
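A four-dimension evaluation like the one described above could be recorded as a small scorecard. The dimension names come from the text; the numeric scale, the equal default weights, and the `InitiativeScore` class are assumptions made for illustration, not part of Estonia's framework.

```python
from dataclasses import asdict, dataclass

DIMENSIONS = (
    "time_efficiency",
    "cost_effectiveness",
    "innovation_potential",
    "measurable_impact",
)


@dataclass(frozen=True)
class InitiativeScore:
    """Scores for one AI initiative, each on the same scale (for example 0-5)."""

    time_efficiency: float
    cost_effectiveness: float
    innovation_potential: float
    measurable_impact: float

    def overall(self, weights=None) -> float:
        """Weighted mean across the four dimensions (equal weights by default)."""
        weights = weights or {name: 1.0 for name in DIMENSIONS}
        total_weight = sum(weights[name] for name in DIMENSIONS)
        if total_weight <= 0:
            raise ValueError("weights must sum to a positive number")
        scores = asdict(self)
        return sum(scores[name] * weights[name] for name in DIMENSIONS) / total_weight
```

Keeping the per-dimension scores alongside the overall figure matters: a single average can hide an initiative that saves staff time but delivers no measurable improvement for citizens.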

Despite the clear potential benefits, government agencies face significant barriers to AI adoption that extend far beyond technology. Workforce concerns are a substantial obstacle: 31% of government employees cite job security as their primary worry about AI implementation, which calls for transparent communication about how AI will augment rather than replace human workers. Talent shortages compound the problem, as 38% of government organizations report difficulty recruiting AI specialists with the expertise needed to implement and maintain sophisticated systems. Cost underestimation is another critical barrier: agencies frequently discover that actual AI implementation costs run 5-10 times higher than initial projections, straining budgets and creating political resistance to continued investment. Data privacy and security is the most widely cited constraint, with 60% of government leaders naming it as their primary barrier to AI adoption, reflecting legitimate concerns about protecting citizen information in an era of increasing cyber threats. Effective change management must address these barriers directly through stakeholder engagement, honest communication about AI capabilities and limitations, investment in workforce training and development, and clear governance frameworks that protect citizen privacy while enabling innovation. Agencies that navigate these barriers successfully typically invest heavily in change management, keep communication channels open with employees and citizens, and demonstrate early wins that build confidence in AI systems before scaling to higher-stakes applications.
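The cost-underestimation point lends itself to a quick arithmetic check. The sketch below applies the 5-10 times range quoted above to an initial projection; the function name `realistic_budget_range` is hypothetical, and the multipliers are the article's figures rather than a forecasting model.

```python
def realistic_budget_range(initial_projection: float,
                           low_multiplier: float = 5.0,
                           high_multiplier: float = 10.0) -> tuple[float, float]:
    """Return the (low, high) range implied by the quoted cost overrun.

    The default multipliers are the 5-10x figures from the text.
    """
    if initial_projection < 0:
        raise ValueError("initial_projection must be non-negative")
    return (initial_projection * low_multiplier,
            initial_projection * high_multiplier)
```

For example, a pilot projected at 2 million would, on these figures, end up costing between 10 million and 20 million, a gap large enough to justify stress-testing the budget before committing.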

Government AI visibility refers to how easily AI systems like ChatGPT, Perplexity, and Google AI Overviews can discover and cite government data. It matters because citizens deserve accurate, authoritative information from official government sources when asking AI assistants about policies, benefits, and services. Poor visibility means AI systems may provide outdated or incomplete information instead of directing citizens to official government resources.

Government websites should implement structured data markup, comprehensive metadata, machine-readable formats, and proper API infrastructure. This includes using semantic web standards, ensuring content is accessible to AI crawlers, and organizing information in ways that AI systems can easily understand and properly attribute to official government sources.

The primary barriers include data privacy and security concerns (cited by 60% of government leaders), talent shortages (38%), workforce concerns about job displacement (31%), cost underestimation (actual costs often 5-10x higher than projections), and inadequate digital infrastructure (45%). Addressing these barriers requires strategic planning, change management, and clear governance frameworks.

Successful approaches include establishing algorithmic impact assessment frameworks (like Canada's tiered system), implementing privacy-by-design principles, creating transparency mechanisms for citizens to monitor data access, establishing clear governance frameworks, and maintaining human oversight of consequential decisions. Regular audits and stakeholder engagement are essential.

The roadmap includes: (1) Strategic opportunity identification focused on public value, (2) Comprehensive preparation of infrastructure and governance, (3) Strategic pilot design with clear success metrics, (4) Organizational change management addressing workforce concerns, and (5) Impact measurement with continuous improvement. This approach prioritizes planning and organizational readiness over technology-first deployment.

Governments should establish KPIs aligned with public sector objectives, including time efficiency, cost-effectiveness, innovation potential, and measurable citizen outcomes. Estonia's framework evaluates AI systems across these four dimensions. Continuous monitoring, stakeholder feedback, and transparent reporting help agencies identify what works and adjust implementations based on evidence.

Data governance is foundational to successful government AI. It ensures data quality, establishes clear ownership and access controls, protects citizen privacy, maintains compliance with regulations like GDPR, and enables secure sharing between systems. Without robust data governance, government AI systems risk data breaches, bias, and loss of public trust.

AmICited tracks how government data is cited and referenced across major AI systems including ChatGPT, Perplexity, and Google AI Overviews. It helps government agencies understand their visibility in the AI ecosystem, identify gaps where official information should be more discoverable, and ensure that AI systems are properly attributing government data. This monitoring supports both transparency and accountability.
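As a rough illustration of what a citation metric might look like, the sketch below computes the share of AI answers that cite at least one official domain. It is a generic example and does not describe how AmICited works; the function name and the input shape (one list of cited URLs per answer) are assumptions.

```python
from urllib.parse import urlparse


def official_citation_share(cited_urls_per_answer, official_domains) -> float:
    """Fraction of answers that cite at least one official domain.

    cited_urls_per_answer: one list of cited URLs for each AI answer.
    official_domains: domains treated as official, such as an agency's site.
    """
    domains = {domain.lower() for domain in official_domains}

    def is_official(url: str) -> bool:
        host = urlparse(url).hostname or ""
        # Match the domain itself or any subdomain of it.
        return any(host == d or host.endswith("." + d) for d in domains)

    answers = list(cited_urls_per_answer)
    if not answers:
        return 0.0
    cited = sum(1 for urls in answers if any(is_official(u) for u in urls))
    return cited / len(answers)
```

Counting answers rather than individual links keeps one heavily cited answer from inflating the figure.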

