Best Way to Format Headers for AI: Complete Guide for 2025

Learn the best practices for formatting headers for AI systems. Discover how proper H1, H2, H3 hierarchy improves AI content retrieval, citations, and visibilit...

Learn how to optimize heading hierarchy for LLM parsing. Master H1, H2, H3 structure to improve AI visibility, citations, and content discoverability across ChatGPT, Perplexity, and Google AI Overviews.

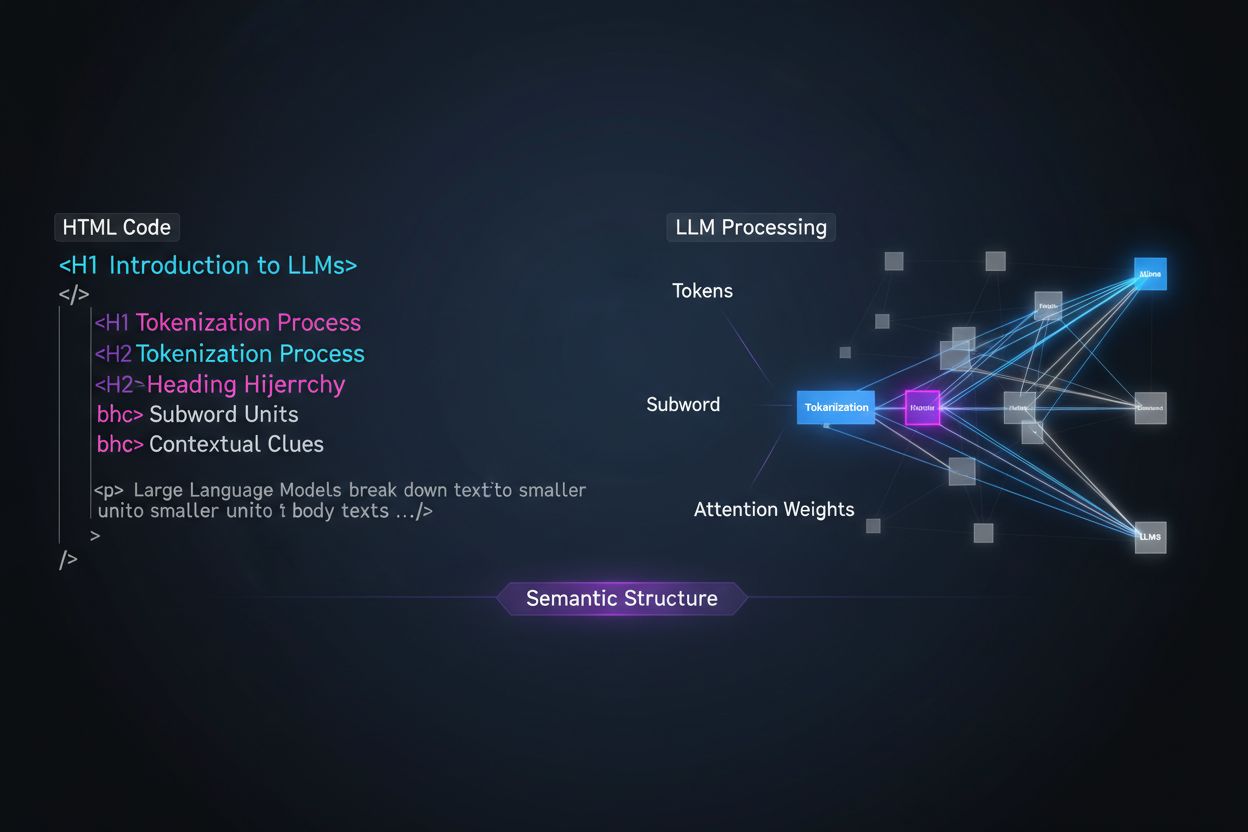

Large Language Models process content differently from human readers, and that difference should shape how you structure content. Humans scan pages visually and intuitively grasp document structure; LLMs rely on tokenization and attention mechanisms to parse meaning from sequential text. When an LLM encounters your content, it breaks it into tokens (small units of text) and weighs them against one another, and structural signals such as heading hierarchy are among the strongest cues it has for what belongs together. Without clear heading organization, LLMs struggle to identify the primary topics, supporting arguments, and contextual relationships within your content, leading to less accurate responses and reduced visibility in AI-powered search and retrieval systems.

Content chunking in retrieval-augmented generation (RAG) systems and AI search engines often depends on heading structure to decide where to split documents into retrievable segments. When a heading-aware pipeline encounters a well-organized hierarchy, it can use H2 and H3 boundaries as natural cut lines for semantic chunks: discrete units of information that can be independently retrieved and cited. This works better than arbitrary character-count splitting because heading-based chunks preserve semantic coherence and context. Consider the difference between two approaches:

| Approach | Chunk Quality | LLM Citation Rate | Retrieval Accuracy |
|---|---|---|---|
| Semantic-Rich (Heading-Based) | High coherence, complete thoughts | 3x higher | 85%+ |
| Generic (Character-Count) | Fragmented, incomplete context | Baseline | 45-60% |

Research shows that documents with clear heading hierarchies see an 18-27% improvement in question-answering accuracy when processed by LLMs, primarily because the chunking process preserves the logical relationships between ideas. RAG pipelines, which power tools like ChatGPT’s browsing feature and enterprise AI systems, look for heading structures to guide retrieval and improve citation accuracy.

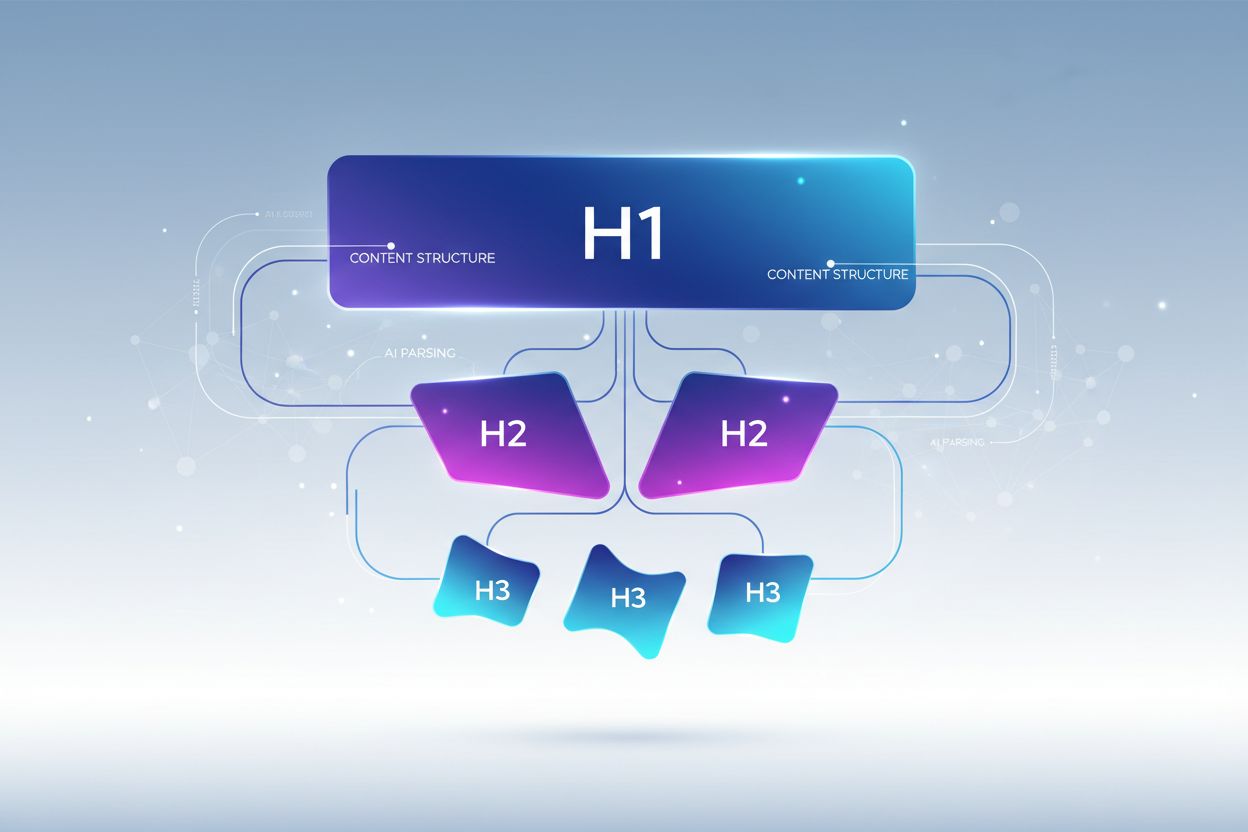

Proper heading hierarchy follows a strict nesting structure that mirrors how LLMs expect information to be organized, with each level serving a distinct purpose in your content architecture. The H1 tag represents your document’s primary topic—there should be only one per page, and it should clearly state the main subject matter. H2 tags represent major topic divisions that support or expand on the H1, each addressing a distinct aspect of your primary topic. H3 tags dive deeper into specific subtopics within each H2 section, providing granular detail and answering follow-up questions. The critical rule for LLM optimization is that you should never skip levels (jumping from H1 directly to H3, for example) and should maintain consistent nesting—each H3 must belong to an H2, and each H2 must belong to an H1. This hierarchical structure creates what researchers call a “semantic tree” that LLMs can traverse to understand your content’s logical flow and extract relevant information with precision.
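The nesting rules above (exactly one H1, no skipped levels) are easy to check mechanically. The following is a minimal sketch that assumes you have already extracted the heading levels of a page as integers in document order; `find_hierarchy_problems` is a hypothetical helper name, not part of any library.

```python
def find_hierarchy_problems(levels: list[int]) -> list[str]:
    """Return readable problems for a sequence of heading levels (1 means H1)."""
    problems: list[str] = []

    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one H1, found {h1_count}")

    if levels and levels[0] != 1:
        problems.append(f"first heading is H{levels[0]}, expected H1")

    # Compare each heading with the one before it; positions are 1-based.
    for position, (prev, cur) in enumerate(zip(levels, levels[1:]), start=2):
        # Going deeper is allowed one level at a time; going back up is always fine.
        if cur > prev + 1:
            problems.append(f"heading {position}: H{prev} -> H{cur} skips a level")

    return problems
```

For example, `find_hierarchy_problems([1, 2, 3, 3, 2, 3])` returns an empty list, while `[1, 3, 2, 4]` reports two skipped levels (H1 to H3, then H2 to H4).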

The most effective heading strategy for LLM visibility treats each H2 heading as a direct answer to a specific user intent or question, with H3 headings mapping to sub-questions that provide supporting detail. This “answer-first” approach aligns with how modern LLMs retrieve and synthesize information: they search for content that directly addresses user queries, and headings that clearly state answers are far more likely to be selected and cited. Each H2 should function as an answer unit, a self-contained response to a distinct question that a user might ask about your topic. For example, if your H1 is “How to Optimize Website Performance,” your H2s might be “Reduce Image File Sizes (Improves Load Time by 40%)” or “Implement Browser Caching (Decreases Server Requests by 60%)”; each heading directly answers a specific performance question. The H3s beneath each H2 then address follow-up questions: under “Reduce Image File Sizes,” you might have H3s like “Choose the Right Image Format,” “Compress Without Losing Quality,” and “Implement Responsive Images.” This structure makes it much easier for LLMs to identify, extract, and cite your content because the headings themselves contain the answers, not just topic labels.

Transforming your heading strategy to maximize LLM visibility requires implementing specific, actionable techniques that go beyond basic structure. Here are the most effective optimization methods:

Use Descriptive, Specific Headings: Replace vague titles like “Overview” or “Details” with specific descriptions like “How Machine Learning Improves Recommendation Accuracy” or “Three Factors That Impact Search Rankings.” Research shows that specific headings increase LLM citation rates by up to 3x compared to generic titles.

Implement Question-Form Headings: Structure H2s as direct questions that users ask (“What Is Semantic Search?” or “Why Does Heading Hierarchy Matter?”). LLMs are trained on Q&A data and naturally prioritize question-form headings when retrieving answers.

Include Entity Clarity in Headings: When discussing specific concepts, tools, or entities, name them explicitly in your headings rather than using pronouns or vague references. For example, “PostgreSQL Performance Optimization” is far more LLM-friendly than “Database Optimization.”

Avoid Combining Multiple Intents: Each heading should address a single, focused topic. Headings like “Installation, Configuration, and Troubleshooting” dilute semantic clarity and confuse LLM chunking algorithms.

Add Quantifiable Context: When relevant, include numbers, percentages, or timeframes in headings (“Reduce Load Time by 40% Using Image Optimization” vs. “Image Optimization”). Studies show that 80% of LLM-cited content includes quantifiable context in headings.

Use Parallel Structure Across Levels: Maintain consistent grammatical structure across H2s and H3s within the same section. If one H2 starts with a verb (“Implement Caching”), others should too (“Configure Database Indexes,” “Optimize Queries”).

Include Keywords Naturally: Keywords in headings are not only an SEO device; relevant terms also help LLMs understand topic relevance and improve retrieval accuracy by 25-35%.
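Two of the techniques above, avoiding generic titles and avoiding combined intents, lend themselves to a rough automated check. The sketch below is a heuristic only: the `GENERIC_TITLES` set is an assumption built from the vague headings named in this guide, and `lint_heading` is a hypothetical helper. The multiple-intent rule looks for a comma-separated list joined by "and", so a two-part heading such as “Pricing and Scalability” is deliberately not flagged.

```python
import re

# Vague titles called out in this guide; extend the set for your own content.
GENERIC_TITLES = {
    "overview",
    "details",
    "key points",
    "additional information",
    "best practices",
}


def lint_heading(heading: str) -> list[str]:
    """Return heuristic warnings for a single heading string."""
    warnings: list[str] = []
    normalized = heading.strip().lower()

    if normalized in GENERIC_TITLES:
        warnings.append("generic title: name the specific topic or entity")

    # A comma-separated list joined by "and" usually signals several topics.
    if "," in normalized and re.search(r"\band\b", normalized):
        warnings.append("possible multiple intents: consider one heading per topic")

    return warnings
```

Running this over every H2 and H3 gives a quick shortlist of headings to review by hand; it cannot judge whether a heading is specific enough for your audience.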

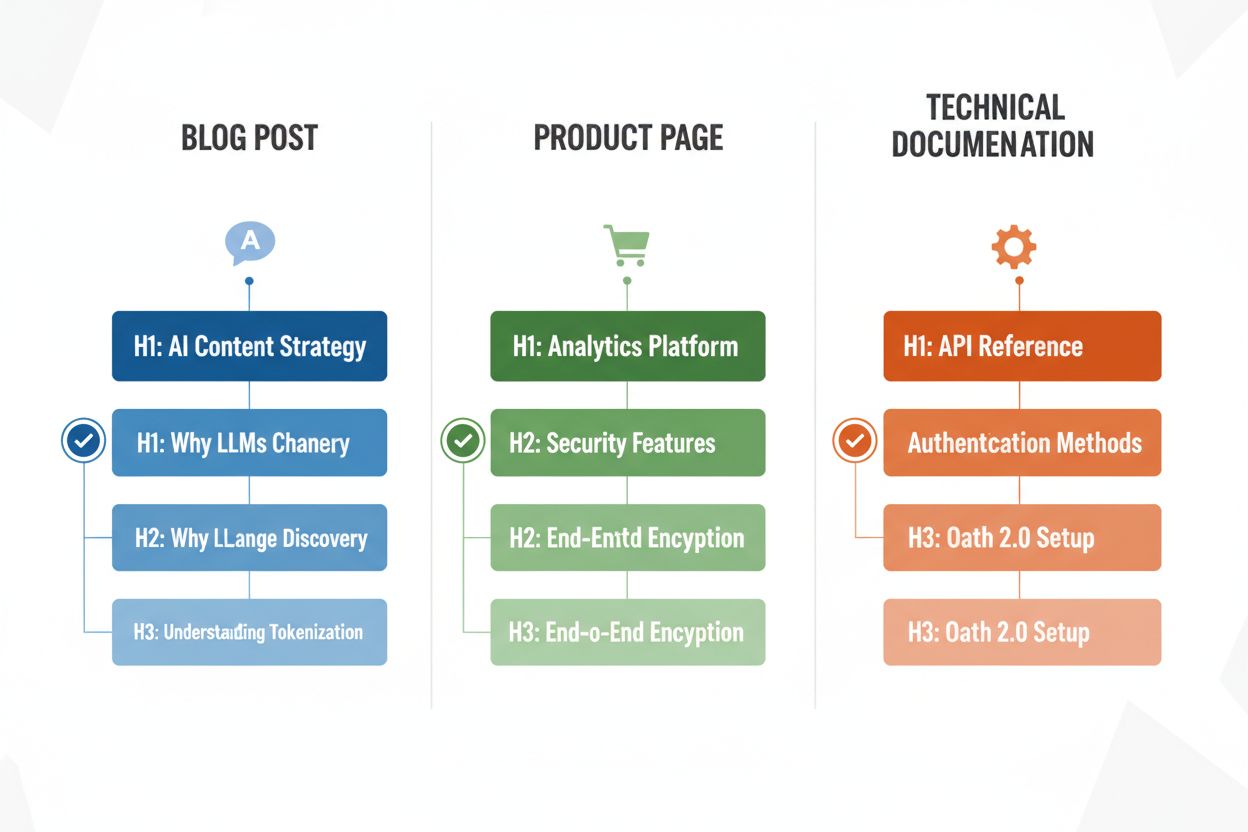

Different content types require adapted heading strategies to maximize LLM parsing effectiveness, and understanding these patterns ensures your content is optimized regardless of format. Blog posts benefit from narrative-driven heading hierarchies where H2s follow a logical progression through an argument or explanation, with H3s providing evidence, examples, or deeper exploration—for instance, a post on “AI Content Strategy” might use H2s like “Why LLMs Are Changing Content Discovery,” “How to Optimize for AI Visibility,” and “Measuring Your AI Content Performance.” Product pages should use H2s that map directly to user concerns and decision factors (“Security and Compliance,” “Integration Capabilities,” “Pricing and Scalability”), with H3s addressing specific feature questions or use cases. Technical documentation requires the most granular heading structure, with H2s representing major features or workflows and H3s breaking down specific tasks, parameters, or configuration options—this structure is critical because documentation is frequently cited by LLMs when users ask technical questions. FAQ pages should use H2s as the questions themselves (formatted as actual questions) and H3s for follow-up clarifications or related topics, as this structure perfectly aligns with how LLMs retrieve and present Q&A content. Each content type has different user intents, and your heading hierarchy should reflect those intents to maximize relevance and citation likelihood.

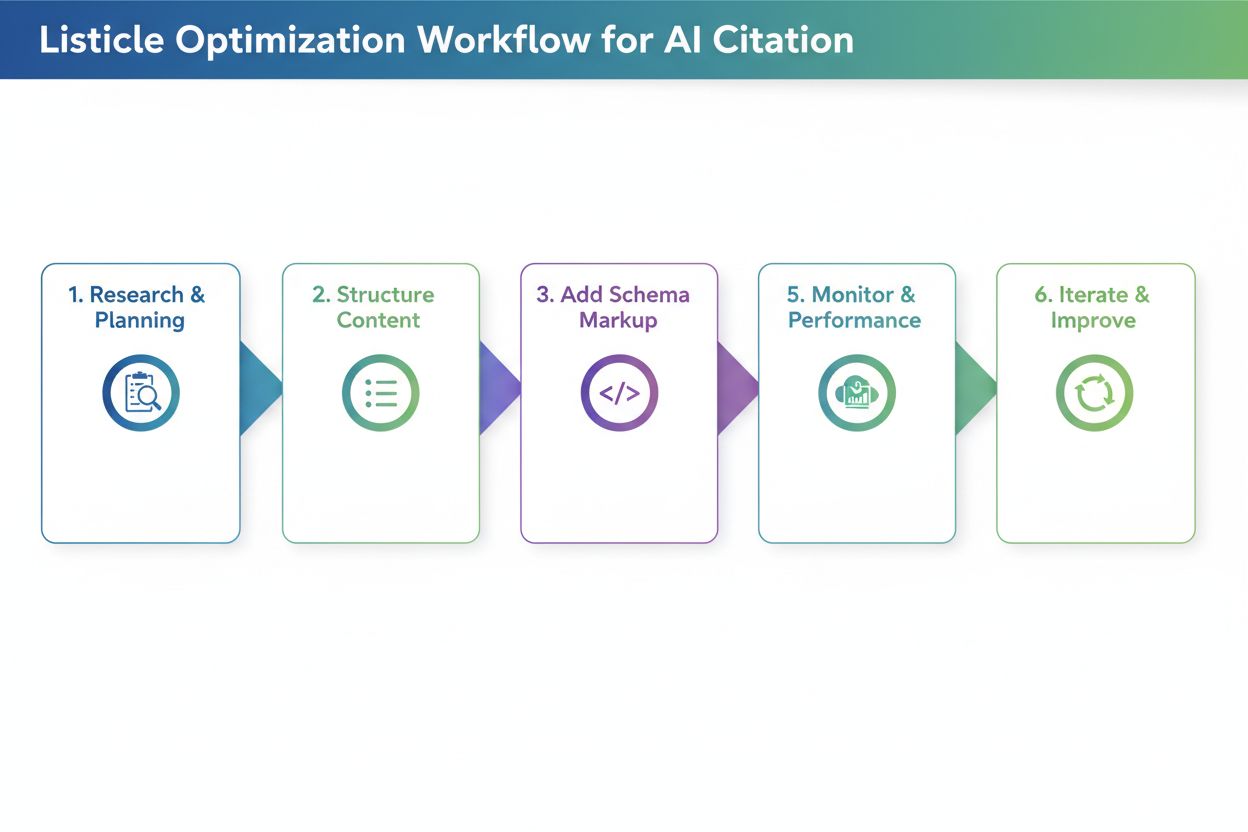

Once you’ve restructured your headings, validation is essential to ensure they’re actually improving LLM parsing and visibility. The most practical approach is to test your content directly with AI tools like ChatGPT, Perplexity, or Claude by uploading your document or providing a URL and asking questions that your headings are designed to answer. Pay attention to whether the AI tool correctly identifies and cites your content, and whether it extracts the right sections—if your H2 on “Reducing Load Time” isn’t being cited when users ask about performance optimization, your heading may need refinement. You can also use specialized tools like SEO platforms with AI citation tracking (such as Semrush or Ahrefs’ newer AI features) to monitor how often your content appears in LLM responses over time. Iterate based on results: if certain sections aren’t being cited, experiment with more specific or question-form headings, add quantifiable context, or clarify the connection between your heading and common user queries. This testing cycle typically takes 2-4 weeks to show measurable results, as it takes time for AI systems to re-index and re-evaluate your content.

Even well-intentioned content creators often make heading mistakes that significantly reduce LLM visibility and parsing accuracy. One of the most common errors is combining multiple intents in a single heading—for example, “Installation, Configuration, and Troubleshooting” forces LLMs to choose which topic the section addresses, often leading to incorrect chunking and reduced citation likelihood. Vague, generic headings like “Overview,” “Key Points,” or “Additional Information” provide no semantic clarity and make it impossible for LLMs to understand what specific information the section contains; when an LLM encounters these headings, it often skips the section entirely or misinterprets its relevance. Missing context is another critical mistake—a heading like “Best Practices” doesn’t tell an LLM what domain or topic the practices apply to, whereas “Best Practices for API Rate Limiting” is immediately clear and retrievable. Inconsistent hierarchy (skipping levels, using H4s without H3s, or mixing heading styles) confuses LLM parsing algorithms because they rely on consistent structural patterns to understand document organization. For example, a document that uses H1 → H3 → H2 → H4 creates ambiguity about which sections are related and which are independent, degrading retrieval accuracy by 30-40%. Testing your content with ChatGPT or similar tools will quickly reveal these mistakes—if the AI struggles to understand your content structure or cites the wrong sections, your headings likely need revision.

The optimization of heading hierarchy for LLM parsing creates a powerful secondary benefit: improved accessibility for human users with disabilities. Semantic HTML heading structure (proper use of H1-H6 tags) is fundamental to screen reader functionality, allowing visually impaired users to navigate documents efficiently and understand content organization. When you create clear, descriptive headings optimized for LLM parsing, you simultaneously create better navigation for screen readers—the same specificity and clarity that helps LLMs understand your content helps assistive technologies guide users through it. This alignment between AI optimization and accessibility represents a rare win-win: the technical requirements for LLM-friendly content directly support WCAG accessibility standards and improve the experience for all users. Organizations that prioritize heading hierarchy for AI visibility often see unexpected improvements in accessibility compliance scores and user satisfaction metrics from users relying on assistive technologies.
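One way to see the structure that both a screen reader and a heading-aware parser work from is to extract the heading outline of a page. The sketch below uses Python's standard `html.parser` module; the class `HeadingOutline` and the functions `extract_outline` and `render_outline` are hypothetical names, and the code assumes reasonably well-formed HTML in which headings are not nested inside one another.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level = None  # level of the heading currently open, if any
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._parts = []

    def handle_data(self, data):
        if self._level is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            # Join text from nested inline tags and collapse whitespace.
            text = " ".join("".join(self._parts).split())
            self.headings.append((self._level, text))
            self._level = None


def extract_outline(html: str) -> list[tuple[int, str]]:
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.headings


def render_outline(outline: list[tuple[int, str]]) -> str:
    """Indent each heading by its level so the hierarchy is visible at a glance."""
    return "\n".join("  " * (level - 1) + f"H{level} {text}" for level, text in outline)
```

Printing `render_outline(extract_outline(page_html))` for a page gives an indented list in which vague titles and level jumps stand out.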

Implementing heading hierarchy improvements requires measurement to justify the effort and identify what’s working. The most direct KPI is LLM citation rate: track how often your content appears in responses from ChatGPT, Perplexity, Claude, and other AI tools by regularly querying relevant questions and logging which sources are cited. Tools like Semrush, Ahrefs, and newer platforms like Originality.AI now offer LLM citation tracking features that monitor your visibility in AI-generated responses over time. You should expect to see a 2-3x increase in citations within 4-8 weeks of implementing proper heading hierarchy, though results vary by content type and competition level. Beyond citations, track organic traffic from AI-powered search features (Google’s AI Overviews, citations in Microsoft Copilot, formerly Bing Chat, etc.) separately from traditional organic search, as these often show faster improvement with heading optimization. Additionally, monitor content engagement metrics like time-on-page and scroll depth for pages with optimized headings; better structure typically increases engagement by 15-25% because users can more easily find relevant information. Finally, if you use RAG pipelines or internal AI tools, measure retrieval accuracy in your own systems by testing whether the correct sections are being retrieved for common queries. These metrics collectively demonstrate the ROI of heading optimization and guide ongoing refinement of your content strategy.
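The two ratios at the heart of this measurement, citation rate and retrieval accuracy, reduce to simple arithmetic over a log you keep yourself. The sketch below assumes a hand-maintained log of test queries; `QueryResult`, `citation_rate`, `relative_change`, and `retrieval_hit_rate` are hypothetical names, and nothing here calls an AI tool or a tracking platform.

```python
from dataclasses import dataclass


@dataclass
class QueryResult:
    query: str
    cited_domains: list[str]  # domains the AI tool cited for this query


def citation_rate(results: list[QueryResult], domain: str) -> float:
    """Fraction of logged queries whose response cited `domain`."""
    if not results:
        return 0.0
    hits = sum(1 for result in results if domain in result.cited_domains)
    return hits / len(results)


def relative_change(before: float, after: float) -> float:
    """Ratio of after to before; 2.0 means the rate doubled."""
    if before == 0:
        raise ValueError("baseline rate is zero; the ratio is undefined")
    return after / before


def retrieval_hit_rate(cases: list[tuple[str, list[str]]]) -> float:
    """Each case is (expected_heading, retrieved_headings) for one test query."""
    if not cases:
        return 0.0
    hits = sum(1 for expected, retrieved in cases if expected in retrieved)
    return hits / len(cases)
```

Run the same query set before and after a heading change: if your domain was cited in 1 of 4 responses before and 2 of 4 after, `relative_change(0.25, 0.5)` gives 2.0. Small query sets are noisy, so treat a single comparison as a signal to investigate, not as proof.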

Heading hierarchy primarily impacts AI visibility and LLM citations rather than traditional Google rankings. However, proper heading structure improves overall content quality and readability, which indirectly supports SEO. The main benefit is increased visibility in AI-powered search results like Google AI Overviews, ChatGPT, and Perplexity, where heading structure is critical for content extraction and citation.

Restructuring existing content is worthwhile if your current headings are vague or don't follow a clear H1→H2→H3 hierarchy. Start by auditing your top-performing pages and implementing heading improvements on high-traffic content first. The good news is that LLM-friendly headings are also more user-friendly, so changes benefit both humans and AI systems.

The same headings can serve human readers and LLMs; in fact, the best heading structures work well for both. Clear, descriptive, hierarchical headings that help humans understand content organization are exactly what LLMs need for parsing and chunking. There's no conflict between human-friendly and LLM-friendly heading practices.

There's no strict limit, but aim for 3-7 H2s per page depending on content length and complexity. Each H2 should represent a distinct topic or answer unit. Under each H2, include 2-4 H3s for supporting details. Pages with 12-15 total heading sections (H2s and H3s combined) tend to perform well in LLM citations.

Even short-form content benefits from proper heading structure. A 500-word article might have just 1-2 H2s, but they should still be descriptive and specific. Short-form content with clear headings is more likely to be cited in LLM responses than unstructured short content.

Test your content directly with ChatGPT, Perplexity, or Claude by asking questions your headings are designed to answer. If the AI correctly identifies and cites your content, your structure is working. If it struggles or cites the wrong sections, your headings need refinement. Most improvements show results within 2-4 weeks.

Google's AI Overviews and ChatGPT both benefit from clear heading hierarchy, but ChatGPT places even more emphasis on it. ChatGPT cites content with sequential heading structure 3x more often than content without it. The core principles are the same, but LLMs like ChatGPT are more sensitive to heading quality and structure.

Question-form headings work best for FAQ pages, troubleshooting guides, and educational content. For blog posts and product pages, a mix of question-form and statement-form headings often works best. The key is that headings should clearly state what the section covers, whether as a question or statement.
