YMYL Topics in AI Search: Complete Guide for Brand Monitoring

Learn what YMYL topics are in AI search, how they impact your brand visibility in ChatGPT, Perplexity, and Google AI Overviews, and why monitoring them matters ...

Learn how healthcare organizations can optimize medical content for LLM visibility, navigate YMYL requirements, and monitor AI citations with AmICited.com’s AI monitoring platform.

Your Money or Your Life (YMYL) content covers topics that directly affect user wellbeing, including health, finance, safety, and civic information, and healthcare sits at the apex of Google’s scrutiny hierarchy. With its March 2024 core update, Google said it expected to reduce low-quality, unoriginal content in search results by 40%, signaling a crackdown that bears directly on unreliable medical information. The challenge facing healthcare organizations has also shifted: content must now be visible not just to search engines but to Large Language Models (LLMs), which increasingly serve as the first stop for people seeking health information. With 5% of all Google searches being health-related and 58% of patients now using AI tools for health information, healthcare providers face a critical visibility gap: their content may rank well in traditional search yet remain invisible to the AI systems patients actually consult. This dual-visibility requirement is a new frontier in healthcare content strategy.

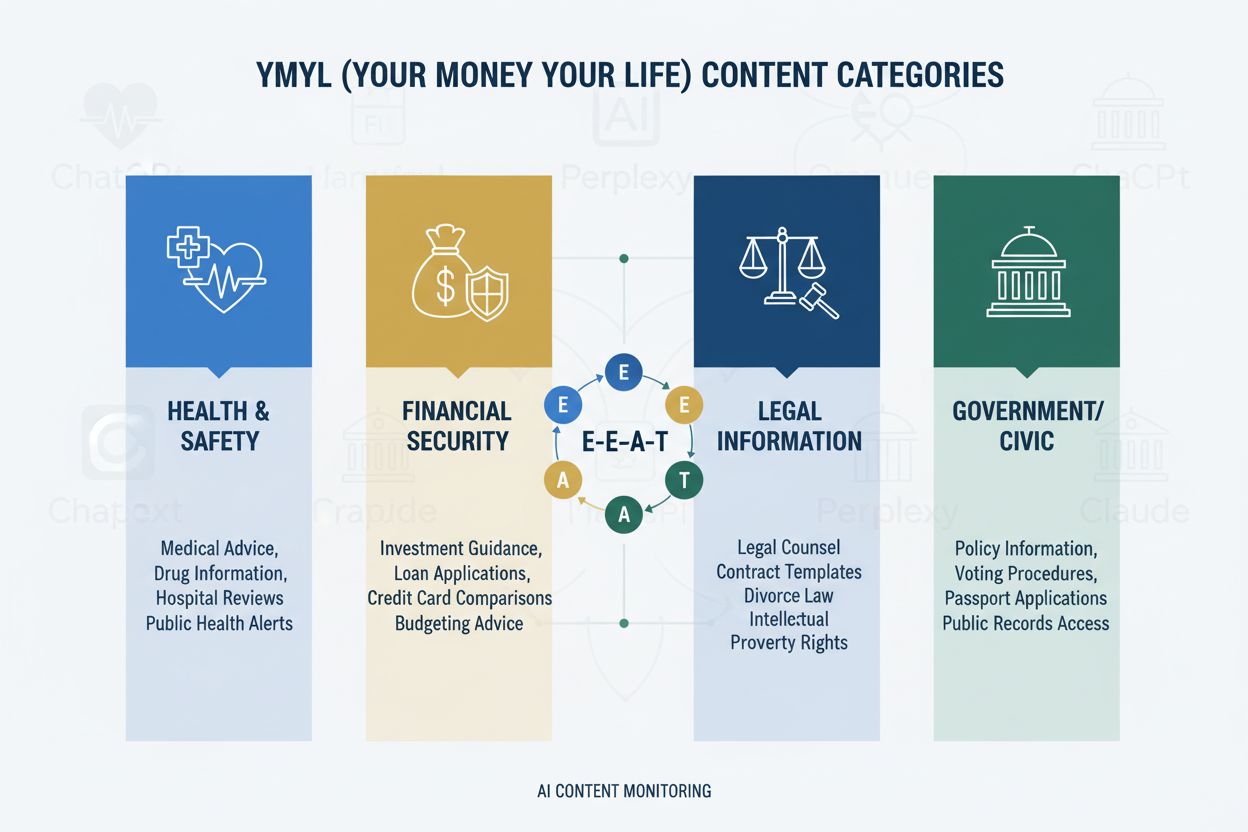

The YMYL framework categorizes content into four critical domains: Health (medical conditions, treatments, wellness), Finance (investment advice, financial planning), Safety (emergency procedures, security), and Civic (voting, legal matters)—with healthcare commanding the highest scrutiny level due to its direct impact on human wellbeing. Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness) serves as the evaluation standard, requiring healthcare content to demonstrate genuine medical knowledge, professional credentials, and verifiable accuracy. The Quality Rater Guidelines explicitly emphasize that healthcare content must be created or reviewed by qualified medical professionals, with clear author credentials and institutional backing. Healthcare organizations must understand that E-E-A-T is not optional—it’s the foundational requirement for both search visibility and LLM inclusion. Below is the impact hierarchy of YMYL categories:

| YMYL Category | Impact Level | Scrutiny Intensity | Healthcare Relevance |
|---|---|---|---|
| Health | Critical | Highest | Direct patient safety |
| Finance | High | High | Insurance, costs |
| Safety | High | High | Emergency protocols |
| Civic | Medium | Medium | Health policy |

Despite their sophistication, LLMs show alarming error rates when handling medical information, with Stanford HAI research documenting that 30-50% of statements in healthcare responses are unsupported. GPT-4 with Retrieval-Augmented Generation (RAG) still produces responses where 50% contain unsupported claims, even when it retrieves from authoritative sources. This failure mode, commonly called hallucination, can show up as recommending non-existent medical equipment, suggesting incorrect treatment protocols, or fabricating drug interactions. A notable case involved Men’s Journal publishing an AI-generated article containing 18 specific medical errors, including dangerous treatment recommendations that could harm readers. The fundamental problem is that AI lacks “Experience”: the first-hand clinical knowledge that distinguishes expert physicians from statistical pattern-matching systems. Without properly structured, authoritative content from verified medical professionals to draw on, LLMs will confidently generate plausible-sounding but potentially dangerous medical guidance.

AI Overviews and similar LLM-powered answer systems fundamentally change how patients discover health information by providing direct answers without requiring website visits, effectively eliminating the traditional click-through that has driven healthcare web traffic for decades. Content visibility now depends on citation within AI responses rather than ranking position, meaning a healthcare provider’s article might be synthesized into an AI answer without ever receiving traffic or attribution. LLMs synthesize information from multiple sources simultaneously, creating a new information architecture where individual websites compete for inclusion in AI-generated summaries rather than for top search positions. Healthcare providers must recognize that Share of Model (SOM)—the percentage of AI responses citing their content—has become the critical visibility metric, replacing traditional click-through rates. This paradigm shift requires fundamentally rethinking content strategy: instead of optimizing for clicks, healthcare organizations must optimize for citation quality, accuracy, and structural clarity that makes their content the preferred source for LLM synthesis.

LLMs process medical content most effectively when it is organized in a clear hierarchy that mirrors clinical decision-making: condition definition → symptom presentation → diagnostic criteria → treatment options → prognosis. Progressive disclosure architecture, which presents simple concepts before complex ones, helps LLMs represent each condition accurately rather than conflating related but distinct conditions. Question-first content design (answering “What is condition X?” before “How is it treated?”) aligns with how LLMs retrieve and synthesize information across multiple documents. Semantic richness and interconnected concepts, meaning explicit links between related conditions, treatments, and risk factors, help LLMs pick up relationships that might otherwise remain implicit. Implementing Schema.org medical markup (MedicalCondition, MedicalProcedure, MedicalTherapy) provides structured data that LLMs can reliably parse and cite. Natural language that mimics expert explanation, using the terminology physicians would use while remaining accessible, signals authenticity to both LLMs and human readers. Healthcare organizations should audit existing content against these structural requirements, as traditional SEO-optimized content often lacks the hierarchical clarity and semantic richness that LLMs require for accurate synthesis.
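To make the markup point concrete, here is a minimal sketch of how a condition page might emit Schema.org JSON-LD. Schema.org models treatments with the `MedicalTherapy` type and symptoms with `MedicalSymptom`. The function names, the sample condition, and the reviewer "Dr. Jane Example" are illustrative assumptions, not part of any particular CMS or of AmICited.com.

```python
import json


def medical_condition_jsonld(name, description, symptoms, treatments,
                             reviewer_name, reviewed_on):
    """Build a Schema.org MedicalWebPage document describing one condition.

    All argument names are hypothetical; reviewed_on is an ISO 8601 date string.
    """
    return {
        "@context": "https://schema.org",
        "@type": "MedicalWebPage",
        "lastReviewed": reviewed_on,
        "reviewedBy": {"@type": "Person", "name": reviewer_name},
        "about": {
            "@type": "MedicalCondition",
            "name": name,
            "description": description,
            "signOrSymptom": [
                {"@type": "MedicalSymptom", "name": s} for s in symptoms
            ],
            "possibleTreatment": [
                {"@type": "MedicalTherapy", "name": t} for t in treatments
            ],
        },
    }


def to_script_tag(doc):
    """Wrap the JSON-LD document in the script tag that goes into the page head."""
    return ('<script type="application/ld+json">\n'
            + json.dumps(doc, indent=2)
            + "\n</script>")


# Illustrative values only; a qualified clinician should supply the real content.
example = medical_condition_jsonld(
    name="Type 2 diabetes",
    description="A chronic condition that affects how the body regulates blood glucose.",
    symptoms=["Increased thirst", "Frequent urination"],
    treatments=["Dietary changes"],
    reviewer_name="Dr. Jane Example",
    reviewed_on="2024-01-15",
)
```

Calling `to_script_tag(example)` yields a block that can be placed in the page template. Keeping the reviewer and review date in the same document ties the structured data back to the human medical review the article calls for.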

Multi-platform presence amplifies authority signals in ways that single-website strategies cannot achieve, as LLMs recognize consistent expertise demonstrated across multiple authoritative channels. Medical Q&A platforms like HealthTap and Figure 1 provide direct physician-to-patient interaction that LLMs recognize as authentic expertise, with verified credentials and real-time engagement. Professional networks including Doximity and medical-focused LinkedIn communities establish peer recognition and professional standing that LLMs weight heavily in authority assessment. Medical Wikipedia contributions and similar collaborative knowledge bases signal willingness to contribute to public medical knowledge without commercial motivation, a trust signal that LLMs recognize. Comprehensive author credibility pages—featuring board certifications, publications, clinical experience, and institutional affiliations—must be present on the primary website and linked consistently across platforms. Cross-platform consistency in credentials, specialties, and clinical perspectives reinforces authenticity; contradictions between platforms trigger LLM skepticism. Healthcare organizations should develop a multi-platform authority strategy that treats each platform as a reinforcing signal rather than a separate channel, ensuring that physician expertise is visible and verifiable across the entire digital ecosystem.
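One way to catch the cross-platform contradictions described above is a simple consistency check over each physician's public profiles. The sketch below assumes the profile data has already been collected by hand into plain dictionaries. The platform keys, field names, and the `find_profile_conflicts` helper are hypothetical, and no platform API is being called.

```python
def find_profile_conflicts(profiles):
    """Return fields whose values differ across platforms.

    profiles maps a platform name to a dict of field -> value.
    Values are compared case-insensitively with whitespace collapsed, so
    "Cardiology" and "cardiology " count as the same value.
    """
    conflicts = {}
    all_fields = set().union(*(p.keys() for p in profiles.values()))
    for field in sorted(all_fields):
        # Only compare platforms that actually list this field.
        seen = {platform: p[field] for platform, p in profiles.items() if field in p}
        normalized = {" ".join(str(v).lower().split()) for v in seen.values()}
        if len(normalized) > 1:
            conflicts[field] = seen
    return conflicts


# Hypothetical profile data for a single physician.
sample_profiles = {
    "website": {"specialty": "Cardiology", "board_certification": "ABIM"},
    "doximity": {"specialty": "cardiology ", "board_certification": "ABIM"},
    "linkedin": {"specialty": "Internal Medicine"},
}
conflicts = find_profile_conflicts(sample_profiles)
```

Here `conflicts` flags only `specialty`, because the LinkedIn entry disagrees with the other two, while the matching certification is left alone. A real audit would need a person to judge whether a flagged difference is a genuine contradiction or just a different level of detail.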

Traditional healthcare analytics—tracking organic traffic, click-through rates, and search rankings—miss the AI visibility story entirely, creating a dangerous blind spot where content appears successful by legacy metrics while remaining invisible to LLMs. Share of Model (SOM) emerges as the critical new metric, measuring what percentage of AI-generated responses about a given condition cite your organization’s content. Effective monitoring requires systematic testing across multiple LLM platforms (ChatGPT, Claude, Perplexity, and emerging competitors) using consistent queries about your specialty areas, documenting citation frequency and positioning within responses. Citation quality matters as much as citation frequency—being cited as a primary source carries more weight than appearing in a list of secondary references, and LLMs recognize when content is cited for specific expertise versus generic information. Monitoring tools range from manual testing (running queries and documenting results) to automation platforms that track SOM changes over time and alert to visibility shifts. Indirect indicators including branded search volume, patient feedback mentioning AI recommendations, and referral patterns from AI platforms provide supplementary data that validates SOM trends. Healthcare organizations should establish baseline SOM measurements immediately, as the competitive landscape is shifting rapidly and early visibility advantages compound over time.
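As a rough illustration of the manual end of that range, the sketch below computes Share of Model from a log of test queries. It assumes each AI response has already been recorded with the domains it cited. The `AIResponse` record, the function names, and the sample domain `examplehealth.org` are assumptions made for this example rather than features of any monitoring product.

```python
from dataclasses import dataclass


@dataclass
class AIResponse:
    """One logged answer from an AI platform (hypothetical record format)."""
    platform: str
    query: str
    cited_domains: list[str]


def share_of_model(responses, domain):
    """Fraction of responses that cite the given domain (0.0 if no responses)."""
    if not responses:
        return 0.0
    cited = sum(1 for r in responses if domain in r.cited_domains)
    return cited / len(responses)


def som_by_platform(responses, domain):
    """Share of Model broken out per platform."""
    grouped = {}
    for r in responses:
        grouped.setdefault(r.platform, []).append(r)
    return {platform: share_of_model(rs, domain) for platform, rs in grouped.items()}


# Hypothetical test log: the same two queries run on two platforms.
log = [
    AIResponse("chatgpt", "what causes atrial fibrillation", ["examplehealth.org", "nih.gov"]),
    AIResponse("chatgpt", "atrial fibrillation treatment options", ["examplehealth.org"]),
    AIResponse("perplexity", "what causes atrial fibrillation", ["nih.gov"]),
    AIResponse("perplexity", "atrial fibrillation treatment options", ["mayoclinic.org"]),
]
overall = share_of_model(log, "examplehealth.org")
per_platform = som_by_platform(log, "examplehealth.org")
```

In this sample the domain is cited in two of four responses, so `overall` is 0.5, with every citation coming from one platform. Breaking the metric out per platform is a design choice that surfaces this kind of uneven visibility, which a single aggregate number would hide. The sketch counts any citation equally and does not capture the primary-versus-secondary citation quality discussed above.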

Healthcare organizations should begin with a single specialty area rather than attempting organization-wide transformation simultaneously, allowing teams to develop expertise and refine processes before scaling. Content audits through an LLM lens require evaluating existing articles for hierarchical clarity, semantic richness, question-first architecture, and author credibility—often revealing that well-ranking content lacks the structure LLMs require. Visibility testing across AI platforms using specialty-specific queries establishes baseline SOM and identifies which conditions and treatments are currently visible versus invisible. Implementation of question-first architecture involves restructuring existing content or creating new content that leads with patient questions (“Why do I have this symptom?”) before clinical explanations. Author credibility pages should be created for each physician contributor, featuring board certifications, specialties, publications, and clinical experience, with consistent linking from all authored content. Content clustering around conditions—creating interconnected content that covers symptoms, diagnosis, treatment options, and prognosis—helps LLMs understand comprehensive condition information rather than isolated articles. This phased approach allows healthcare organizations to measure impact, refine strategy, and build internal expertise before expanding to additional specialties.
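Parts of the content audit described above can be approximated with simple heuristics before a human review. The sketch below assumes articles are stored as Markdown and checks three things: that heading levels never skip a level, that the first section heading is phrased as a question, and that a reviewer byline is present. The `audit_article` function and its three checks are illustrative assumptions, and they say nothing about medical accuracy.

```python
import re

# Matches Markdown ATX headings such as "## What is asthma?"
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")
# Matches a byline line such as "Medically reviewed by Dr. ..." (assumed convention)
REVIEWER = re.compile(r"(?im)^(medically )?reviewed by[: ]")


def audit_article(markdown_text):
    """Run three structural heuristics over one Markdown article."""
    headings = []
    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            headings.append((len(match.group(1)), match.group(2)))

    levels = [level for level, _ in headings]
    # Hierarchy counts as clear when no heading is more than one level
    # deeper than the heading before it (e.g. "#" followed by "####" fails).
    clear_hierarchy = bool(levels) and all(
        later - earlier <= 1 for earlier, later in zip(levels, levels[1:])
    )

    # Question-first: the first section heading (level 2 or deeper) ends with "?".
    section_titles = [title for level, title in headings if level >= 2]
    question_first = bool(section_titles) and section_titles[0].endswith("?")

    return {
        "clear_hierarchy": clear_hierarchy,
        "question_first": question_first,
        "has_reviewer": REVIEWER.search(markdown_text) is not None,
    }
```

Running this across one specialty's articles gives a quick list of pages to restructure first. Lines inside fenced code blocks that start with `#` would be misread as headings, so the check is best treated as a triage aid rather than a verdict.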

HIPAA compliance remains paramount even as content becomes visible to LLMs; patient privacy protections apply regardless of whether information is accessed through search engines or AI systems, requiring careful anonymization and de-identification of any case examples. Medical disclaimers and accuracy requirements must be explicit and prominent, with clear statements that AI-synthesized information should not replace professional medical consultation, and that individual circumstances may differ from general guidance. Fact-checking and source citation become critical compliance elements, as healthcare organizations are liable for accuracy of information they publish, and LLMs will amplify errors across thousands of user interactions. Regulatory scrutiny of AI-generated medical content is intensifying, with FDA and FTC increasingly examining how AI systems present medical information; healthcare organizations must ensure that all content—whether human-written or AI-assisted—meets regulatory standards. Human medical review by qualified physicians must remain mandatory for all healthcare content, with documented review processes that demonstrate commitment to accuracy and safety. Liability considerations extend beyond traditional medical malpractice to include potential liability for information that LLMs synthesize and present to patients; healthcare organizations should consult legal counsel regarding their responsibility for how their content is used in AI systems. Compliance and safety cannot be treated as secondary concerns in the rush to achieve AI visibility.

AI will continue reshaping healthcare information discovery at an accelerating pace, with LLMs becoming increasingly sophisticated in medical reasoning and increasingly central to how patients research health conditions. Healthcare organizations that adapt their content strategy now position themselves as trusted sources in this emerging ecosystem, while those that delay risk becoming invisible to the AI systems their patients actually use. Early adopters gain competitive advantage through established authority signals, higher Share of Model metrics, and patient trust built through consistent visibility in AI responses—advantages that compound as LLMs learn to recognize and prioritize reliable sources. Integration of traditional SEO and LLM optimization is not an either-or choice but a complementary strategy, as search engines increasingly incorporate LLM technology and patients continue using multiple information sources. Long-term sustainability depends on genuine expertise rather than optimization tricks; healthcare organizations that invest in authentic medical knowledge, transparent credentials, and accurate information will thrive regardless of how discovery mechanisms evolve. The healthcare content landscape has fundamentally shifted, and organizations that recognize this transition as an opportunity rather than a threat will define the future of patient health information discovery.

YMYL (Your Money or Your Life) refers to content that could significantly impact people's health, financial stability, or safety. Healthcare is the highest-scrutiny category because medical misinformation can cause serious harm or death. Google applies stricter algorithmic standards to YMYL content, and LLMs are increasingly used by patients to research health information, making YMYL compliance critical for visibility.

LLMs synthesize information from multiple sources to provide direct answers, while search engines rank individual pages. Healthcare content must now be structured for citation by AI systems, not just for Google rankings. This means your content might educate thousands through AI responses without receiving direct website traffic, requiring a fundamentally different optimization approach.

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness. Healthcare content requires the highest E-E-A-T standards, with emphasis on first-hand medical experience and verified credentials. Google's Quality Rater Guidelines mention E-E-A-T 137 times, reflecting its critical importance for healthcare content visibility in both search and LLM systems.

Studies show AI produces unsupported medical statements 30-50% of the time and hallucinates medical information. AI lacks real-world medical experience and can't verify information against current medical standards. This is why human medical review and expert authorship remain essential—AI should assist healthcare professionals, not replace them.

Test your content across ChatGPT, Claude, and Perplexity using common patient questions in your specialty. Track Share of Model (SOM) metrics—the percentage of AI responses citing your content. AmICited.com automates this monitoring, providing real-time insights into how your healthcare brand appears across multiple LLM platforms.

Traditional SEO focuses on ranking individual pages for keywords. LLM optimization emphasizes comprehensive coverage, semantic richness, and structured content that AI systems can understand and cite. Both approaches are complementary—healthcare organizations need integrated strategies that optimize for both search engines and LLMs.

AI should only be used as a tool to assist human medical experts, not to replace them. All healthcare content must be reviewed and approved by qualified medical professionals before publication. The stakes are too high for healthcare content to rely on AI generation without expert oversight and verification.

AmICited.com monitors how healthcare brands and medical content appear in AI responses across ChatGPT, Perplexity, Google AI Overviews, and other LLM platforms. It provides visibility metrics, tracks Share of Model (SOM), and offers optimization recommendations to help healthcare organizations ensure their expertise is discoverable through AI-driven patient research.

