Social Proof and AI Recommendations: The Trust Connection

Discover how social proof shapes AI recommendations and influences brand visibility. Learn why customer reviews are now critical training data for LLMs and how ...

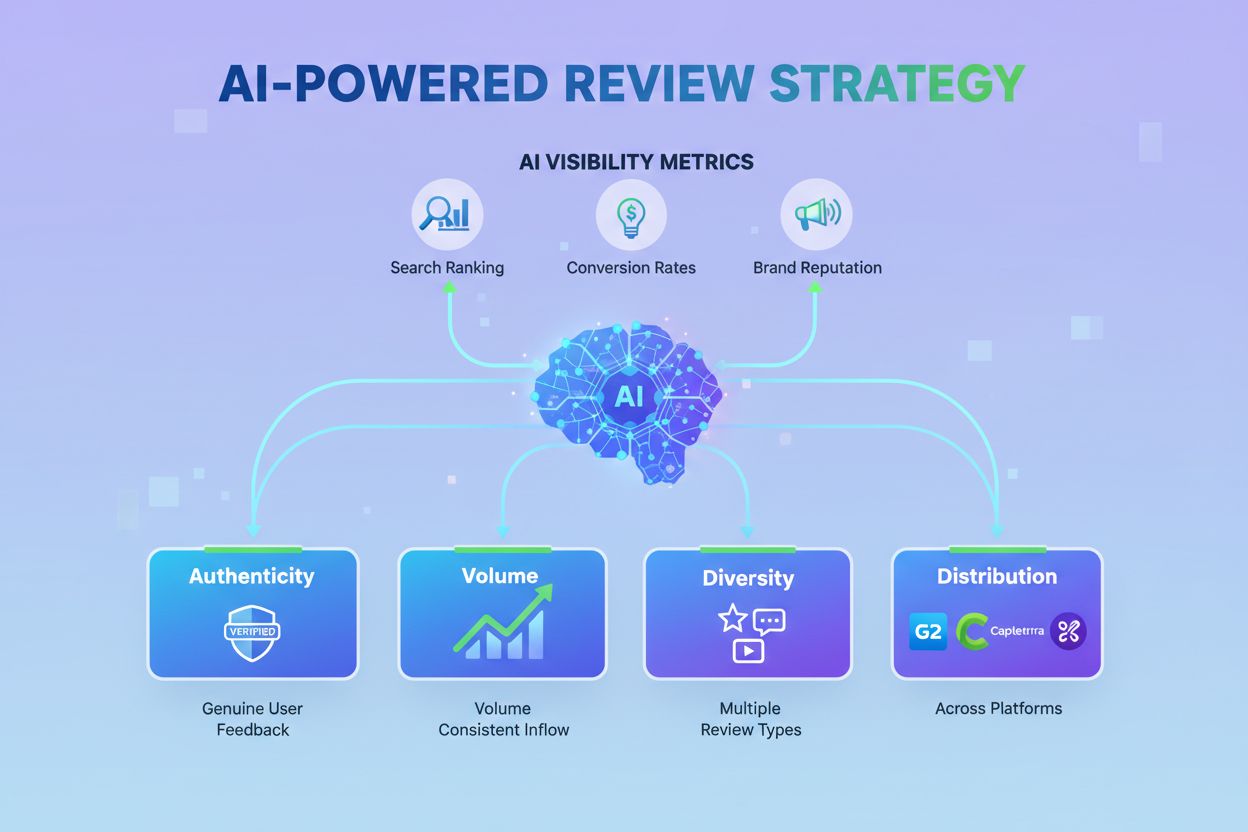

Learn how to manage reviews for maximum AI visibility. Discover the importance of authenticity, semantic diversity, and strategic distribution for LLM citations and brand mentions in AI responses.

Customer reviews have undergone a fundamental transformation in the digital landscape. For years, they served a single purpose: building social proof to reassure human shoppers and influence purchase decisions. Today they serve a second, more consequential purpose. They are now source material that shapes how large language models describe and recommend brands. The models behind ChatGPT and Claude are trained on large datasets that can include publicly available review content, and answer engines such as Perplexity retrieve web pages, reviews included, when composing responses. As a result, the language customers use in public reviews can become part of what AI systems draw on when they talk about your business.

This dual purpose changes how brands should think about review strategy. The language customers use in reviews doesn't just influence other humans; it helps shape the narratives AI systems generate. When a customer writes “this held up well during a 20-mile trail run in heavy rain,” they're not just reassuring potential buyers. They're supplying the kind of concrete phrasing an LLM might later echo when recommending waterproof gear to someone asking about durability. Authentic customer language now carries weight in two distinct channels, human trust and machine learning, which makes review authenticity and diversity more critical than ever.

Large language models don't weigh all content equally when generating responses. Review data is especially influential for three interconnected reasons that brands must understand to optimize their AI visibility. Recency is the first: AI overviews and models with web access lean heavily on fresh signals, and a steady stream of recent reviews tells an AI system that your brand isn't stagnant, which keeps the descriptors it surfaces up to date. Volume is the second: one review carries little weight, but hundreds or thousands form recognizable patterns that AI can confidently echo and synthesize into recommendations. Diversity of phrasing is the third and most often overlooked: generic praise like “great product, fast shipping” gives an LLM little to work with, while specific, varied descriptions give AI systems new language to draw from.
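Recency and volume reduce to simple counts, so they are easy to monitor in your own review export. The sketch below is a hypothetical helper (`recency_volume_snapshot` does not come from any tool) that summarizes a list of review dates. The 90-day window is an illustrative assumption, not a threshold any AI system is known to use.

```python
from datetime import date, timedelta


def recency_volume_snapshot(review_dates, today, window_days=90):
    """Summarize volume and recency for a list of review dates.

    Hypothetical helper; the default 90-day window is an illustrative assumption.
    """
    cutoff = today - timedelta(days=window_days)
    recent = [d for d in review_dates if d >= cutoff]
    return {
        "total_reviews": len(review_dates),
        "recent_reviews": len(recent),
        # Share of all reviews that fall inside the trailing window.
        "recent_share": len(recent) / len(review_dates) if review_dates else 0.0,
        # Days since the newest review; None when there are no reviews yet.
        "days_since_last": (today - max(review_dates)).days if review_dates else None,
    }


dates = [date(2024, 1, 5), date(2024, 3, 20), date(2024, 5, 2), date(2024, 5, 28)]
print(recency_volume_snapshot(dates, today=date(2024, 6, 1)))
```

Tracking these numbers month over month matters more than any single snapshot, since the point is to see whether fresh reviews keep arriving.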

| Generic Review | Specific Review | AI Value of the Specific Review |
|---|---|---|
| “Great product” | “This held up well during a 20-mile trail run in heavy rain” | High: concrete use case and performance context |
| “Fast shipping” | “Arrived in 2 days with detailed tracking updates” | High: specific timeline and service details |
| “Good quality” | “The vegan leather doesn’t look cheap and lasts for quite a while” | High: material-specific durability assessment |
| “Highly recommend” | “Stopped heel slip on marathon training and feels really stable” | High: specific athletic use case and fit detail |
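One rough way to audit your own reviews against the table above is to flag reviews built entirely from stock praise words. The sketch below assumes a small, hand-picked `GENERIC_WORDS` vocabulary (hypothetical; tune it for your own catalog). It is a simple heuristic, not a description of how any LLM scores reviews.

```python
import re

# Hypothetical list of low-information praise words; adjust for your own catalog.
GENERIC_WORDS = {
    "great", "good", "product", "fast", "shipping", "quality", "highly",
    "recommend", "love", "it", "excellent", "nice", "awesome", "very", "and",
}


def is_generic(review: str) -> bool:
    """Flag a review as generic when every word comes from GENERIC_WORDS."""
    words = re.findall(r"[a-z']+", review.lower())
    return bool(words) and all(w in GENERIC_WORDS for w in words)


reviews = [
    "Great product",
    "Fast shipping",
    "This held up well during a 20-mile trail run in heavy rain",
    "Arrived in 2 days with detailed tracking updates",
]
for r in reviews:
    label = "generic" if is_generic(r) else "specific"
    print(f"{label:8} | {r}")
```

Generic reviews still reassure shoppers; the flag only marks reviews that add little new language for AI systems to draw from.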

The distinction matters because LLMs synthesize patterns across datasets rather than highlighting individual reviews. When an AI system encounters hundreds of reviews describing a shoe as “supportive and durable,” it learns to associate those two terms with the product. When it encounters varied descriptions (“incredible abrasion resistance,” “solid heel support,” “stable under extended wear,” “held up after machine washes”), it gains a richer vocabulary for describing the product across different contexts and queries. This diversity of phrasing expands the semantic surface area AI systems can draw from, making your brand discoverable in query contexts you might not anticipate.

Semantic surface area refers to the range of distinct linguistic territory your brand occupies in the text AI systems learn from and retrieve. Every distinct customer phrase creates another entry point for AI systems to surface your brand in response to varied queries. When reviews use different words to describe the same attribute, they multiply the ways an LLM can connect your product to a question. For example, a shoe might be described as “supportive,” “stable,” “holds my arch well,” “prevents foot fatigue,” and “comfortable for long distances”; each phrase creates a different semantic pathway an AI system might follow when answering questions about footwear. This expanded semantic surface area is what turns narrow discoverability into broad visibility across many query types and contexts.
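Semantic surface area has no standard formula, but counting the distinct descriptive words across a set of reviews gives a crude, illustrative proxy. The sketch below assumes a tiny hand-written `STOPWORDS` list and simple regex tokenization, both hypothetical choices. Real LLMs represent text very differently, so treat the count only as a relative comparison between review sets.

```python
import re

# Small illustrative stopword list (an assumption; real pipelines use a fuller list).
STOPWORDS = {"a", "an", "and", "for", "in", "is", "it", "my", "of", "on", "the", "this", "to"}


def distinct_terms(reviews):
    """Return the set of distinct non-stopword terms used across all reviews."""
    terms = set()
    for text in reviews:
        for word in re.findall(r"[a-z]+", text.lower()):
            if word not in STOPWORDS:
                terms.add(word)
    return terms


repetitive = ["supportive and durable"] * 3
varied = [
    "supportive",
    "stable",
    "holds my arch well",
    "prevents foot fatigue",
    "comfortable for long distances",
]

print(len(distinct_terms(repetitive)), sorted(distinct_terms(repetitive)))
print(len(distinct_terms(varied)), sorted(distinct_terms(varied)))
```

Three identical reviews contribute two terms, while five varied descriptions of the same shoe contribute eleven, which is the intuition behind the surface-area argument.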

Varied phrasing creates multiple discovery pathways. When customers describe your product with this kind of linguistic variety, they create separate routes that LLMs can follow. An AI system answering “What shoes work best for marathon runners?” might find your brand through the “marathon training” phrase, while a query about “durable vegan leather alternatives” might surface your product through completely different review language. Your brand becomes discoverable not just for your primary keywords but for adjacent queries you never explicitly targeted. The brands that win in AI visibility are those whose reviews collectively paint a rich, multifaceted picture of their products in authentic customer language.

A common misconception in review strategy is that brands must choose between authenticity and volume. In practice, authentic volume is what produces a strong signal, and steady positives consistently outweigh occasional negatives when LLMs synthesize brand narratives. AI systems don't highlight individual negative reviews; they reflect patterns across entire datasets. A brand with 500 authentic reviews, even if 50 are negative, presents a far stronger signal than a brand with 100 reviews that are all suspiciously positive. Review platforms and the AI systems that draw on them are increasingly able to recognize manipulation patterns: a sudden spike of near-identical five-star reviews looks like gaming, while a steady stream of varied positive reviews with occasional legitimate criticism reads as genuine customer feedback.

Ongoing reviews signal relevance in ways that one-time pushes cannot. When a brand receives fresh reviews month after month, AI systems that weight recency see current evidence that the product is still on the market and that customers continue to engage with it. That continuous signal is far more valuable for AI visibility than a large spike followed by silence. Brands that maintain a steady, authentic review inflow, even at modest volumes, build more durable AI visibility than those running aggressive, time-limited review campaigns.
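The difference between a steady stream and a one-time push is easy to check in your own data. The sketch below flags months whose review count exceeds a multiple of the median month. The 3x factor and the median baseline are illustrative assumptions, not rules any review platform or model is known to apply.

```python
from statistics import median


def flag_spikes(monthly_counts, factor=3.0):
    """Return indices of months whose review count exceeds factor times the median month."""
    if not monthly_counts:
        return []
    # Floor the baseline at 1 so a mostly empty history still has a usable threshold.
    baseline = max(median(monthly_counts), 1)
    return [i for i, n in enumerate(monthly_counts) if n > factor * baseline]


steady = [18, 22, 20, 19, 24, 21]  # reviews per month with steady inflow
campaign = [2, 3, 120, 1, 0, 2]    # one-time push followed by silence

print("steady spikes:", flag_spikes(steady))
print("campaign spikes:", flag_spikes(campaign))
```

The median is used as the baseline because, unlike the mean, it is barely moved by the spike it is meant to detect.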

As reviews increasingly feed into AI systems, authenticity has become non-negotiable. AI systems and the platforms they source from are getting better at filtering out manipulation, and brands that cut corners on review authenticity risk being sidelined or even penalized in AI responses. Authenticity operates across several layers, including verified purchases, cross-platform distribution, natural variation in phrasing, and clear review governance policies, which together create trust signals that AI systems recognize and reward.

The reviews that matter most to AI systems are precisely those that humans would also trust. Verified, authentic, and diverse voices rise to the top while manufactured signals fade. This alignment between human trust and AI trust creates a powerful incentive structure: the best review strategy for human buyers is also the best strategy for AI visibility.

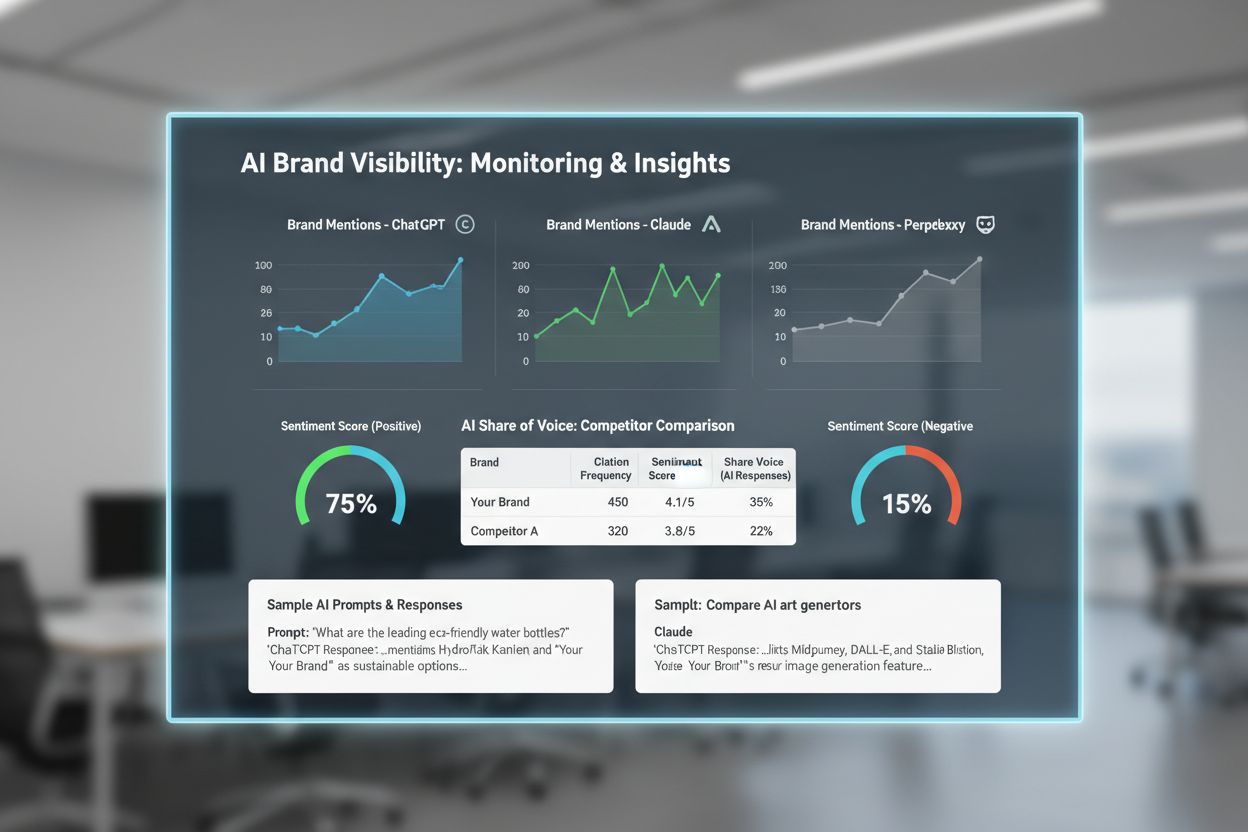

Traditional SEO metrics like keyword rankings and organic traffic tell only part of the story in an AI-driven discovery landscape. They don't capture how AI systems describe your brand or whether you're being cited in responses that never generate a click. The critical new question isn't “Are we ranking?” but “How is AI currently describing our brand?” Testing how LLMs frame your business has become as important as tracking keyword positions, and it calls for a systematic approach to understanding your AI narrative.

Sample prompts to test AI brand visibility:

1. "What do customers say about [brand]?"
2. "Why do people choose [brand]?"
3. "What are the drawbacks of [brand]?"
4. "Which products from [brand] are most popular?"
5. "How would you compare [brand] to others in this space?"

Run these prompts through ChatGPT, Claude, Perplexity, and Google's AI Overviews at regular intervals, ideally monthly, to track how AI systems' descriptions of your brand evolve. Pair the results with your review dashboard data to see whether review improvements line up with shifts in the AI narrative. If you increase review diversity and recency, you may see corresponding changes in how AI systems describe your brand; tracking both together reveals whether your review strategy is actually influencing AI visibility.

Context and positioning matter as much as frequency. An AI system might mention your brand often but in contexts that don't align with your positioning, or mention you rarely but always in premium contexts. Systematic testing reveals these nuances and helps you judge whether your review strategy is moving the needle in ways that matter for your business.
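Keeping the sample prompts as templates ensures every monthly run asks identical questions, and the captured answers can then be scanned for the descriptors you care about. The sketch below deliberately leaves out calls to ChatGPT, Claude, Perplexity, or Google, since each has its own interface; it assumes you paste or export the responses yourself. `ExampleCo`, the sample responses, and the tracked terms are all hypothetical.

```python
from collections import Counter

# The five sample prompts, kept as templates so each run is identical.
PROMPT_TEMPLATES = [
    "What do customers say about {brand}?",
    "Why do people choose {brand}?",
    "What are the drawbacks of {brand}?",
    "Which products from {brand} are most popular?",
    "How would you compare {brand} to others in this space?",
]


def build_prompts(brand):
    """Fill the templates for one brand (run the same list for competitors)."""
    return [t.format(brand=brand) for t in PROMPT_TEMPLATES]


def descriptor_hits(responses, descriptors):
    """Count how many captured responses mention each descriptor (case-insensitive)."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for term in descriptors:
            if term.lower() in lowered:
                counts[term] += 1
    return counts


# Hypothetical responses captured by hand in two monthly test runs.
log = {
    "2024-05": ["ExampleCo is known for fast shipping.",
                "Customers say ExampleCo shoes are comfortable."],
    "2024-06": ["ExampleCo is praised for heel support and being waterproof.",
                "Reviewers call ExampleCo durable and waterproof."],
}
tracked = ["waterproof", "durable", "heel support", "fast shipping"]

for prompt in build_prompts("ExampleCo"):
    print(prompt)
for month, responses in log.items():
    print(month, dict(descriptor_hits(responses, tracked)))
```

Substring matching is crude ("durable" also matches "non-durable"), so counts like these are a starting point; reading the responses for context and positioning still matters.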

The shift toward AI visibility calls for reorienting review strategy away from volume-focused campaigns and toward quality-focused, sustainable approaches. Rather than pursuing one-time pushes designed to spike review counts, successful brands prioritize a steady inflow of authentic feedback that signals ongoing relevance to AI systems. That means building review collection into regular customer touchpoints instead of launching periodic campaigns. Diversity of expression is more valuable than generic praise, so encourage customers to describe their specific experiences rather than offering templated language. Verified authenticity matters more than inflated volume: 200 verified reviews from real customers are likely to carry more weight with AI systems than 500 reviews of questionable origin.

Multi-platform distribution keeps reviews from appearing siloed, so syndicate and collect reviews across the platforms AI systems source information from rather than concentrating every effort on a single channel. Integrating reviews with your broader digital PR strategy ensures that review efforts align with earned media, thought leadership, and brand mentions on authoritative sources. A review strategy that operates in isolation misses the chance to reinforce brand narratives across the channels AI systems monitor. The most effective approach treats reviews as one component of a comprehensive strategy for building brand authority and visibility in AI systems.
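A quick way to check whether reviews look siloed is to measure how much of your total sits on the single largest platform. The sketch below uses hypothetical review counts, and the helper names are illustrative. What share counts as too concentrated is a judgment call, not a documented threshold.

```python
def platform_shares(counts_by_platform):
    """Return each platform's fraction of total reviews (empty dict if there are none)."""
    total = sum(counts_by_platform.values())
    if total == 0:
        return {}
    return {platform: n / total for platform, n in counts_by_platform.items()}


def top_platform_share(counts_by_platform):
    """Share of all reviews held by the single largest platform (0.0 if no reviews)."""
    shares = platform_shares(counts_by_platform)
    return max(shares.values()) if shares else 0.0


# Hypothetical review counts per platform.
siloed = {"own_site": 450, "G2": 30, "Trustpilot": 20}
spread = {"own_site": 180, "G2": 120, "Capterra": 100, "Trustpilot": 100}

print(f"siloed: {top_platform_share(siloed):.0%} on the largest platform")
print(f"spread: {top_platform_share(spread):.0%} on the largest platform")
```

Both portfolios hold 500 reviews; only the distribution differs, which is the dimension this check isolates.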

Brands that treat reviews as strategic intelligence gain significant competitive advantages in the AI era. Monitor how competitors appear in AI responses by running the same test prompts for competitor brands and analyzing how AI systems describe them relative to your brand. This competitive analysis reveals gaps in your positioning and opportunities to differentiate. Identify gaps in review coverage by analyzing which attributes, use cases, and customer segments are underrepresented in your review portfolio compared to competitors. If competitors have extensive reviews about durability but your reviews focus on aesthetics, you’ve identified a content gap to address. Use review data to inform content strategy by identifying the most frequently mentioned attributes, use cases, and customer pain points in reviews, then creating content that expands on these themes and provides the context AI systems need to make recommendations. Track sentiment and positioning across your review portfolio to understand how customers perceive your brand relative to competitors, then use this insight to guide product development and marketing messaging. Benchmark against industry leaders by analyzing how top-performing brands in your category manage reviews and structure their feedback to maximize AI visibility. This competitive intelligence transforms reviews from a customer feedback mechanism into a strategic asset that informs every aspect of brand positioning and visibility strategy.
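The coverage-gap analysis described above can be approximated with keyword matching: measure the share of your reviews and a competitor's reviews that mention each attribute, then list the attributes where the competitor is well ahead. The `ATTRIBUTES` keyword map, the 0.2 gap threshold, and the sample reviews are all hypothetical, and substring matching is a crude stand-in for proper text classification.

```python
# Hypothetical attribute keywords: each attribute maps to phrases that signal it.
ATTRIBUTES = {
    "durability": ["durable", "held up", "lasts"],
    "aesthetics": ["looks", "stylish", "color"],
    "comfort": ["comfortable", "cushion", "fatigue"],
}


def attribute_share(reviews):
    """Fraction of reviews that mention each attribute at least once."""
    counts = {attr: 0 for attr in ATTRIBUTES}
    for text in reviews:
        lowered = text.lower()
        for attr, phrases in ATTRIBUTES.items():
            if any(p in lowered for p in phrases):
                counts[attr] += 1
    n = len(reviews) or 1  # avoid dividing by zero for an empty portfolio
    return {attr: counts[attr] / n for attr in ATTRIBUTES}


def coverage_gaps(ours, theirs, min_gap=0.2):
    """Attributes that competitor reviews mention notably more often than ours."""
    mine, comp = attribute_share(ours), attribute_share(theirs)
    return sorted(a for a in ATTRIBUTES if comp[a] - mine[a] >= min_gap)


ours = ["Looks great and the color is perfect", "Stylish and lasts", "Love how it looks"]
theirs = ["Durable and comfortable", "Held up for a year", "Really durable"]
print("Gaps to address:", coverage_gaps(ours, theirs))
```

In this toy data the brand's reviews focus on aesthetics, so durability and comfort surface as gaps, mirroring the durability-versus-aesthetics example in the text.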

Large language models learn from publicly available text, including customer reviews. Authentic reviews help train AI systems on how to describe brands, products, and services. When LLMs encounter diverse, specific review language, they learn to associate those terms and phrases with your brand, making it more likely to be cited in AI-generated responses.

Semantic surface area refers to the range of unique linguistic territory your brand occupies in AI training data. When customers use varied phrasing to describe the same product attribute, they create multiple semantic pathways that AI systems can follow. This expanded surface area makes your brand discoverable across more diverse queries and contexts than narrow, generic descriptions would allow.

LLMs synthesize patterns across entire datasets rather than highlighting individual reviews. A brand with 500 authentic reviews—even if some are negative—presents a stronger signal than one with 100 suspiciously positive reviews. Steady, ongoing positive reviews outweigh occasional negatives, and AI systems recognize consistency and authenticity as markers of genuine feedback.

AI systems are becoming sophisticated at filtering out manipulation and fake reviews. Verified purchases, cross-platform distribution, natural phrasing variation, and governance policies all signal authenticity to AI systems. Reviews that humans would trust are precisely the reviews that AI systems prioritize when generating recommendations and descriptions.

Focus on platforms that AI systems actively source from, including G2, Capterra, Trustpilot, industry-specific review sites, and your own website. Cross-platform distribution is critical: reviews spread across multiple authoritative platforms create stronger trust signals than reviews concentrated on a single channel. Different AI systems may favor different platforms, so diversification is essential.

Test your brand with specific prompts across ChatGPT, Claude, Perplexity, and Google AI Overviews at regular intervals. Use prompts like 'What do customers say about [brand]?' and 'How would you compare [brand] to competitors?' Track how AI systems describe your brand over time. Pair these tests with your review dashboard data to understand the correlation between review improvements and AI narrative shifts.

Quality and authenticity matter far more than volume for AI visibility. LLMs prioritize verified, diverse, authentic reviews over high volumes of generic or suspicious feedback. A brand with 200 verified reviews from real customers is likely to have better AI visibility than one with 500 reviews of questionable origin. Focus on a steady, authentic inflow rather than aggressive volume campaigns.

Cross-platform distribution prevents reviews from appearing siloed or staged, which signals to AI systems that feedback is genuine and widespread. When reviews appear across multiple authoritative platforms (your website, G2, Capterra, Trustpilot, industry directories), AI systems recognize this as stronger evidence of authentic customer satisfaction. This multi-platform presence strengthens trust signals and increases the likelihood of favorable AI citations.

