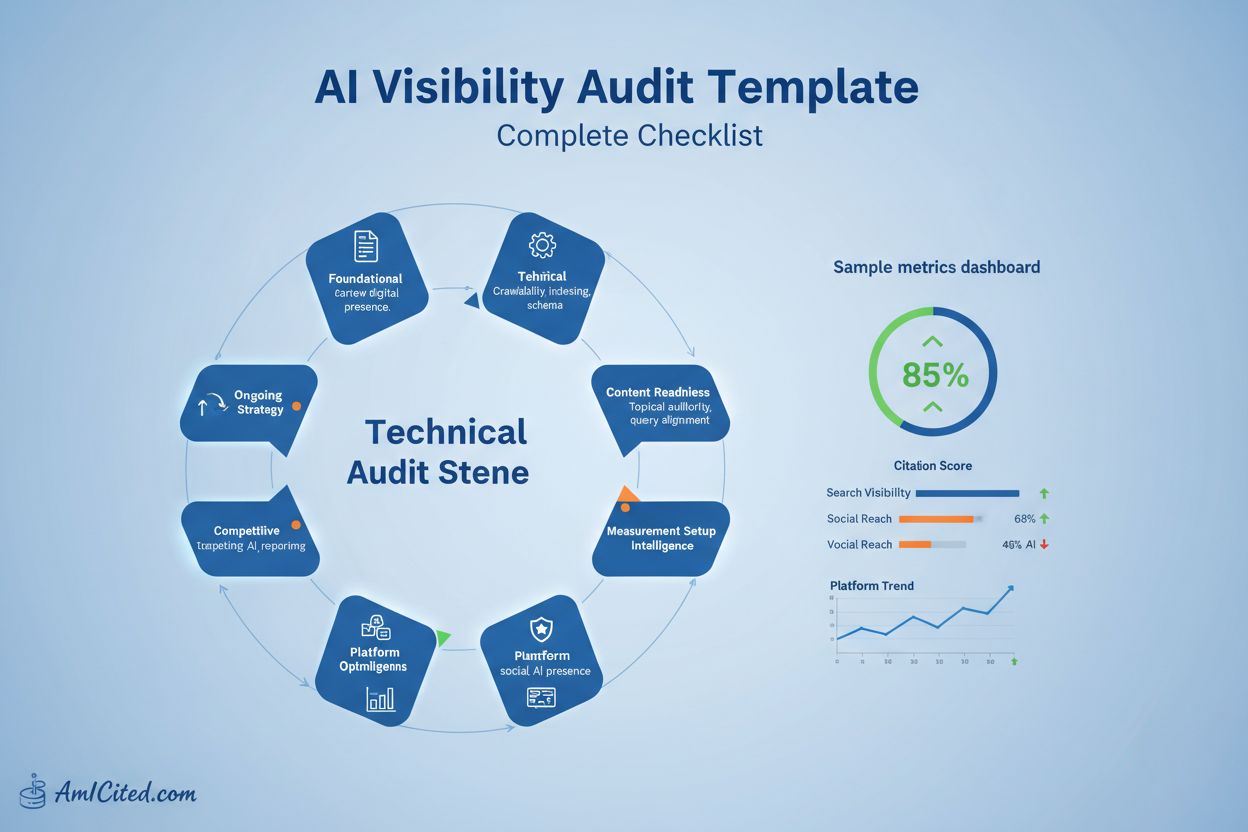

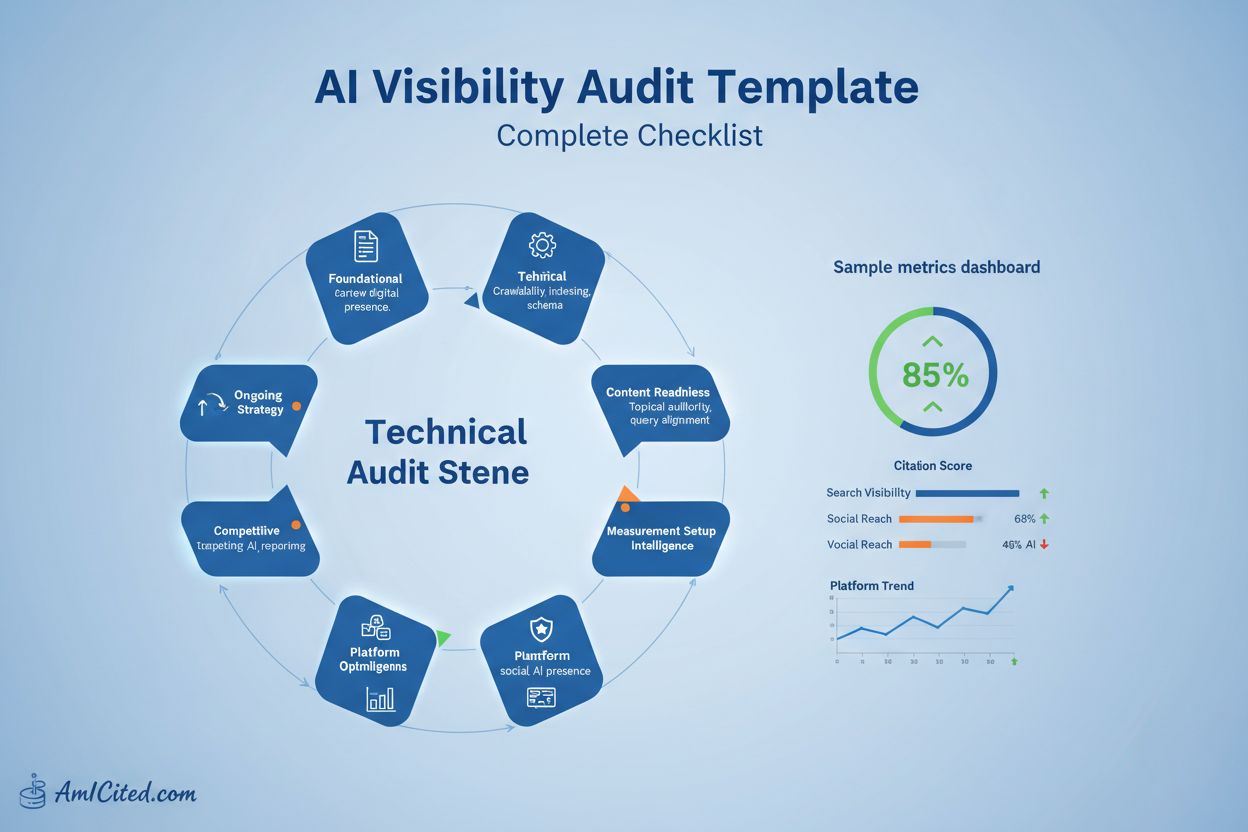

AI Visibility Audit Template: Downloadable Checklist

Complete AI visibility audit template and checklist. Audit your brand across ChatGPT, Perplexity, Google AI Overviews, and more. Step-by-step guide with tools, ...

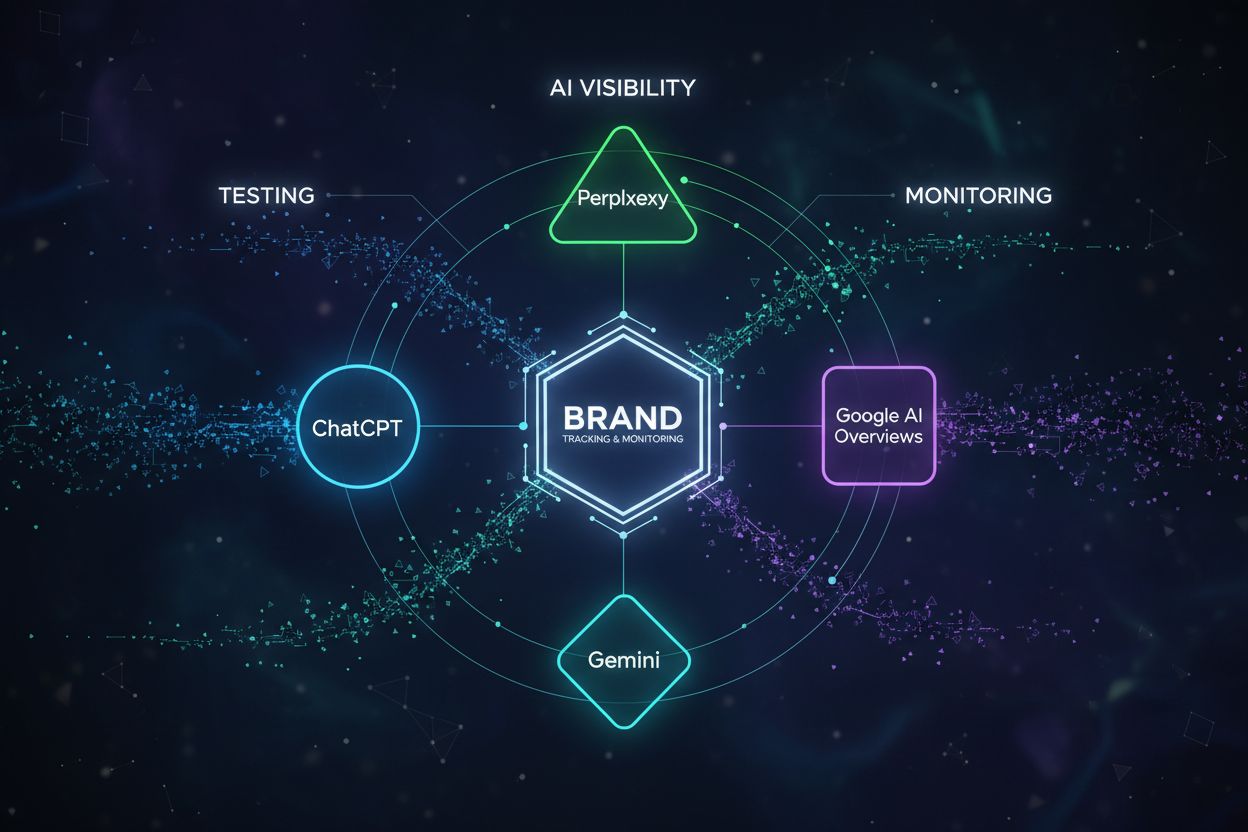

Learn how to manually test your brand’s visibility in AI search engines like ChatGPT, Perplexity, and Google AI Overviews. Step-by-step DIY guide for testing AI visibility without expensive tools.

In today’s AI-driven search landscape, manual AI testing has become essential for understanding how your brand appears in AI-generated responses. Recent data shows that 60% of Google searches now feature AI answers, fundamentally changing how visibility is measured and earned. Traditional SEO metrics like organic click-through rates and keyword rankings no longer tell the complete story: your content could rank first on Google while being completely absent from AI responses. Manual testing serves as a cost-effective baseline that helps you understand your current AI visibility before investing in optimization strategies. By running the tests yourself, you gain immediate insights into how AI systems perceive and cite your content.

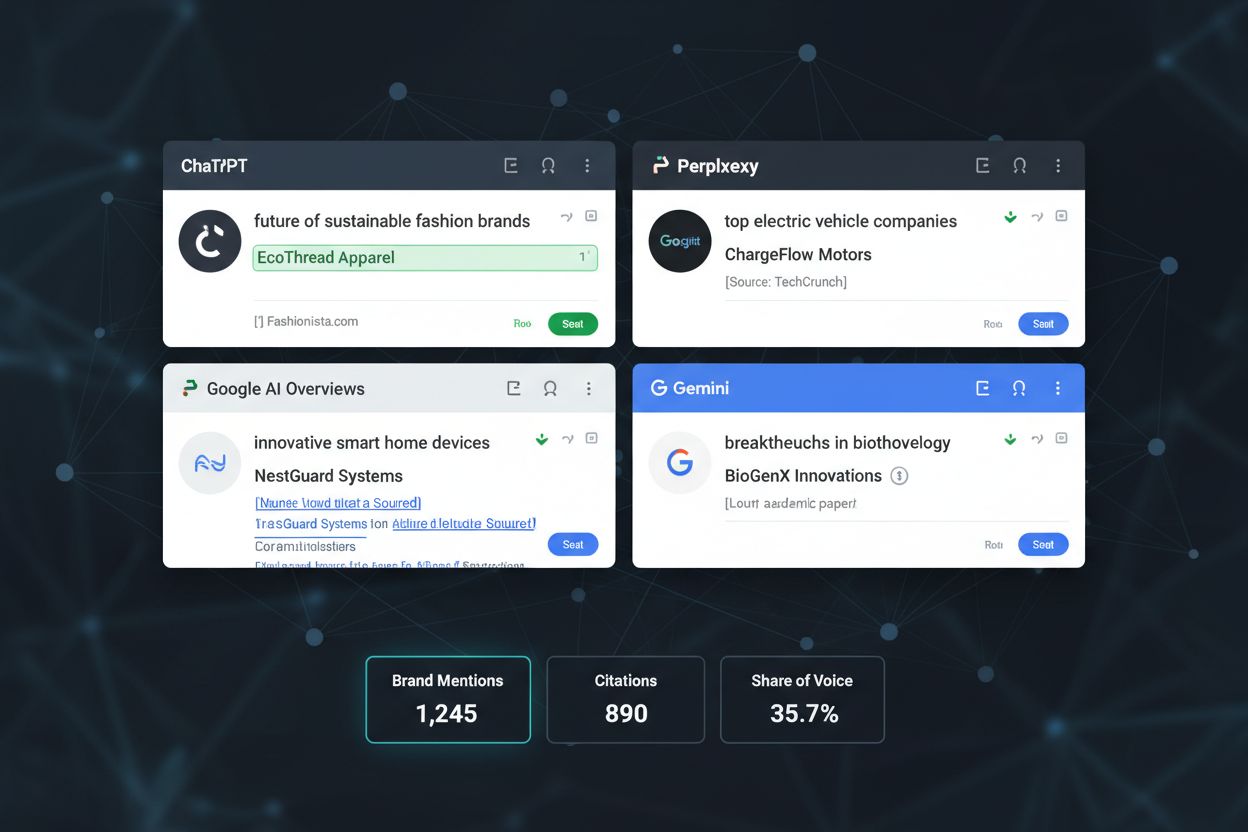

To effectively test AI visibility, you need to understand the key metrics that determine your brand’s presence in AI responses. These metrics go beyond traditional SEO and focus specifically on how AI systems recognize, cite, and present your content. Here’s what you should be tracking:

| Metric | Definition | Why It Matters |
|---|---|---|
| Brand Mentions | Brand name appears in AI response | Awareness indicator |
| Citations | Your website is referenced as source | Authority signal |
| Share of Voice | Your mentions vs competitor mentions | Competitive positioning |
| Sentiment | Positive/neutral/negative context | Reputation indicator |
| Citation Quality | Direct quote vs paraphrase | Content value assessment |

Each of these metrics reveals different aspects of your AI visibility. Brand mentions show whether AI systems recognize your company at all, while citations demonstrate whether your content is trusted enough to be sourced directly. Share of voice helps you understand your competitive position—are you mentioned as often as competitors in similar queries? Sentiment tracking ensures your brand appears in positive contexts, and citation quality reveals whether AI systems are using your content as a primary source or merely paraphrasing it.

Before diving into manual testing, you need to establish a baseline by testing across different query types that matter to your business. Not all queries are created equal, and your visibility will vary significantly depending on what users are asking. Start by sorting the queries you want to test into five categories: informational, navigational, transactional, comparison, and how-to.

Testing across all five categories gives you a comprehensive view of where you’re winning and losing in AI responses. Your visibility will likely be strongest in navigational queries (where your brand is explicitly mentioned) and weakest in broad informational queries where you’re competing against dozens of sources. By establishing this baseline across all query types, you’ll have a clear picture of your starting point before optimization.

Now that you understand what to measure and which queries to test, it’s time to execute your DIY AI visibility testing. The process is straightforward but requires consistency and attention to detail. Here’s how to conduct manual testing across the major AI platforms:

1. Select your test queries (10-20 queries across the five categories)
2. Test on ChatGPT
3. Test on Perplexity AI
4. Test on Google AI Overviews
5. Test on Google AI Mode (if available)
6. Document everything systematically
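The steps above amount to working through a grid of queries and platforms. As a minimal sketch, assuming placeholder queries (the query strings below are hypothetical; the platform names come from the steps above), a few lines of Python can enumerate every query/platform pair so that no combination is skipped during a manual run:

```python
from itertools import product

# Platforms named in the steps above.
PLATFORMS = ["ChatGPT", "Perplexity", "Google AI Overviews", "Google AI Mode"]

# Hypothetical placeholder queries; replace with the 10-20 you selected.
QUERIES = [
    "what is AI visibility",
    "best AI visibility tools",
    "how to test brand mentions in ChatGPT",
]


def build_test_plan(queries, platforms):
    """Return one (query, platform) pair for every combination to test."""
    return list(product(queries, platforms))


plan = build_test_plan(QUERIES, PLATFORMS)
for number, (query, platform) in enumerate(plan, start=1):
    print(f"{number:>2}. [{platform}] {query}")
```

Working down the printed checklist in order keeps each query's results across platforms next to each other, which makes cross-platform differences easier to spot when you document them.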

Organization is critical when conducting manual AI testing across multiple platforms and queries. A well-structured spreadsheet becomes your command center for tracking results and identifying patterns. Here’s the template structure you should use:

| Query | Platform | Brand Mentioned | Citation | Sentiment | Source URL | Notes |
|-------|----------|-----------------|----------|-----------|-----------|-------|
| "content marketing" | ChatGPT | Yes | Direct quote | Positive | amicited.com | Mentioned as authority |
| "content marketing" | Perplexity | No | N/A | N/A | N/A | Not included in response |
| "AI visibility" | Google AI | Yes | Paraphrase | Neutral | amicited.com | Listed 3rd source |

Create columns for the query tested, which platform you tested it on, whether your brand was mentioned, the type of citation (direct quote, paraphrase, or link only), the sentiment of the mention, the source URL cited, and any additional notes. This structure allows you to quickly scan results and spot trends. As you accumulate data across 10-20 queries and 4 platforms, you’ll have 40-80 data points that reveal clear patterns about your AI visibility. Consider using Google Sheets or Excel so you can easily sort, filter, and analyze the data.
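If you prefer to keep the log as a CSV file that opens in Google Sheets or Excel, the template can be sketched in Python. This is an illustration under assumptions, not a required tool: the `ResultRow` class, the `to_csv` helper, and the exact sets of allowed values are hypothetical names chosen here, with the column names and sample rows taken from the template above.

```python
import csv
import io
from dataclasses import astuple, dataclass

# Column headers from the template above.
COLUMNS = ["Query", "Platform", "Brand Mentioned", "Citation",
           "Sentiment", "Source URL", "Notes"]

# Assumed allowed values; "N/A" covers rows where the brand did not appear.
CITATION_TYPES = {"Direct quote", "Paraphrase", "Link only", "N/A"}
SENTIMENTS = {"Positive", "Neutral", "Negative", "N/A"}


@dataclass
class ResultRow:
    """One test result; field order matches COLUMNS."""
    query: str
    platform: str
    brand_mentioned: str  # "Yes" or "No"
    citation: str
    sentiment: str
    source_url: str
    notes: str = ""

    def __post_init__(self):
        # Reject inconsistent labels early so the sheet stays filterable.
        if self.brand_mentioned not in {"Yes", "No"}:
            raise ValueError(f"Brand Mentioned must be Yes/No: {self.brand_mentioned!r}")
        if self.citation not in CITATION_TYPES:
            raise ValueError(f"Unknown citation type: {self.citation!r}")
        if self.sentiment not in SENTIMENTS:
            raise ValueError(f"Unknown sentiment: {self.sentiment!r}")


def to_csv(rows):
    """Serialize rows to CSV text with the template's header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)
    for row in rows:
        writer.writerow(astuple(row))
    return buffer.getvalue()


rows = [
    ResultRow("content marketing", "ChatGPT", "Yes", "Direct quote",
              "Positive", "amicited.com", "Mentioned as authority"),
    ResultRow("content marketing", "Perplexity", "No", "N/A",
              "N/A", "N/A", "Not included in response"),
]
csv_text = to_csv(rows)
print(csv_text)
```

Validating the labels at entry time is a design choice: a typo such as "positive " or "Quote" would otherwise split one category into two when you sort or filter later.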

Once you’ve completed your manual testing across all platforms and queries, the real work begins—interpreting what the data tells you. Look for patterns rather than individual data points: Are you consistently cited in certain query categories but absent from others? Do you appear more frequently on some platforms than others? Calculate your share of voice by counting how many times you’re mentioned versus your top three competitors across the same queries. Identify which of your pages or content pieces are being cited most frequently—these are your strongest assets in the AI visibility game. Pay attention to sentiment: are mentions positive, neutral, or occasionally negative? Finally, assess citation quality: are you being quoted directly (highest value) or merely paraphrased (lower value)? These patterns reveal where your content resonates with AI systems and where you need to improve.
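The pattern-finding described above can be sketched in a few lines of Python. Everything here is illustrative: the sample results and competitor counts are hypothetical, and share of voice is assumed to mean your mentions divided by all mentions (yours plus your tracked competitors'), which is one common way to compute it.

```python
from collections import Counter

# Hypothetical logged results: one dict per query/platform test.
results = [
    {"query": "content marketing", "platform": "ChatGPT",
     "mentioned": True, "source_url": "amicited.com"},
    {"query": "content marketing", "platform": "Perplexity",
     "mentioned": False, "source_url": None},
    {"query": "AI visibility", "platform": "Google AI",
     "mentioned": True, "source_url": "amicited.com"},
    {"query": "AI visibility", "platform": "ChatGPT",
     "mentioned": False, "source_url": None},
]

# Hypothetical mention counts for your top three competitors
# across the same queries and platforms.
competitor_mentions = {"Competitor A": 3, "Competitor B": 2, "Competitor C": 1}


def mention_rate_by_platform(results):
    """Fraction of tests on each platform where the brand was mentioned."""
    tested = Counter(r["platform"] for r in results)
    mentioned = Counter(r["platform"] for r in results if r["mentioned"])
    return {platform: mentioned[platform] / tested[platform] for platform in tested}


def share_of_voice(own_mentions, competitor_mentions):
    """Own mentions as a fraction of all tracked mentions."""
    total = own_mentions + sum(competitor_mentions.values())
    return own_mentions / total if total else 0.0


def most_cited_pages(results):
    """Cited source URLs, most frequent first."""
    return Counter(r["source_url"] for r in results if r["source_url"]).most_common()


own = sum(1 for r in results if r["mentioned"])
print(mention_rate_by_platform(results))
print(f"Share of voice: {share_of_voice(own, competitor_mentions):.0%}")
print(most_cited_pages(results))
```

With only a handful of rows the numbers swing widely, so treat these rates as directional until you have logged the full set of queries.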

Even with the best intentions, many teams make critical errors when conducting manual AI testing that skew their results and lead to poor decisions. Avoid these common pitfalls:

Manual testing provides valuable insights, but it’s not scalable for long-term monitoring. After you’ve established your baseline and understand your AI visibility landscape, you’ll want to transition to automated monitoring solutions. AmICited.com is the leading platform for continuous AI visibility monitoring, tracking your brand mentions and citations across ChatGPT, Perplexity, Google AI Overviews, and other major AI systems automatically. While manual testing is perfect for initial discovery and understanding the landscape, automated tools become essential once you’re actively optimizing and need to track changes in real time. The transition typically happens after 2-4 weeks of manual testing, once you’ve identified your key queries and baseline metrics. At that point, automation saves you hours each week while providing more comprehensive and consistent data. Manual testing gives you the foundation; automation gives you the ongoing intelligence to stay competitive in the AI-driven search era.

Your manual testing data is only valuable if you act on it. Use your findings to create a prioritized optimization roadmap that focuses on the highest-impact opportunities. If you discovered that you’re completely absent from AI responses for high-value transactional queries, create or optimize content specifically designed to answer those questions comprehensively. When you find that competitors are cited more frequently than you, analyze their content to understand why AI systems prefer their approach—then improve your own content to match or exceed that quality. For queries where you’re mentioned but only paraphrased, consider creating more quotable, distinctive content that AI systems will want to cite directly. Prioritize optimizing pages that already have some AI visibility, as these are easier wins than breaking into completely new query categories. Track your progress by re-testing the same queries monthly to measure whether your optimization efforts are moving the needle. Remember that AI visibility is a long-term game—consistent, data-driven optimization based on your manual testing insights will compound over time, establishing your brand as a trusted source across AI platforms.

For initial baseline testing, conduct a comprehensive test once to establish your starting point. After that, repeat your test queries monthly to track changes over time. As you implement optimizations, consider testing every 2-4 weeks to measure the impact of your changes. Once you transition to automated monitoring, you'll get daily or weekly updates automatically.

A mention occurs when your brand name appears in an AI response, indicating awareness. A citation is when your website is referenced as a source, showing that AI systems trust your content enough to attribute information to you. Citations are more valuable than mentions because they signal authority and drive potential traffic to your site.

While you could start with ChatGPT and Google AI Overviews (the most popular), testing across all major platforms gives you a complete picture of your visibility. Different platforms have different algorithms and user bases, so your visibility can vary significantly from one to the next. For comprehensive insights, test at least ChatGPT, Perplexity, Google AI Overviews, and Google AI Mode.

For a solid baseline, test 20-30 queries across different categories (informational, navigational, transactional, comparison, and how-to). This gives you 80-120 data points across 4 platforms, which is enough to show clear patterns. If you're testing fewer than 10 queries, your results may not be representative of your overall AI visibility.

If you're completely absent from AI responses, start by analyzing competitor content to understand what AI systems are citing. Create comprehensive, well-structured content that directly answers the questions users are asking. Ensure your content has proper schema markup, clear headings, and strong E-E-A-T signals. Then re-test after 4-6 weeks to see if your new content gains visibility.

Manual testing is cost-effective and helps you understand the landscape, but it's time-consuming and not scalable. Automated tools like AmICited track hundreds of queries across multiple platforms continuously, providing real-time alerts and detailed analytics. Manual testing is perfect for initial discovery; automation is essential for ongoing monitoring and competitive benchmarking.

Absolutely. By testing the same queries you use for your own brand, you can see which competitors appear in AI responses and how they're positioned. Document which of their pages are being cited, what sentiment surrounds their mentions, and how frequently they appear. This competitive intelligence helps you identify content gaps and optimization opportunities.

Use a spreadsheet with consistent columns (Query, Platform, Brand Mentioned, Citation, Sentiment, Source URL, Notes) and test the same queries on the same day each month. This consistency makes it easy to spot trends. Create a simple chart showing your mention count and citation count over time. If you're testing 20 queries across 4 platforms monthly, you'll quickly see whether your visibility is improving or declining.
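The month-over-month comparison described above can be sketched as follows. The log format, the month labels, and the sample values are hypothetical; in practice you would fill them from your spreadsheet's Brand Mentioned and Citation columns.

```python
# Hypothetical monthly logs: month label -> one (brand_mentioned, cited)
# pair per query/platform test run that month.
monthly_log = {
    "2025-01": [(True, True), (True, False), (False, False), (False, False)],
    "2025-02": [(True, True), (True, True), (True, False), (False, False)],
}


def monthly_summary(log):
    """Count tests, mentions, and citations for each month, oldest first."""
    summary = {}
    for month in sorted(log):
        tests = log[month]
        summary[month] = {
            "tests": len(tests),
            "mentions": sum(1 for mentioned, _ in tests if mentioned),
            "citations": sum(1 for _, cited in tests if cited),
        }
    return summary


def change(summary, metric):
    """Difference in a metric between the latest and the earliest month."""
    months = list(summary)
    return summary[months[-1]][metric] - summary[months[0]][metric]


summary = monthly_summary(monthly_log)
for month, counts in summary.items():
    print(month, counts)
print("Mention change:", change(summary, "mentions"))
print("Citation change:", change(summary, "citations"))
```

Because the same queries are tested each month, raw counts are directly comparable; if the number of tests ever changes, divide by `tests` and compare rates instead.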

Stop guessing whether your brand appears in AI responses. AmICited tracks your mentions and citations across ChatGPT, Perplexity, Google AI Overviews, and more—automatically.
