The 10 Most Important AI Visibility Metrics to Track

Discover the essential AI visibility metrics and KPIs to monitor your brand's presence across ChatGPT, Perplexity, Google AI Overviews, and other AI platforms. ...

Learn which AI visibility metrics matter most for monthly reviews. Track KPIs across ChatGPT, Perplexity, and Google AI Overviews with actionable frameworks and tools.

Traditional SEO metrics like organic traffic and keyword rankings no longer tell the complete story of your brand’s digital visibility. Zero-click searches—where users get answers directly from AI overviews, featured snippets, and knowledge panels without clicking through to your website—now account for a significant portion of search interactions, fundamentally changing how visibility translates to business impact. The volatility of AI-generated answers means your brand’s presence in these critical touchpoints can shift dramatically month-to-month, making consistent monitoring essential rather than optional. Without a structured monthly review process, you’re essentially flying blind to how your content performs in the AI era, missing opportunities to influence buyer decisions at the exact moment they’re seeking information. Monthly AI visibility reviews ensure you’re tracking not just traffic, but the full spectrum of how your brand appears across AI platforms and search engines.

A comprehensive AI visibility strategy requires tracking metrics specifically designed for the zero-click environment. The table below outlines the essential metrics you should monitor monthly:

| Metric | Definition | Why It Matters | Example |
|---|---|---|---|
| AI Overview Inclusion Rate | Percentage of tracked keywords where your content appears in AI-generated answers | Direct measure of AI visibility; higher rates mean more zero-click impressions | 45% of your target keywords feature your content in ChatGPT or Perplexity answers |
| Citation Share-of-Voice | Your brand’s percentage of total citations in AI answers vs. competitors | Shows competitive positioning in AI results; critical for brand authority | Your brand cited 3 times vs. competitor cited 5 times = 37.5% share-of-voice |
| Multi-Engine Coverage | Presence across different AI platforms (ChatGPT, Perplexity, Claude, Google AI Overviews) | Different audiences use different AI tools; diversification reduces risk | Content appears in 3 of 4 major AI platforms for key queries |
| Answer Sentiment Score | Tone and context of how your brand is mentioned in AI-generated answers | Negative mentions can damage perception even with high visibility | Your brand mentioned positively in 78% of AI answers, neutrally in 22% |
| Unlinked Brand Mentions | Times your brand is referenced in AI answers without a direct link to your site | Represents missed traffic opportunities and attribution gaps | 12 unlinked mentions monthly = potential 40+ missed clicks |
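To make the definitions above concrete, here is a minimal Python sketch that computes four of these metrics from a list of captured AI answers. The `AnswerObservation` record and its fields are hypothetical, assuming your monitoring tool can export one row per query, platform, and answer; real tools use their own schemas, and the brand and query names are placeholders.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class AnswerObservation:
    """One AI answer captured for one tracked query on one platform (hypothetical schema)."""
    query: str
    platform: str
    cited_brands: List[str] = field(default_factory=list)  # one entry per citation
    sentiment: Optional[str] = None  # tone toward our brand: "positive", "neutral", "negative"

def inclusion_rate(observations, brand, tracked_queries):
    """Fraction of tracked queries where `brand` is cited on at least one platform."""
    hits = {o.query for o in observations if brand in o.cited_brands}
    return len(hits & set(tracked_queries)) / len(tracked_queries)

def share_of_voice(observations, brand, competitors):
    """Brand citations divided by all citations of the brand plus named competitors."""
    tracked = {brand, *competitors}
    counts = Counter(b for o in observations for b in o.cited_brands if b in tracked)
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0

def engine_coverage(observations, brand, platforms):
    """Return (platforms where `brand` is cited, number of platforms tracked)."""
    present = {o.platform for o in observations if brand in o.cited_brands}
    return len(present & set(platforms)), len(platforms)

def sentiment_mix(observations, brand):
    """Share of the brand's scored mentions carrying each sentiment label."""
    labels = Counter(o.sentiment for o in observations
                     if brand in o.cited_brands and o.sentiment)
    n = sum(labels.values())
    return {label: k / n for label, k in labels.items()} if n else {}

# Illustrative data: "Acme" is cited 3 times, "Rival" 5 times.
obs = [
    AnswerObservation("best crm", "chatgpt", ["Acme", "Rival", "Rival"], "positive"),
    AnswerObservation("crm pricing", "perplexity", ["Acme", "Rival"], "neutral"),
    AnswerObservation("crm for startups", "gemini", ["Acme", "Rival", "Rival"], "positive"),
]
queries = ["best crm", "crm pricing", "crm for startups", "crm api"]
platforms = ["chatgpt", "perplexity", "gemini", "google_ai_overviews"]

print(inclusion_rate(obs, "Acme", queries))     # 0.75
print(share_of_voice(obs, "Acme", ["Rival"]))   # 0.375, matching the table example
print(engine_coverage(obs, "Acme", platforms))  # (3, 4)
print(sentiment_mix(obs, "Acme"))
```

Share-of-voice here counts only the brand plus the competitors you name, so the number changes if you widen the competitor set; keep that set fixed from month to month.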

Your monthly review dashboard should be structured around different stakeholder needs, with data flowing from multiple sources into a unified view. The most effective dashboards organize metrics by persona and business objective.

Your data model should integrate AI visibility metrics with Google Analytics 4 and Google Search Console to create a complete picture of how AI visibility influences downstream behavior. This requires a consistent data collection schedule: ideally daily collection for near-real-time monitoring, with monthly aggregations for trend analysis. The dashboard should surface anomalies automatically, flagging when your citation share-of-voice drops below a set threshold or when a competitor suddenly dominates your key answer space.
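A minimal sketch of the daily-to-monthly aggregation and automatic anomaly flagging, assuming you already have a daily citation share-of-voice series keyed by date (pulling and joining GA4 or Search Console data is left out). The 30% floor mirrors the example threshold used later in this article; the 7-day window and 2-standard-deviation rule are illustrative assumptions, not an established standard.

```python
from collections import defaultdict
from datetime import date
from statistics import mean, pstdev

def monthly_means(daily):
    """Aggregate {date: value} daily readings into {(year, month): mean value}."""
    buckets = defaultdict(list)
    for day, value in daily.items():
        buckets[(day.year, day.month)].append(value)
    return {key: mean(values) for key, values in sorted(buckets.items())}

def flag_anomalies(daily, floor=0.30, window=7, z=2.0):
    """Flag days below an absolute floor, or more than `z` population standard
    deviations below the mean of the previous `window` readings."""
    days = sorted(daily)
    flags = []
    for i, day in enumerate(days):
        value = daily[day]
        reasons = []
        if value < floor:
            reasons.append("below floor")
        history = [daily[d] for d in days[max(0, i - window):i]]
        if len(history) == window:  # only compare once a full window exists
            mu, sd = mean(history), pstdev(history)
            if sd > 0 and value < mu - z * sd:
                reasons.append("sharp drop")
        if reasons:
            flags.append((day, value, reasons))
    return flags

# Illustrative series: steady ~41% share of voice, then a sudden fall on May 15.
daily_sov = {date(2024, 5, d): 0.40 + 0.01 * (d % 3) for d in range(1, 15)}
daily_sov[date(2024, 5, 15)] = 0.25

print(monthly_means(daily_sov))
for day, value, reasons in flag_anomalies(daily_sov):
    print(day, value, reasons)
```

The absolute floor catches slow erosion that a rolling window would absorb, while the rolling rule catches sudden drops that stay above the floor; running both is a simple hedge against either blind spot. This sketch treats the previous readings as consecutive and does not handle gaps in collection.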

Several platforms now offer AI visibility monitoring capabilities, each with different strengths and data collection methodologies. AmICited.com stands out as the top choice for dedicated AI answer monitoring, offering real-time tracking across multiple AI platforms with the most comprehensive citation attribution and sentiment analysis. Rankability provides strong competitive benchmarking and tracks AI overview inclusion across search engines with detailed keyword-level insights. Peec AI focuses on content optimization recommendations based on what’s appearing in AI answers, making it valuable for content teams. LLMrefs specializes in tracking mentions across large language models with detailed context about how your content is being cited. Traditional SEO platforms like Ahrefs, Semrush, and SE Ranking have added AI visibility modules to their existing toolsets, though these tend to be less specialized than dedicated solutions. The key difference between tools lies in their data collection methodology: some use API integrations with AI platforms, others rely on web scraping, and refresh cadence ranges from real-time to weekly. For most organizations, AmICited.com paired with your existing SEO platform provides the optimal balance of specialization and integration.

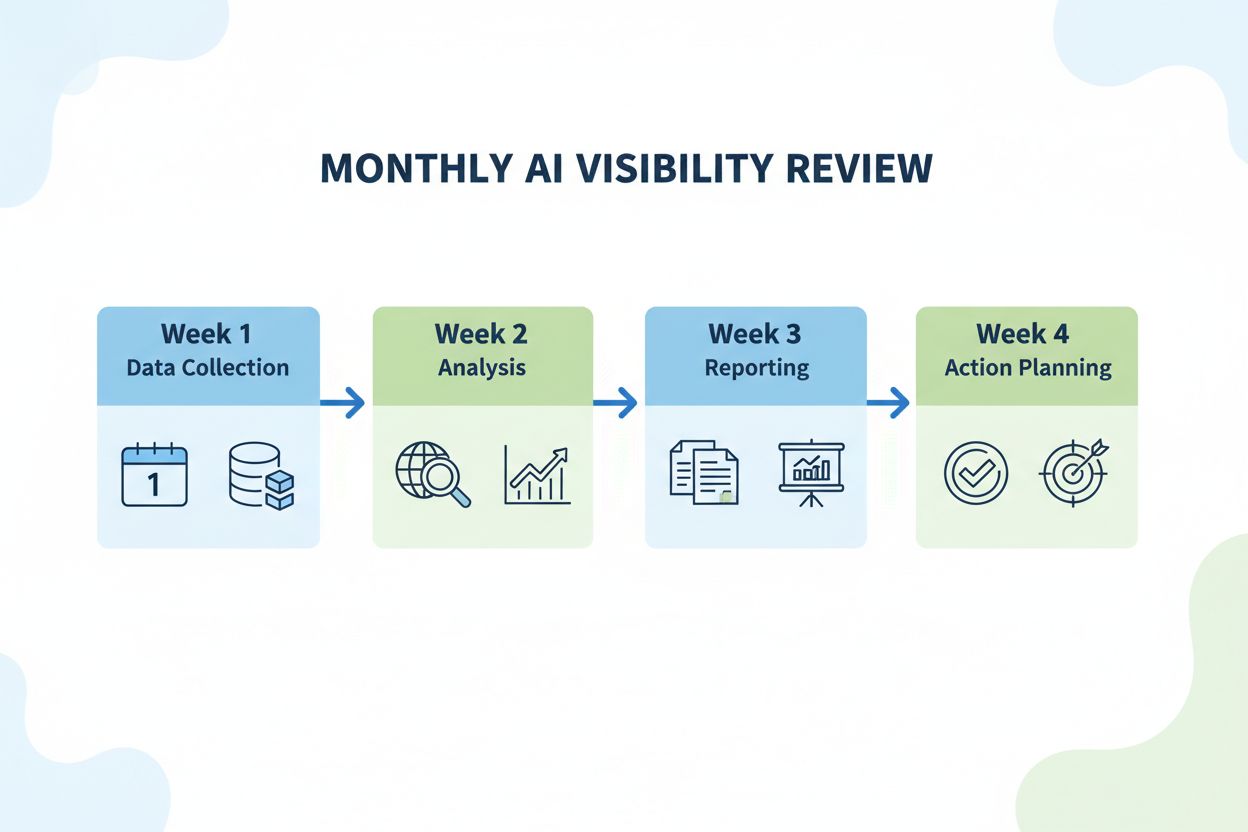

Your monthly AI visibility review should follow a structured workflow that moves from data collection to actionable insights. Begin by pulling your monthly snapshot from your monitoring tool on a consistent date (e.g., the first business day of each month), comparing it against the previous month and your annual baseline to identify trends. Document any significant changes—drops in citation share-of-voice, new competitors appearing in answers, or shifts in answer sentiment—and investigate root causes by reviewing what content is currently ranking and what’s changed in your content strategy. Set alert thresholds for each metric (for example, flag if citation share-of-voice drops below 30% or if unlinked mentions exceed 15 monthly), and use these alerts to trigger immediate content optimization or outreach efforts. Connect your findings directly to your content roadmap by identifying keyword clusters where you’re underperforming in AI answers and prioritizing content updates or new content creation accordingly. Document your monthly findings in a standardized report that tracks progress toward your AI visibility goals and informs quarterly strategy adjustments.
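The comparison and alert steps of this workflow can be sketched as follows. `MonthlySnapshot` is a hypothetical export format, and the 30% share-of-voice floor and 15-mention ceiling are the example thresholds from the paragraph above; substitute values derived from your own baseline.

```python
from dataclasses import dataclass

@dataclass
class MonthlySnapshot:
    """Hypothetical monthly export from an AI visibility monitoring tool."""
    label: str
    share_of_voice: float      # citation share of voice, 0..1
    unlinked_mentions: int
    positive_sentiment: float  # share of mentions rated positive, 0..1

METRICS = ("share_of_voice", "unlinked_mentions", "positive_sentiment")
SOV_FLOOR = 0.30        # example threshold from the text
UNLINKED_CEILING = 15   # example threshold from the text

def review(current, previous, baseline):
    """Return per-metric changes vs. last month and the baseline, plus triggered alerts."""
    deltas = {
        name: {
            "vs_last_month": getattr(current, name) - getattr(previous, name),
            "vs_baseline": getattr(current, name) - getattr(baseline, name),
        }
        for name in METRICS
    }
    alerts = []
    if current.share_of_voice < SOV_FLOOR:
        alerts.append("share_of_voice_below_floor")
    if current.unlinked_mentions > UNLINKED_CEILING:
        alerts.append("unlinked_mentions_above_ceiling")
    return deltas, alerts

june = MonthlySnapshot("2024-06", 0.27, 18, 0.74)
may = MonthlySnapshot("2024-05", 0.34, 11, 0.78)
baseline = MonthlySnapshot("annual baseline", 0.31, 9, 0.75)

deltas, alerts = review(june, may, baseline)
print(alerts)  # ['share_of_voice_below_floor', 'unlinked_mentions_above_ceiling']
print(deltas["unlinked_mentions"])  # {'vs_last_month': 7, 'vs_baseline': 9}
```

Keeping the deltas and the alerts separate lets the standardized report show every month-over-month change, while only the alerts feed into content optimization or outreach tasks.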

AI visibility metrics only matter if they connect to revenue and business growth, which requires establishing multi-channel attribution that accounts for zero-click influence. Many buyers interact with your brand through AI answers before ever clicking to your website, meaning traditional last-click attribution dramatically undervalues your AI visibility efforts. Implement CRM integration that captures when prospects mention they found you through AI platforms or when sales conversations reference information they discovered in AI answers—this “influenced accounts” metric often reveals that 20-30% of your pipeline was influenced by AI visibility before direct engagement. For example, a prospect might ask ChatGPT about solutions in your category, see your brand mentioned with positive sentiment, then weeks later search for you directly and convert—but traditional analytics would credit only the final direct search. By tracking which accounts have been exposed to your brand in AI answers and correlating that with pipeline velocity and deal size, you can quantify the true ROI of your AI visibility strategy. This connection transforms AI visibility from a vanity metric into a core business driver that justifies investment in monitoring and optimization.
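As a rough sketch, assuming your CRM can export closed opportunities with a hypothetical `ai_influenced` flag (set, for example, when a prospect reports discovering you through an AI assistant), the influenced share of pipeline and the deal-size and cycle-length comparisons reduce to a few aggregates. Field names and account names are illustrative only.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List

@dataclass
class Opportunity:
    """Hypothetical CRM export row for a closed opportunity."""
    account: str
    amount: float
    days_to_close: int
    ai_influenced: bool  # e.g. prospect said they first found you via an AI assistant

def influence_report(opps: List[Opportunity]) -> dict:
    """Share of pipeline value from AI-influenced accounts, plus simple comparisons."""
    influenced = [o for o in opps if o.ai_influenced]
    other = [o for o in opps if not o.ai_influenced]
    total = sum(o.amount for o in opps)

    def avg(group, attr):
        # Average of one attribute over a group; None if the group is empty.
        return mean(getattr(o, attr) for o in group) if group else None

    return {
        "influenced_share": sum(o.amount for o in influenced) / total if total else 0.0,
        "avg_deal_influenced": avg(influenced, "amount"),
        "avg_deal_other": avg(other, "amount"),
        "avg_days_influenced": avg(influenced, "days_to_close"),
        "avg_days_other": avg(other, "days_to_close"),
    }

opps = [
    Opportunity("Northwind", 50_000, 40, True),
    Opportunity("Contoso", 30_000, 60, False),
    Opportunity("Fabrikam", 20_000, 70, False),
]
print(influence_report(opps)["influenced_share"])  # 0.5
```

A gap in deal size or days-to-close between the two groups shows correlation, not causation: influenced accounts may differ in segment or size for reasons unrelated to AI visibility, so treat the comparison as a starting point for the ROI discussion rather than proof.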

Most organizations make predictable mistakes when implementing AI visibility monitoring that undermine their effectiveness:

1. Monitoring only one AI platform. Focusing exclusively on Google AI Overviews while ignoring ChatGPT, Perplexity, and Claude means you’re missing 60-70% of your actual AI visibility. Solution: track at least 3-4 major platforms.
2. Ignoring sentiment and context. A high citation count means nothing if your brand is mentioned negatively or in a dismissive context. Solution: manually review a sample of mentions monthly to assess tone.
3. Setting unrealistic benchmarks. Expecting 100% AI overview inclusion or 50% citation share-of-voice in competitive categories sets your team up for failure. Solution: benchmark against competitors and set incremental improvement targets.
4. Treating AI visibility as separate from SEO. Your AI visibility is directly influenced by your organic rankings and content quality. Solution: integrate AI metrics into your existing SEO review process rather than creating a separate workflow.
5. Not acting on the data. Collecting metrics without connecting them to content optimization or strategy changes wastes resources. Solution: establish clear decision rules for when metrics trigger action (e.g., “if citation share drops 10%, audit top-ranking competitor content”).

As AI platforms evolve and new models emerge, your monitoring strategy must be flexible enough to adapt without requiring a complete overhaul. Design your core metrics around durable concepts—like “brand presence in AI-generated answers” and “citation authority”—rather than platform-specific metrics that become obsolete when new tools launch. Establish governance around your data collection and reporting to ensure consistency as tools change and new platforms emerge, documenting your methodology so that metric changes reflect real business shifts rather than tool changes. Consider compliance and privacy implications as AI platforms face increasing regulatory scrutiny; ensure your monitoring approach respects user privacy and aligns with emerging regulations around AI transparency and data usage. The organizations that will thrive in the AI era are those that treat visibility monitoring as a continuous practice rather than a one-time implementation, building the infrastructure and processes today that will scale as AI becomes even more central to how buyers discover and evaluate solutions.

Monthly reviews provide the optimal balance between capturing meaningful trends and avoiding noise from daily AI volatility. Most organizations conduct detailed analysis on the first business day of each month, comparing against the previous month and annual baseline. Weekly monitoring of critical alerts is recommended for high-priority keywords or competitive threats.

Traditional SEO measures where your pages rank for keywords in search results. AI visibility measures whether your content is cited, referenced, or summarized in AI-generated answers across platforms like ChatGPT, Perplexity, and Google AI Overviews. A page can rank #1 but not appear in AI answers, or appear in AI answers without ranking highly in traditional results.

AI personalization means results vary based on user history and preferences, making exact replication difficult. Treat AI metrics as directional indicators rather than absolute measurements. Spot-check your tracking tools against real-world testing monthly, focus on trends over time rather than exact numbers, and use multiple sampling methods to reduce bias from personalization.
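One way to put "directional" into numbers: if you run the same prompt several times (different sessions, accounts, or locations) and count how often your brand appears, a Wilson score interval shows how far the observed rate could move from sampling noise alone. This is a standard binomial confidence interval swapped in for illustration, not a feature of any tracking tool, and it assumes the samples are independent, which personalization only approximately allows.

```python
from math import sqrt

def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion; z=1.96 gives ~95% coverage."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)

# Same prompt sampled 20 times in separate sessions:
# brand appeared 12 times last month and 9 times this month.
last = wilson_interval(12, 20)
this = wilson_interval(9, 20)
print(f"last month: {last[0]:.2f}-{last[1]:.2f}")
print(f"this month: {this[0]:.2f}-{this[1]:.2f}")
overlap = this[1] >= last[0] and last[1] >= this[0]
print("overlapping intervals: treat as noise" if overlap else "likely a real shift")
```

With 20 samples the intervals are wide, so a drop from 12 to 9 appearances is not enough on its own to conclude visibility fell. Overlapping intervals are a conservative rule of thumb rather than a formal significance test; more samples per prompt narrow the intervals.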

Start with the four major platforms: ChatGPT (largest user base), Google AI Overviews (integrated into search), Perplexity (fastest-growing), and Gemini (Google's AI assistant). Add Claude and Copilot if your audience uses them. Most organizations find that 3-4 platforms capture 80% of relevant AI visibility, with diminishing returns beyond that.

Enable your sales team to ask prospects where they first encountered your brand and explicitly include AI assistants and overviews as options. Track these responses in your CRM and correlate them with topics where you see strong AI visibility. Over time, this reveals which AI-visible narratives actually influence deals and helps refine messaging in both content and sales collateral.

Healthy benchmarks depend on your market position and competition. If you're the market leader, aim for 40-60% share-of-voice in AI answers for your core topics. Mid-market players should target 20-40%, while emerging brands should focus on achieving any consistent presence (5-20%) before optimizing for higher share. Compare against your top 3 competitors to set realistic targets.

Monitor sentiment and factual accuracy of AI mentions monthly. Flag responses where sentiment is negative, information is outdated, or claims are inaccurate. For critical issues, update your source content to provide clearer, more accurate information that AI models can cite. In rare cases, you can request corrections through AI platform feedback mechanisms, though results vary.

Organizations tracking AI visibility report 15-30% improvements in brand awareness metrics and 10-20% increases in influenced pipeline within 6 months. The ROI depends on your industry and audience AI adoption rates. B2B SaaS and technology companies see faster ROI, while traditional industries may take longer. Start with a 3-month pilot to measure impact specific to your business.



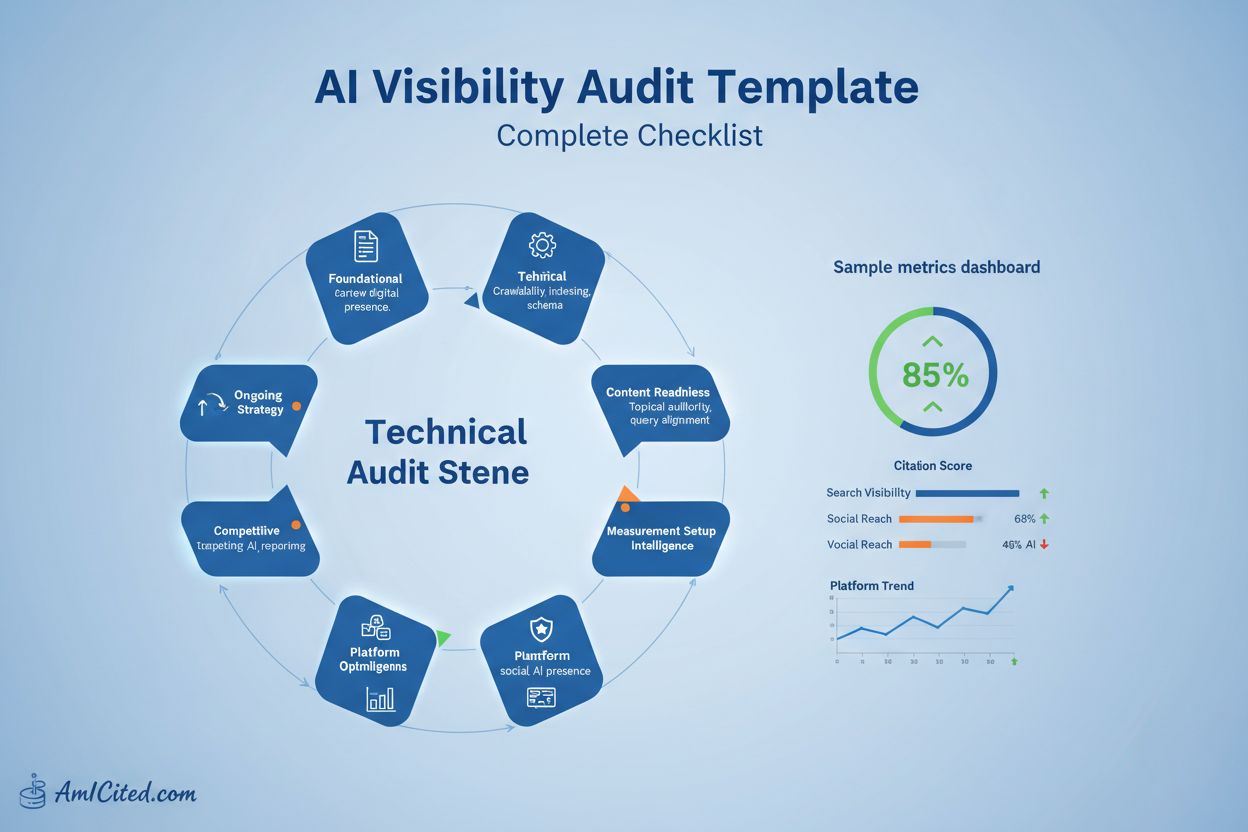

Complete AI visibility audit template and checklist. Audit your brand across ChatGPT, Perplexity, Google AI Overviews, and more. Step-by-step guide with tools, ...