Multimodal AI Search

Learn how multimodal AI search systems process text, images, audio, and video together to deliver more accurate and contextually relevant results than single-mo...

Master multimodal AI search optimization. Learn how to optimize images and voice queries for AI-powered search results, featuring strategies for GPT-4o, Gemini, and LLMs.

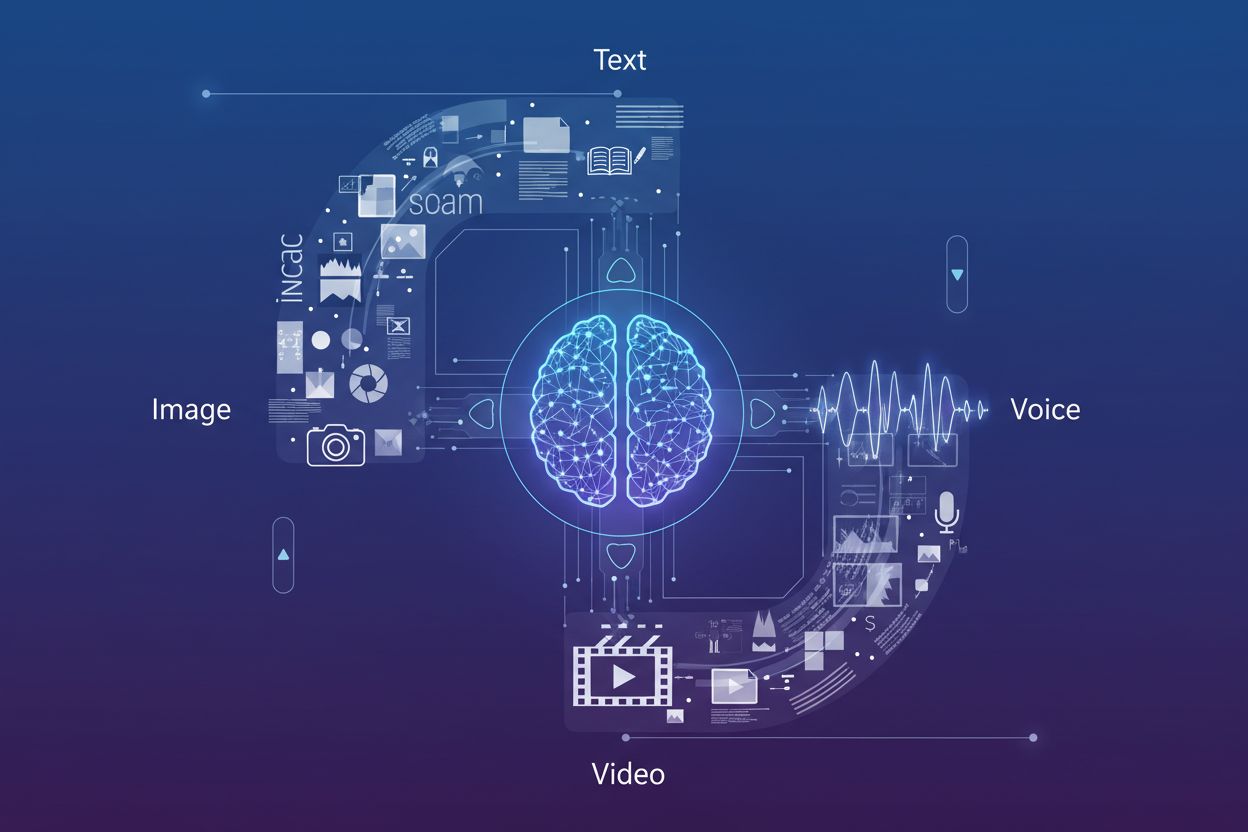

Multimodal AI search marks a fundamental shift in how search engines process and understand queries: text, images, voice, and video are integrated into a unified search experience. Rather than treating each modality as a separate channel, modern search systems use multimodal AI models that analyze and correlate information across formats at the same time, which enables more contextual and accurate results. The move from single-modality search (where text queries returned text results) to integrated multimodal systems reflects how users naturally interact with information: they combine spoken questions with visual references, upload images for context, and expect results that synthesize multiple content types. The shift changes how content creators must optimize their digital presence and how brands must monitor their visibility across search channels. For businesses that want to stay visible in AI-driven search environments, understanding multimodal search optimization is no longer optional.

The emergence of advanced multimodal models has transformed search capabilities, with several leading platforms now offering sophisticated vision-language models that can process and understand content across multiple modalities simultaneously. Here’s how the major players compare:

| Model Name | Creator | Key Capabilities | Best For |
|---|---|---|---|
| GPT-4o | OpenAI | Real-time image analysis, voice processing, ~320 ms average audio response time | Complex visual reasoning, multimodal conversations |
| Gemini | Google | Integrated search, video understanding, cross-modal reasoning | Search integration, comprehensive content analysis |
| Claude 3.7 | Anthropic | Document analysis, image interpretation, nuanced understanding | Technical documentation, detailed visual analysis |
| LLaVA | Open-source community | Lightweight vision-language processing, efficient inference | Resource-constrained environments, edge deployment |
| ImageBind | Meta | Cross-modal embeddings, audio-visual understanding | Multimedia content correlation, semantic search |

These models represent the cutting edge of AI search technology, each optimized for different use cases and deployment scenarios. Organizations must understand which models power their target search platforms to effectively optimize content for discovery. The rapid advancement in these technologies means that search visibility strategies must remain flexible and adaptive to accommodate new capabilities and ranking factors.

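Image search optimization has become critical as visual search capabilities expand. Google Lens alone recorded 10 million visits in May 2025, demonstrating the growth of image-based search queries. To maximize visibility in image search AI results, content creators should implement a comprehensive optimization strategy:

- Use high-quality, original images.
- Give each image a descriptive file name and comprehensive alt text.
- Implement schema markup for images.
- Provide contextual surrounding copy.
- Include multiple angles of the same subject.
- Compress files for fast loading.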

This multi-faceted approach ensures that images are discoverable not only through traditional image search but also through multimodal AI systems that analyze visual content in context with surrounding text and metadata.
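As one way to put the alt-text advice into practice, the sketch below scans a page's HTML and lists images whose alt text is missing or empty. It uses only Python's standard `html.parser` module; the class name `ImageAudit`, the helper `find_images_missing_alt`, and the sample markup are hypothetical names chosen for illustration, not part of any search platform's tooling.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collects <img> tags whose alt text is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []  # src values of images lacking usable alt text

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # A valueless or absent alt attribute is treated the same as an empty one.
        alt = (attr_map.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attr_map.get("src") or "(no src)")


def find_images_missing_alt(html: str) -> list[str]:
    """Return the src of every <img> in `html` without non-empty alt text."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.missing_alt


# Illustrative markup only; the file names are made up.
sample = """
<img src="red-trail-running-shoe-side.jpg" alt="Red trail running shoe, side view">
<img src="IMG_0042.jpg">
<img src="banner.png" alt="">
"""
print(find_images_missing_alt(sample))  # ['IMG_0042.jpg', 'banner.png']
```

An empty alt attribute is a legitimate choice for purely decorative images, so the output is best read as a review list rather than a list of errors.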

The integration of Large Language Models into voice search has transformed how search engines interpret and respond to spoken queries, moving beyond simple keyword matching toward contextual understanding. Traditional voice search relied on phonetic matching and basic natural language processing, whereas modern LLM-powered voice search systems interpret intent, context, nuance, and conversational patterns far more accurately. As a result, voice search optimization can no longer focus solely on exact-match keywords; content must be structured to address the intent behind the conversational queries that users speak aloud. For example, a user who asks “What’s the best way to fix a leaky kitchen faucet?” signals a different need from one who types “fix leaky faucet,” and content must address both the question itself and the implicit need for step-by-step guidance. Featured snippets have emerged as the primary source for voice search answers, with search engines preferring concise, direct answers positioned at the top of search results. Understanding this hierarchy, in which voice search answers are pulled from featured snippets, is essential for any content strategy targeting voice-enabled devices and assistants.

Optimizing for conversational queries requires a fundamental restructuring of how content is organized and presented, moving away from keyword-dense paragraphs toward natural, question-answer formats that mirror how people actually speak. Content should be structured with question-based headings that directly address common queries users might voice, followed by concise, authoritative answers that provide immediate value without requiring users to parse through lengthy explanations. This approach aligns with how natural language processing systems extract answers from web content—they look for clear question-answer pairs and direct statements that can be isolated and read aloud by voice assistants. Implementing structured data markup that explicitly identifies questions and answers helps search engines understand your content’s conversational nature and increases the likelihood of being selected for voice search results. Long-tail, conversational phrases should be naturally integrated throughout your content rather than forced into unnatural keyword placements. The goal is to create content that reads naturally when spoken aloud while simultaneously being optimized for AI systems that parse and extract information from your pages. This balance between human readability and machine interpretability is the cornerstone of effective voice search optimization.
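The structured data markup for question-answer pairs described above can be sketched with the schema.org `FAQPage` type, serialized as JSON-LD. This is a minimal illustration, not a complete implementation: the helper name `build_faq_jsonld` is hypothetical, and the sample answer text is invented for the example.

```python
import json


def build_faq_jsonld(pairs):
    """Return a schema.org FAQPage JSON-LD string for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Illustrative content only; the answer wording is made up for this sketch.
faq = build_faq_jsonld([
    (
        "What's the best way to fix a leaky kitchen faucet?",
        "Shut off the water supply, replace the worn washer or cartridge, "
        "then reassemble the faucet and check for drips.",
    ),
])
print(faq)
```

The visible page should carry the same questions and answers as the markup, phrased the way a person would say them aloud. Whether a given search engine or assistant actually uses the markup is not guaranteed.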

Proper schema markup signals to multimodal AI systems what your content represents and how it should be interpreted across different search contexts. The most effective structured data implementations for multimodal search include FAQ schema (the schema.org FAQPage type, which explicitly marks question-answer pairs for voice search), HowTo schema (which provides step-by-step instructions in machine-readable format), and Local Business schema (the schema.org LocalBusiness type, which supports location-based multimodal queries). Beyond these primary types, Article, Product, and Event schema help ensure that your content is properly categorized and understood by AI systems analyzing your pages. Use Google’s Rich Results Test regularly to validate that your schema markup is correctly implemented and recognized by search systems. The technical SEO foundation (clean HTML structure, fast page load times, mobile responsiveness, and proper canonicalization) becomes even more critical in multimodal search environments, where AI systems must quickly parse and understand your content across multiple formats. Organizations should audit their entire content library to identify opportunities for schema implementation, prioritizing high-traffic pages and content that naturally fits question-answer or instructional formats.
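To make the HowTo case concrete, the sketch below builds a schema.org `HowTo` object with ordered `HowToStep` entries and wraps it in the `<script type="application/ld+json">` tag used to embed JSON-LD in a page. The function names `build_howto_jsonld` and `to_script_tag` and the sample steps are assumptions made for illustration. Output like this should still be checked in a validator such as the Rich Results Test mentioned above.

```python
import json


def build_howto_jsonld(name, steps):
    """Build a schema.org HowTo dict from a title and (step name, step text) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [
            {"@type": "HowToStep", "position": i, "name": title, "text": text}
            for i, (title, text) in enumerate(steps, start=1)
        ],
    }


def to_script_tag(data):
    """Serialize JSON-LD into a script tag that can be placed in the page HTML."""
    # Escape "</" so text such as "</script>" inside a value cannot end the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Illustrative steps only; the wording is made up for this sketch.
howto = build_howto_jsonld(
    "Fix a leaky kitchen faucet",
    [
        ("Shut off the water", "Close the supply valves under the sink."),
        ("Replace the worn part", "Swap the damaged washer or cartridge."),
        ("Test the faucet", "Reopen the valves and check for drips."),
    ],
)
print(to_script_tag(howto))
```

Keeping the builder separate from the serializer means the same dict can be validated or unit-tested before it is written into a template.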

Tracking performance in multimodal search requires a shift in metrics beyond traditional organic traffic, with particular focus on featured snippet impressions, voice search engagement, and conversion rates from multimodal sources. Google Search Console provides visibility into featured snippet performance, showing how often your content appears in position zero and which queries trigger your snippets—data that directly correlates with voice search visibility. Mobile engagement metrics become increasingly important as voice search is predominantly accessed through mobile devices and smart speakers, making mobile conversion rates and session duration critical KPIs for voice-optimized content. Analytics platforms should be configured to track traffic sources from voice assistants and image search separately from traditional organic search, allowing you to understand which multimodal channels drive the most valuable traffic. Voice search metrics should include not just traffic volume but also conversion quality, as voice searchers often have different intent and behavior patterns than text searchers. Monitoring branded mentions in AI Overviews and other AI-generated search results provides insight into how your brand is being represented in these new search formats. Regular audits of your featured snippet performance, combined with voice search traffic analysis, create a comprehensive picture of your multimodal search visibility and ROI.
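One rough way to separate conversational queries from keyword-style queries in exported search performance data is sketched below. Everything here is an assumption made for illustration: the row shape (`query`, `clicks`, `impressions`), the question-word heuristic, and the sample numbers are invented and do not come from any analytics product.

```python
# Heuristic list of words that commonly open a spoken-style question (an assumption).
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how", "can", "does", "is", "should"}


def is_conversational(query: str) -> bool:
    """Guess whether a query is phrased as a spoken-style question."""
    words = query.lower().split()
    if not words:
        return False
    # Normalize contractions such as "what's" to "what" (straight or curly apostrophe).
    first = words[0].replace("’", "'").split("'")[0]
    return first in QUESTION_WORDS


def summarize(rows):
    """Aggregate clicks, impressions, and CTR for conversational vs. other queries."""
    totals = {"conversational": [0, 0], "other": [0, 0]}
    for row in rows:
        key = "conversational" if is_conversational(row["query"]) else "other"
        totals[key][0] += row["clicks"]
        totals[key][1] += row["impressions"]
    return {
        key: {"clicks": c, "impressions": i, "ctr": (c / i if i else 0.0)}
        for key, (c, i) in totals.items()
    }


# Made-up sample rows, shaped like a simple query-level export.
rows = [
    {"query": "how to fix a leaky kitchen faucet", "clicks": 30, "impressions": 200},
    {"query": "what's the best faucet wrench", "clicks": 10, "impressions": 100},
    {"query": "fix leaky faucet", "clicks": 20, "impressions": 400},
]
summary = summarize(rows)
print(summary)
```

The question-word test is deliberately crude. It cannot tell whether a query was actually spoken, so the split is a proxy for conversational intent, not a measurement of voice traffic.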

The trajectory of multimodal search points toward increasingly sophisticated AI search that blurs the lines between search, browsing, and direct task completion, with AI Overviews already associated with a usage increase of more than 10% as users embrace AI-generated summaries. Emerging capabilities include agentic AI systems that take actions on behalf of users (booking reservations, making purchases, or scheduling appointments) based on multimodal queries that combine voice, image, and contextual information. Personalization will become increasingly granular, with AI systems understanding not just what users are asking but also their preferences, location, purchase history, and behavioral patterns to deliver highly relevant results across modalities. Real-time search capabilities are expanding, allowing users to ask questions about live events, current conditions, or breaking news and to expect immediate, accurate answers synthesized from multiple sources. Video search will mature as a primary modality, with AI systems understanding not just video metadata but the actual content within videos, enabling users to search for specific moments, concepts, or information within video libraries. The competitive landscape will increasingly favor brands that have optimized across all modalities, as visibility in one channel (featured snippets, image search, voice results) will directly affect visibility in others through cross-modal ranking signals.

As multimodal search becomes the dominant paradigm, AI monitoring has evolved from tracking simple search rankings to comprehensive brand citation tracking across image search, voice results, and AI-generated overviews. AmICited provides essential visibility into how your brand appears in AI Overviews, featured snippets, and voice search results—monitoring not just whether you rank, but how your brand is being represented and cited by AI systems that synthesize information from multiple sources. The platform tracks image citations in visual search results, ensuring that your visual content is properly attributed and linked back to your domain, protecting both your SEO authority and brand visibility. Voice search mentions are monitored across smart speakers and voice assistants, capturing how your content is being read aloud and presented to users in voice-first contexts where traditional click-through metrics don’t apply. With AI-generated search results now accounting for a significant portion of user interactions, understanding your visibility in these new formats is critical—AmICited provides the monitoring infrastructure necessary to track, measure, and optimize your presence across all multimodal search channels. For brands serious about maintaining competitive visibility in the AI-driven search landscape, comprehensive multimodal monitoring through platforms like AmICited is no longer optional but essential to understanding and protecting your digital presence.

Multimodal AI search integrates multiple data types—text, images, voice, and video—into a unified search experience. Modern search systems now leverage multimodal AI models that can simultaneously analyze and correlate information across different formats, enabling more contextual and accurate results than single-modality search.

Optimize images by using high-quality, original images with descriptive file names and comprehensive alt text. Implement schema markup, provide contextual surrounding copy, include multiple angles of the same subject, and compress files for fast loading. These practices ensure visibility in both traditional image search and multimodal AI systems.

Featured snippets are the primary source for voice search answers. Voice assistants pull concise, direct answers from position zero results on search engine results pages. Optimizing content to appear in featured snippets is essential for voice search visibility and ranking.

Structure content with question-based headings that directly address common voice queries, followed by concise answers. Use natural, conversational language and implement structured data markup (FAQ schema, HowTo schema) to help AI systems understand your content's conversational nature.

The major multimodal models include GPT-4o (OpenAI), Gemini (Google), Claude 3.7 (Anthropic), LLaVA (open-source), and ImageBind (Meta). Each has different capabilities and deployment contexts. Understanding which models power your target search platforms helps you optimize content effectively.

Track featured snippet impressions in Google Search Console, monitor mobile engagement metrics, analyze voice search traffic separately from traditional organic search, and measure conversion rates from multimodal sources. Monitor branded mentions in AI Overviews and track how your content appears across different modalities.

AmICited monitors how your brand appears in AI Overviews, featured snippets, image search results, and voice search answers. As AI-generated search results become dominant, comprehensive multimodal monitoring is essential to understand and protect your digital presence across all search channels.

The future includes increasingly sophisticated AI systems with agentic capabilities that can take actions on behalf of users, hyper-personalized results based on user preferences and behavior, real-time search for live events, and mature video search capabilities. Brands optimized across all modalities will have competitive advantages.

