Evolving Your Metrics as AI Search Matures

Learn how to evolve your measurement frameworks as AI search matures. Discover citation-based metrics, AI visibility dashboards, and KPIs that matter for tracki...

Learn how to set effective OKRs for AI visibility and GEO goals. Discover the three-tier measurement framework, brand mention tracking, and implementation strategies for monitoring your presence in ChatGPT, Gemini, and Perplexity.

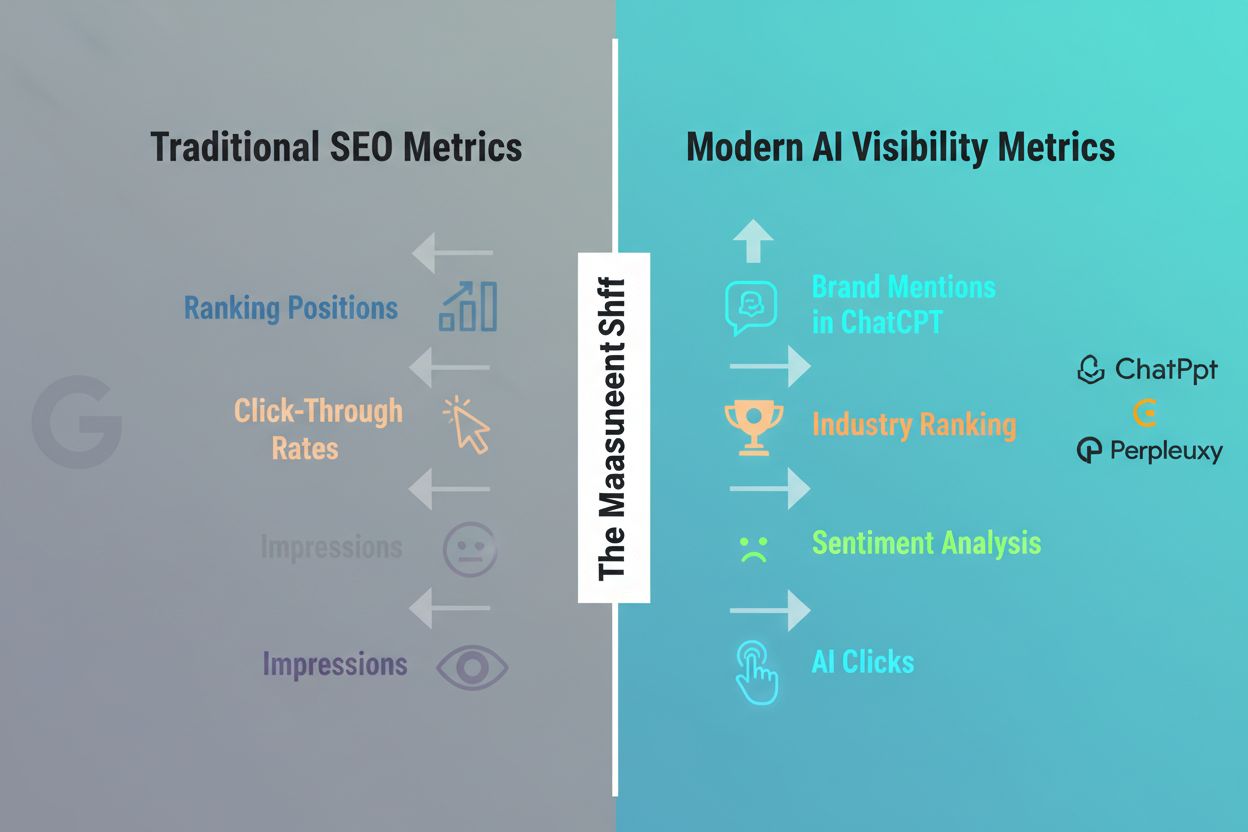

The traditional SEO metrics that dominated digital marketing strategy for two decades (ranking positions, click-through rates, and impressions) are rapidly losing their predictive power in the age of generative AI. AI Overviews and similar generative search features change user behavior by answering queries directly within the search interface, which can sharply reduce clicks to organic results even when your content ranks in top positions. This creates what is often called the measurement chasm: a gap between visibility signals and actual business impact that traditional analytics cannot bridge. The emergence of AI visibility as a distinct discipline suggests that the old metrics were always proxies for attention, and those proxies no longer hold in an AI-mediated information landscape. Organizations that rely solely on traditional SEO metrics are flying blind to the true sources of AI-driven traffic and brand exposure.

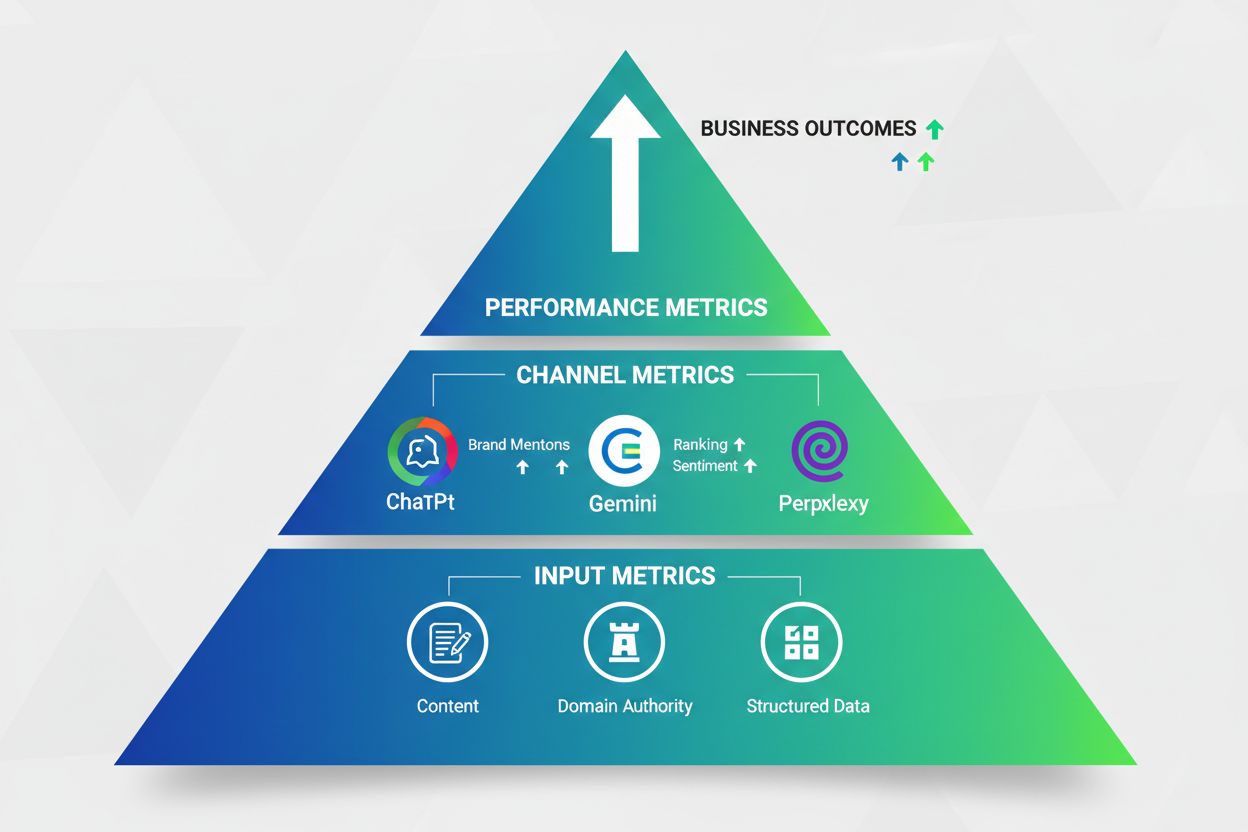

Measuring GEO effectiveness requires moving beyond single-metric thinking to a three-tier measurement framework that follows the customer journey from eligibility through business impact. The framework organizes the metrics that matter at each stage of AI visibility:

| Tier | Focus | Example Metrics |
|---|---|---|
| Input Metrics | Eligibility and content foundation | Domain authority, content freshness, structured data implementation, topical relevance |
| Channel Metrics | Visibility within AI systems | Brand mentions in AI responses, industry ranking position, sentiment in AI recommendations, citation frequency |
| Performance Metrics | Business outcomes and ROI | Clicks from AI sources, conversion rate from AI traffic, brand awareness lift, customer acquisition cost from GEO |

Each tier builds upon the previous one—strong input metrics create the foundation for channel visibility, which then drives measurable performance outcomes. The critical insight is that excelling at input metrics alone guarantees nothing; you must track all three tiers to understand where your AI visibility strategy is succeeding or failing. Organizations that implement this framework gain the ability to diagnose problems at the source rather than simply observing poor outcomes without understanding their root cause.
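To make the diagnostic idea concrete, here is a minimal Python sketch that walks the tiers in order and reports the first one containing an off-track metric. The `Metric` class, the tier keys, and every metric name and number are hypothetical illustrations, not part of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Metric:
    """Hypothetical record: one observed value checked against one target."""
    name: str
    value: float
    target: float
    higher_is_better: bool = True

    def on_track(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.target
        return self.value <= self.target


# Order matters: a failure in an earlier tier explains weakness in later ones.
TIER_ORDER = ["input", "channel", "performance"]


def first_failing_tier(tiers: dict[str, list[Metric]]) -> str | None:
    """Return the earliest tier with an off-track metric, or None if all pass."""
    for tier in TIER_ORDER:
        if any(not m.on_track() for m in tiers.get(tier, [])):
            return tier
    return None


# Illustrative numbers only.
tiers = {
    "input": [Metric("pages_with_structured_data_pct", 0.85, 0.80)],
    "channel": [Metric("brand_mention_rate", 0.12, 0.20)],
    "performance": [Metric("ai_referral_conversions", 40, 30)],
}
print(first_failing_tier(tiers))  # channel
```

Here inputs are healthy but mentions lag, so the problem sits in the channel tier even though conversions happen to be on target.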

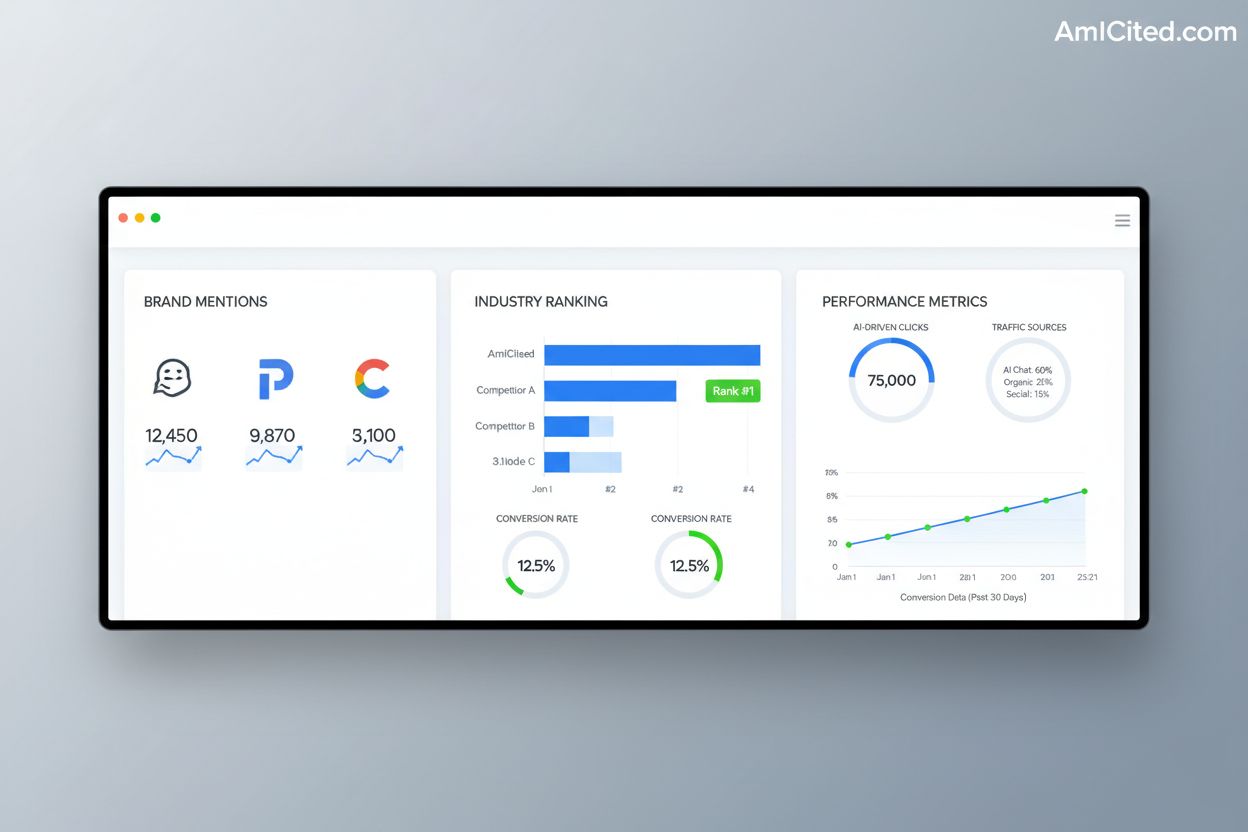

Among all GEO key performance indicators, brand mentions in AI systems are arguably the most valuable and defensible metric for long-term competitive advantage. When an AI system recommends your brand or cites your content in response to a user query, it presents your organization to the user as a trusted authority in your domain, and that exposure can compound as your brand keeps appearing in answers to related queries. The measurement methodology matters enormously: using consistent prompts and monitoring across multiple AI systems (ChatGPT, Gemini, Perplexity, Claude, and emerging alternatives) ensures you capture a representative sample rather than anecdotal observations. AmICited.com has emerged as a specialized monitoring solution that tracks brand mentions across AI systems with the consistency and scale required for OKR tracking, allowing organizations to establish baselines and measure progress toward specific mention targets. Setting OKRs around brand mentions forces your organization to think strategically about content quality, topical authority, and relevance engineering, the fundamental drivers of AI visibility that also improve traditional SEO performance.
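A brand mention rate is the share of sampled responses that name your brand. The sketch below assumes you have already collected responses by running the same fixed prompts against each AI system; the brand names, prompts, and responses are made up, and the simple word-boundary match is an illustration, not how AmICited.com or any other product works.

```python
import re
from collections import defaultdict


def mention_rate_by_system(responses, brand):
    """responses: iterable of (system, prompt, response_text) tuples.
    Returns {system: fraction of that system's responses mentioning the brand}.
    The word-boundary match assumes the brand starts and ends with a letter or digit."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    totals = defaultdict(int)
    hits = defaultdict(int)
    for system, _prompt, text in responses:
        totals[system] += 1
        if pattern.search(text):
            hits[system] += 1
    return {system: hits[system] / totals[system] for system in totals}


# Hypothetical sample collected with the same two prompts on each system.
responses = [
    ("chatgpt", "best crm tools", "Popular options include Acme and Globex."),
    ("chatgpt", "crm for startups", "Consider Globex or Initech."),
    ("perplexity", "best crm tools", "ACME CRM is often recommended."),
    ("perplexity", "crm for startups", "Acme and Initech both fit."),
]
rates = mention_rate_by_system(responses, "Acme")
print(rates)  # {'chatgpt': 0.5, 'perplexity': 1.0}
```

Keeping prompts identical across systems and over time is what makes these rates comparable from one reporting period to the next.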

Industry ranking—your brand’s position relative to competitors within AI-generated responses—provides crucial competitive context that raw mention counts cannot capture. An organization might achieve significant brand mentions in absolute terms but still lag behind competitors if those competitors are mentioned more frequently or more prominently in AI responses. Industry ranking also captures sentiment and positioning nuance; being mentioned alongside positive sentiment indicators or in contexts that emphasize your competitive advantages matters more than simple mention frequency. To set meaningful industry ranking OKRs, begin by establishing your current competitive baseline across your target AI systems and key query categories, then define realistic improvement targets (moving from fifth to third position, for example) that align with your content investment capacity. Tools like AmICited.com and specialized GEO platforms enable continuous tracking of your industry ranking position, providing the data infrastructure necessary to measure progress against quarterly and annual OKR targets. The competitive nature of industry ranking metrics creates natural accountability and forces prioritization of the content and relevance engineering initiatives most likely to improve your standing.
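One simple way to turn mentions into an industry ranking is to rank brands by how many sampled responses mention them and break ties by how early they tend to appear. The scoring rule here (mention count first, then average order of first mention) is an assumption chosen for illustration; weighting by prominence or sentiment would be reasonable alternatives. Brand names and responses are hypothetical.

```python
import re
from statistics import mean


def industry_ranking(responses, brands):
    """Order brands by mention count (descending), then by average
    order of first mention within a response (ascending)."""
    counts = {b: 0 for b in brands}
    ordinals = {b: [] for b in brands}
    for text in responses:
        found = []
        for b in brands:
            m = re.search(rf"\b{re.escape(b)}\b", text, re.IGNORECASE)
            if m:
                found.append((m.start(), b))
        # Earliest mention in this response gets ordinal 1, the next gets 2, ...
        for ordinal, (_, b) in enumerate(sorted(found), start=1):
            counts[b] += 1
            ordinals[b].append(ordinal)

    def sort_key(b):
        avg = mean(ordinals[b]) if ordinals[b] else float("inf")
        return (-counts[b], avg)

    return sorted(brands, key=sort_key)


responses = [
    "Acme and Globex lead the market.",
    "Globex is popular; Initech is cheaper.",
    "Try Globex, then Acme.",
]
ranking = industry_ranking(responses, ["Acme", "Globex", "Initech"])
print(ranking)  # ['Globex', 'Acme', 'Initech']
```

In this sample Acme holds second position; a key result such as "move from fifth to third" would track that position across quarters.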

Clicks and traffic from AI sources are a secondary but increasingly important performance metric as AI systems evolve toward agent-like behaviors that generate more direct user actions. While AI Overviews and similar features often satisfy user intent without a click, certain query types still drive meaningful traffic from AI systems, particularly when users want to explore a topic more deeply or verify information against primary sources. These clicks can be worth more than traditional organic clicks because they come from users who have already received AI-curated context about your brand or content, making them a pre-qualified audience that is more likely to convert. Set expectations accordingly: AI-driven click volumes will likely remain lower than traditional organic traffic in the near term, though they should grow as AI systems become more interactive and agent-like. Forward-thinking organizations are already establishing baselines for AI clicks and setting growth targets that account for the evolving capabilities of AI systems, positioning themselves to capture outsized value as these channels mature.
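To establish a baseline for AI-driven clicks in your own analytics, you can bucket sessions by referrer hostname. The hostname list below is an assumption to verify against your own data: AI products change domains, some apps send no referrer at all, and clicks from Google's AI Overviews generally arrive with a google.com referrer, so they cannot be separated from organic clicks this way.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames for some AI assistants; check against your own logs.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def classify_referrer(referrer_url):
    """Return the AI source name for a referrer URL, or None if it is not an AI source."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host)


# Hypothetical referrers from four sessions.
referrers = [
    "https://chatgpt.com/",
    "https://www.google.com/",
    "https://www.perplexity.ai/search?q=crm",
    "",
]
counts = Counter(filter(None, map(classify_referrer, referrers)))
print(counts)
```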

Building a comprehensive OKR framework specifically for AI visibility requires a systematic approach that goes beyond generic goal-setting and addresses the unique characteristics of GEO measurement and optimization.

The infrastructure required to monitor and track GEO OKRs effectively goes far beyond traditional SEO tools, requiring specialized platforms designed specifically for AI visibility measurement. AmICited.com provides systematic monitoring of brand mentions across multiple AI systems with the consistency needed for OKR tracking, while platforms like Profound and FireGEO offer broader GEO analytics including industry ranking, sentiment analysis, and competitive benchmarking. Effective monitoring infrastructure typically combines multiple data collection methods: direct API monitoring of AI systems where available, server log analysis to identify traffic from AI sources, and clickstream data that reveals user behavior patterns following AI interactions. Many organizations discover that off-the-shelf tools require customization or supplementation with internal tooling to capture the specific metrics most relevant to their business model and competitive context. The investment in monitoring infrastructure is non-negotiable for serious GEO programs; without reliable, consistent data collection, OKRs become aspirational rather than actionable, and teams lack the feedback signals necessary to optimize their efforts. Organizations that prioritize monitoring infrastructure early gain a significant competitive advantage through faster learning cycles and more precise optimization.
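Server log analysis shows when AI crawlers and on-demand fetchers request your pages, which is an input-tier signal rather than a count of human visits. This sketch counts hits per bot in Combined Log Format lines. The user-agent tokens are assumed from vendors' public documentation and are not exhaustive, and user-agent strings can be spoofed, so production pipelines usually also verify source IP ranges.

```python
import re
from collections import Counter

# Assumed user-agent substrings for some AI crawlers/fetchers; not exhaustive.
AI_BOT_TOKENS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot"]

# In Combined Log Format the user agent is the last double-quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_ai_bot_hits(log_lines):
    """Count requests per AI bot token found in each line's user agent."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if not match:
            continue
        user_agent = match.group(1)
        for token in AI_BOT_TOKENS:
            if token in user_agent:
                counts[token] += 1
                break
    return counts


# Hypothetical log lines.
logs = [
    '203.0.113.5 - - [10/Jan/2025:12:00:00 +0000] "GET /guide HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.1; +https://openai.com/gptbot"',
    '198.51.100.7 - - [10/Jan/2025:12:05:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)"',
    '192.0.2.9 - - [10/Jan/2025:12:06:00 +0000] "GET / HTTP/1.1" 200 1024 "https://www.google.com/" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
hits = count_ai_bot_hits(logs)
print(hits)
```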

The true power of the three-tier measurement framework emerges when you connect input metrics through channel metrics to final business outcomes, bridging the measurement chasm that has plagued AI visibility tracking. An organization might implement excellent structured data, build strong topical authority, and keep its content fresh (strong input metrics), but without monitoring brand mentions and industry ranking (channel metrics), it has no visibility into whether these investments are translating into AI system recognition. Conversely, strong channel metrics without corresponding performance metrics (clicks, conversions, revenue) suggest that AI visibility is improving but not yet driving business value, a signal to adjust strategy or invest more in conversion optimization. Attribution is harder in GEO than in traditional SEO because AI exposure often leaves no trace in analytics: a user might see your brand mentioned in an AI response, not click through, and visit your site days later through a different channel. Sophisticated organizations therefore think probabilistically about attribution, recognizing that AI mentions contribute to brand awareness and consideration even when direct attribution is impossible, and they design measurement systems that capture both direct and indirect business impact.
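When an AI touchpoint is observable (for example, an AI referral session or a survey answer naming an AI assistant), one way to give it partial rather than all-or-nothing credit is time-decay multi-touch attribution. This is a standard attribution model swapped in for illustration, not a method the framework prescribes; the channel names and the seven-day half-life are assumptions. Mentions that never produce any observable touchpoint still need indirect methods such as brand-lift surveys.

```python
from collections import defaultdict


def time_decay_credit(touchpoints, half_life_days=7.0):
    """touchpoints: list of (channel, days_before_conversion) for one conversion.
    A touchpoint's weight halves for every half_life_days before the conversion;
    weights are normalized so the conversion's total credit sums to 1."""
    if not touchpoints:
        return {}
    weights = [(channel, 0.5 ** (days / half_life_days)) for channel, days in touchpoints]
    total = sum(w for _, w in weights)
    credit = defaultdict(float)
    for channel, w in weights:
        credit[channel] += w / total
    return dict(credit)


# Hypothetical journey: an AI referral a week before converting via organic search.
credit = time_decay_credit([("ai_referral", 7), ("organic_search", 0)])
print(credit)  # roughly {'ai_referral': 0.333, 'organic_search': 0.667}
```

Summing these fractional credits across all conversions gives an AI-attributed conversion count that can feed a performance-tier key result.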

OKR review cycles adapted for AI visibility must account for the unique characteristics of AI systems, which evolve rapidly and exhibit non-linear behavior that differs from traditional search engine dynamics. Quarterly reviews provide the appropriate cadence for assessing progress toward AI visibility OKRs, allowing sufficient time for content changes and relevance engineering efforts to propagate through AI systems while remaining frequent enough to enable meaningful course correction. During quarterly reviews, examine not just whether you achieved your key results, but also analyze the underlying drivers—did brand mentions increase because of specific content pieces, topical authority improvements, or changes in how AI systems are trained and fine-tuned? The probabilistic nature of AI systems means that some variation in metrics is expected and normal; focus on directional trends and multi-quarter trajectories rather than quarter-to-quarter volatility. Use quarterly reviews as opportunities to adjust your content strategy, reallocate resources toward highest-impact initiatives, and refine your understanding of which optimization efforts most effectively drive AI visibility improvements. Organizations that treat OKR reviews as learning opportunities rather than simple pass-fail assessments build institutional knowledge that compounds over time, creating sustainable competitive advantages in AI visibility.

Translating AI visibility OKRs from strategic goals into concrete action requires a clear line of sight from high-level objectives through key results to specific initiatives and daily work. Consider an illustrative example: an organization sets the objective “Establish market leadership in AI visibility for enterprise software solutions” with key results including “Achieve 40% brand mention frequency in ChatGPT responses for top 50 industry queries” and “Rank in the top three for industry ranking across Gemini, Claude, and Perplexity.” These key results then translate into specific initiatives: conducting topical authority audits to identify content gaps, creating comprehensive guides that address the specific information needs reflected in AI training data, optimizing existing content for relevance to AI systems, and building internal monitoring dashboards that track progress weekly. The content strategy shifts from traditional keyword optimization toward relevance engineering, ensuring that your content directly addresses the questions and contexts that AI systems encounter during training and inference. Implementation requires cross-functional collaboration between content teams (who create and optimize material), SEO specialists (who ensure technical foundations support AI visibility), product teams (who may need to expose more structured data), and analytics teams (who maintain the monitoring infrastructure). Organizations that implement this framework successfully often find that AI visibility improvements correlate with improved traditional SEO performance, creating a virtuous cycle where investments in relevance and topical authority drive gains across multiple channels simultaneously.
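The key results in this example can be graded with a baseline-to-target progress score on a 0 to 1 scale, one common way to grade OKRs. The `KeyResult` class and the baseline and current values are hypothetical; only the 40% mention target and the top-three ranking target come from the example.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    """Hypothetical key result with a starting point, a goal, and a latest reading."""
    description: str
    baseline: float
    target: float
    current: float

    def progress(self) -> float:
        """Share of the baseline-to-target distance covered, clamped to [0, 1].
        Also works for lower-is-better metrics such as rank, where target < baseline."""
        span = self.target - self.baseline
        if span == 0:
            return 1.0  # target equals baseline, so nothing remains to gain
        return max(0.0, min(1.0, (self.current - self.baseline) / span))


mention_kr = KeyResult("Brand mention frequency, ChatGPT, top 50 queries", 0.22, 0.40, 0.31)
rank_kr = KeyResult("Industry ranking position across Gemini, Claude, Perplexity", 5, 3, 4)
print(round(mention_kr.progress(), 2), rank_kr.progress())  # 0.5 0.5
```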

Traditional SEO metrics like ranking positions and click-through rates measure visibility in Google's organic results, which are increasingly obscured by AI Overviews. GEO metrics focus on brand mentions, industry ranking, and sentiment within AI-generated responses across platforms like ChatGPT, Gemini, and Perplexity. GEO metrics directly measure visibility in the AI systems that now mediate user discovery.

Quarterly reviews provide the optimal cadence for AI visibility OKRs. This timeframe allows sufficient time for content changes and relevance engineering efforts to propagate through AI systems while remaining frequent enough to enable meaningful course correction. Quarterly reviews also align with standard business planning cycles.

Effective monitoring requires specialized platforms like AmICited.com for brand mention tracking, Profound for comprehensive GEO analytics, and potentially FireGEO for competitive benchmarking. Most organizations also implement server log analysis to track AI bot activity and clickstream data analysis to understand traffic patterns from AI sources.

Start by establishing your current baseline across target AI systems using consistent prompt methodology. Then set improvement targets that account for your content investment capacity and competitive landscape. A realistic first-year goal might be increasing brand mention frequency by 25-50% depending on your starting position and industry competitiveness.
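A 25-50% increase can be read as a relative lift or as percentage points, and the two lead to very different targets. This sketch assumes a relative lift and converts it into absolute mention-rate targets; the 20% baseline is hypothetical.

```python
def mention_target_range(baseline_rate, low_lift=0.25, high_lift=0.50):
    """Convert a relative lift range into absolute mention-rate targets."""
    return baseline_rate * (1 + low_lift), baseline_rate * (1 + high_lift)


low, high = mention_target_range(0.20)
print(round(low, 2), round(high, 2))  # 0.25 0.3
```

From a 20% baseline, a relative reading gives targets of 25% to 30% mention frequency, whereas a percentage-point reading would imply 45% to 70%, which is a far more ambitious goal.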

The measurement chasm is the gap between your optimization actions and measurable business outcomes, where AI systems retrieve and synthesize your content without leaving visible traces in traditional analytics. It matters because you can't optimize what you can't measure—understanding this gap is essential for building effective GEO strategies.

Use the three-tier framework: track input metrics (eligibility), channel metrics (visibility), and performance metrics (business impact). Connect brand mentions to brand awareness metrics, ranking improvements to market share goals, and AI-driven traffic to revenue or customer acquisition objectives. Adopt probabilistic thinking about attribution since AI mentions contribute to consideration even without direct clicks.

Sentiment analysis reveals not just whether your brand is mentioned in AI responses, but how it's positioned. Being mentioned with positive sentiment indicators (intuitive, comprehensive, innovative) matters more than raw mention frequency. Sentiment tracking helps you understand competitive positioning and identify which aspects of your offering resonate most with AI systems.
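As a toy illustration of sentiment tracking, the sketch below scores only the sentences that mention a brand, using small hand-written word lists seeded with the positive descriptors named above. The lexicons, brand names, and scoring rule are assumptions; real monitoring would more likely rely on a trained sentiment model or an LLM classifier.

```python
import re

# Toy lexicons for illustration; only the first three positive terms come from the text.
POSITIVE = {"intuitive", "comprehensive", "innovative", "reliable"}
NEGATIVE = {"expensive", "limited", "outdated", "buggy"}


def brand_sentiment(text, brand):
    """Net sentiment in [-1, 1] over sentences that mention the brand.
    Returns 0.0 when those sentences contain no lexicon words."""
    sentences = re.split(r"(?<=[.!?])\s+", text)
    pos = neg = 0
    for sentence in sentences:
        if re.search(rf"\b{re.escape(brand)}\b", sentence, re.IGNORECASE):
            words = set(re.findall(r"[a-z]+", sentence.lower()))
            pos += len(words & POSITIVE)
            neg += len(words & NEGATIVE)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)


# Hypothetical AI response.
text = ("Acme is intuitive and comprehensive. Globex is expensive. "
        "Acme can feel limited for large teams.")
score = brand_sentiment(text, "Acme")
print(round(score, 3))  # 0.333
```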

Recognize that AI systems produce variable outputs—the same query may yield different responses across requests. Focus on directional trends and multi-quarter trajectories rather than quarter-to-quarter volatility. Set goals based on statistical distributions of presence rather than fixed percentages, and use probabilistic modeling to understand your likely range of visibility outcomes.
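To set goals on distributions rather than fixed percentages, attach an uncertainty range to each measured mention rate. This sketch computes a Wilson score interval, a standard confidence interval for a proportion, chosen here as one reasonable option; the sample counts are hypothetical.

```python
from math import sqrt


def wilson_interval(mentions, samples, z=1.96):
    """Wilson score interval for a mention rate; z=1.96 gives roughly 95% coverage."""
    if samples <= 0:
        raise ValueError("samples must be positive")
    p = mentions / samples
    denom = 1 + z**2 / samples
    center = (p + z**2 / (2 * samples)) / denom
    half_width = z * sqrt(p * (1 - p) / samples + z**2 / (4 * samples**2)) / denom
    return center - half_width, center + half_width


# Hypothetical quarters: 30 of 100 sampled responses mention the brand, then 36 of 100.
q1 = wilson_interval(30, 100)
q2 = wilson_interval(36, 100)
print([round(x, 3) for x in q1], [round(x, 3) for x in q2])
```

The two intervals overlap heavily, so a move from 30% to 36% on 100 samples per quarter is weak evidence of real change on its own. Overlap is only a rough signal, not a formal significance test; larger samples narrow the intervals.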
