Content Chunking for AI: Optimal Passage Lengths for Citations

Learn how to structure content into optimal passage lengths (100-500 tokens) for maximum AI citations. Discover chunking strategies that increase visibility in ...

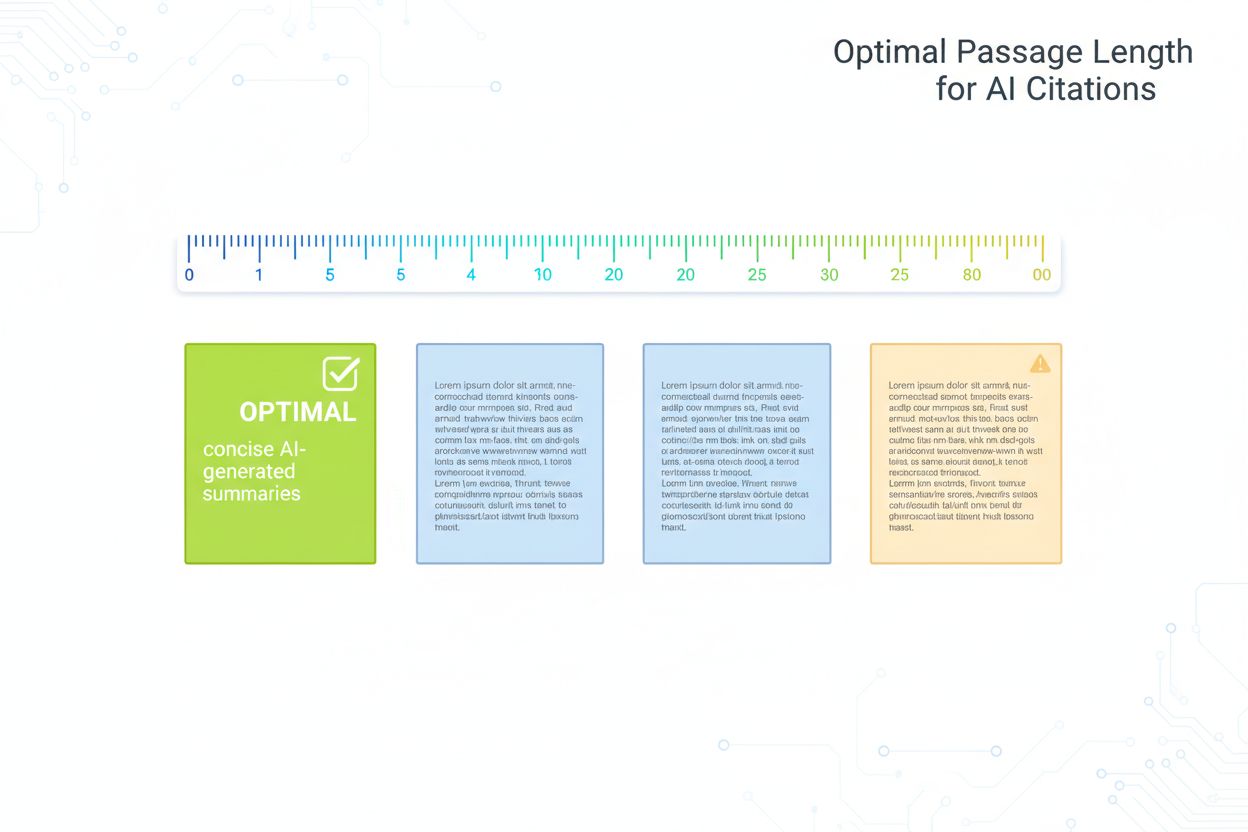

Research-backed guide to optimal passage length for AI citations. Learn why 75-150 words is ideal, how tokens affect AI retrieval, and strategies to maximize your content’s citation potential.

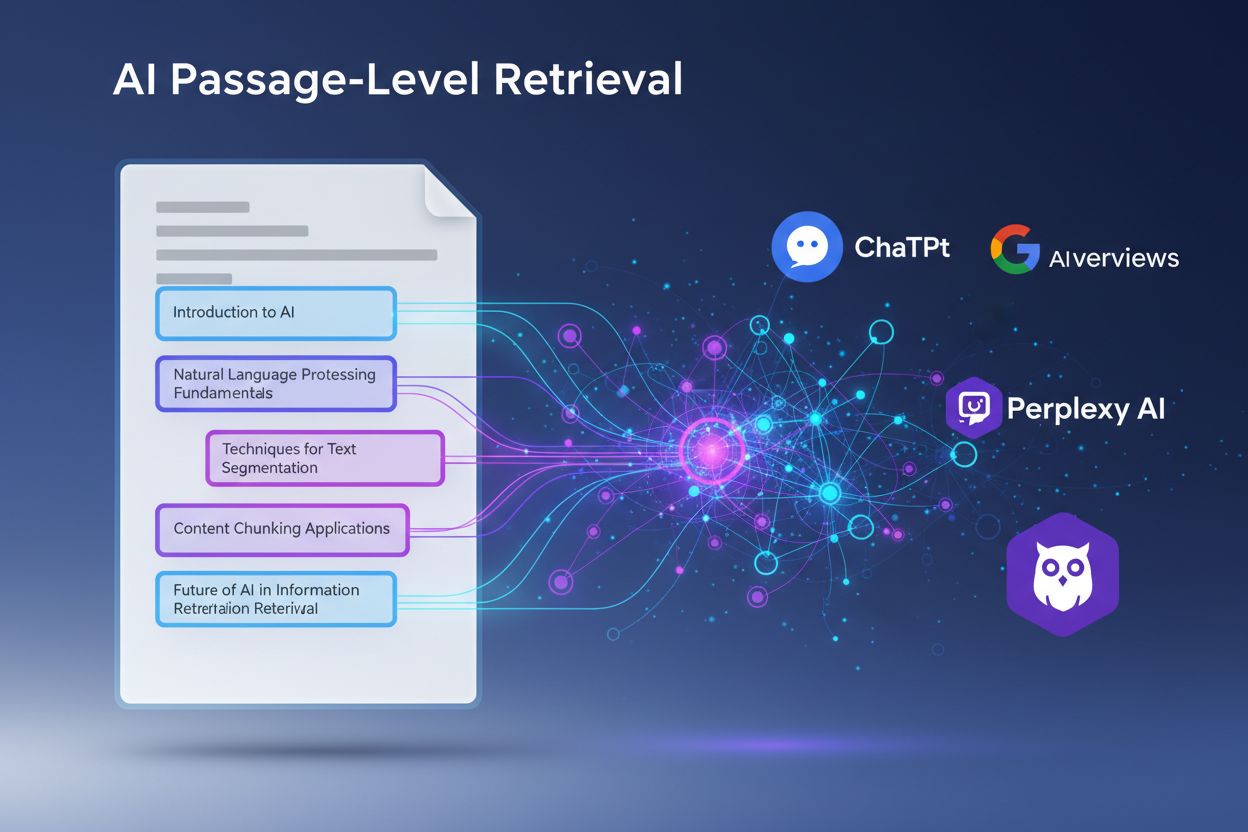

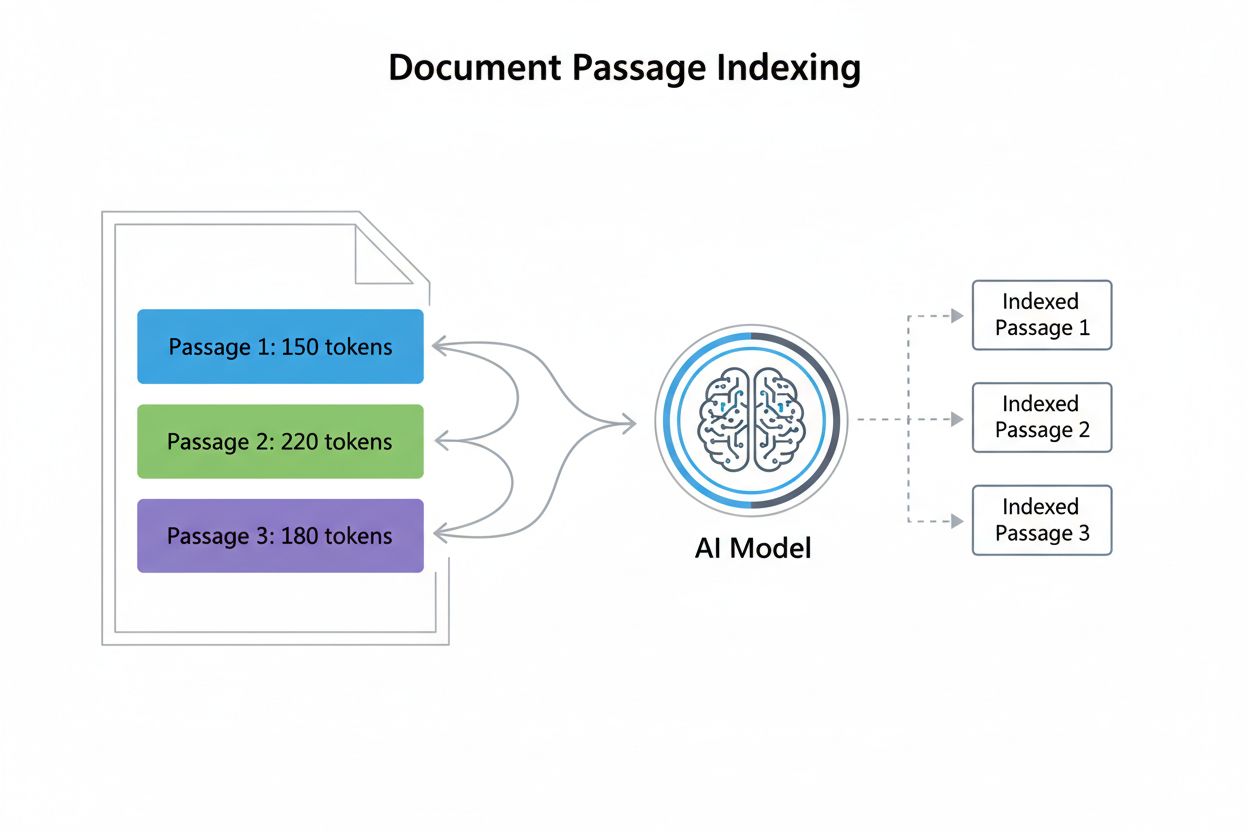

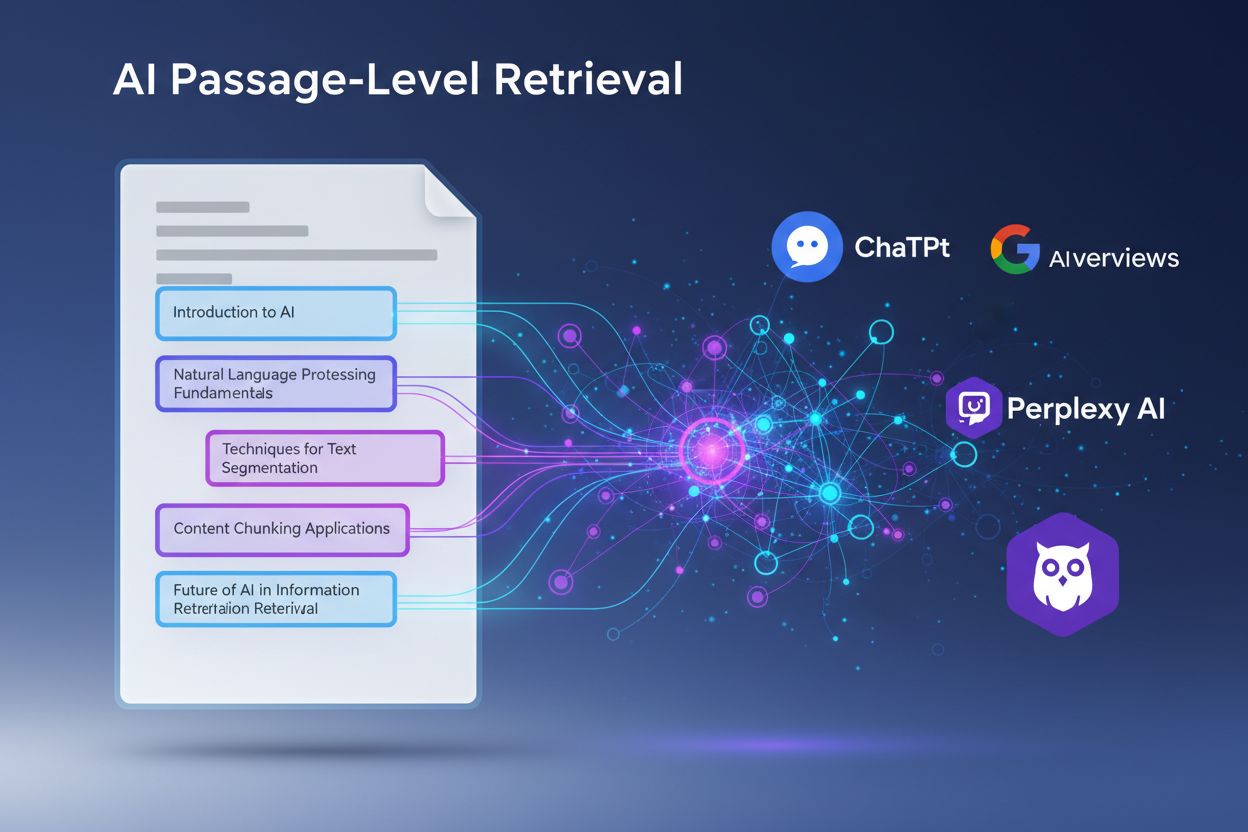

Passage length, in the context of AI citations, is the size of the content chunks that AI models extract and cite when generating responses. Rather than citing entire pages or documents, modern AI systems use passage-level indexing, which breaks content into discrete segments that can be evaluated and cited independently. This changes how content creators should structure their material. The relationship between passages and tokens matters here: 1 token is approximately 0.75 words, so a 300-word passage typically contains around 400 tokens. The conversion is important because AI models operate within context windows, which are fixed limits on how much text they can process at once. By optimizing passage length, content creators can keep their most valuable information within the range that AI systems can effectively index, retrieve, and cite, rather than leaving it buried in longer documents that may exceed those limits.

Research indicates that 53% of content cited by AI systems is under 1,000 words, a finding that challenges traditional assumptions about content depth and authority. This preference for shorter content stems from how AI models evaluate relevance and extractability: concise passages are easier to parse, contextualize, and cite accurately. The “answer nugget” (typically 40-80 words) has emerged as a critical unit of optimization, representing the smallest meaningful answer to a user query. Studies also show a near-zero correlation between word count and citation position, meaning that longer content does not automatically rank higher in AI citations. Content under 350 words tends to land in the top three citation spots more frequently, which suggests that brevity combined with relevance creates the best conditions for AI citation.

| Content Type | Optimal Length | Token Count | Use Case |
|---|---|---|---|
| Answer Nugget | 40-80 words | 50-100 tokens | Direct Q&A responses |
| Featured Snippet | 75-150 words | 100-200 tokens | Quick answers |
| Passage Chunk | 256-512 tokens | 256-512 tokens | Semantic search results |
| Topic Hub | 1,000-2,000 words | 1,300-2,600 tokens | Comprehensive coverage |
| Long-form Content | 2,000+ words | 2,600+ tokens | Deep dives, guides |

Tokens are the fundamental units that AI models use to process language, with each token typically representing a word or word fragment. Calculating token count is straightforward: divide your word count by 0.75 to estimate tokens, though exact counts vary by tokenization method. For example, a 300-word passage contains approximately 400 tokens, while a 1,000-word article contains roughly 1,333 tokens. Context windows—the maximum number of tokens a model can process in a single request—directly impact which passages get selected for citation. Most modern AI systems operate with context windows ranging from 4,000 to 128,000 tokens, but practical limitations often mean only the first 2,000-4,000 tokens receive optimal attention. When a passage exceeds these practical limits, it risks being truncated or deprioritized in the retrieval process. Understanding your target AI system’s context window allows you to structure passages that fit comfortably within processing constraints while maintaining semantic completeness.

Example Token Calculation:

- 100-word passage = ~133 tokens

- 300-word passage = ~400 tokens

- 500-word passage = ~667 tokens

- 1,000-word passage = ~1,333 tokens
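The conversions above can be reproduced with a short Python sketch. It assumes the 0.75 words-per-token rule of thumb from this guide; the function names are illustrative, and a real tokenizer will give somewhat different counts.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb from this guide; real tokenizers vary


def estimate_tokens(word_count: int) -> int:
    """Estimate a token count from a word count (words / 0.75)."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_words(token_count: int) -> int:
    """Estimate a word count from a token count (tokens * 0.75)."""
    return round(token_count * WORDS_PER_TOKEN)


if __name__ == "__main__":
    for words in (100, 300, 500, 1000):
        print(f"{words}-word passage = ~{estimate_tokens(words)} tokens")
```

For exact figures, count tokens with the tokenizer of the model you are targeting rather than relying on the ratio.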

Practical Context Window Allocation:

- System context window: 8,000 tokens

- Reserved for query + instructions: 500 tokens

- Available for passages: 7,500 tokens

- Optimal passage size: 256-512 tokens (fits 14-29 passages)
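The allocation above is simple integer arithmetic. The sketch below uses a hypothetical helper, `passages_that_fit`, to show how the 14-29 range follows from the 8,000-token window and the 500-token reservation; the figures are the example values from this guide, not limits of any particular model.

```python
def passages_that_fit(context_window: int, reserved: int, passage_tokens: int) -> int:
    """Return how many whole passages of a given size fit in the remaining budget."""
    available = context_window - reserved
    if available <= 0 or passage_tokens <= 0:
        return 0
    return available // passage_tokens


if __name__ == "__main__":
    window, reserved = 8000, 500  # example values from the allocation above
    print(passages_that_fit(window, reserved, 512))  # 14
    print(passages_that_fit(window, reserved, 256))  # 29
```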

AI models exhibit a phenomenon known as context rot, where information positioned in the middle of long passages suffers significant performance degradation. Transformer-based models tend to attend more reliably to content at the beginning (primacy effect) and end (recency effect) of an input sequence. When passages exceed 1,500 tokens, critical information buried in the middle can be overlooked or deprioritized during citation generation. This has direct implications for how content should be structured. Several mitigation strategies can counteract the problem: place your most important information at the beginning or end of each passage, use explicit headers, and repeat key points strategically.

Optimal passage structure prioritizes semantic coherence—ensuring each passage represents a complete, self-contained thought or answer. Rather than arbitrarily breaking content at word counts, passages should align with natural topic boundaries and logical divisions. Context independence is equally critical; each passage should be understandable without requiring readers to reference surrounding content. This means including necessary context within the passage itself rather than relying on cross-references or external information. When structuring content for AI retrieval, consider how passages will appear in isolation—without headers, navigation, or surrounding paragraphs. Best practices include: starting each passage with a clear topic sentence, maintaining consistent formatting and terminology, using descriptive subheadings that clarify passage purpose, and ensuring each passage answers a complete question or covers a complete concept. By treating passages as independent units rather than arbitrary text segments, content creators dramatically improve the likelihood that AI systems will extract and cite their work accurately.
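One way to respect topic boundaries is to chunk on paragraph breaks rather than at fixed word counts. The following is a minimal sketch under stated assumptions: paragraphs are separated by blank lines, token counts are estimated with the 0.75 words-per-token heuristic, and `chunk_by_paragraph` is an illustrative name, not part of any library.

```python
import re

WORDS_PER_TOKEN = 0.75  # heuristic from this guide; not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate based on whitespace-separated words."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def chunk_by_paragraph(text: str, max_tokens: int = 512) -> list[str]:
    """Group whole paragraphs into chunks of at most max_tokens (estimated).

    A single paragraph larger than max_tokens is kept whole, so no
    paragraph is ever cut mid-thought.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for para in paragraphs:
        para_tokens = estimate_tokens(para)
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and current_tokens + para_tokens > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += para_tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Keeping oversized paragraphs whole trades strict size limits for semantic completeness; a stricter pipeline could split those paragraphs at sentence boundaries instead.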

The “Snack Strategy” optimizes for short, focused content (75-350 words) designed to answer specific queries directly. This approach excels for simple, straightforward questions where users seek quick answers without extensive context. Snack content performs exceptionally well in AI citations because it matches the “answer nugget” format that AI systems naturally extract. Conversely, the “Hub Strategy” creates comprehensive, long-form content (2,000+ words) that explores complex topics in depth. Hub content serves different purposes: establishing topical authority, capturing multiple related queries, and providing context for more nuanced questions. The key insight is that these strategies aren’t mutually exclusive—the most effective approach combines both. Create focused snack content for specific questions and quick answers, then develop hub content that links to and expands upon these snacks. This hybrid approach allows you to capture both direct AI citations (through snacks) and comprehensive topical authority (through hubs). When deciding which strategy to employ, consider your query intent: simple, factual questions favor snacks, while complex, exploratory topics benefit from hubs. The winning strategy balances both approaches based on your audience’s actual information needs.

Answer nuggets are concise, self-contained summaries typically 40-80 words that directly respond to specific questions. These nuggets represent the optimal format for AI citation because they provide complete answers without excess information. Placement strategy is critical: position your answer nugget immediately after your main heading or topic introduction, before diving into supporting details and explanations. This front-loading ensures AI systems encounter the answer first, increasing citation likelihood. Schema markup plays a vital supporting role in answer nugget optimization—using structured data formats like JSON-LD tells AI systems exactly where your answer exists. Here’s an example of a well-structured answer nugget:

Question: "How long should web content be for AI citations?"

Answer Nugget: "Research shows 53% of AI-cited content is under 1,000 words, with optimal passages ranging from 75-150 words for direct answers and 256-512 tokens for semantic chunks. Content under 350 words tends to rank in top citation positions, suggesting brevity combined with relevance maximizes AI citation likelihood."

This nugget is complete, specific, and immediately useful—exactly what AI systems seek when generating citations.
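A quick length check can confirm that a draft nugget stays inside the 40-80 word range. The sketch below is illustrative only: `is_answer_nugget` is a hypothetical helper, and it counts whitespace-separated words, so hyphenated ranges such as "75-150" count as one word.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def is_answer_nugget(text: str, min_words: int = 40, max_words: int = 80) -> bool:
    """Check whether the text falls in the 40-80 word answer-nugget range."""
    return min_words <= word_count(text) <= max_words


if __name__ == "__main__":
    nugget = (
        "Research shows 53% of AI-cited content is under 1,000 words, with optimal "
        "passages ranging from 75-150 words for direct answers and 256-512 tokens "
        "for semantic chunks. Content under 350 words tends to rank in top citation "
        "positions, suggesting brevity combined with relevance maximizes AI citation "
        "likelihood."
    )
    print(word_count(nugget), is_answer_nugget(nugget))
```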

JSON-LD schema markup provides explicit instructions to AI systems about your content’s structure and meaning, dramatically improving citation likelihood. The most impactful schema types for AI optimization include FAQ schema for question-and-answer content and HowTo schema for procedural or instructional content. FAQ schema is particularly powerful because it directly mirrors how AI systems process information—as discrete question-answer pairs. Research demonstrates that pages implementing appropriate schema markup are 3x more likely to be cited by AI systems compared to unmarked content. This isn’t coincidental; schema markup reduces ambiguity about what constitutes an answer, making extraction and citation more confident and accurate.

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "@id": "https://example.com/faq#q1",
      "name": "What is optimal passage length for AI citations?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Research shows 53% of AI-cited content is under 1,000 words, with optimal passages ranging from 75-150 words for direct answers and 256-512 tokens for semantic chunks."
      }
    }
  ]
}
```

Implementing schema markup transforms your content from unstructured text into machine-readable information, signaling to AI systems exactly where answers exist and how they’re organized.
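If a page holds many question-answer pairs, the markup can be generated rather than written by hand. This is a minimal sketch using only Python's standard `json` module; `build_faq_jsonld` is a hypothetical helper, and it emits just the `FAQPage`, `Question`, and `Answer` properties used in the FAQ example in this guide.

```python
import json


def build_faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as a schema.org FAQPage JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(build_faq_jsonld([
        ("What is optimal passage length for AI citations?",
         "Optimal passages range from 75-150 words for direct answers."),
    ]))
```

The resulting string is typically embedded in the page inside a `<script type="application/ld+json">` element.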

Tracking passage performance requires monitoring specific metrics that indicate AI citation success. Citation share measures how frequently your content appears in AI-generated responses, while citation position tracks whether your passages appear first, second, or later in citation lists. Tools like Semrush, Ahrefs, and specialized AI monitoring platforms now track AI Overview appearances and citations, providing visibility into performance. Implement A/B testing by creating multiple versions of passages with different lengths, structures, or schema implementations, then monitor which versions generate more citations. Citation share and citation position are the key metrics to track for each version.

Regular monitoring reveals which passage structures, lengths, and formats resonate most with AI systems, enabling continuous optimization.
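Citation share and citation position can be computed from any log of AI responses. The sketch below assumes a hypothetical data format in which each response is an ordered list of cited domains; real monitoring tools export their own formats, so treat the function and its inputs as illustrative.

```python
from typing import Optional


def citation_metrics(
    responses: list[list[str]], domain: str
) -> tuple[float, Optional[float]]:
    """Return (citation share, mean citation position) for a domain.

    responses: one ordered list of cited domains per AI response (assumed format).
    Share is the fraction of responses citing the domain; position is 1-based
    and averaged over the responses where the domain appears.
    """
    positions = [cited.index(domain) + 1 for cited in responses if domain in cited]
    share = len(positions) / len(responses) if responses else 0.0
    mean_position = sum(positions) / len(positions) if positions else None
    return share, mean_position


if __name__ == "__main__":
    sample = [
        ["a.com", "example.com"],
        ["example.com"],
        ["b.com"],
        ["c.com", "d.com", "example.com"],
    ]
    print(citation_metrics(sample, "example.com"))  # (0.75, 2.0)
```

Running the same calculation separately for each A/B variant of a passage makes the comparison between versions direct.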

Many content creators inadvertently sabotage their AI citation potential through preventable structural mistakes. Burying important information deep within passages forces AI systems to search through irrelevant context before finding answers, so place your most critical information first. Excessive cross-referencing creates context dependency; passages that constantly reference other sections become difficult for AI systems to extract and cite independently. Vague, non-specific content lacks the precision AI systems need for confident citation, so use concrete details, specific numbers, and clear statements. Poor section boundaries create passages that span multiple topics or incomplete thoughts; ensure each passage represents a coherent unit. Ignoring technical structure means missing opportunities for schema markup, proper heading hierarchy, and semantic clarity.

Avoiding these mistakes, combined with implementing the optimization strategies outlined above, positions your content for maximum AI citation performance.

What is the optimal passage length for AI citations?

Research shows 75-150 words (100-200 tokens) is optimal for most content types. This length provides sufficient context for AI systems to understand and cite your content while remaining concise enough for direct inclusion in AI-generated answers. Content under 350 words tends to land in top citation positions.

Does longer content get cited more often by AI systems?

No. Research demonstrates that 53% of cited pages are under 1,000 words, and there's virtually no correlation between word count and citation position. Quality, relevance, and structure matter far more than length. Short, focused content often outperforms lengthy articles in AI citations.

How do tokens relate to words?

One token approximately equals 0.75 words in English text, so 1,000 tokens equals roughly 750 words. The exact ratio varies by language and content type; code uses more tokens per word because of special characters and syntax. Understanding this conversion helps you optimize passage length for AI systems.

How should I structure long content for AI citations?

Break long content into self-contained sections of 400-600 words each. Each section should have a clear topic sentence and include a 40-80 word 'answer nugget' that directly answers a specific question. Use schema markup to help AI systems identify and cite these nuggets. This approach captures both direct citations and topical authority.

What is context rot, and how can I mitigate it?

AI models tend to focus on information at the beginning and end of long contexts, struggling with content in the middle. This 'context rot' means critical information buried in passages over 1,500 tokens may be overlooked. Mitigate this by placing critical information at the start or end, using explicit headers, and repeating key points strategically.

How can I measure AI citation performance?

Track citation share (percentage of AI Overviews linking to your domain) using tools like BrightEdge, Semrush, or Authoritas. Monitor which specific passages appear in AI-generated answers and adjust your content structure based on performance data. AmICited also provides specialized monitoring for AI citations across multiple platforms.

Does schema markup improve AI citations?

Yes, significantly. Pages with comprehensive JSON-LD schema markup (FAQ, HowTo, ImageObject) are 3x more likely to appear in AI Overviews. Schema helps AI systems understand and extract your content more effectively, making it easier for them to cite your passages accurately and confidently.

Should I write short or long content?

Use both. Write short, focused content (75-350 words) for simple, direct queries using the 'Snack Strategy.' Write longer, comprehensive content (2,000-5,000 words) for complex topics using the 'Hub Strategy.' Within long content, structure it as multiple short, self-contained passages to capture both direct citations and topical authority.

