Content Restructuring for AI: Before and After Examples

Learn how to restructure your content for AI systems with practical before and after examples. Discover techniques to improve AI citations and visibility across...

Learn how AI systems bypass paywalls and reconstruct premium content. Discover the impact on publisher traffic and effective strategies to protect your visibility.

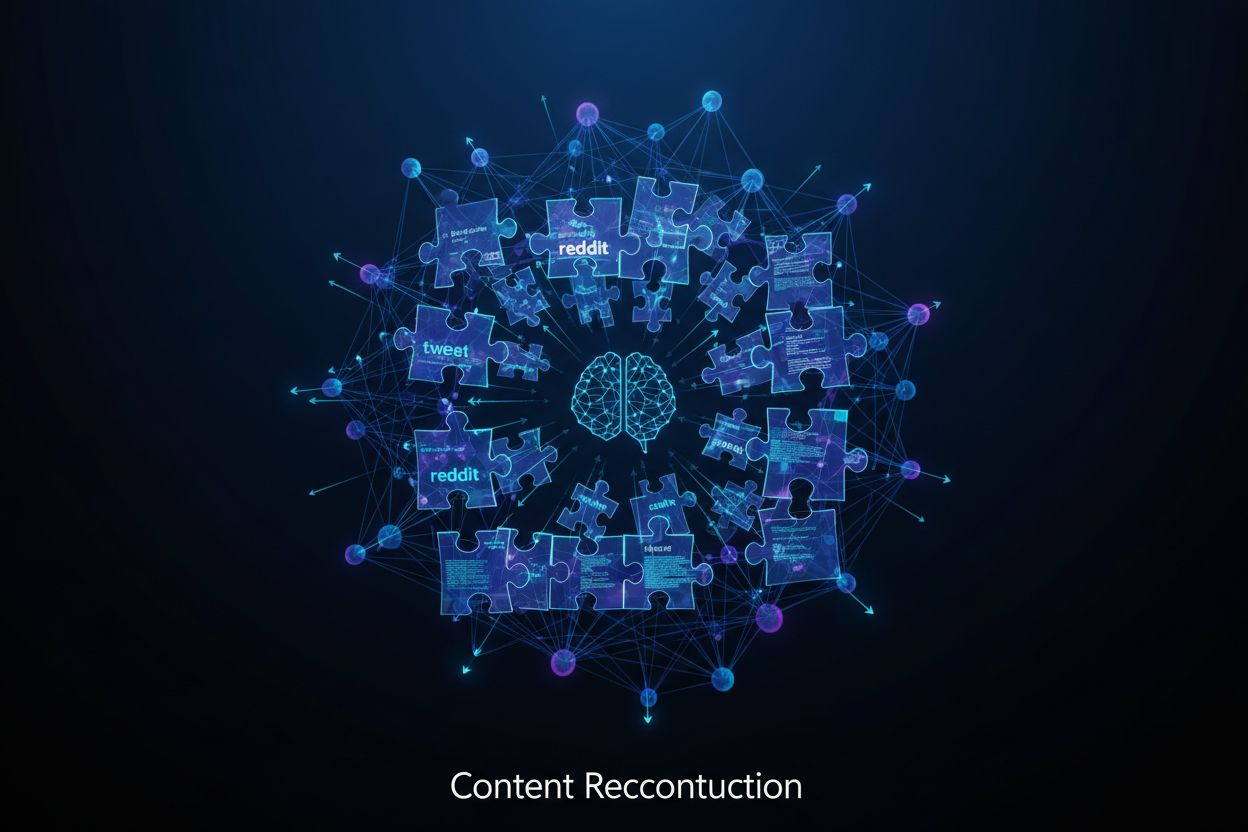

Your paywalled content isn’t being stolen through direct access—it’s being reconstructed from fragments scattered across the internet. When you publish an article, pieces of it inevitably appear in tweets, LinkedIn posts, Reddit discussions, and cached summaries. AI systems act as digital detectives, gathering these fragments and assembling them into a coherent summary that captures 70-80% of your article’s value without ever touching your servers. The chatbot doesn’t need to scrape your site; it triangulates between public statements, social media reactions, and competing coverage to rebuild your exclusive reporting. This fragment-based reconstruction is particularly effective for breaking news, where multiple sources cover similar ground, giving AI multiple angles to synthesize. Traditional bot blocking and paywall defenses are powerless against this approach because the AI never directly accesses your content—it simply stitches together publicly available pieces. Understanding this mechanism is crucial because it reveals why conventional security measures fail: you’re not fighting a scraper; you’re fighting a system that learns from the entire internet.

The numbers tell a sobering story about how AI is reshaping publisher visibility. Publishers worldwide are experiencing unprecedented traffic declines, with the most visible impact coming from AI-powered search and summarization tools. The following table illustrates the scale of this challenge:

| Metric | Impact | Time Period |
|---|---|---|
| Top 500 Publishers Traffic Loss | 20-27% year-over-year decline | February 2024 onwards |
| Monthly Visits Lost Across Industry | 64 million visits | Average monthly impact |
| New York Times Referral Traffic Drop | 27.4% decline | Q2 2025 |
| Google AI Overviews Traffic Reduction | Up to 70% traffic loss | 2025 |
| AI Chatbot Monthly Referrals | 25 million visits | 2025 (up from 1M in early 2024) |

What makes these numbers particularly concerning is the zero-click search phenomenon—users get their answers directly from AI summaries without ever visiting the original source. While AI chatbots are beginning to send more referral traffic (25 million monthly in 2025), this pales in comparison to the 64 million monthly visits publishers are losing overall. CNBC has reported losing 10-20% of its search-driven traffic, while election-related content saw even steeper declines. The fundamental problem: publishers are losing significantly more traffic than the referrals AI currently generates, creating a net negative impact on visibility and revenue.

Your readers aren’t intentionally trying to circumvent your paywall—they’ve simply discovered that AI makes it effortless. Understanding these four methods reveals why paywall protection has become so challenging:

Direct Summary Requests: Users ask AI tools like ChatGPT or Claude to summarize specific paywalled articles by title or topic. The AI pulls together responses using cached previews, public comments, related coverage, and previously quoted excerpts, delivering a comprehensive summary without the user ever visiting your site.

Social Media Fragment Mining: Platforms like X (Twitter) and Reddit are filled with screenshots, quotes, and paraphrased content from paywalled articles. AI tools trained on these platforms scan for scattered pieces, collecting them to rebuild the original article’s message with surprising accuracy.

Quick Takeaway Requests: Instead of asking for full summaries, users request bullet-point takeaways. Prompts like “Give me the key points from the latest WSJ article on inflation” lead AI to generate concise, accurate summaries—especially when the article sparked widespread discussion online.

Academic and Technical Content Reconstruction: Professionals frequently ask AI to “recreate the argument” of locked journal articles or technical papers. AI pulls from abstracts, citations, previous papers, and commentary to assemble a convincing version of the original content.

Most users don’t perceive this as paywall circumvention or content theft. They see it as a smarter way to stay informed—ask once, get what they need instantly, and move on without considering the impact on subscriptions, traffic, or original publishers.

Publishers have deployed increasingly sophisticated defenses, but each has significant limitations. Bot blocking has evolved considerably: Cloudflare has made bot blocking the default on new domains and is developing a pay-per-crawl model that AI firms can opt into. TollBit reported blocking 26 million scraping attempts in March 2025 alone, while Cloudflare observed bot traffic jump from 3% to 13% in a single quarter; the first figure shows blocking working at scale, the second how quickly the problem is growing. AI honeypots represent a clever defensive approach, luring bots into dead-end decoy pages to help publishers identify and block specific crawlers. Some publishers are experimenting with content watermarking and unique phrasing designed to make unauthorized reproduction more detectable, while others implement tracking systems to monitor where their content appears in AI responses. However, these measures only address direct scraping, not fragment-based reconstruction. The fundamental challenge remains: how do you protect content that must be discoverable enough to attract readers but hidden enough that AI cannot assemble it from public fragments? No single technical solution provides complete protection, because the AI never needs direct access to your servers.
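The honeypot idea can be sketched in a few lines. This minimal Python example assumes a hypothetical decoy path, `/trap/`, that robots.txt disallows and that is linked only where human readers are unlikely to follow it. Compliant crawlers never request a disallowed URL, so any client that fetches it is a candidate for blocking. The crawler names are published user-agent tokens for some AI crawlers, but the list is illustrative rather than complete, and `build_robots_txt` and `HoneypotTracker` are hypothetical helpers, not part of Cloudflare, TollBit, or any other product mentioned above.

```python
from collections import Counter

# Illustrative, incomplete list of AI crawler user-agent tokens (assumption:
# check each vendor's documentation for current names before relying on it).
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "CCBot", "PerplexityBot"]

# Hypothetical decoy path; link to it only where humans won't click.
TRAP_PATH = "/trap/"


def build_robots_txt(crawlers, trap_path):
    """Return robots.txt text that disallows the listed crawlers everywhere
    and disallows the decoy path for every other user agent."""
    lines = []
    for agent in crawlers:
        lines += [f"User-agent: {agent}", "Disallow: /", ""]
    lines += ["User-agent: *", f"Disallow: {trap_path}", ""]
    return "\n".join(lines)


class HoneypotTracker:
    """Counts decoy-path requests per IP and flags IPs that reach a threshold."""

    def __init__(self, trap_path, threshold=1):
        self.trap_path = trap_path
        self.threshold = threshold  # trap hits needed before an IP is flagged
        self.hits = Counter()

    def record(self, ip, path):
        # Only requests under the decoy path count toward blocking.
        if path.startswith(self.trap_path):
            self.hits[ip] += 1

    def should_block(self, ip):
        return self.hits[ip] >= self.threshold


if __name__ == "__main__":
    print(build_robots_txt(AI_CRAWLERS, TRAP_PATH))
    tracker = HoneypotTracker(TRAP_PATH)
    tracker.record("203.0.113.7", "/trap/page-1")  # ignored robots.txt
    tracker.record("198.51.100.2", "/news/story")  # ordinary request
    print(tracker.should_block("203.0.113.7"))  # True
    print(tracker.should_block("198.51.100.2"))  # False
```

robots.txt is advisory, which is what makes the trap informative: well-behaved crawlers skip the decoy, so hits come mostly from clients that ignore the rules. Raising `threshold` above 1 tolerates the occasional human who stumbles onto the link; a real deployment would also persist the counts and feed flagged IPs into a firewall or CDN rule.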

The most successful publishers are abandoning traffic-dependent models entirely, instead building direct relationships with readers who value their brand specifically. Dotdash Meredith provides the clearest success story for this approach, achieving revenue growth in Q1 2024—a rare feat in today’s publishing landscape. CEO Neil Vogel revealed that Google Search now accounts for just over one-third of their traffic, down from around 60% in 2021. This dramatic shift toward direct audience cultivation has insulated them from AI-driven traffic losses that devastated competitors. The pivot requires fundamental changes in how you think about content and audience: instead of optimizing for search keywords and viral potential, successful publishers focus on building trust, expertise, and community around their brand. They create content that readers specifically seek out rather than stumble across. Publishers are adjusting their subscription strategies to reflect AI’s growing influence, introducing new value propositions that AI cannot replicate—exclusive interviews, behind-the-scenes access, community-driven features, and personalized content experiences. The Athletic and The Information exemplify this approach, building loyal subscriber bases through unique content and community rather than search visibility. Brand building is replacing SEO-heavy strategies as the core growth engine, with publishers investing in direct email, membership programs, and exclusive communities that create switching costs and deeper engagement than any search-driven traffic could provide.

Understanding different paywall implementations is essential because each has distinct implications for search visibility and AI vulnerability. Publishers typically employ four paywall models: hard paywalls (all content locked), freemium (some content free, some locked), metered paywalls (a limited number of free articles before the paywall appears), and dynamic paywalls (personalized metering based on user behavior). Google's guidance indicates that metered and lead-in paywalls are most compatible with search visibility, because Googlebot crawls without cookies and therefore sees the full content on its first visit. Publishers should mark paywalled content with the isAccessibleForFree structured data property (set to false), together with a CSS selector identifying the page elements that sit behind the paywall; this markup helps Google distinguish a legitimate paywall from cloaking. User-agent detection offers stronger protection by serving different HTML to regular users than to verified Googlebot, but it requires careful implementation to avoid cloaking penalties. JavaScript paywalls are easily circumvented by disabling JavaScript, while content-locked paywalls prevent Google from indexing the full content, which can lower rankings because Google has fewer quality signals to evaluate. Another SEO consideration is the “return to SERP” engagement signal: when users bounce back to search results after hitting a paywall, Google may interpret this as a poor user experience, gradually diminishing your site's visibility. Publishers can mitigate this by giving searchers some free access, for example through the flexible sampling approach Google introduced in 2017 to replace its First Click Free policy, or through smart metering that doesn't penalize search traffic.
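A User-Agent header that says "Googlebot" is trivially spoofed, so serving different HTML to "verified Googlebot" in practice means checking DNS. Google documents a two-step check: reverse-resolve the requesting IP, confirm the hostname belongs to googlebot.com or google.com, then forward-resolve that hostname and confirm it maps back to the same IP. The sketch below implements that check with Python's standard `socket` module. It is an illustration under stated assumptions: the domain list should be checked against Google's current documentation (some Google fetchers use other domains), the resolver functions are injectable so the logic can be tested offline, and it handles IPv4 only because `gethostbyname_ex` does not support IPv6.

```python
import socket

# Domains Google documents for its common crawlers (assumption: confirm
# against Google's current crawler-verification docs before relying on it).
GOOGLE_CRAWLER_DOMAINS = ("googlebot.com", "google.com")


def hostname_is_google(hostname):
    """True if hostname is a Google crawler domain or a subdomain of one."""
    host = hostname.rstrip(".").lower()
    return any(host == d or host.endswith("." + d) for d in GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip, reverse=socket.gethostbyaddr,
                          forward=socket.gethostbyname_ex):
    """Reverse-resolve ip, check the domain, then forward-resolve the
    hostname and confirm the original IPv4 address is among the results."""
    try:
        hostname = reverse(ip)[0]
        if not hostname_is_google(hostname):
            return False
        return ip in forward(hostname)[2]
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False


if __name__ == "__main__":
    # Offline demo with stand-in resolvers; production code uses the defaults.
    def fake_reverse(ip):
        return ("crawl-66-249-66-1.googlebot.com", [], [ip])

    def fake_forward(host):
        return (host, [], ["66.249.66.1"])

    print(is_verified_googlebot("66.249.66.1", fake_reverse, fake_forward))  # True
```

The forward lookup matters: anyone can publish a PTR record claiming a googlebot.com name for their own IP, but only Google controls what googlebot.com hostnames resolve to. Results are worth caching, since doing two DNS lookups on every request would add noticeable latency.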

The legal battle over AI and paywalled content is still unfolding, with significant implications for publishers. The New York Times versus OpenAI case represents the most high-profile legal challenge, focusing on whether AI companies can use publisher content for training without compensation or permission. While the outcome will set important precedent, the case highlights a fundamental problem: copyright law wasn’t designed for content reconstruction from fragments. Some publishers are pursuing licensing agreements as a viable path forward—deals with the Associated Press, Future Publishing, and others demonstrate that AI companies are willing to negotiate compensation. However, these licensing arrangements currently cover only a small fraction of publishers, leaving most without formal agreements or revenue sharing. Global regulatory differences create additional complexity, as protections vary significantly between jurisdictions, and AI systems don’t respect borders. The core legal challenge is that if AI tools are reconstructing your content from publicly available fragments rather than directly copying it, traditional copyright enforcement becomes nearly impossible. Publishers cannot wait for courts to catch up to AI’s rapid evolution—legal battles take years while AI capabilities advance in months. The most pragmatic approach is implementing defensive strategies that work today, even as the legal framework continues to develop.

The publishing industry is approaching a critical inflection point, with three distinct scenarios emerging. In the consolidation scenario, only large publishers with strong brands, multiple revenue sources, and legal resources survive. Smaller publishers lack the scale to negotiate with AI companies or the resources to implement sophisticated defenses, potentially disappearing entirely. The coexistence scenario mirrors the music industry’s evolution with streaming—AI companies and publishers work out fair licensing deals, allowing AI to function while publishers receive proper compensation. This path requires industry coordination and regulatory pressure but offers a sustainable middle ground. The disruption scenario represents the most radical outcome: the traditional publishing model collapses because AI doesn’t just summarize content, it creates it. In this future, journalists might shift into roles like prompt designers or AI editors, while subscriptions and ad models fade. Each scenario has different implications for publisher strategy—consolidation favors brand investment and diversification, coexistence requires licensing negotiations and industry standards, while disruption demands fundamental business model innovation. Publishers should prepare for multiple futures simultaneously, building direct audiences while exploring licensing opportunities and developing AI-native content strategies.

Publishers need visibility into how AI systems are using their content, and several practical approaches can reveal reconstruction patterns. Direct testing involves prompting AI tools with specific article titles or topics to see whether they generate detailed summaries; if the AI provides accurate information about your paywalled content, it is likely reconstructing it from fragments. Look for telltale signs of reconstruction: summaries that capture the main arguments but lack specific quotes, exact data points, or recent information available only in the full article. AI reconstructions often feel slightly vague or generalized compared with direct quotes. Social media tracking reveals where your content fragments appear: monitor mentions of your articles on X, Reddit, and LinkedIn to identify the sources AI draws on for reconstruction. Monitoring AI forums such as Reddit's r/ChatGPT or specialized AI communities shows how users are requesting summaries of your content. Some publishers use lightweight monitoring tools to run ongoing checks, tracking where their content appears in AI responses and identifying patterns. This is where AmICited.com becomes invaluable: it provides comprehensive monitoring across GPTs, Perplexity, Google AI Overviews, and other AI systems, automatically tracking how your brand and content are referenced and reconstructed. Rather than manually testing each AI tool, publishers get real-time insight into their AI visibility, seeing exactly how their paywalled content is being used, summarized, and presented to users across the AI landscape. This intelligence enables publishers to make informed decisions about defensive strategies, licensing negotiations, and content strategy adjustments.
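The "captures the argument but lacks specific quotes and exact data points" test can be made a little more systematic. The sketch below is a heuristic, not an established detection method: it compares an AI answer with your article using word n-gram ("shingle") overlap and the share of the article's numbers that the answer repeats. High verbatim overlap points toward copying from the full text; an answer that covers the topic with little verbatim overlap and few exact figures fits the reconstruction pattern. The function names and both thresholds are hypothetical and would need tuning against answers you have reviewed by hand.

```python
import re


def shingles(text, n=5):
    """Return the set of lowercase n-word sequences ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def numbers(text):
    """Return the set of numeric tokens in text, e.g. '27.4' or '2025'."""
    return set(re.findall(r"\d+(?:\.\d+)?", text))


def compare(article, ai_answer, n=5):
    """Return (verbatim_share, number_share).

    verbatim_share: fraction of the answer's shingles found verbatim in the article.
    number_share: fraction of the article's numbers that the answer repeats.
    """
    answer_sh = shingles(ai_answer, n)
    verbatim = len(answer_sh & shingles(article, n)) / len(answer_sh) if answer_sh else 0.0
    article_nums = numbers(article)
    number_share = (
        len(article_nums & numbers(ai_answer)) / len(article_nums) if article_nums else 0.0
    )
    return verbatim, number_share


def classify(verbatim, number_share, copy_threshold=0.3, detail_threshold=0.5):
    """Rough label; both thresholds are hypothetical and need tuning."""
    if verbatim >= copy_threshold:
        return "likely copied from the full text"
    if number_share < detail_threshold:
        return "paraphrased with few exact figures: consistent with reconstruction"
    return "paraphrased but detailed: review manually"


if __name__ == "__main__":
    article = ("The company reported revenue of 27.4 million in 2025 "
               "after a long decline in search traffic.")
    answer = "Revenue fell as search traffic declined."
    print(classify(*compare(article, answer)))
```

Five-word shingles are a common middle ground: shorter sequences match ordinary phrases by chance, while longer ones miss lightly edited copies. The scores only flag answers for a human to read; they cannot say where the AI actually got its information.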

Direct testing involves prompting AI tools with specific article titles to see if they generate detailed summaries. Look for telltale signs: summaries that capture main arguments but lack specific quotes, exact data points, or recent information only available in the full article. Social media tracking and AI forum monitoring also reveal where your content fragments appear and how users request summaries.

Scraping involves AI systems directly accessing and extracting your content, which appears in server logs and can be blocked with technical measures. Reconstruction assembles your content from publicly available fragments like social media posts, cached snippets, and related coverage. The AI never touches your servers, making it much harder to detect and prevent.
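Because direct scraping leaves a trail, a quick scan of web-server access logs shows how much traffic comes from self-identified AI crawlers. This minimal sketch assumes logs in the Apache/Nginx "combined" format and matches an illustrative, incomplete list of AI crawler user-agent tokens. User agents can be spoofed, and crawlers that don't identify themselves are not counted, so treat the results as indicative rather than proof. Reconstruction, by contrast, produces no log entries at all.

```python
import re
from collections import Counter

# Illustrative, incomplete list of AI crawler user-agent tokens (assumption:
# vendors rename and add crawlers, so check their current documentation).
AI_AGENT_TOKENS = ("GPTBot", "ChatGPT-User", "ClaudeBot", "CCBot", "PerplexityBot")

# Combined Log Format:
# ip ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<request>[^"]*)" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def count_ai_hits(lines):
    """Count requests per AI crawler token; lines that don't parse are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue
        agent = match.group("agent").lower()
        for token in AI_AGENT_TOKENS:
            if token.lower() in agent:
                counts[token] += 1
                break  # attribute each request to at most one crawler
    return counts


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [10/Mar/2025:13:55:36 +0000] "GET /news/story HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
        '198.51.100.9 - - [10/Mar/2025:13:56:01 +0000] "GET / HTTP/1.1" 200 812 "-" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
        '192.0.2.44 - - [10/Mar/2025:14:02:10 +0000] "GET /robots.txt HTTP/1.1" 200 120 "-" '
        '"Mozilla/5.0 (compatible; ClaudeBot/1.0; +claudebot@anthropic.com)"',
    ]
    print(dict(count_ai_hits(sample)))
```

Pointing `count_ai_hits` at `open("access.log")` works because file objects iterate line by line; for rotated or compressed logs, `gzip.open(path, "rt")` yields lines the same way.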

No single technical solution provides complete protection because AI reconstruction doesn't require direct access to your content. Traditional defenses like bot blocking help with direct scraping but offer limited protection against fragment-based reconstruction. The most effective approach combines technical measures with content strategy changes and business model adaptations.

Google research indicates that metered and lead-in paywalls are most compatible with search visibility, as Googlebot crawls without cookies and sees all content on first visit. Hard paywalls and content-locked paywalls prevent Google from indexing full content, resulting in lower rankings. Publishers should implement the isAccessibleForFree structured data attribute to inform Google about paywalled content.
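For reference, the markup Google documents for paywalled content is a JSON-LD block that sets `isAccessibleForFree` to false on the article and uses `hasPart` with a `WebPageElement` whose `cssSelector` points at the paywalled section. The Python helper below is a minimal sketch that emits such a block; the `.paywall` class name and the `paywall_jsonld` function are hypothetical, and a real page would also carry the usual article properties (dates, author, images).

```python
import json


def paywall_jsonld(headline, paywall_selector=".paywall"):
    """Return a <script> tag with NewsArticle JSON-LD that marks the
    element(s) matched by paywall_selector as not freely accessible."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "isAccessibleForFree": False,  # serialized as JSON false
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            "cssSelector": paywall_selector,
        },
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    print(paywall_jsonld("Example headline"))
```

The selector has to match the class on the element that actually wraps the paywalled text in your HTML, for example a `<div class="paywall">`; if the two drift apart, the markup no longer describes the page.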

Search engines enable circumvention through AI Overviews and zero-click results that answer user queries without driving traffic to source sites. However, they remain important traffic sources for many publishers. The challenge lies in maintaining search visibility while protecting content value. Search engines are developing licensing programs and exploring ways to better compensate content creators.

Licensing agreements show that AI companies are willing to negotiate compensation. Deals with the Associated Press and Future Publishing show one possible path forward. However, these licensing arrangements currently cover only a small fraction of publishers. Global regulatory differences create additional complexity, as protections vary significantly between jurisdictions.

AmICited provides comprehensive monitoring across GPTs, Perplexity, Google AI Overviews, and other AI systems, automatically tracking how your brand and content are referenced and reconstructed. Rather than manually testing each AI tool, publishers get real-time insight into their AI visibility, seeing exactly how their paywalled content is being used and presented to users.

Implement a multi-layered approach: combine technical defenses (bot blocking, pay-per-crawl), build direct audience relationships, create exclusive content AI cannot replicate, monitor where your content appears in AI responses, and explore licensing opportunities. Focus on brand building and direct subscriptions rather than relying solely on search traffic, as this insulates you from AI-driven traffic losses.

