PerplexityBot

Learn about PerplexityBot, Perplexity's web crawler that indexes content for its AI answer engine. Understand how it works, robots.txt compliance, and how to ma...

Complete guide to PerplexityBot crawler - understand how it works, manage access, monitor citations, and optimize for Perplexity AI visibility. Learn about stealth crawling concerns and best practices.

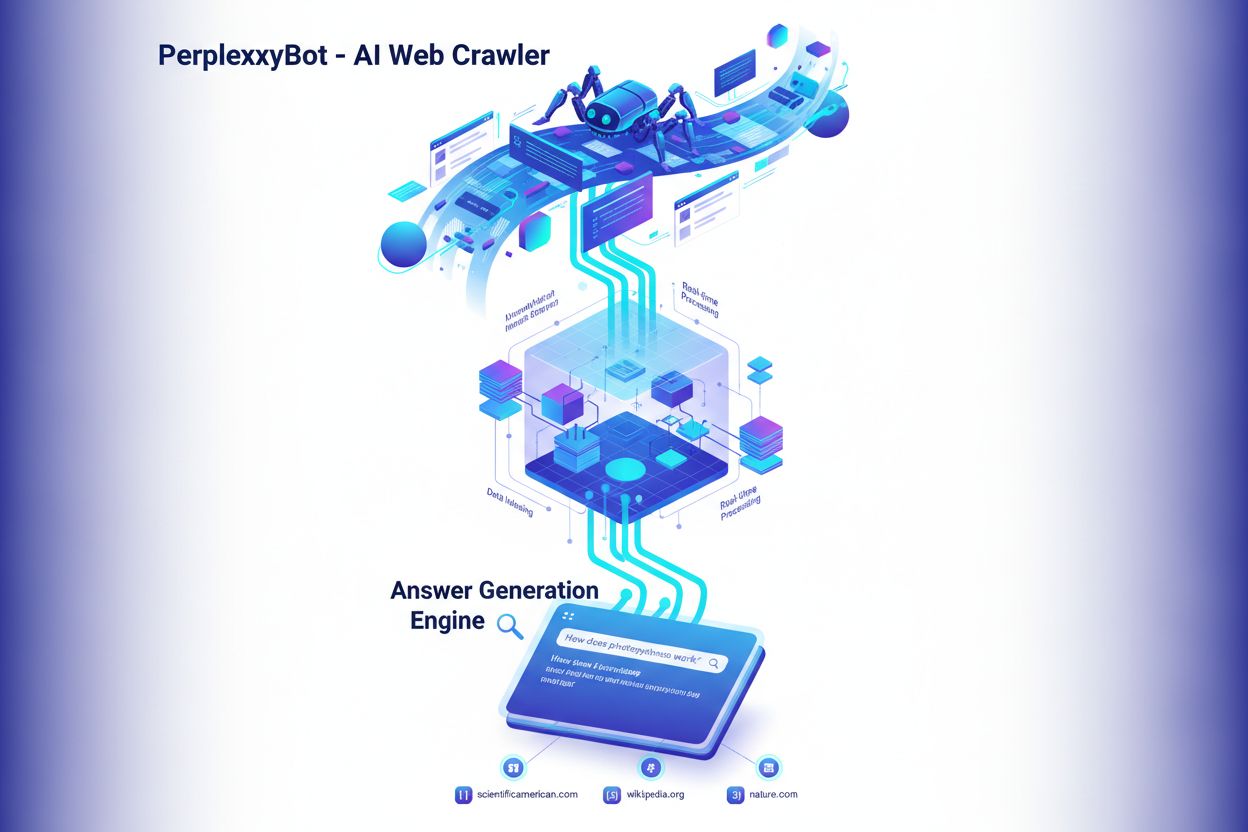

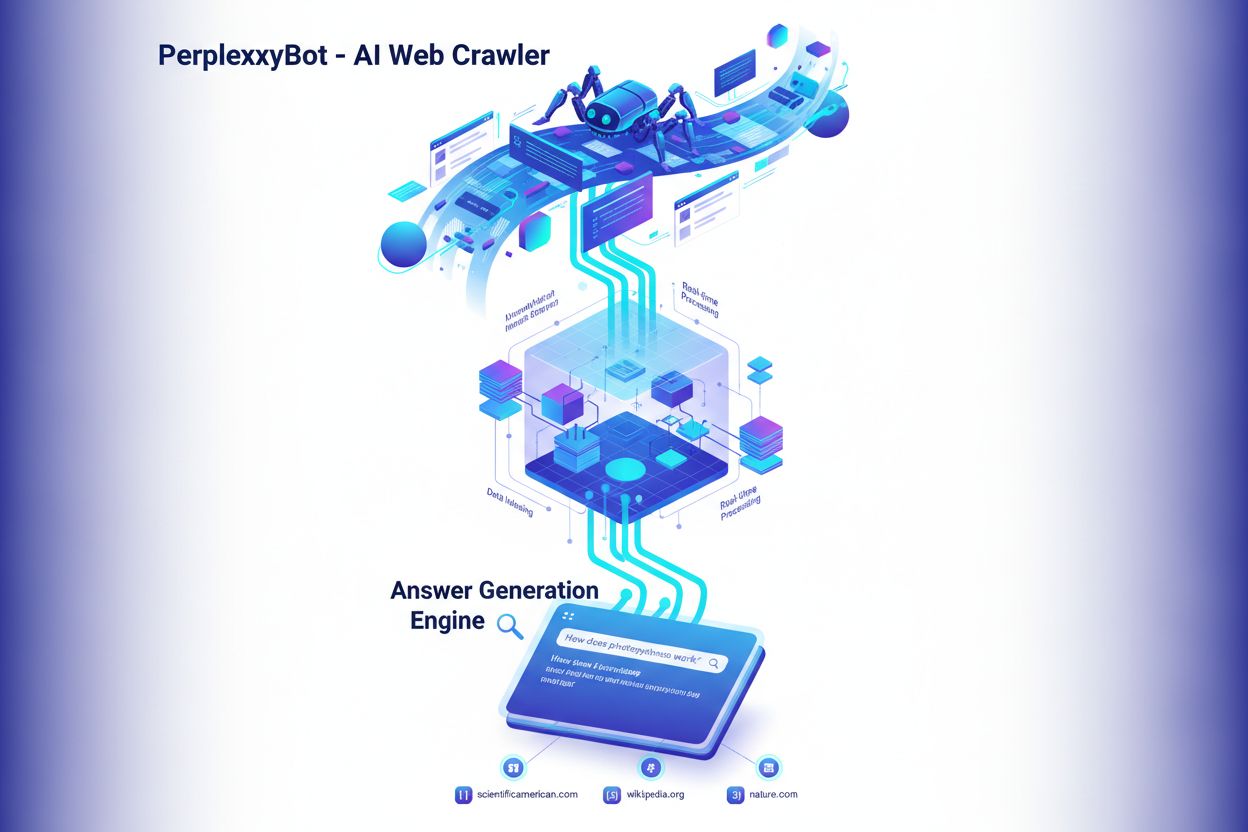

PerplexityBot is the official web crawler developed by Perplexity AI, designed to index websites and surface them in Perplexity’s AI-powered search results. Unlike AI crawlers that collect data for training large language models, PerplexityBot serves a narrower purpose: to discover, crawl, and link to websites that provide relevant answers to user queries. The crawler identifies itself with a defined user-agent string, `Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)`, and publishes its IP address ranges, allowing website owners to identify and manage crawler traffic. Understanding what PerplexityBot does helps website owners control their content’s visibility in Perplexity’s answer engine and see how their sites are accessed.

PerplexityBot operates as a standard web crawler, continuously scanning the internet to discover and index web pages. When it encounters a website, it reads the robots.txt file to understand which content it’s allowed to access, then systematically crawls pages to extract and index their content. This indexed information feeds into Perplexity’s search algorithm, which uses it to provide cited answers to user queries. However, Perplexity actually operates two distinct crawlers with different purposes, each with its own user-agent and behavior patterns. Understanding the difference between these crawlers is crucial for website owners who want to fine-tune their access policies.

| Feature | PerplexityBot | Perplexity-User |
|---|---|---|
| Purpose | Indexes websites for search results and citations | Fetches specific pages in real time when answering user queries |
| User-Agent Token | PerplexityBot/1.0 | Perplexity-User/1.0 |
| robots.txt Compliance | Respects robots.txt disallow directives | Generally ignores robots.txt (user-initiated requests) |
| IP Ranges | Published at perplexity.com/perplexitybot.json | Published at perplexity.com/perplexity-user.json |
| Frequency | Continuous, scheduled crawling | On-demand, triggered by user queries |
| Use Case | Building search index | Retrieving current information for answers |

The distinction between these two crawlers matters because they respond to different controls. PerplexityBot’s scheduled indexing crawl respects your robots.txt directives, while Perplexity-User may bypass them because it fetches content in response to a specific user request, so robots.txt rules alone will not manage it. Both crawlers publish their IP address ranges, enabling website owners to write precise firewall rules if they choose to block or allow either crawler’s traffic.
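To treat the two declared crawlers differently, a server-side check first has to tell them apart. The following is a minimal sketch, assuming the tokens `PerplexityBot` and `Perplexity-User` from the table appear in the `User-Agent` header; the function name `classify_perplexity_agent` is hypothetical and not part of any Perplexity tooling.

```python
from typing import Optional


def classify_perplexity_agent(user_agent: str) -> Optional[str]:
    """Return the declared Perplexity crawler named in a User-Agent header.

    Hypothetical helper: it only inspects the header text, which any client
    can forge, so treat the result as a claim rather than proof of origin.
    """
    ua = user_agent.lower()
    if "perplexity-user" in ua:
        return "Perplexity-User"
    if "perplexitybot" in ua:
        return "PerplexityBot"
    return None


if __name__ == "__main__":
    declared = (
        "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
        "PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)"
    )
    print(classify_perplexity_agent(declared))
    print(classify_perplexity_agent("Perplexity-User/1.0"))
```

Because a user-agent header is self-reported, this classification is best paired with a check of the source IP against the published ranges.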

In 2025, Cloudflare published a detailed investigation reporting that Perplexity was using undeclared crawlers to bypass website restrictions. According to its findings, when Perplexity’s declared crawlers (PerplexityBot and Perplexity-User) were blocked via robots.txt or firewall rules, the company deployed additional crawlers using generic browser user-agents (like Chrome on macOS) and rotating IP addresses from different ASNs (Autonomous System Numbers) to continue accessing restricted content. This behavior directly contradicts the web crawling norms outlined in RFC 9309 (the Robots Exclusion Protocol), which emphasize transparency and respect for website owner preferences. Cloudflare tested this by creating brand-new domains with explicit robots.txt disallow rules, yet Perplexity still provided detailed information about their content, suggesting the use of undeclared data sources or stealth crawling techniques.

This stands in stark contrast to how OpenAI handles crawler management. OpenAI’s GPTBot clearly identifies itself, respects robots.txt directives, and stops crawling when presented with blocks—demonstrating that transparent, ethical crawler behavior is both possible and practical. The Cloudflare findings raised significant concerns about whether Perplexity’s stated commitment to respecting website preferences is genuine, particularly for website owners who explicitly want to prevent their content from being indexed or cited by AI systems. For website owners concerned about content control and transparency, this controversy highlights the importance of monitoring crawler behavior and using multiple layers of protection (robots.txt, WAF rules, and IP blocking) to enforce their preferences.

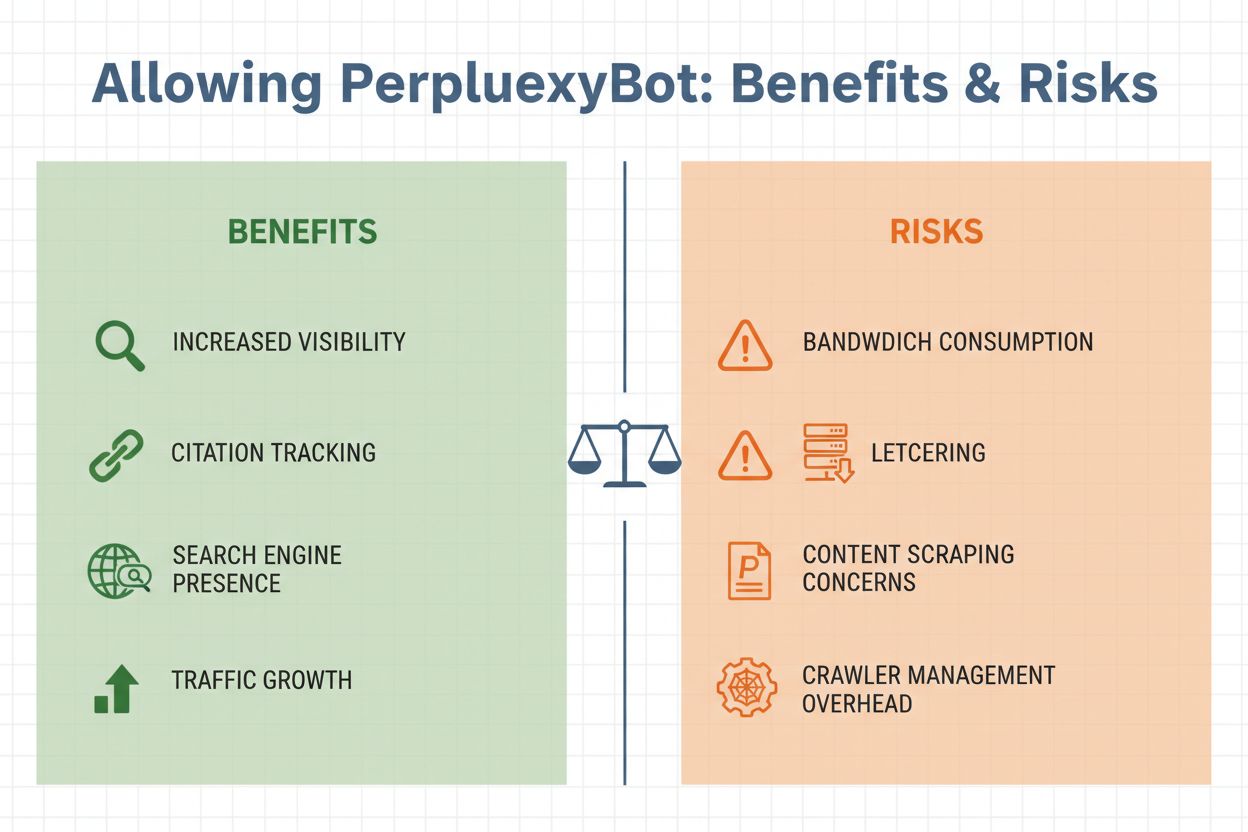

Deciding whether to allow PerplexityBot on your website requires weighing several important factors. On one hand, allowing the crawler provides significant benefits: your content becomes eligible for citation in Perplexity’s answers, potentially driving referral traffic from users who see your site mentioned in AI-generated responses. On the other hand, there are legitimate concerns about bandwidth consumption, content scraping, and the loss of control over how your information is used. The decision ultimately depends on your business goals, content strategy, and comfort level with AI systems accessing your data.

Key Considerations for Allowing PerplexityBot:

Managing PerplexityBot access is straightforward and can be accomplished through several methods, depending on your technical infrastructure and specific requirements. The most common approach is using your robots.txt file, which provides clear directives to all well-behaved crawlers about which content they can access.

To allow PerplexityBot in your robots.txt file:

```
User-agent: PerplexityBot
Allow: /
```

To block PerplexityBot in your robots.txt file:

```
User-agent: PerplexityBot
Disallow: /
```

If you want to block PerplexityBot from specific directories while allowing access to others, you can use more granular rules:

```
User-agent: PerplexityBot
Disallow: /admin/
Disallow: /private/
Allow: /public/
```
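Before deploying rules like these, it can help to confirm how a parser interprets them. The sketch below uses Python's standard `urllib.robotparser` on the granular rules above; the helper name `is_allowed` and the sample paths are illustrative assumptions. Note that this parser applies rules in file order, which may differ from a crawler's own matching on overlapping rules, so treat the result as a sanity check rather than a guarantee of crawler behavior.

```python
from urllib.robotparser import RobotFileParser

# The granular rules from the text, written without blank lines between the
# User-agent line and its directives (a blank line would end the group for
# this parser).
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /admin/
Disallow: /private/
Allow: /public/
"""


def is_allowed(robots_txt: str, agent: str, path: str) -> bool:
    """Return True if `agent` may fetch `path` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


if __name__ == "__main__":
    # Sample paths are hypothetical.
    for path in ("/admin/settings", "/private/report.pdf",
                 "/public/index.html", "/blog/post"):
        print(path, is_allowed(ROBOTS_TXT, "PerplexityBot", path))
```

Paths that match no rule, such as `/blog/post` here, are allowed by default, and agents with no matching group are unaffected by these rules.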

For more robust protection, especially if you’re concerned about stealth crawling, implement firewall rules at the Web Application Firewall (WAF) level. Cloudflare WAF users can create custom rules that block PerplexityBot by combining user-agent and IP address matching.

AWS WAF users should create IP sets from the published PerplexityBot IP ranges at https://www.perplexity.com/perplexitybot.json, then create rules that match both the IP set and the PerplexityBot user-agent string. Always use the official IP ranges published by Perplexity: they are updated regularly and are the authoritative source for identifying legitimate crawler traffic.
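The IP-matching half of such a rule comes down to testing whether a source address falls inside a set of CIDR ranges. Here is a minimal sketch using Python's standard `ipaddress` module. The function name `ip_in_ranges` is hypothetical, and the ranges shown are reserved documentation addresses used as placeholders, not Perplexity's real ranges. Extracting the actual prefixes from the published JSON file is left out because its schema is not described here.

```python
import ipaddress
from typing import Iterable

# Placeholder CIDRs from the reserved documentation blocks (assumption:
# replace with the prefixes taken from Perplexity's published file).
PLACEHOLDER_RANGES = ["192.0.2.0/24", "2001:db8::/32"]


def ip_in_ranges(ip: str, cidrs: Iterable[str]) -> bool:
    """Return True if `ip` falls inside any of the given CIDR ranges."""
    addr = ipaddress.ip_address(ip)
    for cidr in cidrs:
        network = ipaddress.ip_network(cidr, strict=False)
        # An IPv4 address can never belong to an IPv6 network or vice versa.
        if addr.version == network.version and addr in network:
            return True
    return False


if __name__ == "__main__":
    print(ip_in_ranges("192.0.2.17", PLACEHOLDER_RANGES))
    print(ip_in_ranges("203.0.113.5", PLACEHOLDER_RANGES))
```

A request that presents the PerplexityBot user-agent but fails this check against the current published ranges is a reasonable candidate for blocking or closer inspection.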

Once you’ve decided on your PerplexityBot policy, monitoring actual crawler activity helps you verify that your rules are working and understand the impact on your infrastructure. You can identify declared PerplexityBot requests in your server logs by the token PerplexityBot/1.0 in the user-agent string. Stealth crawling, if it occurs, uses generic browser user-agents and so cannot be isolated by user-agent alone. Most web analytics platforms and server log analysis tools let you filter traffic by user-agent, making it easy to isolate declared PerplexityBot requests and analyze their patterns.

Key metrics to monitor include the frequency of crawler visits, the pages being accessed, and the bandwidth consumed. Unusual patterns, such as rapid crawling of sensitive pages or requests that claim to be PerplexityBot but come from IP addresses outside Perplexity’s published ranges, may indicate spoofed or stealth crawling activity. Beyond basic traffic monitoring, specialized tools like AmICited.com provide deeper insight into how your content is actually being cited across AI platforms, including Perplexity. AmICited tracks mentions of your brand and content in AI-generated answers, allowing you to measure the real impact of allowing PerplexityBot and understand which of your pages are most valuable to AI systems. This data helps you make informed decisions about future crawler management policies and content optimization strategies.
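The three metrics named above (visit frequency, pages accessed, bandwidth) can be pulled from an access log with a short script. This sketch assumes the common "combined" log format used by Apache and nginx; the regular expression, the function name `summarize_crawler_hits`, and the sample log lines are illustrative assumptions and may need adjusting for your server's log layout.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed layout: combined log format
# ip ident user [time] "METHOD path proto" status bytes "referer" "agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def summarize_crawler_hits(lines: Iterable[str], token: str = "PerplexityBot") -> dict:
    """Count requests, bytes, paths, and source IPs for agents containing `token`."""
    hits_per_path: Counter = Counter()
    source_ips = set()
    total_bytes = 0
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or token.lower() not in match["agent"].lower():
            continue  # unparseable line or a different client
        hits_per_path[match["path"]] += 1
        source_ips.add(match["ip"])
        if match["bytes"] != "-":
            total_bytes += int(match["bytes"])
    return {
        "requests": sum(hits_per_path.values()),
        "bytes": total_bytes,
        "paths": hits_per_path,
        "ips": source_ips,
    }


if __name__ == "__main__":
    bot = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
           "PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)")
    # Hypothetical log lines with placeholder IPs.
    sample = [
        f'192.0.2.17 - - [10/Oct/2025:13:55:36 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "{bot}"',
        f'192.0.2.18 - - [10/Oct/2025:13:56:01 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "{bot}"',
        '203.0.113.5 - - [10/Oct/2025:13:56:09 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 Chrome/124.0"',
    ]
    print(summarize_crawler_hits(sample))
```

The returned set of source IPs can then be compared with Perplexity's published ranges to spot requests that carry the crawler's user-agent from unexpected addresses.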

Managing PerplexityBot effectively requires a balanced approach that protects your interests while recognizing the value of AI-driven visibility. First, establish a clear policy based on your business goals: decide whether the potential traffic and brand exposure from Perplexity citations outweighs your concerns about bandwidth and content control. Document this decision in your robots.txt file and communicate it to your team so everyone understands your crawler management strategy.

Second, implement layered protection if you choose to block PerplexityBot. Don’t rely solely on robots.txt, as the stealth crawling controversy demonstrates that some crawlers may ignore these directives. Combine robots.txt rules with WAF rules and IP blocking for defense-in-depth protection. Third, stay informed about crawler behavior by monitoring your logs regularly and following industry discussions about AI crawler ethics and transparency. The landscape is evolving rapidly, and new crawlers or tactics may emerge that require policy adjustments.

Finally, use monitoring tools strategically to measure the actual impact of your decisions. Tools like AmICited.com provide visibility into how AI systems cite your content, helping you understand whether allowing PerplexityBot is delivering the visibility benefits you expected. If you’re allowing the crawler, this data helps you optimize your content for AI citation. If you’re blocking it, monitoring confirms that your blocks are effective and that your content isn’t appearing in Perplexity results through other means.

PerplexityBot operates in a crowded landscape of AI crawlers, each with different purposes and transparency standards. GPTBot, operated by OpenAI, is widely recognized as a model of transparent crawler behavior—it clearly identifies itself, respects robots.txt directives, and stops crawling when blocked. Google’s crawlers for AI Overviews and other AI features similarly maintain transparency and respect website preferences. In contrast, Perplexity’s stealth crawling behavior, as documented by Cloudflare, represents a concerning departure from these standards.

The key difference lies in transparency and respect for website owner preferences. Well-behaved crawlers like GPTBot make it easy for website owners to understand what they’re doing and provide clear mechanisms for control. Perplexity’s use of undeclared crawlers and IP rotation to bypass restrictions undermines this trust. For website owners, this means you should be more cautious about Perplexity’s stated policies and implement stronger technical controls if you want to ensure your preferences are actually respected. As the AI crawler ecosystem matures, expect increasing pressure on companies like Perplexity to adopt more transparent, ethical practices that align with established web standards and respect website owner autonomy.

PerplexityBot is Perplexity AI's official web crawler designed to index websites and surface them in Perplexity's AI-powered search results. Unlike some AI crawlers that collect data for training, PerplexityBot specifically discovers and links to websites that provide relevant answers to user queries. It operates transparently with a published user-agent string and IP address ranges.

PerplexityBot is not used to train AI models. According to Perplexity's official documentation, PerplexityBot is designed to surface and link websites in search results on Perplexity. It is not used to crawl content for AI foundation models or training purposes. The crawler's sole function is to index content for inclusion in Perplexity's answer engine.

You can block PerplexityBot using your robots.txt file by adding 'User-agent: PerplexityBot' followed by 'Disallow: /' to prevent all access. For stronger protection, implement WAF rules on Cloudflare or AWS WAF that block requests matching the PerplexityBot user-agent and IP ranges. However, be aware that stealth crawling may bypass these controls.

Perplexity publishes official IP address ranges for PerplexityBot at https://www.perplexity.com/perplexitybot.json and for Perplexity-User at https://www.perplexity.com/perplexity-user.json. These ranges are updated regularly and should be the authoritative source for your firewall and WAF configurations. Always use the official endpoints rather than relying on outdated IP lists.

PerplexityBot claims to respect robots.txt directives, but Cloudflare's 2025 investigation found evidence of stealth crawling using undeclared user-agents and rotating IP addresses to bypass robots.txt restrictions. While the declared PerplexityBot crawler should honor your robots.txt rules, implementing additional WAF protections is recommended if you want to ensure your preferences are enforced.

Bandwidth usage varies depending on your site's size and content volume. PerplexityBot performs continuous, scheduled crawling similar to Google's crawler. High-traffic sites may notice measurable bandwidth consumption. You can monitor actual usage by filtering your server logs for PerplexityBot requests and analyzing the data transfer volume to determine if it impacts your infrastructure.

You can check whether Perplexity cites your site. Manually search Perplexity for queries related to your content to see if your site appears in the cited sources. For more comprehensive monitoring, use tools like AmICited.com, which tracks how your brand and content appear across AI platforms including Perplexity, providing real-time insights into your AI visibility and citation patterns.

PerplexityBot is the scheduled crawler that continuously indexes websites for Perplexity's search index. Perplexity-User is triggered on-demand when users ask questions and Perplexity needs to fetch specific pages for real-time information. PerplexityBot respects robots.txt, while Perplexity-User generally ignores it since it's responding to user requests. Both have separate user-agent strings and IP ranges.

