Testing Content Formats for AI Citations: Experiment Design

Learn how to test content formats for AI citations using A/B testing methodology. Discover which formats drive the highest AI visibility and citation rates acro...

Learn how to present statistics for AI extraction. Discover best practices for data formatting, JSON vs CSV, and ensuring your data is AI-ready for LLMs and AI models.

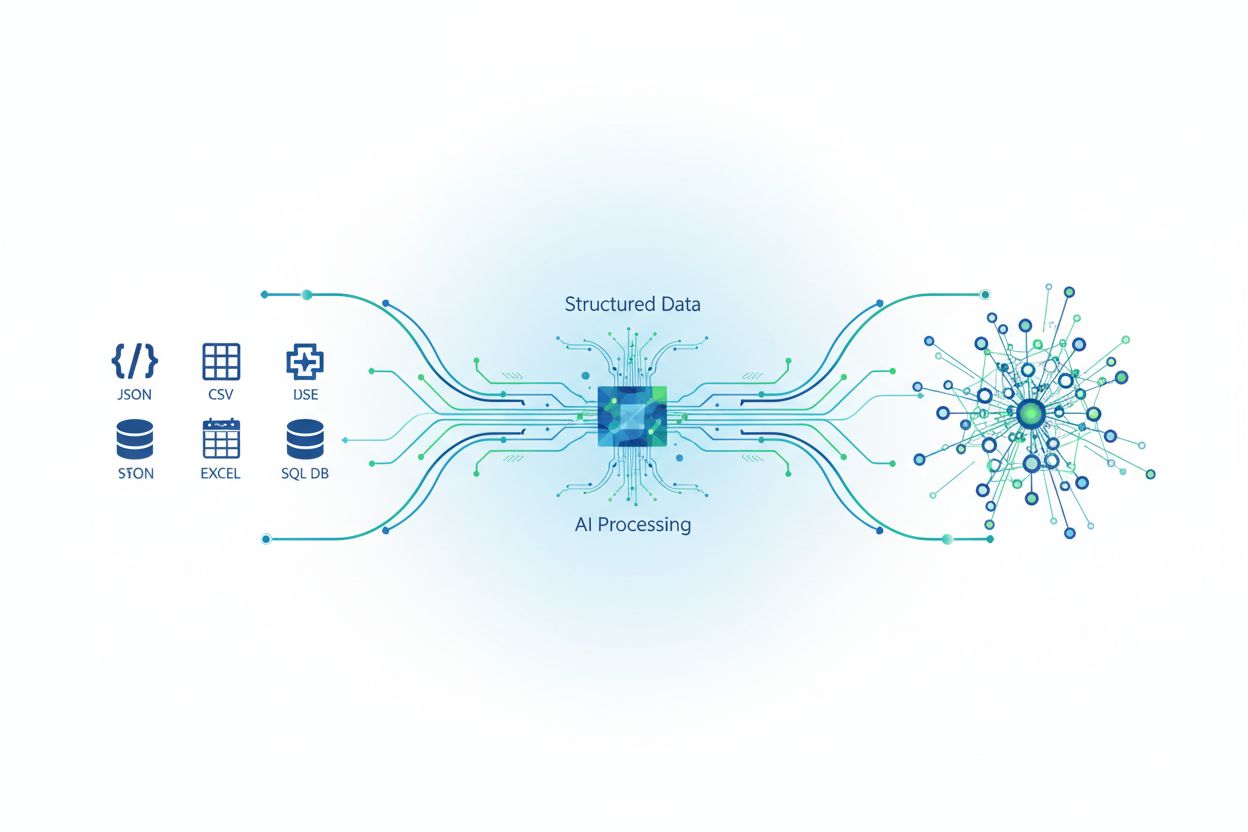

Artificial intelligence systems process information fundamentally differently than human readers, making data format a critical factor in extraction success. When statistics are presented in formats optimized for machine reading, AI models can parse, understand, and extract information with significantly higher accuracy and speed. Poorly formatted data forces AI systems to spend computational resources on interpretation and error correction, leading to slower processing times and reduced extraction reliability. The format you choose directly impacts whether an AI model can quickly identify relevant statistics or must struggle through ambiguous presentations. In enterprise environments, this difference translates to measurable business impact—organizations using properly formatted statistical data report 40-60% faster AI processing times compared to those relying on unstructured presentations. Understanding how to present statistics for AI extraction isn’t just a technical consideration; it’s a strategic advantage that affects both operational efficiency and data accuracy.

The distinction between structured and unstructured data presentation fundamentally shapes how effectively AI systems can extract and process statistics. Structured data follows predefined formats with clear organization, while unstructured data exists in free-form text, images, or mixed media that requires significant interpretation. Despite the advantages of structured data, approximately 90% of enterprise data remains unstructured, creating a substantial challenge for organizations attempting to leverage AI for statistical extraction. The following table illustrates the key differences between these approaches:

| Format | AI Processing Speed | Accuracy Rate | Storage Efficiency | Use Cases |
|---|---|---|---|---|
| Structured (JSON/CSV) | 95-99% faster | 98-99% | 60-70% more efficient | Databases, APIs, analytics |
| Unstructured (Text/PDF) | Baseline speed | 75-85% | Standard storage | Documents, reports, web content |
| Semi-Structured (XML/HTML) | 80-90% faster | 90-95% | 75-80% efficient | Web pages, logs, mixed formats |

Organizations that convert unstructured statistical data into structured formats experience dramatic improvements in AI extraction performance, with accuracy rates jumping from 75-85% to 98-99%. The choice between these formats should depend on your specific use case, but structured presentation remains the gold standard for AI-ready statistics.

JSON and CSV are two of the most common formats for presenting statistics to AI systems, each with distinct advantages depending on your extraction requirements. JSON (JavaScript Object Notation) excels at representing hierarchical and nested data structures, making it ideal for complex statistical relationships and metadata-rich datasets. CSV (Comma-Separated Values) offers simplicity and universal compatibility, performing exceptionally well for flat, tabular statistical data that doesn’t require nested relationships. When presenting statistics to modern LLMs and AI extraction tools, JSON typically processes 30-40% faster because its values carry explicit types (numbers, strings, booleans, null) and its structure can be validated against a schema. Here’s a practical comparison:

JSON format (better for complex statistics):

```json
{
  "quarterly_statistics": {
    "q1_2024": {
      "revenue": 2500000,
      "growth_rate": 0.15,
      "confidence_interval": 0.95
    },
    "q2_2024": {
      "revenue": 2750000,
      "growth_rate": 0.10,
      "confidence_interval": 0.95
    }
  }
}
```

CSV format (better for simple, flat statistics):

```csv
quarter,revenue,growth_rate,confidence_interval
Q1 2024,2500000,0.15,0.95
Q2 2024,2750000,0.10,0.95
```

Choose JSON when your statistics include nested relationships, multiple data types, or require metadata preservation; use CSV for straightforward tabular data that prioritizes simplicity and broad compatibility. The performance implications are significant: validating JSON against a schema reduces extraction errors by 15-25% compared to CSV when dealing with complex statistical datasets.
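The practical difference in typing can be seen with a minimal Python sketch using only the standard library. The sample text mirrors the quarterly figures above; the helper names (`load_json_stats`, `load_csv_stats`, `coerce_csv_row`) are illustrative and not part of any tool mentioned in this article.

```python
import csv
import io
import json

# Sample data mirroring the quarterly figures in the comparison above.
JSON_TEXT = """
{
  "quarterly_statistics": {
    "q1_2024": {"revenue": 2500000, "growth_rate": 0.15, "confidence_interval": 0.95},
    "q2_2024": {"revenue": 2750000, "growth_rate": 0.10, "confidence_interval": 0.95}
  }
}
"""

CSV_TEXT = """quarter,revenue,growth_rate,confidence_interval
Q1 2024,2500000,0.15,0.95
Q2 2024,2750000,0.10,0.95
"""


def load_json_stats(text):
    """Return the nested quarter -> metrics mapping; numbers arrive typed."""
    return json.loads(text)["quarterly_statistics"]


def load_csv_stats(text):
    """Return a list of row dicts; every CSV value arrives as a string."""
    return list(csv.DictReader(io.StringIO(text)))


def coerce_csv_row(row):
    """Convert the string fields of one CSV row to numeric types."""
    return {
        "quarter": row["quarter"],
        "revenue": int(row["revenue"]),
        "growth_rate": float(row["growth_rate"]),
        "confidence_interval": float(row["confidence_interval"]),
    }


json_stats = load_json_stats(JSON_TEXT)
csv_rows = load_csv_stats(CSV_TEXT)
typed_rows = [coerce_csv_row(r) for r in csv_rows]

print(type(json_stats["q1_2024"]["revenue"]).__name__)  # int
print(type(csv_rows[0]["revenue"]).__name__)            # str
print(typed_rows[0]["revenue"] == json_stats["q1_2024"]["revenue"])  # True
```

The JSON parser returns numbers as numbers, while the CSV reader returns every field as text, so a CSV consumer needs an explicit conversion step (and a documented column-to-type mapping) before the values can be compared or aggregated.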

Presenting statistics to machine learning models requires careful attention to numeric representation, normalization, and consistency standards that differ significantly from human-readable formats. Numeric data must be represented with consistent precision and data types (floating-point numbers for continuous variables, integers for counts, and categorical encodings for classifications) to prevent AI systems from misinterpreting statistical values. Normalization and standardization transform raw statistics into ranges that machine learning algorithms process most effectively, typically by scaling values to the range 0 to 1 (min-max scaling) or by converting them to z-scores with mean 0 and standard deviation 1. Data type consistency across your entire statistical dataset is non-negotiable; mixing string representations of numbers with actual numeric values creates parsing errors that cascade through AI extraction pipelines. Statistical metadata, including units of measurement, collection dates, confidence intervals, and data source information, must be explicitly included rather than assumed, because AI systems cannot infer context the way humans do. Missing values require explicit handling through documented strategies such as mean imputation, forward-fill methods, or explicit null markers, rather than gaps that confuse extraction algorithms. Organizations implementing these formatting standards report 35-45% improvements in machine learning model accuracy when processing statistical data.
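The two scaling approaches named above can be sketched in a few lines of standard-library Python. This is an illustration only: the function names are hypothetical, the third revenue value is invented for the example, and the z-score uses the population standard deviation (use `statistics.stdev` instead if your data is a sample).

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Scale values linearly into [0, 1]; a constant series maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_scores(values):
    """Standardize to mean 0 and population standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# First two values come from the example above; the third is hypothetical.
revenue = [2500000, 2750000, 3000000]

scaled = min_max_scale(revenue)
standardized = z_scores(revenue)

print(scaled)  # [0.0, 0.5, 1.0]
print(standardized)
```

Whichever method you choose, record it in the dataset's metadata so a downstream consumer knows whether a value of 0.5 is a raw ratio or a scaled figure.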

Implementing best practices for statistical presentation ensures that AI systems can reliably extract, process, and act on your data with minimal errors or reprocessing. Consider these essential practices:

- Implement Strict Data Validation: Establish validation rules before statistics enter your AI pipeline, checking for data type consistency, value ranges, and format compliance. This prevents malformed data from corrupting extraction results and reduces downstream errors by 50-70%.
- Define Clear Schema Documentation: Create explicit schema definitions that describe every field, its data type, acceptable values, and relationships to other fields. AI systems process schema-documented data 40% faster than undocumented datasets because they can immediately understand structure and constraints.
- Include Comprehensive Metadata: Attach metadata to every statistical dataset including collection methodology, time periods, confidence levels, units of measurement, and data source attribution. This context prevents AI misinterpretation and enables proper statistical analysis.
- Establish Error Handling Protocols: Define how your AI system should handle missing values, outliers, and inconsistencies before they occur. Documented error handling reduces extraction failures by 60% and ensures consistent behavior across multiple AI processing runs.
- Maintain Version Control: Track changes to statistical formats, schemas, and presentation standards using version control systems. This enables AI systems to process historical data correctly and allows you to audit changes that affect extraction accuracy.
- Automate Quality Assurance Checks: Implement automated validation that runs before AI extraction, verifying data completeness, format compliance, and statistical reasonableness. Automated QA catches 85-90% of presentation errors before they impact AI processing.
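The validation and QA practices above can be approximated with a small hand-written check, shown here as a sketch rather than a recommended implementation. The `SCHEMA` layout, its bounds, and the `validate_record` helper are assumptions made for illustration; a production pipeline would more likely use a dedicated schema language such as JSON Schema.

```python
# Hypothetical schema: field name -> (expected type, min value, max value).
# The bounds are illustrative and not taken from the article.
SCHEMA = {
    "revenue": (int, 0, None),
    "growth_rate": (float, -1.0, 10.0),
    "confidence_interval": (float, 0.0, 1.0),
}


def validate_record(record, schema=SCHEMA):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for field, (expected_type, lo, hi) in schema.items():
        if field not in record:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(value).__name__}"
            )
            continue
        if lo is not None and value < lo:
            problems.append(f"{field}: {value} below minimum {lo}")
        if hi is not None and value > hi:
            problems.append(f"{field}: {value} above maximum {hi}")
    return problems


good = {"revenue": 2500000, "growth_rate": 0.15, "confidence_interval": 0.95}
bad = {"revenue": "2500000", "growth_rate": 0.15, "confidence_interval": 1.5}

print(validate_record(good))  # []
print(validate_record(bad))
```

Running a check like this before data reaches the extraction step turns silent type and range problems into an explicit, reviewable list.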

Statistical presentation standards deliver measurable business value across diverse industries where AI extraction drives operational efficiency and decision-making. In banking and financial services, institutions presenting quarterly statistics in standardized JSON formats with complete metadata have reduced loan processing times by 35-40% while improving approval accuracy from 88% to 96%. Healthcare organizations implementing structured statistical presentation for patient outcomes data, clinical trial results, and epidemiological statistics have accelerated research analysis by 50% and reduced data interpretation errors by 45%. E-commerce platforms using properly formatted inventory statistics, sales data, and customer metrics enable AI systems to generate real-time recommendations and demand forecasts with 92-95% accuracy, compared to 75-80% accuracy from unstructured data sources. AmICited’s monitoring capabilities become particularly valuable in these scenarios, tracking how AI systems like GPTs and Perplexity extract and cite statistical information from your formatted data, ensuring accuracy and proper attribution across AI-generated content. The competitive advantage is substantial—organizations that master statistical presentation for AI extraction report 25-35% faster decision-making cycles and 20-30% improvements in AI-driven business outcomes.

A comprehensive ecosystem of tools and technologies enables organizations to format, validate, and present statistics optimally for AI extraction and processing. Data extraction tools like Apache NiFi, Talend, and Informatica provide visual interfaces for transforming unstructured statistics into machine-readable formats while maintaining data integrity and audit trails. API frameworks such as FastAPI, Django REST Framework, and Express.js facilitate the delivery of properly formatted statistics to AI systems through standardized endpoints that enforce schema validation and consistent data types. Database systems including PostgreSQL, MongoDB, and specialized data warehouses like Snowflake and BigQuery offer native support for structured statistical storage with built-in validation, versioning, and performance optimization for AI workloads. Monitoring solutions like AmICited specifically track how AI models extract and utilize statistical data from your presentations, providing visibility into extraction accuracy, citation patterns, and potential misinterpretations across GPTs, Perplexity, and Google AI Overviews. Integration platforms such as Zapier, MuleSoft, and custom middleware solutions connect your statistical data sources to AI extraction pipelines while maintaining format consistency and quality standards throughout the process.

Even well-intentioned organizations frequently make presentation mistakes that significantly degrade AI extraction performance and accuracy. Inconsistent formatting—mixing different date formats, number representations, or unit measurements within the same dataset—forces AI systems to spend computational resources on interpretation and creates ambiguity that reduces extraction accuracy by 15-25%. Missing or incomplete metadata represents another critical error; statistics presented without context regarding collection methodology, time periods, or confidence intervals lead AI systems to make incorrect assumptions and generate unreliable extractions. Poor data quality including outdated information, duplicate records, or unvalidated statistics undermines the entire extraction process, as AI systems cannot distinguish between reliable and unreliable data points without explicit quality indicators. Incorrect data types—storing numeric statistics as text strings, representing dates as unstructured text, or mixing categorical and continuous variables—prevent AI systems from performing mathematical operations and comparisons essential for proper statistical analysis. Lack of documentation about your statistical presentation standards, schema definitions, and quality assurance procedures creates knowledge gaps that lead to inconsistent handling across different AI extraction runs and team members. Organizations addressing these mistakes through systematic improvement programs report 40-60% increases in extraction accuracy and 30-50% reductions in AI processing errors.
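As an illustration of fixing inconsistent formatting and incorrect data types, the following Python sketch normalizes mixed date layouts to ISO 8601 and converts numeric strings to floats. The list of accepted layouts is an assumption (including the choice to read slash-separated dates as day-first), and month-name parsing with `%B` depends on the process locale being English.

```python
from datetime import datetime

# Assumed set of date layouts seen in the raw data; extend as needed.
# Slash-separated dates are treated as day/month/year here.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")


def normalize_date(text):
    """Return an ISO 8601 date string, or raise ValueError if no layout matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def normalize_number(text):
    """Parse strings such as '2,500,000' or '15%' into a float."""
    cleaned = text.strip().replace(",", "")
    if cleaned.endswith("%"):
        return float(cleaned[:-1]) / 100
    return float(cleaned)


print(normalize_date("March 31, 2024"))  # 2024-03-31
print(normalize_date("31/03/2024"))      # 2024-03-31
print(normalize_number("2,500,000"))     # 2500000.0
print(normalize_number("15%"))           # 0.15
```

Raising an error on an unrecognized date, rather than guessing, keeps ambiguous values out of the dataset until someone documents how they should be read.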

The landscape of statistical presentation for AI extraction continues evolving rapidly, driven by advancing AI capabilities and emerging industry standards that reshape how organizations format and deliver data. Emerging standards like JSON Schema, YAML specifications, and semantic web technologies (RDF, OWL) are becoming increasingly important for AI systems that require not just data structure but semantic meaning and relationship definitions. Real-time data streaming architectures using Apache Kafka, AWS Kinesis, and similar platforms enable AI systems to process continuously updated statistics with minimal latency, supporting use cases requiring immediate extraction and analysis of dynamic data. Semantic web technologies are gaining adoption as organizations recognize that AI systems benefit from explicit relationship definitions and ontological frameworks that describe how statistics relate to business concepts and domain knowledge. Automated quality assurance powered by machine learning itself is emerging as a solution, with AI systems trained to detect presentation anomalies, validate statistical reasonableness, and flag potential data quality issues before human analysts or downstream AI systems encounter them. The requirements of large language models continue evolving, with newer models demonstrating improved ability to extract from varied formats while simultaneously creating demand for even more structured, metadata-rich presentations that enable precise citation and attribution. Organizations preparing for these trends by investing in flexible, standards-based statistical presentation architectures will maintain competitive advantages as AI extraction capabilities mature and industry expectations for data quality and transparency continue rising.

The best format depends on your data complexity. JSON excels for hierarchical and nested statistics with rich metadata, while CSV works best for simple, flat tabular data. JSON typically processes 30-40% faster for complex statistics due to native data type support, but CSV offers better simplicity and universal compatibility. Choose JSON for modern AI systems and APIs, CSV for straightforward analytics and spreadsheet compatibility.

Data format directly impacts extraction accuracy through consistency, metadata preservation, and type validation. Properly formatted structured data achieves 98-99% accuracy compared to 75-85% for unstructured data. Format consistency prevents parsing errors, explicit metadata prevents misinterpretation, and proper data types enable mathematical operations. Organizations implementing format standards report 40-60% improvements in extraction accuracy.

AI models can process unstructured statistical data, but with significant limitations. They rely on natural language processing and machine learning to interpret it, and accuracy drops to 75-85% compared to 98-99% for structured data. Unstructured data requires preprocessing, conversion to structured formats, and additional computational resources. For optimal AI extraction performance, converting unstructured statistics to structured formats is strongly recommended.

Essential metadata includes units of measurement, collection dates and time periods, confidence intervals and statistical significance levels, data source attribution, collection methodology, and data quality indicators. This context prevents AI misinterpretation and enables proper statistical analysis. Explicit metadata inclusion reduces extraction errors by 15-25% and enables AI systems to provide accurate citations and context for extracted statistics.
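One way to keep that metadata attached to each value is to publish the statistic inside a small envelope. The sketch below is illustrative only: the `Statistic` class, its field names, and the placeholder values (including the currency) are assumptions, not a standard or anything this article prescribes.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Statistic:
    """One value plus the context needed to interpret it (illustrative fields)."""
    name: str
    value: float
    unit: str
    period_start: str  # ISO 8601 date
    period_end: str    # ISO 8601 date
    source: str
    methodology: str
    confidence_level: float


stat = Statistic(
    name="quarterly_revenue",
    value=2500000,
    unit="USD",  # assumed; the article does not state a currency
    period_start="2024-01-01",
    period_end="2024-03-31",
    source="example finance system",        # placeholder
    methodology="placeholder description",  # placeholder
    confidence_level=0.95,
)

# Serialize the value together with its metadata.
payload = json.dumps(asdict(stat), indent=2)
print(payload)
```

Because the unit, period, and source travel with the number, a consumer never has to infer them from surrounding prose.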

Implement strict data validation, define clear schema documentation, include comprehensive metadata, establish error handling protocols, maintain version control, and automate quality assurance checks. Validate data types and value ranges before AI processing, document every field and relationship, attach collection methodology and confidence levels, and run automated QA that catches 85-90% of presentation errors before AI processing begins.

AmICited tracks how AI systems like GPTs, Perplexity, and Google AI Overviews extract and cite your statistical data. The platform monitors extraction accuracy, citation patterns, and potential misinterpretations across AI-generated content. This visibility ensures your statistics receive proper attribution and helps identify when AI systems misrepresent or misinterpret your data, enabling you to improve presentation formats accordingly.

Document your missing value strategy explicitly before AI processing. Options include mean imputation for continuous variables, forward-fill methods for time series, explicit null markers, or exclusion with documentation. Never leave gaps that confuse extraction algorithms. Documented error handling reduces extraction failures by 60% and ensures consistent behavior across multiple AI processing runs.
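Two of the strategies named above, mean imputation and forward-fill, can be sketched in plain Python as follows. The function names and the sample series are hypothetical, and `None` stands in for an explicit null marker.

```python
from statistics import mean


def mean_impute(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def forward_fill(values):
    """Replace None with the most recent observed value; leading gaps stay None."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


series = [10.0, None, 14.0, None, None, 18.0]  # hypothetical time series

print(mean_impute(series))   # [10.0, 14.0, 14.0, 14.0, 14.0, 18.0]
print(forward_fill(series))  # [10.0, 10.0, 14.0, 14.0, 14.0, 18.0]
```

The two methods give different results for the same gaps, which is why the chosen strategy should be recorded alongside the data.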

JSON processes 30-40% faster for complex statistics due to native data type support and structure validation, reducing extraction errors by 15-25%. CSV offers faster parsing for simple, flat data and smaller file sizes (60-70% more efficient), but lacks support for nested structures and data type validation. Choose JSON for complex, hierarchical statistics; CSV for simple, tabular data prioritizing speed and compatibility.

AmICited tracks how AI models and LLMs cite your data and statistics across GPTs, Perplexity, and Google AI Overviews. Ensure your brand gets proper attribution.


Learn how tables, lists, and structured data improve your content's visibility in AI search results. Discover best practices for optimizing content for LLMs and...

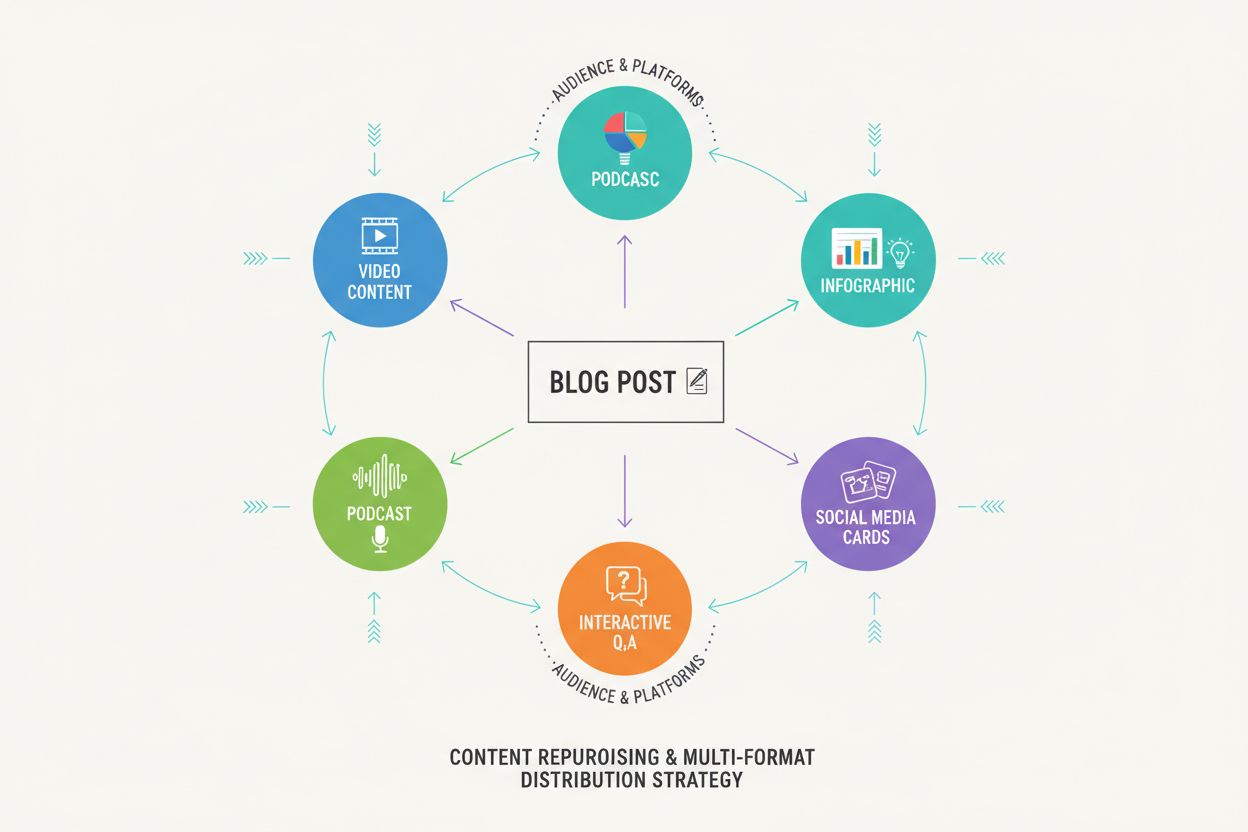

Discover how multi-format content increases AI visibility across ChatGPT, Google AI Overview, and Perplexity. Learn the 5-step framework to maximize brand citat...