Building a Prompt Library for AI Visibility Tracking

Learn how to create and organize an effective prompt library to track your brand across ChatGPT, Perplexity, and Google AI. Step-by-step guide with best practic...

Learn how to build and use prompt libraries for manual AI visibility testing. DIY guide to testing how AI systems reference your brand across ChatGPT, Perplexity, and Google AI.

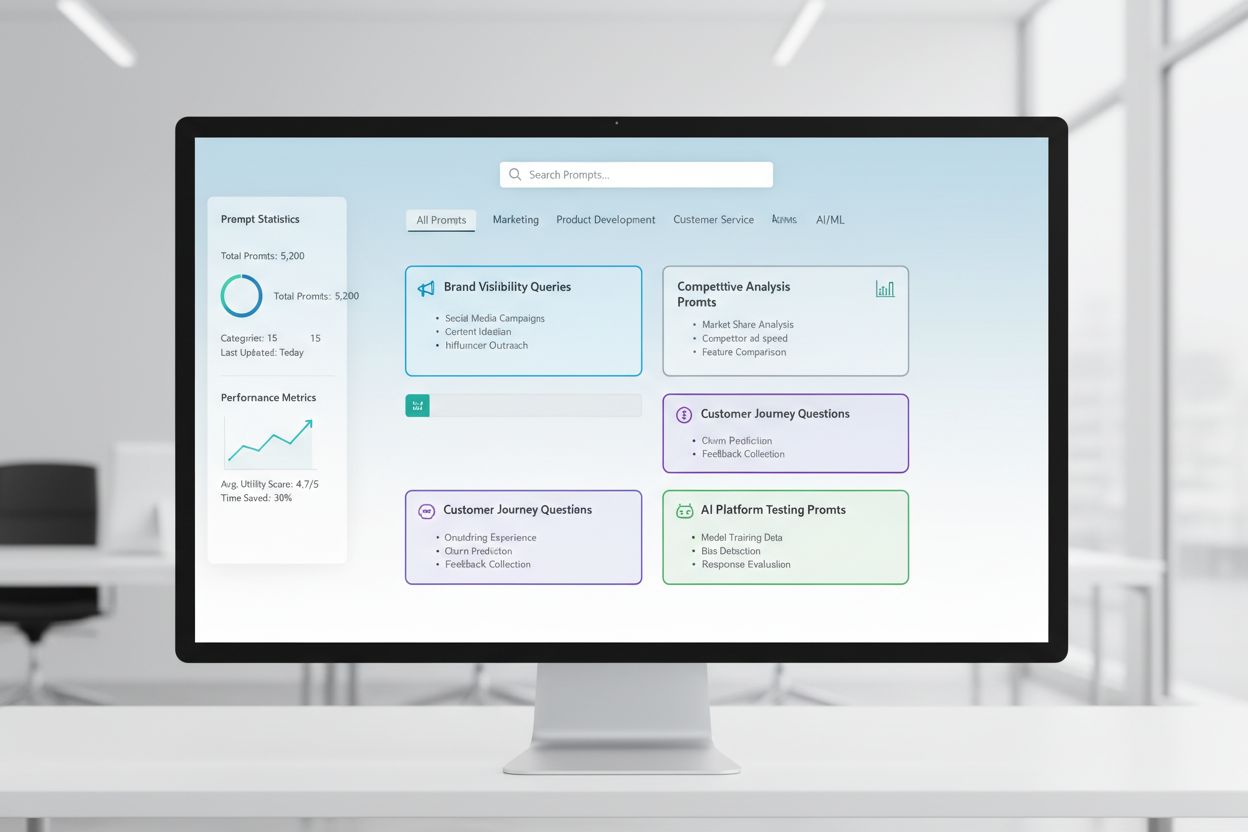

A prompt library is a curated, organized collection of test prompts designed to systematically evaluate how AI systems respond to specific queries and scenarios. In the context of manual AI testing, these libraries serve as your foundational toolkit—a repository of carefully crafted prompts that help you understand exactly how different AI models handle your brand mentions, citations, content accuracy, and contextual understanding. AI visibility testing relies heavily on prompt libraries because they enable consistent, repeatable testing across multiple platforms and time periods, allowing you to track how your content appears (or doesn’t appear) in AI-generated responses. Rather than randomly testing with ad-hoc queries, a well-structured prompt library ensures you’re systematically covering all the scenarios that matter to your business, from direct brand searches to indirect contextual references. This structured approach transforms manual testing from a haphazard process into a strategic, data-driven methodology that reveals patterns in AI behavior and helps you understand your actual visibility across the AI landscape.

Manual AI visibility testing is the hands-on process of directly querying AI systems with predetermined prompts and carefully analyzing their responses to understand how your content, brand, and citations are being surfaced or omitted. Unlike automated monitoring systems that run continuously in the background, manual testing gives you direct control over what you’re testing, when you’re testing it, and how deeply you analyze the results—making it invaluable for exploratory testing, understanding edge cases, and investigating unexpected AI behaviors. The key distinction lies in the nature of the work: manual testing is deliberate and investigative, allowing you to ask follow-up questions, test variations, and understand the “why” behind AI responses, whereas automated monitoring excels at consistent, large-scale tracking over time. Manual testing is particularly important for AI visibility because it helps you catch nuances that automated systems might miss—like whether your brand is mentioned but misattributed, whether citations are accurate but incomplete, or whether context is being understood correctly. Here’s how these approaches compare:

| Aspect | Manual Testing | Automated Monitoring |
|---|---|---|
| Cost | Lower upfront investment; time-intensive | Higher initial setup; lower ongoing labor |
| Speed | Slower per test; immediate insights | Slower to set up; rapid continuous tracking |
| Flexibility | Highly flexible; easy to pivot and explore | Rigid; requires predefined parameters |
| Accuracy | High for qualitative analysis; subject to human bias | Consistent; excellent for quantitative metrics |
| Learning Curve | Minimal; anyone can start testing | Steeper; requires technical setup and configuration |

Manual testing excels when you need to understand why something is happening, while automated monitoring excels at tracking what is happening at scale.

A robust prompt library should be organized around the key dimensions of your AI visibility strategy, with each component serving a specific testing purpose. Include prompts that test different aspects of how AI systems discover and surface your content, so that no part of your visibility goes unexamined. At a minimum, group prompts into the categories this guide returns to throughout: direct brand searches, competitor comparisons, industry topics, citation accuracy, and contextual understanding.
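One way to hold such a library in a form that is easy to version and check for coverage is a small set of typed records. The sketch below is only an illustration: the `PromptEntry` fields, the IDs, and the category names are assumptions made for this example, not a required schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptEntry:
    """One locked-in test prompt; every field name here is illustrative."""
    prompt_id: str    # stable ID used in result logs, e.g. "competitor-001"
    category: str     # e.g. "brand", "competitor", "industry", "citation"
    text: str         # exact wording sent to every platform
    version: int = 1  # bump whenever the wording changes


def group_by_category(entries):
    """Return {category: [PromptEntry, ...]} for a quick overview of the library."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry.category].append(entry)
    return dict(grouped)


def missing_categories(entries, required):
    """List the required categories that have no prompt yet."""
    present = {entry.category for entry in entries}
    return sorted(set(required) - present)


LIBRARY = [
    PromptEntry("citation-001", "citation",
                "What are the latest developments in [your industry]? "
                "Please cite your sources."),
    PromptEntry("competitor-001", "competitor",
                "Compare [your product] with [competitor product]. "
                "What are the key differences and advantages of each?"),
    PromptEntry("industry-001", "industry",
                "Explain [your area of expertise]. "
                "Who are the leading experts or companies in this field?"),
]

# Assumed category list; adjust it to the dimensions that matter to you.
REQUIRED = ["brand", "competitor", "industry", "citation", "context"]
print(missing_categories(LIBRARY, REQUIRED))  # ['brand', 'context']
```

Keeping the records frozen means a prompt's wording cannot be changed in place; a reworded prompt becomes a new entry with a higher `version`, which keeps older results comparable.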

When building your testing strategy, you need prompts that specifically target the dimensions of AI visibility that matter most to your business: brand mentions, citation accuracy, and contextual understanding. The most effective test prompts mirror real user behavior while isolating the specific variable you want to measure. For brand mentions, write variations that cover direct searches, indirect references, and comparative contexts. For citation accuracy, write prompts that naturally trigger citations, then variations that check whether the AI system correctly attributes information to your sources. Here are example prompts you can adapt for your own testing:

"What are the latest developments in [your industry]? Please cite your sources."

"Compare [your product] with [competitor product]. What are the key differences and advantages of each?"

"Explain [your area of expertise]. Who are the leading experts or companies in this field?"

These prompts are designed to naturally trigger the types of responses where your visibility matters most—when AI systems are providing information, making comparisons, or identifying authoritative sources. You can create variations by changing specificity levels (broad vs. narrow), adding constraints (recent information only, specific use cases), or modifying the citation requirements (with sources, with links, with author names). The key is testing not just whether your content appears, but how it appears and in what context, which requires prompts that create realistic scenarios where your visibility would naturally be demonstrated.
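The variation approach described above can be sketched as a small generator that combines one base prompt with each constraint and each citation requirement. The wording of the suffixes, the variant names, and the sample industry are all assumptions chosen for illustration.

```python
from itertools import product

BASE = "What are the latest developments in {industry}?"

# Illustrative suffixes; the exact wording is an assumption.
CONSTRAINTS = {
    "any": "",
    "recent": " Only include recent information.",
}
CITATIONS = {
    "sources": " Please cite your sources.",
    "links": " Please include links to your sources.",
    "authors": " Please name the authors of your sources.",
}


def build_variants(base, industry):
    """Return {variant_name: prompt_text} for every constraint/citation pair."""
    variants = {}
    for (c_name, c_text), (s_name, s_text) in product(
        CONSTRAINTS.items(), CITATIONS.items()
    ):
        name = f"{c_name}-{s_name}"
        variants[name] = base.format(industry=industry) + c_text + s_text
    return variants


# "project management software" is a placeholder industry.
variants = build_variants(BASE, "project management software")
for name, text in variants.items():
    print(f"{name}: {text}")
```

Naming each variant explicitly (for example `recent-links`) makes it possible to log results per variant rather than retyping slightly different wording in each testing round.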

Your AI visibility strategy must account for the fact that different AI platforms (ChatGPT, Perplexity, Google AI Overviews, Claude, and others) differ in training data, retrieval mechanisms, and response generation, so your visibility can vary significantly from one to the next. Cross-platform testing is essential because a prompt that surfaces your content prominently in one AI system might produce completely different results in another, and understanding these platform-specific patterns is crucial for developing a comprehensive visibility strategy. When testing across platforms, you may notice that Perplexity, with its real-time search integration, often surfaces more recent content and includes more explicit citations than ChatGPT does when it answers from training data with a knowledge cutoff rather than from a live web search. Google AI Overviews, integrated directly into search results, operate under different visibility rules and may prioritize different sources than conversational AI systems. The practical approach is to maintain a consistent set of core prompts that you run across all platforms on a regular schedule, allowing you to track not just whether your content appears, but where it appears and how platform differences affect your visibility. This cross-platform perspective reveals whether your visibility challenges are universal (affecting all platforms) or platform-specific (requiring targeted strategies for particular systems), which fundamentally changes how you should approach optimization.
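Once the same core prompts have been run everywhere, the universal versus platform-specific distinction can be checked mechanically. The following is a minimal sketch; the function name, the labels, and the sample results are hypothetical, and the yes/no values would come from your own manual review.

```python
def classify_visibility(appearances):
    """Classify one prompt's results across platforms.

    appearances maps a platform name to True/False (did your content appear?).
    Returns (label, platforms_where_missing).
    """
    missing = sorted(name for name, seen in appearances.items() if not seen)
    if not missing:
        label = "visible everywhere"
    elif len(missing) == len(appearances):
        label = "universal gap"
    else:
        label = "platform-specific gap"
    return label, missing


# Hypothetical results for three core prompts on three platforms.
results = {
    "citation-001": {"ChatGPT": False, "Perplexity": True, "Google AI Overviews": True},
    "competitor-001": {"ChatGPT": False, "Perplexity": False, "Google AI Overviews": False},
    "industry-001": {"ChatGPT": True, "Perplexity": True, "Google AI Overviews": True},
}

for prompt_id, appearances in results.items():
    label, missing = classify_visibility(appearances)
    print(prompt_id, label, missing)
```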

Effective test documentation transforms raw testing data into actionable intelligence, and the organization system you choose will determine whether your testing efforts compound in value over time or remain isolated data points. The most practical approach is to use a structured spreadsheet or documentation system that captures not just the results, but the context—including the exact prompt used, the date tested, the platform tested, the full AI response, and your analysis of whether your content appeared and how it was presented. Your result tracking system should include columns for: prompt category (brand, competitor, industry, etc.), platform tested, whether your content appeared, citation accuracy (if applicable), position in response (first mention, supporting evidence, etc.), and any qualitative notes about the response quality or relevance. Beyond the raw data, create templates for common testing scenarios so that your documentation remains consistent over time, making it easier to spot trends and changes in AI behavior. Document not just successes but also failures and anomalies—these often reveal the most important insights about how AI systems work and where your visibility strategy needs adjustment. By maintaining this disciplined approach to testing methodology, you create a historical record that shows how your visibility has evolved, which changes in AI systems have affected your results, and which optimization efforts have actually moved the needle.
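If a spreadsheet is the tracking system, the columns listed above can be fixed in code so that every tester logs the same fields. This is a sketch under assumptions: the column names are one possible spelling of the fields described in the text, and the sample row is invented.

```python
import csv
import io

# Assumed column names, derived from the fields described in the text.
FIELDS = [
    "date_tested", "platform", "prompt_category", "prompt",
    "content_appeared", "citation_accuracy", "position", "notes",
    "full_response",
]


def log_result(stream, row, write_header=False):
    """Append one test result as a CSV row.

    csv.DictWriter raises ValueError for keys outside FIELDS, which keeps
    column names consistent across testers and testing rounds.
    """
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    writer.writerow(row)


# In practice `stream` would be a file opened with newline="";
# StringIO keeps this example self-contained.
buffer = io.StringIO(newline="")
log_result(buffer, {
    "date_tested": "2025-01-15",          # invented sample values
    "platform": "Perplexity",
    "prompt_category": "competitor",
    "prompt": "Compare [your product] with [competitor product].",
    "content_appeared": "yes",
    "citation_accuracy": "accurate",
    "position": "first mention",
    "notes": "Cited the pricing page.",
    "full_response": "(full response text pasted here)",
}, write_header=True)
print(buffer.getvalue())
```

Storing the full response alongside the summary columns means a later reviewer can re-check a judgment without rerunning the prompt.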

Manual AI visibility testing is vulnerable to several systematic errors that can undermine your results and lead to incorrect conclusions about your actual visibility. The most common pitfall is inconsistent prompt phrasing—testing the same concept with slightly different wording each time, which introduces variables that make it impossible to track whether changes in results are due to AI system changes or your testing variations. To avoid this, create a master prompt document where each prompt is locked in and used identically across all testing rounds; if you want to test variations, create separate prompt variants with clear naming conventions. Another critical mistake is insufficient sample sizes and frequency—testing once and drawing conclusions, rather than recognizing that AI responses can vary based on timing, system load, and other factors. Establish a regular testing cadence (weekly, bi-weekly, or monthly depending on your resources) and test each prompt multiple times to identify patterns rather than anomalies. Confirmation bias is a subtle but dangerous pitfall where testers unconsciously interpret ambiguous results as confirming their expectations; combat this by having multiple team members review results independently and documenting objective criteria for what counts as “your content appearing.” Additionally, avoid the mistake of testing in isolation—always note the date, time, and any known AI system updates when testing, because understanding the context of your results is essential for interpreting what they mean. Finally, poor documentation of methodology means you won’t be able to replicate your testing or explain your results to stakeholders; always document exactly how you tested, what you measured, and how you interpreted results so that your testing is reproducible and defensible.
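To make the sample-size point concrete, repeated runs of the same locked prompt can be reduced to an appearance rate, with a flag for prompts that have too few runs to support a conclusion. The threshold of three runs is an arbitrary assumption for this sketch, and the run data is invented.

```python
from collections import defaultdict


def appearance_rates(runs, min_runs=3):
    """Summarize repeated test runs.

    runs: iterable of (prompt_id, appeared) pairs, one per test run.
    Returns {prompt_id: (rate, enough_runs)}, where enough_runs is False
    when a prompt has fewer than min_runs results (an assumed threshold).
    """
    counts = defaultdict(lambda: [0, 0])  # [appearances, total runs]
    for prompt_id, appeared in runs:
        counts[prompt_id][0] += int(appeared)
        counts[prompt_id][1] += 1
    return {
        prompt_id: (hits / total, total >= min_runs)
        for prompt_id, (hits, total) in counts.items()
    }


# Invented sample: four runs of one prompt, a single run of another.
runs = [
    ("brand-001", True),
    ("brand-001", False),
    ("brand-001", True),
    ("brand-001", True),
    ("competitor-001", False),
]
summary = appearance_rates(runs)
print(summary["brand-001"])       # (0.75, True)
print(summary["competitor-001"])  # (0.0, False)
```

The second prompt shows why a single test is weak evidence: a rate of zero from one run says little about whether the content is absent in general.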

As your organization grows and your AI visibility strategy becomes more sophisticated, you’ll need to scale from individual, ad-hoc testing to a structured team testing approach that distributes the workload while maintaining consistency and quality. The key to successful scaling is creating clear testing protocols and role definitions—designate who owns which platforms, which prompt categories, and which analysis responsibilities, so that testing becomes a coordinated team effort rather than duplicated individual work. Implement a shared testing calendar where team members can see what’s been tested, when, and by whom, preventing redundant testing while ensuring comprehensive coverage. Create a testing checklist or runbook that any team member can follow to execute tests consistently, including specific instructions on how to document results, what to look for in responses, and how to flag anomalies or interesting findings. Collaboration tools like shared spreadsheets, project management systems, or dedicated testing platforms help coordinate efforts across team members and create a single source of truth for your testing data. As you scale, consider rotating testing responsibilities so that multiple people understand your testing methodology and can contribute, reducing dependency on any single person. Regular team sync meetings where you review testing results, discuss findings, and adjust your testing strategy based on what you’re learning ensure that your scaled testing effort remains aligned and strategic rather than becoming a disconnected set of individual tasks.

The most sophisticated AI visibility strategies recognize that manual vs automated approaches are complementary rather than competitive, with each excelling in different contexts and together providing comprehensive visibility coverage. Manual testing is your investigative tool—it’s where you explore, understand nuances, test hypotheses, and develop deep insights into how AI systems work and why your visibility varies across platforms and scenarios. Automated monitoring tools like AmICited excel at consistent, large-scale tracking over time, continuously monitoring your visibility across multiple AI platforms and alerting you to changes, trends, and anomalies that would be impossible to catch through manual testing alone. The practical integration strategy is to use manual testing to develop your testing framework, understand what matters, and investigate anomalies, while using automated monitoring to track those key metrics continuously and alert you when something changes. For example, you might manually test a new prompt category to understand how AI systems respond, then once you’ve validated that it’s a meaningful test, add it to your automated monitoring so you get continuous tracking without ongoing manual effort. AmICited and similar monitoring tools handle the repetitive, time-consuming work of running tests at scale and frequency that would be impractical to do manually, freeing your team to focus on the higher-value work of analysis, strategy, and optimization. The ideal workflow is: use manual testing to build your prompt library and testing strategy, validate your approach, and investigate specific questions; use automated monitoring to track your key visibility metrics continuously; and use the insights from automated monitoring to inform what you test manually next. 
This integrated approach ensures you’re not just testing manually in a vacuum, but building a comprehensive visibility strategy that combines the depth of manual investigation with the scale and consistency of automated monitoring.

A prompt library is a curated, organized collection of test prompts designed to systematically evaluate AI systems across multiple dimensions. Unlike a single prompt template, a library contains dozens of prompts organized by category (brand mentions, competitor comparisons, citations, etc.), allowing you to conduct comprehensive testing rather than isolated checks. Prompt libraries are versioned, documented, and designed for reuse and scaling across your team.

How often you test depends on your resources and how dynamic your industry is. Most organizations benefit from weekly or bi-weekly testing of core prompts to catch significant changes, with monthly deep-dive testing of the full prompt library. If you're in a fast-moving industry or have recently published major content, test core prompts weekly rather than bi-weekly. Combine manual testing with automated monitoring tools like AmICited for continuous tracking between manual test cycles.

Use the same core prompts across platforms to ensure consistency and comparability. You may also need platform-specific variations, because different AI systems (ChatGPT, Perplexity, Google AI Overviews) have different interfaces, citation mechanisms, and response formats. Test your core prompts identically across platforms first, then add platform-specific variations to account for the unique features or limitations of each system.

Track whether your content appears (yes/no), where it appears in the response (first mention, supporting evidence, etc.), citation accuracy (if cited), and response quality. Also document the date tested, platform, exact prompt used, and any qualitative observations about how your content was presented. Over time, these metrics reveal patterns in your visibility and help you understand which optimization efforts actually move the needle.

Your testing is comprehensive when you're covering all major dimensions of your visibility: direct brand searches, competitor comparisons, industry topics, citation accuracy, and contextual understanding. A good rule of thumb is 20-30 core prompts that you test regularly, plus additional prompts for specific investigations. If you're consistently discovering new insights from manual testing, you likely need more prompts. If results are becoming predictable, your coverage is probably adequate.

For small organizations or initial testing, you can start with internal resources using the DIY approach outlined in this guide. However, as your testing scales, dedicated resources become valuable. Consider hiring or assigning someone to manage your prompt library and conduct regular testing if you have multiple products, operate in a competitive industry, or need frequent testing cycles. Alternatively, combine internal testing with automated monitoring tools like AmICited to distribute the workload.

Manual testing is investigative and flexible—you control what you test and can explore nuances and edge cases. Automated monitoring tools like AmICited run continuously, tracking your visibility across multiple AI platforms at scale and alerting you to changes. The ideal approach combines both: use manual testing to develop your testing strategy and investigate specific questions, while using automated monitoring for continuous tracking of key metrics.

You can automate the execution of prompts using APIs (OpenAI, Anthropic, etc.) to send your prompts to AI systems and capture responses programmatically. However, analysis typically remains manual because understanding context and nuance requires human judgment. You can also automate documentation and result tracking using spreadsheets or databases. The most practical approach is to use automated monitoring tools like AmICited for the repetitive work while reserving manual testing for deeper analysis and investigation.
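As a rough sketch of what automated execution could look like, the runner below takes one callable per platform and records each response for later human review. It deliberately calls no real vendor API: `clients` is a hypothetical mapping that you would fill with your own thin wrappers around whichever SDKs you use, and the brand name and fake clients are placeholders.

```python
from datetime import datetime, timezone


def run_prompts(prompts, clients, brand):
    """Send every prompt to every platform and collect raw results.

    prompts: {prompt_id: prompt_text}
    clients: {platform_name: callable(prompt_text) -> response_text}
             (hypothetical wrappers; no real API is assumed here)
    brand:   brand name to look for in each response
    """
    records = []
    for platform, ask in clients.items():
        for prompt_id, text in prompts.items():
            response = ask(text)
            records.append({
                "tested_at": datetime.now(timezone.utc).isoformat(),
                "platform": platform,
                "prompt_id": prompt_id,
                "response": response,
                # Naive first-pass check only; context and accuracy
                # still need a human reviewer.
                "brand_mentioned": brand.lower() in response.lower(),
            })
    return records


# Fake clients so the example runs without network access.
fake_clients = {
    "platform_a": lambda text: "Leading options include ExampleCo and others.",
    "platform_b": lambda text: "Several vendors offer this.",
}
prompts = {"industry-001": "Who are the leading companies in this field?"}

for record in run_prompts(prompts, fake_clients, brand="ExampleCo"):
    print(record["platform"], record["brand_mentioned"])
```

The substring check only answers whether the name appears; whether it is attributed correctly or mentioned favorably is the part that remains manual.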

While manual testing is valuable, automated monitoring ensures continuous visibility across all AI platforms. AmICited tracks how AI systems reference your brand in real-time.
