Building a Prompt Library for AI Visibility Tracking

Learn how to create and organize an effective prompt library to track your brand across ChatGPT, Perplexity, and Google AI. Step-by-step guide with best practic...

Learn how to conduct effective prompt research for AI visibility. Discover the methodology for understanding user queries in LLMs and tracking your brand across ChatGPT, Perplexity, and Google AI Overviews.

As large language models (LLMs) become increasingly integrated into how users discover information, AI visibility has emerged as a critical complement to traditional search engine optimization. While search engine visibility focuses on ranking for keywords in Google, Bing, and other search engines, AI visibility addresses how your brand, products, and content appear in responses generated by ChatGPT, Claude, Gemini, and other AI systems. Unlike traditional keywords with measurable search volumes and predictable patterns, prompts are inherently conversational, context-dependent, and often highly specific to individual user needs. Understanding which prompts surface your brand—and which ones don’t—is essential for maintaining relevance in an AI-driven information landscape. Recent data shows that over 40% of internet users now interact with LLMs weekly, with adoption accelerating across demographics and industries. Without visibility into the prompts that trigger your brand mentions or competitive positioning, you’re operating blind in a channel that increasingly influences purchasing decisions, brand perception, and customer trust.

Prompts can be systematically categorized into five distinct types, each with unique characteristics and business implications. Understanding these categories helps organizations prioritize which prompts to monitor and how to optimize for each type.

| Prompt Type | Description | Example Query | Business Impact |
|---|---|---|---|
| Direct Brand Queries | Explicit mentions of your company, product, or brand name | “What are the features of Slack?” or “How does Salesforce compare to HubSpot?” | Critical for brand control; directly influences brand perception and competitive positioning |
| Category/Solution Queries | Questions about product categories or solution types without brand mention | “What’s the best project management software?” or “How do I set up email marketing automation?” | Reveals market awareness gaps; opportunities to be included in solution comparisons |
| Problem-Solving Queries | User questions focused on solving specific problems or use cases | “How can I improve team collaboration?” or “What’s the best way to track customer interactions?” | Indicates intent-rich opportunities; shows where your solution addresses real pain points |
| Comparative Queries | Requests comparing multiple solutions or approaches | “Compare Asana vs Monday.com vs Jira” or “What’s better for startups: Shopify or WooCommerce?” | Determines competitive visibility; critical for winning consideration among alternatives |
| How-To and Educational Queries | Requests for guidance, tutorials, or explanatory content | “How do I automate my sales pipeline?” or “What is customer relationship management?” | Builds authority and trust; positions your brand as a thought leader in your space |

Each category requires different content strategies and monitoring approaches. Direct brand queries demand immediate attention to ensure accurate representation, while problem-solving queries present opportunities to demonstrate solution fit before competitors are mentioned.

Discovering the prompts that matter to your business requires a multi-faceted approach combining user research, competitive analysis, and technical monitoring. Here are seven actionable methods for identifying prompts to track:

Customer Interview Analysis: Conduct structured interviews with customers and prospects, recording the exact language they use when describing problems, solutions, and decision criteria. Transcribe these conversations and extract recurring phrases and question patterns that represent how real users think about your category. This reveals authentic, high-intent prompts that may not appear in traditional keyword research.

Support Ticket Mining: Analyze your customer support system (Zendesk, Intercom, etc.) to identify the most common questions and how customers phrase them. Support tickets represent genuine user confusion points and information needs, making them goldmines for prompt discovery. Tag and categorize these questions to identify patterns and priority areas.

Competitive Prompt Reverse Engineering: Manually test competitor names and products in ChatGPT, Claude, and Gemini, documenting how they appear in responses and which prompts surface them prominently. This reveals the competitive landscape and shows which prompts you’re currently losing. Document the exact positioning language used in AI responses about competitors.

Social Listening and Community Monitoring: Monitor Reddit, Twitter, Discord, Slack communities, and industry forums where your target audience discusses problems and solutions. Extract the exact language users employ when asking questions or describing needs. These communities often contain unfiltered, authentic prompts that represent genuine user intent.

Search Query Expansion: Use traditional SEO tools (SEMrush, Ahrefs, Moz) to identify high-volume search queries in your category, then convert these into conversational prompts. For example, the search query “best CRM for small business” becomes the prompt “What’s the best CRM for small businesses?” This bridges your existing keyword research into the AI visibility space.

LLM-Native Prompt Testing: Systematically test variations of prompts in multiple LLMs, documenting which versions surface your brand and which don’t. Test different phrasings, specificity levels, and context-setting approaches. Create a testing matrix covering your core business categories and track how response quality and brand mentions vary.

Stakeholder and Sales Team Input: Engage your sales, marketing, and product teams to document the questions prospects ask during discovery calls, the objections they raise, and the language they use to describe problems. Sales teams have direct insight into how prospects think about your solution and competitive alternatives. Compile these into a master prompt list organized by sales stage and buyer persona.
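
The testing matrix described under LLM-Native Prompt Testing can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the function names, the template strings, and the brand names "Acme CRM" and "Globex" are all hypothetical, and the sample response is placeholder text rather than real model output. Responses are assumed to be collected manually or by whatever tracking tool you already use.

```python
from itertools import product


def build_prompt_matrix(categories, templates, qualifiers):
    """Return one row per (category, template, qualifier) combination."""
    rows = []
    for category, template, qualifier in product(categories, templates, qualifiers):
        rows.append({
            "category": category,
            "prompt": template.format(category=category, qualifier=qualifier),
            "platform_results": {},  # filled in later, e.g. {"ChatGPT": True}
        })
    return rows


def mentions_brand(response_text, brand):
    """Case-insensitive substring check; a real pipeline would also match aliases."""
    return brand.lower() in response_text.lower()


matrix = build_prompt_matrix(
    categories=["CRM", "project management software"],
    templates=[
        "What's the best {category}{qualifier}?",
        "Which {category} would you recommend{qualifier}?",
    ],
    qualifiers=["", " for small businesses", " for a remote team"],
)

# Record a manually collected response (placeholder text, not real model output).
sample_response = "Popular options include Acme CRM and Globex."
matrix[0]["platform_results"]["ChatGPT"] = mentions_brand(sample_response, "acme crm")

print(len(matrix))          # 12
print(matrix[1]["prompt"])  # What's the best CRM for small businesses?
```

Keeping phrasing, specificity, and context as separate axes makes it easier to see which variation changes whether the brand appears.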

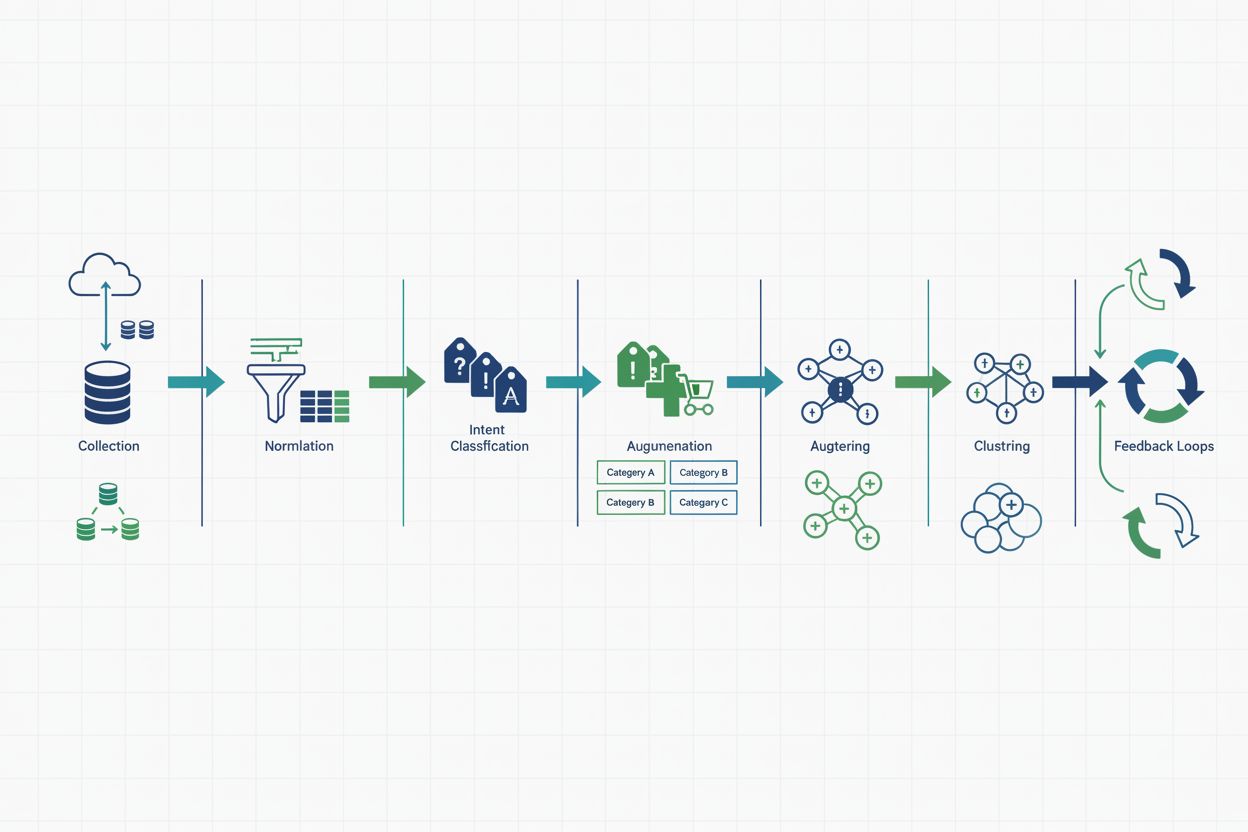

Effective prompt research requires a structured lifecycle that moves from raw query collection through actionable insights. The complete LLM Query Analysis Lifecycle consists of six interconnected stages: Collection and Governance establishes how prompts are captured, stored, and protected, ensuring compliance with privacy regulations and internal data policies. Normalization standardizes raw prompts by removing duplicates, correcting typos, and converting variations into canonical forms—for example, treating “ChatGPT,” “chat gpt,” and “openai chatgpt” as the same entity. Intent Classification assigns each prompt to one of your predefined intent categories (brand, category, problem-solving, comparative, educational) using both manual review and machine learning models. Augmentation enriches prompts with metadata including source, timestamp, user segment, LLM platform, and response quality metrics. Clustering groups similar prompts together to identify themes, emerging topics, and priority areas for optimization. Finally, Feedback Loops connect insights back to product, content, and marketing teams, enabling continuous improvement and measurement of impact. This lifecycle transforms raw prompt data into strategic intelligence that drives business decisions.
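
As a rough sketch of the Normalization and Intent Classification stages, the following Python uses a small alias table and ordered regular-expression rules. The alias table, the rule patterns, and the "needs-review" fallback label are assumptions made for illustration; a production system would likely combine rules like these with manual review and a trained classifier, as described above.

```python
import re

# Hypothetical alias table: maps spelling variants to one canonical entity name.
ALIASES = {"openai chatgpt": "chatgpt", "chat gpt": "chatgpt"}

# Ordered, illustrative rules; the first matching rule wins.
INTENT_RULES = [
    ("comparative", re.compile(r"\b(vs|versus|compare|better than)\b")),
    ("educational", re.compile(r"^(how do i|how to|what is)\b")),
    ("problem-solving", re.compile(r"^how can i\b")),
    ("category", re.compile(r"\bbest\b")),
]


def normalize(prompt):
    """Lowercase, collapse whitespace, and rewrite known aliases."""
    text = re.sub(r"\s+", " ", prompt.strip().lower())
    # Apply longer aliases first so "openai chatgpt" is replaced as a whole.
    for alias in sorted(ALIASES, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(alias)}\b", ALIASES[alias], text)
    return text


def dedupe(prompts):
    """Return unique normalized prompts in first-seen order."""
    return list(dict.fromkeys(normalize(p) for p in prompts))


def classify(prompt, brands=()):
    """Assign an intent label; anything no rule covers is left for manual review."""
    text = normalize(prompt)
    for intent, pattern in INTENT_RULES:
        if pattern.search(text):
            return intent
    if any(re.search(rf"\b{re.escape(b.lower())}\b", text) for b in brands):
        return "brand"
    return "needs-review"


print(dedupe(["Is ChatGPT good?", "is chat gpt good?", "Is OpenAI ChatGPT  good?"]))
print(classify("Compare Asana vs Monday.com vs Jira"))
print(classify("What are the features of Slack?", brands=["Slack"]))
```

Rule order matters here: a prompt that names a brand and also asks for a comparison is labeled comparative, which is one possible policy rather than the only reasonable one.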

An intent taxonomy is a structured framework that categorizes prompts based on the underlying user need or goal they represent. Beyond the five prompt types, intent taxonomy adds another layer of granularity by classifying the business outcome each prompt represents. For example, a prompt like “How do I choose between Salesforce and HubSpot?” could be classified as having comparison intent (prompt type) with purchase intent (business outcome), indicating a high-value opportunity to influence a buying decision. Other intent classifications include awareness intent (user is learning about a category), troubleshooting intent (user has a problem to solve), validation intent (user is confirming a decision), and expansion intent (existing customer exploring additional features). Building a comprehensive intent taxonomy requires collaboration between marketing, sales, product, and customer success teams, each bringing unique perspectives on what prompts matter most. The taxonomy becomes the foundation for prioritization—high-intent prompts (those indicating purchase readiness or problem-solving urgency) warrant immediate attention and optimization, while awareness-stage prompts may require different content strategies. Organizations that implement intent taxonomy see 30-40% improvements in their ability to prioritize optimization efforts and measure the business impact of prompt research initiatives.
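
One way to represent this two-layer taxonomy in code is a small record that carries both the prompt type and the business outcome, with a priority derived from the outcome. The sketch below is illustrative only: the class name, the field names, and the numeric tiers are assumptions. In particular, placing validation and expansion intent in a middle tier is a guess; the text above only says that purchase and urgent problem-solving prompts come first.

```python
from dataclasses import dataclass

# Assumed priority tiers (1 = act first). Only the top tier follows directly
# from the text; the rest is a placeholder to adapt to your own taxonomy.
OUTCOME_PRIORITY = {
    "purchase": 1,
    "troubleshooting": 1,
    "validation": 2,
    "expansion": 2,
    "awareness": 3,
}


@dataclass(frozen=True)
class TaggedPrompt:
    text: str
    prompt_type: str  # e.g. "brand", "category", "comparative"
    outcome: str      # key of OUTCOME_PRIORITY

    @property
    def priority(self):
        return OUTCOME_PRIORITY[self.outcome]


def prioritize(prompts):
    """Sort by priority tier; ties keep their original order (sorted is stable)."""
    return sorted(prompts, key=lambda p: p.priority)


backlog = [
    TaggedPrompt("What is customer relationship management?", "educational", "awareness"),
    TaggedPrompt("How do I choose between Salesforce and HubSpot?", "comparative", "purchase"),
    TaggedPrompt("Did I pick the right CRM for a ten-person team?", "brand", "validation"),
]

for item in prioritize(backlog):
    print(item.priority, item.text)
```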

Prompt research reveals distinct opportunities and challenges across different industries, each with unique competitive dynamics and user behaviors. In e-commerce, prompts like “What’s the best laptop for video editing under $1500?” or “How do I choose between Nike and Adidas running shoes?” directly influence purchase decisions; brands that appear in these comparative prompts see measurable increases in traffic and conversion rates. SaaS companies benefit from tracking problem-solving prompts like “How do I automate my email marketing?” or “What’s the best way to manage remote team projects?”; appearing in these responses positions your solution as a natural answer to customer pain points. Customer support organizations use prompt research to identify the most common questions users ask LLMs before contacting support, enabling proactive content creation that reduces support volume; for example, if “How do I reset my password?” is a frequent prompt, creating clear documentation ensures users find answers in AI responses. Regulated industries (finance, healthcare, legal) must monitor prompts to ensure AI systems provide accurate, compliant information about their services; a bank might discover that prompts about mortgage rates are returning outdated information, requiring immediate outreach to LLM providers. Marketing and SEO agencies use prompt research to identify emerging content opportunities and competitive gaps; tracking prompts reveals which topics are gaining traction in AI conversations before they become mainstream search trends. Across all industries, prompt research transforms from a monitoring exercise into a strategic advantage when organizations systematically track, analyze, and act on the insights they discover.

Implementing effective prompt research at scale requires a technical architecture designed to collect, process, and analyze queries efficiently. The architecture typically includes four core components. Event Collection systems capture prompts from multiple sources (customer interactions, support tickets, social listening, manual testing) and stream them into a centralized data pipeline. A Data Warehouse (Snowflake, BigQuery, Redshift) stores normalized prompts with rich metadata including source, timestamp, user segment, LLM platform, and response characteristics. Batch Processing jobs run nightly or weekly to perform intent classification, clustering, and trend analysis using both rule-based systems and machine learning models. Real-Time Classification systems flag high-priority prompts (competitive threats, brand mentions, critical problems) immediately, enabling rapid response. Key metrics for monitoring include Brand Mention Rate (percentage of category prompts that mention your brand), Intent Distribution (breakdown of prompts by intent type), Competitive Positioning (how often your brand appears relative to competitors in comparative prompts), Emerging Topics (new prompts gaining traction), and Response Quality (accuracy and relevance of AI responses mentioning your brand). Dashboards should surface these metrics by business unit, product line, and customer segment, enabling stakeholders to identify opportunities and track progress toward visibility goals.
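
Three of the metrics above (Brand Mention Rate, Intent Distribution, and Competitive Positioning) reduce to simple counting once each tracked response is stored with its intent label and the brands it mentions. The following is a minimal sketch under that assumption. The record layout, the function names, and the brand names "Acme", "Globex", and "Initech" are hypothetical, and the sample data is invented purely to show the arithmetic.

```python
from collections import Counter


def brand_mention_rate(records, brand):
    """Share of category prompts whose response mentions the brand."""
    category = [r for r in records if r["intent"] == "category"]
    if not category:
        return 0.0
    return sum(brand in r["brands"] for r in category) / len(category)


def intent_distribution(records):
    """Fraction of tracked prompts per intent label."""
    counts = Counter(r["intent"] for r in records)
    total = sum(counts.values())
    return {intent: n / total for intent, n in counts.items()}


def competitive_positioning(records, brands):
    """Share of comparative prompts in which each listed brand appears."""
    comparative = [r for r in records if r["intent"] == "comparative"]
    total = len(comparative)
    counts = Counter(b for r in comparative for b in set(r["brands"]))
    return {b: (counts[b] / total if total else 0.0) for b in brands}


# Invented sample data: one record per tracked prompt/response pair.
records = [
    {"intent": "category", "brands": ["Acme", "Globex"]},
    {"intent": "category", "brands": ["Globex"]},
    {"intent": "category", "brands": []},
    {"intent": "category", "brands": ["Globex"]},
    {"intent": "comparative", "brands": ["Globex"]},
    {"intent": "comparative", "brands": ["Acme", "Globex"]},
    {"intent": "comparative", "brands": ["Globex"]},
    {"intent": "comparative", "brands": []},
    {"intent": "comparative", "brands": ["Initech"]},
]

print(brand_mention_rate(records, "Acme"))                    # 0.25
print(competitive_positioning(records, ["Acme", "Globex"]))   # {'Acme': 0.2, 'Globex': 0.6}
```

In a warehouse setting the same counts would more likely be expressed as SQL aggregations; the Python version is only meant to make the definitions concrete.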

As organizations scale prompt research, protecting user privacy and maintaining ethical standards becomes paramount. Data Minimization principles dictate that you collect only the prompts necessary for your analysis, avoiding unnecessary capture of user context or personal information. When collecting prompts from customer interactions, implement PII (Personally Identifiable Information) detection and redaction to automatically remove names, email addresses, phone numbers, and other sensitive data before storage. Retention Policies should specify how long prompts are stored—many organizations adopt a 12-month retention window, deleting older data unless specific business justification exists for longer retention. Access Controls ensure that only authorized team members can view raw prompt data, with role-based permissions limiting access based on job function and need-to-know. Transparency with users is essential; if you’re collecting prompts from customer interactions, clearly communicate this in privacy policies and terms of service. Responsible Query Mining also means avoiding manipulation or gaming of LLM systems—the goal is to understand genuine user needs and optimize your presence accordingly, not to exploit system vulnerabilities or engage in prompt injection attacks. Organizations that prioritize privacy and ethics in their prompt research build stronger customer trust and reduce regulatory risk.
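
A minimal sketch of redaction and retention checks might look like the following. The regular expressions are deliberately simple assumptions that catch common email and phone formats only; they will miss some cases and cannot detect personal names, which generally require a dedicated PII or named-entity detection tool. The 365-day default is an approximation of the 12-month window mentioned above.

```python
import re
from datetime import datetime, timedelta, timezone

# Simplified patterns for illustration; not exhaustive.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


def is_expired(stored_at, now, retention_days=365):
    """True when a stored prompt is older than the retention window."""
    return now - stored_at > timedelta(days=retention_days)


raw = "Email jane.doe@example.com or call +1 (555) 010-9999 about my order"
print(redact(raw))
# Email [EMAIL] or call [PHONE] about my order

stored = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(is_expired(stored, now=datetime(2025, 1, 2, tzinfo=timezone.utc)))  # True
```

Running redaction before storage, rather than at query time, keeps raw personal data out of the warehouse entirely.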

Discovering prompts is only valuable if insights translate into concrete business actions and measurable impact. Closing Feedback Loops means establishing clear processes for how prompt research findings reach decision-makers and drive changes: when analysis reveals that a competitor is mentioned in 60% of comparative prompts while your brand appears in only 20%, this insight should trigger content creation, product positioning, or sales enablement initiatives. Cross-Functional Alignment requires regular communication between marketing, product, sales, and customer success teams; monthly or quarterly reviews of prompt research findings ensure that insights inform strategy across the organization. Measuring Impact involves tracking leading indicators (brand mention rate, intent distribution, response quality) and lagging indicators (traffic from AI sources, conversion rates, customer acquisition cost) to quantify the business value of prompt research investments. Start with quick wins—identify 5-10 high-priority prompts where your brand is underrepresented and create targeted content or outreach to improve visibility. Establish a Prompt Research Roadmap that prioritizes optimization efforts by business impact and feasibility, allocating resources to the prompts that matter most to your business. Finally, treat prompt research as an ongoing discipline, not a one-time project; as LLMs evolve and user behaviors shift, your prompt tracking and optimization strategies must evolve accordingly. Organizations that embed prompt research into their core visibility strategy—alongside SEO, paid search, and social media—position themselves to thrive in an AI-driven information landscape.
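
To make the quick-win selection concrete, one possible scoring scheme multiplies the visibility gap (competitor mention rate minus your own) by impact and feasibility ratings. This formula is an assumption for illustration, not an established method; the field names, the 1 to 5 rating scale, and the sample values are hypothetical.

```python
def score(candidate):
    """Visibility gap weighted by impact and feasibility (both rated 1-5)."""
    gap = max(0.0, candidate["competitor_rate"] - candidate["own_rate"])
    return gap * candidate["impact"] * candidate["feasibility"]


def quick_wins(candidates, limit=5):
    """Return the highest-scoring prompts, skipping those with no gap."""
    ranked = sorted(candidates, key=score, reverse=True)
    return [c["prompt"] for c in ranked[:limit] if score(c) > 0]


# Invented sample values for illustration only.
candidates = [
    {"prompt": "best CRM for small businesses",
     "own_rate": 0.2, "competitor_rate": 0.6, "impact": 5, "feasibility": 4},
    {"prompt": "how to automate a sales pipeline",
     "own_rate": 0.5, "competitor_rate": 0.55, "impact": 3, "feasibility": 5},
    {"prompt": "what is customer relationship management",
     "own_rate": 0.7, "competitor_rate": 0.4, "impact": 2, "feasibility": 5},
    {"prompt": "compare CRM tools for startups",
     "own_rate": 0.1, "competitor_rate": 0.5, "impact": 4, "feasibility": 2},
]

for prompt in quick_wins(candidates, limit=2):
    print(prompt)
```

Any weighting that your marketing, product, and sales teams agree on can replace the product used here; the point is to make the prioritization explicit and repeatable.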

Keyword research focuses on search volume and ranking difficulty for terms used in search engines, while prompt research examines the conversational, context-dependent queries users submit to LLMs. Prompts are typically longer, more specific, and don't have measurable search volumes. Prompt research requires understanding user intent in AI conversations rather than optimizing for search engine algorithms.

Review and update your prompt tracking list quarterly as user behaviors and LLM capabilities evolve. However, monitor real-time metrics weekly to catch emerging trends or competitive threats. Start with 20-30 core prompts and expand based on performance data and business priorities.

Start with ChatGPT (largest user base), Perplexity (AI-native search), and Google AI Overviews (integrated into search). Then expand to Claude, Gemini, and other emerging platforms based on your audience demographics and industry. Different platforms may surface your brand differently, so comprehensive monitoring across multiple platforms is ideal.

Track leading indicators like brand mention rate, visibility score, and competitive positioning in AI responses. Measure lagging indicators including traffic from AI sources, conversion rates from AI-referred visitors, and customer acquisition cost. Compare these metrics before and after optimization efforts to quantify business impact.

Tools like AmICited, LLM Pulse, and AccuRanker offer automated prompt discovery and tracking. You can also use SEO tools (SEMrush, Ahrefs) to identify search queries to convert into prompts, and leverage LLMs themselves to suggest relevant prompts for your business category.

Prompt research reveals content gaps and opportunities by showing which questions users ask LLMs about your category. Use these insights to create targeted content addressing high-intent prompts, update existing content to better answer common questions, and develop new resources for underserved topics.

AI Overviews are Google's AI-generated summaries in search results. Prompts that trigger AI Overviews indicate high-intent queries where AI visibility matters. Monitor which keywords trigger AI Overviews, then test those as prompts in other LLMs to understand your visibility across the AI landscape.

Decide whether to normalize all prompts into a single language or maintain language-specific taxonomies. Use reliable language detection, ensure your analysis tools support your key markets, and involve native speakers in periodic audits to catch cultural nuances and regional variations in how users phrase queries.

