Editorial Guidelines for AI-Optimized Content

Comprehensive guide to developing and implementing editorial guidelines for AI-generated and AI-assisted content. Learn best practices from major publishers and...

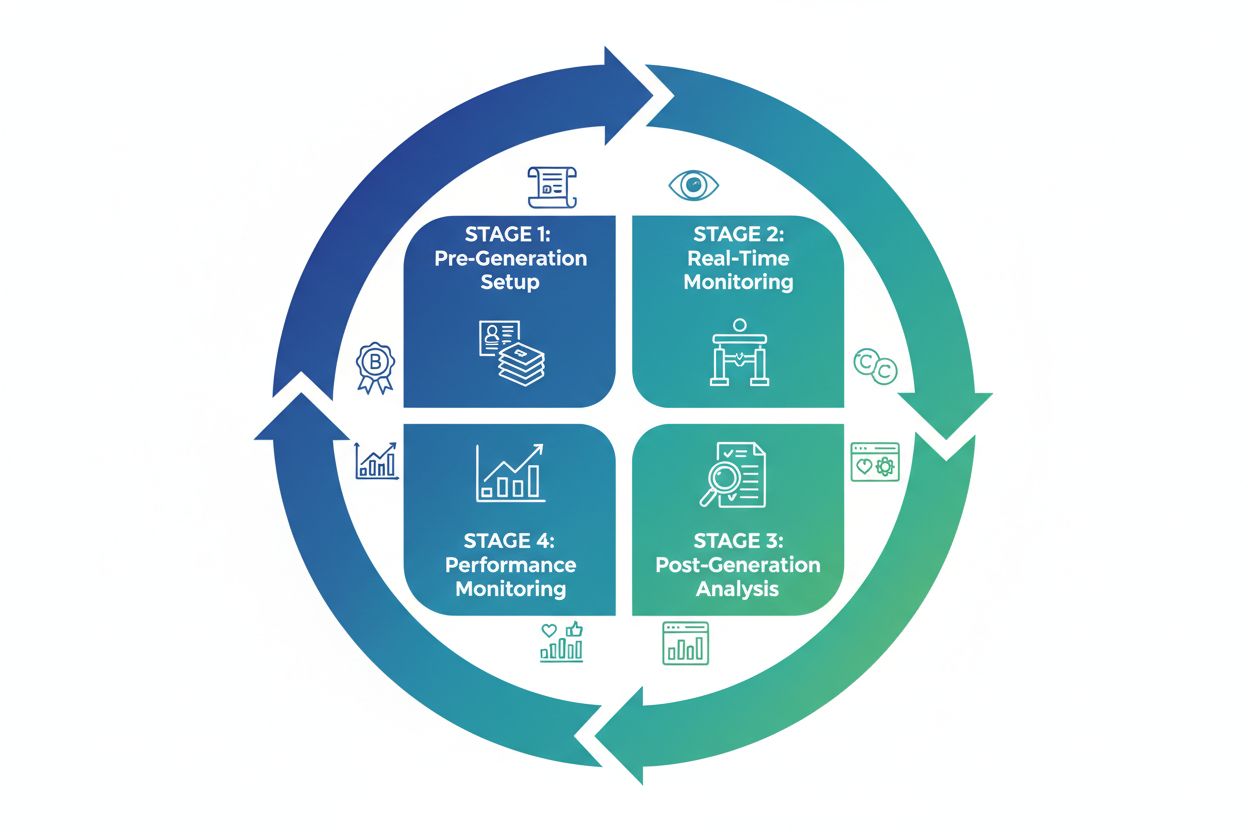

Master AI content quality control with our comprehensive 4-step framework. Learn how to ensure accuracy, brand alignment, and compliance in AI-generated content while monitoring AI visibility.

The landscape of content creation has shifted. With 50% of marketers now using artificial intelligence to generate content, the question is no longer whether to use AI but how to ensure that AI-generated content meets the quality standards your brand demands. As organizations integrate AI into their content workflows, maintaining consistent quality, accuracy, and brand alignment has become more complex. The stakes are high: poor-quality AI-generated content can damage brand reputation, mislead audiences, and undermine trust. Yet many organizations lack a structured approach to quality control designed specifically for AI-generated content. This guide sets out a framework for quality control measures that make AI-generated content not just acceptable but exceptional.

Quality control for AI-generated content differs fundamentally from traditional content QC processes. While conventional quality assurance focuses on grammar, style, and factual accuracy, AI-specific quality control must address unique challenges that emerge from how language models operate. These challenges include hallucinations (where AI generates plausible-sounding but false information), context drift (where the AI loses track of the original intent or topic), plagiarism concerns, and inherent biases that may be embedded in training data. Understanding these AI-specific quality factors is essential for developing an effective QC strategy. The definition of quality for AI-ready content encompasses not just what is produced, but how it’s produced, monitored, and validated throughout the entire content lifecycle.

| Quality Factor | Traditional Content | AI-Generated Content | Key Difference |
|---|---|---|---|
| Accuracy | Fact-checking by human reviewers | Requires verification against authoritative sources | AI may confidently state false information |
| Consistency | Brand voice guidelines | Brand voice + context preservation | AI may drift from established tone |
| Originality | Plagiarism detection tools | Plagiarism + hallucination detection | AI may inadvertently reproduce training data |
| Bias | Editorial review | Algorithmic bias detection | Biases embedded in training data |
| Explainability | Content source documentation | Model decision transparency | Understanding why AI made specific choices |
| Compliance | Legal and regulatory review | Compliance + responsible AI framework | Industry-specific AI governance requirements |

The most effective approach to quality control for AI-generated content follows a structured, four-step validation system that addresses quality at every stage of the content lifecycle. This framework—encompassing pre-generation setup, real-time monitoring, post-generation analysis, and performance monitoring—creates multiple checkpoints where quality issues can be identified and corrected. Rather than treating quality control as a final step before publication, this approach embeds quality assurance throughout the entire process. By implementing this comprehensive framework, organizations can catch issues early, reduce the need for extensive revisions, and maintain consistent quality across all AI-generated content. The framework is designed to be scalable, allowing teams to apply it across different content types, channels, and use cases.
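One way to picture the checkpoints is as a sequence of stage functions, each of which records issues against a draft and stops it before later, more expensive stages run. This is an illustration only: the `Draft` structure, the stage names, and the toy checks inside each stage are hypothetical, the generation step itself is omitted, and performance monitoring is left out because it happens after publication rather than acting as a gate.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Draft:
    """A piece of content moving through the QC pipeline (hypothetical structure)."""
    brief: str
    text: str = ""
    issues: list[str] = field(default_factory=list)


# Each stage inspects the draft and returns a list of issue descriptions.
Stage = Callable[[Draft], list[str]]


def pre_generation(draft: Draft) -> list[str]:
    # Toy check: refuse to proceed without a written brief.
    return [] if draft.brief.strip() else ["missing brief"]


def real_time(draft: Draft) -> list[str]:
    # Toy check: flag empty output.
    return [] if draft.text.strip() else ["no text generated"]


def post_generation(draft: Draft) -> list[str]:
    # Toy check: an unresolved placeholder means the draft is not ready.
    return ["unresolved placeholder"] if "TODO" in draft.text else []


def run_pipeline(draft: Draft, stages: list[tuple[str, Stage]]) -> str:
    """Run stages in order and stop at the first one that reports issues.

    Returns the name of the failing stage, or "ready to publish".
    """
    for name, stage in stages:
        found = stage(draft)
        if found:
            draft.issues.extend(f"{name}: {issue}" for issue in found)
            return name
    return "ready to publish"


STAGES = [
    ("pre-generation", pre_generation),
    ("real-time", real_time),
    ("post-generation", post_generation),
]
```

Stopping at the first failing stage reflects the idea of catching issues early: a draft with no brief never reaches the reviewers.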

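Before a single word of AI-generated content is created, the groundwork for quality must be established. Pre-generation setup means defining the parameters, guidelines, and expectations that will guide the AI model's output: documenting brand voice and tone, stating the topic and intent of each piece, setting the quality standards the output will be reviewed against, and noting any industry-specific compliance requirements.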

These foundational elements act as guardrails that significantly improve the quality of AI-generated content from the outset. By investing time in pre-generation setup, teams reduce downstream quality issues and create a more efficient review process. The clearer and more detailed your pre-generation guidelines, the better the AI model can understand and meet your quality expectations.
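A minimal sketch of encoding pre-generation guardrails so they are applied identically to every request. The `Guidelines` fields and the wording of the rendered preamble are illustrative assumptions, not a format that any particular model or tool requires.

```python
from dataclasses import dataclass, field


@dataclass
class Guidelines:
    """Hypothetical container for pre-generation guardrails."""
    audience: str
    tone: str
    required_terms: list[str] = field(default_factory=list)
    banned_terms: list[str] = field(default_factory=list)
    compliance_notes: list[str] = field(default_factory=list)


def build_preamble(g: Guidelines) -> str:
    """Render the guidelines as plain-text instructions to prepend to a prompt."""
    lines = [
        f"Audience: {g.audience}",
        f"Tone: {g.tone}",
    ]
    if g.required_terms:
        lines.append("Always use these terms: " + ", ".join(g.required_terms))
    if g.banned_terms:
        lines.append("Never use these terms: " + ", ".join(g.banned_terms))
    for note in g.compliance_notes:
        lines.append(f"Compliance: {note}")
    # Asking the model to admit uncertainty is one (imperfect) hedge against hallucination.
    lines.append("If you are unsure of a fact, say so instead of guessing.")
    return "\n".join(lines)
```

Keeping the guidelines as data rather than free text means later stages can reuse the same lists, for example to check the output for banned terms.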

Real-time monitoring is the second phase of the quality control framework, where issues are identified and addressed while content is being generated. This proactive approach keeps poor-quality content from progressing through the workflow. Typical real-time checks look for plagiarism, tone that drifts from the brand voice, and readability problems.

Modern AI quality assurance tools can perform these checks in real time, giving content creators immediate feedback and allowing quick adjustments before content moves to the next stage. This is more efficient than discovering the same issues during post-generation review, because course correction happens while the content is still being refined. Real-time monitoring turns quality control from a reactive process into a proactive one.
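To show what checking "as content is being generated" can mean, here is a sketch that consumes text in streamed chunks and yields warnings as soon as a problem appears. The banned-term list and the 35-word sentence limit are hypothetical; real tools check far more, including tone and factual claims.

```python
import re
from typing import Iterable, Iterator


def monitor_stream(
    chunks: Iterable[str],
    banned_terms: list[str],
    max_sentence_words: int = 35,
) -> Iterator[str]:
    """Yield warnings as streamed text arrives, reporting each issue once.

    The whole buffer is re-scanned on every chunk so that a term split across
    two chunks is still caught. That is quadratic in the worst case, which is
    acceptable for article-length text in a sketch.
    """
    buffer = ""
    reported: set[str] = set()
    for chunk in chunks:
        buffer += chunk
        lowered = buffer.lower()
        for term in banned_terms:
            key = f"banned:{term}"
            if key not in reported and term.lower() in lowered:
                reported.add(key)
                yield f"banned term used: {term!r}"
        # Only judge sentences that have ended with ., ! or ?.
        finished = re.findall(r"[^.!?]*[.!?]", buffer)
        for i, sentence in enumerate(finished):
            key = f"long:{i}"
            if key not in reported and len(sentence.split()) > max_sentence_words:
                reported.add(key)
                yield f"sentence {i + 1} has {len(sentence.split())} words"
```

Because it is a generator, a caller can surface each warning to the writer the moment it is produced instead of waiting for the full draft.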

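After content has been generated, a thorough post-generation analysis confirms that all quality standards have been met before publication. This phase combines detailed human review with automated verification tools: checking facts against authoritative sources, looking for hallucinated claims and embedded bias, and confirming that the content matches the brand voice and meets any compliance requirements.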

The post-generation phase is where human expertise becomes indispensable. While automated tools can flag potential issues, human reviewers bring contextual understanding, industry knowledge, and judgment that machines cannot replicate. This combination of automated detection and human review creates a robust quality assurance process that catches issues that either approach alone might miss. The goal is not to achieve perfection, but to ensure that published content meets your organization’s quality standards and represents your brand appropriately.
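As an example of the automated side of this phase, the sketch below uses word shingles (overlapping n-word sequences), a common near-duplicate detection technique, to flag drafts that reuse too much wording from known sources so a human can take a closer look. It is not a substitute for dedicated plagiarism tools; the shingle size of 5 and the 0.3 threshold are hypothetical.

```python
import re


def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Return the set of overlapping n-word sequences in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(draft: str, source: str, n: int = 5) -> float:
    """Fraction of the draft's n-word shingles that also appear in source."""
    draft_sh = shingles(draft, n)
    if not draft_sh:
        return 0.0
    return len(draft_sh & shingles(source, n)) / len(draft_sh)


def needs_human_review(draft: str, sources: list[str], threshold: float = 0.3, n: int = 5) -> bool:
    """Flag the draft if it reuses too much wording from any known source."""
    return any(overlap_ratio(draft, src, n) >= threshold for src in sources)
```

The function only routes a draft to a reviewer; deciding whether the overlap is a quotation, a common phrase, or actual copying remains a human judgment.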

The fourth phase of the quality control framework extends beyond publication to monitor how content performs in the real world. Performance monitoring provides insights that inform future quality improvements and show which quality factors actually matter to your audience. It covers engagement, audience feedback, errors discovered after publication, SEO performance, conversion rates, and brand perception.

Performance monitoring transforms quality control into a learning system where each piece of published content contributes to improving future content quality. By analyzing what works and what doesn’t, teams can refine their quality standards to focus on factors that genuinely impact audience satisfaction and business outcomes. This data-driven approach to quality improvement ensures that your QC processes evolve and improve over time.
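A minimal sketch of turning post-publication data into a comparison between AI-generated and human-written content. The `PublishedPiece` fields are hypothetical; real figures would come from your analytics platform and correction log, and two metrics stand in for the longer list above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PublishedPiece:
    """Hypothetical record for one published piece."""
    source: str              # e.g. "ai" or "human"
    corrections: int         # errors found after publication
    avg_time_on_page: float  # seconds


def summarize_by_source(pieces: list[PublishedPiece]) -> dict[str, dict[str, float]]:
    """Per source: share of pieces needing any correction, and mean time on page."""
    groups: dict[str, list[PublishedPiece]] = defaultdict(list)
    for p in pieces:
        groups[p.source].append(p)
    summary = {}
    for source, items in groups.items():
        summary[source] = {
            "error_rate": sum(1 for p in items if p.corrections > 0) / len(items),
            "mean_time_on_page": sum(p.avg_time_on_page for p in items) / len(items),
        }
    return summary
```

A persistent gap in error rate between the two groups points to the stage of the framework that needs tightening; small samples, however, should be read with caution.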

Quality standards for AI-generated content are not one-size-fits-all; they vary significantly depending on industry, regulatory environment, and organizational context. Different sectors face unique quality challenges and compliance requirements that must be integrated into the QC framework. Healthcare and pharmaceutical content, for example, requires rigorous fact-checking and regulatory compliance, as inaccurate information could directly impact patient safety. Financial services content must meet strict regulatory requirements and cannot contain misleading information about investments or financial products. Legal content demands absolute accuracy and must comply with bar association rules and professional standards. Educational content must be pedagogically sound and factually accurate to serve its learning objectives. E-commerce content must accurately represent products and comply with consumer protection regulations. Each industry requires customized quality control approaches that address sector-specific risks and compliance obligations. Organizations must audit their industry’s specific requirements and build those standards into their pre-generation guidelines and review processes.
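Building sector requirements into the review process can be as simple as a lookup table of required checks per industry. In this sketch the check names, and the choice of which industries require expert sign-off, are hypothetical assumptions; they do not encode any actual regulation and would need to come from your own compliance audit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPolicy:
    """Hypothetical per-industry review requirements."""
    required_checks: tuple[str, ...]
    expert_signoff: bool


BASE_CHECKS = ("fact_check", "plagiarism", "brand_voice")

# Illustrative policies only; derive real ones from your industry's requirements.
POLICIES = {
    "healthcare": ReviewPolicy(BASE_CHECKS + ("regulatory_review",), expert_signoff=True),
    "financial_services": ReviewPolicy(BASE_CHECKS + ("regulatory_review",), expert_signoff=True),
    "legal": ReviewPolicy(BASE_CHECKS + ("professional_standards",), expert_signoff=True),
    "education": ReviewPolicy(BASE_CHECKS + ("pedagogy_review",), expert_signoff=False),
    "ecommerce": ReviewPolicy(BASE_CHECKS + ("product_accuracy",), expert_signoff=False),
}
DEFAULT_POLICY = ReviewPolicy(BASE_CHECKS, expert_signoff=False)


def missing_checks(industry: str, completed: set[str], signed_off: bool) -> list[str]:
    """List what still blocks publication for this industry."""
    policy = POLICIES.get(industry, DEFAULT_POLICY)
    missing = [c for c in policy.required_checks if c not in completed]
    if policy.expert_signoff and not signed_off:
        missing.append("expert_signoff")
    return missing
```

Unknown industries fall back to the base checks, so every piece gets at least the common review steps.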

While quality control ensures that AI-generated content meets your standards, AI visibility ensures that audiences understand when and how AI was involved in content creation. This transparency is increasingly important as audiences become more aware of AI-generated content and regulators begin requiring disclosure. AI visibility metrics—including mention rate (how often AI involvement is disclosed), representation accuracy (whether disclosures accurately describe the AI’s role), and citation share (proper attribution of sources and influences)—are becoming essential components of responsible AI content practices. AmICited.com specializes in monitoring and measuring these visibility metrics, helping organizations understand and optimize their AI disclosure practices. By integrating AI visibility monitoring into your quality control framework, you ensure not just that content is high-quality, but that audiences understand the role AI played in its creation. This transparency builds trust and demonstrates your organization’s commitment to responsible AI practices. Quality control and AI visibility work together to create a comprehensive approach to AI-generated content that is both excellent and ethical.
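Using the definitions of mention rate and representation accuracy given in this paragraph, both ratios can be computed from an internal audit log. This is a sketch under stated assumptions: the `ContentRecord` layout is hypothetical, citation share is omitted because its definition here is too broad to pin to a single formula, and this is not how AmICited.com computes its metrics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContentRecord:
    """Hypothetical audit record for one AI-assisted piece."""
    ai_role: str                  # role recorded internally, e.g. "drafting"
    disclosed: bool               # whether a disclosure was published
    disclosed_role: Optional[str] # role stated in the disclosure, if any


def disclosure_metrics(records: list[ContentRecord]) -> dict[str, float]:
    """Compute two disclosure-oriented ratios.

    mention_rate: share of AI-assisted pieces that carry a disclosure.
    representation_accuracy: share of disclosures whose stated role matches
    the role recorded internally.
    """
    if not records:
        return {"mention_rate": 0.0, "representation_accuracy": 0.0}
    disclosed = [r for r in records if r.disclosed]
    accurate = [r for r in disclosed if r.disclosed_role == r.ai_role]
    return {
        "mention_rate": len(disclosed) / len(records),
        "representation_accuracy": len(accurate) / len(disclosed) if disclosed else 0.0,
    }
```

Exact string matching on roles is the simplest possible comparison; a real audit would need an agreed vocabulary for describing what the AI did.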

Successfully implementing a comprehensive quality control system for AI-generated content requires more than just understanding the framework—it requires establishing best practices that your team can consistently execute. First, invest in training your team on AI-specific quality challenges and how to identify them; many quality issues are subtle and require educated reviewers to catch. Second, establish clear quality standards and document them thoroughly so that all team members understand expectations and can apply them consistently. Third, use a combination of automated tools and human review rather than relying on either approach exclusively; automation catches obvious issues efficiently while human judgment handles nuanced quality decisions. Fourth, create feedback loops where quality issues discovered after publication inform improvements to pre-generation guidelines and monitoring parameters. Fifth, regularly audit your quality control processes to ensure they’re working effectively and adjust them based on performance data and changing business needs. Sixth, maintain detailed documentation of quality issues, their root causes, and how they were resolved; this institutional knowledge becomes invaluable for continuous improvement. Finally, foster a culture where quality is everyone’s responsibility, not just the QC team’s; when content creators understand quality standards and take ownership of quality, the entire system works more effectively.
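The fourth and sixth practices (feedback loops and documenting root causes) can start with a plain issue log. The sketch below assumes a hypothetical `QualityIssue` record with free-text root causes and stage names; it ranks root causes by frequency and lists the issues that slipped past every gate before publication.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class QualityIssue:
    """Hypothetical log entry for one quality issue."""
    description: str
    root_cause: str  # e.g. "vague brief", "missing source data"
    caught_at: str   # stage where it was found, e.g. "post-publication"


def top_root_causes(issues: list[QualityIssue], k: int = 3) -> list[tuple[str, int]]:
    """Most frequent root causes, to decide which guideline to revise first."""
    return Counter(i.root_cause for i in issues).most_common(k)


def escaped_issues(issues: list[QualityIssue]) -> list[QualityIssue]:
    """Issues found only after publication, i.e. the ones every QC gate missed."""
    return [i for i in issues if i.caught_at == "post-publication"]
```

Escaped issues are the most useful input for the feedback loop, since each one shows a gap in pre-generation guidelines or monitoring parameters.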

As AI-generated content becomes increasingly prevalent in marketing, communications, and business operations, quality control transforms from a nice-to-have into a critical competitive advantage. Organizations that implement robust quality control frameworks will produce content that builds audience trust, protects brand reputation, and delivers better business results. The four-step framework—pre-generation setup, real-time monitoring, post-generation analysis, and performance monitoring—provides a structured approach that addresses quality at every stage of the content lifecycle. By combining this framework with industry-specific compliance requirements, AI visibility practices, and continuous improvement processes, organizations can confidently leverage AI’s efficiency while maintaining the quality standards their audiences expect. The future of content creation is not about choosing between human quality and AI efficiency; it’s about combining both to create content that is simultaneously excellent and scalable. Organizations that master this balance will lead their industries in content quality and audience trust.

The biggest challenge is that AI can generate plausible-sounding but false information (hallucinations), lose track of context, and inadvertently reproduce training data. Unlike human-written content, AI-generated content requires specific quality checks for these AI-specific issues in addition to traditional quality assurance.

Quality review should happen at multiple stages: during pre-generation setup (establishing guidelines), in real-time as content is being generated (catching issues early), immediately after generation (comprehensive analysis), and after publication (performance monitoring). This multi-stage approach is more efficient than reviewing only at the end.

Automated tools cannot replace human review. They are valuable for catching obvious issues like plagiarism, tone inconsistency, and readability problems, but human expertise is essential for contextual understanding, fact-checking, and nuanced quality decisions. The most effective approach combines automated detection with human review.

Traditional QC focuses on grammar, style, and factual accuracy. AI content QC must address additional challenges including hallucinations (false information), context drift, plagiarism concerns, embedded biases, and explainability. AI-specific QC requires different tools and expertise.

High-quality, accurate content is more likely to be cited in AI-generated answers from tools such as ChatGPT, Perplexity, and Google AI Overviews. AmICited monitors these citations and related visibility metrics, helping you understand how your content is referenced in AI-generated answers and whether it is properly attributed.

Healthcare, financial services, legal, and highly technical industries require stricter QC because of regulatory requirements and higher stakes. In the US, for example, healthcare content may fall under FDA rules on drug and device promotion and HIPAA privacy rules, financial content under SEC regulations, and legal content under bar association rules. However, all industries benefit from robust quality control.

Track metrics including: engagement rates (views, shares, time on page), audience feedback and comments, error rates (issues discovered after publication), SEO performance, conversion rates, and brand perception. Compare AI-generated content performance against human-written content to identify quality gaps.

Use a combination of tools: plagiarism detection (Copyscape, Turnitin), readability analysis (Grammarly), fact-checking platforms, brand governance systems (like Typeface or Sanity), and AI visibility monitoring (AmICited). Combine these automated tools with human expert review for comprehensive quality assurance.

