Creating AI Visibility Dashboards: Best Practices

Learn how to build effective AI visibility KPI dashboards to track brand mentions, citations, and performance across ChatGPT, Google AI Overviews, Perplexity, and more.

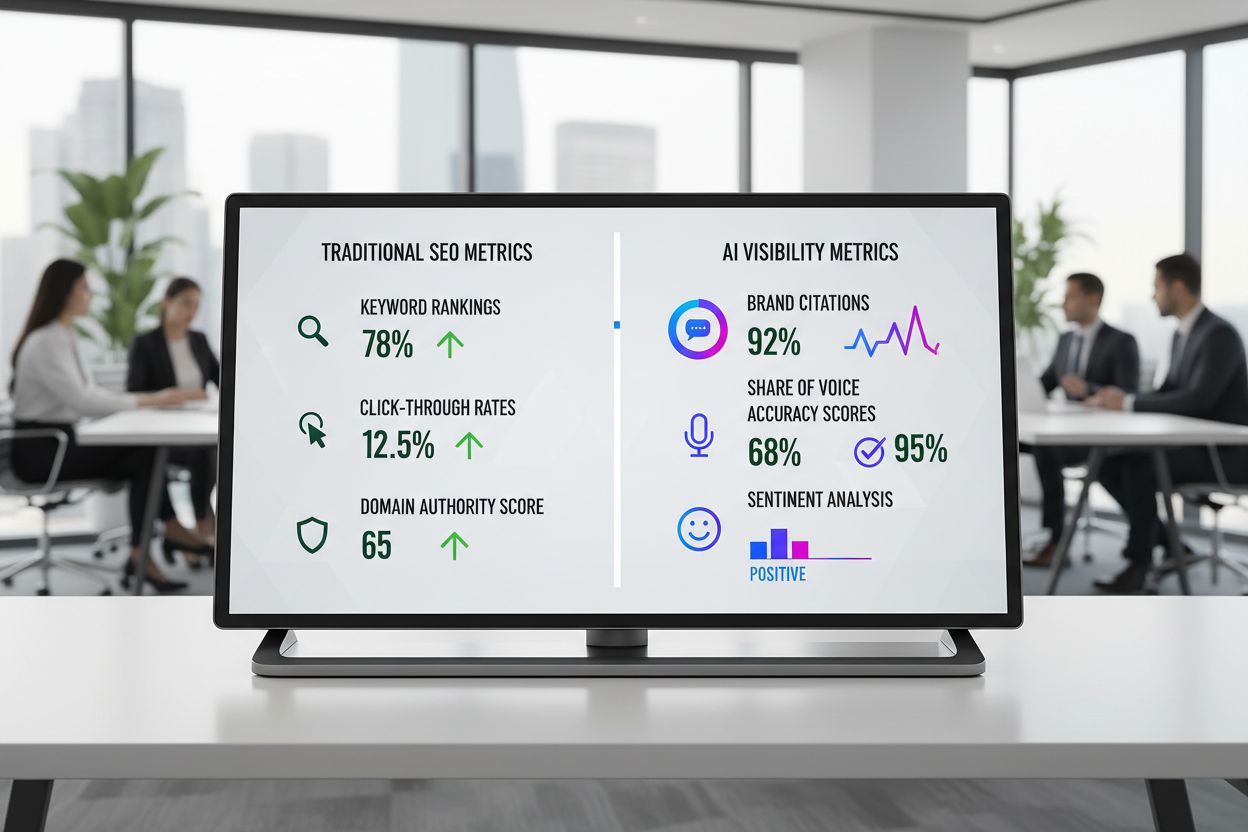

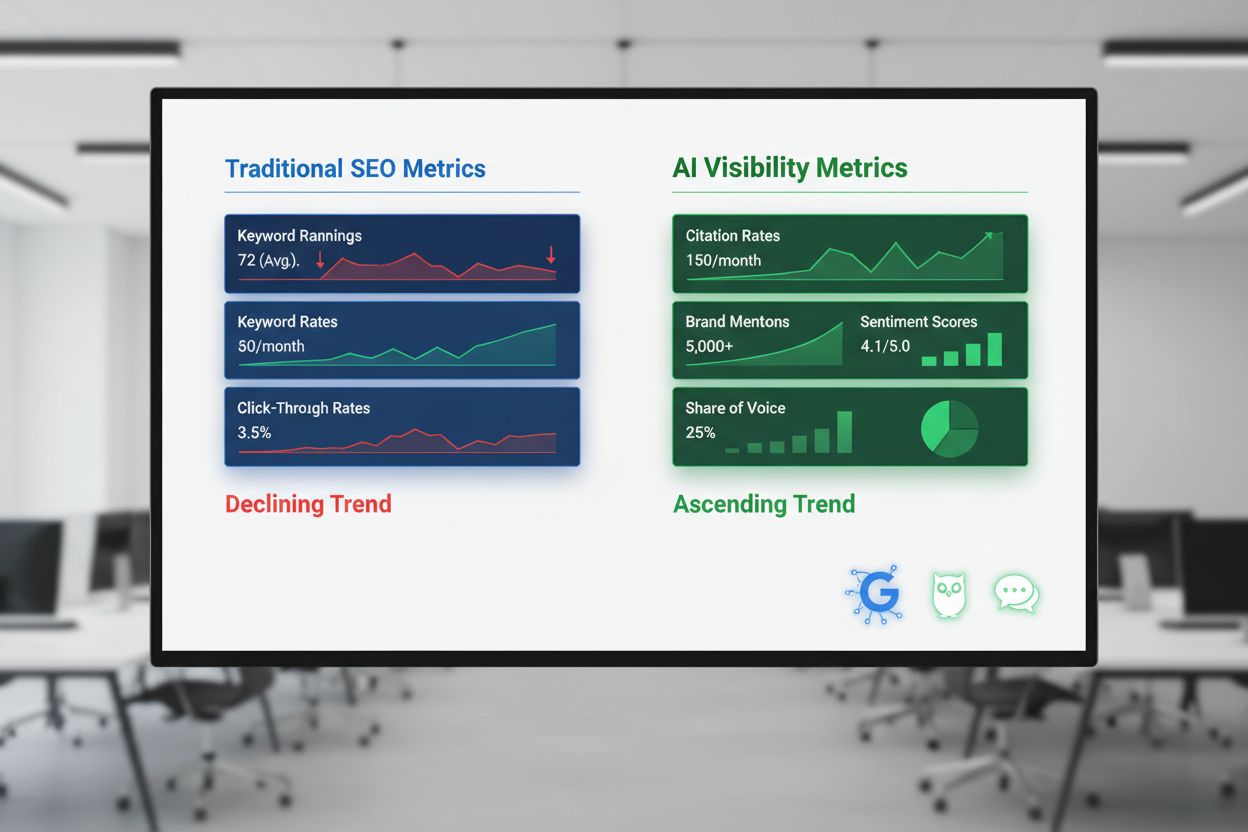

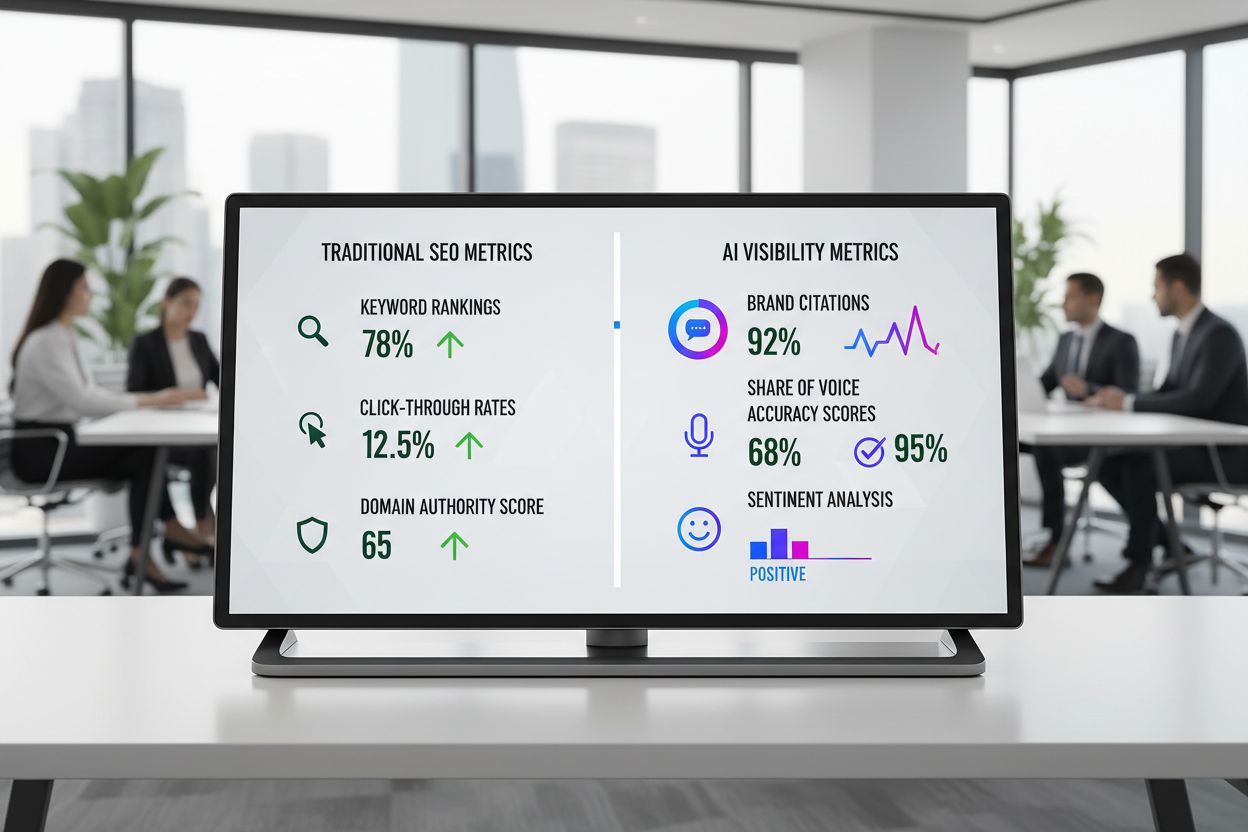

Traditional SEO dashboards were built for a different era—one where search results were dominated by blue links and click-through rates were the primary success metric. Today’s zero-click phenomenon has fundamentally changed how audiences discover information, with AI-powered platforms like ChatGPT, Google AI Overviews, and Perplexity now intercepting search intent before users ever reach your website. Legacy dashboards fail to capture brand mentions in AI-generated answers, sentiment shifts in how AI platforms present your content, or the critical distinction between appearing in search results and being cited as a trusted source. To compete in this new landscape, marketing leaders need a completely different mental model—one that tracks visibility across AI platforms, measures citation accuracy, and connects AI presence directly to business outcomes.

Five essential metrics form the foundation of any AI visibility strategy, each measuring a different dimension of how your brand and content perform across AI platforms. AI Signal Rate measures the percentage of relevant queries where your brand or content appears in AI-generated responses, calculated by dividing the number of queries where you appear by total monitored queries and benchmarking against industry averages of 15-35% for established brands. Citation Rate tracks how often your content is explicitly cited or attributed in AI answers, with healthy benchmarks ranging from 40-70% of appearances, indicating whether AI systems recognize your authority. Share of Voice compares your visibility against competitors in the same space, calculated as your AI appearances divided by total competitor appearances, with leading brands typically capturing 25-40% of voice in their category. Sentiment measures how AI platforms frame your brand—whether mentions are positive, neutral, or negative—with most brands targeting 70%+ positive sentiment in AI-generated content. Accuracy evaluates whether AI systems represent your information correctly, calculated as accurate mentions divided by total mentions, with a benchmark target of 85%+ accuracy to maintain brand integrity.

| Metric Name | Definition | How to Calculate | Industry Benchmark |
|---|---|---|---|
| AI Signal Rate | % of queries where your brand/content appears in AI answers | (Appearances / Total Monitored Queries) × 100 | 15-35% for established brands |
| Citation Rate | % of AI appearances that explicitly cite your content | (Cited Appearances / Total Appearances) × 100 | 40-70% |
| Share of Voice | Your visibility vs. competitors in AI answers | (Your Appearances / Total Competitor Appearances) × 100 | 25-40% in category |
| Sentiment | Positive/neutral/negative framing of your brand in AI responses | Manual review or NLP classification | 70%+ positive sentiment |
| Accuracy | Correctness of information presented about your brand | (Accurate Mentions / Total Mentions) × 100 | 85%+ accuracy |
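To make the formulas in the table concrete, here is a minimal Python sketch that computes the five metrics from a list of scored query results. The `QueryResult` record shape and its field names are assumptions made for illustration, not a format any tool prescribes. One interpretation to note: Share of Voice is computed here with a denominator that includes your own appearances alongside competitor appearances, so the result reads as a share of the whole; adjust it if your definition differs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QueryResult:
    """One monitored query checked on one AI platform (hypothetical record shape)."""
    query: str
    brand_appeared: bool
    cited: bool = False                # only meaningful when brand_appeared is True
    sentiment: Optional[str] = None    # "positive", "neutral", or "negative"
    accurate: Optional[bool] = None    # None when accuracy was not reviewed
    competitor_appearances: int = 0    # competitor brands mentioned in the same answer


def pct(numerator: int, denominator: int) -> float:
    """Return a percentage rounded to one decimal; 0.0 when the denominator is zero."""
    return round(100 * numerator / denominator, 1) if denominator else 0.0


def compute_metrics(results: list[QueryResult]) -> dict[str, float]:
    appearances = [r for r in results if r.brand_appeared]
    scored = [r for r in appearances if r.sentiment is not None]
    reviewed = [r for r in appearances if r.accurate is not None]
    competitor_total = sum(r.competitor_appearances for r in results)
    return {
        "ai_signal_rate": pct(len(appearances), len(results)),
        "citation_rate": pct(sum(r.cited for r in appearances), len(appearances)),
        # Assumption: the denominator counts your appearances plus competitors'.
        "share_of_voice": pct(len(appearances), len(appearances) + competitor_total),
        "positive_sentiment": pct(sum(r.sentiment == "positive" for r in scored), len(scored)),
        "accuracy": pct(sum(bool(r.accurate) for r in reviewed), len(reviewed)),
    }


sample = [
    QueryResult("best crm for startups", True, True, "positive", True, 2),
    QueryResult("crm pricing comparison", True, False, "neutral", False, 1),
    QueryResult("what is a crm", False, competitor_appearances=3),
    QueryResult("crm for nonprofits", False),
]
print(compute_metrics(sample))
```

With this sample, two of four queries show the brand (50% AI Signal Rate), one of those two appearances is cited (50% Citation Rate), and two brand appearances against six competitor appearances give a 25% Share of Voice.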

A robust data model is the backbone of any AI visibility dashboard, requiring careful architecture to handle the unique characteristics of AI-generated content. Your foundation should include fact tables that capture individual AI appearances with timestamps, platform source, query, and citation status, combined with dimension tables that store query metadata, competitor information, and content attributes. Key dimensions include query intent (problem-solving, solution-seeking, brand research, competitive comparison), platform type (Google AI Overview, ChatGPT, Perplexity, Gemini, Claude), geographic location, and content source (owned, earned, paid). This structure allows you to slice and dice visibility data across multiple dimensions while maintaining data integrity and enabling historical trend analysis. Privacy considerations are critical—ensure your data collection complies with platform terms of service and GDPR/CCPA regulations, particularly when capturing AI-generated responses that may contain user data. The most effective data models separate raw collection data from processed metrics, allowing you to recalculate benchmarks and adjust definitions as your understanding of AI visibility evolves.
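As one way to picture the fact-and-dimension layout described above, the sketch below creates a small schema with Python's built-in `sqlite3` module. All table and column names (`dim_query`, `raw_response`, `fact_appearance`, `rules_version`, and so on) are hypothetical, and a production warehouse would likely use a different engine. The point is the separation: raw responses are stored untouched, and processed appearances reference them, so metrics can be recalculated when definitions change.

```python
import sqlite3

SCHEMA = """
CREATE TABLE dim_query (
    query_id   INTEGER PRIMARY KEY,
    query_text TEXT NOT NULL,
    intent     TEXT NOT NULL CHECK (intent IN
        ('problem-solving', 'solution-seeking', 'brand research', 'competitive comparison'))
);
CREATE TABLE dim_platform (
    platform_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL UNIQUE
);
-- Raw collection data: the full response exactly as captured.
CREATE TABLE raw_response (
    response_id   INTEGER PRIMARY KEY,
    query_id      INTEGER NOT NULL REFERENCES dim_query(query_id),
    platform_id   INTEGER NOT NULL REFERENCES dim_platform(platform_id),
    captured_at   TEXT NOT NULL,   -- ISO 8601 timestamp
    region        TEXT NOT NULL,
    response_text TEXT NOT NULL
);
-- Processed data: one row per brand appearance found in a raw response.
CREATE TABLE fact_appearance (
    appearance_id  INTEGER PRIMARY KEY,
    response_id    INTEGER NOT NULL REFERENCES raw_response(response_id),
    brand          TEXT NOT NULL,
    is_cited       INTEGER NOT NULL CHECK (is_cited IN (0, 1)),
    content_source TEXT CHECK (content_source IN ('owned', 'earned', 'paid')),
    rules_version  TEXT NOT NULL   -- which classification rules produced this row
);
"""


def create_database(path: str = ":memory:") -> sqlite3.Connection:
    """Create the sketch schema and return an open connection."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default
    conn.executescript(SCHEMA)
    return conn
```

Because `fact_appearance` rows carry a `rules_version`, you can reprocess `raw_response` under new classification rules and keep both result sets for comparison.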

Implementing a reliable data collection pipeline requires a systematic, seven-step process that ensures consistent, accurate monitoring across all AI platforms you’re tracking:

1. Define your query set: typically 100-500 high-value queries that represent your core business, including branded, category, problem-solving, and competitive comparison queries.
2. Schedule automated monitoring to capture AI responses at consistent intervals (daily for critical queries, weekly for broader monitoring), so you have sufficient data for trend analysis without overwhelming your system.
3. Capture: use APIs or monitoring tools to retrieve AI-generated responses, storing both the full response and metadata about when it was captured.
4. Parse: extract structured data from responses, identifying your brand mentions, citations, sentiment indicators, and accuracy issues.
5. Classify: assign each appearance to categories (cited vs. uncited, accurate vs. inaccurate, positive vs. negative sentiment) using both automated rules and manual review for edge cases.
6. Load: transfer processed data into your data warehouse or dashboard platform, maintaining version control and audit trails.
7. Version control: document any changes to query definitions, classification rules, or metric calculations, so your historical data remains comparable and your team understands how metrics have evolved.
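The capture, parse, and classify steps of the pipeline above might be sketched as follows. This is an illustration under stated assumptions, not a reference implementation: `fetch` stands in for whatever API client or monitoring tool retrieves a response, and the brand name, domain, and `RULES_VERSION` label are hypothetical. Mentions are detected with a simple word-boundary match and citations by checking linked hostnames; sentiment and accuracy classification are left out.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable
from urllib.parse import urlparse

RULES_VERSION = "2025-01-v1"  # hypothetical label recorded with every classified row
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


@dataclass
class Capture:
    query: str
    platform: str
    response_text: str
    captured_at: str  # ISO 8601, UTC


@dataclass
class Appearance:
    query: str
    platform: str
    mentioned: bool
    cited: bool
    rules_version: str = RULES_VERSION


def capture(query: str, platform: str, fetch: Callable[[str, str], str]) -> Capture:
    """Retrieve one response and keep the full text plus capture metadata."""
    text = fetch(query, platform)
    return Capture(query, platform, text, datetime.now(timezone.utc).isoformat())


def is_own_url(url: str, domain: str) -> bool:
    """True when the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(url.rstrip(".,;")).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def parse_and_classify(cap: Capture, brand: str, domain: str) -> Appearance:
    """Parse mentions and links out of the response, then label cited vs. uncited."""
    name_found = re.search(rf"\b{re.escape(brand)}\b", cap.response_text, re.IGNORECASE)
    cited = any(is_own_url(u, domain) for u in URL_PATTERN.findall(cap.response_text))
    return Appearance(cap.query, cap.platform, bool(name_found) or cited, cited)


def run_pipeline(queries, platforms, fetch, brand, domain) -> list[Appearance]:
    """Capture and classify every query on every platform, ready for loading."""
    return [
        parse_and_classify(capture(q, p, fetch), brand, domain)
        for p in platforms
        for q in queries
    ]
```

Passing `fetch` in as a parameter keeps the sketch independent of any particular platform API and makes it easy to run against canned responses while you tune the classification rules.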

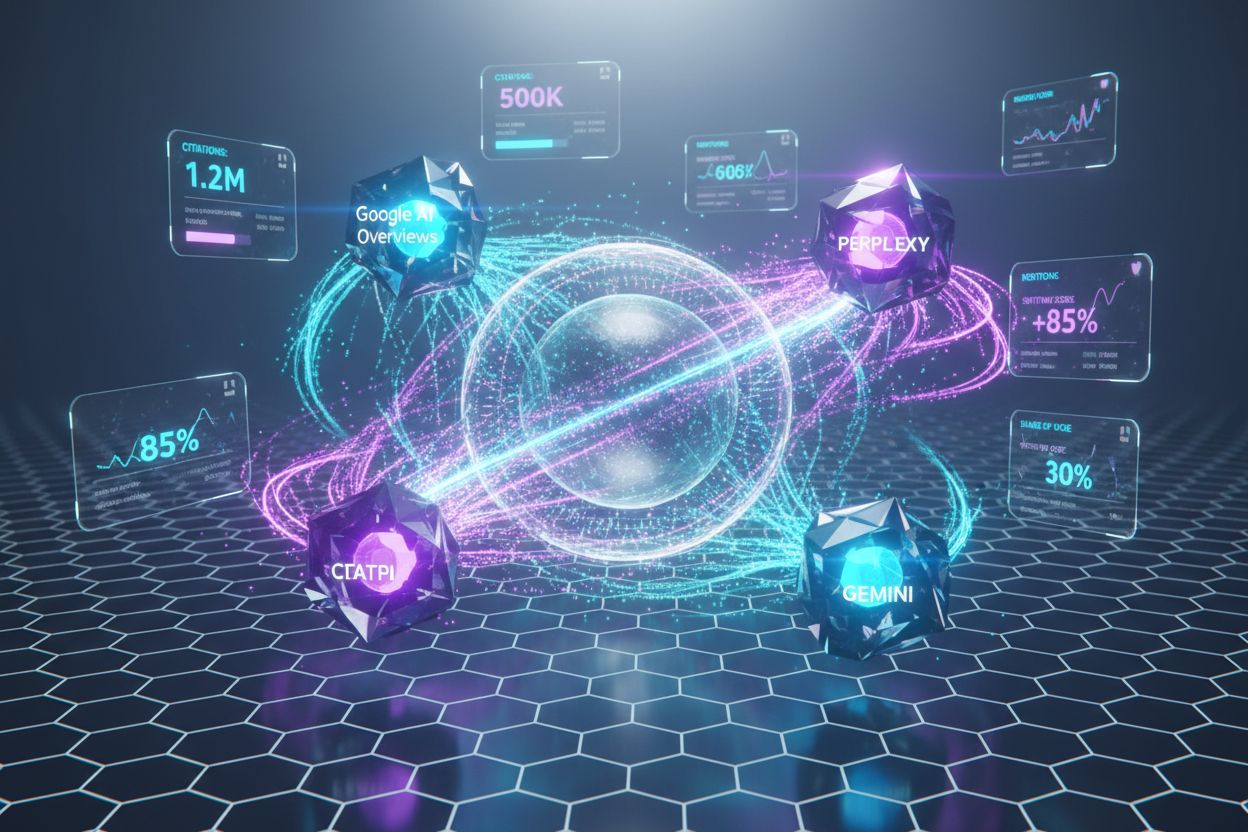

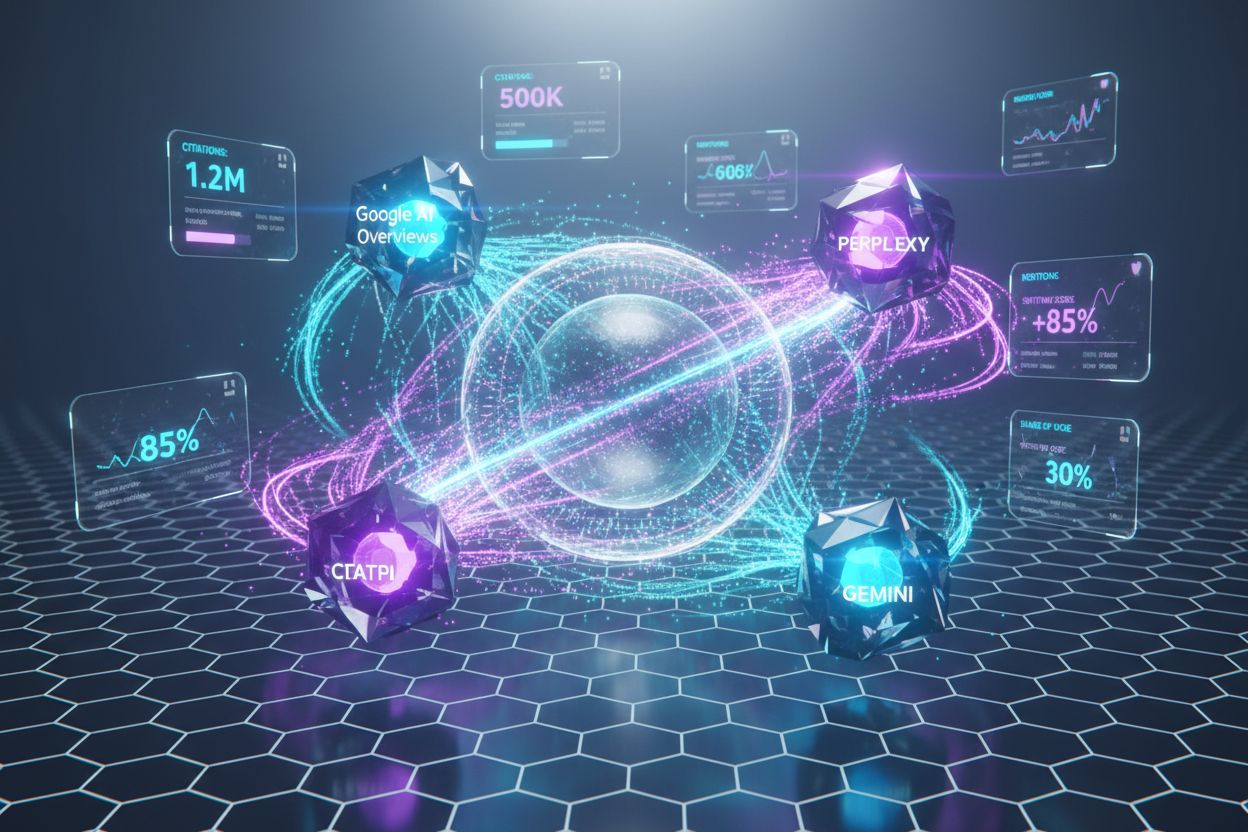

AI visibility monitoring must account for fundamental differences across platforms, as each has distinct training data, update cycles, and user behaviors that affect how your content appears. Google AI Overviews prioritize recent, authoritative content and integrate directly with search results, making them critical for capturing branded and informational queries. ChatGPT relies heavily on training data with a knowledge cutoff, supplemented by web search when that feature is used, and emphasizes conversational relevance; it often cites sources when users ask for them but sometimes omits attribution. Perplexity explicitly prioritizes citation and transparency, making it ideal for measuring how well your content is recognized as authoritative. Gemini (Google’s conversational AI) bridges search and chat, with behavior patterns that shift as Google continues updating its models. Claude serves a different user base focused on detailed analysis and reasoning, requiring separate monitoring if your audience uses this platform. Your tracking strategy should monitor each platform independently while maintaining consistent query sets and metric definitions, allowing you to identify platform-specific opportunities and risks. Additionally, consider localization requirements: AI responses vary significantly by geography and language, so establish regional monitoring for markets where you operate. Brand safety and compliance become increasingly important across platforms, requiring regular audits to ensure AI systems aren’t misrepresenting your products, making false claims, or associating your brand with inappropriate content.

Different stakeholders need different views of AI visibility data, and designing persona-specific dashboards ensures each team member can quickly access the metrics that drive their decisions. The CMO dashboard should focus on high-level business impact—AI Signal Rate trends, Share of Voice against competitors, sentiment distribution, and correlation between AI visibility and conversion metrics, with monthly trend views and executive summaries. The Head of SEO dashboard needs deeper technical insights including citation rates by content type, accuracy issues requiring correction, query-level performance data, and competitive benchmarking, with daily updates and drill-down capabilities. The Content Lead dashboard emphasizes content performance—which pieces are cited most frequently, accuracy issues in AI responses, sentiment trends, and recommendations for content updates or new content opportunities. The Product Marketing dashboard tracks how product-specific queries perform, competitive positioning in AI answers, and messaging accuracy, with alerts when competitors gain Share of Voice. The Growth dashboard connects AI visibility to business outcomes—tracking which AI-visible queries drive traffic, conversion rates from AI-sourced visitors, and ROI of content investments. Each dashboard should include role-specific KPIs, automated alerts for anomalies, and drill-down capabilities that allow users to investigate trends without requiring data science expertise.

Dashboards only create value when they drive action, which requires implementing automated alerts and documented workflows that operationalize your AI visibility monitoring. Set up alerts for critical events: when your Share of Voice drops below target thresholds, when accuracy issues emerge (particularly for product claims or pricing), when competitor visibility spikes, or when sentiment shifts negative. Establish a weekly review cadence where your team examines alerts, investigates root causes, and identifies required actions—whether that’s updating content, reaching out to AI platforms, or adjusting your content strategy. Create experimentation playbooks that document how to test content changes and measure their impact on AI visibility, ensuring you’re continuously learning what drives better performance. Assign clear ownership for different query categories or platforms, so team members know who’s responsible for monitoring and responding to changes. Document your workflows and decision trees—when should you update content vs. reach out to platforms vs. create new content? What’s the escalation path for critical accuracy issues? How do you prioritize between competing opportunities? The most effective teams treat AI visibility monitoring as an ongoing operational discipline, not a one-time project, with regular reviews, experimentation, and continuous optimization.
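The alert conditions described above can be expressed as a small rule check that runs after each monitoring cycle. The sketch below is one possible shape, with assumptions called out: the `WeeklySnapshot` fields are hypothetical, the default thresholds for Share of Voice, accuracy, and positive sentiment borrow the benchmark figures quoted earlier in this article, and the five-point competitor spike is an arbitrary placeholder you would tune.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WeeklySnapshot:
    """Dashboard metrics for one week, all as percentages (hypothetical shape)."""
    share_of_voice: float
    accuracy: float
    positive_sentiment: float
    top_competitor_sov: float


@dataclass
class Thresholds:
    min_share_of_voice: float = 25.0      # low end of the 25-40% benchmark
    min_accuracy: float = 85.0            # 85%+ accuracy target
    min_positive_sentiment: float = 70.0  # 70%+ positive sentiment target
    competitor_spike_points: float = 5.0  # assumption: week-over-week jump worth flagging


def check_alerts(current: WeeklySnapshot, previous: WeeklySnapshot,
                 thresholds: Optional[Thresholds] = None) -> list[str]:
    """Return a human-readable alert for each rule the current week violates."""
    t = thresholds or Thresholds()
    alerts = []
    if current.share_of_voice < t.min_share_of_voice:
        alerts.append(f"Share of Voice {current.share_of_voice}% is below {t.min_share_of_voice}%")
    if current.accuracy < t.min_accuracy:
        alerts.append(f"Accuracy {current.accuracy}% is below {t.min_accuracy}%")
    if current.positive_sentiment < t.min_positive_sentiment:
        alerts.append(f"Positive sentiment {current.positive_sentiment}% is below {t.min_positive_sentiment}%")
    spike = current.top_competitor_sov - previous.top_competitor_sov
    if spike >= t.competitor_spike_points:
        alerts.append(f"Top competitor Share of Voice rose {spike:.1f} points week over week")
    return alerts
```

Returning plain strings keeps the check easy to route into whatever channel your weekly review uses; the owner assigned to each query category can then decide which alerts need an escalation path.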

While building custom monitoring infrastructure is possible, most organizations benefit from specialized AI visibility platforms that handle the complexity of multi-platform tracking, data aggregation, and dashboard creation. The market includes several strong options, each with different strengths depending on your specific needs and technical capabilities.

| Tool Name | Multi-Platform Tracking | Sentiment Analysis | Historical Archiving | Custom Dashboards | Real-Time Alerts | Best For |
|---|---|---|---|---|---|---|
| AmICited.com | ChatGPT, Perplexity, Google AI, Gemini, Claude | Yes, AI-powered | 12+ months | Fully customizable | Yes, with playbooks | Enterprise teams needing comprehensive AI visibility |
| Geneo | Google AI, ChatGPT, Perplexity | Yes, manual review | 6+ months | Pre-built templates | Yes | Mid-market brands focused on Google AI |
| Peec AI | ChatGPT, Perplexity, Google AI | Basic sentiment | 3-6 months | Limited customization | Yes | Startups and SMBs with focused monitoring |
| SE Ranking | Google AI Overview | Yes | 6+ months | Customizable | Yes | Teams already using SE Ranking for SEO |
| Profound | Multiple AI platforms | Yes, advanced NLP | 12+ months | Highly customizable | Yes | Enterprise organizations with complex needs |
| Semrush | Google AI Overview | Basic | 6+ months | Limited to Semrush interface | Yes | Teams using Semrush for broader SEO |

AmICited.com stands out as the most comprehensive solution, offering real-time monitoring across all major AI platforms (ChatGPT, Perplexity, Google AI Overviews, Gemini, Claude), advanced sentiment analysis powered by AI, historical data archiving for trend analysis, and fully customizable dashboards designed for different personas. The platform includes automated alert workflows and playbooks that help teams operationalize their AI visibility strategy, making it ideal for marketing leaders and analytics teams serious about measuring and improving their AI presence.

Effective AI visibility management requires a structured weekly workflow that keeps your monitoring current, identifies opportunities, and drives continuous improvement. Begin by building your prompt set—organize your 100-500 monitored queries into five categories: problem-solving queries (how to, best practices, troubleshooting), solution-seeking queries (product comparisons, feature questions), category queries (industry trends, market analysis), brand queries (your company name, product names), and competitive comparison queries (your brand vs. competitors). Each week, test your full prompt set across all monitored AI platforms, capturing responses and metadata. Score each appearance against your metrics—did your content appear? Was it cited? Was the information accurate? What was the sentiment? Aggregate these scores into your dashboard metrics. Identify gaps and opportunities—which queries show declining visibility? Where are accuracy issues emerging? Which competitors are gaining Share of Voice? Which content pieces are driving the most citations? Update and optimize content based on findings—refresh underperforming content, correct inaccuracies, create new content for high-value queries where you’re missing, and improve content structure to make it more citation-worthy. Finally, re-test updated content in the following week to measure the impact of your changes, creating a continuous feedback loop that drives improvement.
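For the "identify gaps and opportunities" step of this weekly workflow, a simple week-over-week comparison is often enough to build a shortlist. The sketch below assumes a hypothetical input format: for each query, the number of monitored platforms on which your brand appeared that week. Integer counts are used instead of fractions so the comparison is exact.

```python
def find_gaps(previous: dict[str, int], current: dict[str, int],
              min_drop: int = 1) -> dict[str, list[str]]:
    """Compare per-query platform counts between two weekly runs.

    Each dict maps a query to the number of platforms where the brand appeared.
    Queries without a baseline in `previous` are never reported as declining.
    """
    drops = {
        q: previous[q] - current[q]
        for q in current
        if q in previous and previous[q] - current[q] >= min_drop
    }
    return {
        # Largest drops first, so the weekly review starts with the worst declines.
        "declining": sorted(drops, key=lambda q: (-drops[q], q)),
        # Queries where the brand appears on no platform at all this week.
        "missing": sorted(q for q, count in current.items() if count == 0),
    }


last_week = {"best crm for startups": 4, "crm pricing comparison": 3, "what is a crm": 0}
this_week = {"best crm for startups": 2, "crm pricing comparison": 3,
             "what is a crm": 0, "crm for nonprofits": 1}
print(find_gaps(last_week, this_week))
# {'declining': ['best crm for startups'], 'missing': ['what is a crm']}
```

Running the same comparison the week after a content update gives you the re-test half of the feedback loop: a query that leaves the `declining` or `missing` list is a first signal that the change worked.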

AI visibility metrics only matter if they drive business results, which requires establishing clear connections between your dashboard metrics and revenue-generating outcomes. Implement GA4 tracking that identifies traffic sourced from AI platforms (through referrer data and custom parameters), allowing you to measure how much qualified traffic AI visibility generates. Analyze conversion rates for AI-sourced traffic compared to traditional search traffic—many organizations find that AI-sourced visitors have higher intent and convert at premium rates because they’ve already been pre-qualified by AI systems. Establish correlation analysis between your Share of Voice metrics and branded search volume, as increased AI visibility often drives incremental branded search traffic as users verify information they encountered in AI responses. Conduct customer interviews to understand how many customers discovered your brand through AI platforms before converting, providing qualitative validation of AI visibility’s business impact. Build attribution models that credit AI visibility for conversions, even when the final conversion comes through a different channel—many customers follow a path of AI discovery → branded search → conversion. Track cost per acquisition for AI-sourced customers compared to other channels, demonstrating ROI and justifying continued investment in AI visibility optimization. The most sophisticated organizations create dashboards that show both AI visibility metrics and business outcomes side-by-side, making the connection between monitoring activities and revenue crystal clear.
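Two of the steps above lend themselves to a short sketch: labeling sessions by AI referrer, and checking how closely Share of Voice tracks branded search volume. The referrer hostnames in `AI_REFERRER_HOSTS` are assumptions based on the platforms' public web addresses; verify them against the referrers that actually show up in your analytics export. Clicks from Google AI Overviews are not separated here, since they typically arrive with Google's ordinary search referrer. The correlation helper is a plain Pearson coefficient, and a high value suggests a relationship worth investigating rather than proving causation.

```python
from math import sqrt
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hosts; confirm against your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}


def classify_referrer(referrer: str) -> Optional[str]:
    """Return the AI platform name for a referrer URL, or None if it is not recognized."""
    host = (urlparse(referrer).hostname or "").lower()
    for known_host, platform in AI_REFERRER_HOSTS.items():
        if host == known_host or host.endswith("." + known_host):
            return platform
    return None


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long series of weekly values."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least two points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)
```

For example, `classify_referrer("https://www.perplexity.ai/search?q=crm")` returns `"Perplexity"`, and `pearson(weekly_share_of_voice, weekly_branded_searches)` gives a value between -1 and 1 that you can plot alongside the visibility metrics.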

Organizations new to AI visibility monitoring often make predictable mistakes that undermine their dashboards’ effectiveness and ROI. The first mistake is prioritizing volume over accuracy—monitoring 1,000 queries with poor accuracy is less valuable than monitoring 200 queries with rigorous accuracy standards. Ensure your classification rules are clear, your manual review process is consistent, and you’re regularly auditing your data quality. A second mistake is ignoring citation context—appearing in an AI response is only valuable if you’re actually cited or if the response drives traffic to your site; uncited appearances in negative contexts can actually harm your brand. The third mistake is using generic, low-intent prompts that don’t reflect how real customers search; your query set should mirror actual customer behavior and business priorities. Many teams treat AI visibility monitoring as a one-time project rather than an ongoing operational discipline, launching dashboards and then neglecting them; successful programs require weekly reviews, continuous optimization, and dedicated ownership. A critical mistake is failing to connect AI visibility to revenue—if you can’t demonstrate business impact, stakeholder support will evaporate; establish clear attribution and ROI metrics from the start. Sampling bias is another common pitfall—if you only monitor queries where you already rank well, you’ll miss opportunities and threats; ensure your query set includes competitive and aspirational queries. Finally, avoid changing metric definitions frequently—consistency matters for trend analysis; if you must adjust definitions, document the change and recalculate historical data so comparisons remain valid.

The AI landscape is evolving rapidly, with new models, platforms, and capabilities emerging constantly, requiring a strategy that can adapt without requiring complete rebuilds. Focus on durable concepts that will remain relevant regardless of which specific AI platforms dominate—concepts like citation accuracy, sentiment analysis, Share of Voice, and conversion attribution are fundamental to AI visibility and will matter whether you’re monitoring ChatGPT, Gemini, Claude, or platforms that don’t yet exist. Build flexibility into your data collection infrastructure, using modular architectures that allow you to add new platforms or adjust monitoring approaches without disrupting historical data or existing dashboards. Establish a regular review cadence (quarterly or semi-annually) where you assess emerging AI platforms, evaluate whether they’re relevant to your audience, and adjust your monitoring strategy accordingly. Stay informed about platform updates and algorithm changes—AI systems are updated frequently, and understanding these changes helps you interpret metric shifts and adjust your strategy proactively. Invest in team education so your organization understands AI visibility fundamentals deeply enough to adapt as the landscape evolves; teams that understand the “why” behind their metrics can adjust the “how” more effectively. Finally, recognize that AI visibility is complementary to, not a replacement for, traditional SEO—the most resilient strategies monitor both traditional search visibility and AI visibility, ensuring you’re visible regardless of how users discover information.

For critical queries and high-priority topics, monitor daily or weekly. For broader monitoring, weekly updates are typically sufficient. The key is consistency: establish a regular cadence and stick to it so you can identify meaningful trends rather than daily noise. Most organizations find that weekly reviews with daily alerts for critical issues provide the right balance.

Traditional backlinks are links from other websites to your content, while AI citations are references to your content within AI-generated answers. AI citations don't always include clickable links, but they still establish authority and influence how AI systems perceive your brand. Both matter, but AI citations are increasingly important as users rely on AI platforms for discovery.

AI hallucinations—false claims or inaccurate information—should be tracked as accuracy issues in your dashboard. Create a 'ground truth' document with validated facts about your brand, and regularly compare AI outputs against it. When hallucinations occur, document them, consider updating your source content to be clearer, and in some cases, reach out to AI platforms to provide corrections.
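A ground-truth comparison can start as a simple lookup. In the sketch below, `GROUND_TRUTH` holds made-up facts for a fictional brand, and `claims` is assumed to be a set of fact/value pairs already extracted from an AI answer, whether by manual review or a separate extraction step that is not shown. Matching is exact after normalizing case and whitespace, so paraphrased claims would still need human review.

```python
# Hypothetical validated facts for a fictional brand.
GROUND_TRUTH = {
    "founded_year": "2015",
    "starting_price": "$29/month",
    "free_trial": "14 days",
}


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences do not count."""
    return " ".join(value.lower().split())


def find_discrepancies(claims: dict[str, str],
                       ground_truth: dict[str, str]) -> list[dict[str, str]]:
    """Compare extracted claims against validated facts and list every mismatch."""
    issues = []
    for fact, claimed in claims.items():
        expected = ground_truth.get(fact)
        if expected is None:
            # No validated value exists, so the claim can be neither confirmed nor rejected.
            issues.append({"fact": fact, "claimed": claimed, "expected": "", "status": "unverified"})
        elif normalize(claimed) != normalize(expected):
            issues.append({"fact": fact, "claimed": claimed, "expected": expected, "status": "inaccurate"})
    return issues


claims = {"founded_year": "2015", "starting_price": "$49/month", "headquarters": "Berlin"}
for issue in find_discrepancies(claims, GROUND_TRUTH):
    print(issue["status"], issue["fact"], issue["claimed"])
```

Here the pricing claim is flagged as `inaccurate` and the headquarters claim as `unverified`; the first feeds your accuracy metric, while the second is a prompt to extend the ground-truth document.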

Yes, you can start with manual tracking using spreadsheets or free tools like AirOps Brand Visibility Tracker. For 20-50 queries, manual monitoring is feasible. However, as you scale to hundreds of queries across multiple platforms, automated tools like AmICited become essential for efficiency and consistency. Start small and upgrade as your needs grow.

Prioritize based on where your audience actually searches. If your customers use ChatGPT and Google AI Overviews, monitor those first. Perplexity is critical for research-heavy audiences. Gemini and Claude matter if your target users rely on those platforms. Start with 2-3 platforms and expand as you understand the business impact of each.

Most organizations see initial improvements within 2-4 weeks of content updates, with more significant results appearing within 2-3 months. However, AI systems update at different rates—Google AI Overviews may reflect changes faster than ChatGPT's training data. Treat this as a long-term strategy, not a quick fix, and focus on consistent optimization rather than expecting overnight results.

Enable your sales team to ask prospects how they first heard about your brand, and explicitly include AI assistants and overviews as options. Track these responses in your CRM. Over time, correlate high AI visibility for specific topics with sales conversations mentioning those topics. This qualitative data validates your metrics and helps prioritize optimization efforts.

Start with 100-200 high-value keywords that represent your core business, competitive positioning, and customer problems. This focused approach allows you to establish baselines and see results faster. As you mature, expand to 500+ keywords. Avoid monitoring every possible keyword—focus on queries with commercial intent and strategic importance to your business.
