What Affects AI Indexing Speed? Key Factors for Faster AI Discovery

Discover the critical factors affecting AI indexing speed including site performance, crawl budget, content structure, and technical optimization. Learn how to ...

Discover how site speed directly impacts AI visibility and citations in ChatGPT, Gemini, and Perplexity. Learn the 2.5-second threshold and optimization strategies for AI crawlers.

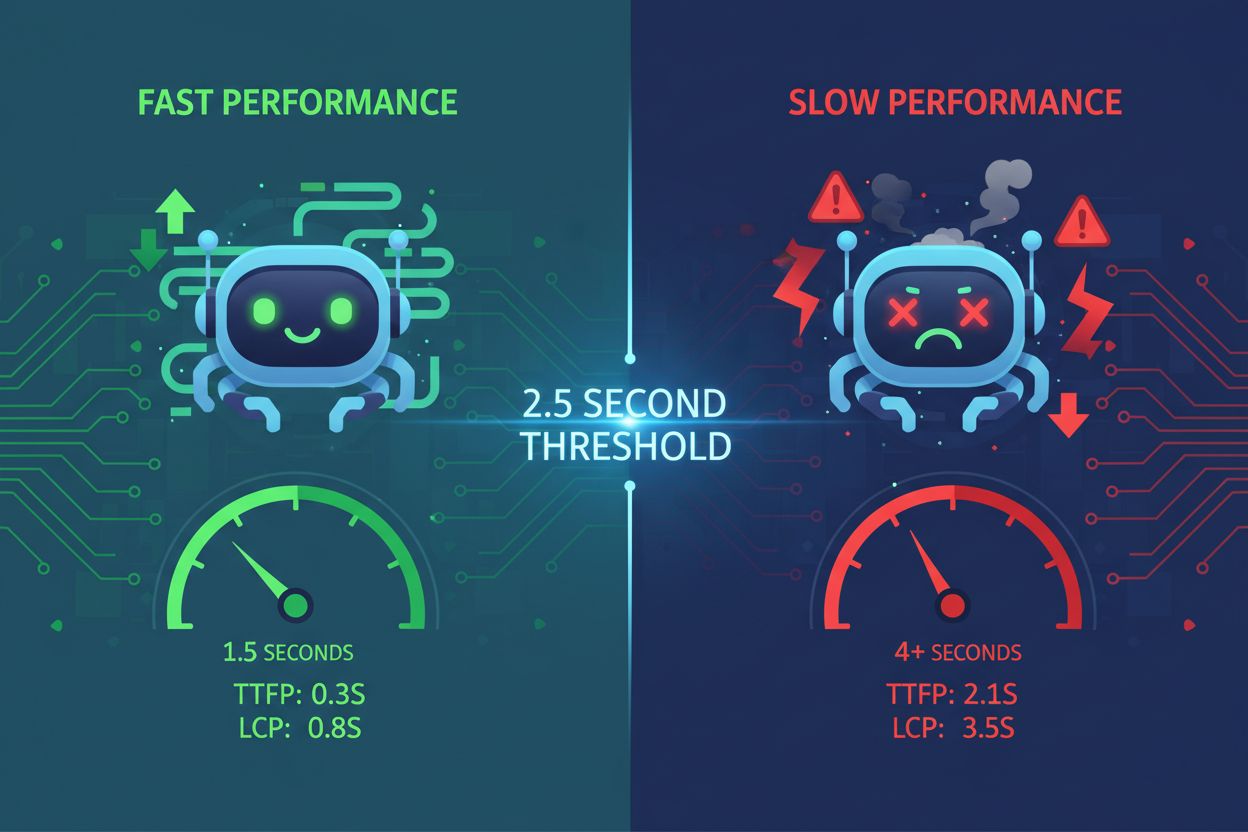

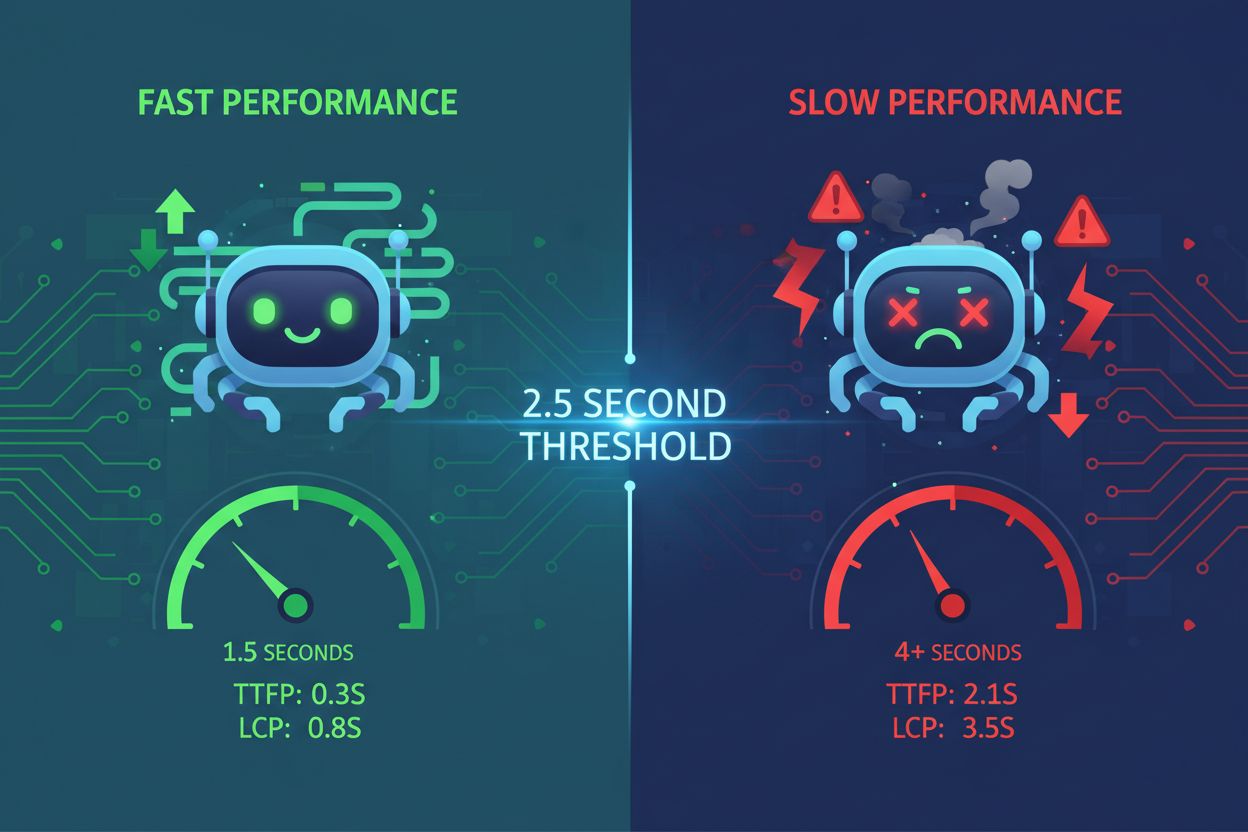

Site speed has become a critical factor in AI visibility, fundamentally reshaping how content gets discovered and cited by large language models. AI systems like ChatGPT, Gemini, and Perplexity operate under strict latency budgets—they cannot afford to wait for slow websites when retrieving information for user queries. When a page takes longer than 2.5 seconds to load, AI crawlers often skip it entirely, moving on to faster alternatives that can deliver the same information more efficiently. This creates a hard performance floor: sites that fail to meet this threshold are effectively invisible to AI systems, regardless of content quality. The implications are profound—poor site speed directly translates to reduced AI citations and diminished visibility in AI-powered search results. Understanding this threshold is the first step toward optimizing for AI visibility.

AI systems employ four distinct retrieval modes when gathering information: pre-training (historical data ingested during model training), real-time browsing (live web crawling during inference), API connectors (direct integrations with data sources), and RAG (Retrieval-Augmented Generation, in which a system fetches fresh content at query time). Each mode has different performance requirements, but all are sensitive to Core Web Vitals and server response metrics. When AI crawlers evaluate a page, they assess TTFB (Time to First Byte), LCP (Largest Contentful Paint), INP (Interaction to Next Paint), and CLS (Cumulative Layout Shift). These metrics directly affect whether the crawler can efficiently extract and index content: slow TTFB means the crawler waits longer before receiving any data; poor LCP means critical content renders late; high INP suggests JavaScript overhead; and high CLS indicates unstable layouts that complicate content extraction.

| Metric | What it measures | Impact on LLM retrieval |
|---|---|---|
| TTFB | Time until first byte arrives from server | Determines initial crawl speed; slow TTFB causes timeouts |
| LCP | When largest visible content element renders | Delays content availability for extraction and indexing |
| INP | Responsiveness to user interactions | High INP suggests heavy JavaScript that slows parsing |
| CLS | Visual stability during page load | Unstable layouts confuse content extraction algorithms |
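As a rough illustration of how the four metrics in the table could be checked together, here is a minimal Python sketch. The 600 ms TTFB target is the one this article uses; the LCP, INP, and CLS limits are Google's published "good" Core Web Vitals thresholds. The function name `failing_metrics`, the dictionary keys, and the sample measurements are assumptions made up for this example, not part of any tool's API.

```python
# Thresholds: the TTFB target is the article's 600 ms figure; LCP, INP, and
# CLS use Google's published "good" Core Web Vitals limits.
THRESHOLDS = {
    "ttfb_ms": 600,
    "lcp_ms": 2500,
    "inp_ms": 200,
    "cls": 0.1,
}


def failing_metrics(measured: dict) -> list:
    """Return the names of measured metrics that exceed their threshold, sorted."""
    return sorted(
        name
        for name, limit in THRESHOLDS.items()
        if name in measured and measured[name] > limit
    )


# Hypothetical measurements for a single page (illustrative values only).
sample = {"ttfb_ms": 820, "lcp_ms": 2100, "inp_ms": 350, "cls": 0.05}
print(failing_metrics(sample))  # ['inp_ms', 'ttfb_ms']
```

Metrics that are missing from the input are simply ignored, so the same function works whether the numbers come from a lab tool or from field data that reports only some of them.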

Research from Cloudflare Radar reveals a troubling disparity: AI bots crawl websites far more often than they refer traffic back to them. This crawl-to-referral ratio shows that not all crawling activity translates to visibility; some AI systems index content without sending visitors to it or citing it in responses. Anthropic’s crawler, for example, exhibits a 70,900:1 ratio, meaning it crawls roughly 70,900 pages for every one referral it sends back. This suggests that crawl frequency alone is not a reliable indicator of AI visibility; what matters is whether the crawler can efficiently process your content and determine it’s valuable enough to cite. The implication is clear: optimizing for crawlability is necessary but insufficient. You must also ensure your content is fast enough to be processed and relevant enough to be selected. Understanding this ratio helps explain why some high-traffic sites still struggle with AI citations despite heavy crawler activity.
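To make the ratio concrete, the sketch below estimates a crawl-to-referral ratio from your own access logs. It assumes each log record has already been reduced to a `(user_agent, referrer)` pair; the bot token `ExampleBot` and the domain `example.com` are placeholders, and the function name is hypothetical. This is one simple way to approximate the idea, not Cloudflare's methodology.

```python
from urllib.parse import urlparse


def crawl_to_referral_ratio(records, bot_token, referrer_domain):
    """Return crawler requests per referred visit, or None if there were no referrals.

    records: iterable of (user_agent, referrer) string pairs.
    bot_token: substring identifying the crawler's user agent (assumed).
    referrer_domain: domain of the AI platform that sends visitors (assumed).
    """
    crawls = 0
    referrals = 0
    for user_agent, referrer in records:
        if bot_token.lower() in user_agent.lower():
            crawls += 1
        elif referrer:
            host = urlparse(referrer).hostname or ""
            if host == referrer_domain or host.endswith("." + referrer_domain):
                referrals += 1
    return crawls / referrals if referrals else None


# Hypothetical log sample: 6 crawler hits, 2 referred visits, 1 unrelated visit.
records = (
    [("Mozilla/5.0 (compatible; ExampleBot/1.0)", "")] * 6
    + [("Mozilla/5.0 Chrome", "https://chat.example.com/c/1")] * 2
    + [("Mozilla/5.0 Safari", "https://news.example.org/")]
)
print(crawl_to_referral_ratio(records, "ExampleBot", "example.com"))  # 3.0
```

Returning `None` when there are no referrals avoids a division by zero and makes "crawled but never referred" easy to spot.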

AI systems increasingly consider regional latency when selecting sources for user queries, particularly for location-sensitive searches. A site hosted on a single server in the US may load quickly for US-based crawlers but slowly for crawlers operating from other regions, affecting global AI visibility. CDN placement and data residency become critical factors—content served from geographically distributed edge locations loads faster for crawlers worldwide, improving the likelihood of selection. For queries containing “near me” or location modifiers, AI systems prioritize sources with fast regional performance, making local optimization essential for businesses targeting specific geographies. Sites that invest in global CDN infrastructure gain a competitive advantage in AI visibility across multiple regions. The performance threshold applies globally: a 2.5-second load time must be achievable from multiple geographic regions, not just from your primary market.
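A per-region check against the 2.5-second budget could look like the following sketch. It assumes you already collect load-time samples (in seconds) from several test locations; the region names and numbers are invented for illustration, and using the median is a design choice for this example, not a rule from any AI platform.

```python
from statistics import median

BUDGET_SECONDS = 2.5  # the load-time threshold discussed in this article


def regions_over_budget(samples, budget=BUDGET_SECONDS):
    """Map each region whose median load time exceeds the budget to that median.

    samples: dict of region name -> list of load times in seconds.
    Regions with no samples are skipped.
    """
    return {
        region: median(times)
        for region, times in samples.items()
        if times and median(times) > budget
    }


# Hypothetical measurements from three test locations.
samples = {
    "us-east": [0.9, 1.1, 1.0],
    "eu-west": [1.8, 2.2, 2.0],
    "ap-south": [2.9, 3.4, 2.6],
}
print(regions_over_budget(samples))  # {'ap-south': 2.9}
```

The median keeps one slow outlier from flagging a region; a stricter check could use a high percentile instead.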

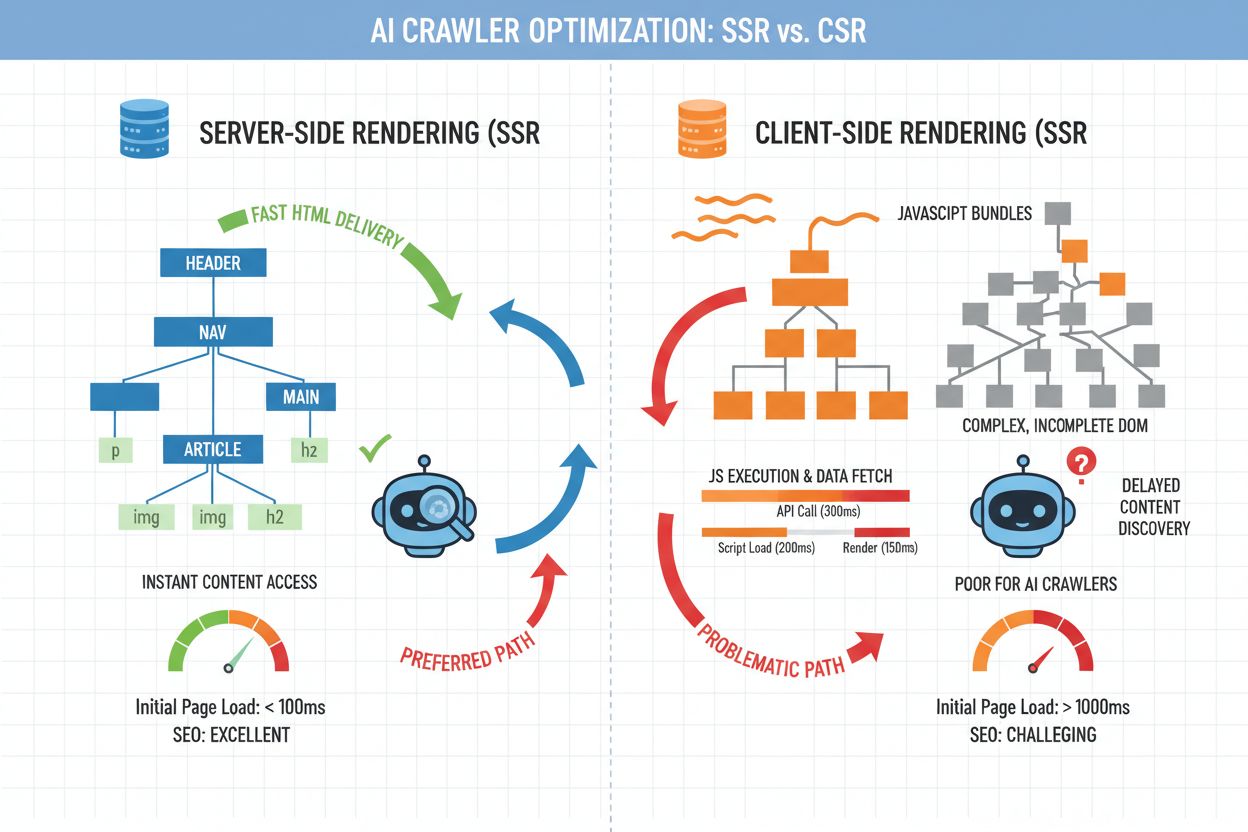

The choice between server-side rendering (SSR) and client-side rendering (CSR) has profound implications for AI visibility. AI crawlers strongly prefer clean, semantic HTML delivered in the initial response over JavaScript-heavy pages that require client-side rendering to display content. When a page relies on client-side rendering, the crawler must execute JavaScript, wait for API calls, and render the DOM—a process that adds latency and complexity. Minimal JavaScript, semantic markup, and logical heading hierarchies make content immediately accessible to AI systems, reducing processing time and improving crawl efficiency. Server-side rendering ensures that critical content is present in the initial HTML payload, allowing crawlers to extract information without executing code. Sites that prioritize fast, simple HTML over complex client-side frameworks consistently achieve better AI visibility. The architectural choice is not about abandoning modern frameworks—it’s about ensuring that core content is available in the initial response, with progressive enhancement for user interactions.
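One way to test whether core content is present in the initial response is to parse the raw HTML without executing any JavaScript and look for key phrases. The sketch below does this with Python's standard `html.parser`; the class and function names and the two sample pages are assumptions for illustration. It approximates what a non-rendering crawler might see and does not model any specific crawler.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that appears outside <script>, <style>, and <template>."""

    SKIPPED = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_phrases(raw_html, phrases):
    """Return the phrases that do not appear in the page's static text."""
    parser = VisibleTextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [p for p in phrases if p.lower() not in text]


# Hypothetical pages: one server-rendered, one that renders via JavaScript.
ssr_page = "<html><body><h1>Pricing plans</h1><p>Starter costs $9</p></body></html>"
csr_page = '<html><body><div id="root"></div><script>render("Pricing plans")</script></body></html>'

print(missing_phrases(ssr_page, ["Pricing plans"]))  # []
print(missing_phrases(csr_page, ["Pricing plans"]))  # ['Pricing plans']
```

In the client-rendered page the phrase exists only inside a script, so a parser that does not run code never sees it as content.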

Optimizing for AI visibility requires a systematic approach to performance. The following checklist addresses the most impactful optimizations, each designed to reduce latency and improve crawlability:

- **Serve core content in initial HTML:** Ensure that the main content, headlines, and key information are present in the server response, not hidden behind JavaScript or lazy-loading mechanisms. AI crawlers should be able to extract your most important content without executing code.
- **Keep TTFB and HTML size lean:** Optimize server response time by reducing database queries, implementing caching, and minimizing initial HTML payload. A TTFB under 600ms and initial HTML under 50KB are realistic targets for most content sites.
- **Minimize render-blocking scripts and CSS:** Defer non-critical JavaScript and inline only essential CSS. Render-blocking resources delay content availability and increase perceived latency for crawlers.
- **Use semantic HTML and logical headings:** Structure content with proper heading hierarchy (H1, H2, H3), semantic tags (article, section, nav), and descriptive alt text. This helps AI systems understand content structure and importance.
- **Limit DOM complexity on high-value pages:** Pages with thousands of DOM nodes take longer to parse and render. Simplify layouts on cornerstone content pages to reduce processing overhead.
- **Create lightweight variants for cornerstone content:** Consider serving simplified, text-focused versions of your most important pages to AI crawlers, while maintaining rich experiences for human users. This can be done through user-agent detection or separate URLs.
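Several items on the checklist above can be spot-checked from the raw HTML alone. The following sketch is a minimal static audit using Python's standard `html.parser`: it checks HTML size against the 50KB target mentioned above, counts elements, looks for skipped heading levels, and counts scripts in `<head>` that load without `async` or `defer`. The 1,500-element limit, the class and function names, and the sample page are assumptions for illustration; this is a rough heuristic, not a substitute for Lighthouse or a real crawler.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Gathers simple structural counts from raw HTML without running scripts."""

    def __init__(self):
        super().__init__()
        self.element_count = 0
        self.heading_levels = []
        self.blocking_scripts = 0
        self._in_head = False

    def handle_starttag(self, tag, attrs):
        self.element_count += 1
        attr = dict(attrs)
        if tag == "head":
            self._in_head = True
        elif tag == "script":
            # External scripts in <head> without async/defer block rendering.
            deferred = "async" in attr or "defer" in attr or attr.get("type") == "module"
            if self._in_head and "src" in attr and not deferred:
                self.blocking_scripts += 1
        elif len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.heading_levels.append(int(tag[1]))

    def handle_endtag(self, tag):
        if tag == "head":
            self._in_head = False


def audit(raw_html, max_bytes=50 * 1024, max_elements=1500):
    """Return a dict of checklist results; max_elements is an assumed limit."""
    parser = PageAudit()
    parser.feed(raw_html)
    parser.close()
    levels = parser.heading_levels
    return {
        "html_bytes_ok": len(raw_html.encode("utf-8")) <= max_bytes,
        "element_count_ok": parser.element_count <= max_elements,
        "single_h1": levels.count(1) == 1,
        # Pairs where a heading jumps more than one level deeper, e.g. h1 -> h3.
        "heading_skips": [(a, b) for a, b in zip(levels, levels[1:]) if b > a + 1],
        "blocking_scripts": parser.blocking_scripts,
    }


# Hypothetical page with one blocking script and one skipped heading level.
page = (
    '<html><head><script src="/a.js"></script>'
    '<script src="/b.js" defer></script></head>'
    "<body><h1>Title</h1><h3>Skipped</h3><h2>Fine</h2></body></html>"
)
report = audit(page)
print(report["blocking_scripts"], report["heading_skips"])  # 1 [(1, 3)]
```

Because the audit never executes JavaScript, it only reflects what is present in the initial HTML payload.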

Establishing a baseline is essential before optimizing for AI visibility. Use tools like Google PageSpeed Insights, WebPageTest, and Lighthouse to measure current performance across key metrics. Run controlled experiments by optimizing specific pages and monitoring whether AI citation rates increase over time—this requires tracking tools that correlate performance changes with visibility improvements. AmICited.com provides the infrastructure to monitor AI citations across multiple LLM platforms, allowing you to measure the direct impact of performance optimizations. Set up alerts for performance regressions and establish monthly reviews of both speed metrics and AI visibility trends. The goal is to create a feedback loop: measure baseline performance, implement optimizations, track citation increases, and iterate. Without measurement, you cannot prove the connection between speed and AI visibility—and without proof, it’s difficult to justify continued investment in performance optimization.
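The feedback loop described above can be sketched as a simple correlation between a speed metric and citation counts over time. The example below computes a Pearson correlation coefficient by hand; the monthly figures are invented for illustration, and the function name is an assumption. A strong correlation on a handful of data points is suggestive at best and does not by itself show that speed caused the change.

```python
from math import sqrt


def pearson(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical monthly data: median load time (seconds) and AI citations counted.
load_seconds = [3.4, 3.1, 2.6, 2.2, 1.9]
citations = [4, 5, 9, 12, 15]

r = pearson(load_seconds, citations)
print(f"correlation between load time and citations: {r:.2f}")
```

A value near -1 would mean citations rose as load time fell in this sample; a value near 0 would mean no linear relationship was visible.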

AmICited.com has emerged as the essential tool for tracking AI citations and monitoring visibility across ChatGPT, Gemini, Perplexity, and other AI systems. By integrating AmICited with your performance monitoring stack, you gain visibility into how speed improvements correlate with citation increases—a connection that’s difficult to establish through other means. Complementary tools like FlowHunt.io provide additional insights into AI crawler behavior and content indexing patterns. The competitive advantage comes from combining performance optimization with AI visibility monitoring: you can identify which speed improvements yield the highest citation gains, then prioritize those optimizations. Sites that systematically track both metrics—performance and AI citations—can make data-driven decisions about where to invest engineering resources. This integrated approach transforms site speed from a general best practice into a measurable driver of AI visibility and organic reach.

Many sites make critical errors when optimizing for AI visibility. Over-optimization that removes content is a common pitfall—stripping out images, removing explanatory text, or hiding content behind tabs to improve speed metrics often backfires by making content less valuable to AI systems. Focusing exclusively on desktop speed while ignoring mobile performance is another mistake, as AI crawlers increasingly simulate mobile user agents. Trusting platform defaults without testing is risky; default configurations often prioritize human UX over AI crawlability. Chasing PageSpeed Insights scores instead of actual load times can lead to misguided optimizations that improve metrics without improving real-world performance. Budget hosting choices that save money on server resources often result in slow TTFB and poor performance under load—a false economy that costs far more in lost AI visibility. Finally, treating performance optimization as a one-time project rather than ongoing maintenance leads to performance degradation over time as content accumulates and code complexity increases.

Site speed will continue to matter as AI search evolves and becomes more sophisticated. The 2.5-second threshold may tighten as AI systems become more selective about sources, or it may shift as new retrieval technologies emerge—but the fundamental principle remains: fast sites are more visible to AI systems. Treat performance optimization as an ongoing practice, not a completed project. Regularly audit your site’s speed metrics, monitor AI citation trends, and adjust your technical architecture as new best practices emerge. The sites that will dominate AI-powered search results are those that align their optimization efforts with both human user experience and AI crawler requirements. By maintaining strong performance fundamentals—fast TTFB, semantic HTML, minimal JavaScript, and clean architecture—you ensure that your content remains visible and citable regardless of how AI systems evolve. The future belongs to sites that treat speed as a strategic advantage, not an afterthought.

Traditional SEO considers speed as one of many ranking factors, but AI systems have strict latency budgets and skip slow sites entirely. If your page takes longer than 2.5 seconds to load, AI crawlers often abandon it before extracting content, making speed a hard requirement rather than a preference for AI visibility.

The critical threshold is 2.5 seconds for full page load. However, Time to First Byte (TTFB) should be under 600ms, and initial HTML should load within 1-1.5 seconds. These metrics ensure AI crawlers can efficiently access and process your content without timing out.

Test performance monthly using tools like Google PageSpeed Insights, WebPageTest, and Lighthouse. More importantly, correlate these metrics with AI citation tracking through tools like AmICited.com to measure the actual impact of performance changes on your visibility.

Yes, increasingly so. AI crawlers often simulate mobile user agents, and mobile performance is frequently slower than desktop. Ensure your mobile load time matches your desktop performance—this is critical for global AI visibility across different regions and network conditions.

You can make incremental improvements through caching, CDN optimization, and image compression. However, significant gains require architectural changes like server-side rendering, reducing JavaScript, and simplifying DOM structure. The best results come from addressing both infrastructure and code-level optimizations.

Use AmICited.com to track your AI citations across platforms, then correlate citation trends with performance metrics from Google PageSpeed Insights. If citations drop after a performance regression, or increase after optimization, you have clear evidence of the connection.

Core Web Vitals (LCP, INP, CLS) directly impact AI crawler efficiency. Poor LCP delays content availability, high INP suggests JavaScript overhead, and CLS confuses content extraction. While these metrics matter for human UX, they're equally critical for AI systems to efficiently process and index your content.

Optimize for both simultaneously—the same improvements that make your site fast for humans (clean code, semantic HTML, minimal JavaScript) also make it fast for AI crawlers. The 2.5-second threshold benefits both audiences, and there's no trade-off between human UX and AI visibility.
