JavaScript Rendering for AI

Learn how JavaScript rendering impacts AI visibility. Discover why AI crawlers can't execute JavaScript, what content gets hidden, and how prerendering solution...

Discover how SSR and CSR rendering strategies affect AI crawler visibility, brand citations in ChatGPT and Perplexity, and your overall AI search presence.

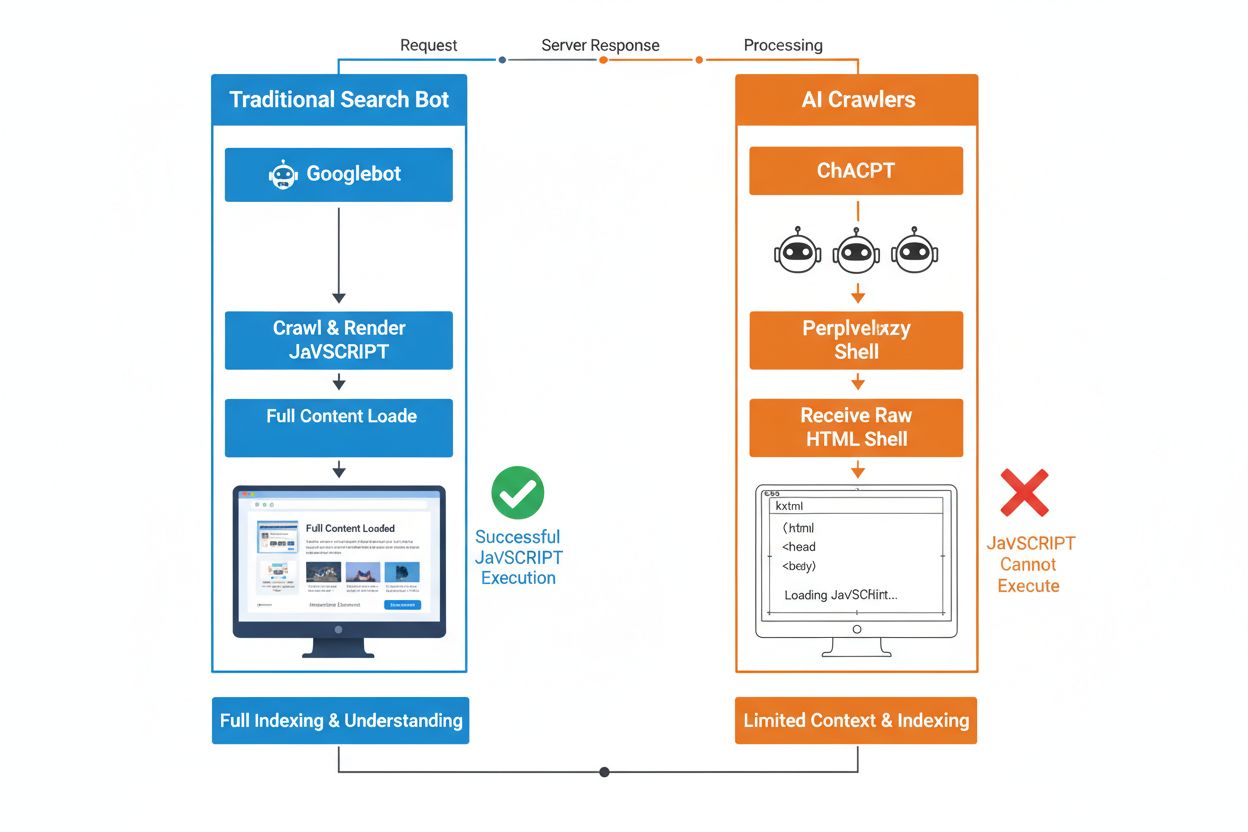

The fundamental difference between traditional search bots and AI crawlers lies in their approach to JavaScript execution. While Googlebot and other traditional search engines can render JavaScript (though with resource constraints), AI crawlers like GPTBot, ChatGPT-User, and OAI-SearchBot do not execute JavaScript at all—they only see the raw HTML delivered on the initial page load. This critical distinction means that if your website’s content depends on client-side JavaScript to render, AI systems will receive an incomplete or blank snapshot of your pages, missing product details, pricing information, reviews, and other dynamic content that users see in their browsers. Understanding this gap is essential because AI-powered search results are rapidly becoming a primary discovery channel for users seeking information.

Server-Side Rendering (SSR) fundamentally improves AI visibility by delivering fully rendered HTML directly from the server on the initial request, eliminating the need for AI crawlers to execute JavaScript. With SSR, all critical content—headings, body text, product information, metadata, and structured data—is present in the HTML that bots receive, making it immediately accessible for ingestion into AI training corpora and retrieval indices. This approach ensures consistent content delivery across all crawlers, faster indexing speeds, and complete metadata visibility that AI systems rely on to understand and cite your content accurately. The following table illustrates how different rendering strategies impact AI crawler visibility:

| Rendering Type | What AI Crawlers See | Indexing Speed | Content Completeness | Metadata Visibility |
|---|---|---|---|---|
| Server-Side Rendering (SSR) | Fully rendered HTML with all content | Fast (immediate) | Complete | Excellent |
| Client-Side Rendering (CSR) | Minimal HTML shell, missing dynamic content | Slow (if rendered at all) | Incomplete | Poor |
| Static Site Generation (SSG) | Pre-built, cached HTML | Very fast | Complete | Excellent |
| Hybrid/Incremental | Mix of static and dynamic routes | Moderate to fast | Good (if critical pages pre-rendered) | Good |

Client-Side Rendering (CSR) presents significant challenges for AI visibility because it forces crawlers to wait for JavaScript execution—something AI bots simply won’t do due to resource constraints and tight timeouts. When a CSR-based site loads, the initial HTML response contains only a minimal shell with loading spinners and placeholder elements, while the actual content loads asynchronously through JavaScript. AI crawlers impose strict timeouts of 1-5 seconds and don’t execute scripts, meaning they capture an empty or near-empty page snapshot that lacks product descriptions, pricing, reviews, and other critical information. This creates a cascading problem: incomplete content snapshots lead to poor chunking and embedding quality, which reduces the likelihood of your pages being selected for inclusion in AI-generated answers. For e-commerce sites, SaaS platforms, and content-heavy applications relying on CSR, this translates directly to lost visibility in AI Overviews, ChatGPT responses, and Perplexity answers—the very channels driving discovery in the AI era.
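To make the "empty shell" problem concrete, the following is a minimal sketch of how one might approximate what a non-rendering crawler can read. It assumes the crawler only sees text present in the raw HTML and ignores anything inside `script`, `style`, and `template` elements. The sample pages, the `missing_phrases` helper, and the expected phrases are all hypothetical, and real crawlers may extract text differently.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that is present in raw HTML without running any scripts."""

    # Content inside these elements is never shown as page text.
    SKIPPED_TAGS = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(raw_html: str) -> str:
    """Return the text a crawler could read from the initial HTML response."""
    parser = VisibleTextExtractor()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.chunks)


def missing_phrases(raw_html: str, expected: list[str]) -> list[str]:
    """List the expected phrases that do not appear in the raw HTML text."""
    text = visible_text(raw_html).lower()
    return [phrase for phrase in expected if phrase.lower() not in text]


# Hypothetical CSR response: content would only appear after bundle.js runs.
CSR_SHELL = """<!doctype html><html><head><title>Shop</title>
<script src="/bundle.js"></script></head>
<body><div id="root"><p>Loading...</p></div></body></html>"""

# Hypothetical SSR response: the same page with content already in the HTML.
SSR_PAGE = """<!doctype html><html><head><title>Shop</title></head>
<body><div id="root"><h1>Trail Backpack</h1><p>Price: $89.00</p></div>
<script src="/bundle.js"></script></body></html>"""

expected = ["Trail Backpack", "$89.00"]
print(missing_phrases(CSR_SHELL, expected))  # ['Trail Backpack', '$89.00']
print(missing_phrases(SSR_PAGE, expected))   # []
```

In practice you would feed this the response body of a plain HTTP request to your own page rather than a hard-coded string. Any phrase it reports as missing is content that depends on JavaScript.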

The technical reason AI bots cannot execute JavaScript stems from fundamental scalability and resource constraints inherent to their architecture. AI crawlers prioritize speed and efficiency over completeness, operating under strict timeouts because they must process billions of pages to train and update language models. Executing JavaScript requires spinning up headless browsers, allocating memory, and waiting for asynchronous operations to complete—luxuries that don’t scale when crawling at the volume required for LLM training. Instead, AI systems focus on extracting clean, semantically structured HTML that’s immediately available, treating static content as the canonical version of your site. This design choice reflects a fundamental truth: AI systems are optimized for static HTML delivery, not for rendering complex JavaScript frameworks like React, Vue, or Angular.

The impact on AI-generated answers and brand visibility is profound and directly affects your business outcomes. When AI crawlers cannot access your content due to JavaScript rendering, your brand becomes invisible in AI Overviews, missing from citations, and absent from LLM-powered search results—even if you rank well in traditional Google search. For e-commerce sites, this means product details, pricing, and availability information never reach AI systems, resulting in incomplete or inaccurate product recommendations and lost sales opportunities. SaaS companies lose visibility for feature comparisons and pricing pages that would otherwise drive qualified leads through AI-powered research tools. News and content sites see their articles excluded from AI summaries, reducing referral traffic from platforms like ChatGPT and Perplexity. The gap between what humans see and what AI systems see creates a two-tier visibility problem: your site may appear healthy in traditional SEO metrics while simultaneously becoming invisible to the fastest-growing discovery channel.

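Pre-rendering and hybrid solutions offer practical ways to combine the benefits of both rendering approaches without requiring a complete architectural overhaul. Rather than choosing between CSR's interactivity and SSR's crawlability, modern teams combine strategies page by page: static generation for content that rarely changes, server-side or incremental rendering for content that updates often, and client-side JavaScript layered on top of server-rendered HTML for interactive features.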

These approaches allow you to maintain rich, interactive user experiences while ensuring that AI crawlers receive complete, fully-rendered HTML. Frameworks like Next.js, Nuxt, and SvelteKit make hybrid rendering accessible without requiring extensive custom development. The key is identifying which pages drive acquisition, revenue, or support deflection—those critical pages should always be pre-rendered or server-rendered to guarantee AI visibility.
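One way to turn that prioritization into something reviewable is a small routing plan. The sketch below is illustrative only: the `Page` fields, the `choose_rendering` function, and the update-frequency cutoffs are assumptions invented for this example, not values taken from any framework or from crawler documentation. It follows the article's own guidance that critical pages are never left client-only and that freshness decides between SSG, ISR, and SSR.

```python
from dataclasses import dataclass


@dataclass
class Page:
    path: str
    is_critical: bool       # hypothetical flag: drives acquisition, revenue, or support deflection
    updates_per_day: float  # hypothetical freshness measure


def choose_rendering(page: Page) -> str:
    """Map a page to a rendering strategy using illustrative thresholds."""
    if not page.is_critical:
        return "CSR"  # lower-priority pages may stay client-rendered
    if page.updates_per_day >= 24:  # assumed cutoff: changes about hourly or faster
        return "SSR"
    if page.updates_per_day >= 1:   # assumed cutoff: changes at least daily
        return "ISR"
    return "SSG"                    # rarely changes: build once, serve static HTML


pages = [
    Page("/pricing", is_critical=True, updates_per_day=0.1),
    Page("/products/42", is_critical=True, updates_per_day=6),
    Page("/inventory/live", is_critical=True, updates_per_day=500),
    Page("/account/settings", is_critical=False, updates_per_day=0),
]
plan = {page.path: choose_rendering(page) for page in pages}
for path, strategy in plan.items():
    print(f"{path}: {strategy}")
```

The cutoffs should be tuned to your own content. The sketch only shows that the decision can be written down explicitly and reviewed alongside the route list.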

Rendering strategy directly affects how AI systems reference and cite your brand, making it essential to monitor your visibility across AI platforms. Tools like AmICited.com track how AI systems cite your brand across ChatGPT, Perplexity, Google AI Overviews, and other LLM-powered platforms, revealing whether your content is actually reaching these systems. When your site uses CSR without pre-rendering, AmICited data often shows a stark gap: you may rank well in traditional search but receive zero citations in AI-generated answers. This monitoring reveals the true cost of JavaScript rendering choices—not just in crawl efficiency, but in lost brand visibility and citation opportunities. By implementing SSR or pre-rendering and then tracking results through AmICited, you can quantify the direct impact of rendering decisions on AI visibility, making it easier to justify engineering investment to stakeholders focused on traffic and conversions.

Auditing and optimizing your rendering strategy for AI visibility requires a systematic, step-by-step approach. Start by identifying which pages drive the most value: product pages, pricing pages, core documentation, and high-traffic blog posts should be your priority. Use tools like Screaming Frog (in “Text Only” mode) or Chrome DevTools to compare what bots see versus what users see—if critical content is missing from the page source, it’s JavaScript-dependent and invisible to AI crawlers. Next, choose your rendering strategy based on content freshness requirements: static pages can use SSG, frequently-updated content benefits from SSR or ISR, and interactive features can layer JavaScript on top of server-rendered HTML. Then, test with actual AI bots by submitting your pages to ChatGPT, Perplexity, and Claude to verify they can access your content. Finally, monitor crawl logs for AI user-agents (GPTBot, ChatGPT-User, OAI-SearchBot) to confirm these bots are successfully crawling your pre-rendered or server-rendered pages. This iterative approach transforms rendering from a technical detail into a measurable visibility lever.
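The log-monitoring step can be sketched as a short script. This example assumes your server writes access logs in the common "combined" format and that matching the user-agent substrings `GPTBot`, `ChatGPT-User`, and `OAI-SearchBot` is sufficient. The sample log lines and their simplified user-agent strings are made up for illustration, and a user-agent string alone does not prove a request came from the named bot.

```python
import re
from collections import Counter

# User-agent tokens named in this article.
AI_BOT_TOKENS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot")

# Combined log format:
# ip - - [time] "METHOD path protocol" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_ai_bot_hits(lines):
    """Count requests per (bot token, HTTP status) for known AI user-agents."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in agent:
                hits[(token, match.group("status"))] += 1
                break
    return hits


# Hypothetical log lines using documentation-reserved IP addresses.
SAMPLE_LOG = [
    '203.0.113.7 - - [10/Jan/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.8 - - [10/Jan/2025:10:00:05 +0000] "GET /products/42 HTTP/1.1" '
    '404 312 "-" "Mozilla/5.0 (compatible; OAI-SearchBot/1.0)"',
    '198.51.100.3 - - [10/Jan/2025:10:00:09 +0000] "GET / HTTP/1.1" '
    '200 2048 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]

hits = summarize_ai_bot_hits(SAMPLE_LOG)
for (bot, status), count in sorted(hits.items()):
    print(f"{bot} {status}: {count}")
```

A rising share of non-200 statuses for these bots on high-value pages is a signal worth investigating before looking at citation data.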

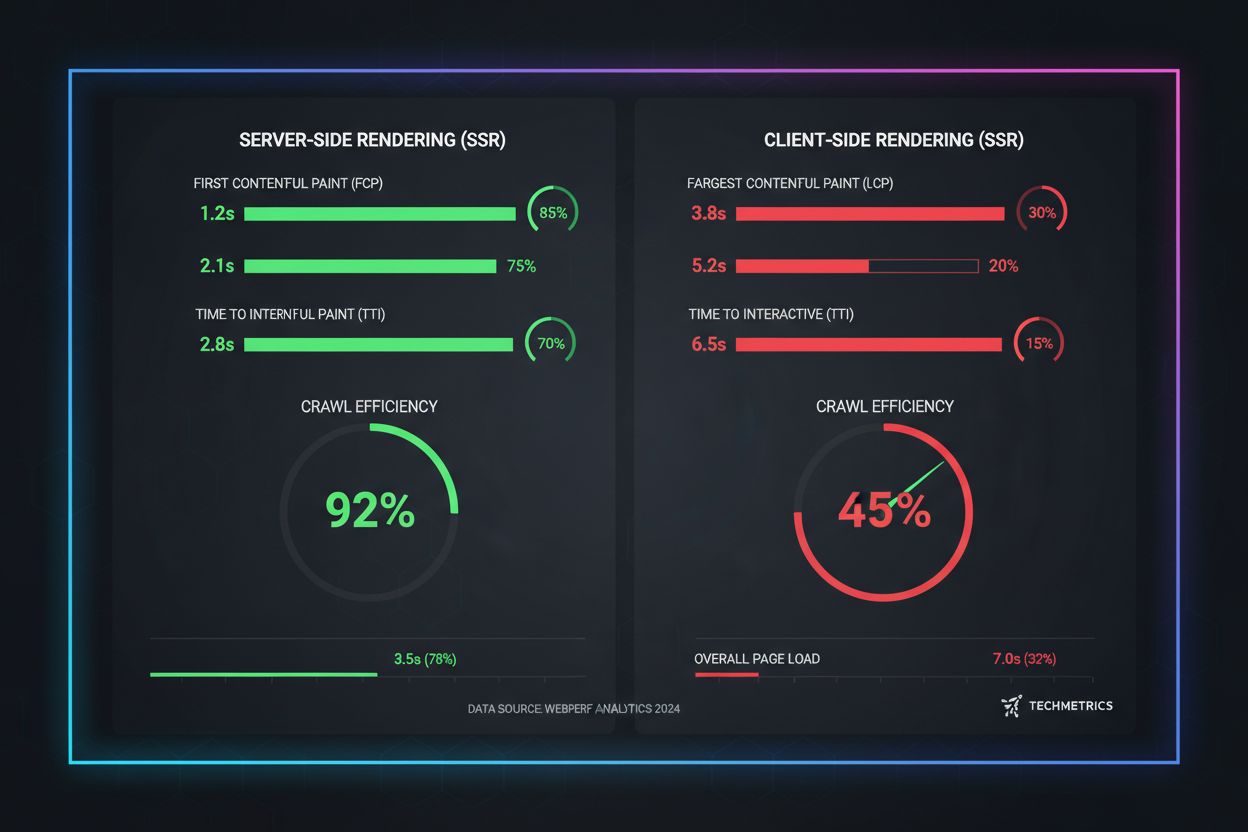

Real-world performance metrics reveal the dramatic differences between rendering approaches when it comes to AI crawlability. SSR and pre-rendered pages achieve First Contentful Paint (FCP) in 0.5-1.5 seconds, while CSR sites often require 2-4 seconds or more as JavaScript downloads and executes. For AI crawlers operating under 1-5 second timeouts, that gap can decide between complete visibility and total invisibility. Crawl efficiency improves dramatically with SSR: a pre-rendered e-commerce site can be fully crawled and indexed in hours, while a CSR equivalent might take weeks as crawlers struggle with JavaScript rendering overhead. Indexing speed improvements are equally significant: SSR sites see new content indexed within 24-48 hours, while CSR sites often experience 7-14 day delays. For time-sensitive content like news articles, product launches, or limited-time offers, this delay directly translates to lost visibility during the critical window when users are actively searching.

The future of SEO is inseparable from AI search visibility, making rendering strategy a critical long-term investment rather than a technical afterthought. AI-powered search is growing quickly: 13.14% of all Google searches now trigger AI Overviews, and platforms like ChatGPT receive over four billion visits monthly, with Perplexity and Claude rapidly gaining adoption. As AI systems become the primary discovery channel for more users, the rendering decisions you make today will determine your visibility tomorrow. Continuous monitoring is essential because AI crawler behavior, timeout thresholds, and JavaScript support evolve as these systems mature. Teams that treat rendering as a one-time migration often find themselves invisible again within months as AI platforms change their crawling strategies. Instead, build rendering optimization into your quarterly planning cycle, include AI visibility checks in your regression testing, and use tools like AmICited to track whether your brand maintains visibility as the AI landscape shifts. The brands winning in AI search are those that treat rendering strategy as a core competitive advantage, not a technical debt to be addressed later.

AI crawlers like GPTBot and ChatGPT-User operate under strict resource constraints and tight timeouts (1-5 seconds) because they must process billions of pages to train language models. Executing JavaScript requires spinning up headless browsers and waiting for asynchronous operations—luxuries that don't scale at the volume required for LLM training. Instead, AI systems focus on extracting clean, static HTML that's immediately available.

Server-Side Rendering delivers fully rendered HTML on the initial request, making all content immediately accessible to AI crawlers without JavaScript execution. This ensures your product details, pricing, reviews, and metadata reach AI systems reliably, increasing the chances your brand gets cited in AI-generated answers and appears in AI Overviews.

Server-Side Rendering (SSR) renders pages on-demand when a request arrives, while pre-rendering generates static HTML files at build time. Pre-rendering works best for content that doesn't change frequently, while SSR is better for dynamic content that updates regularly. Both approaches ensure AI crawlers receive complete HTML without JavaScript execution.
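The distinction can be sketched in a few lines. This is a toy illustration rather than how any particular framework implements it: the `render_product`, `prerender_all`, and `handle_request_ssr` functions, the product data, and the URL scheme are all hypothetical. The same render function is used both ways, and the only difference is when it runs.

```python
from html import escape
from string import Template
from typing import Optional

PAGE = Template("<html><body><h1>${name}</h1><p>Price: ${price}</p></body></html>")


def render_product(product: dict) -> str:
    """Produce complete HTML for one product; no client-side JavaScript needed."""
    return PAGE.substitute(
        name=escape(product["name"]),
        price=escape(product["price"]),
    )


def prerender_all(products: dict) -> dict:
    """Pre-rendering: run once at build time and store the HTML per path."""
    return {f"/products/{slug}": render_product(p) for slug, p in products.items()}


def handle_request_ssr(path: str, products: dict) -> Optional[str]:
    """SSR: look up the current data and render on every request."""
    slug = path.removeprefix("/products/")
    product = products.get(slug)
    return render_product(product) if product else None


products = {"backpack": {"name": "Trail Backpack", "price": "$89.00"}}

static_site = prerender_all(products)       # build step
products["backpack"]["price"] = "$79.00"    # data changes after the build

# The pre-rendered file still shows the build-time price until the next build,
# while the server-rendered response reflects the current data.
print(static_site["/products/backpack"])
print(handle_request_ssr("/products/backpack", products))
```

Either output is complete HTML that a non-rendering crawler can read. The trade-off is freshness versus the cost of rendering on each request.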

A CSR site can still be made visible to AI crawlers, but with significant limitations. You can use pre-rendering tools to generate static HTML snapshots of your CSR pages, or implement hybrid rendering where critical pages are server-rendered while less important pages remain client-rendered. However, without these optimizations, CSR sites are largely invisible to AI crawlers.

Use tools like Screaming Frog (Text Only mode), Chrome DevTools, or Google Search Console to compare what bots see versus what users see. If critical content is missing from the page source, it's JavaScript-dependent and invisible to AI crawlers. You can also test directly with ChatGPT, Perplexity, and Claude to verify they can access your content.

SSR and pre-rendered pages typically achieve First Contentful Paint (FCP) in 0.5-1.5 seconds, while CSR sites often require 2-4+ seconds. Since AI crawlers operate under 1-5 second timeouts, faster rendering directly translates to better AI crawlability. Improved Core Web Vitals also benefit user experience and traditional SEO rankings.

AmICited monitors how AI systems cite your brand across ChatGPT, Perplexity, and Google AI Overviews. By tracking your AI visibility before and after implementing SSR or pre-rendering, you can quantify the direct impact of rendering decisions on brand citations and AI search presence.

The right rendering strategy depends on your content freshness requirements and business priorities. Static content benefits from SSG, frequently-updated content from SSR, and interactive features can layer JavaScript on top of server-rendered HTML. Start by identifying high-value pages (product pages, pricing, documentation) and prioritize those for SSR or pre-rendering first.

