Data-Driven PR: Creating Research That AI Wants to Cite

Learn how to create original research and data-driven PR content that AI systems actively cite. Discover the 5 attributes of citation-worthy content and strateg...

Learn how proprietary survey data and original statistics become citation magnets for LLMs. Discover strategies to improve AI visibility and earn more citations from ChatGPT, Perplexity, and Google AI Overviews.

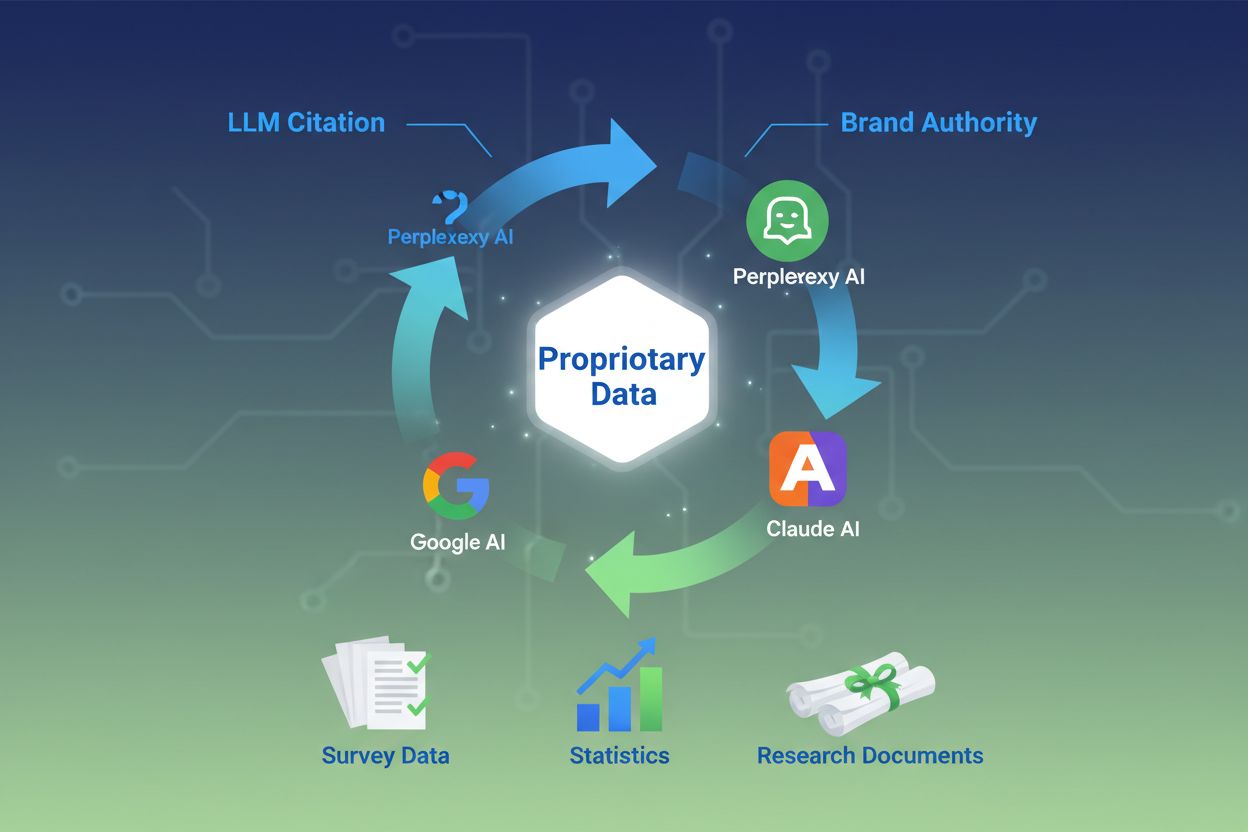

Large Language Models don’t invent data—they pull it from verifiable sources. When your team publishes unique statistics or original methodologies, you temporarily own that knowledge, giving LLMs a reason to cite you to validate their responses. This is the foundation of what IDX calls the “Authority Flywheel,” a system where proprietary research becomes your most powerful citation magnet.

The mechanics are straightforward: AI models evaluate sources based on whether they can verify claims through multiple channels. When you publish original research, you create a knowledge asset that exists nowhere else on the web. This uniqueness forces LLMs to cite your source if they want to include that data in their answers. A campaign for The Zebra, an insurance platform, demonstrates this principle perfectly—combining proprietary research with Digital PR generated more than 1,580 high-quality media links and drove a 354% increase in organic traffic.

According to recent research, 48.6% of SEO experts identified Digital PR as the most effective link-building tactic for 2025. But the real power lies in what happens after: when your proprietary data gets distributed across diverse, high-tier domains through Digital PR, it confirms your authority within multiple knowledge networks simultaneously. This multi-channel validation is exactly what LLMs look for when deciding whether to cite your brand.

The key insight: proprietary data creates what researchers call “temporary knowledge ownership.” Unlike generic content that competes with thousands of similar articles, your original research is the only source for that specific data. This scarcity principle makes LLMs more likely to cite you, because citing you is the only way to include that information in their responses.

Understanding how LLMs actually retrieve and select sources is critical to optimizing for citations. These systems don’t work like traditional search engines. Instead, they operate through two distinct knowledge pathways: parametric memory (knowledge stored during training) and retrieved knowledge (real-time information fetched through Retrieval-Augmented Generation, or RAG).

Parametric knowledge represents everything an LLM “knows” from pre-training. This knowledge is static and fixed at the model’s training cutoff. Approximately 60% of ChatGPT queries are answered purely from parametric knowledge without triggering any web search. Entities mentioned frequently across authoritative sources during training develop stronger neural representations, making them more likely to be recalled. Wikipedia content represents about 22% of major LLM training data, which explains why Wikipedia citations appear so frequently in AI-generated answers.

Retrieved knowledge operates differently. When an LLM needs current information, it uses RAG systems that combine semantic search (dense vectors) with keyword matching (BM25) using Reciprocal Rank Fusion. Research shows that hybrid retrieval delivers 48% improvement over single-method approaches. The system then reranks results using cross-encoder models before injecting the top 5-10 chunks into the LLM’s prompt as context.

| Signal | Traditional SEO Priority | LLM Citation Priority | Why It Matters |

|---|---|---|---|

| Domain Authority | High (core ranking factor) | Weak/Neutral | LLMs prioritize content structure over domain power |

| Backlink Quantity | High (primary signal) | Weak/Neutral | LLMs evaluate source credibility differently |

| Content Structure | Medium | Critical | Clear headings and answer blocks are essential for extraction |

| Proprietary Data | Low | Very High | Unique information forces citation |

| Brand Search Volume | Low | Highest (0.334 correlation) | Indicates real-world authority and demand |

| Freshness | Medium | High | LLMs prefer recently updated content |

| E-E-A-T Signals | Medium | High | Author credentials and transparency matter |

The critical difference: LLMs don’t rank pages—they extract semantic chunks. A page with poor traditional SEO metrics but crystal-clear structure and proprietary data can outperform a high-authority page with vague positioning. This fundamental shift means your citation strategy must prioritize machine readability and content clarity over traditional link-building metrics.

The metrics that matter for AI visibility have fundamentally shifted from traditional SEO signals. For two decades, domain authority, backlinks, and keyword rankings defined success. In 2025, these metrics have become almost irrelevant for LLM citations. Instead, a new hierarchy has emerged based on how AI systems actually evaluate and select sources.

Brand search volume is now the strongest predictor of LLM citations, with a 0.334 correlation coefficient—significantly higher than any traditional SEO metric. This makes intuitive sense: if millions of people search for your brand name, it signals real-world authority and demand. LLMs recognize this signal and weight it heavily when deciding whether to cite you. Meanwhile, backlinks show weak or neutral correlation with AI citations, contradicting decades of SEO wisdom.

The shift extends to content evaluation. Adding statistics to your content increases AI visibility by 22%. Including quotations boosts visibility by 37%. Original research is cited 3x more often than generic content. These aren’t marginal improvements—they represent fundamental changes in how LLMs evaluate source quality.

| Metric | Old Focus (Pre-2024) | New Focus (2025+) | Impact on LLM Citations |

|---|---|---|---|

| Link Quality Indicator | Domain Authority Score (DA/DR) | Topical Relevance & Editorial Context | Grounding and source diversity |

| Anchor Text Strategy | Exact Match Keywords | Branded/Entity Mentions | Entity recognition and consistency |

| Content Type | Guest Posts (Volume) | Original Research/Data Journalism | 3x higher citation likelihood |

| Goal Measurement | Ranking Position Increase | Citation Rate in AI Overviews | Trust and authority validation |

| Outreach Approach | Acquiring Links | Building Relationships/Providing Value | Higher editorial quality |

This matrix reveals a critical insight: the brands winning in AI visibility aren’t necessarily those with the most backlinks or highest domain authority. They’re the brands creating original research, maintaining consistent brand signals, and publishing content structured for machine extraction. The competitive advantage has shifted from link quantity to content quality and uniqueness.

Proprietary survey data serves a unique role in AI visibility strategy. Unlike generic industry reports that LLMs can find from multiple sources, your original survey data can only be cited from your website. This creates a citation advantage that competitors cannot replicate, no matter how strong their backlink profiles are.

Survey data works because it provides what LLMs call “grounding”—verifiable evidence that validates claims. When you state that “78% of marketing leaders prioritize AI visibility,” LLMs can cite your survey as proof. Without this proprietary data, the same claim would be speculative, and LLMs would either skip it or cite a competitor’s research instead.

The most effective survey data addresses specific questions your target audience is asking:

The impact is measurable. Research shows that adding statistics increases AI visibility by 22%, while quotations increase visibility by 37%. Original research is cited 3x more often than generic content. These multipliers compound when you combine multiple types of proprietary data in a single content asset.

The key is transparency. LLMs evaluate methodology as carefully as findings. If your survey methodology is sound, sample size is adequate, and findings are presented honestly (including limitations), LLMs will cite you confidently. If methodology is vague or findings seem cherry-picked, LLMs will deprioritize your source in favor of more transparent competitors.

Publishing proprietary data is only half the battle. The other half is structuring that data so LLMs can easily extract and cite it. Content architecture matters as much as the data itself.

Start with direct answers. LLMs prefer content that leads with the answer, not the journey. Instead of “We conducted a survey to understand marketing priorities, and here’s what we found,” write “78% of marketing leaders now prioritize AI visibility in their 2025 strategy.” This direct structure makes extraction easier and increases citation probability.

Optimal paragraph length for LLM extraction is 40-60 words. This length allows LLMs to pull a complete thought without truncation. Longer paragraphs get chunked, potentially losing context. Shorter paragraphs might not contain enough information to be useful.

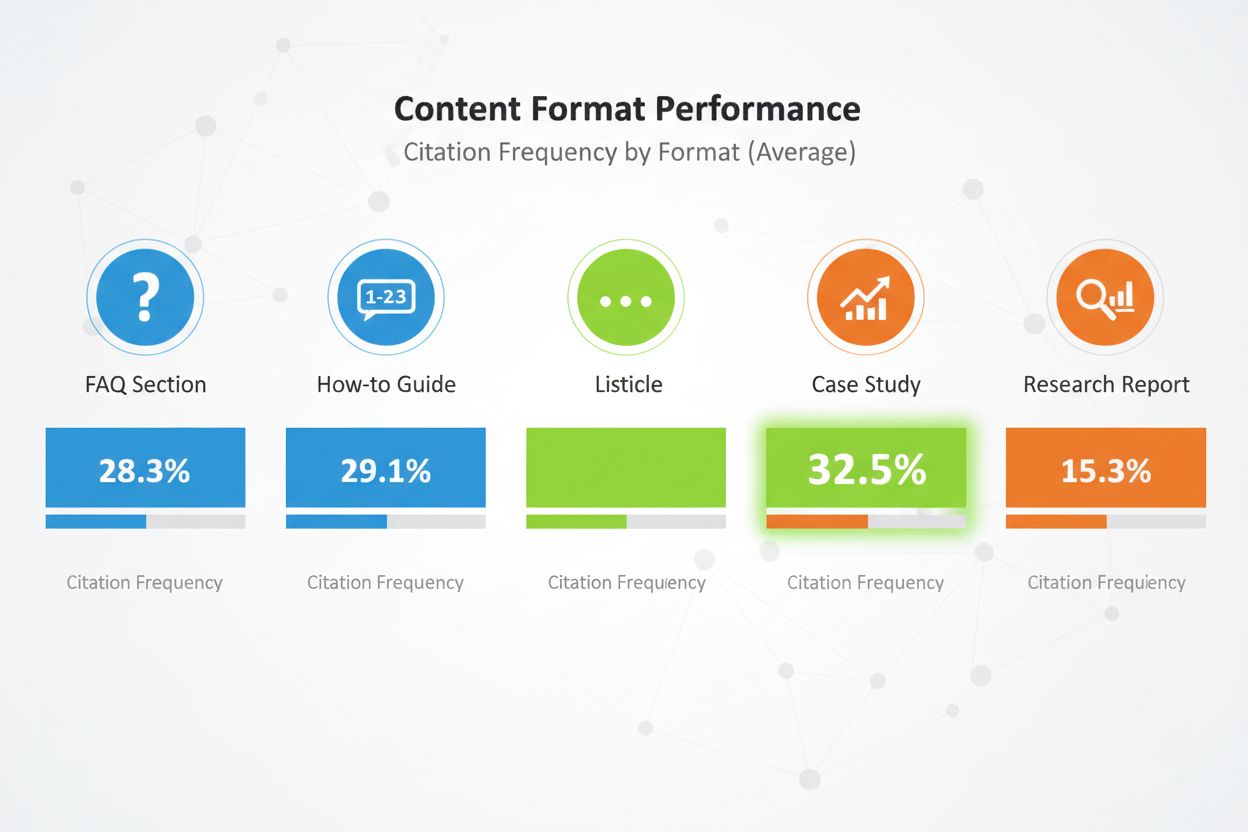

Content formats matter significantly. Comparative listicles receive 32.5% of all AI citations—the highest rate of any format. FAQ sections perform exceptionally well because they match how users query AI systems. How-to guides, case studies, and research reports all perform well, but listicles consistently outperform other formats.

Structure your content with clear heading hierarchy. Use H2 headings that mirror likely search queries. Under each H2, use H3 headings for sub-topics. This hierarchy helps LLMs understand your content structure and extract relevant sections.

Implement E-E-A-T signals throughout. Include author bios with credentials and real-world experience. Link to third-party validation of your claims. Be transparent about methodology. Cite your sources. These signals tell LLMs that your content is trustworthy and worth citing.

Use semantic HTML throughout. Structure data with proper <table>, <ul>, and <ol> tags rather than CSS-styled divs. This makes your content easier for AI to parse and summarize. Include schema markup (Article, FAQPage, HowTo) to provide additional context about your content type.

Finally, update your content regularly. LLMs prefer fresher content, especially for time-sensitive data. If your survey is from 2024, update it in 2025. Add “Last Updated” timestamps to show you actively maintain your content. This signals to LLMs that your data remains current and reliable.

Publishing proprietary data on your website is necessary but insufficient. LLMs discover content through multiple channels, and your distribution strategy determines how many of those channels carry your data.

Digital PR is the most effective distribution channel for proprietary data. When your research appears in industry publications, news outlets, and authoritative blogs, it creates multiple citation opportunities. LLMs index these third-party mentions and use them to validate your original source. A brand that appears on 4+ platforms is 2.8x more likely to be cited in ChatGPT responses compared to brands with limited platform presence.

Effective distribution channels include:

Each distribution channel serves a purpose. Press releases create initial awareness and earn media coverage. Industry publications provide credibility and reach decision-makers. LinkedIn amplification reaches professionals at scale. Reddit engagement demonstrates community trust. Review platforms provide structured data that LLMs can easily parse.

The multiplier effect is significant. When your proprietary data appears in multiple authoritative sources, LLMs see consistent signals across the web. This consistency increases confidence in your data and makes citation more likely. A single mention on your website might get overlooked. The same data mentioned on your website, in a press release, on an industry publication, and in a review platform becomes impossible to ignore.

Timing matters too. Distribute your proprietary data strategically. Release it first on your website with a press release. Follow up with industry publication placements. Then amplify through social channels and community engagement. This staggered approach creates a sustained visibility wave rather than a single spike.

Publishing proprietary data without measuring its impact is like running ads without tracking conversions. You need visibility into whether your data is actually earning citations and improving your AI visibility.

Start with citation frequency tracking. Identify 20-50 high-value buyer questions that your proprietary data answers. Query major AI platforms (ChatGPT, Perplexity, Claude, Google AI Overviews) monthly using these questions. Document whether your brand appears, in what position, and whether the citation includes a link to your website.

Calculate your citation frequency as a percentage: (Prompts where you’re mentioned) / (Total prompts) × 100. Aim for 30%+ citation frequency for your core category queries. Top-performing brands in competitive categories achieve 50%+ citation frequency.

Track AI Share of Voice (AI SOV) by running identical prompts and calculating your percentage of total brand mentions. If your brand appears in 3 out of 10 AI responses while competitors appear in 2 each, your AI SOV is 30%. In competitive categories, aim for AI SOV exceeding your traditional market share by 10-20%.

Monitor sentiment analysis. Beyond just being mentioned, track whether AI systems describe your brand positively, neutrally, or negatively. Use tools like Profound AI that specialize in hallucination detection—identifying when AI provides false or outdated information about your brand. Aim for 70%+ positive sentiment across AI platforms.

Set up a Knowledge-Based Indicator (KBI) dashboard tracking:

Update these metrics monthly. Look for trends rather than individual data points. A single month of low citations might be noise. Three months of declining citations indicates a problem requiring investigation and response.

Tracking proprietary data citations manually is time-consuming and error-prone. AmICited.com provides the infrastructure to monitor your AI visibility at scale, specifically designed for brands using proprietary data as a citation strategy.

The platform monitors how AI systems cite your proprietary research across ChatGPT, Perplexity, Google AI Overviews, Claude, Gemini, and emerging AI platforms. Rather than manually querying each platform monthly, AmICited automates the process, running your target prompts continuously and tracking citation patterns in real-time.

Key features include:

The platform integrates with your existing analytics stack, feeding AI citation data into your marketing dashboards alongside traditional SEO metrics. This unified view helps you understand the full impact of your proprietary data strategy on brand visibility and pipeline generation.

For brands serious about AI visibility, AmICited provides the measurement infrastructure that makes optimization possible. You can’t improve what you can’t measure, and traditional analytics tools were never designed to track LLM citations. AmICited fills that gap, giving you the visibility you need to maximize ROI on your proprietary data investments.

Even well-intentioned proprietary data strategies often fail due to preventable mistakes. Understanding these pitfalls helps you avoid them.

The most common mistake is hiding data behind “Contact Sales” forms. LLMs cannot access gated content, so they’ll rely on incomplete or speculative information from forums instead. If your survey findings are hidden, LLMs will cite a Reddit discussion about your product rather than your official research. Publish key findings publicly with transparent methodology. You can gate detailed reports while keeping summary data and insights publicly available.

Inconsistent terminology across platforms creates confusion. If your website calls your product a “marketing automation platform” while your LinkedIn profile describes it as “CRM software,” LLMs struggle to build a coherent understanding of your business. Use consistent category language everywhere. Define your terminology map and apply it uniformly across your website, LinkedIn, Crunchbase, and other platforms.

Missing author credentials undermine trust. LLMs evaluate E-E-A-T signals carefully. If your survey lacks author bios with real credentials, LLMs deprioritize it. Include detailed author bios with relevant experience, certifications, and previous publications. Link to author profiles on LinkedIn and other platforms.

Outdated statistics damage credibility. If your survey is from 2023 but you’re still citing it in 2025, LLMs notice. Update your research regularly. Add “Last Updated” timestamps. Conduct new surveys annually to maintain freshness. LLMs prefer recent data, especially for time-sensitive topics.

Vague methodology reduces citation probability. If your survey methodology isn’t transparent, LLMs question the validity of your findings. Publish your methodology openly. Explain sample size, sampling method, survey period, and any limitations. Transparency builds confidence.

Keyword stuffing in your proprietary data content performs worse in AI systems than in traditional search. LLMs detect and penalize artificial language. Write naturally. Focus on clarity and accuracy rather than keyword density. Your proprietary data should read like genuine research, not marketing copy.

Thin content around your proprietary data gets actively penalized. A single paragraph mentioning your survey findings isn’t enough. Create comprehensive content that explores implications, provides context, and answers follow-up questions. Aim for 2,000+ words of substantive content around each major proprietary data asset.

Real-world examples demonstrate the power of proprietary data for AI visibility. These brands invested in original research and saw measurable results.

The Zebra’s Digital PR Success: The Zebra, an insurance comparison platform, combined proprietary research with Digital PR to generate 1,580+ high-quality media links and drive a 354% increase in organic traffic. By publishing original insurance industry research and distributing it through earned media, The Zebra became the go-to source for insurance data. LLMs now cite The Zebra’s research when answering questions about insurance trends and pricing.

Tally’s Community Engagement Strategy: Tally, an online form builder, improved its AI visibility by actively engaging in community forums and sharing its product roadmap. Rather than just publishing research, Tally became a trusted voice in communities where its users congregate. This authentic engagement made ChatGPT a top referral source, driving significant weekly signup increases. By grounding GPT-4 in curated, context-specific evidence, Tally raised its factual accuracy from 56% to 89%.

HubSpot’s Ongoing Research Program: HubSpot publishes regular research reports on marketing trends, sales effectiveness, and customer service best practices. These reports have become industry standards that LLMs cite frequently. HubSpot’s commitment to continuous research has made the brand synonymous with marketing data and insights. When LLMs answer questions about marketing trends, HubSpot research appears consistently.

These case studies share common elements: original research, transparent methodology, consistent distribution, and ongoing updates. None of these brands relied on a single research project. Instead, they built research programs that continuously generate new proprietary data, creating a sustained citation advantage.

The lesson is clear: proprietary data isn’t a one-time tactic. It’s a strategic investment in becoming the authoritative source in your category. Brands that commit to regular research, transparent methodology, and strategic distribution earn consistent citations from LLMs and build lasting competitive advantages in AI visibility.

You don't need massive datasets. Even a focused survey of 100-500 respondents can provide valuable proprietary insights that LLMs will cite. The key is that the data is original, methodology is transparent, and findings are actionable. Quality and uniqueness matter more than quantity.

Customer satisfaction surveys, industry trend research, competitive analysis, user behavior studies, and market sizing research all perform well. The best data answers specific questions your target audience is asking and provides insights competitors don't have.

Real-time platforms like Perplexity may cite fresh data within weeks. ChatGPT and other models with less frequent updates may take 2-3 months. Consistent, high-quality proprietary data typically shows measurable citation increases within 3-6 months.

No. LLMs cannot access gated content, so they'll rely on incomplete or speculative information from forums instead. Publish key findings publicly with transparent methodology. You can gate detailed reports while keeping summary data and insights publicly available.

Use clear, consistent terminology across all platforms. Include transparent methodology in your research. Add author credentials and certifications. Link to third-party validation. Use schema markup to structure your data. Monitor citations monthly and correct inaccuracies quickly.

Yes. Original research typically earns backlinks and media coverage, which improves traditional rankings. Additionally, proprietary data creates content that's more comprehensive and authoritative, which helps with both traditional SEO and AI visibility.

Proprietary data is original research you conduct yourself. Generic reports are widely available. LLMs prefer proprietary data because it's unique and can only be cited from your source. This creates a citation advantage that competitors can't easily replicate.

Track citation frequency, AI Share of Voice, branded search volume, and traffic from AI platforms. Compare these metrics before and after publishing proprietary data. Calculate the value of AI-referred traffic (typically 4.4x higher conversion rate than traditional organic) to determine ROI.

Monitor how AI systems cite your proprietary data across ChatGPT, Perplexity, Google AI Overviews, and more. Get real-time insights into your AI visibility and competitive positioning.

Learn how to create original research and data-driven PR content that AI systems actively cite. Discover the 5 attributes of citation-worthy content and strateg...

Learn proven strategies to build authoritativeness and increase your brand's visibility in AI-generated answers from ChatGPT, Perplexity, and other AI search en...

Learn how citation authority works in AI-generated answers, how different platforms cite sources, and why it matters for your brand's visibility in AI search en...