How Do I Structure Content for AI Citations? Complete Guide for 2025

Learn how to structure your content to get cited by AI search engines like ChatGPT, Perplexity, and Google AI. Expert strategies for AI visibility and citations...

Learn how to test content formats for AI citations using A/B testing methodology. Discover which formats drive the highest AI visibility and citation rates across ChatGPT, Google AI Overviews, and Perplexity.

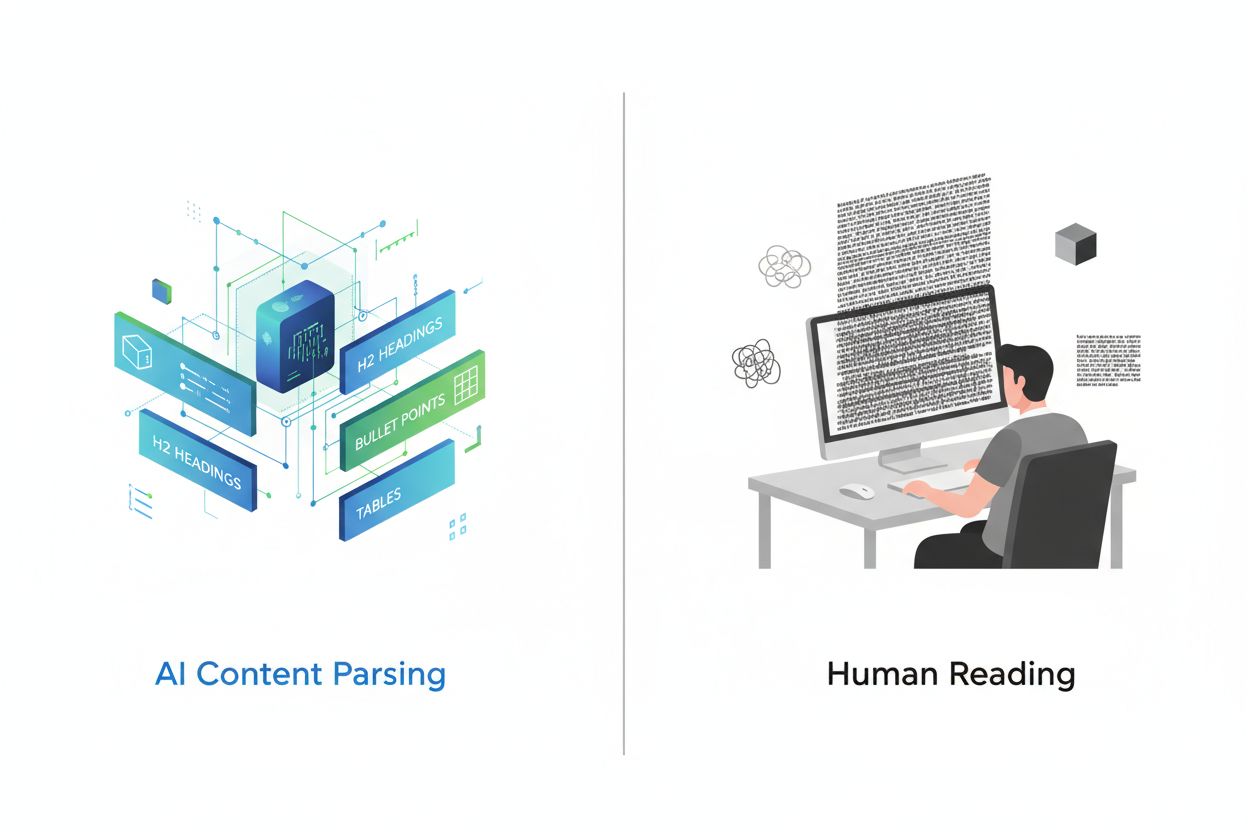

Artificial intelligence systems process content differently from human readers, relying on structural signals to understand meaning and extract information. Humans can navigate creative formatting or dense prose, but AI models depend on clear organizational hierarchies and semantic markers to parse content and judge its value. Research reports that structured content with proper heading hierarchies achieves citation rates 156% higher than unstructured alternatives, revealing a significant gap between human-friendly and AI-friendly content. A likely explanation is that AI systems are trained on vast datasets in which well-organized content tends to correlate with authoritative, reliable sources. Understanding and testing different content formats has therefore become essential for brands seeking visibility in AI-powered search results and answer engines.

Different AI platforms show distinct preferences for content sources and formats, which makes optimization complex. Research analyzing 680 million citations across major platforms reveals striking differences in how ChatGPT, Google AI Overviews, and Perplexity source their information. Rather than citing the same sources, each platform prioritizes different types of content based on its underlying algorithms and training data. Understanding these platform-specific patterns is crucial for developing targeted content strategies that maximize visibility across multiple AI systems.

| Platform | Top Cited Source | Citation Percentage | Preferred Format |
|---|---|---|---|
| ChatGPT | Wikipedia | 7.8% of total citations | Authoritative knowledge bases, encyclopedic content |
| Google AI Overviews | Reddit | 2.2% of total citations | Community discussions, user-generated content |
| Perplexity | Reddit | 6.6% of total citations | Peer-to-peer information, community insights |

ChatGPT's strong preference for Wikipedia points to a bias toward authoritative, factual content with established credibility: Wikipedia accounts for 47.9% of the citations among ChatGPT's top 10 sources. Google AI Overviews and Perplexity, by contrast, both draw most heavily on Reddit. Perplexity leans hardest on community-driven information, which makes up 46.7% of its top sources. Google AI Overviews spreads its citations across a more diverse mix of platform types. The data suggests that a one-size-fits-all content strategy is unlikely to work equally well everywhere, so brands should tailor their approach to the AI platforms that matter most for their audience.
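The two kinds of figures above measure different things. The table reports a source's share of a platform's total citations. The 47.9% and 46.7% figures are shares within a platform's top 10 sources. The sketch below shows how both numbers could be computed from the same data. It assumes a hypothetical log of `(platform, cited_domain)` pairs, such as an export from a citation tracker. The function names and sample rows are illustrative only.

```python
from collections import Counter, defaultdict

def count_citations(citations):
    """Group (platform, cited_domain) pairs into one Counter per platform."""
    counts = defaultdict(Counter)
    for platform, domain in citations:
        counts[platform][domain] += 1
    return counts

def share_of_total(counter, domain):
    """Fraction of ALL of a platform's citations that point at `domain`."""
    total = sum(counter.values())
    return counter[domain] / total if total else 0.0

def share_of_top_n(counter, domain, n=10):
    """Fraction of citations among the platform's n most-cited domains."""
    top = counter.most_common(n)
    top_total = sum(count for _, count in top)
    hits = sum(count for d, count in top if d == domain)
    return hits / top_total if top_total else 0.0

# Hypothetical log: in practice these rows would come from a citation tracker.
log = (
    [("chatgpt", "wikipedia.org")] * 6
    + [("chatgpt", "example.com")] * 3
    + [("chatgpt", "example.org")] * 1
)
counts = count_citations(log)
print(share_of_total(counts["chatgpt"], "wikipedia.org"))       # 0.6
print(share_of_top_n(counts["chatgpt"], "wikipedia.org", n=2))  # 6 / 9, about 0.667
```

Because a long tail of rarely cited domains inflates the denominator of the first measure, a source's share of total citations is always lower than or equal to its share within the top n.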

Schema markup is perhaps the most significant factor in AI citation probability. Properly implemented JSON-LD markup achieves citation rates 340% higher than identical content without structured data. The difference stems from how AI engines interpret semantic meaning: structured data provides explicit context that removes ambiguity from content interpretation. When an AI engine encounters schema markup, it can read entity relationships, content types, and hierarchical importance directly instead of inferring them through natural language processing alone.

The most effective schema implementations include:

- Article schema for blog posts
- FAQ schema for question-and-answer sections
- HowTo schema for instructional content
- Organization schema for brand recognition

JSON-LD tends to outperform inline structured data formats such as Microdata and RDFa because it lives in its own `<script>` block. AI engines can therefore parse it independently of the HTML content, which makes data extraction cleaner and simpler. Semantic HTML elements like `<header>`, `<nav>`, `<main>`, `<section>`, and `<article>` add further clarity. They help AI systems understand content structure and hierarchy more effectively than generic, non-semantic markup.
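To illustrate why JSON-LD is easy to consume separately from the page layout, the sketch below uses Python's standard-library `html.parser`. It pulls JSON-LD blocks out of a page without interpreting any of the surrounding HTML. This is an assumption-level illustration of the idea, not a description of how any particular AI crawler actually works. The class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._chunks = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._chunks = []

    def handle_data(self, data):
        # Only text inside a JSON-LD script is kept; all other page text is ignored.
        if self._in_jsonld:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append(json.loads("".join(self._chunks)))
            self._in_jsonld = False

def extract_jsonld(html):
    """Return every JSON-LD object found in an HTML string."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.blocks

page = """<main><article><h1>Lists vs. paragraphs</h1><p>Body text...</p></article>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Article", "headline": "Lists vs. paragraphs"}</script></main>"""
print(extract_jsonld(page)[0]["@type"])  # Article
```

With Microdata or RDFa, the same facts would be spread across attributes on the visible elements. Extracting them requires walking and interpreting the whole document tree.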

A/B testing provides the most reliable methodology for determining which content formats drive the highest AI citation rates in your specific niche. Rather than relying on general best practices, controlled experiments allow you to measure the actual impact of format changes on your audience and AI visibility. The process requires careful planning to isolate variables and ensure statistical validity, but the insights gained justify the investment.

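A systematic A/B testing framework follows four principles:

- Establish baseline metrics before making any change.
- Alter only the format being tested.
- Assign content to variations at random.
- Keep the test running until results reach statistical significance.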

Statistical significance requires careful attention to sample size and test duration. In AI applications with sparse data or long-tail distributions, gathering sufficient observations quickly can be challenging. Most experts recommend running tests for at least 2-4 weeks to account for temporal variations and ensure reliable results.
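The statistical-significance check can be made concrete with a standard two-proportion z-test. This minimal sketch assumes that each tracked AI response (for example, one monitored prompt run) is a trial that either cites the tested page or does not. That framing and the counts are hypothetical. The normal approximation is only reasonable when each variation has a healthy number of both cited and uncited responses.

```python
import math

def two_proportion_z_test(cited_a, checked_a, cited_b, checked_b):
    """Two-sided z-test comparing the citation rates of variations A and B.

    cited_x   -- tracked AI responses that cited variation x
    checked_x -- tracked AI responses checked for variation x
    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = cited_a / checked_a
    rate_b = cited_b / checked_b
    # Pooled rate under the null hypothesis that both variations perform the same.
    pooled = (cited_a + cited_b) / (checked_a + checked_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / checked_a + 1 / checked_b))
    if se == 0:  # both rates are 0% or both are 100%: no evidence of a difference
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # P(|Z| >= |z|) for a standard normal
    return rate_a, rate_b, z, p_value

# Hypothetical counts: 40 of 400 checks cited variation A, 64 of 400 cited B.
rate_a, rate_b, z, p = two_proportion_z_test(40, 400, 64, 400)
print(f"A={rate_a:.1%}  B={rate_b:.1%}  z={z:.2f}  p={p:.4f}")
```

A p-value below your chosen threshold (commonly 0.05) suggests the difference is unlikely to be random variation alone. The test still assumes the variations differ only in format.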

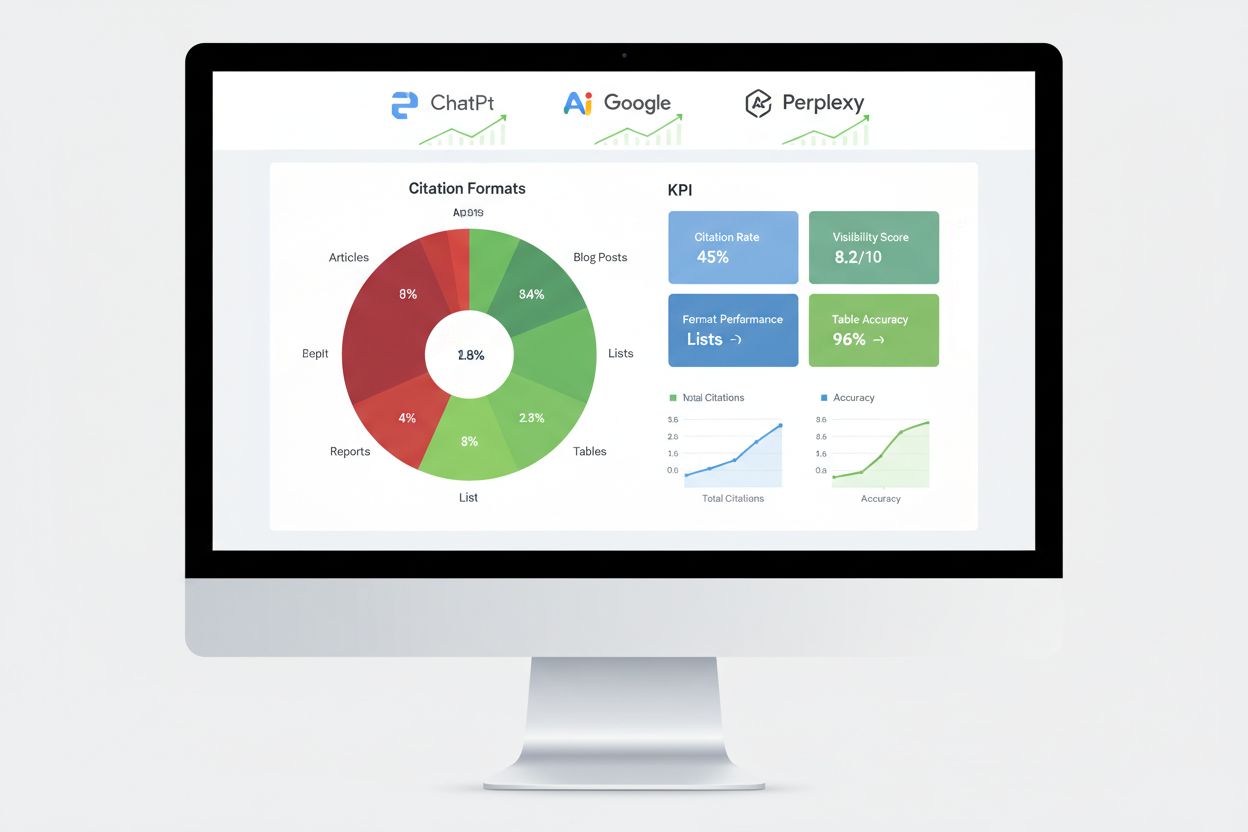

Research across thousands of AI citations reveals clear performance hierarchies among different content formats. List-based content receives 68% more AI citations than paragraph-heavy alternatives, primarily because lists provide discrete, parseable information units that AI engines can easily extract and synthesize. When generating responses, AI platforms can reference specific list items without requiring complex sentence restructuring or paraphrasing, making list-based content highly valuable for citation purposes.

Tables demonstrate exceptional performance with up to 96% accuracy in AI parsing, significantly outperforming prose descriptions of identical information. Tabular content allows AI systems to quickly extract specific data points without complex text parsing, making tables particularly valuable for factual, comparative, or statistical content. Question-and-answer formats achieve 45% higher AI visibility compared to traditional paragraph formats covering identical topics, because Q&A content mirrors how users interact with AI platforms and how AI systems generate responses.
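Publishing comparative data as a real table, rather than as prose, gives every value an explicit row and column label. This minimal sketch renders rows into an HTML table with a caption and `<th scope="col">` header cells. The helper name and sample plan data are hypothetical. Values are HTML-escaped so they cannot break the markup.

```python
from html import escape

def to_html_table(columns, rows, caption=None):
    """Render rows (sequences aligned with `columns`) as an HTML table string."""
    lines = ["<table>"]
    if caption:
        lines.append(f"  <caption>{escape(caption)}</caption>")
    # Header cells label each column so every value has an explicit meaning.
    header = "".join(f'<th scope="col">{escape(str(c))}</th>' for c in columns)
    lines.append(f"  <thead><tr>{header}</tr></thead>")
    lines.append("  <tbody>")
    for row in rows:
        if len(row) != len(columns):
            raise ValueError(f"expected {len(columns)} cells, got {len(row)}: {row!r}")
        cells = "".join(f"<td>{escape(str(value))}</td>" for value in row)
        lines.append(f"    <tr>{cells}</tr>")
    lines.append("  </tbody>")
    lines.append("</table>")
    return "\n".join(lines)

# Hypothetical comparison data.
print(to_html_table(
    ["Feature", "Plan A", "Plan B"],
    [["Storage", "10 GB", "50 GB"], ["Seats", 3, 10]],
    caption="Plan A vs. Plan B",
))
```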

Comparison formats (X vs Y) perform exceptionally well because they provide binary, easy-to-summarize structures that align with how AI systems expand queries into subtopics. Case studies blend narrative with data, making them persuasive for readers while remaining interpretable for AI through their problem-solution-results structure. Original research and expert insights receive preferential treatment because they provide proprietary data unavailable elsewhere, adding credibility signals that AI systems recognize and reward. The key insight is that no single format works universally—the best approach combines multiple formats strategically based on your content type and target AI platforms.

Implementing schema markup requires understanding the different types available and selecting those most relevant to your content. For blog posts and articles, Article schema provides comprehensive metadata including author, publication date, and content structure. FAQ schema works exceptionally well for question-and-answer sections, explicitly labeling questions and answers so AI systems can extract them reliably. HowTo schema benefits instructional content by defining sequential steps, while Product schema helps e-commerce sites communicate specifications and pricing.

```json
{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is the best content format for AI citations?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "The best content format depends on your platform and audience, but structured formats like lists, tables, and Q&A sections consistently achieve higher AI citation rates. Lists receive 68% more citations than paragraphs, while tables achieve 96% parsing accuracy."
      }
    }
  ]
}
```
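Instead of hand-writing this markup for every page, you can generate it from the question-and-answer pairs already on the page. The sketch below uses only Python's standard library. The helper names `faq_jsonld` and `as_script_tag` are hypothetical. Escaping `</` inside the serialized JSON is a common precaution so that answer text cannot terminate the `<script>` element early.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

def as_script_tag(data):
    """Serialize JSON-LD for embedding in a page's HTML."""
    # "<\/" is a valid JSON escape for "</", so answer text can't close the tag early.
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

print(as_script_tag(faq_jsonld([
    ("What is the best content format for AI citations?",
     "Structured formats like lists, tables, and Q&A sections."),
])))
```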

Implementation requires careful attention to syntax, because invalid schema markup can actually harm AI citation chances rather than improve them. Verify your markup with Google's Rich Results Test or the Schema.org validator before publishing.

Beyond schema, keep the visible formatting predictable:

- Use H2s for main sections and H3s for subpoints, consistently.
- Keep paragraphs short (no more than 50-75 words), each focused on a single concept.
- Add TL;DR summaries at the beginning or end of sections. These give AI systems ready-made snippets that can stand alone as usable answers.
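Before you run Google's Rich Results Test or the Schema.org validator, a quick local check can catch the most obvious mistakes. This minimal sketch uses hypothetical helper names and is not a substitute for the official validators. It confirms that FAQPage JSON-LD parses and that every question has a name and answer text. It also flags paragraphs longer than 75 words, treating blank lines as paragraph breaks.

```python
import json

MAX_WORDS = 75  # upper end of the 50-75 word paragraph guideline

def check_faq_jsonld(raw):
    """Return a list of obvious problems in a FAQPage JSON-LD string."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    problems = []
    if data.get("@type") != "FAQPage":
        problems.append("@type is not FAQPage")
    entities = data.get("mainEntity") or []
    if isinstance(entities, dict):  # a single Question may be given without a list
        entities = [entities]
    if not entities:
        problems.append("mainEntity is missing or empty")
    for i, question in enumerate(entities):
        if not isinstance(question, dict) or question.get("@type") != "Question" or not question.get("name"):
            problems.append(f"mainEntity[{i}]: not a Question with a name")
            continue
        answer = question.get("acceptedAnswer") or {}
        if not isinstance(answer, dict) or answer.get("@type") != "Answer" or not answer.get("text"):
            problems.append(f"mainEntity[{i}]: acceptedAnswer has no Answer text")
    return problems

def long_paragraphs(text, max_words=MAX_WORDS):
    """Return (index, word_count) for blank-line-separated paragraphs over the limit."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    counts = [(i, len(p.split())) for i, p in enumerate(paragraphs)]
    return [(i, n) for i, n in counts if n > max_words]
```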

Measuring AI engine performance requires different metrics than traditional SEO, focusing on citation tracking, response inclusion rates, and knowledge graph mentions rather than ranking positions. Citation monitoring across major platforms provides the most direct insight into whether your format testing efforts are succeeding, revealing which content pieces AI systems actually reference. Tools like AmICited specifically track how AI platforms cite your brand across ChatGPT, Google AI Overviews, Perplexity, and other answer engines, providing visibility into citation patterns and trends.

Essential measurement approaches include tracking featured snippet capture rates, which indicate content that AI systems find particularly valuable for direct answers. Knowledge panel appearances signal that AI systems recognize your brand as an authoritative entity worthy of dedicated information displays. Voice search result inclusions show whether your content appears in conversational AI responses, while generative engine response rates measure how frequently AI systems reference your content when answering user queries. A/B testing different format approaches provides the most reliable performance data by isolating single variables to identify specific impact factors. Establish baseline metrics before implementing changes, then monitor performance weekly to identify trends and anomalies that might indicate successful or unsuccessful format variations.
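Once you decide what to count, comparing weekly performance against a baseline is simple arithmetic. This sketch assumes a hypothetical metric: the share of tracked AI responses that cite your page each week. It reports each week's relative change versus the pre-test baseline. The function names and weekly counts are illustrative.

```python
def citation_rate(cited, checked):
    """Share of tracked AI responses that cited the page."""
    return cited / checked if checked else 0.0

def weekly_lift(baseline_rate, weekly_counts):
    """Relative change of each week's citation rate versus the baseline rate.

    weekly_counts: list of (cited, checked) tuples, one per week.
    """
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return [citation_rate(c, n) / baseline_rate - 1 for c, n in weekly_counts]

# Hypothetical: a baseline of 10 citations per 100 tracked responses, then four weeks.
baseline = citation_rate(10, 100)
weeks = [(9, 100), (11, 100), (12, 100), (13, 100)]
for week, lift in enumerate(weekly_lift(baseline, weeks), start=1):
    print(f"week {week}: {lift:+.0%}")
```

Week-to-week swings of this size can be pure noise. Treat a trend as meaningful only once a significance test on the pooled counts supports it.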

Many organizations conducting format testing fall into predictable traps that compromise their results and lead to incorrect conclusions. Insufficient sample size is the most common error. Testing with too few citations or interactions produces results that look meaningful but actually reflect random variation. As a rule of thumb, gather at least 100 citations per variation before drawing conclusions. Use a sample size calculator to determine the exact number needed for your confidence level and effect size.
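Sample size calculators typically use some version of the standard formula for comparing two proportions, sketched below. The sketch assumes each tracked AI response is a trial that either cites your page or does not. The rates in the example are hypothetical. Treat the result as a rough planning figure. The 100-citation rule of thumb is a floor: small expected lifts over low baseline rates need far more observations.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(p_baseline, p_expected, alpha=0.05, power=0.8):
    """Tracked responses needed in EACH variation to detect p_baseline -> p_expected.

    Uses the normal-approximation formula for a two-sided two-proportion test.
    """
    if p_baseline == p_expected:
        raise ValueError("expected rate must differ from the baseline rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected))
    ) ** 2
    return math.ceil(numerator / (p_expected - p_baseline) ** 2)

print(sample_size_per_variation(0.10, 0.15))
print(sample_size_per_variation(0.10, 0.20))
```

For example, detecting a lift from a 10% to a 15% citation rate at a 0.05 significance level with 80% power needs about 686 tracked responses per variation. A lift from 10% to 20% needs about 199.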

Confounding variables introduce bias when multiple factors change simultaneously, making it impossible to determine which change caused observed differences. Keep all elements identical except the format being tested—maintain the same keywords, length, structure, and publication timing. Temporal bias occurs when testing during atypical periods (holidays, major news events, platform algorithm changes) that skew results. Run tests during normal periods and account for seasonal variations by testing for at least 2-4 weeks. Selection bias emerges when test groups differ in ways that affect results—ensure random assignment of content to variations. Misinterpreting correlation as causation leads to false conclusions when external factors coincidentally align with your test period. Always consider alternative explanations for observed changes and validate results through multiple testing cycles before implementing permanent changes.
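You can make random assignment reproducible with a seeded shuffle. This minimal sketch assumes you test at the page level and uses hypothetical page IDs. It sorts the IDs so the input order cannot influence the split, then shuffles them with a fixed seed. It deals the pages round-robin, so group sizes differ by at most one.

```python
import random

def random_split(page_ids, seed, variations=("A", "B")):
    """Randomly assign pages to variations with reproducible, near-equal group sizes."""
    ids = sorted(page_ids)       # canonical order, so input ordering cannot bias the split
    rng = random.Random(seed)    # fixed seed makes the assignment reproducible
    rng.shuffle(ids)
    groups = {v: [] for v in variations}
    for i, page_id in enumerate(ids):
        groups[variations[i % len(variations)]].append(page_id)
    return groups

# Hypothetical page IDs for a two-variation format test.
pages = [f"/guides/article-{n}" for n in range(1, 12)]
assignment = random_split(pages, seed=2025)
print({v: len(ids) for v, ids in assignment.items()})  # {'A': 6, 'B': 5}
```

Recording the seed alongside the results lets you, or a colleague, reconstruct exactly which pages were in each group when you validate the test in a later cycle.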

A technology company testing content formats for AI visibility discovered that converting their product comparison articles from paragraph format to structured comparison tables increased AI citations by 52% within 60 days. The tables provided clear, scannable information that AI systems could extract directly, while the original prose required more complex parsing. They maintained identical content length and keyword optimization, isolating the format change as the sole variable.

A financial services firm implemented FAQ schema on their existing content without rewriting anything, simply adding structured markup to existing question-and-answer sections. This resulted in a 34% increase in featured snippet appearances and a 28% increase in AI citations within 45 days. The schema markup didn't change the content itself but made it significantly easier for AI systems to identify and extract relevant answers.

A SaaS company conducted multivariate testing across three formats simultaneously (lists, tables, and traditional paragraphs) for identical content about their product features. Results showed lists outperformed paragraphs by 68%, while tables achieved the highest accuracy in AI parsing but lower overall citation volume. This revealed that format effectiveness varies by content type and AI platform, confirming that testing is essential rather than relying on general best practices.

These real-world examples demonstrate that format testing delivers measurable, significant improvements in AI visibility when executed properly.

The landscape of content format testing continues evolving as AI systems become more sophisticated and new optimization techniques emerge. Multi-armed bandit algorithms represent a significant advancement over traditional A/B testing, dynamically adjusting traffic allocation to different variations based on real-time performance rather than waiting for predetermined test periods to conclude. This approach reduces the time needed to identify winning variants and maximizes performance during the testing period itself.
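Thompson sampling is one common bandit algorithm; the text above does not name a specific one. This sketch keeps a Beta posterior for each format's citation rate and samples from every posterior each round. It allocates the round to the variant with the highest sample, so better-performing formats receive more allocation as evidence accumulates. It assumes each round yields a single cited or not-cited outcome. The format names and true rates are hypothetical and used only for simulation.

```python
import random

class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over content-format variants."""

    def __init__(self, arms, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior: a uniform belief about each variant's citation rate.
        self.alpha = {arm: 1 for arm in arms}  # 1 + citations observed
        self.beta = {arm: 1 for arm in arms}   # 1 + non-citations observed

    def choose(self):
        """Draw a plausible rate for every variant and pick the highest draw."""
        draws = {arm: self.rng.betavariate(self.alpha[arm], self.beta[arm]) for arm in self.alpha}
        return max(draws, key=draws.get)

    def update(self, arm, cited):
        """Record whether the chosen variant was cited in this round."""
        if cited:
            self.alpha[arm] += 1
        else:
            self.beta[arm] += 1

def simulate(true_rates, rounds, seed=0):
    """Run the sampler against hypothetical true rates; return how often each arm was chosen."""
    outcomes = random.Random(seed + 1)  # separate RNG for simulated citation outcomes
    bandit = ThompsonSampler(true_rates, seed=seed)
    pulls = {arm: 0 for arm in true_rates}
    for _ in range(rounds):
        arm = bandit.choose()
        pulls[arm] += 1
        bandit.update(arm, outcomes.random() < true_rates[arm])
    return pulls

print(simulate({"paragraphs": 0.05, "list": 0.08, "table": 0.15}, rounds=5000))
```

Over the simulated rounds, most allocations drift toward the variant with the highest true rate. The weaker variants still get occasional exploratory rounds. In a real deployment the true rates are unknown, and each round's outcome would come from your citation monitoring.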

Adaptive experimentation powered by reinforcement learning enables AI models to continuously learn and adapt from ongoing experiments, improving performance in real-time rather than through discrete testing cycles. AI-driven automation in A/B testing uses AI itself to automate experiment design, result analysis, and optimization recommendations, allowing organizations to test more variations simultaneously without proportional increases in complexity. These emerging approaches promise faster iteration cycles and more sophisticated optimization strategies. Organizations that master content format testing today will maintain competitive advantages as these advanced techniques become standard practice, positioning themselves to capitalize on emerging AI platforms and evolving citation algorithms before competitors adapt their strategies.

The best content format depends on your platform and audience, but structured formats like lists, tables, and Q&A sections consistently achieve higher AI citation rates. Lists receive 68% more citations than paragraphs, while tables achieve 96% parsing accuracy. The key is testing different formats with your specific content to identify what works best.

Most experts recommend running tests for at least 2-4 weeks to account for temporal variations and ensure reliable results. This duration allows you to gather sufficient data points (typically 100+ citations per variation) and account for seasonal variations or platform algorithm changes that might skew results.

You can conduct multivariate testing across multiple formats simultaneously, but it requires careful planning to keep the results interpretable. Start with simple A/B tests comparing two formats. Move to multivariate testing once you understand the basics and have adequate statistical resources.

You typically need at least 100 citations or interactions per variation to achieve statistical significance. Use statistical calculators to determine the exact sample size needed for your specific confidence level and effect size. Larger sample sizes provide more reliable results but require longer testing periods.

Start by identifying the most relevant schema type for your content (Article, FAQ, HowTo, etc.), then use JSON-LD format to implement it. Validate your markup using Google's Rich Results Test or Schema.org's validation tools before publishing. Invalid schema markup can actually harm your AI citation chances, so accuracy is critical.

Prioritize based on your audience and business goals. ChatGPT favors authoritative sources like Wikipedia, Google AI Overviews prefer community content like Reddit, and Perplexity emphasizes peer-to-peer information. Analyze which platforms drive the most relevant traffic to your site and optimize for those first.

Implement continuous testing as part of your content strategy. Start with quarterly format testing cycles, then increase frequency as you develop expertise and establish baseline metrics. Regular testing helps you stay ahead of AI platform algorithm changes and discover emerging format preferences.

Track citation rate improvements, featured snippet capture rates, knowledge panel appearances, and generative engine response rates. Establish baseline metrics before testing, then monitor performance weekly to identify trends. A successful test typically shows 20%+ improvement in your primary metric within 4-8 weeks.
