The Long-Term Vision for AI Visibility: Where We're Headed

Explore the future of AI visibility monitoring, from transparency standards to regulatory compliance. Learn how brands can prepare for the AI-driven information...

Learn essential AI transparency and disclosure best practices. Discover behavioral, verbal, and technical disclosure methods to build trust and ensure compliance with evolving AI regulations.

AI transparency has become a critical imperative in an era where artificial intelligence systems influence everything from content creation to hiring decisions and financial recommendations. As AI-generated content proliferates across digital platforms, organizations face mounting pressure to disclose when and how they use these technologies, not merely as a legal obligation but as a fundamental requirement for maintaining trust and credibility with their audiences. The stakes are particularly high for brands and content creators, as consumers increasingly demand to know whether the information they consume originates from human expertise or algorithmic generation. Without transparent disclosure practices, organizations risk eroding the trust that took years to build, potentially facing reputational damage, regulatory penalties, and loss of audience confidence. The relationship between transparency and credibility is symbiotic—organizations that proactively disclose their AI usage demonstrate integrity and respect for their stakeholders, positioning themselves as honest actors in an increasingly AI-driven landscape.

The proliferation of AI-generated content has created a profound trust paradox: audiences struggle to distinguish between authentic human-created work and sophisticated AI-generated alternatives, yet transparency about AI use remains inconsistent across industries. This confusion undermines the fundamental contract between creators and consumers, where audiences expect to know the true origin of the content they encounter. When AI-generated content is presented without disclosure, it violates this implicit agreement, creating a “real vs. fake” dichotomy that extends beyond simple authenticity concerns—it touches on questions of deception, manipulation, and informed consent. The paradox deepens because some AI-generated content may be indistinguishable from human work in quality and style, making visual or stylistic cues unreliable indicators of origin. Transparency matters precisely because it resolves this paradox by giving audiences the information they need to make informed decisions about the content they consume and trust. Organizations that embrace clear disclosure practices transform potential skepticism into confidence, demonstrating that they have nothing to hide and everything to gain from honest communication.

The regulatory environment surrounding AI disclosure is rapidly evolving, with multiple jurisdictions implementing or proposing requirements that organizations must navigate carefully. The EU AI Act, one of the most comprehensive frameworks, mandates that AI-generated or heavily AI-edited content must include visible or metadata-level disclosure, with specific requirements for high-risk AI systems and transparency obligations for developers. In the United States, the FTC has issued warnings that failing to update Terms of Use and Privacy Policies for AI use could constitute deceptive practices, while state-level regulations—particularly California’s recent AI frontier model disclosure law—impose heightened transparency and reporting obligations on developers of large AI systems. Copyright and intellectual property considerations add another layer of complexity, as organizations must disclose whether training data included copyrighted material and how AI systems were trained. Additionally, GDPR and CCPA requirements extend to AI systems that process personal data, requiring organizations to disclose automated decision-making and provide individuals with meaningful information about AI processing. The following table summarizes key regulatory requirements across major jurisdictions:

| Jurisdiction | Regulation | Key Requirements | Effective Date |
|---|---|---|---|
| European Union | EU AI Act | Disclosure for high-risk AI; transparency reports; metadata labeling for AI-generated content | Phased (2024-2026) |
| United States (Federal) | FTC Guidelines | Update privacy policies; disclose AI use; avoid deceptive practices | Ongoing |
| California | AI Frontier Model Law | Standardized disclosure; reporting obligations; safety measures | 2025 |
| European Union | GDPR | Disclose automated decision-making; provide information about AI processing | Ongoing |
| United States (Multi-state) | CCPA & Similar | Disclose data use in AI systems; provide opt-out mechanisms | Varies by state |
| International | Copyright Considerations | Disclose training data sources; address copyright in AI outputs | Emerging |

Organizations operating across multiple jurisdictions should build their disclosure practices around the most stringent applicable requirements, since meeting the strictest standard usually satisfies the minimums set elsewhere.
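One way to reason about a "most stringent" baseline is to take the union of obligations across every jurisdiction where content is published. The sketch below is illustrative only: the requirement labels are shorthand paraphrased from the table above, not legal text, and `REQUIREMENTS` and `combined_obligations` are hypothetical names.

```python
# Illustrative only: labels paraphrase the summary table and are not legal text.
REQUIREMENTS = {
    "EU": {
        "label_ai_generated_content",
        "disclose_automated_decisions",
        "transparency_reports",
    },
    "US-Federal": {"update_privacy_policy", "disclose_ai_use"},
    "California": {
        "standardized_disclosure",
        "reporting_obligations",
        "disclose_ai_use",
    },
}


def combined_obligations(jurisdictions):
    """Return the sorted union of obligations for the given jurisdictions."""
    unknown = [j for j in jurisdictions if j not in REQUIREMENTS]
    if unknown:
        # Fail loudly rather than silently ignoring a market with no recorded rules.
        raise KeyError(f"No requirements recorded for: {unknown}")
    combined = set()
    for jurisdiction in jurisdictions:
        combined |= REQUIREMENTS[jurisdiction]
    return sorted(combined)


if __name__ == "__main__":
    for obligation in combined_obligations(["EU", "California"]):
        print(obligation)
```

A real compliance checklist would need legal review for each jurisdiction; the point here is only that the baseline grows as markets are added and never shrinks.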

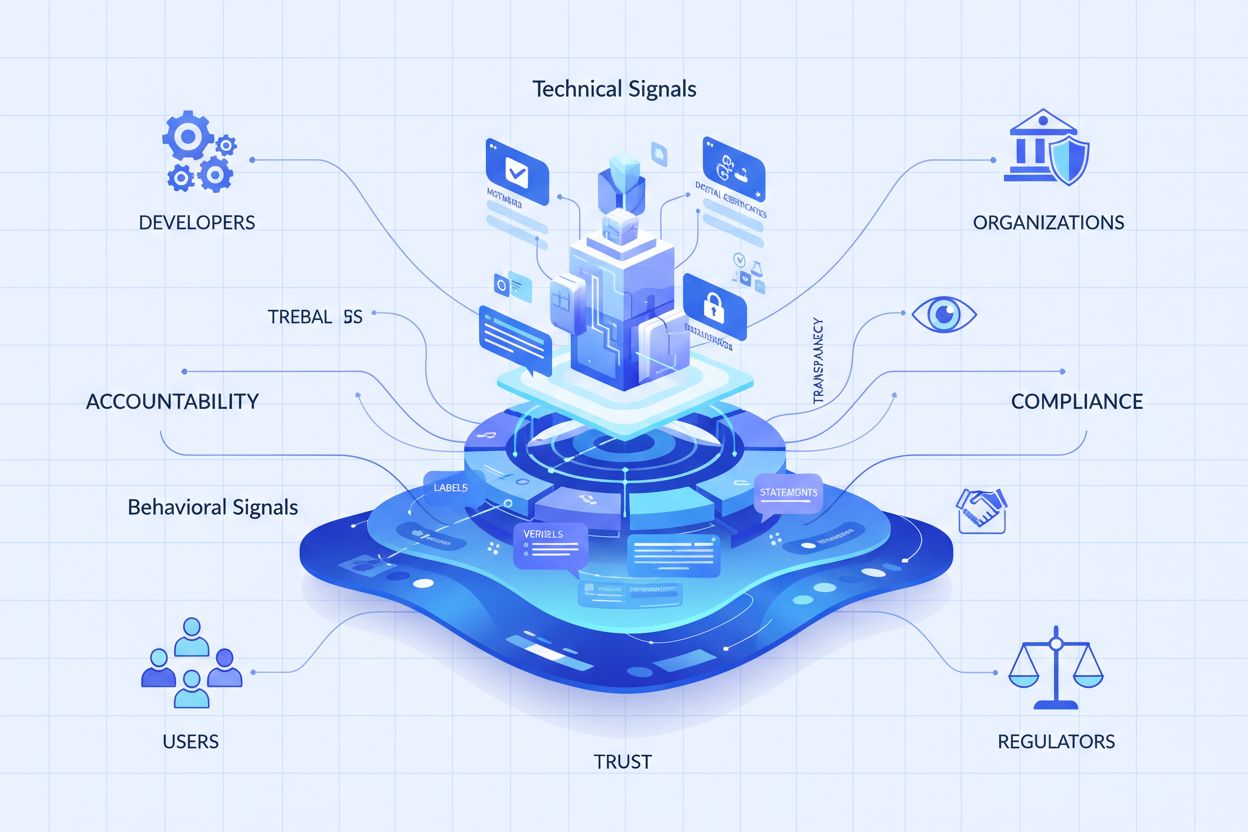

Behavioral signals represent the most subtle yet powerful form of AI disclosure, operating through the presentation, style, voice, and creative choices that audiences unconsciously process when evaluating content authenticity. These signals include distinctive patterns in writing style, consistency of voice, visual composition choices, avatar or persona characteristics, and the overall “fidelity” of creator representation—essentially, the degree to which the content reflects genuine human personality and decision-making. For example, AI-generated text often exhibits particular patterns in sentence structure, vocabulary choices, and logical flow that differ from human writing, while AI-generated images may show subtle inconsistencies in lighting, anatomy, or background details that trained observers can detect. The concept of creator fidelity is central here: audiences develop expectations about how a particular creator should sound, look, and present themselves, and deviations from this established pattern can signal AI involvement. However, relying solely on behavioral signals is problematic because AI systems are rapidly improving at mimicking human characteristics, and audiences cannot be expected to become AI forensics experts. Therefore, behavioral signals should complement rather than replace explicit disclosure methods, serving as a secondary layer of transparency that reinforces rather than substitutes for clear, direct communication about AI use.

Verbal disclosure strategies communicate AI use explicitly, through language-based signals that leave no ambiguity about content origin or AI involvement. These methods are essential because they create a clear, documented record of disclosure. Organizations can implement verbal disclosure through several complementary approaches: labels, watermarks, bylines, disclosure fields, and acknowledgments.

The appropriateness of each method depends on context: labels work well for social media and visual content, watermarks suit video and audio, bylines fit journalistic and creative work, disclosure fields serve longer-form content, and acknowledgments provide comprehensive context for complex projects. Organizations should select disclosure methods that match their content format and audience expectations, ensuring that disclosure is impossible to miss while remaining non-intrusive to the user experience.
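The pairings in the paragraph above can be written down as a simple lookup, which is one way a publishing tool might suggest a default. This is a minimal sketch: the format keys, the `recommend_disclosure` function, and the fallback to a label for unrecognized formats are all assumptions made for illustration.

```python
# Hypothetical keys; the pairings follow the paragraph above.
DISCLOSURE_METHOD = {
    "social_media": "label",
    "visual": "label",
    "video": "watermark",
    "audio": "watermark",
    "journalism": "byline",
    "creative": "byline",
    "long_form": "disclosure_field",
    "complex_project": "acknowledgment",
}


def recommend_disclosure(content_format: str) -> str:
    """Suggest a disclosure method for a content format.

    Unknown formats fall back to a plain label (an assumption of this
    sketch) so that nothing is published without some disclosure.
    """
    return DISCLOSURE_METHOD.get(content_format, "label")


if __name__ == "__main__":
    for fmt in ("video", "journalism", "newsletter"):
        print(fmt, "->", recommend_disclosure(fmt))
```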

Technical signals and metadata provide machine-readable, standardized information about AI involvement, enabling automated detection, verification, and tracking of AI-generated content across digital ecosystems. These approaches use embedded data and cryptographic techniques to create durable, tamper-evident records of content origin and processing history. The IPTC standards, recently updated to include AI-specific metadata properties, allow creators to embed structured information about AI use directly into image files, including which AI systems were used, what processing was applied, and what human modifications were made. C2PA (Coalition for Content Provenance and Authenticity) is an emerging industry standard that uses cryptographic signatures and content credentials to establish a verifiable chain of custody for digital content, so audiences can trace content back to its source and see what modifications were applied. These technical approaches address a critical limitation of human-readable disclosure: signed records make later alterations detectable, and they let automated systems identify and flag AI-generated content at scale. Organizations implementing technical disclosure should consider adopting C2PA credentials for high-value content, embedding IPTC metadata in images and media files, and maintaining digital source type documentation that tracks AI involvement throughout the content lifecycle. The advantage of technical signals is their verifiability; the challenge is that metadata can be stripped when files are re-encoded or re-uploaded, so these signals only work when platforms preserve and surface them.
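To make the idea of machine-readable disclosure concrete, the sketch below builds a small sidecar record that pairs an IPTC Digital Source Type URI with a content hash. It is not a C2PA implementation and does not write metadata into a file: a bare hash only shows that bytes changed and proves nothing about who created the record, whereas C2PA relies on cryptographic signatures. The field names `aiTool` and `humanModifications`, the tool name, and both function names are hypothetical.

```python
import hashlib
import json

# IPTC Digital Source Type controlled vocabulary (NewsCodes) URIs.
IPTC_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"
SOURCE_TYPES = {
    "ai_generated": IPTC_BASE + "trainedAlgorithmicMedia",
    "composite_synthetic": IPTC_BASE + "compositeSynthetic",
    "algorithmic": IPTC_BASE + "algorithmicMedia",
}


def build_disclosure_record(content: bytes, kind: str, tool: str, human_edits: str) -> dict:
    """Describe AI involvement for one asset as a JSON-serializable dict."""
    return {
        "digitalSourceType": SOURCE_TYPES[kind],
        "aiTool": tool,                     # hypothetical field name
        "humanModifications": human_edits,  # hypothetical field name
        "sha256": hashlib.sha256(content).hexdigest(),
    }


def content_matches(content: bytes, record: dict) -> bool:
    """Check whether the asset bytes still match the hash stored in the record."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


if __name__ == "__main__":
    image_bytes = b"placeholder image bytes"
    record = build_disclosure_record(
        image_bytes, "ai_generated", "ExampleImageModel", "cropped and color-corrected"
    )
    print(json.dumps(record, indent=2))
    print(content_matches(image_bytes, record))
```

In practice the same source type value would be embedded in the file's own metadata or carried in a signed manifest rather than kept in a separate record.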

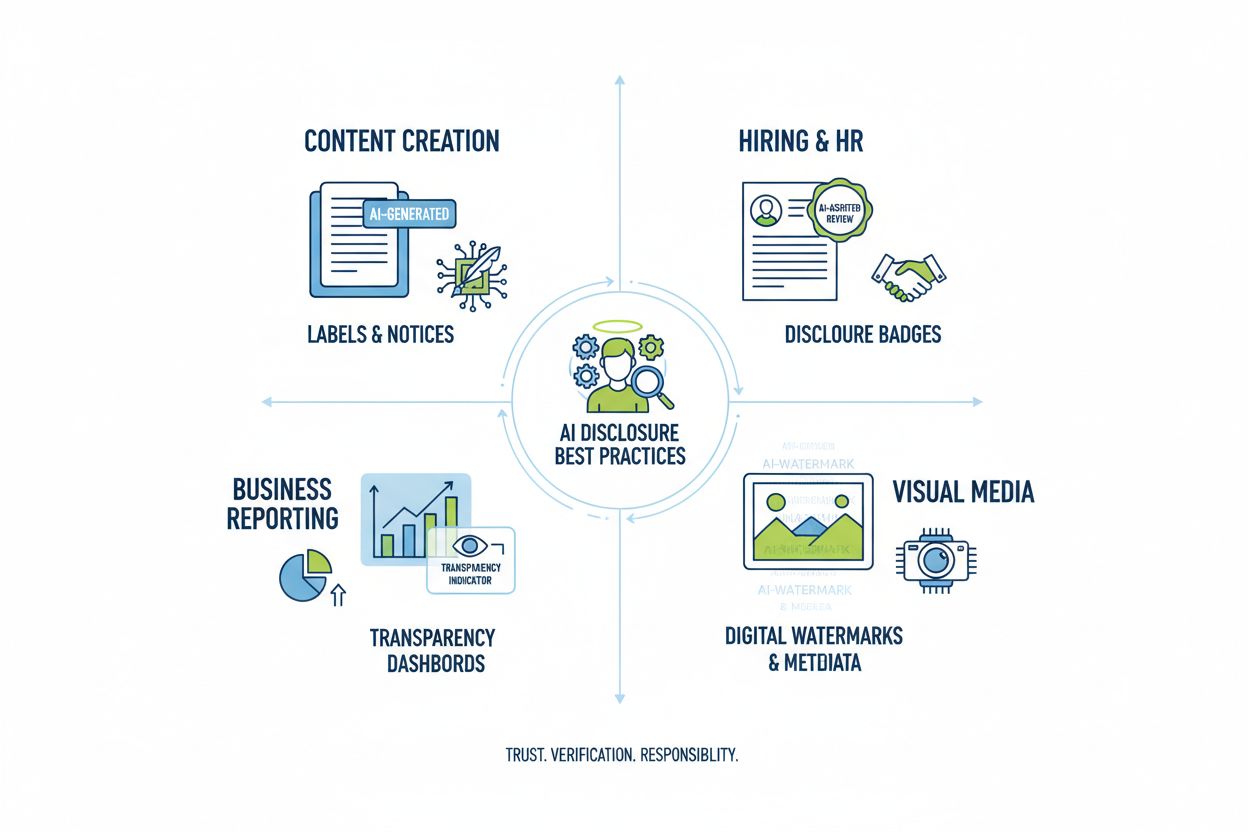

Successful AI disclosure requires institutionalizing transparency practices throughout content creation workflows, transforming disclosure from an afterthought into a fundamental component of content governance. Organizations should implement structured content frameworks that capture AI involvement at each stage of creation—from initial research and drafting through editing and publication—ensuring that disclosure information flows naturally through existing content management systems. Automation and workflow integration are essential: content management systems should include mandatory fields for AI disclosure, automated prompts that require creators to document AI use before publishing, and approval workflows that verify disclosure completeness before content goes live. This approach treats disclosure as a quality assurance requirement rather than an optional add-on, similar to how organizations manage fact-checking, legal review, or brand compliance. Organizations should also establish clear policies defining what constitutes AI use requiring disclosure (e.g., does using AI for research require disclosure? What about AI-assisted editing?), ensuring consistency across teams and preventing disclosure gaps. Training and documentation are equally important: content creators need clear guidance on how to disclose AI use appropriately for different content types and platforms. By embedding disclosure into content models and workflows, organizations transform transparency from a compliance burden into a standard operating procedure that protects both the organization and its audiences.
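The mandatory-field and approval-check idea described above might look something like the following pre-publish gate. This is a sketch under stated assumptions: the `Draft` fields and the specific rules are hypothetical, and a real content management system would enforce them through its own schema and workflow hooks.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Draft:
    title: str
    ai_used: Optional[bool] = None  # None means the creator has not answered yet
    ai_tools: List[str] = field(default_factory=list)
    human_review: str = ""          # who reviewed the AI output and how
    disclosure_text: str = ""       # wording shown to the audience


def disclosure_problems(draft: Draft) -> List[str]:
    """Return the reasons a draft is not ready to publish (empty if none)."""
    problems = []
    if draft.ai_used is None:
        problems.append("ai_used must be answered before publishing")
    elif draft.ai_used:
        if not draft.ai_tools:
            problems.append("list the AI tools that were used")
        if not draft.human_review.strip():
            problems.append("describe the human review that was applied")
        if not draft.disclosure_text.strip():
            problems.append("add audience-facing disclosure text")
    return problems


def can_publish(draft: Draft) -> bool:
    return not disclosure_problems(draft)


if __name__ == "__main__":
    draft = Draft(title="Quarterly outlook", ai_used=True, ai_tools=["ExampleWriter"])
    for problem in disclosure_problems(draft):
        print("blocked:", problem)
```

Treating an unanswered `ai_used` field as a blocker, rather than defaulting it to "no", is the design choice that keeps disclosure from being skipped silently.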

Different applications of AI require tailored disclosure approaches that account for context, audience expectations, and regulatory requirements. Content creation (articles, social media, marketing copy) should include clear labels or byline modifications indicating AI involvement, with additional disclosure fields explaining which AI tools were used and what human oversight was applied; this is particularly important for news and editorial content where audience trust depends on understanding content origin. Hiring and recruitment contexts demand the most rigorous disclosure, as candidates have a right to know when AI systems are evaluating their applications or conducting initial screening, with detailed explanations of how AI decisions are made and what human review processes follow. Reporting and analysis (financial reports, market research, data analysis) should include technical disclosures and metadata indicating which analyses were AI-assisted, particularly when AI systems identified patterns or generated insights that influenced conclusions. Visual content (images, videos, graphics) requires both visual watermarks and metadata disclosure, as audiences cannot reliably distinguish AI-generated visuals from authentic ones; this is especially critical for news photography, product images, and any content where authenticity affects purchasing or trust decisions. Customer-facing communications (chatbots, automated responses, customer service) should clearly identify AI involvement at the start of interactions, allowing customers to request human assistance if desired. Organizations should audit their AI use across these contexts and implement disclosure practices proportionate to the sensitivity and impact of each use case, recognizing that transparency requirements vary based on how AI decisions affect audiences.
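For customer-facing communications, identifying the AI at the start of an interaction and offering a human handoff can be sketched as a thin wrapper around whatever produces the replies. Everything here is hypothetical: the notice wording, the `human` keyword, and the `ChatSession` class stand in for whatever a real chatbot framework provides.

```python
from typing import Callable

AI_NOTICE = (
    "You are chatting with an AI assistant. "
    "Type 'human' at any time to reach a person."
)


class ChatSession:
    """Wraps a reply function so the first AI reply always carries a disclosure."""

    def __init__(self, reply_fn: Callable[[str], str]):
        self.reply_fn = reply_fn
        self.disclosed = False
        self.handed_off = False

    def respond(self, message: str) -> str:
        if message.strip().lower() == "human":
            self.handed_off = True
            return "Connecting you with a human agent."
        if self.handed_off:
            # Once a person has been requested, the AI stops answering.
            return "A human agent will reply shortly."
        reply = self.reply_fn(message)
        if not self.disclosed:
            self.disclosed = True
            return f"{AI_NOTICE}\n\n{reply}"
        return reply


if __name__ == "__main__":
    session = ChatSession(lambda text: f"Here is some help with: {text}")
    print(session.respond("resetting my password"))
    print(session.respond("human"))
```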

The future of AI disclosure depends on industry-wide standardization and the development of interoperable frameworks that enable consistent, comparable transparency across organizations and platforms. Emerging standards like C2PA, IPTC metadata specifications, and NIST’s AI Risk Management Framework provide foundational structures, but widespread adoption requires coordinated effort from technology platforms, content creators, regulators, and industry bodies. The EU AI Act and similar regulatory frameworks are driving standardization by requiring specific disclosure formats and information categories, creating pressure for industry-wide adoption of common standards rather than idiosyncratic approaches. Industry initiatives such as the PRSA’s ethical AI guidelines and various transparency reporting frameworks demonstrate growing consensus around disclosure best practices, though voluntary adoption remains inconsistent. The critical next step involves moving from voluntary standards to regulatory requirements that mandate standardized disclosure, similar to how FDA nutrition labels standardized food transparency. Monitoring and visibility platforms—like AmICited.com—play an increasingly important role in this ecosystem by tracking how AI references brands and content across AI-generated answers, providing organizations with visibility into how their work appears in AI outputs and whether proper attribution and disclosure are occurring. As AI systems become more sophisticated and ubiquitous, the ability to monitor, verify, and audit AI disclosure practices becomes essential for maintaining trust in digital information ecosystems. Organizations that invest in robust disclosure practices and monitoring capabilities today will be best positioned to navigate the evolving regulatory landscape and maintain audience trust in an AI-driven future.

Behavioral disclosure uses presentation choices (style, voice, avatar) to signal AI involvement. Verbal disclosure uses explicit statements, labels, and watermarks. Technical disclosure embeds machine-readable metadata and cryptographic signatures. Each method serves different audiences and contexts, and organizations should use multiple methods together for comprehensive transparency.

Legal requirements vary by jurisdiction. The EU AI Act mandates disclosure for high-risk AI systems and labeling of AI-generated content. The FTC has warned that undisclosed AI use that could mislead consumers may constitute a deceptive practice. State laws like California's AI Frontier Model Law impose reporting obligations. Copyright rules can also require disclosure, for example when registering a work that contains AI-generated material with the U.S. Copyright Office. Organizations should consult legal counsel for jurisdiction-specific requirements.

AI-generated images should include both visual watermarks and metadata disclosure. Visual watermarks should be visible but non-intrusive, clearly indicating AI generation. Metadata should include IPTC digital source type information and C2PA credentials when available. Captions and disclosure fields should explicitly state that images are AI-generated, especially for news, product, or promotional content.

IPTC standards provide structured metadata fields for documenting AI use in images and media files. They enable automated detection and tracking of AI-generated content across platforms. IPTC digital source type categories include 'Trained Algorithmic Media,' 'Composite Synthetic,' and 'Algorithmic Media.' These standards matter because they give platforms a consistent, machine-readable record of AI involvement, although that record survives only where tools and platforms preserve the metadata.

Companies should conduct an AI audit to identify all AI use cases, implement disclosure policies aligned with applicable regulations, integrate disclosure into content management workflows, train teams on disclosure requirements, and monitor compliance regularly. Consulting with legal experts familiar with AI regulations in your jurisdiction is essential, as requirements vary significantly across regions.

Consequences include regulatory penalties (FTC fines, state law violations), reputational damage and loss of audience trust, legal liability for copyright infringement or deceptive practices, and potential lawsuits from affected parties. Organizations that fail to disclose AI use risk eroding the credibility that took years to build, making proactive disclosure a strategic investment in long-term trust.

Different stakeholders need different information levels. General audiences need simple, clear statements about AI involvement. Regulators need detailed technical documentation and compliance evidence. Journalists and content creators need specific information about AI tools and human oversight. Customers need to understand how AI affects their experience. Tailor disclosure format and detail to each audience's needs and expectations.

C2PA (Coalition for Content Provenance and Authenticity) provides cryptographic credentials for content verification. IPTC standards enable metadata embedding in images. Content management systems increasingly include AI disclosure fields. Monitoring platforms like AmICited.com track AI references and visibility. Industry frameworks from PRSA, NIST, and others provide guidance. Adoption of these tools and standards is accelerating as regulations tighten.
