Trust Signal

Trust signals are credibility indicators that establish brand reliability for users and AI systems. Learn how verified badges, testimonials, and security elemen...

Learn how AI systems evaluate trust signals through the E-E-A-T framework. Discover the credibility factors that help LLMs cite your content and build authority.

Trust signals are the indicators that AI systems use to evaluate the credibility and reliability of content when generating responses. As AI becomes more deeply integrated into search and information retrieval, understanding how these systems assess trustworthiness has become essential for content creators and brands. Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, and Trustworthiness) provides a structured way to think about how content quality is evaluated. Of these four pillars, trustworthiness is the most critical, because even a highly knowledgeable source is unreliable if it lacks transparency and verifiable credentials. AI-powered answer engines such as ChatGPT, Perplexity, and Google AI Overviews analyze several categories of signals, including content quality, technical infrastructure, behavioral patterns, and contextual alignment, to decide which sources deserve prominence in their responses.

| Pillar | Definition | What AI systems look for | Example |
|---|---|---|---|
| Experience | Practical, hands-on knowledge gained through direct involvement | Content demonstrating real-world application and personal involvement in the subject matter | A software developer writing about debugging techniques they’ve personally used in production environments |
| Expertise | Deep, specialized knowledge and skill in a particular domain | Technical accuracy, use of domain-specific terminology, and demonstrated mastery of subject matter | A cardiologist explaining heart disease risk factors with precise medical terminology and current research citations |
| Authoritativeness | Recognition and respect within an industry or field | Citations from other authoritative sources, media mentions, speaking engagements, and industry leadership positions | A published researcher whose work is frequently cited by peers and featured in major industry publications |
| Trustworthiness | Reliability, transparency, and honesty in communication | Clear author attribution, disclosure of conflicts of interest, verifiable credentials, and consistent accuracy over time | A financial advisor who clearly discloses affiliate relationships, maintains updated credentials, and provides balanced perspectives |

Each pillar works with the others to form a trust profile that AI systems evaluate. Experience shows that an author has lived through the subject matter, which makes their insights more valuable than purely theoretical knowledge. Expertise signals that the author has the specialized knowledge needed to provide accurate, nuanced information. Authoritativeness indicates that the broader industry recognizes and respects the author’s contributions. Trustworthiness, however, is the foundation: without it, the other three pillars lose their credibility. This is why trustworthiness is weighted so heavily; a trustworthy source with moderate expertise is more valuable than an untrustworthy source claiming exceptional expertise.
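
The claim that trustworthiness is the foundation can be made concrete with a toy scoring model. This is a hypothetical illustration, not how any AI system actually weights E-E-A-T: the function name, the 0 to 1 scale, and the rule that trust multiplies the average of the other three pillars are all assumptions chosen to mirror the reasoning in the paragraph above.

```python
def trust_profile_score(experience: float, expertise: float,
                        authority: float, trust: float) -> float:
    """Hypothetical toy model: combine E-E-A-T pillar scores (each 0.0 to 1.0).

    Trust multiplies the average of the other three pillars, so a source with
    zero trust scores zero no matter how strong its expertise is.
    """
    for value in (experience, expertise, authority, trust):
        if not 0.0 <= value <= 1.0:
            raise ValueError("pillar scores must be between 0.0 and 1.0")
    other_pillars = (experience + expertise + authority) / 3
    return trust * other_pillars


# Moderate expertise from a trusted source vs. strong expertise from an untrusted one.
moderate_but_trusted = trust_profile_score(0.6, 0.6, 0.6, trust=0.9)
expert_but_untrusted = trust_profile_score(0.9, 1.0, 0.9, trust=0.2)
print(f"{moderate_but_trusted:.2f} vs {expert_but_untrusted:.2f}")  # 0.54 vs 0.19
```

A multiplicative gate is one simple way to express "without trust, nothing else counts." An additive weighted sum would let high expertise compensate for low trust, which is exactly what the paragraph argues against.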

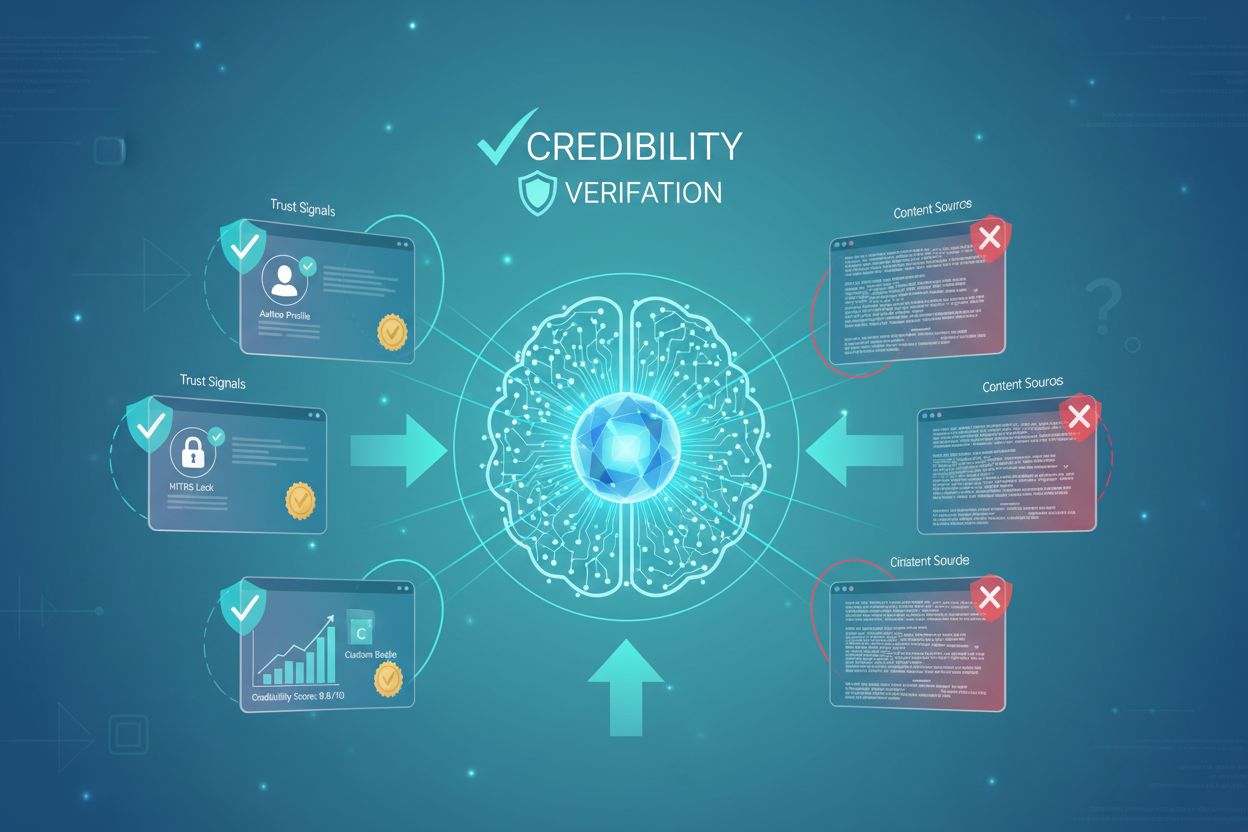

AI systems use a multi-stage process to evaluate trust signals in content. When a user submits a query, the system begins with query understanding, analyzing intent and context to determine what type of information is needed. It then performs content retrieval, pulling relevant passages from sources indexed across the web. During passage ranking, the system assesses the credibility of each source using trust signals and moves higher-quality, more trustworthy sources to the top of the candidate pool. Source verification checks for author credentials, publication dates, domain authority, and consistency with other authoritative sources on the same topic. Citation selection then chooses which sources to attribute in the final response based on their trustworthiness scores. Finally, safety filters examine the selected content to ensure it meets accuracy standards and does not propagate misinformation. All of this happens in real time, while the user waits for an answer.
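
The six stages described above can be sketched as a chain of small functions. Everything here is a hypothetical toy: the `Passage` fields, the keyword matching, the relevance-times-trust ranking, the verification rule, the 0.5 threshold, and the blocked-phrase list are assumptions for illustration, and production systems use learned models at every step.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    url: str
    text: str
    relevance: float  # 0 to 1: how well the passage matches the query (assumed given)
    trust: float      # 0 to 1: hypothetical trust score for the source
    has_author: bool
    has_date: bool


def tokenize(text: str) -> set[str]:
    return {word.strip("?.,!:;").lower() for word in text.split()}


def understand_query(query: str) -> set[str]:
    # Stage 1: reduce the query to keywords (real systems model intent and context).
    return {word for word in tokenize(query) if len(word) > 3}


def retrieve(keywords: set[str], index: list[Passage]) -> list[Passage]:
    # Stage 2: keep passages sharing at least one keyword with the query.
    return [p for p in index if keywords & tokenize(p.text)]


def rank(passages: list[Passage]) -> list[Passage]:
    # Stage 3: move relevant, trustworthy passages to the top.
    return sorted(passages, key=lambda p: p.relevance * p.trust, reverse=True)


def verify(passages: list[Passage]) -> list[Passage]:
    # Stage 4: drop passages without author attribution or a publication date.
    return [p for p in passages if p.has_author and p.has_date]


def select_citations(passages: list[Passage], limit: int = 3,
                     min_trust: float = 0.5) -> list[Passage]:
    # Stage 5: cite the top-ranked passages whose trust clears a threshold.
    return [p for p in passages if p.trust >= min_trust][:limit]


def safety_filter(passages: list[Passage],
                  blocked=("miracle cure",)) -> list[Passage]:
    # Stage 6: remove passages containing phrases flagged as misinformation.
    return [p for p in passages
            if not any(phrase in p.text.lower() for phrase in blocked)]


def cited_urls(query: str, index: list[Passage]) -> list[str]:
    candidates = rank(retrieve(understand_query(query), index))
    return [p.url for p in safety_filter(select_citations(verify(candidates)))]


index = [
    Passage("https://clinic.example/heart", "Heart disease risk factors include high blood pressure.", 0.9, 0.9, True, True),
    Passage("https://blog.example/heart", "Heart disease risk factors? Try this miracle cure.", 0.95, 0.6, True, True),
    Passage("https://anon.example/heart", "Heart disease risk factors are mostly genetic.", 0.8, 0.7, False, True),
]
print(cited_urls("What are the risk factors for heart disease?", index))
# ['https://clinic.example/heart']
```

In this run the blog passage ranks second but is removed at the safety stage, while the anonymous passage is dropped at verification despite a reasonable ranking score.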

Author credibility is one of the most powerful trust signals that AI systems evaluate when determining source reliability. When content includes clear author attribution with verifiable credentials, AI systems can cross-reference that information against known databases of experts, professionals, and recognized authorities. A named author also creates accountability: individuals who attach their names to content are more likely to ensure accuracy and maintain professional standards. Beyond the name itself, AI systems look for supporting credibility markers such as a detailed author bio, verifiable credentials, and a consistent publishing record on the topic.

When AI systems encounter content with comprehensive author attribution, they assign higher trust scores because they can verify the author’s background and track record. This verification process helps AI systems distinguish between genuine experts and opportunistic content creators who lack real credentials. Organizations that invest in building strong author profiles—complete with bios, credentials, and consistent publishing records—signal to AI systems that they take content quality seriously and stand behind their claims.
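
One practical way to act on this is to audit your own author data for gaps and inconsistencies. The sketch below assumes a hypothetical export format in which each article is a dict with a `url` and an `author` record holding `id`, `name`, `bio`, and `credentials`; adapt the field names to whatever your CMS actually exports.

```python
from collections import defaultdict

REQUIRED_FIELDS = ("name", "bio", "credentials")  # hypothetical profile fields


def audit_author_profiles(articles):
    """Return (articles with incomplete author data, author ids with conflicting names)."""
    incomplete = []
    names_by_author = defaultdict(set)
    for article in articles:
        author = article.get("author") or {}
        missing = [field for field in REQUIRED_FIELDS if not author.get(field)]
        if missing:
            incomplete.append((article["url"], missing))
        if author.get("id") and author.get("name"):
            names_by_author[author["id"]].add(author["name"].strip())
    conflicting = {author_id: names for author_id, names in names_by_author.items()
                   if len(names) > 1}
    return incomplete, conflicting


# Hypothetical sample data.
articles = [
    {"url": "/debugging", "author": {"id": "a1", "name": "Dana Lee",
                                     "bio": "Backend engineer",
                                     "credentials": "MSc Computer Science"}},
    {"url": "/logging", "author": {"id": "a1", "name": "D. Lee", "bio": "",
                                   "credentials": "MSc Computer Science"}},
    {"url": "/news", "author": None},
]
incomplete, conflicting = audit_author_profiles(articles)
print(incomplete)   # [('/logging', ['bio']), ('/news', ['name', 'bio', 'credentials'])]
print(conflicting)  # {'a1': {'Dana Lee', 'D. Lee'}} (set order may vary)
```

The second check matters because the same person published under slightly different names is harder to match to a single, verifiable profile.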

AI systems evaluate content quality through multiple factuality indicators that reveal whether information is reliable and accurate. Specific, quantifiable claims signal higher trustworthiness than vague generalizations: when content includes precise statistics, dates, and measurements, AI systems recognize that the author has invested effort in research and verification. Grounded information that references specific studies, reports, or documented events carries more weight than abstract assertions, because AI systems can cross-reference these claims against known reliable sources. Concrete examples that illustrate concepts with real-world scenarios demonstrate deeper understanding and give readers actionable insights, which AI systems treat as a hallmark of quality content. The absence of factual errors is particularly important; AI systems can compare content against their training data and other sources to identify claims that contradict well-established facts or rely on outdated information. Finally, balanced presentation of multiple perspectives on complex topics signals trustworthiness, since oversimplified or one-sided arguments often mask incomplete understanding or hidden agendas.
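
The specificity signal can be approximated with a crude heuristic you can run over your own drafts. This is an assumption-laden sketch, not a description of how any AI system scores text: it treats a sentence as specific if it contains a digit (a statistic, date, or measurement) and flags non-specific sentences that use a small, hypothetical list of vague quantifiers. The sample draft is made up.

```python
import re

VAGUE_WORDS = {"many", "some", "often", "various", "numerous", "significant"}  # hypothetical list


def specificity_report(text: str) -> dict:
    # Naive sentence split on ., ! or ? followed by whitespace (abbreviations will confuse it).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    specific = [s for s in sentences if re.search(r"\d", s)]
    vague = [s for s in sentences
             if not re.search(r"\d", s)
             and VAGUE_WORDS & set(re.findall(r"[a-z]+", s.lower()))]
    return {"sentences": len(sentences), "specific": len(specific), "vague": vague}


draft = ("Many teams see significant gains. "
         "Median page load fell from 3.2 s to 1.4 s after the migration. "
         "The setup is straightforward.")
print(specificity_report(draft))
# {'sentences': 3, 'specific': 1, 'vague': ['Many teams see significant gains.']}
```

A flagged sentence is a prompt to add a figure, date, or source, not proof that the sentence is wrong.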

The technical infrastructure underlying your content sends important trust signals to AI systems that evaluate your site’s reliability and legitimacy. HTTPS encryption is now a baseline expectation; sites that use secure protocols show that they take user data protection seriously and are more likely to maintain high standards across all operations. Site performance metrics, including fast load times and mobile responsiveness, indicate that you’ve invested in quality infrastructure, which correlates with overall content quality and professionalism. Crawlability and indexability ensure that AI systems can efficiently access and analyze your content; sites with proper robots.txt files, XML sitemaps, and clean URL structures signal that you understand how search engines and AI systems discover content. Schema markup and structured data (for example, JSON-LD describing authors, publication dates, and article metadata) give AI systems machine-readable statements of key facts about each page that are easy to parse and cross-check. Together, these technical elements create an environment where AI systems can confidently assess and cite your content.
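
Crawlability is one of the few signals here you can check directly. The sketch below uses Python’s standard `urllib.robotparser` to report whether a robots.txt file lets several AI-related crawlers fetch a given URL. The user-agent tokens are ones their operators have published (OpenAI’s GPTBot and OAI-SearchBot, PerplexityBot, Anthropic’s ClaudeBot, and Googlebot for Google Search); verify them against current documentation, since the list changes. The sample robots.txt is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Published user-agent tokens at the time of writing; check each operator's docs.
AI_CRAWLERS = ("GPTBot", "OAI-SearchBot", "PerplexityBot", "ClaudeBot", "Googlebot")

# Hypothetical robots.txt: blocks GPTBot entirely, blocks /admin/ for everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Map each crawler token to whether robots.txt allows it to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_CRAWLERS}


for agent, allowed in crawler_access(ROBOTS_TXT, "https://example.com/blog/post").items():
    print(f"{agent:15} {'allowed' if allowed else 'blocked'}")
```

To check a live site, fetch its robots.txt first and pass the text in. Python’s parser applies the first matching rule in a group rather than the longest-match rule that Google documents, so results can differ for files that mix `Allow` and `Disallow` lines.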

Transparency is a cornerstone of trustworthiness that AI systems actively evaluate when assessing source credibility. About and Contact pages that provide clear information about your organization, mission, and how to reach you demonstrate that you’re willing to be held accountable for your content. Affiliate disclaimers and conflict-of-interest disclosures are particularly important; AI systems recognize that sources that openly acknowledge potential biases are more trustworthy than those that hide financial relationships. Privacy policies that explain how you handle user data signal respect for privacy and compliance with regulations, which AI systems associate with overall trustworthiness. Publication dates and update timestamps allow AI systems to assess content freshness and understand when information was originally created versus when it was last revised—this is crucial for topics where information changes frequently. Corrections documentation that shows you’ve identified and fixed errors demonstrates intellectual honesty and commitment to accuracy. Organizations that maintain transparent practices across all these dimensions signal to AI systems that they prioritize accuracy and user trust over short-term gains, which results in higher credibility scores.
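
A quick way to audit these transparency elements is to check whether your homepage actually links to them. This sketch uses the standard library’s `html.parser`; the page keys and the URL or link-text hints in `TRANSPARENCY_PAGES` are hypothetical and should be matched to your own site’s naming.

```python
from html.parser import HTMLParser

# Hypothetical hints: a page counts as linked if any hint appears in a link's href or text.
TRANSPARENCY_PAGES = {
    "about": ("about",),
    "contact": ("contact",),
    "privacy": ("privacy",),
    "corrections": ("correction",),
}


class LinkCollector(HTMLParser):
    """Collect (href, anchor text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def missing_transparency_pages(html: str) -> list[str]:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    haystack = " ".join(f"{href} {text}".lower() for href, text in collector.links)
    return [page for page, hints in TRANSPARENCY_PAGES.items()
            if not any(hint in haystack for hint in hints)]


homepage = '<footer><a href="/about-us">About</a> | <a href="/privacy-policy">Privacy</a></footer>'
print(missing_transparency_pages(homepage))  # ['contact', 'corrections']
```

This only confirms that links exist; whether the linked pages contain real disclosures still needs a human review.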

External validation from other authoritative sources significantly amplifies your trustworthiness signals in the eyes of AI systems. Backlinks from established, high-authority domains serve as endorsements; when respected organizations link to your content, AI systems interpret this as third-party verification of your credibility. Media mentions and press coverage in recognized publications indicate that journalists and editors have vetted your expertise and found your insights worthy of sharing with their audiences. Industry recognition through awards, certifications, or inclusion in authoritative directories provides AI systems with objective evidence of your standing within your field. Speaking engagements at conferences and contributions to industry publications demonstrate that peers and industry leaders recognize your expertise and are willing to associate their own credibility with yours. Entity recognition by knowledge bases and AI systems themselves—where your organization or personal brand is identified as a notable entity in your domain—creates a positive feedback loop that increases your authority scores. These external validation signals work cumulatively; the more authoritative sources that reference and endorse your content, the higher the trust score AI systems assign to your future content.
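
Because these signals accumulate across independent sources, SEO tools commonly report the number of distinct referring domains alongside raw backlink counts, on the reasoning that many links from one site are weaker evidence than one link each from many sites. A minimal sketch of that count, assuming you already have a list of backlink URLs (for example, exported from a backlink tool). It groups by hostname and strips a leading `www.`, so other subdomains are counted separately; grouping by registrable domain would need a public-suffix list. It measures breadth only and says nothing about each domain’s authority, which backlink tools estimate with their own proprietary metrics.

```python
from urllib.parse import urlsplit


def referring_domains(backlinks: list[str]) -> dict[str, int]:
    """Count backlinks per distinct referring host, ignoring a leading 'www.'."""
    counts: dict[str, int] = {}
    for link in backlinks:
        host = urlsplit(link).hostname or ""  # hostname is already lowercased
        if host.startswith("www."):
            host = host[len("www."):]
        if host:  # skip malformed entries with no host
            counts[host] = counts.get(host, 0) + 1
    return counts


# Hypothetical backlink export.
backlinks = [
    "https://www.news.example/review",
    "https://news.example/roundup",
    "https://blog.example/post",
    "not a url",
]
domains = referring_domains(backlinks)
print(f"{sum(domains.values())} links from {len(domains)} referring domains: {domains}")
```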

User behavior patterns provide AI systems with indirect but powerful indicators of content trustworthiness and value. Time on page metrics reveal whether readers find your content substantive enough to warrant extended engagement; AI systems recognize that people spend more time with content they find valuable and trustworthy. Engagement metrics such as comments, shares, and interactions indicate that your content resonates with audiences and sparks meaningful discussion, which correlates with quality and reliability. Bounce rates that are lower than industry averages suggest that visitors find what they’re looking for and trust the information enough to explore further, rather than immediately leaving to search elsewhere. Return visits from the same users signal that your content has proven valuable over time and that readers trust you enough to come back for additional information. Social sharing patterns, particularly shares from accounts with established credibility and engaged followings, amplify your trustworthiness signals across the web. AI systems analyze these behavioral signals because they understand that genuine user trust—demonstrated through sustained engagement and repeated visits—is one of the most authentic indicators of content quality and reliability.
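
The behavioral metrics named above can be computed from your own analytics data. A minimal sketch, assuming a hypothetical, simplified `Session` record exported from an analytics tool. Bounce rate here uses the classic definition (single-page sessions divided by all sessions); Google Analytics 4 instead defines it as the share of sessions that were not engaged, so figures from different tools are not directly comparable.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    visitor_id: str
    pages_viewed: int
    seconds_on_site: float


def engagement_summary(sessions: list[Session]) -> dict[str, float]:
    total = len(sessions)
    if total == 0:
        raise ValueError("no sessions to summarize")
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)  # single-page sessions
    visits_per_visitor = Counter(s.visitor_id for s in sessions)
    returning = sum(1 for count in visits_per_visitor.values() if count > 1)
    return {
        "bounce_rate": bounces / total,
        "avg_seconds": sum(s.seconds_on_site for s in sessions) / total,
        "returning_visitor_share": returning / len(visits_per_visitor),
    }


# Hypothetical sample sessions.
sessions = [
    Session("a", 1, 20), Session("a", 3, 240),
    Session("b", 1, 15), Session("c", 2, 125),
]
summary = engagement_summary(sessions)
print(f"bounce rate {summary['bounce_rate']:.0%}, "
      f"avg time {summary['avg_seconds']:.0f} s, "
      f"returning visitors {summary['returning_visitor_share']:.0%}")
# bounce rate 50%, avg time 100 s, returning visitors 33%
```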

Developing a strong trust profile requires a systematic approach to implementing and optimizing E-E-A-T signals across your content and digital presence. Begin by conducting a comprehensive audit of your existing E-E-A-T signals, identifying which pillars are strong and which need development; this baseline assessment reveals where to focus your efforts for maximum impact. Implement clear author attribution on all content, including detailed author bios that establish credentials, experience, and expertise; ensure that author information is consistent across all platforms where your content appears. Add schema markup to your website using JSON-LD format to provide AI systems with machine-readable information about authors, publication dates, article topics, and organizational details. Build high-quality backlinks by creating genuinely valuable content that other authoritative sources want to reference and cite; focus on earning links from relevant, respected domains rather than pursuing quantity. Maintain content freshness by regularly updating existing content to reflect current information, adding new research, and removing outdated claims; AI systems recognize that actively maintained content is more trustworthy than abandoned articles. Monitor how AI systems cite your brand using tools like AmICited, which tracks when and how AI Overviews, ChatGPT, Perplexity, and other AI systems reference your content; this visibility allows you to understand which trust signals are working and where you need improvement. By systematically building these trust signals, you create a strong foundation that helps AI systems confidently cite your content and recommend it to users seeking reliable information.
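
For the schema markup step, here is a minimal sketch that emits schema.org `Article` markup as a JSON-LD script tag. The properties used (`headline`, `author`, `datePublished`, `dateModified`, `publisher`) are standard schema.org properties for articles; the function name and all sample values are hypothetical, and you may want further properties such as the author’s `sameAs` profile links.

```python
import json


def article_jsonld(headline: str, author_name: str, author_url: str,
                   published: str, modified: str, publisher: str) -> str:
    """Return a <script> tag containing schema.org Article markup (dates as ISO 8601)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "datePublished": published,
        "dateModified": modified,
        "publisher": {"@type": "Organization", "name": publisher},
    }
    # Escape "</" so a value like "</script>" cannot close the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_jsonld(
    headline="Debugging Memory Leaks in Production",
    author_name="Dana Lee",
    author_url="https://example.com/authors/dana-lee",
    published="2024-03-01",
    modified="2024-06-15",
    publisher="Example Co.",
))
```

Keeping `dateModified` accurate when you revise a page also supports the content-freshness step, since it records when the information was last updated.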

E-E-A-T is a framework that encompasses four pillars: Experience, Expertise, Authoritativeness, and Trustworthiness. Trust signals are the specific indicators and markers that AI systems use to evaluate each pillar. Think of E-E-A-T as the overall quality standard, while trust signals are the measurable evidence that demonstrates you meet that standard.

Small websites can build trust signals by focusing on author attribution, creating high-quality content in a specific niche, implementing schema markup, earning backlinks from relevant sources, and maintaining transparent practices. You don't need massive traffic or brand recognition—AI systems value depth of expertise and consistency in a focused area over broad coverage.

HTTPS is a baseline expectation for trustworthiness. Serving content over a secure connection shows that a site protects user data, and it is one of the foundational technical signals that contributes to your overall credibility score.

Building genuine trust signals is a long-term strategy that typically takes months to show meaningful results. However, implementing technical improvements like schema markup and author attribution can have more immediate effects. The key is consistency—regularly publishing quality content, maintaining accuracy, and building external validation over time.

AI systems are increasingly sophisticated at detecting inconsistencies and false claims. They cross-reference author information against known databases, check for factual accuracy against their training data, and analyze patterns across multiple sources. Attempting to fake credentials or make false claims is risky and will likely damage your credibility when discovered.

To check whether AI systems cite your content, search for your topics in AI-powered platforms such as ChatGPT (with web search enabled), Perplexity, and Google's AI Overviews, then look for your URLs in the citations. For systematic monitoring, tools like AmICited track when and how AI systems reference your content across multiple platforms, providing visibility into your AI citations.
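
Once you have collected the citation URLs from a set of test queries (by hand, or from a monitoring tool's export), measuring your share is simple arithmetic. The one subtlety is matching your domain correctly: a naive substring test would count notexample.com as example.com. The function names and sample URLs below are hypothetical.

```python
from urllib.parse import urlsplit


def is_own_url(url: str, domain: str) -> bool:
    """True if url is on domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def citation_share(cited_urls: list[str], domain: str) -> float:
    """Fraction of collected citations that point to domain."""
    if not cited_urls:
        return 0.0
    ours = [url for url in cited_urls if is_own_url(url, domain)]
    return len(ours) / len(cited_urls)


# Hypothetical citations gathered from AI answers to your test queries.
cited = [
    "https://www.example.com/guide",
    "https://docs.example.com/api",
    "https://notexample.com/post",
    "https://other.example/page",
]
print(f"{citation_share(cited, 'example.com'):.0%} of citations point to example.com")
# 50% of citations point to example.com
```

Tracking this share over the same fixed set of queries makes changes over time easier to interpret than one-off spot checks.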

User engagement signals like time on page, return visits, and social sharing indicate to AI systems that your content is valuable and trustworthy. AI systems recognize that genuine user trust—demonstrated through sustained engagement—is one of the most authentic indicators of content quality and reliability.

Transparency about how content was created matters for trustworthiness. If you use AI tools to assist in content creation, disclosing this and explaining how AI was used helps readers and AI systems understand the content's origin. Being open about your processes builds more trust than hiding how content was produced.
