Crawl Frequency

Crawl frequency is how often search engines and AI crawlers visit your site. Learn what affects crawl rates, why it matters for SEO and AI visibility, and how t...

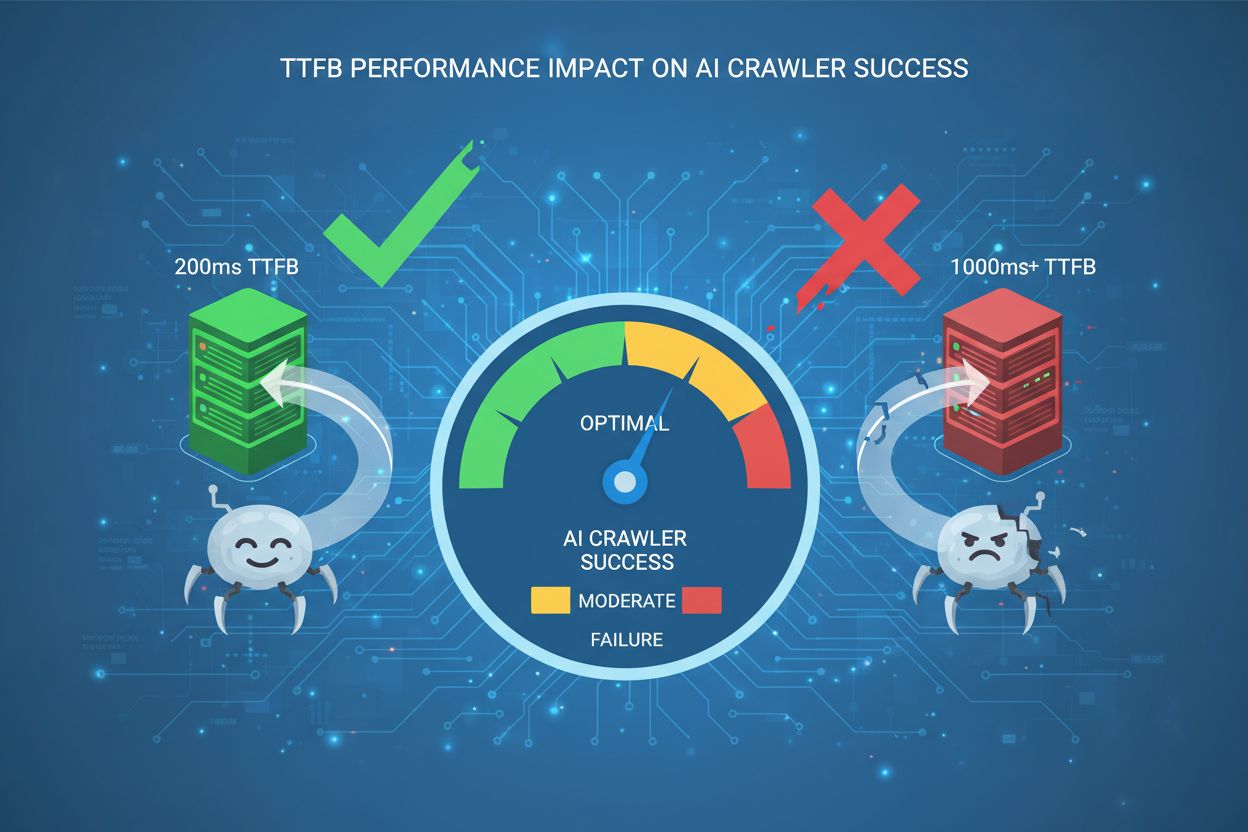

Learn how Time to First Byte impacts AI crawler success. Discover why 200ms is the gold standard threshold and how to optimize server response times for better visibility in AI-generated answers.

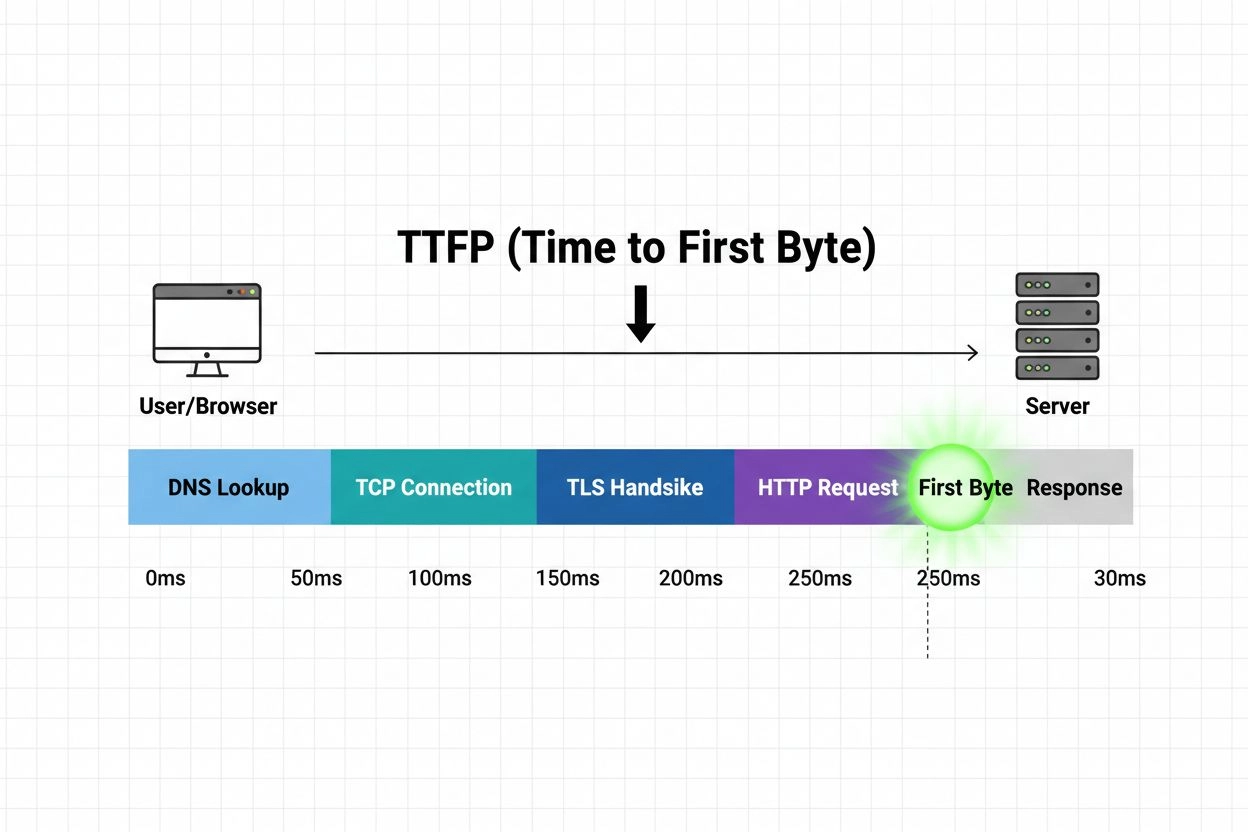

Time to First Byte (TTFB) is the duration between a user’s browser sending an HTTP request and receiving the first byte of data from the server. This metric measures server responsiveness and network latency combined, making it a foundational indicator of overall website performance. For AI crawlers indexing your content for GPTs, Perplexity, Google AI Overviews, and other large language models, TTFB is critical because it directly determines how quickly these bots can access and process your pages. Unlike traditional search engines that cache aggressively and crawl less frequently, AI crawlers operate with different patterns and priorities—they need rapid access to fresh content to train and update their models. A slow TTFB forces AI crawlers to wait longer before they can even begin parsing your content, which can lead to incomplete indexing, reduced visibility in AI-generated answers, and lower citation rates. In essence, TTFB is the gatekeeper metric that determines whether AI systems can efficiently discover and incorporate your content into their responses.

AI crawlers operate fundamentally differently from traditional search engine bots like Googlebot, exhibiting more aggressive crawling patterns and different prioritization strategies. While traditional search bots respect crawl budgets and focus on indexing for keyword-based retrieval, AI crawlers prioritize content freshness and semantic understanding, often making multiple requests to the same pages within shorter timeframes. Traditional bots typically crawl a site once every few weeks or months, whereas AI crawlers from systems like ChatGPT, Claude, and Perplexity may revisit high-value content multiple times per week or even daily. This aggressive behavior means your server infrastructure must handle significantly higher concurrent request volumes from AI sources alone.

Key differences in bot characteristics:

| Characteristic | Traditional Search Bots | AI Crawlers |
|---|---|---|
| Crawl Frequency | Weekly to monthly | Daily to multiple times daily |
| Request Concurrency | Low to moderate | High and variable |
| Content Priority | Keyword relevance | Semantic understanding & freshness |
| Caching Behavior | Aggressive caching | Minimal caching, frequent re-crawls |
| Response Time Sensitivity | Moderate tolerance | High sensitivity to delays |
| User-Agent Patterns | Consistent, identifiable | Diverse, sometimes masked |

The implications are clear: your infrastructure must be optimized not just for human visitors and traditional search engines, but specifically for the demanding patterns of AI crawlers. A TTFB that’s acceptable for traditional SEO may be inadequate for AI visibility.

The 200ms TTFB threshold has emerged as the gold standard for AI crawler success, representing the point where server response times remain fast enough for efficient content ingestion without triggering timeout mechanisms. This threshold is not arbitrary: it is derived from the operational requirements of major AI systems, which typically implement timeout windows of 5-10 seconds for complete page loads. When TTFB exceeds 200ms, less time remains for downloading, parsing, and processing page content, increasing the risk that AI crawlers will abandon the request or receive incomplete data. Research indicates that sites maintaining TTFB under 200ms see substantially higher citation rates in AI-generated responses, with some studies showing 40-60% improvement in AI visibility compared to sites with TTFB between 500 and 1000ms. The 200ms benchmark also correlates with source selection: AI systems are more likely to prioritize and cite content from fast-responding domains when multiple sources provide similar information. Beyond this threshold, each additional 100ms of delay compounds the problem, reducing the likelihood that your content will be fully processed and incorporated into AI responses.
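As a rough illustration, the thresholds used in this article can be written down as a small classifier. This is a minimal sketch, not an established standard: the function name `classify_ttfb` and the band labels are hypothetical, and the article does not characterize the 500-800ms range, so labeling it "slow" is an assumption.

```python
def classify_ttfb(ttfb_ms: float) -> str:
    """Map a TTFB measurement (in milliseconds) onto the bands used in this article."""
    if ttfb_ms < 0:
        raise ValueError("TTFB cannot be negative")
    if ttfb_ms < 200:
        return "target"      # under the 200ms gold standard
    if ttfb_ms <= 500:
        return "acceptable"  # 200-500ms: acceptable but suboptimal
    if ttfb_ms <= 800:
        return "slow"        # assumption: the article does not name this band
    return "poor"            # above 800ms: significantly reduced AI visibility


for sample in (150, 350, 650, 1200):
    print(f"{sample}ms -> {classify_ttfb(sample)}")
```

A check like this could gate a deployment or feed a dashboard, provided the bands are tuned to your own measurements.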

TTFB is the foundation on which other loading metrics depend. It directly influences Largest Contentful Paint (LCP), a Core Web Vital, and First Contentful Paint (FCP), a supporting loading metric. When TTFB is slow, the browser waits longer for the first byte of HTML, which delays the entire rendering pipeline and pushes LCP and FCP toward poor ranges. LCP measures when the largest visible content element finishes rendering, while FCP marks when the browser first renders any DOM content. Both are timed from the start of navigation, so TTFB is included in each of them. A site with a TTFB of 800ms will struggle to achieve an LCP under 2.5 seconds (Google’s “good” threshold), even with optimized rendering and resource delivery, because nearly a third of the budget is gone before any HTML arrives. The delay also cascades: nothing referenced by the HTML can be discovered or fetched until the document starts arriving, so a slow TTFB pushes back every later step, affecting perceived load time, user engagement, and AI crawler efficiency. For AI systems, this means slow TTFB reduces the probability that your content will be fully indexed and available for citation in AI-generated responses.
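Because LCP is timed from the start of navigation, TTFB consumes part of the LCP budget before any rendering work can begin. The arithmetic can be sketched as follows; the function name `lcp_budget_after_ttfb` is hypothetical, and the 2.5-second figure is the "good" LCP threshold cited above.

```python
GOOD_LCP_MS = 2500  # Google's "good" LCP threshold cited above


def lcp_budget_after_ttfb(ttfb_ms: float, lcp_target_ms: float = GOOD_LCP_MS) -> float:
    """Time left for fetching resources and rendering once the first byte has arrived."""
    return lcp_target_ms - ttfb_ms


for ttfb in (180, 800):
    remaining = lcp_budget_after_ttfb(ttfb)
    share = ttfb / GOOD_LCP_MS
    print(f"TTFB {ttfb}ms leaves {remaining:.0f}ms ({share:.0%} of the budget already used)")
```

With an 800ms TTFB, only 1700ms remain for everything else, compared with 2320ms at 180ms.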

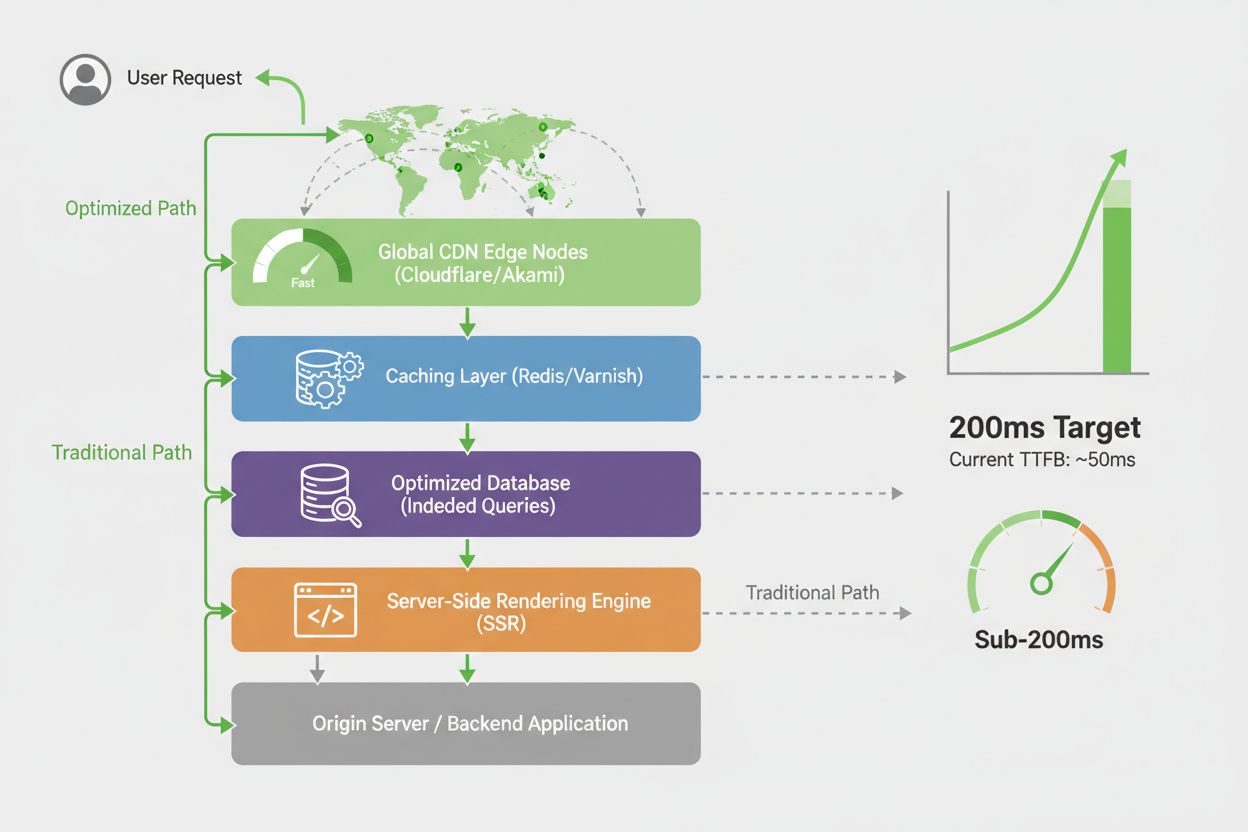

Geographic location and network infrastructure create significant variations in TTFB across different regions, directly impacting how effectively AI crawlers can access your content from different parts of the world. An AI crawler operating from a data center in Singapore may experience 300-400ms latency to a server hosted in Virginia, while the same crawler accessing a CDN-distributed site might achieve 50-80ms latency through a regional edge server. Content Delivery Networks (CDNs) are essential for maintaining consistent TTFB across regions, distributing your content to edge servers geographically closer to crawler origins and reducing the network hops required for data transmission. Without CDN optimization, sites hosted in single regions face a critical disadvantage: AI crawlers from distant geographic locations will experience degraded TTFB, potentially missing your content entirely if timeouts trigger. Real-world examples demonstrate this impact clearly—a news organization serving primarily US audiences but hosted on a single East Coast server might achieve 80ms TTFB for local crawlers but 400ms+ for crawlers originating from Asia-Pacific regions. This geographic disparity means AI systems in different regions may have inconsistent access to your content, leading to uneven citation rates and reduced global visibility. Implementing a global CDN strategy ensures that AI crawlers worldwide experience consistent, sub-200ms TTFB regardless of their origin location.
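The distance effect can be approximated from first principles. The sketch below estimates a physical lower bound on round-trip time between two points, assuming signals travel through fiber at roughly two thirds of the speed of light along a great-circle path. The coordinates for Singapore and northern Virginia are approximate, the function names are hypothetical, and real routes are longer and add queuing and processing delays. The three round trips assume a cold HTTPS connection (TCP handshake, TLS 1.3 handshake, then the HTTP request itself).

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_PER_S = 200_000.0  # assumption: about 2/3 of the speed of light


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two latitude/longitude points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on one network round trip over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


def min_cold_ttfb_ms(distance_km: float, round_trips: int = 3) -> float:
    """Lower bound on TTFB for a new connection needing `round_trips` round trips."""
    return round_trips * min_rtt_ms(distance_km)


# Approximate coordinates (assumptions for illustration only).
SINGAPORE = (1.35, 103.82)
NORTHERN_VIRGINIA = (39.04, -77.49)

distance = great_circle_km(*SINGAPORE, *NORTHERN_VIRGINIA)
print(f"distance: {distance:.0f} km")
print(f"minimum RTT: {min_rtt_ms(distance):.0f} ms")
print(f"minimum cold-connection TTFB: {min_cold_ttfb_ms(distance):.0f} ms")
```

Even this idealized floor sits well above 200ms for a cold connection, which is why serving from a nearby edge location matters more than shaving milliseconds off origin processing for distant crawlers.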

Accurate TTFB measurement requires the right tools and consistent testing methodology, as different measurement approaches can yield varying results depending on network conditions, server state, and testing location. Several industry-standard tools provide reliable TTFB data:

Google PageSpeed Insights - Provides real-world TTFB data from the Chrome User Experience Report, which reflects real Chrome users rather than crawlers, alongside lab data from Lighthouse. Free, and a useful indication of how Google’s systems perceive your site’s performance.

WebPageTest - Offers granular TTFB measurement from multiple geographic locations and connection types, allowing you to test from different regions where AI crawlers originate. Provides waterfall charts showing exact timing breakdowns.

GTmetrix - Built on Lighthouse, offering TTFB metrics alongside other performance indicators. Useful for tracking TTFB trends over time with historical data and performance recommendations.

Cloudflare Analytics - If using Cloudflare’s CDN, provides real-time TTFB data from actual traffic, showing how your site performs for real crawlers and users across different regions.

New Relic or Datadog - Enterprise monitoring solutions that track TTFB for both synthetic tests and real user monitoring (RUM), providing detailed insights into server performance and bottlenecks.

curl and Command-Line Tools - For technical teams, command-line tools like curl -w can measure TTFB directly, useful for automated monitoring and integration into CI/CD pipelines.

When measuring TTFB, test from multiple geographic locations to understand regional variations, measure during peak traffic times to identify bottlenecks under load, and establish baseline metrics before implementing optimizations. Consistent measurement methodology ensures you can accurately track improvements and identify when TTFB drifts above acceptable thresholds.
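For teams that want to script this measurement, the following is a minimal sketch using only the Python standard library. It times a single cold request from connection start to the first response byte, so DNS, TCP, and TLS setup are included, much like a first visit from a crawler. The function names and the `ttfb-probe/0.1` user agent are hypothetical, and the result will differ from tool-reported values depending on where the script runs.

```python
import socket
import ssl
import statistics
import time


def measure_ttfb(host, port=443, path="/", use_tls=True, timeout=10.0):
    """Return connect, wait, and total time to first byte, in milliseconds."""
    start = time.perf_counter()
    sock = socket.create_connection((host, port), timeout=timeout)
    try:
        if use_tls:
            context = ssl.create_default_context()
            sock = context.wrap_socket(sock, server_hostname=host)
        connected = time.perf_counter()
        request = (
            f"GET {path} HTTP/1.1\r\n"
            f"Host: {host}\r\n"
            "User-Agent: ttfb-probe/0.1\r\n"  # hypothetical user agent
            "Accept: */*\r\n"
            "Connection: close\r\n\r\n"
        )
        sock.sendall(request.encode("ascii"))
        first = sock.recv(1)  # blocks until the first response byte arrives
        first_byte = time.perf_counter()
    finally:
        sock.close()
    if not first:
        raise ConnectionError("server closed the connection without responding")
    return {
        "connect_ms": (connected - start) * 1000,    # DNS + TCP (+ TLS) setup
        "wait_ms": (first_byte - connected) * 1000,  # request sent until first byte
        "ttfb_ms": (first_byte - start) * 1000,      # total time to first byte
    }


def median_ttfb_ms(host, samples=5, **kwargs):
    """Median TTFB over several fresh connections, to smooth out one-off spikes."""
    return statistics.median(measure_ttfb(host, **kwargs)["ttfb_ms"] for _ in range(samples))
```

Calling `median_ttfb_ms("example.com")` from machines in several regions gives a simple baseline. Taking the median of several samples follows the advice above about consistent methodology, since a single request can be skewed by a cold cache or a transient network delay.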

Achieving and maintaining TTFB under 200ms requires a multi-layered optimization approach addressing server infrastructure, caching strategies, and content delivery mechanisms. Here are the most effective strategies:

Implement Server-Side Caching - Cache database query results, rendered HTML, and API responses at the application level. Redis or Memcached can reduce database round-trips from 50-200ms to 1-5ms, dramatically improving TTFB.

Deploy a Global CDN - Distribute static and dynamic content to edge servers worldwide, reducing network latency from origin servers. CDNs like Cloudflare, Akamai, or AWS CloudFront can reduce TTFB by 60-80% for geographically distant crawlers.

Optimize Database Queries - Profile slow queries, add appropriate indexes, and implement query result caching. Database optimization often yields the largest TTFB improvements, as database access frequently accounts for 30-60% of server response time.

Use Server-Side Rendering (SSR) - Pre-render content on the server rather than relying on client-side JavaScript execution. SSR ensures AI crawlers receive complete, rendered HTML immediately, eliminating JavaScript parsing delays.

Implement HTTP/2 or HTTP/3 - Modern HTTP protocols reduce connection overhead and enable multiplexing, improving TTFB by 10-30% compared to HTTP/1.1.

Optimize Server Hardware and Configuration - Ensure adequate CPU, memory, and I/O resources. Misconfigured servers with insufficient resources will consistently exceed TTFB thresholds regardless of code optimization.

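Reduce Third-Party Script Impact - Minimize blocking calls to third-party services (external APIs, personalization, server-side tag processing) that run before the server sends the first byte. Client-side scripts do not change TTFB itself, but deferring non-critical scripts or loading them asynchronously still protects the metrics that follow it, such as FCP and LCP.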

Implement Edge Computing - Use serverless functions or edge workers to process requests closer to users and crawlers, reducing latency and improving TTFB for dynamic content.
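The server-side caching strategy from the list above can be illustrated with a small time-to-live cache. This is a sketch under stated assumptions: it keeps entries in process memory as a stand-in for Redis or Memcached, and `TTLCache` and `render_product_page` are hypothetical names, not part of any library.

```python
import time


class TTLCache:
    """In-process cache whose entries expire after a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        """Return the cached value for `key`, calling `compute()` only on a miss or expiry."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no database or render work needed
        value = compute()
        self._store[key] = (now + self._ttl, value)
        return value


def render_product_page(product_id):
    """Hypothetical slow render: stands in for database queries plus templating."""
    time.sleep(0.05)
    return f"<html><body><h1>Product {product_id}</h1></body></html>"


cache = TTLCache(ttl_seconds=60)
for attempt in ("miss", "hit"):
    started = time.perf_counter()
    cache.get_or_compute("product:42", lambda: render_product_page(42))
    print(f"{attempt}: {(time.perf_counter() - started) * 1000:.1f} ms")
```

The second request skips the render entirely, which is the effect that a shared cache such as Redis provides across multiple application servers. The TTL bounds how stale a cached page can become, so it should be chosen with content freshness in mind.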

Server-Side Rendering (SSR) is substantially superior to Client-Side Rendering (CSR) for AI crawler accessibility, as it delivers fully rendered HTML to crawlers immediately rather than requiring JavaScript execution. With CSR, the server sends a minimal HTML shell and JavaScript bundles that must be downloaded, parsed, and executed in the browser before content becomes available, a process that can add 500ms to 2+ seconds before actual content can be accessed. SSR eliminates this delay by rendering the complete page on the server before sending it to the client, so the HTML response already contains the full page structure and content. For AI crawlers with strict timeout windows, this difference is critical: a CSR site might time out before JavaScript finishes executing, and a crawler that does not execute JavaScript at all receives only the empty HTML shell with no content to index. SSR also makes time-to-content more consistent, because rendering happens on the server rather than depending on client-side JavaScript performance. The trade-off is that rendering on the server adds work before the first byte is sent, so SSR requires more server resources and usually needs caching to keep TTFB low. Even so, the benefits for AI crawler success make it essential for sites prioritizing AI visibility. Hybrid approaches using SSR for initial page load combined with client-side hydration can provide the best of both worlds: complete HTML for crawlers and interactive experiences for users.
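To see the difference from a crawler's point of view, the sketch below extracts the text that is present in the raw HTML without running any JavaScript. The two sample documents and the helper names are hypothetical, and the extraction is deliberately simplistic compared with what a production crawler does.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text found in the HTML itself, ignoring script and style content."""

    SKIP = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    """Return the text a parser sees when it does not execute JavaScript."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical responses for the same page under the two rendering strategies.
CSR_SHELL = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'
SSR_PAGE = (
    '<html><body><div id="root"><h1>TTFB guide</h1>'
    "<p>Keep server response under 200ms.</p></div>"
    '<script src="/bundle.js"></script></body></html>'
)

print(repr(visible_text(CSR_SHELL)))
print(repr(visible_text(SSR_PAGE)))
```

The CSR shell yields an empty string because its content only exists after the bundle runs, while the SSR response carries the heading and paragraph in the HTML itself.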

The practical impact of TTFB optimization on AI visibility is substantial and measurable across diverse industries and content types. A technology news publication reduced TTFB from 850ms to 180ms through CDN implementation and database query optimization, resulting in a 52% increase in citations within AI-generated articles over a three-month period. An e-commerce site serving product information improved TTFB from 1.2 seconds to 220ms by implementing Redis caching for product data and switching to SSR for category pages, seeing a corresponding 38% increase in product mentions in AI shopping assistants. A research institution publishing academic papers achieved TTFB under 150ms through edge computing and static site generation, enabling their papers to be cited more frequently in AI-generated research summaries and literature reviews. These improvements weren’t achieved through single optimizations but through systematic approaches addressing multiple TTFB bottlenecks simultaneously. The common pattern across successful implementations is that each 100ms reduction in TTFB correlates with measurable increases in AI crawler success rates and citation frequency. Organizations that maintain TTFB consistently under 200ms report 3-5x higher visibility in AI-generated content compared to competitors with TTFB above 800ms, demonstrating that this threshold directly translates to business impact through increased AI-driven traffic and citations.

Establishing robust TTFB monitoring is essential for maintaining optimal performance and quickly identifying degradation before it impacts AI crawler success. Begin by setting baseline metrics using tools like WebPageTest or Google PageSpeed Insights, measuring TTFB from multiple geographic locations to understand regional variations and identify problem areas. Implement continuous monitoring using synthetic tests that regularly measure TTFB from different regions and network conditions, alerting your team when metrics exceed thresholds. Most organizations should set alerts at 250ms, which leaves a small margin above the 200ms target for normal variation while still flagging regressions before they become severe. Real User Monitoring (RUM) provides complementary data showing the TTFB actually experienced by visitors, revealing performance variations that synthetic tests might miss. Establish a testing process for changes: before deploying infrastructure or code changes, measure TTFB impact in staging environments, ensuring optimizations actually improve performance rather than introducing regressions. Create a performance dashboard visible to your entire team, making TTFB a shared responsibility rather than an isolated technical concern. Schedule regular performance reviews, monthly or quarterly, to analyze trends, identify emerging bottlenecks, and plan optimization initiatives. This continuous improvement mindset ensures TTFB remains optimized as your site grows, traffic patterns change, and new features are added.
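The alerting rule described above can be sketched as a rolling-window check. The 250ms alert level comes from this article; the `TTFBMonitor` class, the window size, and the choice of the 75th percentile (the percentile used for Core Web Vitals field assessments) are assumptions made for illustration.

```python
import math
from collections import deque


class TTFBMonitor:
    """Flag an alert when a percentile of recent TTFB samples exceeds a threshold."""

    def __init__(self, alert_ms=250.0, window=20, percentile=75):
        self.alert_ms = alert_ms
        self.percentile = percentile
        self.samples = deque(maxlen=window)  # only the most recent `window` samples are kept

    def current_percentile(self):
        """Nearest-rank percentile of the samples currently in the window."""
        ordered = sorted(self.samples)
        rank = max(1, math.ceil(self.percentile / 100 * len(ordered)))
        return ordered[rank - 1]

    def record(self, ttfb_ms):
        """Add a sample and return True if the alert condition is now met."""
        self.samples.append(ttfb_ms)
        return self.current_percentile() > self.alert_ms


monitor = TTFBMonitor(alert_ms=250.0, window=4)
for sample in (100, 120, 130, 400, 420):
    print(f"{sample}ms -> alert={monitor.record(sample)}")
```

Using a percentile rather than the latest value means one slow response does not page anyone, while a sustained shift does. In the loop above, the single 400ms sample is ignored and the alert fires only once a second slow sample arrives.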

AmICited.com provides specialized monitoring for how AI systems cite and reference your content, offering unique insights into the relationship between TTFB and AI visibility that general performance tools cannot provide. While traditional monitoring tools measure TTFB in isolation, AmICited tracks how TTFB performance directly correlates with citation frequency across GPTs, Perplexity, Google AI Overviews, and other major AI systems. The platform monitors AI crawler behavior patterns, identifying when crawlers access your content, how frequently they return, and whether slow TTFB causes incomplete indexing or timeouts. AmICited’s analytics reveal which content receives citations in AI-generated responses, allowing you to correlate this data with your TTFB metrics and understand the direct business impact of performance optimization. The platform provides alerts when AI crawler access patterns change, potentially indicating TTFB issues or other technical problems affecting AI visibility. For organizations serious about maximizing AI-driven traffic and citations, AmICited offers the critical visibility needed to understand whether TTFB optimizations are actually translating into improved AI visibility. By combining AmICited’s AI citation monitoring with traditional TTFB measurement tools, you gain a complete picture of how server performance directly impacts your presence in AI-generated content—the most important metric for modern content visibility.

The gold standard TTFB for AI crawler success is under 200ms. This threshold ensures AI systems can efficiently access and process your content within their timeout windows. TTFB between 200-500ms is acceptable but suboptimal, while TTFB above 800ms significantly reduces AI visibility and citation rates.

TTFB acts as a qualifying factor for AI inclusion rather than a direct ranking signal. Slow TTFB can cause AI crawlers to timeout or receive incomplete content, reducing the likelihood your pages are indexed and cited. Sites maintaining TTFB under 200ms see 40-60% higher citation rates compared to slower competitors.

Several optimizations can improve TTFB without changing hosts: implement server-side caching (Redis/Memcached), deploy a CDN, optimize database queries, enable HTTP/2, and remove blocking third-party calls from request handling. These changes often yield 30-50% TTFB improvements. However, shared hosting may have inherent limitations that prevent reaching the 200ms threshold.

Use tools like Google PageSpeed Insights, WebPageTest, GTmetrix, or Cloudflare Analytics to measure TTFB. Test from multiple geographic locations to understand regional variations. Establish baseline metrics before optimizations, then monitor continuously using synthetic tests and real user monitoring to track improvements.

Content quality and TTFB both matter, but they serve different purposes. Content quality determines whether AI systems want to cite your content, while TTFB determines whether they can access it efficiently. Excellent content with poor TTFB may never be indexed, while mediocre content with excellent TTFB will be consistently accessible. Optimize both for maximum AI visibility.

Implement continuous monitoring with alerts set at 250ms to catch issues before they impact AI visibility. Conduct detailed performance reviews monthly or quarterly to identify trends and plan optimizations. Monitor more frequently during major infrastructure changes or traffic spikes to ensure TTFB remains stable.

TTFB measures only the time until the first byte of response arrives from the server, while page load time includes downloading all resources, rendering, and executing JavaScript. TTFB is foundational—it's the starting point for all other performance metrics. A fast TTFB is necessary but not sufficient for fast overall page load times.

Geographic distance between crawler origin and your server significantly impacts TTFB. A crawler in Singapore accessing a Virginia-hosted server might experience 300-400ms latency, while a CDN-distributed site achieves 50-80ms through regional edge servers. Implementing a global CDN ensures consistent sub-200ms TTFB regardless of crawler origin location.

