Matching Content to Prompts: Optimization Based on Query Intent

Learn how to align your content with AI query intent to increase citations across ChatGPT, Perplexity, and Google AI. Master content-prompt matching strategies ...

Learn how large language models interpret user intent beyond keywords. Discover query expansion, semantic understanding, and how AI systems determine which content to cite in answers.

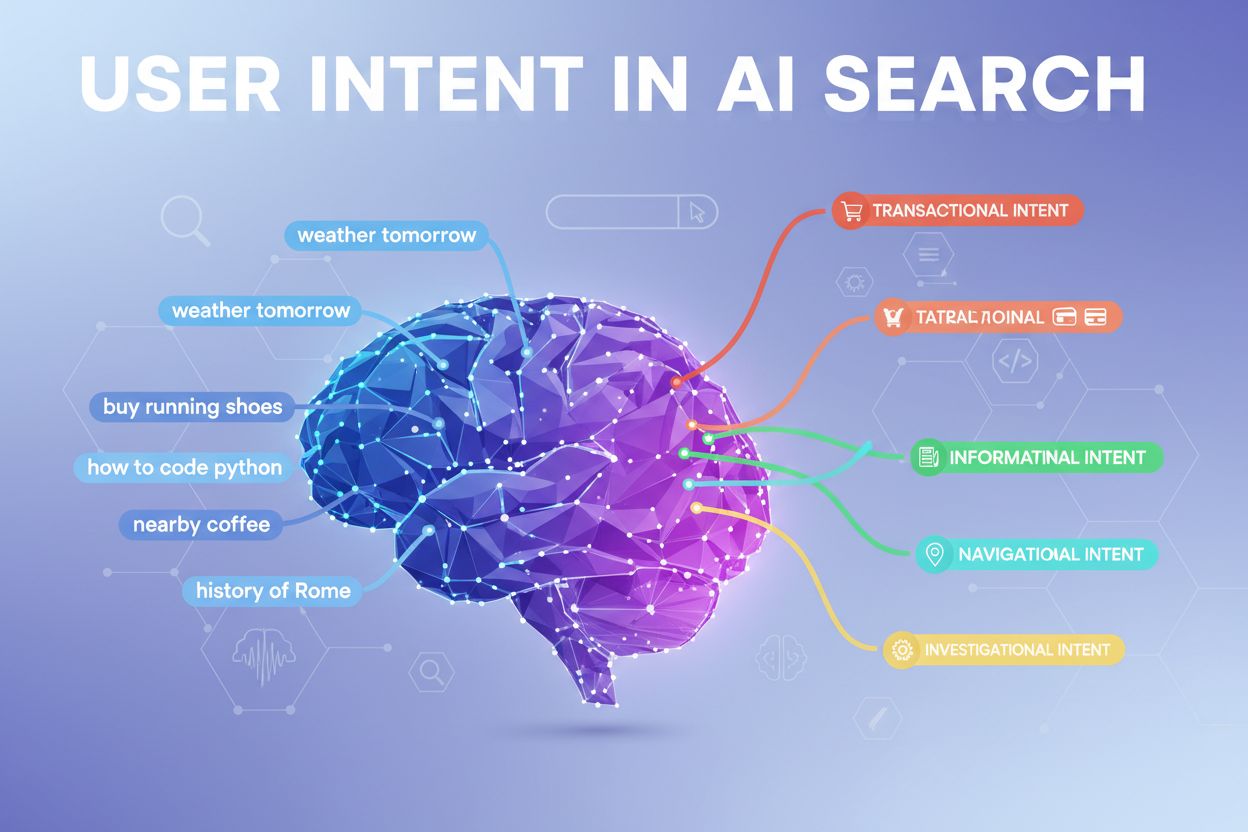

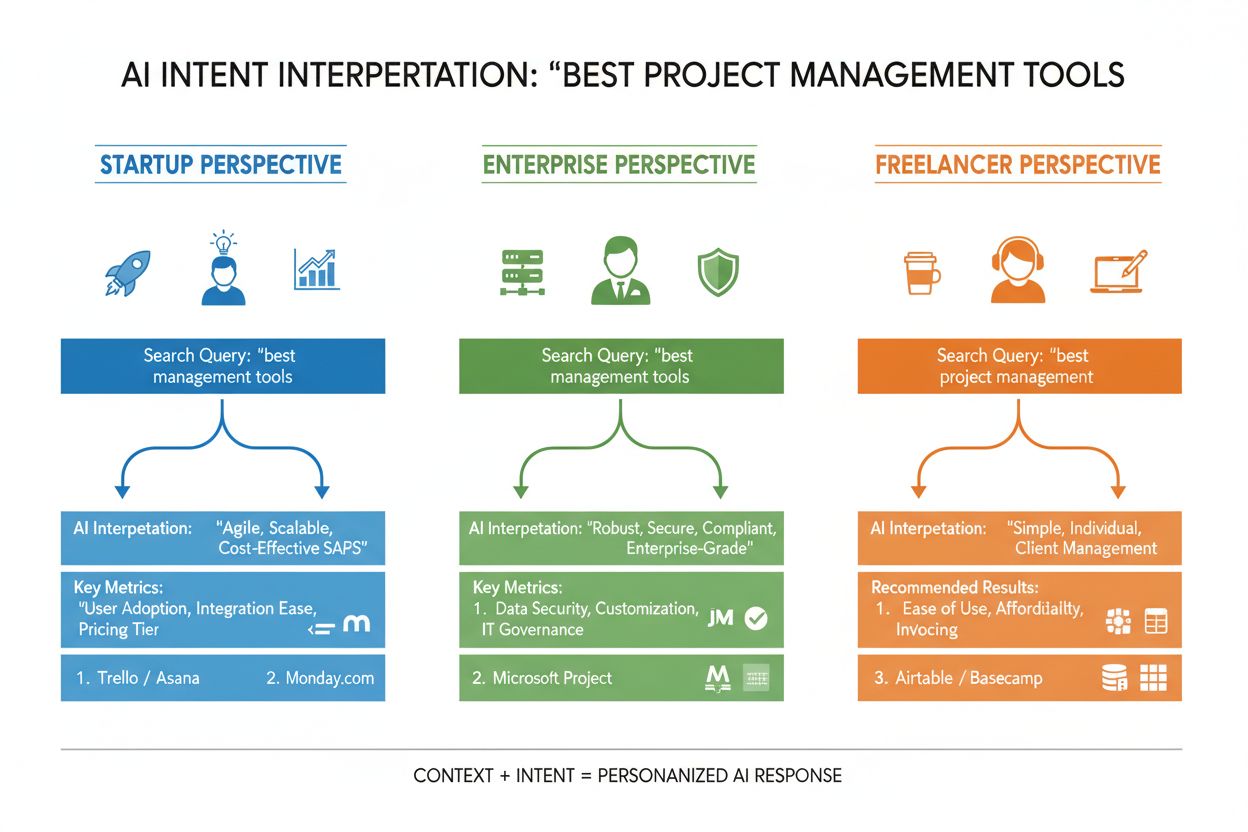

User intent in AI search refers to the underlying goal or purpose behind a query, rather than just the keywords someone types. When you search for “best project management tools,” you might be looking for a quick comparison, pricing information, or integration capabilities—and large language models (LLMs) like ChatGPT, Perplexity, and Google’s Gemini work to understand which of these goals you’re actually pursuing. Unlike traditional search engines that match keywords to pages, LLMs interpret the semantic meaning of your query by analyzing context, phrasing, and related signals to predict what you truly want to accomplish. This shift from keyword matching to intent understanding is fundamental to how modern AI search systems work, and it directly determines which sources get cited in AI-generated answers. Understanding user intent has become critical for brands seeking visibility in AI search results, as tools like AmICited now monitor how AI systems reference your content based on intent alignment.

When you enter a single query into an AI search system, the model doesn’t just answer your question directly. Instead, it expands your query into dozens of related micro-questions, a process researchers call “query fan-out.” For example, a simple search like “Notion vs Trello” might trigger sub-queries such as “Which is better for team collaboration?”, “What are the pricing differences?”, “Which one integrates better with Slack?”, and “What’s easier for beginners?” This expansion allows LLMs to explore different angles of your intent and gather more comprehensive information before generating a response. The system then evaluates passages from different sources at a granular level, rather than ranking entire pages, meaning a single paragraph from your content might be selected while the rest of your page is ignored. This passage-level analysis is why clarity and specificity in every section matter more than ever: a well-structured answer to a specific sub-intent could be the reason your content gets pulled into an AI-generated answer.

| Original Query | Sub-Intent 1 | Sub-Intent 2 | Sub-Intent 3 | Sub-Intent 4 |
|---|---|---|---|---|
| “Best project management tools” | “Which is best for remote teams?” | “What’s the pricing?” | “Which integrates with Slack?” | “What’s easiest for beginners?” |
| “How to improve productivity” | “What tools help with time management?” | “What are proven productivity methods?” | “How to reduce distractions?” | “What habits boost focus?” |
| “AI search engines explained” | “How do they differ from Google?” | “Which AI search is most accurate?” | “How do they handle privacy?” | “What’s the future of AI search?” |
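The fan-out and passage-level selection described above can be sketched roughly as follows. This is a toy illustration under loud assumptions, not how any production system works: the `FAN_OUT_TEMPLATES`, `fan_out`, and `best_passage` names are hypothetical, the passages are made-up sample text, and plain word overlap stands in for the learned relevance scoring a real system would use.

```python
import re

# Hypothetical sub-intent templates; a real system would generate these with a model.
FAN_OUT_TEMPLATES = [
    "Which {topic} are best for remote teams?",
    "What is the pricing for {topic}?",
    "Which {topic} integrate with Slack?",
    "Which {topic} are easiest for beginners?",
]

# Small, arbitrary stopword list so filler words do not count as matches.
STOPWORDS = {"the", "is", "are", "of", "for", "a", "what", "which", "with", "to"}

def tokens(text):
    """Lowercased content words used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def fan_out(topic):
    """Expand one topic into several sub-queries."""
    return [template.format(topic=topic) for template in FAN_OUT_TEMPLATES]

def best_passage(sub_query, passages):
    """Pick the single passage sharing the most content words with the sub-query."""
    query_words = tokens(sub_query)
    return max(passages, key=lambda p: len(query_words & tokens(p)))

# Made-up passages standing in for paragraphs on one page.
passages = [
    "Pricing is listed per user, with a free tier for small teams.",
    "The Slack integration posts task updates to any channel you choose.",
    "Beginners can start from templates without any setup.",
]

sub_queries = fan_out("project management tools")
selected = {sq: best_passage(sq, passages) for sq in sub_queries}

for sub_query, passage in selected.items():
    print(f"{sub_query}\n  -> {passage}")
```

Each sub-query is matched against individual passages rather than the page as a whole, which is why one paragraph can be selected while its neighbors are ignored.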

LLMs don’t evaluate your query in isolation—they build what researchers call a “user embedding,” a vector-based profile that captures your evolving intent based on your search history, location, device type, time of day, and even previous conversations. This contextual understanding allows the system to personalize results dramatically: two users searching for “best CRM tools” might receive completely different recommendations if one is a startup founder and the other is an enterprise manager. Real-time re-ranking further refines results based on how you interact with them—if you click on certain results, spend time reading specific sections, or ask follow-up questions, the system adjusts its understanding of your intent and updates future recommendations accordingly. This behavioral feedback loop means that AI systems are constantly learning what users actually want, not just what they initially typed. For content creators and marketers, this emphasizes the importance of creating content that satisfies intent across multiple user contexts and decision-making stages.
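One way to picture the personalization described above is to blend a query vector with a user vector before ranking. The sketch below is purely illustrative: the two-dimensional vectors, the `blend` and `rank` helpers, and the 0.7 query weight are all invented assumptions, and real systems do not publish how (or whether) they combine such signals.

```python
# Invented 2-d vectors; dimensions loosely mean (startup-oriented, enterprise-oriented).
DOCS = {
    "CRM guide for startups": [0.9, 0.1],
    "CRM guide for enterprises": [0.1, 0.9],
}
QUERY = [0.5, 0.5]  # "best CRM tools" carries no audience signal on its own

USERS = {
    "startup founder": [1.0, 0.0],
    "enterprise manager": [0.0, 1.0],
}

def blend(query_vec, user_vec, query_weight=0.7):
    """Weighted mix of query and user vectors (the weight is an arbitrary assumption)."""
    return [query_weight * q + (1 - query_weight) * u
            for q, u in zip(query_vec, user_vec)]

def rank(vec, docs):
    """Order document titles by dot product with the blended vector, highest first."""
    def score(title):
        return sum(a * b for a, b in zip(vec, docs[title]))
    return sorted(docs, key=score, reverse=True)

top_result = {name: rank(blend(QUERY, user_vec), DOCS)[0]
              for name, user_vec in USERS.items()}
print(top_result)
```

With these toy numbers the same query puts a different document first for each user, which is the behavior the paragraph describes.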

Modern AI systems classify user intent into several distinct categories, each requiring different types of content and responses:

LLMs automatically classify these intents by analyzing query structure, keywords, and contextual signals, then selecting content that best matches the detected intent type. Understanding these categories helps content creators structure their pages to address the specific intent users bring to their searches.

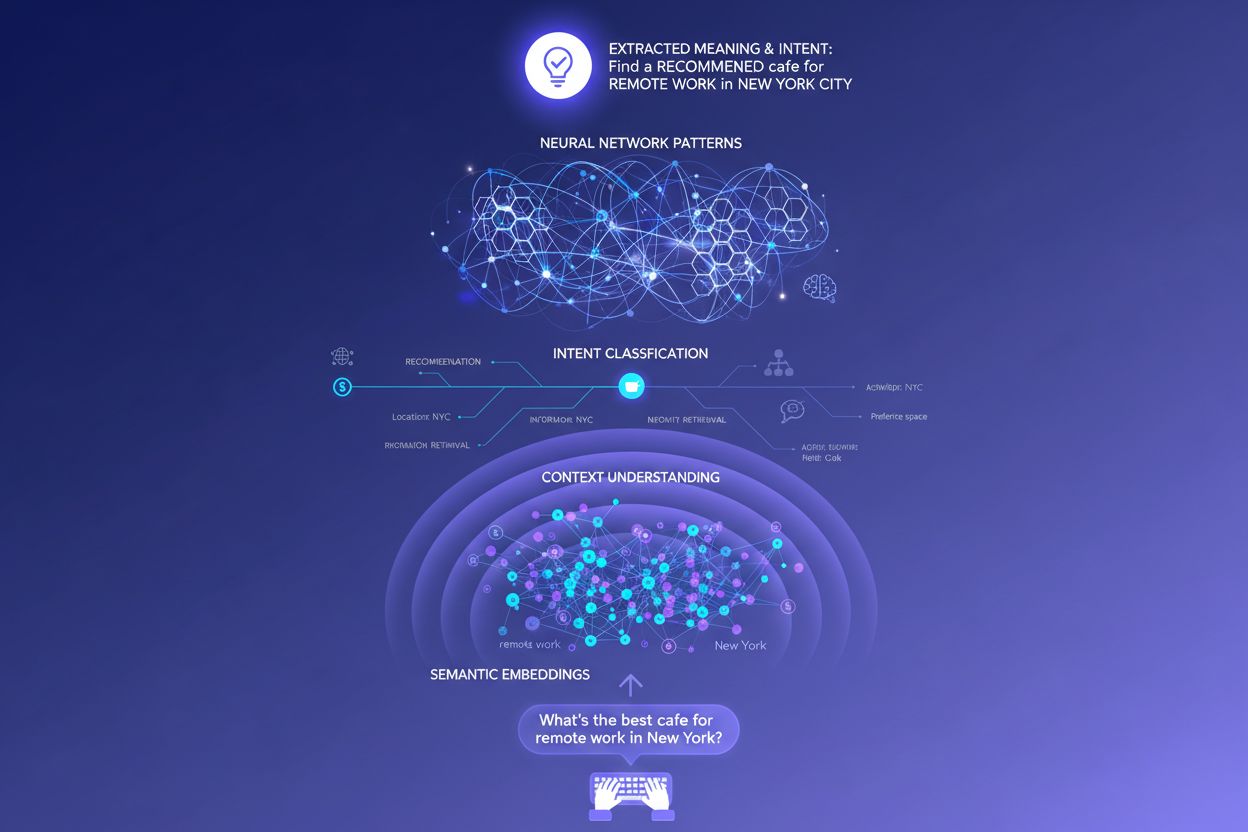

Traditional keyword-based search engines operate through simple string matching—if your page contains the exact words someone searches for, it might rank. This approach fails spectacularly with synonyms, paraphrasing, and context. If someone searches “affordable project management software” and your page uses the phrase “budget-friendly task coordination platform,” traditional search might miss the connection entirely. Semantic embeddings solve this problem by converting words and phrases into mathematical vectors that capture meaning rather than just surface-level text. These vectors exist in a high-dimensional space where semantically similar concepts cluster together, allowing LLMs to recognize that “affordable,” “budget-friendly,” “inexpensive,” and “low-cost” all express the same intent. This semantic approach also handles long-tail and conversational queries far better than keyword matching—a query like “I’m a freelancer and need something simple but powerful” can be matched to relevant content even though it contains no traditional keywords. The practical result is that AI systems can surface relevant answers to vague, complex, or unconventional queries, making them dramatically more useful than their keyword-based predecessors.
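A minimal sketch of the idea, assuming hand-made three-dimensional vectors: real embeddings come from a trained model and have hundreds or thousands of dimensions, so the numbers in `TOY_EMBEDDINGS` are invented only to show how cosine similarity groups phrases by meaning rather than by shared words.

```python
import math

# Invented toy vectors; dimensions loosely mean (price-sensitive, task management, outdoor gear).
TOY_EMBEDDINGS = {
    "affordable project management software": [0.9, 0.8, 0.0],
    "budget-friendly task coordination platform": [0.8, 0.9, 0.1],
    "waterproof jacket": [0.1, 0.0, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

query = TOY_EMBEDDINGS["affordable project management software"]
similar = cosine_similarity(query, TOY_EMBEDDINGS["budget-friendly task coordination platform"])
unrelated = cosine_similarity(query, TOY_EMBEDDINGS["waterproof jacket"])

print(f"paraphrase: {similar:.2f}, unrelated: {unrelated:.2f}")
```

The two project management phrases share no keywords apart from nothing at all, yet their vectors point in nearly the same direction, while the unrelated phrase scores close to zero.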

At the technical heart of intent interpretation lies the transformer architecture, a neural network design that processes language by analyzing relationships between words through a mechanism called “attention.” Rather than processing text strictly one word at a time, transformers evaluate how each word relates to every other word in a query, allowing them to capture nuanced meaning and context. Semantic embeddings are the numerical representations that emerge from this process: each word, phrase, or concept is converted into a vector of numbers that encodes its meaning. Models like BERT (Bidirectional Encoder Representations from Transformers), and ranking systems like Google’s RankBrain, use vector representations of this kind to recognize that “best CRM for startups” and “top customer relationship management platform for new companies” express similar intent, even though they share almost no words. The attention mechanism is particularly powerful because it lets the model focus on the most relevant parts of a query. In “best project management tools for remote teams with limited budgets,” the system learns to weight “remote teams” and “limited budgets” as critical intent signals. This is why modern AI search feels so much more capable than traditional keyword-based systems.
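The attention weighting can be sketched with scaled dot-product attention for a single query vector. This is a simplified illustration, not a full transformer: in a real model the query, key, and value vectors are learned projections, whereas the two-dimensional vectors below are hand-picked assumptions chosen so that the intent-bearing words score highest.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dims = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dims)]
    return weights, output

words = ["best", "tools", "remote", "budgets"]
# Hand-picked key vectors (assumption): larger values mark stronger intent signals.
keys = [[0.1, 0.0], [0.2, 0.1], [1.0, 0.2], [0.9, 0.8]]
values = keys
query = [1.0, 1.0]

weights, output = attention(query, keys, values)
for word, weight in zip(words, weights):
    print(f"{word:8s} {weight:.2f}")
```

The weights always sum to 1, so giving more attention to “remote” and “budgets” necessarily means giving less to generic words like “best.”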

Understanding how LLMs interpret intent fundamentally changes content strategy. Rather than writing one comprehensive guide that tries to rank for a single keyword, successful content now addresses multiple sub-intents within modular sections that can stand alone. If you’re writing about project management tools, instead of one massive comparison, create distinct sections answering “Which is best for remote teams?”, “What’s the most affordable option?”, and “Which integrates with Slack?” Each section becomes a potential answer card that LLMs can extract and cite. Citation-ready formatting matters enormously: use facts rather than vague statements, include specific numbers and dates, and structure information so it’s easy for AI systems to quote or summarize. Bullet points, clear headings, and short paragraphs help LLMs parse your content more effectively than dense prose. Tools like AmICited now allow marketers to monitor how AI systems reference their content across ChatGPT, Perplexity, and Google AI, revealing which intent alignments are working and where content gaps exist. This data-driven approach to content strategy, optimizing for how AI systems actually interpret and cite your work, represents a fundamental shift from traditional SEO.
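As a rough way to audit whether a page is modular in this sense, the sketch below splits a markdown draft at `## ` headings and flags sections that have no answer text or an answer too long to quote comfortably. The helper names, the 60-word threshold, and the sample draft with its placeholder tool names are all assumptions for illustration, not an established standard.

```python
def split_sections(markdown_text):
    """Split a markdown document into (heading, body) pairs at '## ' headings."""
    sections = []
    heading, body = None, []
    for line in markdown_text.splitlines():
        if line.startswith("## "):
            if heading is not None:
                sections.append((heading, " ".join(body)))
            heading, body = line[3:].strip(), []
        elif heading is not None and line.strip():
            body.append(line.strip())
    if heading is not None:
        sections.append((heading, " ".join(body)))
    return sections

def needs_work(sections, max_words=60):
    """Return headings whose section is empty or longer than max_words (arbitrary limit)."""
    return [h for h, b in sections if not b or len(b.split()) > max_words]

# Sample draft with placeholder tool names.
doc = """# Project management tools compared

## Which is best for remote teams?
Tool A (a placeholder name) supports shared boards and asynchronous comments.

## What's the most affordable option?

## Which integrates with Slack?
Tool B (a placeholder name) posts task updates to a Slack channel.
"""

sections = split_sections(doc)
flagged = needs_work(sections)
print(flagged)
```

Here the pricing section is flagged because it has a heading but no standalone answer, which is exactly the kind of gap that leaves a sub-intent unaddressed.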

Consider an e-commerce example: when someone searches “waterproof jacket under $200,” they’re expressing multiple intents simultaneously—they want durability information, price confirmation, and product recommendations. An AI system might expand this into sub-queries about waterproofing technology, price comparisons, brand reviews, and warranty information. A brand that addresses all these angles in modular, well-structured content is far more likely to be cited in the AI-generated answer than a competitor with a generic product page. In the SaaS space, the same query “How do I invite my team to this workspace?” might appear hundreds of times in support logs, signaling a critical content gap. An AI assistant trained on your documentation might struggle to answer this question clearly, leading to poor user experience and reduced visibility in AI-generated support answers. In news and informational contexts, a query like “What’s happening with AI regulation?” will be interpreted differently depending on user context—a policymaker might need legislative details, a business leader might need competitive implications, and a technologist might need technical standards information. Successful content addresses these different intent contexts explicitly.

Despite their sophistication, LLMs face real challenges in intent interpretation. Ambiguous queries like “Java” could refer to the programming language, the island, or the coffee—even with context, the system might misclassify intent. Mixed or layered intents complicate matters further: “Is this CRM better than Salesforce and where can I try it free?” combines comparison, evaluation, and transactional intent in one query. Context window limitations mean that LLMs can only consider a finite amount of conversation history, so in long multi-turn conversations, earlier intent signals might be forgotten. Hallucinations and factual errors remain a concern, particularly in domains requiring high accuracy like healthcare, finance, or legal advice. Privacy considerations also matter—as systems collect more behavioral data to improve personalization, they must balance intent accuracy against user privacy concerns. Understanding these limitations helps content creators and marketers set realistic expectations about AI search visibility and recognize that not every query will be interpreted perfectly.
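The mixed-intent and ambiguity problems can be illustrated with a deliberately naive detector. The cue phrases in `INTENT_CUES` and the `detect_intents` helper are hypothetical; real systems rely on learned classifiers rather than phrase lists, but the failure modes look similar.

```python
# Hypothetical cue phrases per intent type; not taken from any real system.
INTENT_CUES = {
    "comparison": ["better than", " vs ", "compared to"],
    "transactional": ["try it free", "sign up", "free trial"],
    "informational": ["what is", "how do", "how to"],
}

def detect_intents(query):
    """Return every intent whose cue phrases appear in the query (may be several or none)."""
    padded = f" {query.lower()} "
    return {intent for intent, cues in INTENT_CUES.items()
            if any(cue in padded for cue in cues)}

mixed = detect_intents("Is this CRM better than Salesforce and where can I try it free?")
ambiguous = detect_intents("Java")

print(sorted(mixed))
print(ambiguous)
```

The first query triggers two intents at once, so a single-label classifier would have to discard one of them, and the one-word query triggers none, leaving the system to guess from context alone.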

Intent-based search is evolving rapidly toward more sophisticated understanding and interaction. Conversational AI will become increasingly natural, with systems maintaining context across longer, more complex multi-turn dialogues where intent can shift and evolve. Multimodal intent understanding will combine text, images, voice, and even video to interpret user goals more holistically—imagine asking an AI assistant “find me something like this” while showing a photo. Zero-query search represents an emerging frontier where AI systems anticipate user needs before they’re explicitly stated, using behavioral signals and context to proactively surface relevant information. Improved personalization will make results increasingly tailored to individual user profiles, decision-making stages, and contextual situations. Integration with recommendation systems will blur the line between search and discovery, with AI systems suggesting relevant content users didn’t know to search for. As these capabilities mature, the competitive advantage will increasingly belong to brands and creators who understand intent deeply and structure their content to satisfy it comprehensively across multiple contexts and user types.

User intent refers to the underlying goal or purpose behind a query, rather than just the keywords typed. LLMs interpret semantic meaning by analyzing context, phrasing, and related signals to predict what users truly want to accomplish. This is why the same query can yield different results based on user context and decision-making stage.

LLMs use a process called 'query fan-out' to break down a single query into dozens of related micro-questions. For example, 'Notion vs Trello' might expand into sub-queries about team collaboration, pricing, integrations, and ease of use. This allows AI systems to explore different angles of intent and gather comprehensive information.

Understanding intent helps content creators optimize for how AI systems actually interpret and cite their work. Content that addresses multiple sub-intents in modular sections is more likely to be selected by LLMs. This directly impacts visibility in AI-generated answers across ChatGPT, Perplexity, and Google AI.

Semantic embeddings convert words and phrases into mathematical vectors that capture meaning rather than just surface-level text. This allows LLMs to recognize that 'affordable,' 'budget-friendly,' and 'inexpensive' express the same intent, even though they use different words. This semantic approach handles synonyms, paraphrasing, and context far better than traditional keyword matching.

Yes, LLMs face challenges with ambiguous queries, mixed intents, and context limitations. Queries like 'Java' could refer to programming language, geography, or coffee. Long conversations might exceed context windows, causing earlier intent signals to be forgotten. Understanding these limitations helps set realistic expectations about AI search visibility.

Brands should create modular content that addresses multiple sub-intents in distinct sections. Use citation-ready formatting with facts, specific numbers, and clear structure. Monitor how AI systems reference your content using tools like AmICited to identify intent alignment gaps and optimize accordingly.

Intent is task-focused—what users want to accomplish right now. Interest is broader general curiosity. AI systems prioritize intent because it directly determines which content gets selected for answers. A user might be interested in productivity tools generally, but their intent might be finding something specifically for remote team collaboration.

AI systems cite sources that best match the detected intent. If your content clearly addresses a specific sub-intent with well-structured, factual information, it's more likely to be selected. Tools like AmICited track these citation patterns, showing which intent alignments drive visibility in AI-generated answers.
